When you run Spark jobs in a Container Service for Kubernetes (ACK) cluster, the jobs generate a large number of logs that are spread across different pods, which complicates log management. You can use Simple Log Service to collect, process, query, analyze, and visualize these logs and to generate alerts based on them, so that you can manage Spark logs efficiently. This topic describes how to use Simple Log Service to manage the logs of Spark jobs in an ACK cluster.

Prerequisites

The ack-spark-operator component is installed. For more information, see Step 1: Install the ack-spark-operator component.

A Simple Log Service project is created. For more information, see Manage a project.

Logtail components are installed. For more information, see Install Logtail components in an ACK cluster.

Procedure overview

This topic describes how to configure Simple Log Service to manage system logs and business logs generated by Spark jobs.

Build a Spark container image: Build a Spark container image that contains the log4j JSON template layout dependency and push the image to your image repository.

Configure Log4j2 logs: Create a ConfigMap that configures Log4j2, sets the log level to INFO, and sets the log output format to JSONL.

Create a Logtail configuration: Create an AliyunLogConfig resource. Simple Log Service then creates a Logtail configuration for the specified Logstore to collect the logs of Spark jobs that are submitted by using the Spark Operator.

Submit a sample Spark job: Create and run a sample Spark job, check whether the pod logs are output in the JSONL format, and review the meaning of the key fields.

Query and analyze Spark logs: Log on to the Simple Log Service console and query and analyze Spark job logs within a specified period of time.

(Optional) Clear the environment: After the test is completed, remove unnecessary Spark jobs and resources to prevent additional costs.

Step 1: Build a Spark container image

Create the following Dockerfile, which adds the log4j-layout-template-json dependency to the Spark classpath. This dependency lets Log4j2 output logs in the JSONL format, which makes the logs easier to collect and parse. In this example, Spark 3.5.3 is used. After the image is built, push it to your image repository.

```dockerfile
# Replace <SPARK_IMAGE> with your Spark base image.
ARG SPARK_IMAGE=<SPARK_IMAGE>

FROM ${SPARK_IMAGE}

# Add the log4j-layout-template-json dependency to the Spark classpath.
ADD --chown=spark:spark --chmod=644 https://repo1.maven.org/maven2/org/apache/logging/log4j/log4j-layout-template-json/2.24.1/log4j-layout-template-json-2.24.1.jar ${SPARK_HOME}/jars/
```

Step 2: Configure Log4j2 logs

Create a file named spark-log-conf.yaml with the following content. The configuration sets the log level to INFO and the log output format to JSONL. It uses the Elastic Common Schema (ECS), a standardized log format, as the log template. For more information, see Collect Log4j logs.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: spark-log-conf
  namespace: default
data:
  log4j2.properties: |
    # Set everything to be logged to the console and file
    rootLogger.level = info
    rootLogger.appenderRefs = console, file
    rootLogger.appenderRef.console.ref = STDOUT
    rootLogger.appenderRef.file.ref = FileAppender

    appender.console.name = STDOUT
    appender.console.type = Console
    appender.console.layout.type = JsonTemplateLayout
    appender.console.layout.eventTemplateUri = classpath:EcsLayout.json

    appender.file.name = FileAppender
    appender.file.type = File
    appender.file.fileName = /opt/spark/logs/spark.log
    appender.file.layout.type = JsonTemplateLayout
    appender.file.layout.eventTemplateUri = classpath:EcsLayout.json
```

Run the following command to create the ConfigMap:

```shell
kubectl apply -f spark-log-conf.yaml
```

Expected output:

```
configmap/spark-log-conf created
```

Step 3: Create a Logtail configuration

Create an AliyunLogConfig manifest file named aliyun-log-config.yaml by using the following content. Replace <SLS_PROJECT> with the name of your Simple Log Service project and <SLS_LOGSTORE> with the name of your Simple Log Service Logstore. For more information about the configurations, see Use AliyunLogConfig to manage a Logtail configuration.

```yaml
apiVersion: log.alibabacloud.com/v1alpha1
kind: AliyunLogConfig
metadata:
  name: spark
  namespace: default
spec:
  # (Optional) The name of the project. Default value: k8s-log-<Your_Cluster_ID>.
  project: <SLS_PROJECT>
  # The name of the Logstore. If the Logstore that you specify does not exist, Simple Log Service automatically creates a Logstore.
  logstore: <SLS_LOGSTORE>
  # The Logtail configuration.
  logtailConfig:
    # The name of the Logtail configuration.
    configName: spark
    # The type of the data source. The value file specifies text logs.
    inputType: file
    # The configurations of the log input.
    inputDetail:
      # The directory in which the log file is located.
      logPath: /opt/spark/logs
      # The name of a log file. Wildcard characters are supported.
      filePattern: '*.log'
      # The encoding of the log file.
      fileEncoding: utf8
      # The log type.
      logType: json_log
      localStorage: true
      key:
        - content
      logBeginRegex: .*
      logTimezone: ''
      discardNonUtf8: false
      discardUnmatch: true
      preserve: true
      preserveDepth: 0
      regex: (.*)
      outputType: LogService
      topicFormat: none
      adjustTimezone: false
      enableRawLog: false
      # Collect text logs from containers.
      dockerFile: true
      # Advanced configurations.
      advanced:
        # Preview the container metadata.
        collect_containers_flag: true
        # Logtail configurations in Kubernetes.
        k8s:
          # Filter pods based on labels.
          IncludeK8sLabel:
            sparkoperator.k8s.io/launched-by-spark-operator: "true"
          # Filter containers based on the container name.
          K8sContainerRegex: "^spark-kubernetes-(driver|executor)$"
          # Add the following pod labels to the logs as tags.
          ExternalK8sLabelTag:
            spark-app-name: spark-app-name
            spark-version: spark-version
            spark-role: spark-role
            spark-app-selector: spark-app-selector
            sparkoperator.k8s.io/submission-id: sparkoperator.k8s.io/submission-id
      # The log processing plug-ins.
      plugin:
        processors:
          # Split logs.
          - type: processor_split_log_string
            detail:
              SplitKey: content
              SplitSep: ''
          # Parse the JSON fields.
          - type: processor_json
            detail:
              ExpandArray: false
              ExpandConnector: ''
              ExpandDepth: 0
              IgnoreFirstConnector: false
              SourceKey: content
              KeepSource: false
              KeepSourceIfParseError: true
              NoKeyError: false
              UseSourceKeyAsPrefix: false
          # Extract the log timestamp.
          - type: processor_strptime
            detail:
              SourceKey: '@timestamp'
              Format: '%Y-%m-%dT%H:%M:%S.%fZ'
              KeepSource: false
              AdjustUTCOffset: true
              UTCOffset: 0
              AlarmIfFail: false
```

Run the following command to create the Logtail configuration:

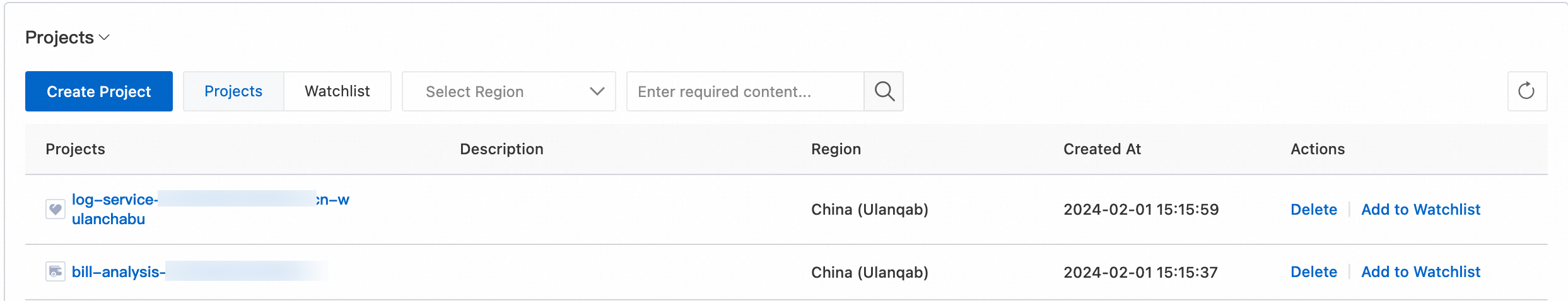

```shell
kubectl apply -f aliyun-log-config.yaml
```

To view the new Logstore and Logtail configuration, perform the following steps:

Log on to the Simple Log Service console.

In the Projects section, click the project you want.

Choose . Click the > icon of the target Logtail configurations. Choose .

Click the target Logtail configuration to view its details.
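The IncludeK8sLabel and K8sContainerRegex settings in aliyun-log-config.yaml determine which containers Logtail collects logs from. The following Python sketch gives a rough model of that filter for a single container. The pod must carry the label sparkoperator.k8s.io/launched-by-spark-operator=true, and the container name must match the regular expression. The function is_collected is hypothetical and is not part of Logtail. The sketch also assumes that the driver and executor containers use Spark's default container names, spark-kubernetes-driver and spark-kubernetes-executor.

```python
import re

# Hypothetical model of the k8s filter in aliyun-log-config.yaml (not Logtail code).
LABEL_KEY = "sparkoperator.k8s.io/launched-by-spark-operator"
LABEL_VALUE = "true"
CONTAINER_REGEX = re.compile(r"^spark-kubernetes-(driver|executor)$")


def is_collected(pod_labels: dict, container_name: str) -> bool:
    """Return True if a container passes both the label filter and the name filter."""
    # IncludeK8sLabel: the pod must carry the label with exactly this value.
    if pod_labels.get(LABEL_KEY) != LABEL_VALUE:
        return False
    # K8sContainerRegex: only the driver and executor containers are kept.
    return CONTAINER_REGEX.fullmatch(container_name) is not None


if __name__ == "__main__":
    spark_pod = {LABEL_KEY: "true", "spark-role": "driver"}
    print(is_collected(spark_pod, "spark-kubernetes-driver"))  # True
    print(is_collected(spark_pod, "sidecar"))  # False: container name does not match
    print(is_collected({}, "spark-kubernetes-driver"))  # False: pod not launched by the operator
```

Under this model, other containers in the same pod, such as sidecars, are skipped. Pods that the Spark Operator did not launch are skipped as well.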

Step 4: Submit a sample Spark job

Create a SparkApplication manifest file named spark-pi.yaml by using the following content:

```yaml
apiVersion: sparkoperator.k8s.io/v1beta2
kind: SparkApplication
metadata:
  name: spark-pi
  namespace: default
spec:
  type: Scala
  mode: cluster
  # Replace <SPARK_IMAGE> with the image that you built in Step 1.
  image: <SPARK_IMAGE>
  mainClass: org.apache.spark.examples.SparkPi
  mainApplicationFile: local:///opt/spark/examples/jars/spark-examples_2.12-3.5.3.jar
  arguments:
    - "5000"
  sparkVersion: 3.5.3
  # The ConfigMap that contains the Log4j2 configuration created in Step 2.
  sparkConfigMap: spark-log-conf
  driver:
    cores: 1
    memory: 512m
    serviceAccount: spark-operator-spark
  executor:
    instances: 1
    cores: 1
    memory: 4g
```

Run the following command to submit the job:

```shell
kubectl apply -f spark-pi.yaml
```

Wait until the job is complete, and then check the last 10 lines of the driver pod log:

```shell
kubectl logs --tail=10 spark-pi-driver
```

Expected output:

```
{"@timestamp":"2024-11-20T11:45:48.487Z","ecs.version":"1.2.0","log.level":"WARN","message":"Kubernetes client has been closed.","process.thread.name":"-937428334-pool-19-thread-1","log.logger":"org.apache.spark.scheduler.cluster.k8s.ExecutorPodsWatchSnapshotSource"}
{"@timestamp":"2024-11-20T11:45:48.585Z","ecs.version":"1.2.0","log.level":"INFO","message":"MapOutputTrackerMasterEndpoint stopped!","process.thread.name":"dispatcher-event-loop-7","log.logger":"org.apache.spark.MapOutputTrackerMasterEndpoint"}
{"@timestamp":"2024-11-20T11:45:48.592Z","ecs.version":"1.2.0","log.level":"INFO","message":"MemoryStore cleared","process.thread.name":"main","log.logger":"org.apache.spark.storage.memory.MemoryStore"}
{"@timestamp":"2024-11-20T11:45:48.592Z","ecs.version":"1.2.0","log.level":"INFO","message":"BlockManager stopped","process.thread.name":"main","log.logger":"org.apache.spark.storage.BlockManager"}
{"@timestamp":"2024-11-20T11:45:48.596Z","ecs.version":"1.2.0","log.level":"INFO","message":"BlockManagerMaster stopped","process.thread.name":"main","log.logger":"org.apache.spark.storage.BlockManagerMaster"}
{"@timestamp":"2024-11-20T11:45:48.598Z","ecs.version":"1.2.0","log.level":"INFO","message":"OutputCommitCoordinator stopped!","process.thread.name":"dispatcher-event-loop-1","log.logger":"org.apache.spark.scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint"}
{"@timestamp":"2024-11-20T11:45:48.602Z","ecs.version":"1.2.0","log.level":"INFO","message":"Successfully stopped SparkContext","process.thread.name":"main","log.logger":"org.apache.spark.SparkContext"}
{"@timestamp":"2024-11-20T11:45:48.604Z","ecs.version":"1.2.0","log.level":"INFO","message":"Shutdown hook called","process.thread.name":"shutdown-hook-0","log.logger":"org.apache.spark.util.ShutdownHookManager"}
{"@timestamp":"2024-11-20T11:45:48.604Z","ecs.version":"1.2.0","log.level":"INFO","message":"Deleting directory /var/data/spark-f783cf2e-44db-452c-83c9-738f9c894ef9/spark-2caa5814-bd32-431c-a9f9-a32208b34fbb","process.thread.name":"shutdown-hook-0","log.logger":"org.apache.spark.util.ShutdownHookManager"}
{"@timestamp":"2024-11-20T11:45:48.606Z","ecs.version":"1.2.0","log.level":"INFO","message":"Deleting directory /tmp/spark-dacdfd95-f166-4b23-9312-af9052730417","process.thread.name":"shutdown-hook-0","log.logger":"org.apache.spark.util.ShutdownHookManager"}
```

The logs are output in the JSONL format, with one JSON object per line. The fields have the following meanings:

@timestamp: The time when the log was generated.

ecs.version: The ECS version.

log.level: The log level.

message: The log message.

process.thread.name: The name of the thread that generated the log.

log.logger: The name of the logger that recorded the log.
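In Step 3, the processor_json plug-in expands each of these JSON lines into separate fields. The processor_strptime plug-in then reads @timestamp with the format %Y-%m-%dT%H:%M:%S.%fZ to set the log time. The following Python sketch approximates those two steps for a single line, so that you can check locally how a line from the driver log is split into fields. It is an illustration under stated assumptions, not Logtail's implementation. The function process_line and the log_time key are hypothetical. The timestamp is treated as UTC, which matches the trailing Z.

```python
import json
from datetime import datetime, timezone

# Same pattern as the Format parameter of processor_strptime in aliyun-log-config.yaml.
TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%S.%fZ"


def process_line(line: str) -> dict:
    """Approximate processor_json + processor_strptime for one JSONL line (hypothetical)."""
    try:
        fields = json.loads(line)
    except json.JSONDecodeError:
        fields = None
    if not isinstance(fields, dict):
        # Like KeepSourceIfParseError: true, keep the raw text if the line is not a JSON object.
        return {"content": line}
    timestamp = fields.pop("@timestamp", None)  # KeepSource: false drops the source field.
    if timestamp is not None:
        try:
            # The trailing Z means UTC, so attach the UTC time zone explicitly.
            parsed = datetime.strptime(timestamp, TIMESTAMP_FORMAT).replace(tzinfo=timezone.utc)
            fields["log_time"] = int(parsed.timestamp())  # Hypothetical key: Unix seconds.
        except (TypeError, ValueError):
            # Assumption for this sketch: keep the original value if it cannot be parsed.
            fields["@timestamp"] = timestamp
    return fields


if __name__ == "__main__":
    sample = ('{"@timestamp":"2024-11-20T11:45:48.602Z","ecs.version":"1.2.0",'
              '"log.level":"INFO","message":"Successfully stopped SparkContext",'
              '"process.thread.name":"main","log.logger":"org.apache.spark.SparkContext"}')
    for key, value in process_line(sample).items():
        print(f"{key} = {value}")
```

Each ECS field, such as log.level or log.logger, becomes a separate key. This is why you can filter on these fields individually after the logs reach the Logstore.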

Step 5: Query and analyze Spark logs

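Log on to the Simple Log Service console, open the Logstore that you specified in Step 3, and query and analyze the logs. Set the query time range to cover the period during which the job ran, and check whether the logs were collected.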
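To pick a time range that covers the job, you can read the earliest and latest timestamps from the driver log. The following Python sketch does this for ECS JSONL lines, such as the ones shown in Step 4. It also counts entries per log.level to give a quick overview of the run. The helper summarize is hypothetical, and it only reflects the lines that you pass to it.

```python
import json
from collections import Counter
from datetime import datetime, timezone

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%S.%fZ"


def summarize(lines):
    """Return (earliest, latest, level_counts) for ECS JSONL lines (hypothetical helper)."""
    times = []
    levels = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
            ts = datetime.strptime(event["@timestamp"], TIMESTAMP_FORMAT)
        except (KeyError, TypeError, ValueError):
            # Skip lines that are not ECS JSON, such as output not produced by Log4j2.
            continue
        times.append(ts.replace(tzinfo=timezone.utc))
        levels[event.get("log.level", "UNKNOWN")] += 1
    if not times:
        return None, None, levels
    return min(times), max(times), levels


if __name__ == "__main__":
    sample = [
        '{"@timestamp":"2024-11-20T11:45:48.487Z","log.level":"WARN","message":"Kubernetes client has been closed."}',
        '{"@timestamp":"2024-11-20T11:45:48.606Z","log.level":"INFO","message":"Deleting directory /tmp/..."}',
    ]
    start, end, counts = summarize(sample)
    print(f"start: {start.isoformat()}")
    print(f"end:   {end.isoformat()}")
    for level, count in counts.most_common():
        print(f"{level}: {count}")
```

To run the sketch against a real job, save the driver log with kubectl logs spark-pi-driver > driver.log and pass the opened file to summarize. Then widen the resulting window slightly when you set the time range in the console.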

(Optional) Step 6: Clear the environment

After you complete the steps in this topic, delete the resources that you no longer need.

Run the following command to delete the Spark job:

```shell
kubectl delete -f spark-pi.yaml
```

Run the following command to delete the Logtail configuration:

```shell
kubectl delete -f aliyun-log-config.yaml
```

Run the following command to delete the Log4j2 log configuration:

```shell
kubectl delete -f spark-log-conf.yaml
```