To collect server logs for a Simple Log Service (SLS) project, you must first install the Logtail client on the target server. This topic describes how to install, run, upgrade, and uninstall Logtail on a target server.

Overview

Logtail can be used in two types of scenarios based on the server type:

Host scenarios: These apply to traditional computing environments, such as physical servers and Elastic Compute Service (ECS) instances.

Container scenarios: These apply to business scenarios where applications are deployed on the Kubernetes containerization platform.

Choose the appropriate method based on your server environment. The procedures and configuration requirements differ between scenarios. For hybrid environments, you must complete the installation and configuration for each environment separately.

Host scenarios

Install Logtail

Install Logtail using one-click installation or manual installation. One-click installation is supported only if you use an ECS instance that is in the same region and belongs to the same Alibaba Cloud account as the project. Otherwise, you must manually install Logtail.

One-click installation

SLS lets you install Logtail on ECS instances with a single click. This feature uses CloudOps Orchestration Service (OOS) and eliminates the need to log on to an ECS instance to perform manual installation steps. If you log on with an Alibaba Cloud account, you have all the required permissions by default and can perform the operations directly.

If you log on as a Resource Access Management (RAM) user, ask the owner of the Alibaba Cloud account to grant you the permissions to operate OOS resources. The Alibaba Cloud account can create a RAM user and grant permissions to it. For more information, see Create a RAM user and grant permissions.

System permissions:

AliyunOOSFullAccess: Grants full permissions to manage CloudOps Orchestration Service (OOS).

AliyunECSFullAccess: Grants permissions to manage ECS.

Custom policies: If you have high data security requirements, create custom permission policies for fine-grained authorization. The following code shows an access policy for operating OOS resources.

{
  "Version": "1",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ecs:DescribeTagKeys",
        "ecs:DescribeTags",
        "ecs:DescribeInstances",
        "ecs:DescribeInvocationResults",
        "ecs:RunCommand",
        "ecs:DescribeInvocations",
        "ecs:InvokeCommand"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "oos:ListTemplates",
        "oos:StartExecution",
        "oos:ListExecutions",
        "oos:GetExecutionTemplate",
        "oos:ListExecutionLogs",
        "oos:ListTaskExecutions"
      ],
      "Resource": "*"
    }
  ]
}
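If you maintain your own custom policy, you may want to confirm that it still grants every action in the sample above. The following is a minimal Python sketch that assumes the policy is available as JSON text. It deliberately simplifies RAM policy evaluation: wildcards such as "ecs:*" and Deny statements are not evaluated. Only exact action names in Allow statements count, so treat the result as a quick checklist and not an authorization decision.

```python
import json

# The actions granted by the sample custom policy in this topic.
REQUIRED_ACTIONS = {
    "ecs:DescribeTagKeys", "ecs:DescribeTags", "ecs:DescribeInstances",
    "ecs:DescribeInvocationResults", "ecs:RunCommand",
    "ecs:DescribeInvocations", "ecs:InvokeCommand",
    "oos:ListTemplates", "oos:StartExecution", "oos:ListExecutions",
    "oos:GetExecutionTemplate", "oos:ListExecutionLogs",
    "oos:ListTaskExecutions",
}


def allowed_actions(policy: dict) -> set:
    """Collect the action names listed in Allow statements of a policy."""
    actions = set()
    for statement in policy.get("Statement", []):
        if statement.get("Effect") != "Allow":
            continue
        action = statement.get("Action", [])
        # "Action" may be a single string or a list of strings.
        actions.update([action] if isinstance(action, str) else action)
    return actions


def missing_actions(policy_text: str) -> set:
    """Return required actions that the policy does not list explicitly.

    Simplification: wildcards and Deny statements are not evaluated.
    """
    return REQUIRED_ACTIONS - allowed_actions(json.loads(policy_text))
```

For example, pass the content of a locally saved policy file (the file name is up to you) to missing_actions(). An empty set means that every action from the sample policy appears in an Allow statement.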

Follow these steps to install Logtail on an ECS instance with a single click and create and configure a machine group at the same time.

Log on to the Simple Log Service console. Click the project that is used to manage log resources to view the list of logstores. Click the icon before the name of the destination logstore to expand it. Then, click the icon that appears after Data Collection. In the dialog box that appears, select a text log collection template and click Integrate Now.

SLS provides various text log templates, such as regular expression, single-line, and multi-line templates. These templates differ only in their log parsing plug-ins, and you can add or remove log parsing plug-ins within a template. Select a template that matches the characteristics of your logs, or select any text log template and then configure the plug-ins as needed.

On the Machine Group Configurations page, set Scenario to Servers and Installation Environment to ECS, and then click Create Machine Group.

In the Create Machine Group panel, select one or more ECS instances that are in the same region as the project. Click Install and Create Machine Group. Wait for the installation to complete, specify a Name for the machine group, and then click OK.

If the installation fails or remains in the waiting state, check whether the ECS instance is in the same region as the project.

After the installation, go to the page. Click the new machine group. In the section, check the Heartbeat status. If the status is OK, the machine group is created.

Manual installation

Select a download and installation method from the following table.

In the sample code, ${region_id} is the region where the SLS project is located. Replace it as needed. For more information, see Regions and endpoints. For example, the ${region_id} for China (Hangzhou) is cn-hangzhou.

If you use a machine with low specifications or an old operating system, Logtail 2.0 may have compatibility issues that prevent it from running correctly. In this case, we recommend that you install version 1.8.7 by running the ./logtail.sh install ${region_id} -v 1.8.7 command.

Host type | Architecture | Download method | Installation method |

Linux | ARM or x86-64 (online host) | For hosts with an internet connection, download directly: | Select an installation command based on your network type: |

Linux | ARM (offline host) | For offline hosts, first download the installation script and package on a server with an internet connection: | Copy the installation script and package to the server where you want to install Logtail. Then, select an installation command based on your network type: |

Linux | x86-64 (offline host) | For offline hosts, first download the installation script and package on a server with an internet connection: | Same as for ARM offline hosts. |

Windows | 32-bit | China regions: Logtail 32-bit installation package. Regions outside China: Logtail 32-bit installation package. | Unzip the installation package. Run Windows PowerShell as an administrator and go to the |

Windows | 64-bit | China regions: Logtail 64-bit installation package. Regions outside China: Logtail 64-bit installation package. | Same as for 32-bit. |
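For Linux hosts with an internet connection, the download and install commands follow the pattern used in the ECS Cloud Assistant example in this topic. The Python sketch below only generates those command strings for a given region and does not run them. Its assumptions are labeled in the code: it covers only the linux64 script path shown in this topic, uses the "-internet" suffix from the Cloud Assistant example, and uses the bare region ID (as in the 1.8.7 command) for other network types.

```python
from typing import List, Optional


def logtail_script_url(region_id: str) -> str:
    """URL of logtail.sh, following the pattern used in this topic."""
    return (f"http://logtail-release-{region_id}.oss-{region_id}"
            f".aliyuncs.com/linux64/logtail.sh")


def install_commands(region_id: str, internet: bool,
                     version: Optional[str] = None) -> List[str]:
    """Build the download-and-install commands for a Linux host.

    Assumption: over the internet the install target is "<region_id>-internet";
    otherwise the bare region ID is passed to logtail.sh.
    """
    target = f"{region_id}-internet" if internet else region_id
    install = f"./logtail.sh install {target}"
    if version:  # for example "1.8.7" on low-spec machines or old systems
        install += f" -v {version}"
    return [
        f"wget {logtail_script_url(region_id)} -O logtail.sh",
        "chmod +x logtail.sh",
        install,
    ]
```

For example, print("\n".join(install_commands("cn-hangzhou", internet=True))) prints the same three commands as the Cloud Assistant script for China (Hangzhou).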

Batch install Logtail

Use one of the following methods to install Logtail in batches.

OOS orchestration: This method is suitable for scenarios with permission requirements. It supports high concurrency and is ideal for large-scale batch operations. For more information, see Use OOS to install or upgrade Logtail in batches.

ECS Cloud Assistant: This method is easy to use. Run commands to execute temporary tasks. The procedure is as follows.

In the top navigation bar, select the region and resource group of the resource that you want to manage.

In the upper-right corner of the ECS Cloud Assistant page, click Create/Run Command.

In the Create Command panel, enter the installation command in the Command content field. This example uses the internet installation method. For more installation commands, see Install Logtail.

The installation command used here is as follows:

#!/bin/bash
# Replace cn-hangzhou with the region ID of your SLS project.
region_id='cn-hangzhou'
wget http://logtail-release-${region_id}.oss-${region_id}.aliyuncs.com/linux64/logtail.sh -O logtail.sh
chmod +x logtail.sh
./logtail.sh install ${region_id}-internet

Important: After you install Logtail, if you switch the network type of the ECS instance from classic network to VPC, you must update the Logtail configuration. For more information, see How do I update the machine group configuration after I switch an ECS instance from the classic network to a VPC?.

In the Select Instance section, confirm that the agent status of the target instance is Normal. If the status is not Normal, see Install the Cloud Assistant Agent to install Cloud Assistant. Select the target instance and click Run. The execution status then becomes Successful. If the execution fails, see View execution results and fix common issues.

Start and stop Logtail

Linux

Start Logtail

sudo /etc/init.d/ilogtaild start

Stop Logtail

sudo /etc/init.d/ilogtaild stop

Windows

Log on to the target server.

Choose .

In the Services dialog box, select the corresponding service.

For version 0.x.x.x, select the LogtailWorker service.

For version 1.0.0.0 or later, select the LogtailDaemon service.

Right-click and select the desired operation, such as Start, Stop, or Restart.

Check the Logtail status and version

Linux

Check the Logtail status

Run the sudo /etc/init.d/ilogtaild status command to check the Logtail status. If the system returns ilogtail is running, Logtail is running. If the Logtail status shows that it is not running, uninstall and then reinstall it.
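The status check above can be wrapped in a script, for example for a periodic health check. The following Python sketch runs the documented status command and looks for the documented ilogtail is running line. It assumes that the script runs on the Linux host with permission to use sudo. Only that success message is treated as "running", and any other output is treated as "not running".

```python
import subprocess

STATUS_COMMAND = ["sudo", "/etc/init.d/ilogtaild", "status"]
RUNNING_MARKER = "ilogtail is running"


def is_running(status_output: str) -> bool:
    """Return True if any output line is exactly the documented marker."""
    return any(line.strip() == RUNNING_MARKER
               for line in status_output.splitlines())


def check_logtail() -> bool:
    """Run the status command on this host and interpret its output."""
    result = subprocess.run(STATUS_COMMAND, capture_output=True,
                            text=True, check=False)
    return is_running(result.stdout)
```

If check_logtail() returns False, follow the advice above: uninstall Logtail and then reinstall it.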

Check the Logtail version

Logtail stores its version information in the logtail_version field of the /usr/local/ilogtail/app_info.json file. Run the following command to view the Logtail version information.

cat /usr/local/ilogtail/app_info.json

The following result is returned:

{
  "logtail_version" : "0.16.30"
}

Windows

Check the Logtail status

Check the Logtail status to determine whether Logtail is installed on the target server.

Open the Run window, enter services.msc, and open the Services window.

Check the running status of the LogtailDaemon service (for Logtail 1.0.0.0 or later) or the LogtailWorker service (for Logtail 0.x.x.x).

If it shows as running, Logtail is running.

Check the Logtail version

Check the Logtail version in the logtail_version field of the app_info.json file in the installation path.

For example, the following content indicates that the Logtail version is 1.0.0.0.

{
  "logtail_version" : "1.0.0.0"
}

Upgrade Logtail

Linux

To upgrade Logtail, use the upgrade command. If you use the install command instead, an overwrite installation is performed and the original configuration is lost.

During the upgrade, Logtail stops briefly. After the upgrade is complete, Logtail starts automatically and is registered as a startup item. The upgrade overwrites only the necessary files and retains configuration files and checkpoint files, so no logs are lost during the upgrade.

In the sample code, ${region_id} is the region where the SLS project is located. Replace it as needed. For more information, see Regions and endpoints. For example, the ${region_id} for China (Hangzhou) is cn-hangzhou.

Select a Logtail upgrade method from the following table.

Architecture | Download method | Upgrade method |

ARM and x86-64 (online host) | For hosts with an internet connection: | After the download is complete, run the upgrade command: |

ARM (offline host) | For offline hosts, first download the installation script and package on a server with an internet connection: | Copy the installation script and package to the server where you want to upgrade Logtail. Then, run the following upgrade command: |

x86-64 (offline host) | For offline hosts, first download the installation script and package on a server with an internet connection: | Same as for ARM offline hosts. |

If the following information is displayed, the upgrade is successful.

stop successfully
Stop logtail successfully.
Upgrading logtail files ...
Upgrade logtail files successfully.
Starting logtail ...
ilogtail is running
Upgrade logtail successfully.
{
  "UUID" : "XXXXXXXX-XXXX",
  "compiler" : "GCC 9.3.1",
  "hostname" : "xxx",
  "instance_id" : "XXXXXXXX-XXXX_172.16.0.75_1730950372",
  "ip" : "172.16.0.75",
  "logtail_version" : "2.0.8",
  "os" : "Linux; 5.10.134-13.an8.x86_64; #1 SMP Mon Jan 9 10:39:46 CST 2023; x86_64",
  "update_time" : "2024-11-07 11:32:52"
}

Windows

The upgrade procedure is the same as the installation procedure. Download and unzip the latest installation package, and then follow the steps to install it. For more information, see Install Logtail.

Upgrading is equivalent to automatically uninstalling and reinstalling. The contents of your original installation directory will be deleted. Back up your data before upgrading.

On a 64-bit Windows operating system, if you want to upgrade a 32-bit Logtail to a 64-bit version, you must first uninstall the 32-bit Logtail and then reinstall the 64-bit Logtail.

Uninstall Logtail

Linux

Obtain the ${region_id} that corresponds to the region of your Simple Log Service project. Replace ${region_id} and run the following command to uninstall Logtail.

For the ${region_id} of each region, see Regions and endpoints. For example, the ${region_id} for China (Hangzhou) is cn-hangzhou.

wget http://logtail-release-${region_id}.oss-${region_id}.aliyuncs.com/linux64/logtail.sh -O logtail.sh; chmod +x logtail.sh; ./logtail.sh uninstall

Windows

Run Windows PowerShell or Command Prompt as an administrator. Go to the logtail_installer directory, which is the directory where you unzipped the installation package, and run the following command.

.\logtail_installer.exe uninstall

After a successful uninstallation, the Logtail installation directory is deleted. However, some configuration files are retained in the C:\LogtailData directory, and you can delete them manually as needed. The retained files include the following:

checkpoint: Stores the checkpoint information for all Logtail plug-ins. This file is created only after you use a Logtail plug-in.

user_config.d: The directory where local collection configurations are stored.

Files ending with .json are treated as collection configurations. The format is similar to /usr/local/ilogtail/user_log_config.json.

logtail_check_point: Stores the checkpoint information for the main part of Logtail.

users: Stores the user identity files that you have configured.

Container scenarios

Install the Logtail component

If you use an ACK cluster and the cluster belongs to the same Alibaba Cloud account as SLS, follow the instructions for ACK clusters. If you use a self-managed cluster, or if the ACK cluster and SLS belong to different Alibaba Cloud accounts, follow the instructions for self-managed clusters.

Installation on ACK clusters

This operation applies only to ACK dedicated clusters and ACK managed clusters.

Install the Logtail component on an existing ACK cluster

Log on to the ACK console.

On the Clusters page, find the target cluster and choose in the Actions column.

On the Logs and Monitoring tab, find logtail-ds and click Install.

After the installation is complete, SLS automatically creates a project named k8s-log-${your_k8s_cluster_id}.

Install the Logtail component when creating a new ACK cluster

Log on to the ACK console.

In the navigation pane on the left, click Clusters.

On the Clusters page, click Create Kubernetes Cluster.

Select Enable Log Service.

Note: This section describes only the key steps to enable SLS. For more information about how to create a cluster, see Create an ACK managed cluster.

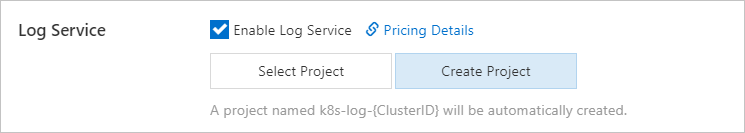

When you select Enable Log Service, you are prompted to specify a project. For more information about how logs are organized in SLS, see Projects. Specify a project in one of the following two ways.

Select Project

Select an existing project to manage the collected container logs.

Create Project

SLS automatically creates a project to manage the collected container logs.

ClusterID is the unique identifier of your new Kubernetes cluster.

After the installation is complete, the following SLS resources are automatically created in the selected project.

Resource type | Resource name | Purpose | Example |

Machine group | k8s-group-${your_k8s_cluster_id} | The machine group for the logtail-daemonset, mainly used for log collection scenarios. | k8s-group-my-cluster-123 |

Machine group | k8s-group-${your_k8s_cluster_id}-statefulset | The machine group for the logtail-statefulset, mainly used for metric collection scenarios. | k8s-group-my-cluster-123-statefulset |

Machine group | k8s-group-${your_k8s_cluster_id}-singleton | A single-instance machine group, mainly used for some single-instance collection configurations. | k8s-group-my-cluster-123-singleton |

Logstore | config-operation-log | Used to store the logs of the alibaba-log-controller in the Logtail component. We recommend that you do not create collection configurations in this logstore. You can delete this logstore. After deletion, the runtime logs of the alibaba-log-controller will no longer be collected. The billing for this logstore is the same as for a normal logstore. For more information, see Pay-by-data-written billing items. | config-operation-log |
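The resource names in the table are derived from the cluster ID, so scripts that look up these machine groups can compute them. The following Python sketch applies the naming patterns from the table. The project entry assumes the k8s-log-${your_k8s_cluster_id} name that is described for installing logtail-ds on an existing ACK cluster. If you selected an existing project, use that project's name instead.

```python
def sls_resource_names(cluster_id: str) -> dict:
    """Names of the SLS resources created for a cluster, per the table above."""
    return {
        # Assumption: auto-created project name, as described for existing
        # ACK clusters; differs if you selected an existing project.
        "project": f"k8s-log-{cluster_id}",
        "machine_groups": [
            f"k8s-group-{cluster_id}",              # logtail-daemonset (logs)
            f"k8s-group-{cluster_id}-statefulset",  # logtail-statefulset (metrics)
            f"k8s-group-{cluster_id}-singleton",    # single-instance configs
        ],
        "logstore": "config-operation-log",         # alibaba-log-controller logs
    }
```

For the cluster ID my-cluster-123, the machine group names match the Example column of the table.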

Installation on self-managed clusters

Log on to your Kubernetes cluster. Select a command based on the region to download Logtail and its dependent components.

# China regions
wget https://logtail-release-cn-hangzhou.oss-cn-hangzhou.aliyuncs.com/kubernetes/0.5.5/alibaba-cloud-log-all.tgz; tar xvf alibaba-cloud-log-all.tgz; chmod 744 ./alibaba-cloud-log-all/k8s-custom-install.sh
# Regions outside China
wget https://logtail-release-ap-southeast-1.oss-ap-southeast-1.aliyuncs.com/kubernetes/0.5.5/alibaba-cloud-log-all.tgz; tar xvf alibaba-cloud-log-all.tgz; chmod 744 ./alibaba-cloud-log-all/k8s-custom-install.sh

Modify the ./alibaba-cloud-log-all/values.yaml configuration file.

values.yaml

# ===================== Required fields =====================
# The name of the destination Project.
SlsProjectName:
# The region where the Project is located.
Region:
# The ID of the Alibaba Cloud account that owns the Project. Enclose the ID in double quotation marks ("").
AliUid: "11099"
# The AccessKey ID and AccessKey secret of the Alibaba Cloud account or RAM user. The AliyunLogFullAccess permission is required.
AccessKeyID:
AccessKeySercret:
# The custom cluster ID. The name can contain only uppercase letters, lowercase letters, digits, and hyphens (-).
ClusterID:
# ==========================================================
# Whether to enable metric collection components. Optional values: true, false. Default value: true.
SlsMonitoring: true
# The network type. Optional values: Internet, Intranet. Default value: Internet.
Net: Internet
# Whether the container runtime is containerd. Optional values: true, false. Default value: false.
SLS_CONTAINERD_USED: true

The parameters are described as follows.

SlsProjectName: The name of the project to which Logtail uploads logs.

Region: The ID of the region where your project is located. For example, the region ID for China (Hangzhou) is cn-hangzhou. For more information, see Regions and endpoints.

AliUid: The ID of the Alibaba Cloud account that owns the project. Enclose the ID in double quotation marks (""), for example, AliUid: "11**99". For information about how to obtain the ID, see Obtain the ID of the Alibaba Cloud account that owns Simple Log Service.

AccessKeyID: The AccessKey ID of the Alibaba Cloud account that owns the project. We recommend that you use the AccessKey pair of a RAM user that is granted the AliyunLogFullAccess permission. For more information, see Create a RAM user and grant permissions.

AccessKeySecret: The AccessKey secret of the Alibaba Cloud account that owns the project. We recommend that you use the AccessKey pair of a RAM user that is granted the AliyunLogFullAccess permission. For more information, see Create a RAM user and grant permissions.

ClusterID: The custom cluster ID. The ID can contain only uppercase letters, lowercase letters, digits, and hyphens (-). This parameter corresponds to ${your_k8s_cluster_id} in subsequent operations. Do not configure the same cluster ID for different Kubernetes clusters.

SlsMonitoring: Specifies whether to collect cluster metric data. The options are:

true (default): Enable.

false: Disable.

Net: The network type that Logtail uses to transfer data. If your cluster cannot access the Alibaba Cloud internal network, use Internet. The options are:

Internet (default): Internet.

Intranet: Internal network.

SLS_CONTAINERD_USED: Specifies whether the container runtime is containerd. The options are:

true: Yes.

false (default): No.

If your self-managed Kubernetes cluster uses containerd as the container runtime and this parameter is not set to true, Logtail may fail to collect logs.
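Before you run the installation script, you can catch common mistakes in values.yaml, such as an unquoted AliUid or an invalid ClusterID. The following Python sketch checks the rules stated in the parameter descriptions above. It assumes that the file has already been parsed into a dictionary, for example with a YAML library such as PyYAML. Key names are taken from the sample values.yaml, including the AccessKeySercret spelling. This is a convenience check and not part of the official installer.

```python
import re
from typing import List

# Key names as they appear in the sample values.yaml.
REQUIRED_KEYS = ("SlsProjectName", "Region", "AliUid",
                 "AccessKeyID", "AccessKeySercret", "ClusterID")
CLUSTER_ID_PATTERN = re.compile(r"[A-Za-z0-9-]+")


def validate_values(values: dict) -> List[str]:
    """Return a list of problems found in an already-parsed values.yaml."""
    errors = []
    for key in REQUIRED_KEYS:
        if values.get(key) in (None, ""):
            errors.append(f"{key} is required")
    # AliUid must be a quoted string, not a YAML integer.
    if "AliUid" in values and not isinstance(values["AliUid"], str):
        errors.append("AliUid must be enclosed in double quotation marks")
    cluster_id = values.get("ClusterID")
    if cluster_id and not CLUSTER_ID_PATTERN.fullmatch(str(cluster_id)):
        errors.append("ClusterID may contain only letters, digits, and hyphens")
    if values.get("Net", "Internet") not in ("Internet", "Intranet"):
        errors.append("Net must be Internet or Intranet")
    for key in ("SlsMonitoring", "SLS_CONTAINERD_USED"):
        if key in values and not isinstance(values[key], bool):
            errors.append(f"{key} must be true or false")
    return errors
```

An empty list means that none of these rules are violated. It does not verify that the project, region, or AccessKey pair is actually valid.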

Install Logtail and its dependent components.

Note: Run the echo "$(uname -s | tr '[:upper:]' '[:lower:]')-$(uname -m)" command to query the OS-architecture of your host. The k8s-custom-install.sh script supports the following OS-architecture combinations: linux-386, linux-amd64, linux-arm, linux-arm64, linux-ppc64le, linux-s390x, and darwin-amd64. If you have other requirements, submit a ticket.

bash k8s-custom-install.sh; kubectl apply -R -f result

After the installation is complete, the following SLS resources are automatically created in the project. If the creation fails, carefully check the modified values.yaml file.

Resource type | Resource name | Purpose | Example |

Machine group | k8s-group-${your_k8s_cluster_id} | The machine group for the logtail-daemonset, mainly used for log collection scenarios. | k8s-group-my-cluster-123 |

Machine group | k8s-group-${your_k8s_cluster_id}-statefulset | The machine group for the logtail-statefulset, mainly used for metric collection scenarios. | k8s-group-my-cluster-123-statefulset |

Machine group | k8s-group-${your_k8s_cluster_id}-singleton | A single-instance machine group, mainly used for some single-instance collection configurations. | k8s-group-my-cluster-123-singleton |

Logstore | config-operation-log | Used to store the logs of the alibaba-log-controller in the Logtail component. We recommend that you do not create collection configurations in this logstore. You can delete this logstore. After deletion, the runtime logs of the alibaba-log-controller will no longer be collected. The billing for this logstore is the same as for a normal logstore. For more information, see Pay-by-data-written billing items. | None |

Check the Logtail status, version, and IP address

Run the following command to check the Logtail status.

kubectl get po -n kube-system | grep logtail

The following result is returned:

logtail-ds-gb92k 1/1 Running 0 2h
logtail-ds-wm7lw 1/1 Running 0 4d

Run the following command to view information such as the Logtail version number and IP address.

kubectl exec logtail-ds-gb92k -n kube-system -- cat /usr/local/ilogtail/app_info.json

The following result is returned:

{
  "hostname" : "logtail-ds-gb92k",
  "instance_id" : "0EBB2B0E-0A3B-11E8-B0CE-0A58AC140402_172.20.4.2_1517810940",
  "ip" : "192.0.2.0",
  "logtail_version" : "0.16.2",
  "os" : "Linux; 3.10.0-693.2.2.el7.x86_64; #1 SMP Tue Sep 12 22:26:13 UTC 2017; x86_64",
  "update_time" : "2021-02-05 06:09:01"
}
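To collect the Logtail version and IP address across pods, for example to find pods that still run an old version, you can read app_info.json programmatically. The following Python sketch wraps the kubectl exec command shown above and extracts the logtail_version and ip fields. It assumes that kubectl is configured for the target cluster. The version comparison helper assumes purely numeric, dot-separated version strings such as those in the samples in this topic.

```python
import json
import subprocess
from typing import Optional, Tuple


def read_app_info(pod: str, namespace: str = "kube-system") -> str:
    """Fetch the content of app_info.json from a Logtail pod."""
    command = ["kubectl", "exec", pod, "-n", namespace, "--",
               "cat", "/usr/local/ilogtail/app_info.json"]
    return subprocess.run(command, capture_output=True, text=True,
                          check=True).stdout


def logtail_info(app_info_text: str) -> Tuple[str, Optional[str]]:
    """Return (logtail_version, ip) from the content of app_info.json."""
    info = json.loads(app_info_text)
    return info["logtail_version"], info.get("ip")


def version_tuple(version: str) -> Tuple[int, ...]:
    """Turn "0.16.2" into (0, 16, 2) so that versions compare numerically."""
    return tuple(int(part) for part in version.split("."))
```

For example, logtail_info(read_app_info("logtail-ds-gb92k")) returns the version and IP address shown in the sample output above.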

Upgrade and roll back Logtail

Before upgrading, back up the description files related to the Logtail component.

Important: If significant collection latency exists before the upgrade, the upgrade may cause a small amount of log loss.

kubectl get ds -n kube-system logtail-ds -o yaml > logtail-ds.yaml
kubectl get deployment -n kube-system alibaba-log-controller -o yaml > alibaba-log-controller.yaml
kubectl get crd aliyunlogconfigs.log.alibabacloud.com -o yaml > aliyunlogconfigs-crd.yaml
kubectl get cm -n kube-system alibaba-log-configuration -o yaml > alibaba-log-configuration.yaml
kubectl get aliyunlogconfigs --all-namespaces -o yaml > aliyunlogconfigs-cr.yaml

Select an upgrade method based on your cluster type. If you use an ACK cluster and the cluster belongs to the same Alibaba Cloud account as SLS, follow the instructions for ACK clusters. If you use a self-managed cluster, or if the ACK cluster and SLS belong to different Alibaba Cloud accounts, follow the instructions for self-managed clusters.

Upgrade on ACK clusters

We recommend that you use the automatic upgrade method. If you have modified parameters, such as environment variables, in the logtail-ds DaemonSet or the alibaba-log-controller deployment, use the manual upgrade method to prevent your changes from being reset.

Automatic upgrade

Important: Automatic upgrade resets the configurations that you manually modified in logtail-ds and alibaba-log-controller.

Log on to the ACK console.

On the Clusters page, find the target cluster and choose in the Actions column.

On the Logs and Monitoring tab, find logtail-ds and click Upgrade.

In the Upgrade Component dialog box, click OK.

Important: If you cannot upgrade to the latest version of Logtail, your Kubernetes cluster version may be too old. Upgrade your Kubernetes cluster first or use the manual upgrade method.

After you perform the upgrade, view the status of the logtail-ds pods in the ACK console. If all logtail-ds pods are in the Running state, the upgrade is successful.
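You can also verify the pod status from the command line instead of the ACK console. The following Python sketch interprets the output of kubectl get po -n kube-system in the default column layout (NAME READY STATUS RESTARTS AGE). It treats the upgrade as healthy only if at least one logtail-ds pod is listed and every such pod is Running with all containers ready. Parsing the column layout is an assumption of this sketch. For scripts that must be robust, kubectl's JSON output is a sturdier input.

```python
def logtail_ds_pods_ready(get_pods_output: str) -> bool:
    """Check `kubectl get po -n kube-system` output for healthy logtail-ds pods.

    Returns False if no logtail-ds pod is listed.
    """
    pods = []
    for line in get_pods_output.splitlines():
        fields = line.split()
        # Skip the header line and pods that do not belong to logtail-ds.
        if len(fields) >= 3 and fields[0].startswith("logtail-ds-"):
            ready, _, total = fields[1].partition("/")
            pods.append(fields[2] == "Running" and ready == total)
    return bool(pods) and all(pods)
```

The sample output in "Check the Logtail status, version, and IP address" (two logtail-ds pods, both 1/1 Running) passes this check.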

Manual upgrade

Important: Manual upgrade does not update your configuration based on the latest Logtail component, so some feature optimizations may not be available.

Manual upgrade covers two components: logtail-ds and alibaba-log-controller. Typically, you only need to upgrade logtail-ds to obtain the collection capabilities of the new Logtail version. Upgrade alibaba-log-controller as well only if you need the collection capabilities of the new Logtail CRD-based method. The following steps use logtail-ds as an example.

Log on to the ACK console.

On the Clusters page, find the target cluster and choose in the Actions column.

Choose .

Note: To upgrade alibaba-log-controller, choose . Then, in the kube-system namespace, find alibaba-log-controller and complete the upgrade.

Set Namespace to kube-system, and then click Edit in the row of logtail-ds.

Check whether the following environment variables exist.

If the ALIYUN_LOGTAIL_CONFIG, ALIYUN_LOGTAIL_USER_ID, and ALIYUN_LOGTAIL_USER_DEFINED_ID environment variables do not exist, your Logtail version may be too old. Submit a ticket to ask about the upgrade method.

Click Select Image Tag next to Image Tag.

In the Image Tag dialog box, click the latest version, and then click OK.

On the right side of the page, click Update.

After you perform the upgrade, view the status of the logtail-ds pods in the ACK console. If all logtail-ds pods are in the Running state, the upgrade is successful.

Upgrade on self-managed clusters

Note: We recommend that you upgrade by installing the latest Logtail component. If you only update the image version of some components, such as logtail-ds or alibaba-log-controller, the upgrade may fail.

Reinstall the Logtail component to complete the automatic upgrade. For more information, see Install the Logtail component.

To roll back to a specific version, follow these steps.

Note: The YAML files backed up before the upgrade contain redundant information that you must delete before you use them to restore the Logtail configuration. You can use the kubectl-neat tool to do this. The fields to delete are metadata.creationTimestamp, metadata.generation, metadata.resourceVersion, metadata.uid, and status.

Determine whether to keep the new Logtail configuration after the upgrade based on your business needs.

If you do not need to keep it, delete the new Logtail configuration.

Delete the redundant information from the backup files.

cat logtail-ds.yaml | kubectl-neat > neat-logtail-ds.yaml
cat alibaba-log-controller.yaml | kubectl-neat > neat-alibaba-log-controller.yaml
cat aliyunlogconfigs-crd.yaml | kubectl-neat > neat-aliyunlogconfigs-crd.yaml
cat alibaba-log-configuration.yaml | kubectl-neat > neat-alibaba-log-configuration.yaml
cat aliyunlogconfigs-cr.yaml | kubectl-neat > neat-aliyunlogconfigs-cr.yaml

Apply the streamlined backup files to restore the Logtail configuration.

kubectl apply -f neat-logtail-ds.yaml
kubectl apply -f neat-alibaba-log-controller.yaml
kubectl apply -f neat-aliyunlogconfigs-crd.yaml
kubectl apply -f neat-alibaba-log-configuration.yaml
kubectl apply -f neat-aliyunlogconfigs-cr.yaml
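If kubectl-neat is not available, you can remove the fields listed in the note above yourself. The following Python sketch does this for manifests exported as JSON. It assumes you back up with -o json instead of -o yaml, because the Python standard library has no YAML parser. It removes only the five listed fields and is not a full replacement for kubectl-neat. It accepts both a single object and a kind: List wrapper, which is what the --all-namespaces export returns.

```python
import copy

REDUNDANT_METADATA = ("creationTimestamp", "generation", "resourceVersion", "uid")


def strip_redundant(manifest: dict) -> dict:
    """Return a copy of a manifest without the fields listed in the note.

    Handles both a single object and a List wrapper with items.
    """
    cleaned = copy.deepcopy(manifest)
    objects = cleaned.get("items", []) if cleaned.get("kind") == "List" else [cleaned]
    for obj in objects:
        metadata = obj.get("metadata", {})
        for field in REDUNDANT_METADATA:
            metadata.pop(field, None)
        obj.pop("status", None)
    return cleaned
```

For example, load logtail-ds.json with json.load, pass the result to strip_redundant, and write it back with json.dump. kubectl apply -f also accepts JSON manifests.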

Uninstall Logtail

Select an uninstallation method based on your cluster type. If you use an ACK cluster and the cluster belongs to the same Alibaba Cloud account as SLS, follow the instructions for ACK clusters. If you use a self-managed cluster, or if the ACK cluster and SLS belong to different Alibaba Cloud accounts, follow the instructions for self-managed clusters.

Uninstallation from ACK clusters

Log on to the ACK console.

On the Clusters page, find the target cluster and choose in the Actions column.

On the Logs and Monitoring tab, find logtail-ds and click Uninstall.

Follow the on-screen prompts and click OK to complete the uninstallation.

Uninstallation from self-managed clusters

How do I uninstall installed components such as logtail-ds and alibaba-log-controller?

Run kubectl delete -R -f result to uninstall installed components such as logtail-ds and alibaba-log-controller.

This command recursively deletes all resources in the result directory. Use it with caution if the directory contains other resources.

Cluster FAQ

How do I use one SLS Project for multiple Kubernetes clusters?

To collect container logs from multiple clusters into the same SLS project, install the SLS components in the other clusters with the same parameters that you used for the first cluster. The exception is ClusterID, which must be different for each cluster.

How do I view Logtail logs?

Logtail logs are stored in the /usr/local/ilogtail/ directory within the Logtail container. The file names are ilogtail.LOG and logtail_plugin.LOG.
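When you troubleshoot, it can help to filter ilogtail.LOG down to non-INFO entries. The following Python sketch parses the "[time] [LEVEL] [thread] [source:line] message" layout seen in the runtime log sample later in this FAQ. Its assumptions: the layout was inferred only from those INFO sample lines, other level names and the format of logtail_plugin.LOG may differ, and lines that do not match are skipped. You can first copy the file out of the pod, for example with kubectl cp.

```python
import re
from typing import Iterable, Iterator, Optional

# Layout inferred from the ilogtail.LOG sample: [time] [LEVEL] [thread] [source] message
LOG_LINE = re.compile(
    r"^\[(?P<time>[^\]]+)\] \[(?P<level>[A-Z]+)\] \[(?P<thread>\d+)\] "
    r"\[(?P<source>[^\]]+)\] (?P<message>.*)$"
)


def parse_line(line: str) -> Optional[dict]:
    """Split one log line into its fields, or return None if it does not match."""
    match = LOG_LINE.match(line)
    return match.groupdict() if match else None


def non_info(lines: Iterable[str]) -> Iterator[dict]:
    """Yield parsed records whose level is anything other than INFO."""
    for line in lines:
        record = parse_line(line)
        if record and record["level"] != "INFO":
            yield record
```

For example, open a local copy of ilogtail.LOG and iterate over non_info(file) to print only warnings and errors.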

The standard output of the Logtail container is not useful for troubleshooting. You can ignore standard output such as the following.

start umount useless mount points, /shm$|/merged$|/mqueue$
umount: /logtail_host/var/lib/docker/overlay2/3fd0043af174cb0273c3c7869500fbe2bdb95d13b1e110172ef57fe840c82155/merged: must be superuser to unmount
umount: /logtail_host/var/lib/docker/overlay2/d5b10aa19399992755de1f85d25009528daa749c1bf8c16edff44beab6e69718/merged: must be superuser to unmount
umount: /logtail_host/var/lib/docker/overlay2/5c3125daddacedec29df72ad0c52fac800cd56c6e880dc4e8a640b1e16c22dbe/merged: must be superuser to unmount
......
xargs: umount: exited with status 255; aborting
umount done
start logtail
ilogtail is running
logtail status:
ilogtail is running

How do I check the status of SLS components in a Kubernetes cluster?

Run the following commands to check.

kubectl get deploy alibaba-log-controller -n kube-system
kubectl get ds logtail-ds -n kube-system

What do I do if the alibaba-log-controller fails to start?

Confirm that you have followed these installation instructions.

Run the installation command on the master node of the Kubernetes cluster.

Enter your cluster ID as a parameter in the installation command.

If the installation fails because of these issues, run the kubectl delete -f deploy command to delete the generated installation template and then run the installation command again.

How do I check the status of the logtail-ds DaemonSet in a Kubernetes cluster?

Run the kubectl get ds -n kube-system command to check the status of the logtail-ds DaemonSet.

The namespace where the Logtail container is located is kube-system by default.

How do I view the Logtail runtime logs?

Logtail runtime logs are saved in the /usr/local/ilogtail/ directory. The file name is ilogtail.LOG. Rotated files are compressed and stored as ilogtail.LOG.x.gz. For example, run the following command to view the logs.

kubectl exec logtail-ds-gb92k -n kube-system -- tail /usr/local/ilogtail/ilogtail.LOG

The result is as follows:

[2018-02-05 06:09:02.168693] [INFO] [9] [build/release64/sls/ilogtail/LogtailPlugin.cpp:104] logtail plugin Resume:start
[2018-02-05 06:09:02.168807] [INFO] [9] [build/release64/sls/ilogtail/LogtailPlugin.cpp:106] logtail plugin Resume:success
[2018-02-05 06:09:02.168822] [INFO] [9] [build/release64/sls/ilogtail/EventDispatcher.cpp:369] start add existed check point events, size:0
[2018-02-05 06:09:02.168827] [INFO] [9] [build/release64/sls/ilogtail/EventDispatcher.cpp:511] add existed check point events, size:0 cache size:0 event size:0 success count:0

How do I restart Logtail in a specific pod?

Stop Logtail.

In the following command, logtail-ds-gb92k is the pod name and kube-system is the namespace. Replace them as needed.

kubectl exec logtail-ds-gb92k -n kube-system -- /etc/init.d/ilogtaild stop

The following result indicates that Logtail is stopped.

kill process Name: ilogtail pid: 7
kill process Name: ilogtail pid: 9
stop success

Start Logtail.

In the following command, logtail-ds-gb92k is the pod name and kube-system is the namespace. Replace them as needed.

kubectl exec logtail-ds-gb92k -n kube-system -- /etc/init.d/ilogtaild start

The following result indicates that Logtail is started.

ilogtail is running
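The stop and start steps above can be combined into one helper. The following Python sketch builds the same kubectl exec commands and reports whether the start output contains ilogtail is running. It assumes that kubectl is configured for the cluster. Allowing status as an action is also an assumption: that action is documented in this topic only for hosts, and it is expected to behave the same inside the pod.

```python
import subprocess
from typing import List

ACTIONS = ("start", "stop", "status")


def ilogtaild_command(pod: str, action: str,
                      namespace: str = "kube-system") -> List[str]:
    """Build the kubectl exec command that runs ilogtaild in a Logtail pod."""
    if action not in ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    return ["kubectl", "exec", pod, "-n", namespace, "--",
            "/etc/init.d/ilogtaild", action]


def restart_logtail(pod: str, namespace: str = "kube-system") -> bool:
    """Stop and then start Logtail; True if the start output reports running."""
    subprocess.run(ilogtaild_command(pod, "stop", namespace), check=True)
    started = subprocess.run(ilogtaild_command(pod, "start", namespace),
                             capture_output=True, text=True, check=True)
    return "ilogtail is running" in started.stdout
```

For example, restart_logtail("logtail-ds-gb92k") runs the two commands from this FAQ in order. It raises subprocess.CalledProcessError if either kubectl call fails.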