You can use Data Transmission Service (DTS) to migrate incremental data between PostgreSQL databases. The source or destination database can be a self-managed PostgreSQL database or an ApsaraDB RDS for PostgreSQL instance. DTS supports schema migration, full data migration, and incremental data migration. When you configure a data migration task, you can select all of the supported migration types to ensure service continuity. This topic describes how to migrate incremental data from a self-managed PostgreSQL database to an ApsaraDB RDS for PostgreSQL instance.

Prerequisites

The self-managed PostgreSQL database is of a version from 10.1 to 13.0.

An ApsaraDB RDS for PostgreSQL instance is created. For more information, see Create an ApsaraDB RDS for PostgreSQL instance.

Note: To ensure compatibility, make sure that the database version of the ApsaraDB RDS for PostgreSQL instance is the same as the version of the self-managed PostgreSQL database.

The available storage space of the ApsaraDB RDS for PostgreSQL instance is larger than the total size of the data in the self-managed PostgreSQL database.
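The version and storage prerequisites above can be checked from a psql session on the self-managed source database. The following is a minimal sketch that uses only standard PostgreSQL commands and functions; compare the reported size with the available storage shown for the RDS instance.

```sql
-- Report the server version; it should fall within 10.1 to 13.0.
SHOW server_version;

-- Size of the database that the current session is connected to.
SELECT pg_size_pretty(pg_database_size(current_database())) AS database_size;
```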

Usage notes

DTS uses read and write resources of the source and destination databases during full data migration. This may increase the loads of the database servers. If the database performance is unfavorable, the specification is low, or the data volume is large, database services may become unavailable. For example, DTS occupies a large amount of read and write resources in the following cases: a large number of slow SQL queries are performed on the source database, the tables have no primary keys, or a deadlock occurs in the destination database. Before you migrate data, evaluate the impact of data migration on the performance of the source and destination databases. We recommend that you migrate data during off-peak hours. For example, you can migrate data when the CPU utilization of the source and destination databases is less than 30%.

The tables to be migrated in the source database must have PRIMARY KEY or UNIQUE constraints and all fields must be unique. Otherwise, the destination database may contain duplicate data records.
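One way to find tables that do not meet this requirement is to query the system catalogs. The following sketch lists ordinary tables in user schemas that have neither a PRIMARY KEY nor a UNIQUE constraint. It is an illustration, not a check that DTS itself performs, and it does not cover partitioned parent tables.

```sql
-- Ordinary tables (relkind = 'r') without a PRIMARY KEY ('p') or UNIQUE ('u') constraint.
SELECT n.nspname AS schema_name,
       c.relname AS table_name
FROM pg_class c
JOIN pg_namespace n ON n.oid = c.relnamespace
WHERE c.relkind = 'r'
  AND n.nspname NOT IN ('pg_catalog', 'information_schema')
  AND NOT EXISTS (
        SELECT 1
        FROM pg_constraint con
        WHERE con.conrelid = c.oid
          AND con.contype IN ('p', 'u')
      )
ORDER BY schema_name, table_name;
```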

If you select a schema as the object to be migrated and create a table in the schema or execute the RENAME statement to rename the table during incremental data migration, you must execute the ALTER TABLE schema.table REPLICA IDENTITY FULL; statement before you write data to the table.

Note: Replace the schema and table variables in the preceding statement with your schema name and table name.

To ensure that the latency of incremental data migration is accurate, DTS adds a heartbeat table to the source database. The name of the heartbeat table is dts_postgres_heartbeat.
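As an illustration of the REPLICA IDENTITY FULL requirement described above, the following sketch applies the statement to a table and then verifies the result. The schema name public and the table name orders are hypothetical placeholders.

```sql
-- Hypothetical names: schema "public", table "orders".
ALTER TABLE public.orders REPLICA IDENTITY FULL;

-- Verify the setting. relreplident is 'f' for FULL, 'd' for DEFAULT,
-- 'n' for NOTHING, and 'i' for USING INDEX.
SELECT relname, relreplident
FROM pg_class
WHERE oid = 'public.orders'::regclass;
```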

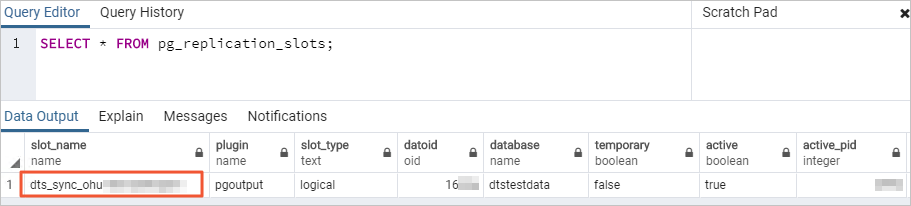

During incremental data migration, DTS creates a replication slot for the source database. The replication slot is prefixed with dts_sync_. DTS automatically clears historical replication slots every 90 minutes to reduce storage usage.

Note: If the data migration task is released or fails, DTS automatically clears the replication slot. If a primary/secondary switchover is performed on the source self-managed PostgreSQL database, you must log on to the secondary database to clear the replication slot.
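If you need to inspect or manually clear these replication slots, the standard pg_replication_slots view and the pg_drop_replication_slot function can be used. The following is a sketch only. It assumes that every slot whose name starts with dts_sync_ belongs to DTS, and it drops only slots that are not in use.

```sql
-- List replication slots whose names start with dts_sync_.
-- The backslashes escape the underscores, which are wildcards in LIKE.
SELECT slot_name, plugin, slot_type, active, restart_lsn
FROM pg_replication_slots
WHERE slot_name LIKE 'dts\_sync\_%';

-- Drop the matching slots that are inactive.
-- pg_drop_replication_slot raises an error for a slot that is still active.
SELECT pg_drop_replication_slot(slot_name)
FROM pg_replication_slots
WHERE slot_name LIKE 'dts\_sync\_%'
  AND NOT active;
```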

To ensure that the data migration task runs as expected, you can perform primary/secondary switchover only on an ApsaraDB RDS for PostgreSQL 11 instance. In this case, you must set the rds_failover_slot_mode parameter to sync. For more information, see Logical Replication Slot Failover.

Warning: If you perform a primary/secondary switchover on a self-managed PostgreSQL database or an ApsaraDB RDS for PostgreSQL instance of a version other than 11, the data migration task stops.

If a data migration task fails, DTS automatically resumes the task. Before you switch your workloads to the destination instance, stop or release the data migration task. Otherwise, the data in the source database overwrites the data in the destination instance after the task is resumed.

If the source database has long-running transactions and the task includes incremental data migration, the write-ahead log (WAL) files that are generated before the long-running transactions are committed may not be cleared. These files can pile up and exhaust the storage space of the source database.
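To watch for this condition on the source database, you can look at open transactions and at the amount of WAL that each replication slot retains. The following sketch uses standard PostgreSQL 10 and later views and functions. The limit of 10 rows and the 80-character query preview are arbitrary choices.

```sql
-- Oldest open transactions first.
SELECT pid,
       usename,
       state,
       xact_start,
       now() - xact_start AS transaction_age,
       left(query, 80)    AS query_preview
FROM pg_stat_activity
WHERE xact_start IS NOT NULL
ORDER BY xact_start
LIMIT 10;

-- WAL retained on behalf of each replication slot.
SELECT slot_name,
       active,
       pg_size_pretty(pg_wal_lsn_diff(pg_current_wal_lsn(), restart_lsn)) AS retained_wal
FROM pg_replication_slots;
```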

Limits

A data migration task can migrate data from only one database. To migrate data from multiple databases, you must create a data migration task for each database.

The name of the source database cannot contain hyphens (-). For example, dts-testdata is not allowed.

If a primary/secondary switchover is performed on the source database during incremental data migration, the transmission cannot be resumed.

Data may be inconsistent between the primary and secondary nodes of the source database due to synchronization latency. Therefore, you must use the primary node as the data source when you migrate data.

Note: We recommend that you migrate data during off-peak hours. You can modify the transfer rate of full data migration based on the read and write performance of the source database. For more information, see Modify the transfer rate of full data migration.

Incremental data migration does not support the data of the BIT type.
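The hyphen and BIT limits above can be checked before you configure the task. The following is a minimal sketch that runs against the source database. It matches only columns that information_schema reports as bit; whether related types such as bit varying are also affected is not stated here, so they are not included.

```sql
-- Databases whose names contain a hyphen.
SELECT datname
FROM pg_database
WHERE datname LIKE '%-%';

-- Columns of the BIT type in user schemas of the current database.
SELECT table_schema, table_name, column_name
FROM information_schema.columns
WHERE data_type = 'bit'
  AND table_schema NOT IN ('pg_catalog', 'information_schema')
ORDER BY table_schema, table_name, column_name;
```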

During incremental data migration, DTS migrates only DML operations including INSERT, DELETE, and UPDATE.

Note: Only data migration tasks that are created after October 1, 2020 can migrate DDL operations. You must create a trigger and function in the source database to obtain the DDL information before you configure the task. For more information, see Use triggers and functions to implement incremental DDL migration for PostgreSQL databases.

After your workloads are switched to the destination database, newly written sequences do not increment from the maximum value of the sequences in the source database. Therefore, you must query the maximum value of the sequences in the source database before you switch your workloads to the destination database. Then, you must specify the queried maximum value as the starting value of the sequences in the destination database.
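The sequence adjustment described above can be sketched with the pg_sequences view (available in PostgreSQL 10 and later) and the setval function. The sequence name public.orders_id_seq and the value 123456 are hypothetical placeholders. Note that last_value is NULL for a sequence that has never been used or that the current account cannot read.

```sql
-- On the source database: current value of each sequence.
SELECT schemaname, sequencename, last_value
FROM pg_sequences
ORDER BY schemaname, sequencename;

-- On the destination database: continue from the value read above.
-- After this call, the next nextval() returns 123457.
SELECT setval('public.orders_id_seq', 123456);
```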

DTS does not check the validity of metadata such as sequences. You must manually check the validity of metadata.

Billing rules

Migration type | Task configuration fee | Internet traffic fee |

Schema migration and full data migration | Free of charge. | Charged only when data is migrated from Alibaba Cloud over the Internet. For more information, see Billing overview. |

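Incremental data migration | Charged. For more information, see Billing overview. | Charged only when data is migrated from Alibaba Cloud over the Internet. For more information, see Billing overview. |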

Permissions required for database accounts

| Database | Schema migration | Full data migration | Incremental data migration |

| Self-managed PostgreSQL database | USAGE permission on pg_catalog | SELECT permission on the objects to be migrated | superuser |

| ApsaraDB RDS for PostgreSQL instance | CREATE and USAGE permissions on the objects to be migrated | Permissions of the schema owner | Permissions of the schema owner |

For more information about how to create a database account and grant permissions to the database account, see the following topics:

Self-managed PostgreSQL database: CREATE USER and GRANT

ApsaraDB RDS for PostgreSQL instance: Create an account.
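For the self-managed source database, the permissions in the table above could be granted as follows. This is a sketch under stated assumptions: the account name dts_migrator, the password, and the public schema are hypothetical, and the SELECT grant covers only tables that already exist in that schema.

```sql
-- Hypothetical account name and password. Replace both.
CREATE USER dts_migrator WITH PASSWORD 'change-me';

-- Schema migration: USAGE permission on pg_catalog.
GRANT USAGE ON SCHEMA pg_catalog TO dts_migrator;

-- Full data migration: SELECT permission on the objects to be migrated
-- (here, all existing tables in the hypothetical "public" schema).
GRANT USAGE ON SCHEMA public TO dts_migrator;
GRANT SELECT ON ALL TABLES IN SCHEMA public TO dts_migrator;

-- Incremental data migration: superuser.
ALTER USER dts_migrator WITH SUPERUSER;
```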

Data migration process

The following table describes how DTS migrates the schemas and data of the source PostgreSQL database. The process prevents data migration failures that are caused by dependencies between objects.

For more information about schema migration, full data migration, and incremental data migration, see Terms.

Step | Description |

1. Schema migration | DTS migrates the schemas of tables, views, sequences, functions, user-defined types, rules, domains, operators, and aggregates to the destination database. Note: DTS does not migrate plug-ins. In addition, DTS does not migrate functions that are written in the C programming language. |

2. Full data migration | DTS migrates all historical data of objects to the destination database. |

3. Schema migration | DTS migrates the schemas of triggers and foreign keys to the destination database. |

4. Incremental data migration | DTS migrates incremental data of objects to the destination database. Incremental data migration ensures service continuity of self-managed applications. |

Preparations

Log on to the server on which the self-managed PostgreSQL database resides.

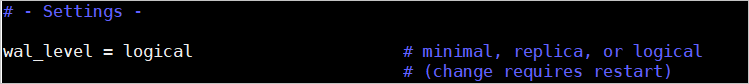

Set the value of the wal_level parameter in the postgresql.conf configuration file to logical.

Note: Skip this step if you do not need to perform incremental data migration.
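The wal_level setting can also be checked and changed from a superuser SQL session. The following sketch uses ALTER SYSTEM, which is an alternative to editing postgresql.conf by hand: it writes the value to postgresql.auto.conf, which takes precedence over postgresql.conf. In either case, a change to wal_level takes effect only after the PostgreSQL server is restarted.

```sql
-- Check the current value.
SHOW wal_level;

-- Alternative to editing postgresql.conf: persist the setting with ALTER SYSTEM.
-- Requires superuser permissions and a server restart to take effect.
ALTER SYSTEM SET wal_level = 'logical';

-- After the restart, confirm that the value is logical.
SHOW wal_level;
```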

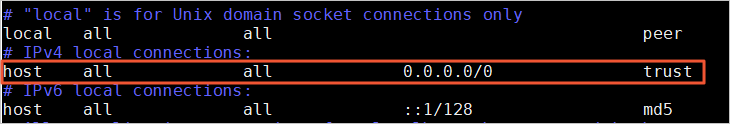

Add the CIDR blocks of DTS servers to the pg_hba.conf configuration file of the self-managed PostgreSQL database. Add only the CIDR blocks of the DTS servers that reside in the same region as the destination database. For more information, see Add the CIDR blocks of DTS servers.

Note: For more information, see The pg_hba.conf File. Skip this step if you have set the IP address in the pg_hba.conf file to 0.0.0.0/0.
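After you edit pg_hba.conf, you can review the rules and apply them without restarting the server. The following sketch uses the pg_hba_file_rules view (available in PostgreSQL 10 and later), which reflects the file as it currently exists on disk, and the pg_reload_conf function. Both require superuser permissions by default.

```sql
-- Review the rules in pg_hba.conf. A non-null "error" column marks a line
-- that could not be parsed.
SELECT line_number, type, database, user_name, address, netmask, auth_method, error
FROM pg_hba_file_rules
ORDER BY line_number;

-- Reload the configuration files so that the new rules take effect.
SELECT pg_reload_conf();
```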

Optional: Create a trigger and function in the source database to obtain the DDL information. For more information, see Use triggers and functions to implement incremental DDL migration for PostgreSQL databases.

Note: Skip this step if you do not need to migrate DDL operations.

Procedure

Log on to the DTS console.

Note: If you are redirected to the Data Management (DMS) console, you can click the corresponding icon in that console to go to the previous version of the DTS console.

In the left-side navigation pane, click Data Migration.

At the top of the Migration Tasks page, select the region where the destination instance resides.

In the upper-right corner of the page, click Create Migration Task.

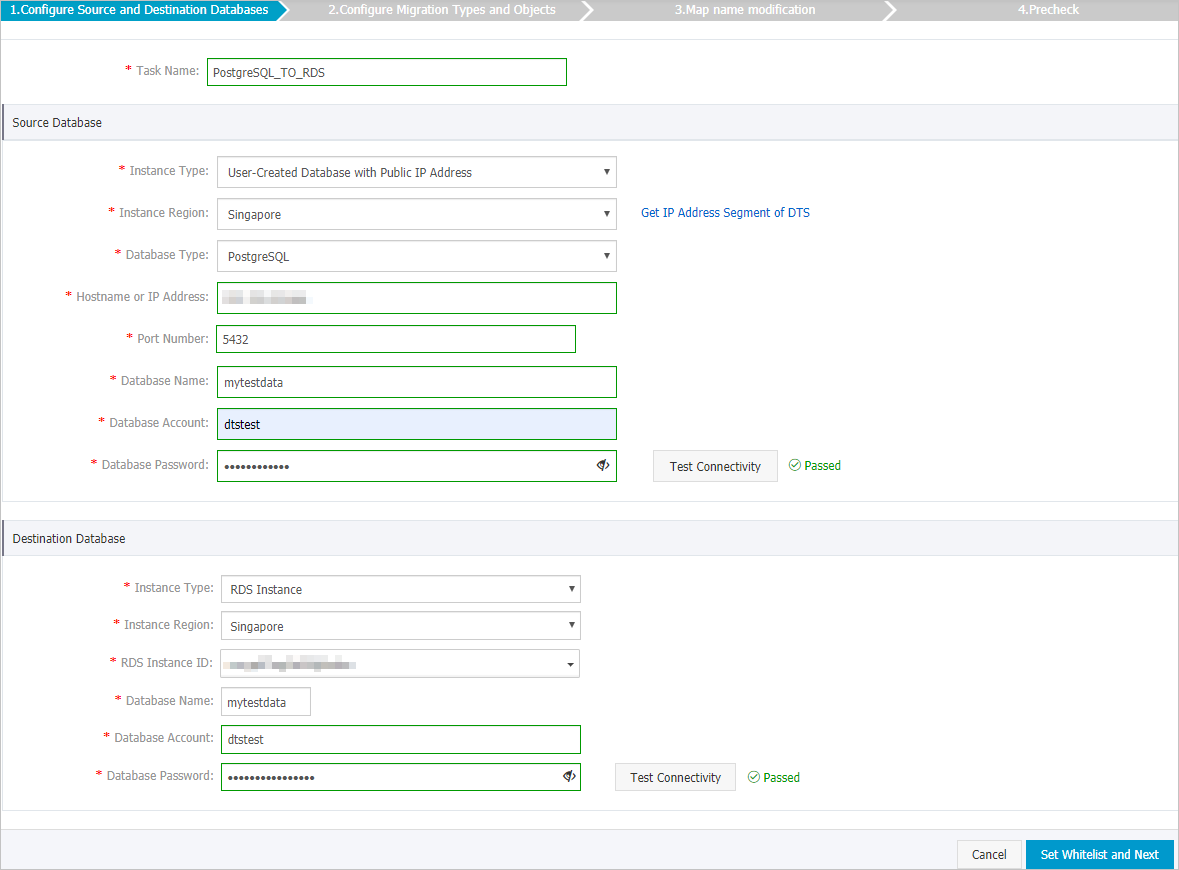

Configure the source and destination databases.

| Section | Parameter | Description |

| N/A | Task Name | The task name that DTS automatically generates. We recommend that you specify a descriptive name that makes it easy to identify the task. You do not need to specify a unique task name. |

| Source Database | Instance Type | The access method of the source database. In this example, User-Created Database with Public IP Address is selected. Note: If the source self-managed database is of another type, you must set up the environment that is required for the database. For more information, see Preparation overview. |

| Source Database | Instance Region | If you select User-Created Database with Public IP Address as the instance type, you do not need to set the Instance Region parameter. |

| Source Database | Database Type | The type of the source database. Select PostgreSQL. |

| Source Database | Hostname or IP Address | The endpoint that is used to connect to the self-managed PostgreSQL database. In this example, enter the public IP address. |

| Source Database | Port Number | The service port number of the self-managed PostgreSQL database. The port must be accessible over the Internet. |

| Source Database | Database Name | The name of the self-managed PostgreSQL database. |

| Source Database | Database Account | The account that is used to log on to the self-managed PostgreSQL database. For information about the permissions that are required for the account, see Permissions required for database accounts. |

| Source Database | Database Password | The password of the database account. Note: After you specify the information about the source database, you can click Test Connectivity next to Database Password to check whether the information is valid. If the information is valid, the Passed message appears. If the Failed message appears, click Check next to Failed. Then, modify the information based on the check results. |

| Destination Database | Instance Type | The type of the destination database. Select RDS Instance. |

| Destination Database | Instance Region | The region in which the destination ApsaraDB RDS for PostgreSQL instance resides. |

| Destination Database | RDS Instance ID | The ID of the destination ApsaraDB RDS for PostgreSQL instance. |

| Destination Database | Database Name | The name of the destination database in the ApsaraDB RDS for PostgreSQL instance. The name can be different from the name of the source database. Note: The destination database must exist in the ApsaraDB RDS for PostgreSQL instance. If the destination database does not exist, create a database. For more information, see Create a database. |

| Destination Database | Database Account | The database account of the destination ApsaraDB RDS for PostgreSQL instance. For information about the permissions that are required for the account, see Permissions required for database accounts. |

| Destination Database | Database Password | The password of the database account. Note: After you specify the information about the RDS instance, you can click Test Connectivity next to Database Password to check whether the information is valid. If the information is valid, the Passed message appears. If the Failed message appears, click Check next to Failed. Then, modify the information based on the check results. |

In the lower-right corner of the page, click Set Whitelist and Next.

Warning: If the CIDR blocks of DTS servers are automatically or manually added to the whitelist of the database or instance, or to the ECS security group rules, security risks may arise. Therefore, before you use DTS to migrate data, you must understand and acknowledge the potential risks and take preventive measures, including but not limited to the following measures: enhance the security of your username and password, limit the ports that are exposed, authenticate API calls, regularly check the whitelist or ECS security group rules and forbid unauthorized CIDR blocks, or connect the database to DTS by using Express Connect, VPN Gateway, or Smart Access Gateway.

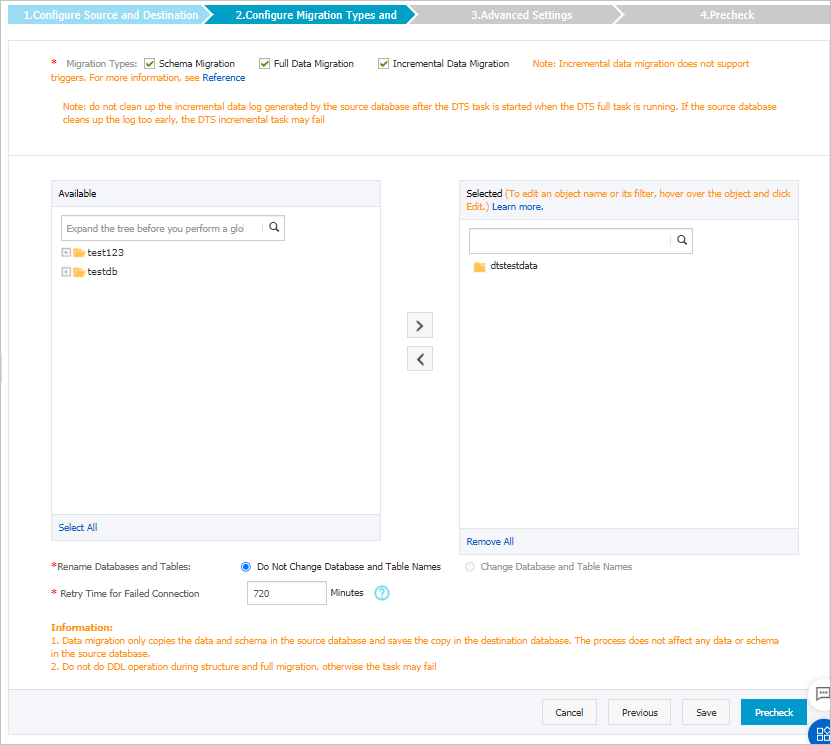

Select the migration types and the objects that you want to migrate.

| Setting | Description |

| Select migration types | To perform only full data migration, select Schema Migration and Full Data Migration. To ensure service continuity during data migration, select Schema Migration, Full Data Migration, and Incremental Data Migration. In this example, select the three migration types. Note: If Incremental Data Migration is not selected, do not write data to the source database during full data migration. This ensures data consistency between the source and destination databases. |

| Select the objects that you want to migrate | Select one or more objects from the Source Objects section and click the icon to move the objects to the Selected Objects section. Note: You can select columns, tables, or schemas as the objects to be migrated. By default, the name of an object remains the same as that in the self-managed PostgreSQL database after the object is migrated to the RDS instance. You can use the object name mapping feature to rename the objects that are migrated to the destination RDS instance. For more information, see Object name mapping. If you use the object name mapping feature to rename an object, other objects that are dependent on the object may fail to be migrated. |

| Specify whether to rename objects | You can use the object name mapping feature to rename the objects that are migrated to the destination database. For more information, see Object name mapping. |

| Specify the retry time range for failed connections to the source or destination database | By default, if DTS fails to connect to the source or destination database, DTS retries within the following 12 hours. You can specify the retry time range based on your business requirements. If DTS is reconnected to the source and destination databases within the specified time range, DTS resumes the data migration task. Otherwise, the data migration task fails. Note: Within the time range in which DTS attempts to reconnect to the source and destination databases, you are charged for the DTS instance. We recommend that you specify the retry time range based on your business requirements. You can also release the DTS instance at the earliest opportunity after the source and destination databases are released. |

In the lower-right corner of the page, click Precheck.

Note: Before you can start the data migration task, DTS performs a precheck. You can start the data migration task only after the task passes the precheck.

If the task fails to pass the precheck, you can click the icon next to each failed item to view details. You can troubleshoot the issues based on the causes and run a precheck again.

If you do not need to troubleshoot the issues, you can ignore failed items and run a precheck again.

After the task passes the precheck, click Next.

In the Confirm Settings dialog box, specify the Channel Specification parameter and select Data Transmission Service (Pay-As-You-Go) Service Terms.

Click Buy and Start to start the data migration task.

Stop the data migration task

We recommend that you prepare a rollback solution to migrate incremental data from the destination database to the source database in real time. This allows you to minimize the negative impact of switching your workloads to the destination database. For more information, see Switch workloads to the destination database. If you do not need to switch your workloads, you can perform the following steps to stop the data migration task.

- Full data migration

Do not manually stop a task during full data migration. Otherwise, the system may fail to migrate all data. Wait until the migration task automatically ends.

- Incremental data migration

The task does not automatically end during incremental data migration. You must manually stop the migration task.

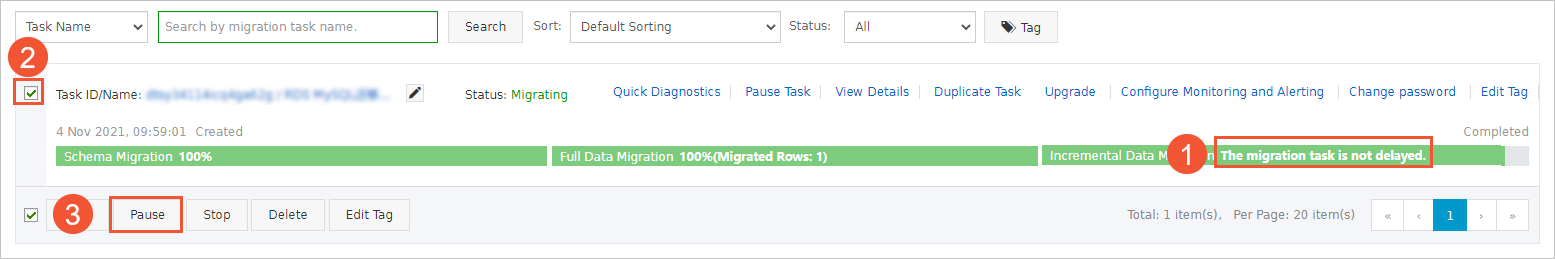

- Wait until the task progress bar shows Incremental Data Migration and The migration task is not delayed. Then, stop writing data to the source database for a few minutes. In some cases, the progress bar shows the delay time of incremental data migration.

- After the status of incremental data migration changes to The migration task is not delayed, manually stop the migration task.