Tair (Redis OSS-compatible) is a high-performance key-value database service that you can use to store large volumes of important data in various scenarios. This topic describes the disaster recovery solutions provided by Tair (Redis OSS-compatible).

Evolution of disaster recovery solutions

Instances may fail because of device failures, power failures in data centers, or similar events. Disaster recovery solutions help maintain data consistency and service availability when such failures occur.

Figure 1. Evolution of disaster recovery solutions

Disaster recovery solution | Protection level | Description |

★★★☆☆ | The master and replica nodes are deployed on different machines in the same zone. If the master node fails, the high availability (HA) system performs a failover to prevent service interruption caused by a single point of failure (SPOF). | |

★★★★☆ | The master and replica nodes are deployed in two different zones of the same region. If the zone in which the master node resides is disconnected due to factors such as a power or network failure, the HA system performs a failover to ensure continuous availability of the entire instance. | |

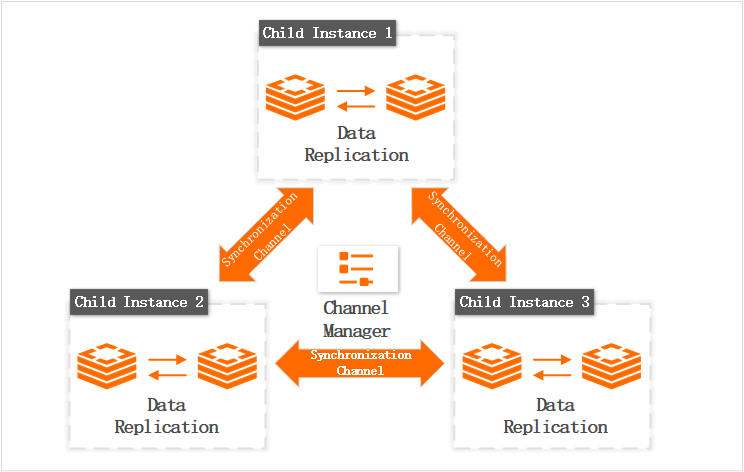

★★★★★ | In the architecture of Global Distributed Cache, a distributed instance consists of multiple child instances that synchronize data in real time by using synchronization channels. The channel manager monitors the health status of child instances and handles exceptions that occur on child instances, such as a switchover between the master and replica nodes. Global Distributed Cache is suitable for scenarios such as geo-disaster recovery, active geo-redundancy, nearby application access, and load balancing. |

Single-zone HA solution

All instances support a single-zone HA architecture. The HA system monitors the health status of the master and replica nodes and performs failovers to prevent service interruption caused by SPOFs.

Deployment architecture | Description |
Figure 2. HA architecture for a standard master-replica instance | A standard master-replica instance runs in a master-replica architecture. If the HA system detects a failure on the master node, the system switches workloads from the master node to the replica node, and the replica node takes over the role of the master node. After the original master node recovers, it serves as the replica node. |
Figure 3. HA architecture for a multi-replica cluster instance | On a multi-replica cluster instance, data is stored on data shards. Each data shard consists of a master node and multiple replica nodes that are deployed on different machines in an HA architecture. If the master node of a shard fails, the HA system performs a master-replica switchover to keep the service available. |
Figure 4. HA architecture for a read/write splitting instance | |
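The following Python sketch illustrates the failover behavior described for a standard master-replica instance: the HA system probes the master, promotes the replica after repeated failed probes, and the original master rejoins as the replica after it recovers. It is a conceptual model only, not the implementation of the Tair HA system; the `Node` and `MasterReplicaPair` classes and the three-probe failure threshold are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical data node with a health flag."""
    name: str
    healthy: bool = True


@dataclass
class MasterReplicaPair:
    """Hypothetical model of the HA behavior of a standard master-replica instance."""
    master: Node
    replica: Node
    failure_threshold: int = 3  # assumed: consecutive failed probes before failover
    failed_probes: int = field(default=0, init=False)

    def probe(self) -> bool:
        """Run one health check on the master. Return True if a failover occurred."""
        if self.master.healthy:
            self.failed_probes = 0
            return False
        self.failed_probes += 1
        if self.failed_probes >= self.failure_threshold and self.replica.healthy:
            # The replica takes over as master; the failed master is demoted to replica.
            self.master, self.replica = self.replica, self.master
            self.failed_probes = 0
            return True
        return False


# Usage: node-a fails, node-b is promoted, and node-a later recovers as the replica.
node_a, node_b = Node("node-a"), Node("node-b")
pair = MasterReplicaPair(master=node_a, replica=node_b)
node_a.healthy = False
results = [pair.probe() for _ in range(3)]
node_a.healthy = True  # after recovery, node-a keeps serving as the replica
```

Requiring several consecutive failed probes before switching is a common way to avoid failovers caused by a single dropped health check; the actual detection criteria of the HA system are not described in this topic.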

Zone-disaster recovery (multi-zone) solution

Tair (Redis OSS-compatible) provides a zone-disaster recovery solution that involves multiple zones. If your workloads are deployed in a single region and have high requirements for disaster recovery, you can select the multi-zone deployment mode that supports zone-disaster recovery when you create an instance. For more information, see Step 1: Create an instance.

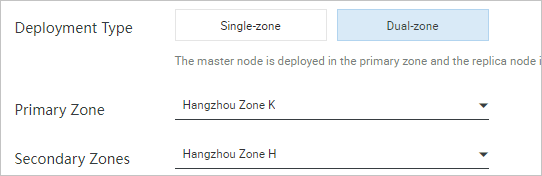

Figure 5. Create a zone-disaster recovery instance

After you create a zone-disaster recovery instance, a replica node that has the same specifications as the master node is deployed in a different zone from the master node. The master node synchronizes data to the replica node over a dedicated channel.

If a power failure or network error affects the master node, the replica node takes over the role of the master node, and the system calls an API operation on the configuration server to update the routing information of the proxy nodes.

In addition, Tair (Redis OSS-compatible) provides an optimized Redis synchronization mechanism. Similar to the global transaction identifiers (GTIDs) of MySQL, Tair (Redis OSS-compatible) uses global operation identifiers (OpIDs) to track synchronization offsets, and runs lock-free background threads to locate OpIDs. The master node asynchronously synchronizes AOF binary logs (binlogs) to the replica node. You can throttle synchronization to protect the performance of the instance.
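The following Python sketch shows why an OpID-style offset makes asynchronous synchronization easy to resume: the replica records the last OpID it applied and, in each round, pulls only later binlog entries, up to a batch limit that stands in for throttling. It is a simplified model, not the internal replication code of Tair; the classes, the integer OpIDs, and the restriction to `SET`-style writes are assumptions made for illustration.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class BinlogEntry:
    op_id: int                     # hypothetical global operation ID, increasing on the master
    command: Tuple[str, str, str]  # only ("SET", key, value) is modeled in this sketch


class Master:
    def __init__(self) -> None:
        self.data: Dict[str, str] = {}
        self.binlog: List[BinlogEntry] = []
        self._op_ids: List[int] = []  # OpIDs in binlog order, used for binary search
        self._next_op_id = 1

    def write(self, key: str, value: str) -> None:
        """Apply a write locally and append it to the binlog with a new OpID."""
        self.data[key] = value
        entry = BinlogEntry(self._next_op_id, ("SET", key, value))
        self.binlog.append(entry)
        self._op_ids.append(entry.op_id)
        self._next_op_id += 1

    def entries_after(self, op_id: int, limit: int) -> List[BinlogEntry]:
        """Return at most `limit` entries whose OpID is greater than `op_id`."""
        start = bisect_right(self._op_ids, op_id)
        return self.binlog[start:start + limit]


class Replica:
    def __init__(self) -> None:
        self.data: Dict[str, str] = {}
        self.applied_op_id = 0  # sync offset: last OpID applied on this replica

    def sync_once(self, master: Master, max_entries: int) -> int:
        """Run one sync round; `max_entries` acts as a simple throttle."""
        batch = master.entries_after(self.applied_op_id, max_entries)
        for entry in batch:
            _, key, value = entry.command
            self.data[key] = value
            self.applied_op_id = entry.op_id
        return len(batch)


master = Master()
for i in range(5):
    master.write(f"k{i}", str(i))  # writes return without waiting for the replica

replica = Replica()
applied = [replica.sync_once(master, max_entries=2) for _ in range(3)]
```

Because the offset is a single OpID, a replica that falls behind or reconnects only needs to ask for entries after that OpID; the batch limit trades replication lag for lower load on the master.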

Cross-region disaster recovery solution

As your business expands into multiple regions, cross-region and long-distance access can result in high latency and degrade the user experience. Global Distributed Cache for Tair can help you reduce the latency caused by cross-region access. Global Distributed Cache has the following benefits:

Allows you to directly create child instances or specify the child instances that must be synchronized without the need to build redundancy into your application. This significantly reduces the complexity of application design and allows you to focus on application development.

Provides the geo-replication capability to implement geo-disaster recovery or active geo-redundancy.

This feature is suitable for cross-region data synchronization scenarios and global business deployment in industries such as multimedia, gaming, and e-commerce. For more information, see Global Distributed Cache.

Figure 7. Architecture of Global Distributed Cache for Tair

How to respond to failures

Failures such as device malfunctions, data center power outages, and natural disasters fall into two categories: master node failures and zone-level failures. Although such failures are rare, they can temporarily prevent an instance from accepting writes, cause transient disconnections, or even lead to complete downtime or data loss. The reliability of an instance depends closely on its architecture, and in most cases the cluster architecture provides higher reliability. Multi-zone instances with multiple replicas automatically perform failovers when failures occur, which keeps downtime to a minimum. The following sections describe how instances that use different disaster recovery solutions respond to failures.

Respond to node failures

When the master node fails, the handling mechanisms vary based on the deployment configuration of the instance:

If the instance has multiple replica nodes in a single zone, the system automatically performs a failover when the master node fails. The system selects the replica node that has the lowest replication latency as the new master node and updates the routing information.

If the instance is deployed across multiple zones, the system automatically performs a failover when the master node fails. The system selects a replica node in another zone as the new master node and updates the routing information. However, this may result in cross-zone access between your other services and the instance.

Note: To prevent cross-zone access in a multi-zone cluster architecture, workloads are preferentially switched to a replica node in the primary zone when replica nodes exist in both the primary and secondary zones.
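The following Python sketch combines the selection rules above: prefer healthy replicas in the primary zone, and among the preferred candidates promote the one with the lowest replication latency. Applying the latency rule within the primary-zone preference is an assumption of this sketch; the `ReplicaInfo` type, the `replication_lag_ms` metric, and the `choose_new_master` function are hypothetical and do not correspond to a Tair API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ReplicaInfo:
    name: str
    zone: str
    healthy: bool
    replication_lag_ms: float  # hypothetical metric: how far the replica trails the master


def choose_new_master(replicas: List[ReplicaInfo], primary_zone: str) -> Optional[ReplicaInfo]:
    """Pick a replica to promote, or return None if no healthy replica is left."""
    candidates = [r for r in replicas if r.healthy]
    if not candidates:
        return None
    # Prefer the primary zone so that application traffic stays in the same zone.
    in_primary = [r for r in candidates if r.zone == primary_zone]
    pool = in_primary or candidates
    # Among the preferred candidates, promote the replica with the lowest lag.
    return min(pool, key=lambda r: r.replication_lag_ms)


replicas = [
    ReplicaInfo("r1", "zone-a", True, 12.0),
    ReplicaInfo("r2", "zone-a", True, 3.0),
    ReplicaInfo("r3", "zone-b", True, 1.0),
]
print(choose_new_master(replicas, primary_zone="zone-a").name)  # r2
```

Here `r3` has the lowest lag but sits in the secondary zone, so `r2` is chosen. If every replica in `zone-a` were unhealthy, for example during a zone-level failure, the function would fall back to `r3`.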

Respond to zone-level failures

When a zone-level failure such as a power outage or fire occurs, the entire data center becomes unavailable. The handling mechanisms vary based on the deployment configuration of the instance:

If the instance is deployed in a single zone, the instance remains unavailable until the zone recovers. To restore service sooner, you can create an instance in another zone from historical backup data.

If the instance is deployed across multiple zones, an automatic switchover is triggered.

For the highest reliability, you can select multiple zones and create multiple replica nodes in each zone to minimize downtime. However, you must weigh the probability of failures and the importance of your business data against the cost.
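To make this trade-off concrete, the following Python sketch uses a deliberately simple model: each zone fails independently with the same probability `p_zone`, so an instance whose data spans `zones` zones loses every copy at once with probability `p_zone ** zones`, while cost grows with the number of nodes. Real zone outages can be correlated, and the 0.001 probability below is a made-up value, so treat the output as an illustration of the trend rather than a reliability estimate. Both functions are hypothetical.

```python
def zone_level_unavailability(p_zone: float, zones: int) -> float:
    """Probability that every zone hosting the instance is down at the same time,
    assuming (simplistically) that zones fail independently with probability p_zone."""
    if not 0.0 <= p_zone <= 1.0:
        raise ValueError("p_zone must be between 0 and 1")
    if zones < 1:
        raise ValueError("an instance occupies at least one zone")
    return p_zone ** zones


def node_count(zones: int, nodes_per_zone: int) -> int:
    """Nodes to pay for in a hypothetical layout with the same node count in every zone."""
    return zones * nodes_per_zone


P_ZONE = 0.001  # made-up per-zone failure probability, for illustration only
for zones, per_zone in [(1, 2), (2, 1), (2, 2)]:
    risk = zone_level_unavailability(P_ZONE, zones)
    print(f"{zones} zone(s) x {per_zone} node(s): {node_count(zones, per_zone)} nodes, "
          f"zone-level risk ~ {risk:.0e}")
```

In this model, moving from one zone with two nodes to two zones with one node each lowers zone-level risk without adding nodes. Adding replicas in each zone raises cost but lets a master node failure be handled within the primary zone, which avoids cross-zone access.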

The preceding principles also apply to child instances of Global Distributed Cache. However, when a single child instance fails, the availability of the other child instances is not affected. To prevent data write failures caused by the failure of a single child instance, we recommend that you deploy child instances of Global Distributed Cache across multiple zones.
