Data integration is the process of combining data from different sources into a single synchronized repository. It supports both online and offline data collection, whether the data comes from different networks, locations, statistical data sets, or other data sources. A data warehouse is a typical example of data integration: it gathers large amounts of data in one place in a simple way.

Data has become an important aspect of any enterprise. However, combining data that resides in different sources and giving users a unified view of it is painful for enterprises. Say, for example, a production company wants to make a product for one of its clients. It starts its analysis, research, and data gathering from multiple points, which can be time consuming and costly. In such a situation, Alibaba Cloud Data Integration helps gather the different data points in a single synchronized repository, which saves time and reduces cost.

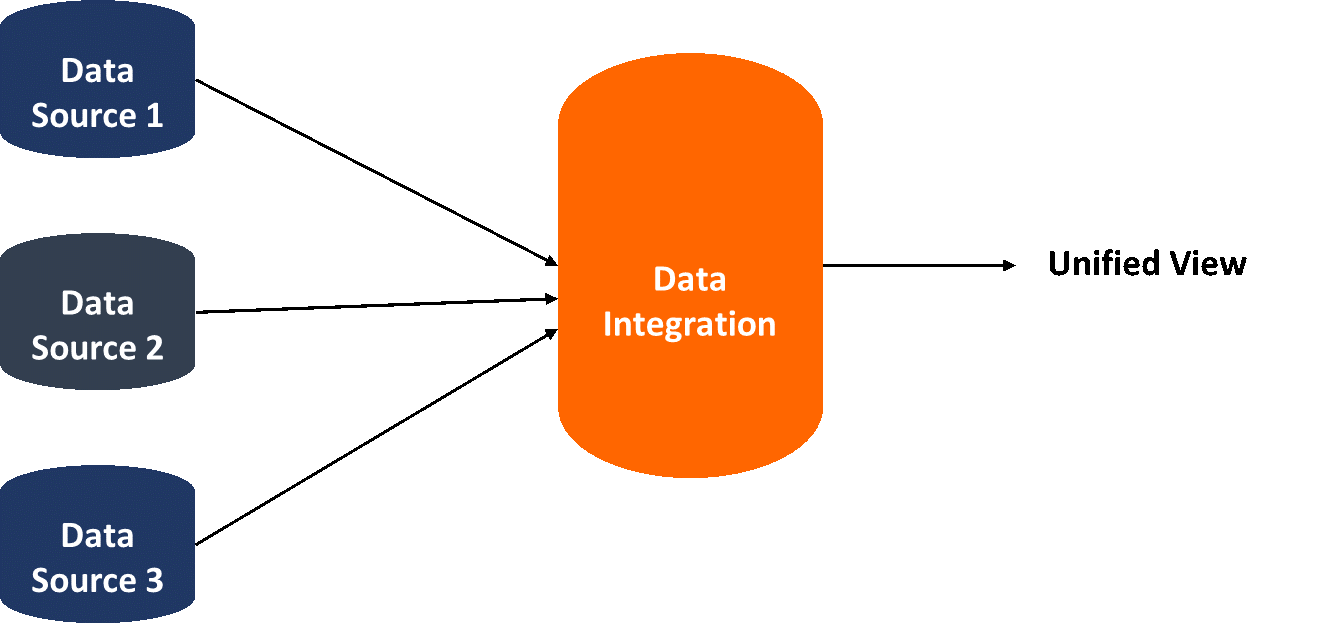

The diagram of data integration shows that data comes from different sources, and that data integration collects it and presents it in a unified view.

A typical data integration process involves client data sources, a server, and a master server. The server collects the data sent by the client and passes it on to the master server. The master server fetches data from all external and internal data sources and returns it to the server as a single synchronized repository. The server then sends this data back to the client node.

Alibaba Cloud Data Integration is an advanced offering that provides its customers with data transmission, data conversion, and synchronization of data from multiple sources into a single repository. Its features include scalability, fast data transmission, mass synchronization, and the ability to gather data from many different sources.

Alibaba Cloud Data Integration saves time. When a consumer requests data, data integration helps provide synchronized data quickly.

Data integration helps eliminate errors from the data sets that are used for business intelligence and decision making.

It allows data to be integrated from multiple sources, such as data warehouses, systems, applications, and databases.

Systems across sales, IT, government, education, and the medical field are rapidly putting data integration to use, which is driving its adoption.

With Data Integration, organizations are able to manage their customer and partner relationships, because synchronized data is available within a short time.

To learn more about the areas Data Integration covers, you can refer to Data Integration: A Data Synchronization Platform.

This tutorial describes how we can easily import data from OSS into MaxCompute on a daily basis with Data Integration.

Global businesses are facing increasing complexity and market volatility amid today's fierce competition. In response to this, all business functions are turning to data-driven strategies as a means to manage this increasing uncertainty. A data-driven approach also helps organizations better understand their customer bases and allows them to grow their businesses. Growth in digital technologies has given organizations the ability to analyze more data, even in real time. This in turn has generated more and more data to help fuel enterprises' needs.

However, with this increase, there needs to be an effective way of storing large amounts of data. Nowadays, most organizations use cloud solutions, such as Alibaba Cloud's Object Storage Service (OSS), for data storage, as a data lake, and for data backups. In some cases, an organization may put all its Internet of Things (IoT) data into files and store them in the cloud for backup, as well as for historical data analysis. So, how can we devise a solution to import data from OSS into MaxCompute on a daily basis in an easy way?

This scenario allows you to partition easily based on the data generation pattern because the data remains unchanged after being generated. Typically, you can partition by date, such as creating one partition on a daily basis.

Generate the data with the name "IOTDataSet"+"date".csv for each date and upload it to an OSS bucket. Here we have created a sample file named "IOTDataSet20180824.csv" and uploaded it to OSS. The date in your data should be in the yyyymmddhhmmss format, which specifies the scheduled time (year, month, day, hour, minute, second) of the routinely scheduled instance in Data Integration.
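The naming convention above can be sketched in a few lines of Python. This is only an illustration, not part of Data Integration itself: the helper names `daily_file_name` and `partition_value` are hypothetical, and the sketch assumes that the day portion of a yyyymmddhhmmss scheduled time is what ends up in the file name and in the daily partition.

```python
from datetime import date, datetime


def daily_file_name(day: date, prefix: str = "IOTDataSet") -> str:
    """Build the object name for one day's data, e.g. IOTDataSet20180824.csv."""
    return f"{prefix}{day.strftime('%Y%m%d')}.csv"


def partition_value(scheduled_time: str) -> str:
    """Derive a yyyymmdd partition value from a yyyymmddhhmmss scheduled time.

    Assumption: one partition per day, keyed by the date part of the timestamp.
    """
    parsed = datetime.strptime(scheduled_time, "%Y%m%d%H%M%S")
    return parsed.strftime("%Y%m%d")


print(daily_file_name(date(2018, 8, 24)))
print(partition_value("20180824013000"))
```

Keeping the file name and the partition value derived from the same date makes it straightforward to match each uploaded file to the partition it should be loaded into.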

Support online real-time & offline data exchange between all data sources, networks and locations with Alibaba Cloud Data Integration.

Big Data is the new corporate currency. If used correctly, there is immense value to be extracted. Revenues from Big Data services, software, and hardware are predicted to reach USD 187 billion in 2019, representing an increase of more than 50 percent over a five-year period.

Much of this data will pass through the cloud, with 50 percent of organizations predicted to embrace a cloud-first policy in 2018 for Big Data and analytics. Enterprises are clearly demanding more flexibility and control over costs than on-premises solutions can deliver.

As the maturity of cloud-based technologies and the surge of Big Data converge, it is impossible to ignore the competitive edge a data processing and warehousing solution that is infinitely scalable and equally elastic brings to the enterprise. The tipping point for Big Data is here.

But why are we seeing a surge in cloud demand now? One major reason is that the technologies powering the cloud have increased in sophistication, while concerns about security in the cloud have diminished.

From complex, secured APIs to robust authentication and best practices, cloud platforms are investing in a range of features and support to ensure greater security and scalability. This strategy is paying off, with the share of organizations that distrust the cloud dropping from 50 percent to 29 percent within just 12 months.

As a major cloud and big data infrastructure provider, Alibaba Cloud provides an expanding suite of cloud-based products to manage commercial big data problems, including Alibaba Cloud Data Integration, which has just recently been launched for the international market.

Data Integration is an all-in-one data synchronization platform that supports online real-time and offline data exchange between all data sources, networks, and locations. Based on an advanced distributed architecture with multiple modules (such as dirty data processing and distributed flow control), the service provides data transmission, data conversion, and synchronization services. Its features include support for multiple data sources, fast transmission, high reliability, scalability, and mass synchronization. Below we take a closer look at the features and benefits of this new product and at how your organization can add Data Integration to fulfill its Big Data processing needs.

Data Integration supports data synchronization between more than 400 pairs of disparate data sources, including RDS databases, semi-structured storage, unstructured storage (such as audio, video, and images), NoSQL databases, and big data storage. This also includes important support for real-time data reading and writing between data sources such as Oracle, MySQL, and DataHub.

Data Integration allows you to schedule offline tasks by setting a specific trigger time (including year, month, day, hour, and minute). It only requires a few steps to configure periodical incremental data extraction. Data Integration works perfectly with DataWorks data modeling. The entire workflow is an integration of operations and maintenance.

Data Integration leverages the computing capability of Hadoop clusters to synchronize the HDFS data from clusters to MaxCompute, known as Mass Cloud Upload. Data Integration can transmit up to 5TB of data per day and the maximum transmission rate is 2GB/s.
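To put the two figures above in proportion, here is a small back-of-the-envelope calculation. It is only arithmetic on the quoted numbers and assumes decimal units (1 TB = 10^12 bytes, 1 GB = 10^9 bytes), which the source does not specify.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# Assumption: decimal units, since the source does not say TB vs. TiB.
TB = 10**12
GB = 10**9
MB = 10**6

daily_volume_bytes = 5 * TB        # "up to 5TB of data per day"
peak_rate_bytes_per_s = 2 * GB     # "maximum transmission rate is 2GB/s"

# Average rate needed to move the full daily volume spread evenly over 24 hours.
average_rate_mb_per_s = daily_volume_bytes / SECONDS_PER_DAY / MB

# Time needed to move the full daily volume if the peak rate were sustained.
seconds_at_peak = daily_volume_bytes / peak_rate_bytes_per_s

print(f"Average rate over a day: {average_rate_mb_per_s:.1f} MB/s")
print(f"Time at peak rate: {seconds_at_peak / 60:.1f} minutes")
```

Under these assumptions, the daily volume corresponds to a modest average rate, while the peak rate describes how quickly a burst of data can be moved.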

With 19 built-in monitoring rules, Data Integration applies to most monitoring scenarios. You can set alarm rules based on these monitoring rules. Additionally, you can pre-define the task failure notification mode for Data Integration.

By leveraging the data sources and datasets that define the source and destination of data, Data Integration provides two data management plug-ins. The Reader plug-in is used to read data and the Writer plug-in is used to write data. Based on this framework, a set of simplified intermediate data transmission formats is developed to exchange data between arbitrary structured and semi-structured data sources.
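The Reader/Writer idea can be illustrated with a minimal sketch. This is not the Data Integration plug-in API: the classes `CsvReader` and `ListWriter`, the `Record` type, and the `synchronize` function are all hypothetical names chosen for illustration, and the intermediate format is assumed to be one dictionary per row.

```python
import csv
import io
from typing import Dict, Iterable, Iterator, List

# Hypothetical intermediate format: one dict of column name -> string value per row.
Record = Dict[str, str]


class CsvReader:
    """Hypothetical Reader: turns CSV text into intermediate records."""

    def __init__(self, text: str) -> None:
        self.text = text

    def read(self) -> Iterator[Record]:
        for row in csv.DictReader(io.StringIO(self.text)):
            yield dict(row)


class ListWriter:
    """Hypothetical Writer: stores intermediate records in an in-memory table."""

    def __init__(self) -> None:
        self.rows: List[Record] = []

    def write(self, records: Iterable[Record]) -> int:
        count = 0
        for record in records:
            self.rows.append(record)
            count += 1
        return count


def synchronize(reader: CsvReader, writer: ListWriter) -> int:
    """Move every record from the reader to the writer; return the row count."""
    return writer.write(reader.read())


source = "device_id,temperature\nd1,21.5\nd2,19.0\n"
writer = ListWriter()
written = synchronize(CsvReader(source), writer)
print(written, writer.rows)
```

Because both sides only agree on the intermediate record format, any reader can in principle be paired with any writer, which is what allows a framework of this shape to cover many source and destination combinations.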

This article offers suggestions on how to generate quality data while constructing a MaxCompute data warehouse, and thus provides guidance for practical data governance.

For enterprises, data has become an important asset; and as an asset, it certainly needs to be managed. Increases in business lead to more and more data applications. As enterprises go about creating data warehouses, their requirements for data management grow, and data quality is a vital component of data warehouse construction that cannot be overlooked.

To manage data quality and formulate quality management standards that meet the above data quality principles, several aspects must be considered:

Defining which data requires quality management can generally be done through data asset classification and metadata application link analysis. Determine the data asset level based on the degree of impact of the application. According to the data link lineage, the data asset level is pushed to all stages of data production and processing, so as to determine the asset level of the data involved in the link and the different processing methods adopted based on the different asset levels in each processing link.

When it comes to data asset levels, from a quality management perspective, the nature of impact that data has on the business can be divided into five classes, namely: destructive in nature, global in nature, local in nature, general in nature, and unknown in nature. The different levels decline in importance incrementally. Specific definitions of each are as follows:

For example, in the label level of the table, the asset level can be marked 'Asset': destructive-A1, global-A2, local-A3, general-A4, unknown-Ax. Degree of importance: A1 > A2 > A3 > A4 > Ax. If a piece of data appears in multiple application scenarios, the higher value principle is observed.
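The ordering and the higher value principle can be expressed as a short sketch. The function name `effective_asset_level` is hypothetical; the level labels and their order are taken from the text above.

```python
from typing import Iterable

# Asset levels from most to least important, as listed in the text.
ASSET_LEVELS = ["A1", "A2", "A3", "A4", "Ax"]


def effective_asset_level(levels: Iterable[str]) -> str:
    """Return the most important level among those assigned to one piece of data.

    Implements the "higher value principle": when data is used in several
    application scenarios, the highest asset level wins.
    Raises ValueError for an empty input or an unknown level label.
    """
    levels = list(levels)
    if not levels:
        raise ValueError("at least one asset level is required")
    # A smaller index in ASSET_LEVELS means a more important level.
    return min(levels, key=ASSET_LEVELS.index)


# A table used by a local (A3), a global (A2), and an unknown (Ax) application.
print(effective_asset_level(["A3", "A2", "Ax"]))
```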

This course explains what Data Integration is, along with its basic terminology, functions, and characteristics. We will also learn how to develop and configure data synchronization tasks, what the common configurations of synchronization tasks are, and what role configuration plays.

The second class of the Alibaba Cloud Big Data Quickstart series. It mainly introduces basic architecture concepts and the offline processing engine MaxCompute, as well as the online data processing platform DataWorks. The course explains and demonstrates how to use the Data Integration component of DataWorks to integrate unstructured data stored in OSS and structured data stored in Apsara RDS (MySQL) into MaxCompute.

Understand the basic data and business related to the current take-out industry, and analyze relevant industry data using the Alibaba Cloud Big Data Platform.

This course briefly explains the basics of the Alibaba Cloud big data product system and several products used in big data applications, such as MaxCompute, DataWorks, RDS, DRDS, QuickBI, TS, Analytic DB, OSS, and Data Integration.

Data Integration is a reliable, secure, cost-effective, elastic, and scalable data synchronization platform provided by Alibaba Cloud. It supports data storage across heterogeneous systems and offers offline (both full and incremental) data access channels in diverse network environments for more than 20 types of data sources. For more information, visit Supported data stores and plug-ins.

In both the preceding scenarios, you can execute a synchronization task in Data Integration. For more information about how to create and configure a synchronization task including the data source and whitelist, visit the DataWorks documentation. The following sections detail how to import data to and export data from AnalyticDB for PostgreSQL.

This topic introduces a simple Continuous Integration pipeline based on GitLab CI/CD. Although we keep it simple now, this pipeline will be extended in the next topic.

This topic is based on a simple web application written on top of Spring Boot (for the backend) and React (for the frontend).

The application consists of a to-do list where a user can add or remove items. The goal is to have a simple 3-tier architecture with enough features to allow us to explore important concepts:

Data Integration is an all-in-one data synchronization platform. The platform supports online real-time and offline data exchange between all data sources, networks, and locations.

DataWorks is a Big Data platform product launched by Alibaba Cloud. It provides one-stop Big Data development, data permission management, offline job scheduling, and other features.
