By Huolang, from Alibaba Cloud Storage Team

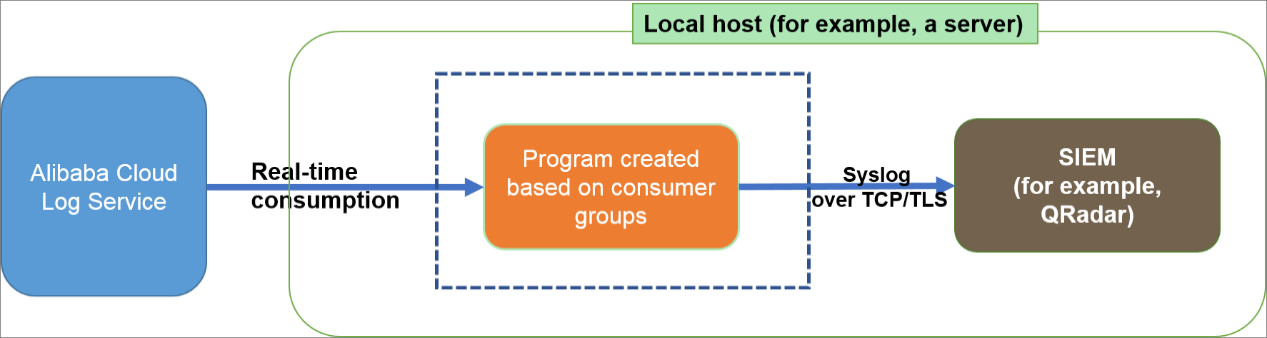

Syslog is a widely used logging standard that applies to most security information and event management (SIEM) systems, such as IBM QRadar and HP ArcSight. This article describes how to ship logs from Log Service to a SIEM system over Syslog.

• Syslog is defined in RFC 5424 and RFC 3164. RFC 3164 was published in 2001, and RFC 5424, an upgraded version, was published in 2009. We recommend that you use RFC 5424 because it is compatible with RFC 3164 and resolves more of its issues. For more information, see RFC 5424 [1] and RFC 3164 [2].

• Syslog over TCP/TLS: Syslog defines the standard format of log messages. Messages can be sent over either UDP or TCP, and TCP provides more reliable delivery. RFC 5425 defines the use of Transport Layer Security (TLS) to enable secure transport of Syslog messages. If your SIEM system supports TCP or TLS, we recommend that you send Syslog messages over TCP or TLS. For more information, see RFC 5425 [3].

• Syslog facility: the program component defined by earlier versions of UNIX. You can select user as the default facility. For more information, see Program components [4].

• Syslog severity: the severity level defined for Syslog messages. You can assign a higher severity level to logs with specific content based on your business requirements. The default value is info. For more information, see Log levels [5].
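To make the facility and severity settings concrete, the following minimal sketch shows how the two values combine into the PRI field (facility * 8 + severity) and how an RFC 5424 message line is laid out. The helper names `pri` and `rfc5424_line` and the sample message are illustrative assumptions, not part of Log Service or of any Syslog library.

```python
from datetime import datetime, timezone

# Numeric codes defined by the Syslog RFCs (subset shown).
FACILITY = {"kern": 0, "user": 1, "daemon": 3, "local0": 16}
SEVERITY = {"emerg": 0, "err": 3, "warning": 4, "info": 6, "debug": 7}


def pri(facility, severity):
    """Return the PRI value: facility * 8 + severity."""
    return FACILITY[facility] * 8 + SEVERITY[severity]


def rfc5424_line(msg, facility="user", severity="info",
                 hostname="-", app_name="-", timestamp=None):
    """Build '<PRI>1 TIMESTAMP HOSTNAME APP-NAME PROCID MSGID SD MSG'.

    PROCID, MSGID, and STRUCTURED-DATA are left as '-' (the nil value).
    """
    moment = timestamp or datetime.now(timezone.utc)
    ts = moment.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return "<{0}>1 {1} {2} {3} - - - {4}".format(
        pri(facility, severity), ts, hostname, app_name, msg)


# Hypothetical message content, for illustration only.
example = rfc5424_line(
    "user=alice||action=login",
    hostname="aliyun.example.com", app_name="tag",
    timestamp=datetime(2023, 3, 15, 0, 0, 0, tzinfo=timezone.utc))
print(example)
# <14>1 2023-03-15T00:00:00Z aliyun.example.com tag - - - user=alice||action=login
```

With the defaults recommended above (facility user, severity info), the PRI value is 1 * 8 + 6 = 14.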

We recommend that you write a program based on consumer groups in Log Service. This way, you can use the program to ship Syslog messages over TCP or TLS to the SIEM system.

This article uses a Logstore in Log Service as the data source, implements a consumer group program in Python, and delivers the logs to the SIEM platform over the Syslog protocol. The SIEM platform used in this article is IBM QRadar.

Create the project directory qradar-demo.

mkdir qradar-demo

Create index.py and copy the following code.

Most of the code is adapted from the Log Service document on shipping logs to a SIEM system over Syslog [6], with some modifications to the environment variables and comments. You can adjust the configuration by following the comments.

# -*- coding: utf-8 -*-

import os
import logging
import threading
import six
from datetime import datetime
from logging.handlers import RotatingFileHandler
from multiprocessing import current_process

from aliyun.log import PullLogResponse
from aliyun.log.consumer import LogHubConfig, CursorPosition, ConsumerProcessorBase, ConsumerWorker
from aliyun.log.ext import syslogclient
from pysyslogclient import SyslogClientRFC5424 as SyslogClient

# Configure the root logger: write to a rotating log file and to the console.
root_logger = logging.getLogger()
handler = RotatingFileHandler("{0}_{1}.log".format(os.path.basename(__file__), current_process().pid),
                              maxBytes=100 * 1024 * 1024, backupCount=5)
handler.setFormatter(logging.Formatter(
    fmt='[%(asctime)s] - [%(threadName)s] - {%(module)s:%(funcName)s:%(lineno)d} %(levelname)s - %(message)s',
    datefmt='%Y-%m-%d %H:%M:%S'))
root_logger.setLevel(logging.INFO)
root_logger.addHandler(handler)
root_logger.addHandler(logging.StreamHandler())

logger = logging.getLogger(__name__)



def get_option():
    ##########################
    # Basic options
    ##########################

    # Obtain parameters and options for Log Service from environment variables.
    endpoint = os.environ.get('SLS_ENDPOINT', '')
    accessKeyId = os.environ.get('SLS_AK_ID', '')
    accessKey = os.environ.get('SLS_AK_KEY', '')
    project = os.environ.get('SLS_PROJECT', '')
    logstore = os.environ.get('SLS_LOGSTORE', '')
    consumer_group = os.environ.get('SLS_CG', '')
    syslog_server_host = os.environ.get('SYSLOG_HOST', '')
    syslog_server_port = int(os.environ.get('SYSLOG_PORT', '514'))
    syslog_server_protocol = os.environ.get('SYSLOG_PROTOCOL', 'tcp')

    # The starting point of data consumption. The first time that you run the program, the starting point is specified by this parameter. The next time you run the program, the consumption starts from the last consumption checkpoint.
    # You can set the parameter to begin, end, or a time in the ISO 8601 standard.
    cursor_start_time = "2023-03-15 0:0:0"

    ##########################
    # Advanced options
    ##########################

    # We recommend that you do not modify the consumer name, especially when concurrent consumption is required.
    consumer_name = "{0}-{1}".format(consumer_group, current_process().pid)

    # The heartbeat interval. If the server does not receive a heartbeat for a specific shard for two consecutive intervals, the consumer is considered disconnected. In this case, the server allocates the task to another consumer.
    # If the network performance is poor, we recommend that you specify a larger interval.
    heartbeat_interval = 20

    # The maximum interval between two data consumption processes. If data is generated at a fast speed, you do not need to adjust the parameter.
    data_fetch_interval = 1

    # Create a consumer group that contains the consumer.
    option = LogHubConfig(endpoint, accessKeyId, accessKey, project, logstore, consumer_group, consumer_name,
                          cursor_position=CursorPosition.SPECIAL_TIMER_CURSOR,
                          cursor_start_time=cursor_start_time,
                          heartbeat_interval=heartbeat_interval,
                          data_fetch_interval=data_fetch_interval)

    # syslog options
    settings = {
        "host": syslog_server_host,  # Required. The host of the Syslog server.
        "port": syslog_server_port,  # Required. The port number.
        "protocol": syslog_server_protocol,  # Required. The value is TCP, UDP, or TLS (only Python3).
        "sep": "||",  # Required. The separator that is used to separate key-value pairs. In this example, the separator is two consecutive vertical bars (||).
        "cert_path": None,  # Optional. The location where the TLS certificate is stored.
        "timeout": 120,  # Optional. The timeout period. The default value is 120 seconds.
        "facility": syslogclient.FAC_USER,  # Optional. You can refer to the values of the syslogclient.FAC_* parameter in other examples.
        "severity": syslogclient.SEV_INFO,  # Optional. You can refer to the values of the syslogclient.SEV_* parameter in other examples.
        "hostname": "aliyun.example.com",  # Optional. The machine name. By default, the machine name is selected.
        "tag": "tag"  # Optional. The tag. Default value: hyphens (-).
    }

    return option, settings



class SyncData(ConsumerProcessorBase):
    """
    The consumer consumes data from Log Service and ships it to the Syslog server.
    """

    def __init__(self, target_setting):
        """Initiate the Syslog server and test network connectivity."""
        super(SyncData, self).__init__()

        assert target_setting, ValueError("You need to configure settings of remote target")
        assert isinstance(target_setting, dict), ValueError(
            "The settings should be dict to include necessary address and credentials.")

        self.option = target_setting
        self.protocol = self.option['protocol']
        self.timeout = int(self.option.get('timeout', 120))
        self.sep = self.option.get('sep', "||")
        self.host = self.option["host"]
        self.port = int(self.option.get('port', 514))
        self.cert_path = self.option.get('cert_path', None)

        # try connection
        client = SyslogClient(self.host, self.port, proto=self.protocol)

    def process(self, log_groups, check_point_tracker):
        logs = PullLogResponse.loggroups_to_flattern_list(log_groups, time_as_str=True, decode_bytes=True)
        logger.info("Get data from shard {0}, log count: {1}".format(self.shard_id, len(logs)))

        try:
            client = SyslogClient(self.host, self.port, proto=self.protocol)

            for log in logs:
                # suppose we only care about audit log
                timestamp = datetime.fromtimestamp(int(log[u'__time__']))
                del log['__time__']

                io = six.StringIO()
                # Modify the formatted content based on your business requirements. The data is transmitted by using key-value pairs that are separated with two consecutive vertical bars (||).
                for k, v in six.iteritems(log):
                    io.write("{0}{1}={2}".format(self.sep, k, v))

                data = io.getvalue()

                # Modify the facility and severity settings based on your business requirements.
                client.log(data,
                           facility=self.option.get("facility", None),
                           severity=self.option.get("severity", None),
                           timestamp=timestamp,
                           program=self.option.get("tag", None),
                           hostname=self.option.get("hostname", None))

        except Exception as err:
            # Log at error level so that the failure is visible with the INFO log level configured above.
            logger.error("Failed to connect to remote syslog server ({0}). Exception: {1}".format(self.option, err))
            # Add code to handle errors. For example, you can add the code to retry requests or report errors.
            raise

        logger.info("Complete send data to remote")

        # Save the checkpoint only after the whole batch has been sent.
        self.save_checkpoint(check_point_tracker)



def main():
    option, settings = get_option()

    logger.info("*** start to consume data...")
    worker = ConsumerWorker(SyncData, option, args=(settings,))
    worker.start(join=True)


if __name__ == '__main__':
    main()

Install dependencies in the qradar-demo directory.

cd qradar-demo

pip install aliyun-log-python-sdk -t .

pip install pysyslogclient -t .

Configure Environment Variables

export SLS_ENDPOINT=<Endpoint of your region>

export SLS_AK_ID=<YOUR AK ID>

export SLS_AK_KEY=<YOUR AK KEY>

export SLS_PROJECT=<SLS Project Name>

export SLS_LOGSTORE=<SLS Logstore Name>

export SLS_CG=<Consumer group name, such as sync_data>

export SYSLOG_HOST=<Host of the syslog server>

export SYSLOG_PORT=<Port of the syslog server, default is 514>

export SYSLOG_PROTOCOL=<syslog protocol, default is tcp>

Start the consumption program.

Because the program is based on a consumer group, you can start multiple processes directly for concurrent consumption.

cd qradar-demo

nohup python3 index.py &

nohup python3 index.py &

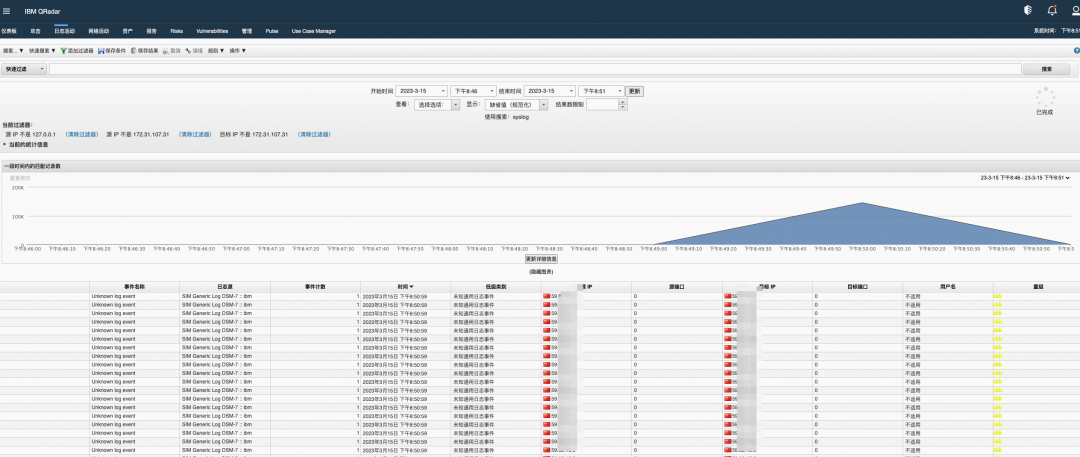

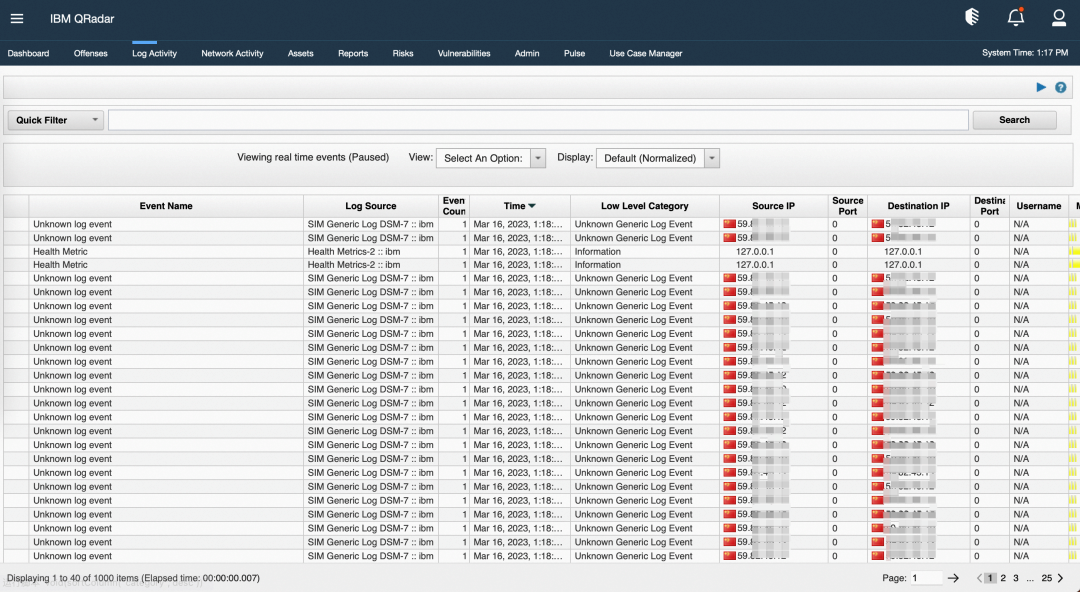

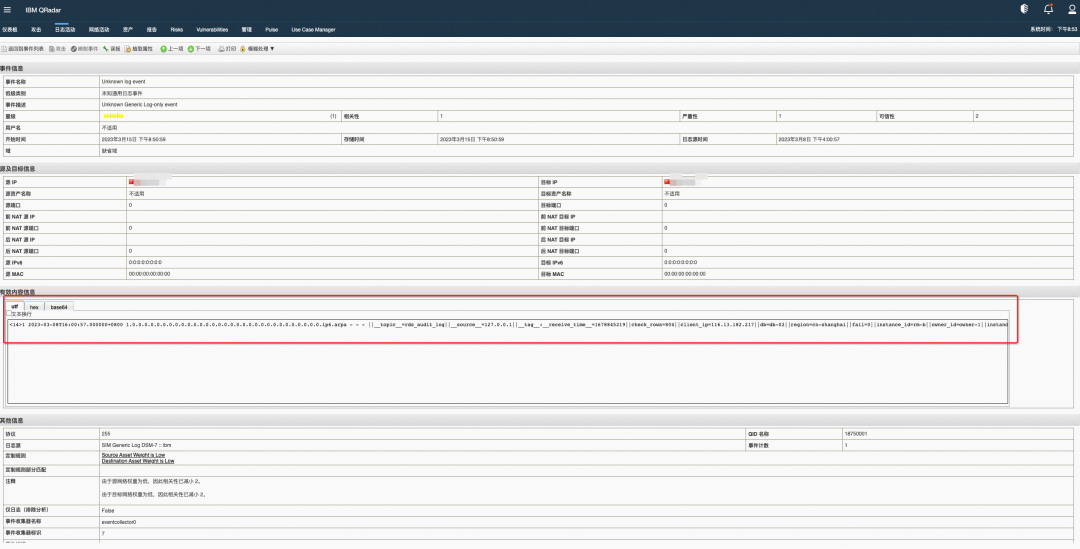

nohup python3 index.py &IBM Qradar View Results

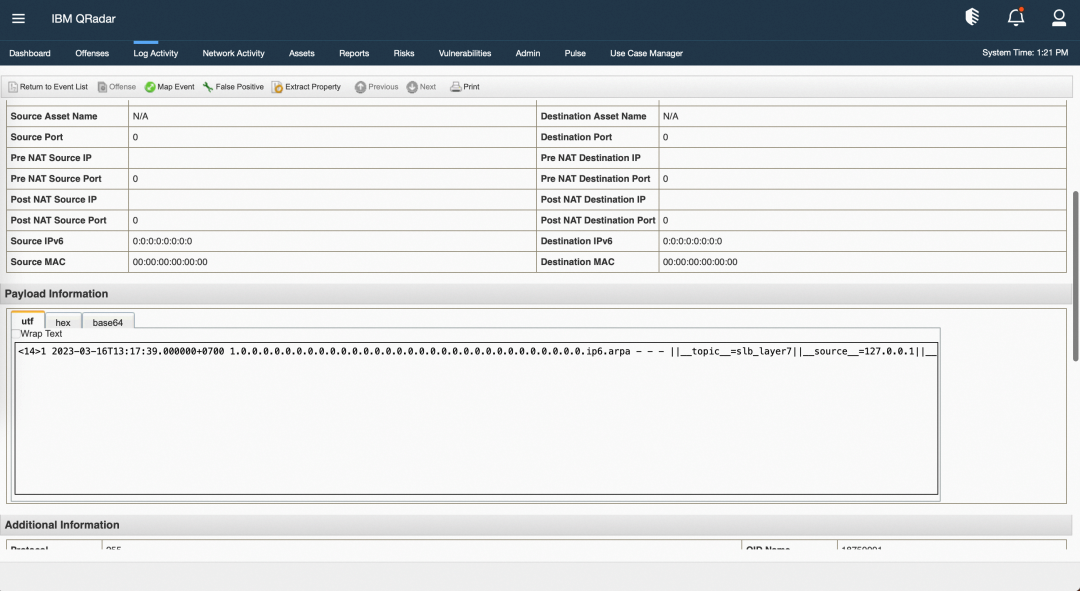

Double-click an event entry to view the details. Simulated RDS audit logs are used as an example.
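The payload of each event is the string that process() builds from the log fields: every field is written as key=value, prefixed by the || separator. The sketch below mirrors that formatting and shows one way to split a payload back into fields on the receiving side. The function names and the sample fields are hypothetical, and the parser assumes that values never contain the separator.

```python
SEP = "||"  # same separator as the "sep" setting in the program


def format_log(log, sep=SEP):
    """Mirror process(): drop __time__ and emit sep + key=value for each field."""
    fields = {k: v for k, v in log.items() if k != "__time__"}
    return "".join("{0}{1}={2}".format(sep, k, v) for k, v in fields.items())


def parse_payload(payload, sep=SEP):
    """Split a payload back into a dict; assumes values contain no separator."""
    pairs = (item.split("=", 1) for item in payload.split(sep) if "=" in item)
    return {key: value for key, value in pairs}


# Hypothetical audit-log fields, for illustration only.
sample = {"__time__": "1678838400", "db": "orders", "sql": "select 1", "user": "app"}
payload = format_log(sample)
print(payload)                 # ||db=orders||sql=select 1||user=app
print(parse_payload(payload))  # {'db': 'orders', 'sql': 'select 1', 'user': 'app'}
```

If your log values can contain ||, consider choosing a different separator in the settings or escaping the values before sending.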

Throughput was tested in the following scenario: the program in the preceding example runs on Python 3, and neither the bandwidth nor the receiving speed of the destination (for example, Splunk) is limited. In this test, a single consumer uses about 20% of one CPU core and consumes raw logs at about 10 MB/s. Ten consumers running concurrently can therefore reach about 100 MB/s in aggregate. At 10 MB/s, a single consumer can process about 0.9 TB of raw logs per day.
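As a quick check of these figures, the following sketch redoes the arithmetic. It assumes that throughput and CPU usage scale linearly with the number of consumers, which is a simplification: real throughput also depends on the number of shards, the network, and the receiver. The variable names are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60

per_consumer_mb_s = 10   # consumption speed of one consumer, from the test above
per_consumer_cpu = 0.20  # share of one CPU core used by one consumer, from the test above


def daily_volume_tb(mb_per_second):
    """Convert MB/s to TB/day using decimal units (1 TB = 1,000,000 MB)."""
    return mb_per_second * SECONDS_PER_DAY / 1000000


consumers = 10
aggregate_mb_s = consumers * per_consumer_mb_s  # combined speed under linear scaling
cores_needed = consumers * per_consumer_cpu     # CPU cores used under linear scaling

print("one consumer: %.3f TB/day" % daily_volume_tb(per_consumer_mb_s))
print("%d consumers: %d MB/s on about %.1f cores" % (consumers, aggregate_mb_s, cores_needed))
```

Under these assumptions, one consumer at 10 MB/s handles about 0.86 TB per day, which rounds to the 0.9 TB figure, and ten consumers need roughly two CPU cores.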

Resumable consumption: A consumer group stores checkpoints on the server. When one consumer stops, another consumer automatically takes over and continues from the last saved checkpoint. You can start consumers on different machines so that if a machine stops or is damaged, a consumer on another machine takes over from the last checkpoint. To keep spare consumers available for failover, you can start more consumers than shards and spread them across different machines.
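The takeover behavior can be illustrated with a small in-memory simulation. This is only a sketch of the idea: `CheckpointStore` and `consume` are hypothetical stand-ins, not the Log Service SDK, and the real checkpoints are cursors kept by the service rather than list indexes.

```python
class CheckpointStore:
    """Stand-in for the server-side checkpoint storage of a consumer group."""

    def __init__(self):
        self._positions = {}

    def load(self, shard_id):
        return self._positions.get(shard_id, 0)

    def save(self, shard_id, position):
        self._positions[shard_id] = position


def consume(shard_id, logs, store, batch_size=2, fail_after=None):
    """Consume batches starting at the saved checkpoint.

    fail_after stops the consumer after that many batches to mimic a crash.
    """
    delivered = []
    position = store.load(shard_id)
    batches = 0
    while position < len(logs):
        if fail_after is not None and batches == fail_after:
            break  # the consumer stops before finishing the shard
        batch = logs[position:position + batch_size]
        delivered.extend(batch)
        position += len(batch)
        store.save(shard_id, position)  # checkpoint only after the batch is delivered
        batches += 1
    return delivered


store = CheckpointStore()
shard_logs = ["log-%d" % i for i in range(5)]

first = consume(0, shard_logs, store, fail_after=1)  # first consumer stops early
second = consume(0, shard_logs, store)               # second consumer takes over
print(first)   # ['log-0', 'log-1']
print(second)  # ['log-2', 'log-3', 'log-4']
```

Because the checkpoint is saved only after a batch is delivered, a consumer that stops in the middle of a batch causes that batch to be sent again by its successor, so the receiver should tolerate occasional duplicates.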

Daemon process: To keep the Python program highly available, you can use a process manager such as Supervisor [7] to supervise the Python consumers. If a process crashes for any reason, it is restarted automatically.
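Process restarts cover crashes, but transient network errors can often be handled inside the program, as the error-handling comment in process() suggests. The following is a minimal retry sketch with exponential backoff. `send_with_retry` and `flaky_send` are hypothetical names, and `send` stands for any callable that delivers one payload, such as a wrapper around the Syslog client call.

```python
import time


def send_with_retry(send, payload, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call send(payload); on failure wait base_delay * 2**n seconds and retry."""
    for attempt in range(attempts):
        try:
            return send(payload)
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller or the process manager handle it
            sleep(base_delay * (2 ** attempt))


# Hypothetical sender that fails twice before succeeding, for illustration.
calls = {"count": 0}


def flaky_send(payload):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("syslog server unreachable")
    return "sent: " + payload


delays = []  # record the delays instead of actually sleeping
result = send_with_retry(flaky_send, "||k=v", sleep=delays.append)
print(result)  # sent: ||k=v
print(delays)  # [0.5, 1.0]
```

When all attempts fail, the exception propagates, the checkpoint is not saved, and the batch is consumed again after the process restarts.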

A Logstore uses shards to control read and write capacity. You may need to split or merge shards based on the actual volume of log data. The program in this article consumes logs through a consumer group [8]. When the number of shards changes, the consumer group SDK automatically detects the change and adjusts shard consumption accordingly.
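To picture what such an adjustment looks like, the toy example below redistributes shards across consumers after the shard list changes. It is only an illustration: in Log Service the allocation is decided by the service, and the round-robin rule, the function name `assign_shards`, and the shard IDs here are assumptions.

```python
def assign_shards(shard_ids, consumers):
    """Spread shards over consumers round-robin; surplus consumers stay idle."""
    assignment = {name: [] for name in consumers}
    for index, shard_id in enumerate(sorted(shard_ids)):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(shard_id)
    return assignment


consumers = ["consumer-a", "consumer-b", "consumer-c"]

before = assign_shards([0, 1], consumers)
after = assign_shards([0, 1, 2, 3], consumers)  # hypothetical shard IDs after a split

print(before)  # {'consumer-a': [0], 'consumer-b': [1], 'consumer-c': []}
print(after)   # {'consumer-a': [0, 3], 'consumer-b': [1], 'consumer-c': [2]}
```

With two shards, the third consumer is idle and acts as a standby. After the split, it picks up a shard without any change to the program.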

This article is mainly based on the Log Service document on shipping logs to a SIEM system over Syslog [9]. The program is hosted on an ECS instance, consumes data from an SLS Logstore, and delivers it to IBM QRadar over the Syslog protocol. You can use Supervisor to supervise the program or deploy it in a container environment to achieve elasticity and high availability.

[1] https://datatracker.ietf.org/doc/rfc5424/

[2] https://tools.ietf.org/html/rfc3164

[3] https://datatracker.ietf.org/doc/html/rfc5425

[4] https://datatracker.ietf.org/doc/html/rfc3164#section-4.1.1

[5] https://datatracker.ietf.org/doc/html/rfc3164#section-4.1.1

[6] https://www.alibabacloud.com/help/en/log-service/latest/ship-logs-to-a-siem-system-over-syslog

[7] http://supervisord.org/

[8] https://www.alibabacloud.com/help/en/log-service/latest/use-consumer-groups-to-consume-log-data

[9] https://www.alibabacloud.com/help/en/log-service/latest/ship-logs-to-a-siem-system-over-syslog
