By Yunhua

This article shows how to upload complex data to MaxCompute, and download it again, through the Tunnel SDK. First, we introduce the complex data types that MaxCompute supports:

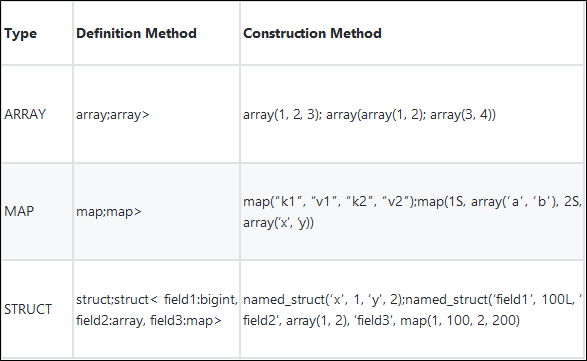

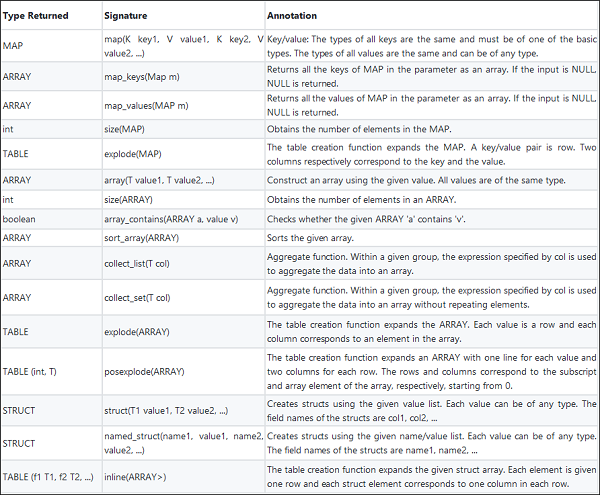

MaxCompute uses an SQL engine based on ODPS 2.0 to extend its support for complex data. It supports the ARRAY, MAP, and STRUCT types, which can be nested within one another, and provides built-in functions for them.
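To make the nesting concrete, here is a minimal sketch of how a nested value such as ARRAY<MAP<STRING, BIGINT>> could be represented on the Java side. It assumes, as the sample code later in this article does, that ARRAY corresponds to java.util.List, MAP to java.util.Map, and BIGINT to Long; the assumption that nested types are simply nested collections, and the class and method names, are illustrative only and not part of the SDK.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class NestedComplexValue {

    // Builds a value shaped like ARRAY<MAP<STRING, BIGINT>>:
    // a List whose elements are Maps from String to Long.
    static List<Map<String, Long>> buildArrayOfMaps() {
        List<Map<String, Long>> rows = new ArrayList<>();

        Map<String, Long> first = new HashMap<>();
        first.put("a", 1L);
        first.put("c", 2L);
        rows.add(first);

        Map<String, Long> second = new HashMap<>();
        second.put("b", 3L);
        rows.add(second);

        return rows;
    }

    public static void main(String[] args) {
        List<Map<String, Long>> value = buildArrayOfMaps();
        // Number of array elements
        System.out.println(value.size());
        // Nested lookup: array index first, then map key
        System.out.println(value.get(0).get("c"));
    }
}
```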

MaxCompute Tunnel is the data channel of MaxCompute. You can use it to upload data to or download data from MaxCompute.

TableTunnel is the entry class for accessing MaxCompute Tunnel. It supports uploading and downloading table data only; views are not supported.

A session is the process of uploading or downloading a table or a partition. A session is composed of one or more HTTP requests to the Tunnel RESTful APIs.

Each session is identified by a session ID. A session times out after 24 hours, so if a large transfer would take longer than 24 hours, you need to split it into multiple sessions.
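As a rough planning aid, the number of sessions a transfer needs can be estimated from its size and the throughput you expect. The sketch below is only arithmetic and does not call the SDK; the class name, the method, and the safety factor are hypothetical, and the throughput figure is something you would have to measure yourself.

```java
public class SessionPlanner {

    // Session lifetime stated in this article: 24 hours.
    static final long SESSION_LIFETIME_SECONDS = 24L * 60 * 60;

    // Estimates how many sessions are needed to move totalBytes at the given
    // throughput. safetyFactor (0 < f <= 1) is the fraction of the 24-hour
    // window that we plan to actually use.
    static long sessionsNeeded(long totalBytes, long bytesPerSecond, double safetyFactor) {
        if (totalBytes < 0 || bytesPerSecond <= 0 || safetyFactor <= 0 || safetyFactor > 1) {
            throw new IllegalArgumentException("invalid planning input");
        }
        // Usable bytes per session after applying the safety margin.
        long bytesPerSession =
            (long) ((double) bytesPerSecond * SESSION_LIFETIME_SECONDS * safetyFactor);
        if (bytesPerSession <= 0) {
            throw new IllegalArgumentException("throughput too low to plan with");
        }
        // Ceiling division; at least one session even for an empty transfer.
        return Math.max(1L, (totalBytes + bytesPerSession - 1) / bytesPerSession);
    }

    public static void main(String[] args) {
        // 1 TB at 10 MB/s, using at most half of each session's lifetime.
        System.out.println(sessionsNeeded(1_000_000_000_000L, 10_000_000L, 0.5)); // 3
    }
}
```

Planning with a safety factor below 1 leaves headroom for retries and throughput dips, so a session is less likely to expire in the middle of a transfer.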

The TableTunnel.UploadSession and TableTunnel.DownloadSession classes handle data upload and download, respectively.

TableTunnel provides methods to create UploadSession and DownloadSession objects.

The upload process is as follows:

1) Create TableTunnel

2) Create UploadSession

3) Create RecordWriter and write the record

4) Submit the upload operation

The download process is as follows:

1) Create TableTunnel

2) Create DownloadSession

3) Create RecordReader and read the record

Sample code for uploading complex data:

    // the table schema is {"col0": ARRAY<BIGINT>, "col1": MAP<STRING, BIGINT>, "col2": STRUCT<name:STRING,age:BIGINT>}
    TableSchema schema = uploadSession.getSchema();
    RecordWriter recordWriter = uploadSession.openRecordWriter(0);
    ArrayRecord record = (ArrayRecord) uploadSession.newRecord();

    // prepare data; BIGINT values are passed as Long, not Integer
    List<Long> arrayData = Arrays.asList(1L, 2L, 3L);
    Map<String, Long> mapData = new HashMap<String, Long>();
    mapData.put("a", 1L);
    mapData.put("c", 2L);
    List<Object> structData = new ArrayList<Object>();
    structData.add("Lily");
    structData.add(18L);

    // set data to record
    record.setArray(0, arrayData);
    record.setMap(1, mapData);
    record.setStruct(2, new SimpleStruct((StructTypeInfo) schema.getColumn(2).getTypeInfo(),
        structData));

    // write the record
    recordWriter.write(record);
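Source data often arrives as Integer values, while the typed getters used in this article (for example `getArray(Long.class, 0)`) suggest that BIGINT elements are handled as Long. Under that assumption, a small helper can widen numeric values to Long before they are passed to `setArray`. The class and method names below are hypothetical and not part of the SDK.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class BigintValues {

    // Converts any numeric values (Integer, Short, Long, ...) to Long,
    // preserving order and null elements.
    static List<Long> toLongList(List<? extends Number> values) {
        List<Long> result = new ArrayList<>(values.size());
        for (Number n : values) {
            result.add(n == null ? null : Long.valueOf(n.longValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        List<Integer> raw = Arrays.asList(1, 2, 3);
        List<Long> arrayData = toLongList(raw);
        System.out.println(arrayData);                        // [1, 2, 3]
        System.out.println(arrayData.get(0) instanceof Long); // true
    }
}
```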

Sample code for downloading complex data:

    // open record reader; read one record here as an example
    RecordReader recordReader = downloadSession.openRecordReader(0, 1);

    // read the record
    ArrayRecord record1 = (ArrayRecord) recordReader.read();

    // get array field data
    List field0 = record1.getArray(0);
    List<Long> longField0 = record1.getArray(Long.class, 0);

    // get map field data
    Map field1 = record1.getMap(1);
    Map<String, Long> typedField1 = record1.getMap(String.class, Long.class, 1);

    // get struct field data
    Struct field2 = record1.getStruct(2);
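The sample above reads a single record with `openRecordReader(0, 1)`. Reading the two arguments as a start offset and a record count (an interpretation based on the sample, not confirmed here), a larger table could be read in batches by computing one (start, count) window per reader. The sketch below only computes the windows; the class name is hypothetical, and the total record count is assumed to be known, for example from the download session.

```java
import java.util.ArrayList;
import java.util.List;

public class ReadWindows {

    // Returns {start, count} pairs that cover [0, totalRecords) in order,
    // each window holding at most batchSize records.
    static List<long[]> windows(long totalRecords, long batchSize) {
        if (totalRecords < 0 || batchSize <= 0) {
            throw new IllegalArgumentException("invalid window input");
        }
        List<long[]> result = new ArrayList<>();
        for (long start = 0; start < totalRecords; start += batchSize) {
            long count = Math.min(batchSize, totalRecords - start);
            result.add(new long[] {start, count});
        }
        return result;
    }

    public static void main(String[] args) {
        // 10 records in batches of 4 gives windows (0,4), (4,4), (8,2).
        for (long[] w : windows(10, 4)) {
            System.out.println("start=" + w[0] + " count=" + w[1]);
        }
    }
}
```

Each window would then be passed to its own reader, which also makes it straightforward to retry a single failed batch.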

The complete code is as follows:

    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.Arrays;
    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;

    import com.aliyun.odps.Odps;
    import com.aliyun.odps.PartitionSpec;
    import com.aliyun.odps.TableSchema;
    import com.aliyun.odps.account.Account;
    import com.aliyun.odps.account.AliyunAccount;
    import com.aliyun.odps.data.ArrayRecord;
    import com.aliyun.odps.data.RecordReader;
    import com.aliyun.odps.data.RecordWriter;
    import com.aliyun.odps.data.SimpleStruct;
    import com.aliyun.odps.data.Struct;
    import com.aliyun.odps.tunnel.TableTunnel;
    import com.aliyun.odps.tunnel.TableTunnel.DownloadSession;
    import com.aliyun.odps.tunnel.TableTunnel.UploadSession;
    import com.aliyun.odps.tunnel.TunnelException;
    import com.aliyun.odps.type.StructTypeInfo;

    public class TunnelComplexTypeSample {

      private static String accessId = "<your access id>";
      private static String accessKey = "<your access Key>";
      private static String odpsUrl = "<your odps endpoint>";
      private static String project = "<your project>";
      private static String table = "<your table name>";

      // partitions of a partitioned table, e.g. "pt='1',ds='2'"
      // if the table is not partitioned, this is not needed
      private static String partition = "<your partition spec>";

      public static void main(String[] args) {
        Account account = new AliyunAccount(accessId, accessKey);
        Odps odps = new Odps(account);
        odps.setEndpoint(odpsUrl);
        odps.setDefaultProject(project);

        try {
          TableTunnel tunnel = new TableTunnel(odps);
          PartitionSpec partitionSpec = new PartitionSpec(partition);

          // ---------- Upload Data ---------------
          // create upload session for table
          // the table schema is {"col0": ARRAY<BIGINT>, "col1": MAP<STRING, BIGINT>, "col2": STRUCT<name:STRING,age:BIGINT>}
          UploadSession uploadSession = tunnel.createUploadSession(project, table, partitionSpec);

          // get table schema
          TableSchema schema = uploadSession.getSchema();

          // open record writer for block 0
          RecordWriter recordWriter = uploadSession.openRecordWriter(0);
          ArrayRecord record = (ArrayRecord) uploadSession.newRecord();

          // prepare data; BIGINT values are passed as Long, not Integer
          List<Long> arrayData = Arrays.asList(1L, 2L, 3L);
          Map<String, Long> mapData = new HashMap<String, Long>();
          mapData.put("a", 1L);
          mapData.put("c", 2L);
          List<Object> structData = new ArrayList<Object>();
          structData.add("Lily");
          structData.add(18L);

          // set data to record
          record.setArray(0, arrayData);
          record.setMap(1, mapData);
          record.setStruct(2, new SimpleStruct((StructTypeInfo) schema.getColumn(2).getTypeInfo(),
              structData));

          // write the record
          recordWriter.write(record);

          // close writer
          recordWriter.close();

          // commit the upload session with the written block IDs; this completes the upload
          uploadSession.commit(new Long[]{0L});
          System.out.println("upload success!");

          // ---------- Download Data ---------------
          // create download session for table
          // the table schema is {"col0": ARRAY<BIGINT>, "col1": MAP<STRING, BIGINT>, "col2": STRUCT<name:STRING,age:BIGINT>}
          DownloadSession downloadSession = tunnel.createDownloadSession(project, table, partitionSpec);
          schema = downloadSession.getSchema();

          // open record reader; read one record here as an example
          RecordReader recordReader = downloadSession.openRecordReader(0, 1);

          // read the record
          ArrayRecord record1 = (ArrayRecord) recordReader.read();

          // get array field data
          List field0 = record1.getArray(0);
          List<Long> longField0 = record1.getArray(Long.class, 0);

          // get map field data
          Map field1 = record1.getMap(1);
          Map<String, Long> typedField1 = record1.getMap(String.class, Long.class, 1);

          // get struct field data
          Struct field2 = record1.getStruct(2);

          // close reader
          recordReader.close();
          System.out.println("download success!");
        } catch (TunnelException e) {
          e.printStackTrace();
        } catch (IOException e) {
          e.printStackTrace();
        }
      }
    }
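The complete example writes a single block with ID 0 and commits it with `new Long[]{0L}`. If an upload were split across several writers, and assuming each writer is opened with its own block ID and the commit call receives every ID that was written, the array passed to `commit` could be built as in the sketch below. The class and method names are hypothetical and not part of the SDK.

```java
import java.util.Arrays;

public class BlockIds {

    // Returns the block IDs 0, 1, ..., blockCount - 1 as a Long[],
    // the array type that the commit call in this article takes.
    static Long[] sequentialBlockIds(int blockCount) {
        if (blockCount <= 0) {
            throw new IllegalArgumentException("blockCount must be positive");
        }
        Long[] ids = new Long[blockCount];
        for (int i = 0; i < blockCount; i++) {
            ids[i] = (long) i;
        }
        return ids;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(sequentialBlockIds(3))); // [0, 1, 2]
    }
}
```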
