A: Currently, Tunnel does not support automatic data upload and allocation to each partition. Data can only be uploaded to one table or one partition of the table each time. When uploading data to a partition table, you must specify the target partition. If multiple levels of partitions exist, you must specify the partitions to the last hierarchy. For more information about the Java SDK, see Java SDK.

A: You must create partitions first and specify the target partition when uploading data through the SDK. You can also upload data to a table in MaxCompute and run SQL statements to dynamically create partitions.

A: DataWorks does not support the Upload statement provided by the MaxCompute Tunnel command line tool.

A: A block is defined as follows: A block corresponds to an HTTP request. Data of multiple blocks can be uploaded in parallel and atomically. No matter whether a synchronization request succeeds or fails, one block does not affect other blocks. The number of retries for a failed block is limited. When the number of retries reaches the limit, the current block is ignored and the next block of data is uploaded. After the upload is completed, you can run the select count(*) statement to check whether any data is lost.

A: The tunnel upload command does not have a speed limit for data upload. The upload speed is restricted by the network bandwidth and server performance. To improve the performance, you can distribute data to different partitions or tables and upload data to and download data from multiple ECS instances.

/odpscmd/bin/odpscmd

tunnel upload "$FILE" project.tableA: Refer to the Client to set the startup parameters for CLT. The startup command in the shell script is as follows:

/odpscmd/bin/odpscmd -e "tunnel upload "$FILE" project.table"A: If the data includes a carriage return or a space, you can replace the carriage return or space with other delimiters. Specify the column and row delimiters by using -rd and -fd, respectively, to upload the data. If you cannot replace the delimiters in the data, you can upload the data as a single row and use UDFs to parse it.

For example, the following data includes a carriage return. You can use the comma (,) as the column delimiter (rd) and the at sign (@) as the row delimiter (fd). Then, the data can be properly uploaded.

Data content:

shopx,x_id,100@

shopy,y_id,200@

shopz,z_i

d,300@Upload command:

odps@ MaxCompute_DOC>tunnel u d:\data.txt sale_detail/sale_date=201312,region=hangzhou -s false -fd "," -rd "@";Upload result:

+-----------+-------------+-------------+-----------+--------+

| shop_name | customer_id | total_price | sale_date | region |

+-----------+-------------+-------------+-----------+--------+

| shopx | x_id | 100.0 | 201312 | hangzhou |

| shopy | y_id | 200.0 | 201312 | hangzhou |

| shopz | z_id

d | 300.0 | 201312 | hangzhou |

+-----------+-------------+-------------+-----------+--------+A: If the data in the description field already includes a comma (,), you can use another symbol as the delimiter and specify it using -fd. An example is as follows:

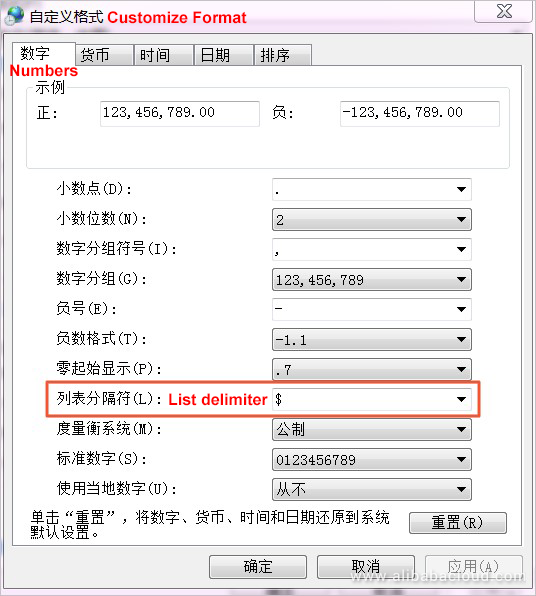

A user wants to run the tunnel upload command in Windows to upload an EXCEL file that describes the job requirements. The table content includes the comma (,). To upload the data, first set the default delimiter for converting the EXCEL file to a CSV file: In the Windows 7 operating system, open Control Panel and click Clock, Language and Region. In the displayed window, click Change the date, time or number formats. On the Formats tab page, click Additional settings. In this example, the original data does not include the dollar sign ($). Set the delimiter to the dollar sign ($), as shown in the following figure.

After the settings, save the EXCEL file as a CSV file and use a text editing tool such as Notepad++ to transcode the file to UTF-8 encoding (default encoding format of Tunnel). Check whether the file delimiter has been changed to the dollar sign ($).

Title$Location$Salary$Company$Company introduction link$Company type$Company size$Industry$Work experience$Education background$Enrollment$Release time$Tag$Job information$Work address$Company information$Website$Collection timeRun the tunnel upload command to upload the data and specify the delimiter. (You must first create a table in MaxCompute.) The following information indicates that the data has been successfully uploaded:

odps@ MaxCompute_DOC>tunnel u d:\12JD.CSV JD2 -s false -fd "$";

Upload session: 201808201531180d47df0b0b18fa45

Start upload:d:\12JD.CSV

Using \r\n to split records

Upload in strict schema mode: true

Total bytes:111028 Split input to 1 blocks

2018-08-20 15:31:18 upload block: '1'

2018-08-20 15:31:18 upload block complete, blockid=1

upload complete, average speed is 108.4 KB/s

OKA: You can use the -fd parameter to specify another delimiter in the CSV file.

If the data includes multiple symbols that may conflict with the delimiter, you can customize the delimiter in the data to prevent the conflict, for example, #@#@@ or )#@#@@$.

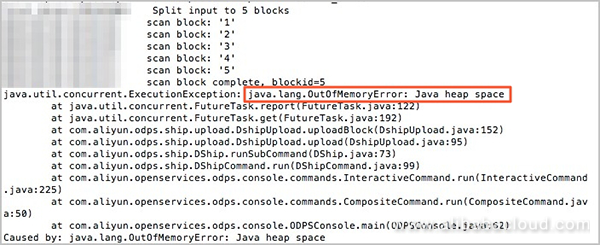

A: The error message indicates that when the data is uploaded, a memory overflow error has occurred. Currently, you can run the tunnel upload command to upload large amounts of data. If an OOM error occurs, the row and column delimiters of data may be incorrect. As a result, the entire text will be considered as one piece of data for buffering in the memory and splitting.

If this is the case, test a small amount of data with -td and -fd, and then upload the full amount of data.

A: The tunnel upload command can be used to upload a file or a level-1 folder.

For example, to upload the folder d:data, run the following command:

odps@ MaxCompute_DOC>tunnel u d:\data sale_detail/sale_date=201312,region=hangzhou -s false;For more information, see Tunnel commands.

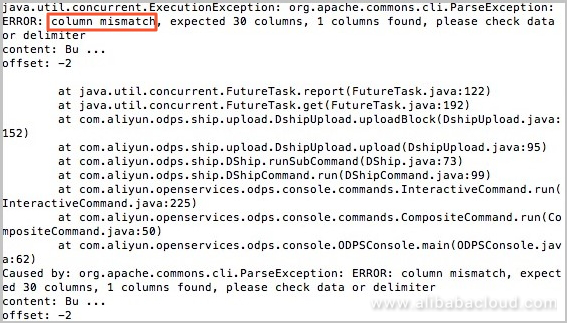

A: Set -dbr=false -s true in the upload command to verify the data format. This error is returned because the number of columns is mismatched. A possible reason is that the column delimiter is incorrect or a blank row that cannot be separated by the delimiter exists at the bottom of the file.

D:\odps\bin\odpscmd.bat -e"tunnel upload d:\data1.txt sale_detail/sale_data=201312 -fd="$" -mbr=5 --scan=true; "

D:\odps\bin\odpscmd.bat -e"tunnel upload d:\data2.txt sale_detail/sale_data=201312 -fd="$" -mbr=5 --scan=true; "A: You have used CLT of an earlier version. In this case, when the tunnel upload command is run and the command includes the --scan parameter, the parameter may fail to be properly transferred in the relay mode. You can delete --scan=true from the command and try again.

Unrecognized option: -acp

FAILED: error occurred while running tunnel commandA: This error occurs when an unsupported command or character is used. The tunnel upload command of MaxCompute does not support wildcards and regular expressions.

A: This error occurs because of data format issues; for example, the data type is mismatched. Set the -dbr parameter to true to ignore dirty data (such as excessive columns, insufficient columns, and mismatched column data type). For more information, see Tunnel commands. The default value of the -dbr parameter is false, indicating that the dirty data will be handled. When the value is true, all data not conforming to the table definition is ignored.

java.io.IOException: RequestId=XXXXXXXXXXXXXXXXXXXXXXXXX, ErrorCode=StatusConflict, ErrorMessage=You cannot complete the specified operation under the current upload or download status.

at com.aliyun.odps.tunnel.io.TunnelRecordWriter.close(TunnelRecordWriter.java:93)

at com.xgoods.utils.aliyun.maxcompute.OdpsTunnel.upload(OdpsTunnel.java:92)

at com.xgoods.utils.aliyun.maxcompute.OdpsTunnel.upload(OdpsTunnel.java:45)

at com.xeshop.task.SaleStatFeedTask.doWork(SaleStatFeedTask.java:119)

at com.xgoods.main.AbstractTool.excute(AbstractTool.java:90)

at com.xeshop.task.SaleStatFeedTask.main(SaleStatFeedTask.java:305)A: This error message indicates that an upload or download task is running. The same operation cannot be performed again.

RequestId=20170116xxxxxxx, ErrorCode=StatusConflict, ErrorMessage=You cannot complete the specified operation under the current upload or download status. java.io.IOException: RequestId=20170116xxxxxxx, ErrorCode=StatusConflict, ErrorMessage=You cannot complete the specified operation under the current upload or download status.

at com.aliyun.odps.tunnel.io.TunnelRecordWriter.close(TunnelRecordWriter.java:93)A: This error message indicates that the error occurs when the writer is to be closed. The possible reasons are as follows:

You can diagnose the problem based on the preceding possible reasons, for example, printing the current status of the writer and session.

A: The JAR package size cannot exceed 10 MB. If the size is larger than 10 MB, we recommend that you run the tunnel upload command to upload data to MaxCompute.

A: The tunnel upload command does not have a limit for the size of data to be uploaded.

A: We recommend that you set the -h parameter to true to skip the table header.

A: If you use the Tunnel SDK to upload data to MaxCompute, a maximum of 60,000 partitions are supported.

If too many partitions are specified, the performance of statistics and analysis will be affected. MaxCompute limits the maximum number of instances in a job, which is closely related to the amount of data you upload and the number of partitions. We recommend that you evaluate your business and select the appropriate partition strategy to prevent the impact of excessive partitions.

For more information about partition tables, see Partition.

In addition, MaxCompute supports batch data submission with the Tunnel Python SDK. For more information, see Data upload/download configuration in Python SDK.

ErrorCode=StatusConflict, ErrorMessage=You cannot complete the specified operation under the current upload or download status.A: This error message indicates that the session status is incorrect. We recommend that you create a session and upload the data again. A possible reason is that the session has been closed or committed in the previous operation. Each partition requires a separate session.

To prevent this error caused by multiple commit operations, check whether the data has been successfully uploaded. If data upload failed, upload the data again. For more information, see Example of multi-thread uploading.

A: MaxCompute Java SDK 0.21.3-public and later versions support the BufferedWriter SDK, which simplifies the data upload process and provides a fault tolerance function.

BufferedWriter hides the block from users. From the users' perspective, a writer is enabled on the session for data writing. During implementation, BufferedWriter stores the data in the buffer of the client and enables an HTTP connection for data upload after the buffer is filled.

BufferedWriter provides a fault tolerance function to ensure successful data upload.

A: A possible reason is that the data encoding format or the delimiter is incorrect so that the data uploaded to the table is incorrect. We recommend that you standardize the original data and upload the file again.

A: You can write a shell script to run the tunnel upload command. Use /odpscmd/bin/odpscmd -e to run the script and paste the table configuration in the script.

A: Reasons for generation of small files:

Apsara Distributed File System of MaxCompute stores data by block. A file whose size is smaller than the block size (64 MB by default) is called a small file.

Currently, the following small files may be generated in MaxCompute:

Impact of excessive small files:

Command for checking the number of small files in a table:

desc extended + Table nameMethods for handling small files:

The method for handling small files varies depending on the generation causes:

Run INSERT OVERWRITE on the source table (or partition) or write data to the new table and delete the source table.

Run ALTER TABLE tablename [PARTITION] and MERGE SMALLFILES to merge small files:set odps.merge.cross.paths=true;

set odps.merge.max.partition.count=100; --Ten partitions are optimized by default. In this example, 100 partitions are optimized.

ALTER TABLE tablename [PARTITION] MERGE SMALLFILES;We recommend that you set a lifecycle for all temporary tables so that expired temporary tables are automatically moved to the recycle bin.

A: The tunnel upload command does not support regular expressions. To use the space as the column delimiter or filter data through regular expressions, use MaxCompute UDFs.

Upload the data as a single column. The following is the original data in this example. The space and carriage return are used as the column and row delimiters, respectively. The data to be extracted is enclosed in quotation marks. Certain data, such as hyphens "-", needs to be filtered out. You can use regular expressions to complete this complex task.

10.21.17.2 [24/Jul/2018:00:00:00 +0800] - "GET https://help.aliyun.com/document_detail/73477.html" 200 0 81615 81615 "-" "iphone" - HIT - - 0_0_0 001 - - - -

10.17.5.23 [24/Jul/2018:00:00:00 +0800] - "GET https://help.aliyun.com/document_detail/73478.html" 206 0 49369 49369 "-" "huawei" - HIT - - 0_0_0 002 - - - -

10.24.7.16 [24/Jul/2018:00:00:00 +0800] - "GET https://help.aliyun.com/document_detail/73479.html" 206 0 83821 83821 "-" "vivo" - HIT - - 0_0_0 003 - - - -To upload the data as a single column, first run the following command to create a single-column table in the project of MaxCompute for receiving data:

odps@ bigdata_DOC>create table userlog1(data string);Upload the data with the column delimiter "u0000", which does not exist in the data, to keep the column integrity:

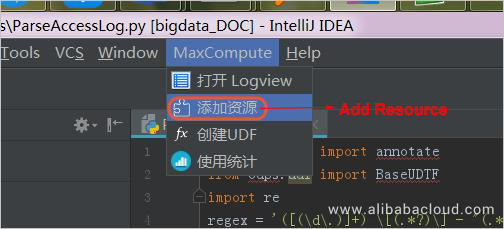

odps@ bigdata_DOC>tunnel upload C:\userlog.txt userlog1 -s false -fd "\u0000" -rd "\n";After uploading the original data, use MaxCompute IntelliJ IDEA to define a Python UDF. (You can also define a JAVA UDF.) To use the Python UDF, submit a ticket to apply for the permission.

Write the following code:

from odps.udf import annotate

from odps.udf import BaseUDTF

import re #Enter the regular function.

regex = '([(\d\.)]+) \[(.*?)\] - "(.*?)" (\d+) (\d+) (\d+) (\d+) "-" "(.*?)" - (.*?) - - (.*?) (.*?) - - - -' #Regular expression in use

# line -> ip,date,request,code,c1,c2,c3,ua,q1,q2,q3

@annotate('string -> string,string,string,string,string,string,string,string,string,string,string') #The number of strings must be consistent with the actual data. In this example, 11 columns of data exist.

class ParseAccessLog(BaseUDTF):

def process(self, line):

try:

t = re.match(regex, line).groups()

self.forward(t[0], t[1], t[2], t[3], t[4], t[5], t[6], t[7], t[8], t[9], t[10])

except:

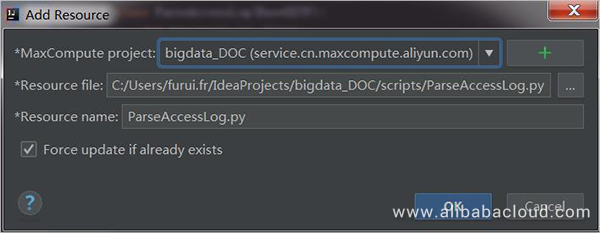

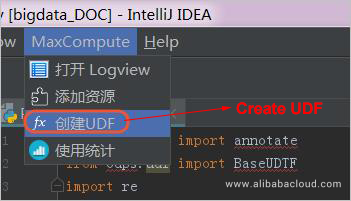

passAfter the UDF is defined, upload the code.

Set MaxCompute project to the current project.

After the code is uploaded, register the UDF and enter the UDF name, which is ParseAccessLog in this example.

After the UDF is uploaded, use it to process the original data uploaded to the userlog1 table. Make sure that you enter the correct column name, which is data in this example. You can use common SQL syntax to create the userlog2 table to store the processed data.

odps@ bigdata_DOC>create table userlog2 as select ParseAccessLog(data) as (ip,date,request,code,c1,c2,c3,ua,q1,q2,q3) from userlog1;After the processing is completed, you can view the target table that has been created. The data has been successfully divided into columns.

+----+------+---------+------+----+----+----+----+----+----+----+

| ip | date | request | code | c1 | c2 | c3 | ua | q1 | q2 | q3 |

+----+------+---------+------+----+----+----+----+----+----+----+

| 10.21.17.2 | 24/Jul/2018:00:00:00 +0800 | GET https://help.aliyun.com/document_detail/73477.html | 200 | 0 | 81615 | 81615 | iphone | HIT | 0_0_0 | 001 |

| 10.17.5.23 | 24/Jul/2018:00:00:00 +0800 | GET https://help.aliyun.com/document_detail/73478.html | 206 | 0 | 4936 | 4936 | huawei | HIT | 0_0_0 | 002 |

| 10.24.7.16 | 24/Jul/2018:00:00:00 +0800 | GET https://help.aliyun.com/document_detail/73479.html | 206 | 0 | 83821 | 83821 | vivo | HIT | 0_0_0 | 003 |

+----+------+---------+------+----+----+----+----+----+----+----+A: You can write a shell script to enable this function. The following describes how to use the shell script to call CLT in Windows. You can use the shell script in Linux in a similar way.

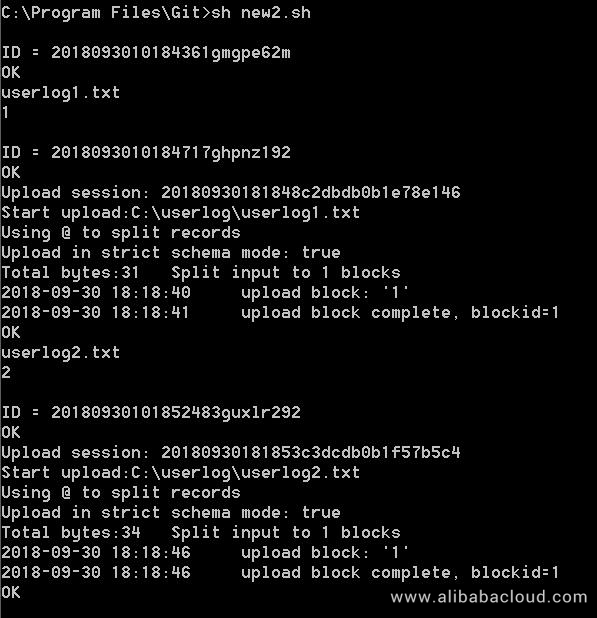

The content of the shell script is as follows:

#!/bin/sh

C:/odpscmd_public/bin/odpscmd.bat -e "create table user(data string) partitioned by (dt int);" //Create a user partition table and set the partition keyword to dt. In this example, CLT is installed in C:/odpscmd_public/bin/odpscmd.bat. You can modify the installation path as required.

dir=$(ls C:/userlog) //Define the dir variable as the name of all files under the folder.

pt=0 //The pt variable indicates the partition value, whose initial value is 0. Each time a file is uploaded, this value is incremented by 1. This ensures that each file is stored in a separate partition.

for i in $dir //Define the cycle. All files under the folder C:/userlog are traversed.

do

let pt=pt+1 //After each cycle is completed, the value of the pt variable is incremented by 1.

echo $i //Display the file name.

echo $pt //Display the partition name.

C:/odpscmd_public/bin/odpscmd.bat -e "alter table user add partition (dt=$pt);tunnel upload C:/userlog/$i user/dt=$pt -s false -fd "%" -rd "@";" //Use CLT to add partitions and upload files to the partitions.

doneThe userlog1 and userlog2 files are used in this example. The following figure shows the result of running the shell script.

After the upload is completed, check the table data in CLT.

java.io.IOException: Error writing request body to serverA: This error message indicates that an exception occurred when you uploaded data to the server. A possible reason is that the network is disconnected or timed out during the upload.

Solution:

If you store your data on an ECS instance, see Configure endpoint to configure the appropriate endpoint to improve the speed and save costs.

A: Besides configuring endpoint, you must configure tunnel_endpoint in the odps_config.ini configuration file. For more information, see Configure endpoint. Currently, only the Tunnel endpoint of China (Shanghai) does not need to be configured.

A: Currently, the tunnel upload command of MaxCompute does not support throttling. You can use Tunnel SDK to achieve throttling.

A: The bandwidth is charged based on the data size after compression.

To learn more about Alibaba Cloud MaxCompute, visit www.alibabacloud.com/product/maxcompute

137 posts | 21 followers

FollowAlibaba Cloud MaxCompute - January 24, 2019

Alibaba Cloud MaxCompute - April 25, 2019

Alibaba Cloud MaxCompute - November 15, 2021

Alibaba Cloud MaxCompute - August 15, 2022

Alibaba Cloud MaxCompute - March 7, 2024

Alibaba Cloud MaxCompute - June 24, 2019

137 posts | 21 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn MoreMore Posts by Alibaba Cloud MaxCompute