What is computer vision? How does it differ from computer graphics and image processing?

To sum it up in one sentence, computer vision (CV) is the science of enabling computers to extract useful information from images and analyze it. The information extracted through CV can support decision-making or other tasks, such as medical image analysis, engineering measurement, autonomous driving, and robotics.

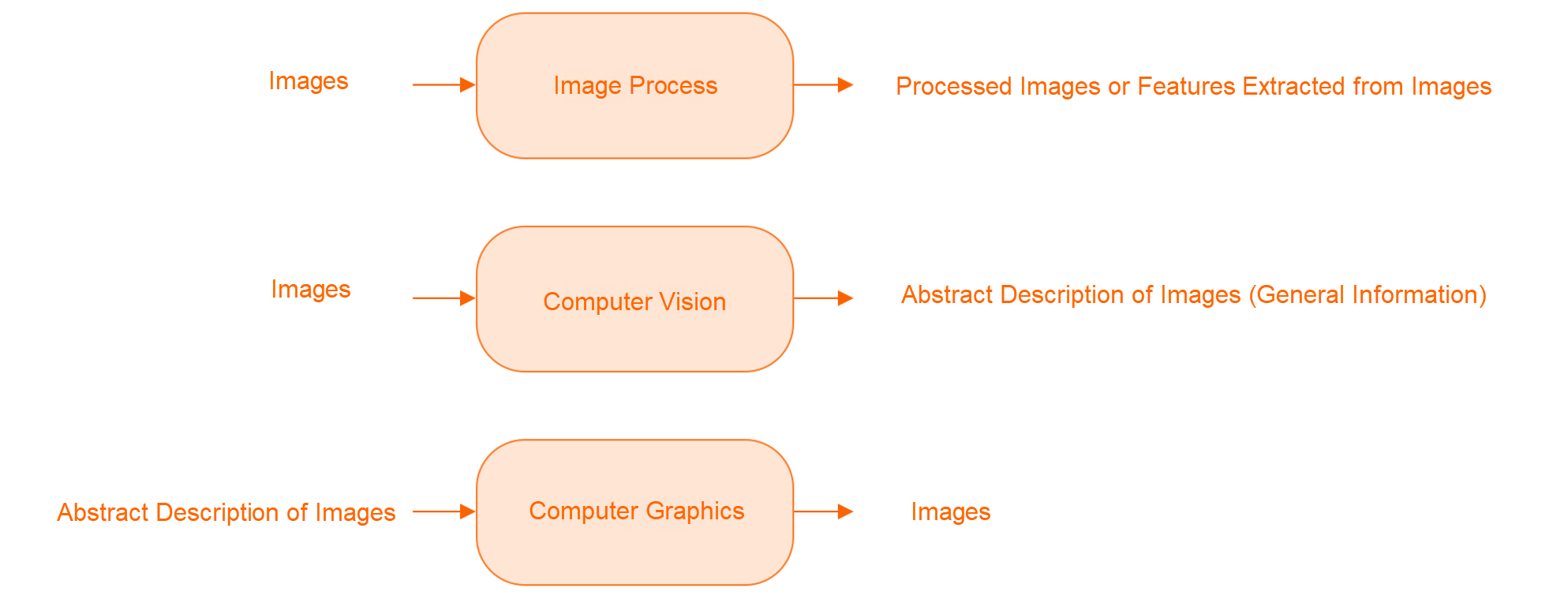

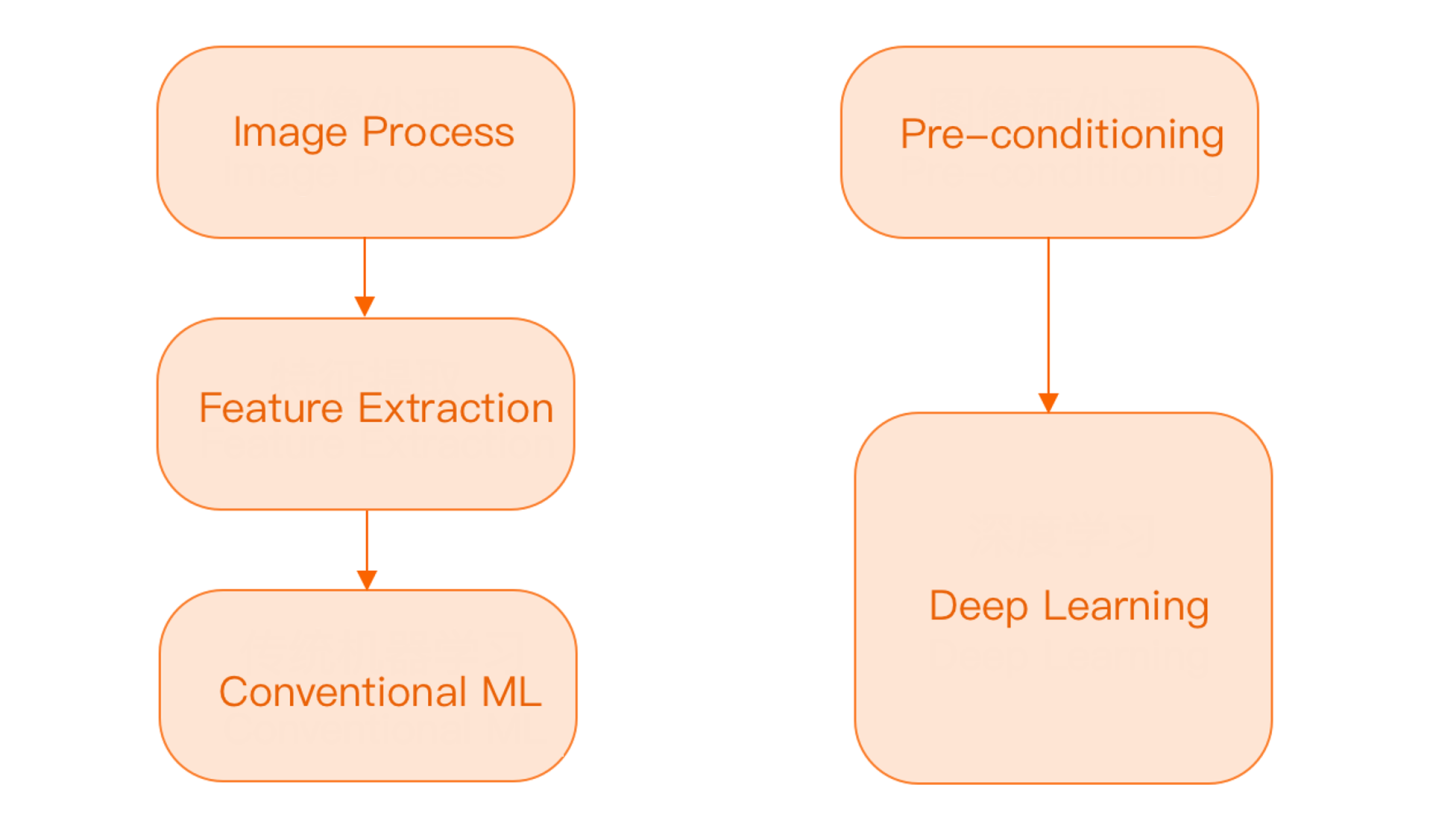

Computer vision is often confused with two related concepts that are discussed alongside it: computer graphics (CG) and image processing. The difference between them is simple. CV and CG work in opposite directions: CV extracts useful information from images, while CG uses mathematical models and computer algorithms to generate images. In image processing, both the input and the output are images; the output image has certain features enhanced or extracted. The flowchart is shown below:

Based on these definitions, CV is not the core technology behind applications such as Google Street View, Google Glass, Pokémon Go (AR), or deepfakes. CV may be used in some of their components, but it is not at their core. By contrast, CV is used extensively in scenarios where computers must analyze images to extract information, including self-driving cars, OCR, intelligent screening of packages during security checks, medical image analysis, robot vision, and military target detection and localization from images.

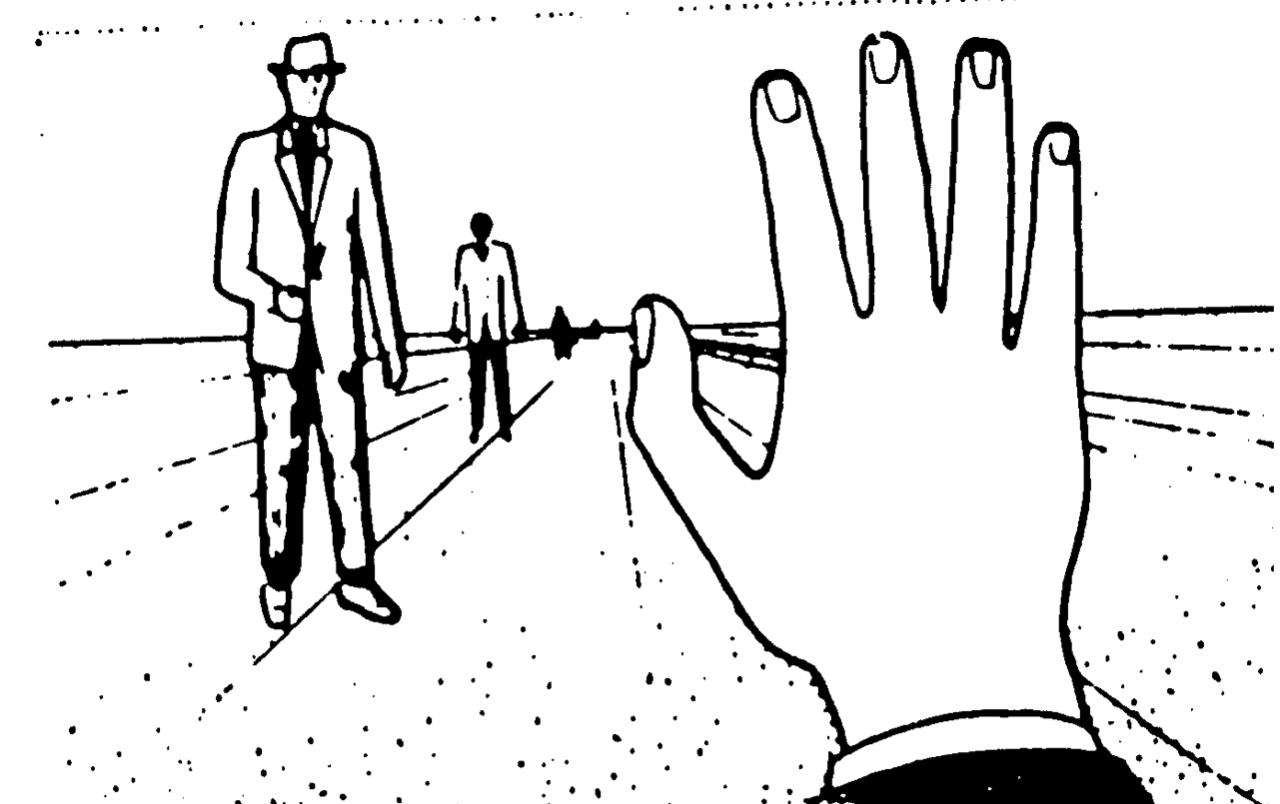
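The three input/output directions described earlier can be sketched with NumPy. This is a minimal illustration under simple assumptions: `render_disk`, `stretch_contrast`, and `locate_bright_object` are hypothetical helpers written for this example, not functions from any library. CG turns a model into an image, image processing turns an image into another image, and CV turns an image into information.

```python
import numpy as np

def render_disk(size, center, radius):
    """Computer graphics: a mathematical model (a disk) -> an image."""
    ys, xs = np.mgrid[0:size, 0:size]
    cy, cx = center
    inside = (ys - cy) ** 2 + (xs - cx) ** 2 <= radius ** 2
    return np.where(inside, 200, 50).astype(np.uint8)

def stretch_contrast(img):
    """Image processing: an image -> an enhanced image (min-max contrast stretch)."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:  # flat image: nothing to stretch
        return np.zeros(img.shape, dtype=np.uint8)
    return ((img - lo) / (hi - lo) * 255).round().astype(np.uint8)

def locate_bright_object(img, threshold=128):
    """Computer vision: an image -> information (centroid and area of bright pixels)."""
    mask = img > threshold
    ys, xs = np.nonzero(mask)
    if len(ys) == 0:
        return None
    return (float(ys.mean()), float(xs.mean())), int(mask.sum())

img = render_disk(64, center=(20, 40), radius=10)
enhanced = stretch_contrast(img)
print(enhanced.min(), enhanced.max())  # 0 255
centroid, area = locate_bright_object(enhanced)
print(centroid)  # (20.0, 40.0)
```

Only the last function produces something other than an image, which is what places it on the CV side of the diagram.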

Simply put, human vision is a two-dimensional projection of the three-dimensional world (subject to perspective transformation). Visible light has wavelengths of roughly 380 to 750 nanometers, and human color perception is commonly modeled with the three-channel RGB system. About one-third of the human brain is directly or indirectly connected to the retina and optic nerve.

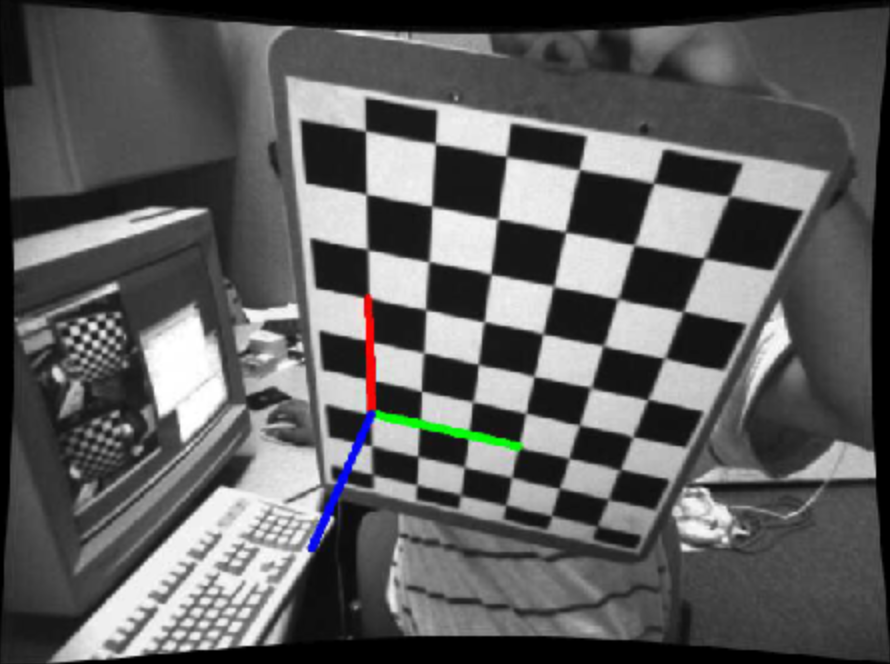

Computer vision does not depend on a single kind of imaging instrument the way human vision depends on the eye. Whereas the human eye is limited to visible light, computer vision is not even limited to electromagnetic waves (B-scan ultrasonography, for example, images with sound). In terms of imaging dimensions, traditional cameras produce 2D images, radar produces 2.5D images (the extra half dimension is distance), and magnetic resonance imaging (MRI) produces 3D images (many 2D slices stacked along the Z-axis form the third dimension).
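The way stacked 2D slices form a 3D volume can be shown in a few lines of NumPy. This is a sketch with made-up data: the slice count, the 128 x 128 slice size, and the random pixel values are assumptions standing in for real scanner output.

```python
import numpy as np

# Hypothetical data: 30 slices, each a 128 x 128 grayscale image.
num_slices, height, width = 30, 128, 128
slices = [np.random.default_rng(i).random((height, width)) for i in range(num_slices)]

# Stacking along a new leading axis turns the slice index into the Z dimension.
volume = np.stack(slices, axis=0)
print(volume.shape)  # (30, 128, 128)

# Indexing along Z gives back one of the original 2D slices ...
axial = volume[15]            # shape (128, 128)
# ... while slicing along another axis gives a cross-section in a different plane.
sagittal = volume[:, :, 64]   # shape (30, 128)
```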

If we abstract the CV process step by step, we arrive at two classic pipelines. In traditional CV methods, two-dimensional (or higher-dimensional) images are rarely flattened into one-dimensional vectors and fed directly into traditional machine learning models (such as the decision tree family, SVM, KNN, or MLP). Instead, engineers usually extract features from the original images and use these more informative features as model inputs, which makes the models more accurate and efficient.

In recent years, the rise of deep learning has largely overturned this rule of thumb. CNNs and their derivatives (such as ResNet), GANs, and self-supervised learning (such as contrastive learning) have become very popular over the last two years and have replaced manual feature extraction in many situations. Go AI is a classic example. Earlier Go programs relied on features hand-crafted by engineers, such as the territory enclosed by each side, as model inputs. The AlphaGo series showed that, in this scenario, manual feature extraction is far less accurate and efficient than letting the convolutional layers of a deep learning model learn features on their own.

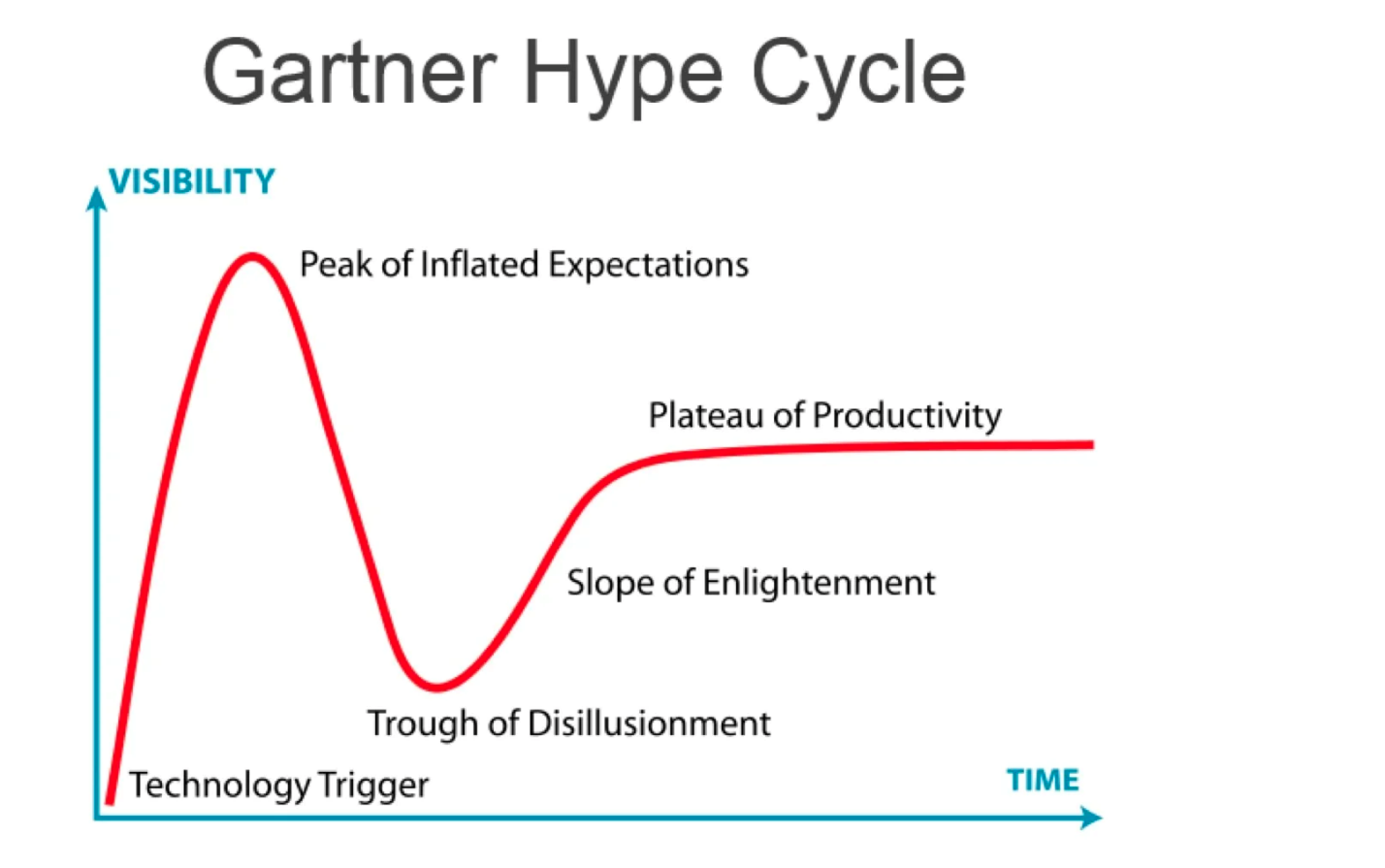

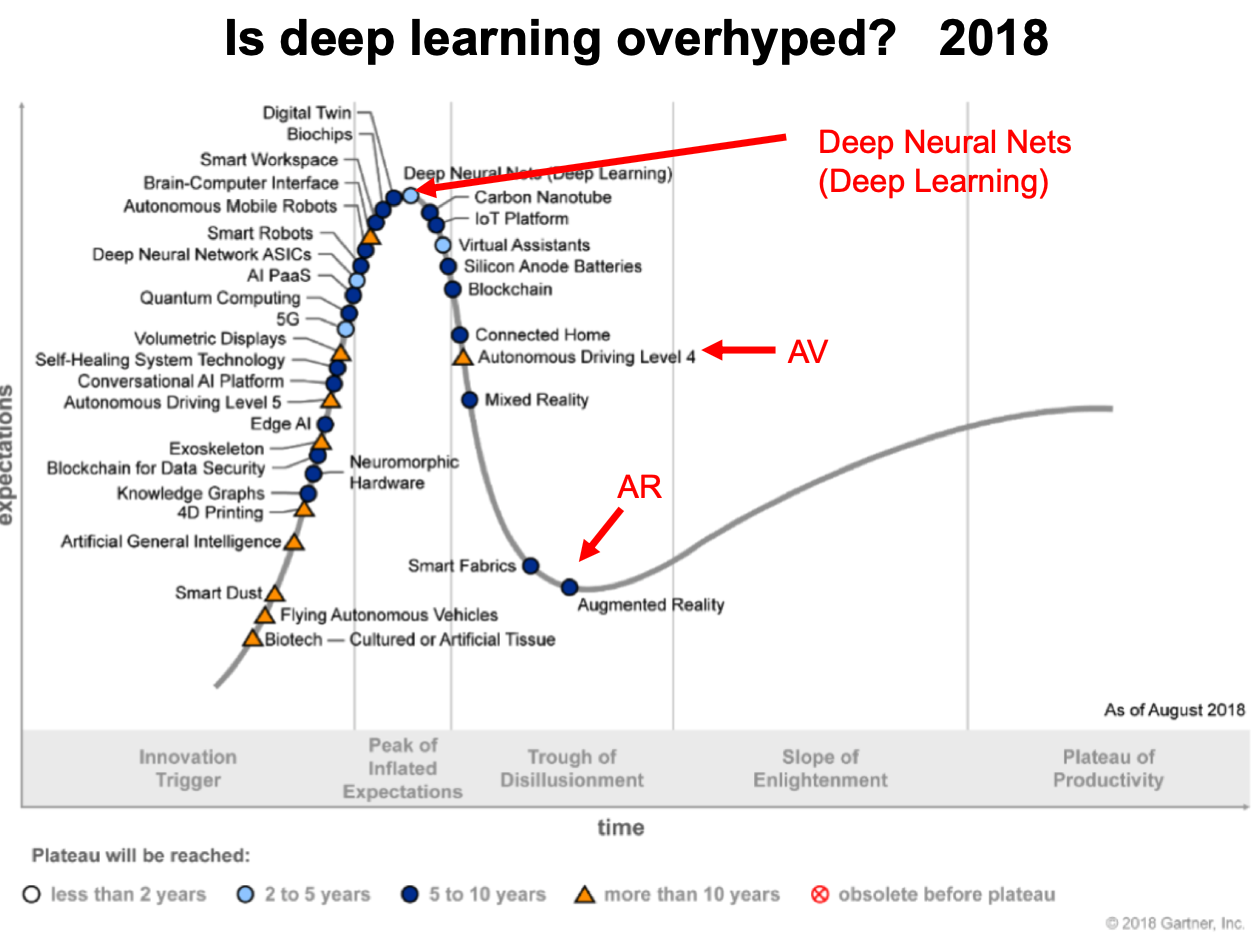

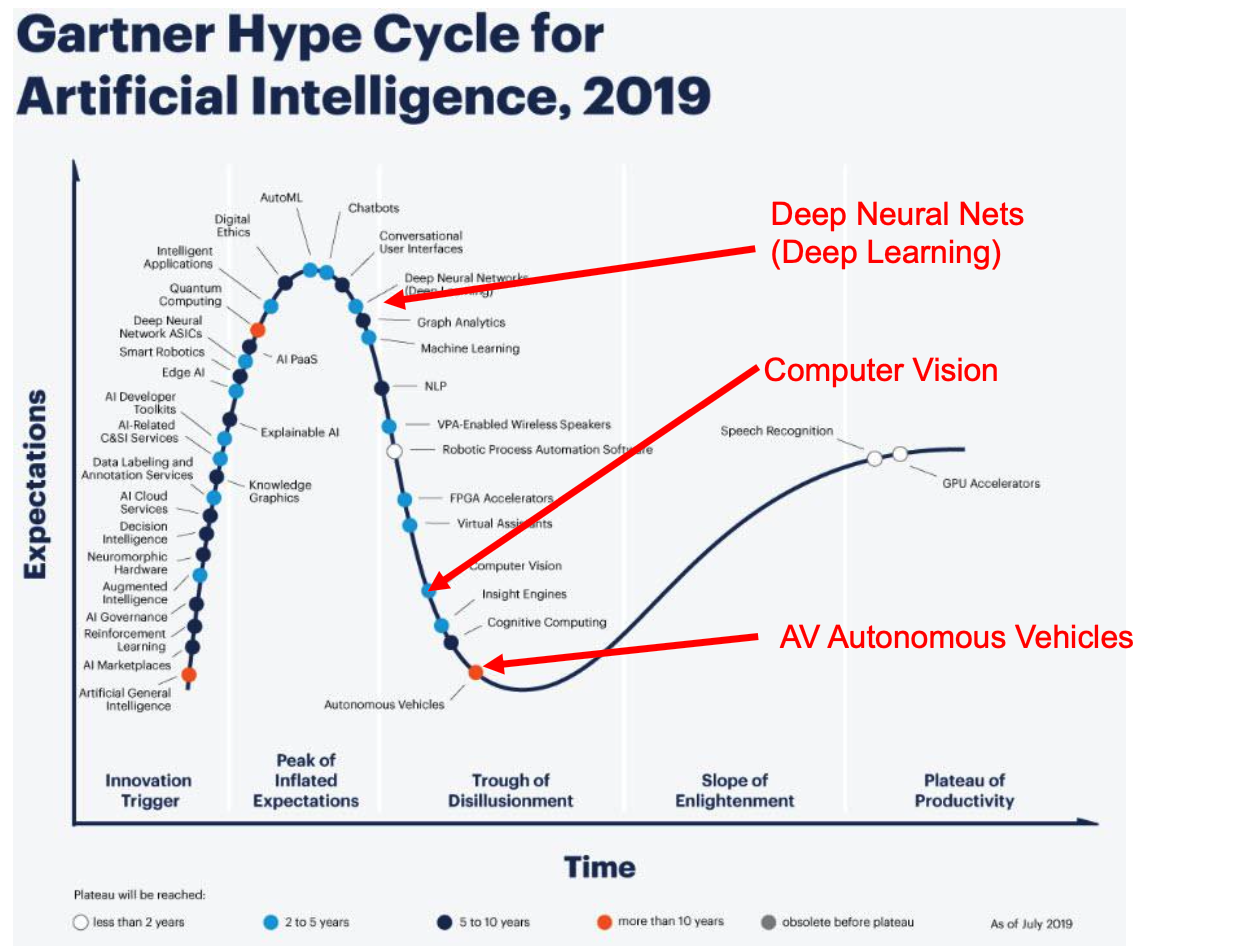

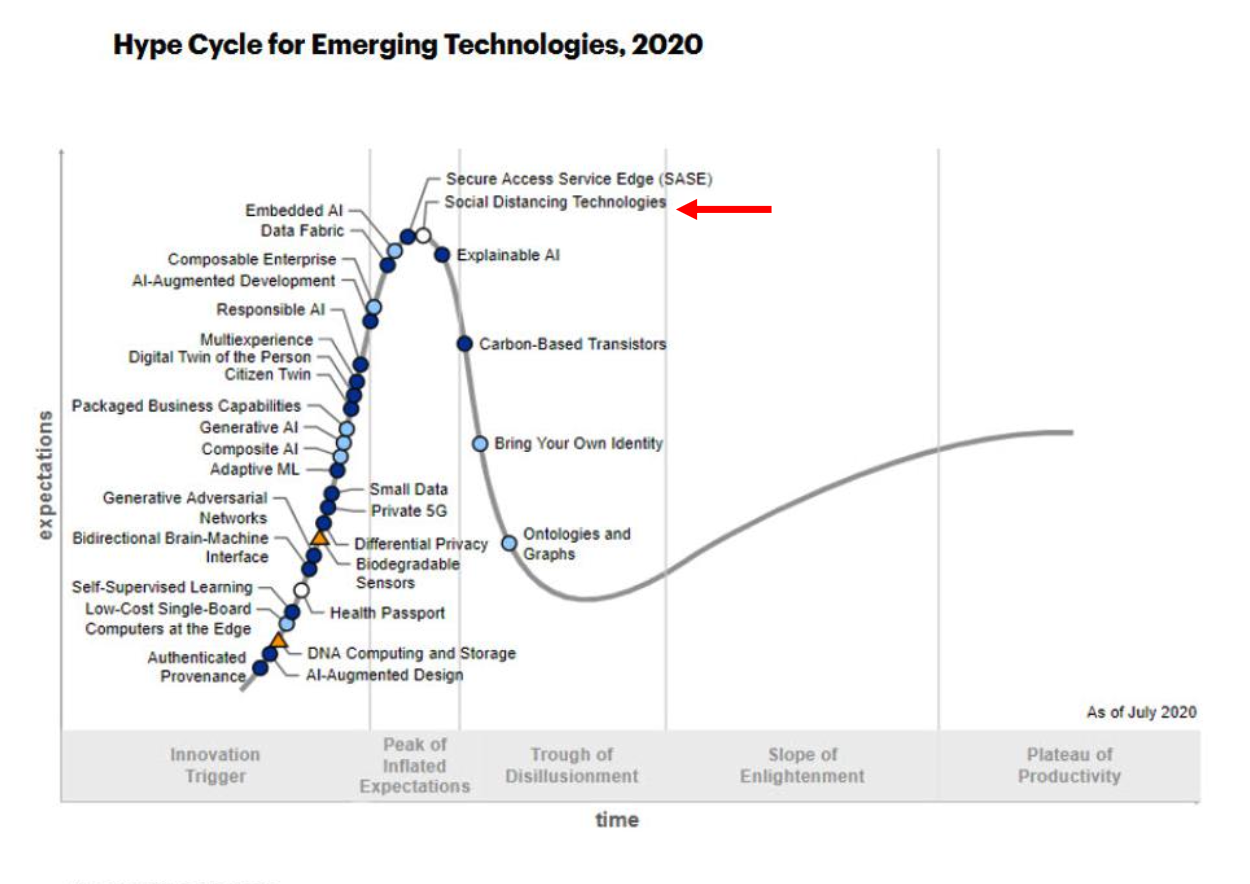

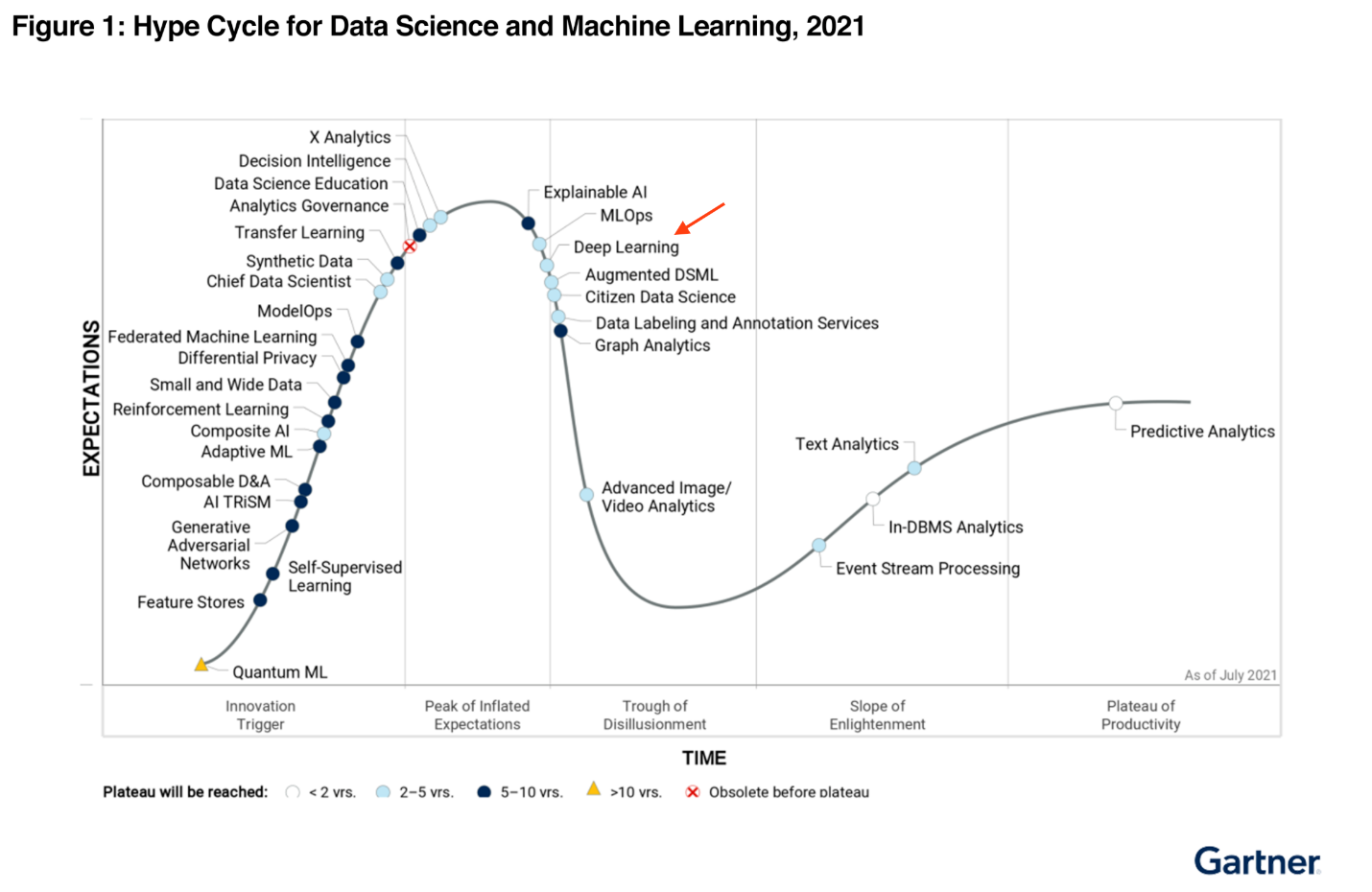
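The difference between the two traditional inputs, raw flattened pixels versus extracted features, can be sketched as follows. The feature here is a simplified, HOG-like histogram of gradient orientations chosen purely for illustration; it is an assumption, not the feature set of any particular system, and `flatten_pixels` and `gradient_histogram` are hypothetical names.

```python
import numpy as np

def flatten_pixels(img):
    """Raw approach: a 2D image becomes one long 1D vector of pixel values."""
    return img.reshape(-1).astype(np.float64)

def gradient_histogram(img, bins=8):
    """Hand-crafted approach (simplified, HOG-like): histogram of gradient
    orientations, weighted by gradient magnitude and normalized to sum to 1."""
    img = img.astype(np.float64)
    gy, gx = np.gradient(img)                   # derivatives along rows (y) and columns (x)
    magnitude = np.hypot(gx, gy)
    orientation = np.arctan2(gy, gx) % np.pi    # unsigned orientation in [0, pi)
    hist, _ = np.histogram(orientation, bins=bins, range=(0, np.pi), weights=magnitude)
    total = hist.sum()
    return hist / total if total > 0 else hist

img = np.zeros((32, 32))
img[:, 16:] = 255.0                             # a single vertical edge

print(flatten_pixels(img).shape)                # (1024,)
print(gradient_histogram(img).shape)            # (8,)
```

The 8-value descriptor summarizes the edge structure that the 1024 raw pixels only contain implicitly, which is the kind of compact input the traditional pipeline hands to an SVM or KNN.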

Many engineers with high expectations for deep learning have entered this booming field over the past few years, only to find that it is not as amazing as they expected. Once the excitement cools, people begin to ask whether deep learning is overhyped. The Gartner Hype Cycle is a useful way to examine this question. It divides the lifecycle of a technology into five stages: innovation trigger, peak of inflated expectations, trough of disillusionment, slope of enlightenment, and plateau of productivity.

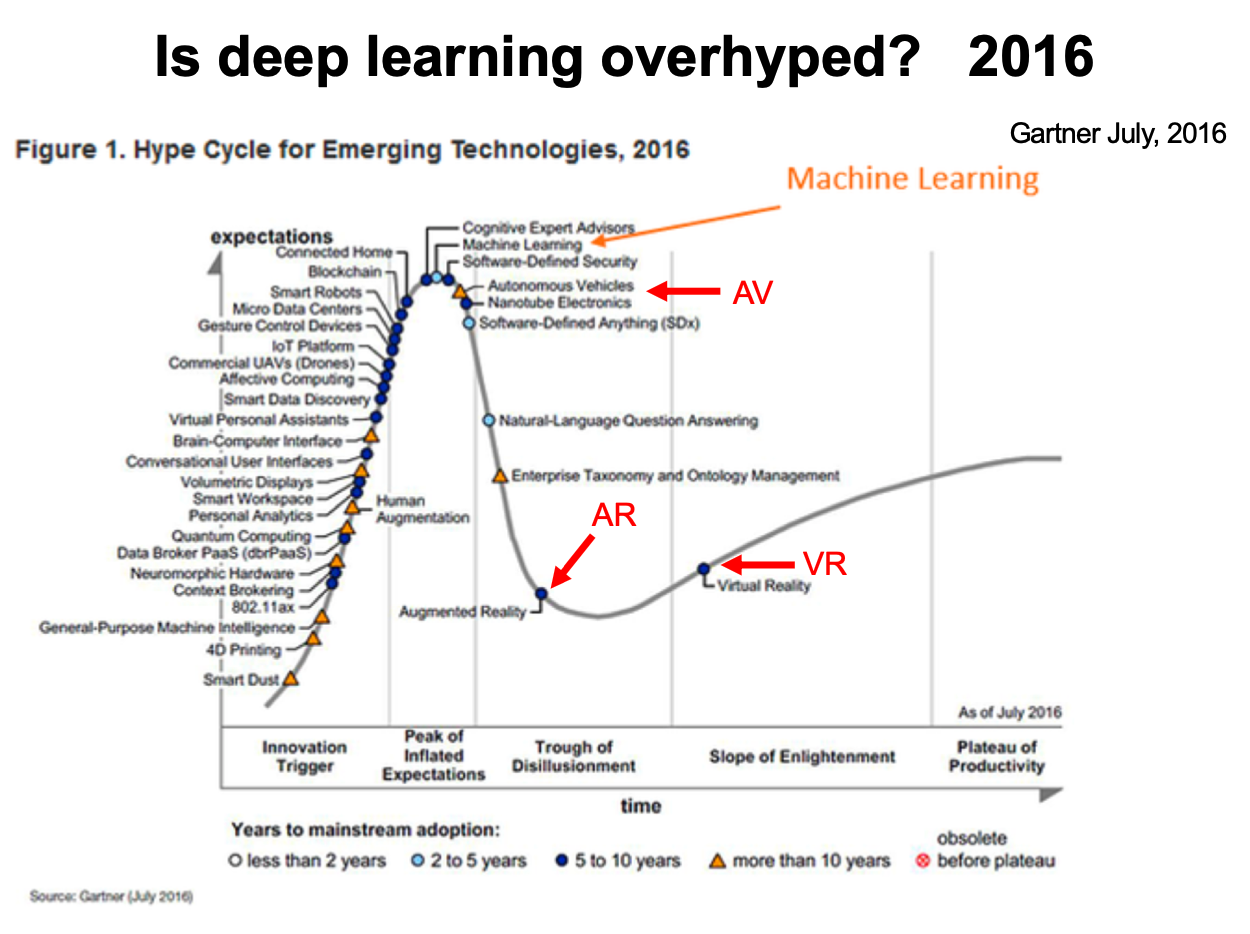

The following figures show Gartner's published Hype Cycle charts for recent cutting-edge technologies. As the figures show, deep learning (indicated by the arrow) has gradually moved from the peak of inflated expectations in 2016 toward the trough of disillusionment over the past five years. This suggests that deep learning may go through a long period of doubt and pessimism before it matures.

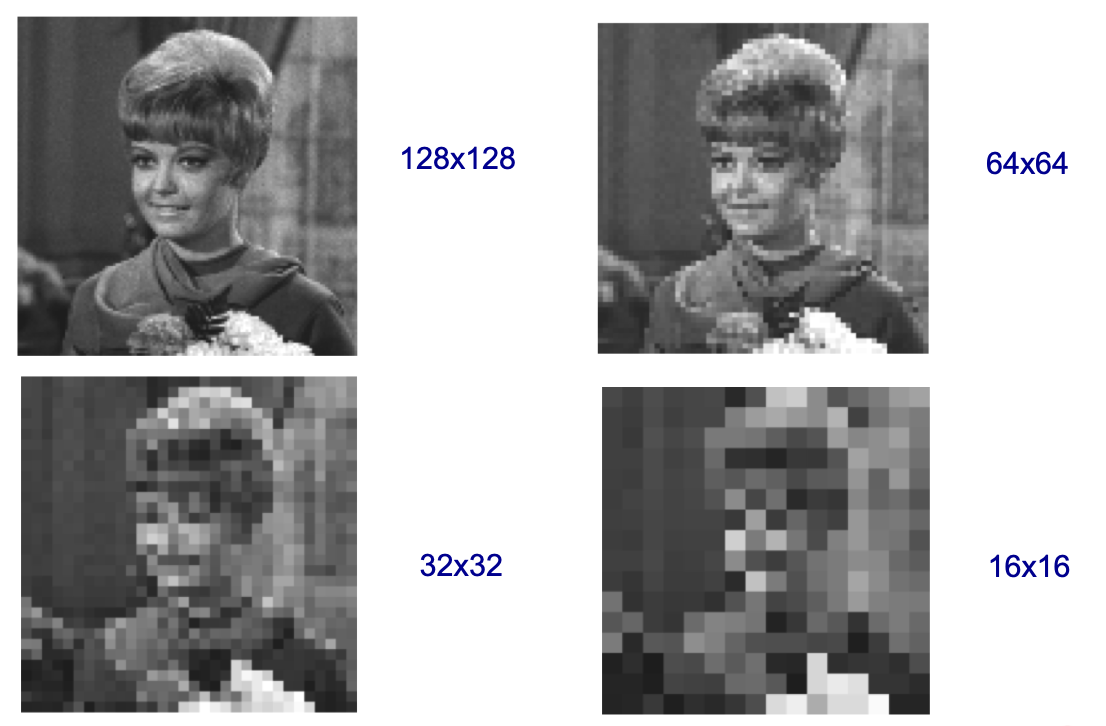

Spatial sampling, quantization, and connectivity strategies are important concepts in the digital representation of images. Spatial sampling describes the number of samples per unit of space, which determines the spatial resolution of the image.

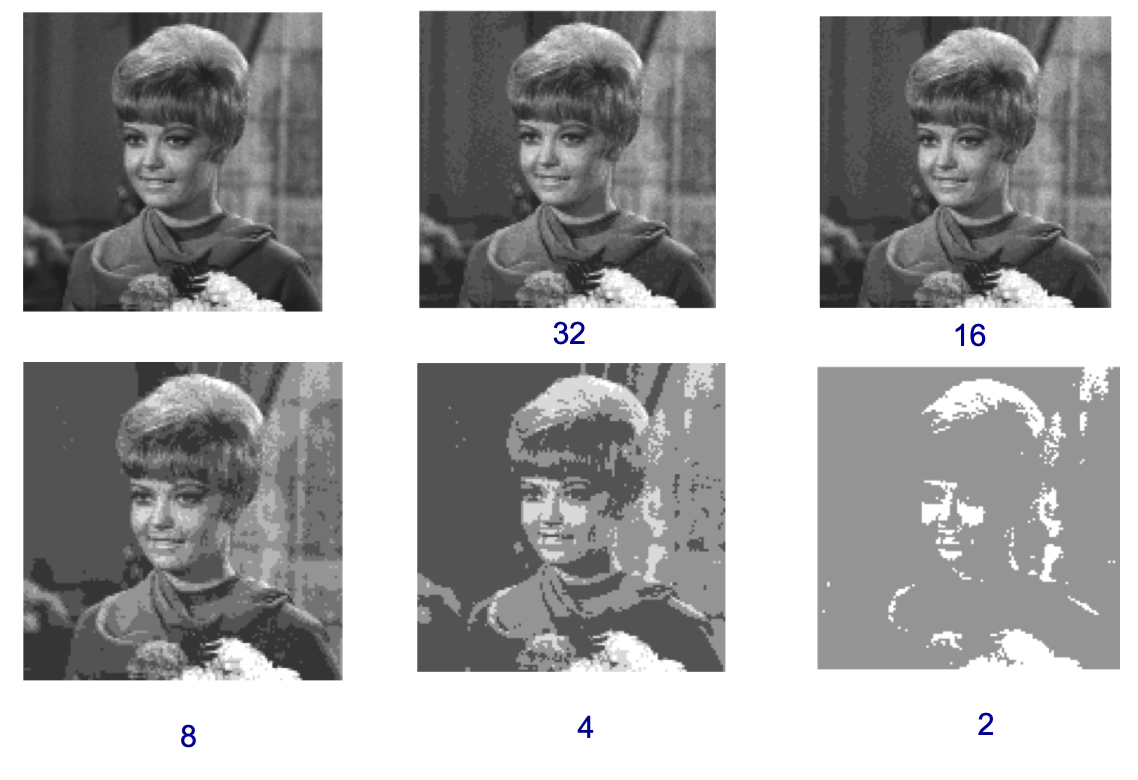
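Lowering the sampling density can be illustrated by keeping only every k-th pixel along each axis. This is a minimal sketch with a synthetic array standing in for an image; `resample` is a hypothetical helper. Plain decimation like this ignores aliasing, and practical downsampling usually applies a low-pass filter first.

```python
import numpy as np

def resample(img, step):
    """Keep every `step`-th pixel along each axis: fewer samples per unit of space."""
    return img[::step, ::step]

img = np.arange(64 * 64, dtype=np.float64).reshape(64, 64)  # stand-in 64 x 64 image
for step in (1, 2, 4, 8):
    print(step, resample(img, step).shape)
# 1 (64, 64)
# 2 (32, 32)
# 4 (16, 16)
# 8 (8, 8)
```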

Quantization is the process of converting the brightness of each pixel in a color channel from a continuous range into a discrete set of levels by dividing that range into intervals.

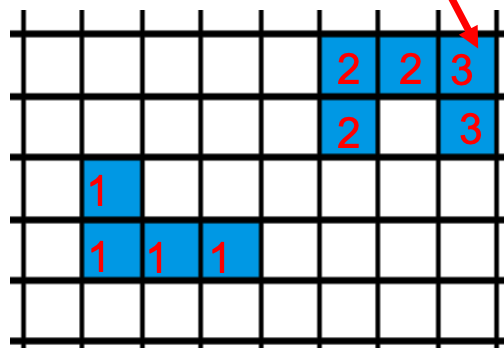
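Uniform quantization can be sketched as follows, assuming brightness is normalized to [0.0, 1.0] and split into equal-width intervals. `quantize` is a hypothetical helper for this illustration; 256 levels corresponds to the familiar 8-bit grayscale image.

```python
import numpy as np

def quantize(channel, levels):
    """Map continuous brightness in [0.0, 1.0] to `levels` discrete values.

    The range is split into `levels` equal intervals, and each value is replaced
    by the index (0 .. levels-1) of the interval it falls into."""
    channel = np.clip(channel, 0.0, 1.0)
    idx = np.floor(channel * levels).astype(int)
    return np.minimum(idx, levels - 1)  # 1.0 would otherwise land in bin `levels`

brightness = np.array([0.0, 0.1, 0.26, 0.5, 0.74, 0.99, 1.0])
print(quantize(brightness, 4))    # [0 0 1 2 2 3 3]
print(quantize(brightness, 256))  # 8-bit levels, as in a typical grayscale image
```

With only 4 levels, nearby values such as 0.5 and 0.74 collapse into the same level, which is why coarse quantization produces visible banding.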

Four-connectivity and eight-connectivity: these are the standard ways of defining which pixels count as neighbors in pixel computations. Under four-connectivity, a pixel's neighbors are the four pixels that share an edge with it (above, below, left, and right). Eight-connectivity adds the four diagonal pixels that share only a corner with it.

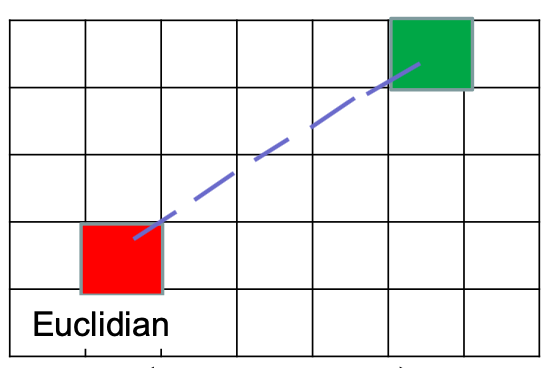

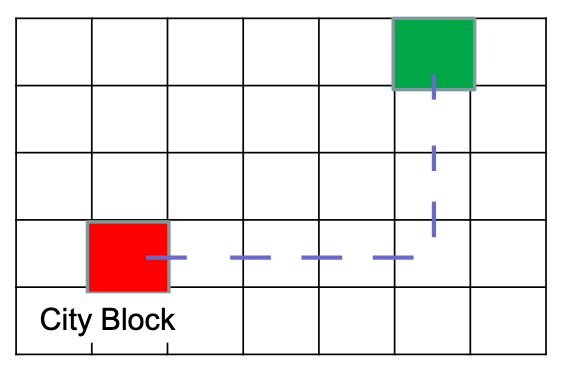

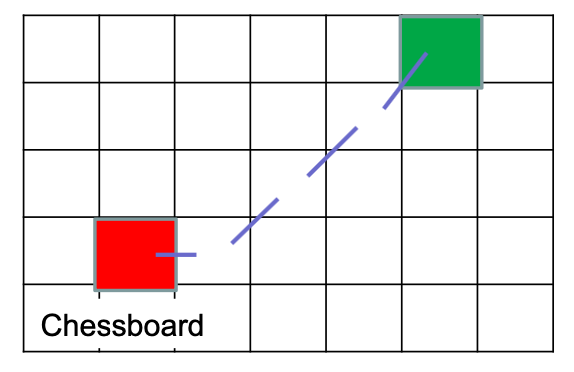
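The two neighbor definitions can be written as lists of coordinate offsets. This is a small sketch; `N4`, `N8`, and `neighbors` are hypothetical names, and pixels are addressed as (row, column).

```python
# Offsets (d_row, d_col) from a pixel to its neighbors.
N4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]           # pixels sharing an edge
N8 = N4 + [(-1, -1), (-1, 1), (1, -1), (1, 1)]    # plus pixels sharing a corner

def neighbors(r, c, height, width, offsets):
    """Yield the in-bounds neighbor coordinates of pixel (r, c)."""
    for dr, dc in offsets:
        nr, nc = r + dr, c + dc
        if 0 <= nr < height and 0 <= nc < width:
            yield nr, nc

print(len(list(neighbors(2, 2, 5, 5, N4))))  # 4
print(len(list(neighbors(2, 2, 5, 5, N8))))  # 8
print(len(list(neighbors(0, 0, 5, 5, N8))))  # 3 (corner pixel)
```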

Distance: Euclidean distance is the straight-line distance between two points. Manhattan distance (city block) is the distance between two points when walking along the grid and moving only horizontally and vertically (four directions). Chebyshev distance (chessboard) is the distance when diagonal moves are also allowed (eight directions: horizontal, vertical, and diagonal).

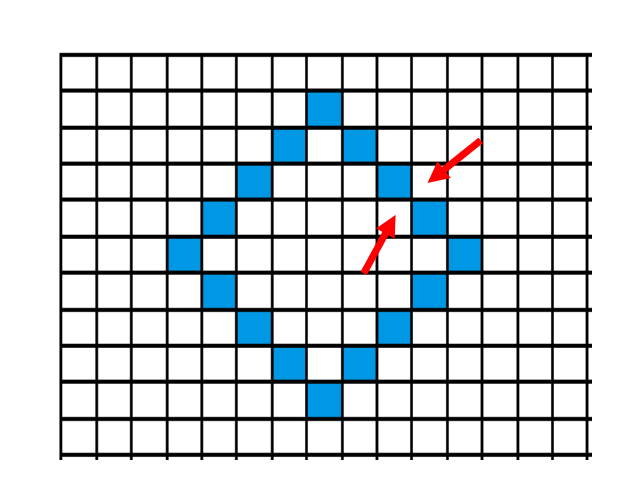
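The three distance definitions translate directly into code. In this sketch, points are assumed to be (row, column) tuples of integers; on a pixel grid, the Manhattan distance equals the number of steps between two pixels under four-connectivity, and the Chebyshev distance equals the number of steps under eight-connectivity.

```python
def euclidean(p, q):
    """Straight-line distance."""
    return ((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2) ** 0.5

def manhattan(p, q):
    """City block: horizontal and vertical steps only (four directions)."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])

def chebyshev(p, q):
    """Chessboard: diagonal steps allowed too (eight directions)."""
    return max(abs(p[0] - q[0]), abs(p[1] - q[1]))

p, q = (0, 0), (3, 4)
print(euclidean(p, q), manhattan(p, q), chebyshev(p, q))  # 5.0 7 4
```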

A picture usually contains both foreground and background. Before determining which pixels in the picture form connected blocks, we need to choose connectivity strategies for the foreground and the background. There are usually two combinations: an eight-connected foreground with a four-connected background, or a four-connected foreground with an eight-connected background. Why not make the foreground and background both four-connected or both eight-connected? As shown in the following figure:

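If the foreground and background use the same connectivity strategy, we reach a contradiction like the one in the figure: the foreground and background end up either both fully connected or both completely broken. With eight-connectivity for both, a closed diagonal ring of foreground pixels is connected, yet the background inside it still leaks out through the ring's corners. With four-connectivity for both, the same ring falls apart into isolated pixels, yet it still seals the inside background off from the outside.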

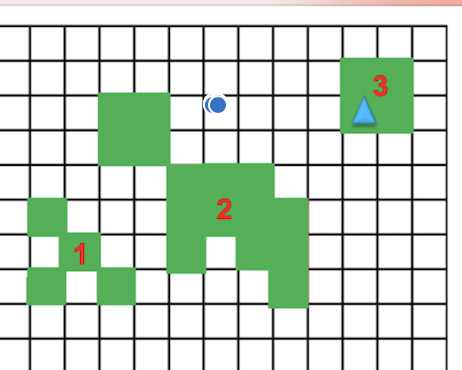
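The contradiction can be checked numerically by counting connected regions of a small diamond-shaped ring under each combination. This is a sketch: the `DIAMOND` grid is a made-up test image, and `count_components` is a hypothetical helper that uses breadth-first search.

```python
from collections import deque

N4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]
N8 = N4 + [(-1, -1), (-1, 1), (1, -1), (1, 1)]

def count_components(grid, value, offsets):
    """Count connected regions of pixels equal to `value` (breadth-first search)."""
    h, w = len(grid), len(grid[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if grid[r][c] != value or seen[r][c]:
                continue
            count += 1                      # found a new, unvisited region
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                cr, cc = queue.popleft()
                for dr, dc in offsets:
                    nr, nc = cr + dr, cc + dc
                    if (0 <= nr < h and 0 <= nc < w and not seen[nr][nc]
                            and grid[nr][nc] == value):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
    return count

# A diamond-shaped ring of foreground pixels (1) whose sides touch only at corners.
DIAMOND = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 1, 0, 1, 0, 0],
    [0, 1, 0, 0, 0, 1, 0],
    [0, 0, 1, 0, 1, 0, 0],
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]

for name, fg, bg in [("fg 8 / bg 4", N8, N4), ("fg 4 / bg 8", N4, N8),
                     ("fg 4 / bg 4", N4, N4), ("fg 8 / bg 8", N8, N8)]:
    print(f"{name}: foreground={count_components(DIAMOND, 1, fg)}, "
          f"background={count_components(DIAMOND, 0, bg)}")
# fg 8 / bg 4: foreground=1, background=2   closed ring separates inside from outside
# fg 4 / bg 8: foreground=8, background=1   broken ring separates nothing
# fg 4 / bg 4: foreground=8, background=2   broken ring, yet inside is sealed off
# fg 8 / bg 8: foreground=1, background=1   closed ring, yet inside leaks out
```

Only the two mixed combinations give consistent answers, which is why they are the usual choice.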

The recursive algorithm is the basic approach to computing connected pixel regions, and its strategy is very simple: traverse all pixels in the image, and whenever a pixel without a region number is encountered, recursively mark every pixel connected to it with the current region number before moving on to the next region number.
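The recursive strategy can be sketched in Python as follows. Assumptions: the image is a list of lists with foreground pixels equal to 1, labels start at 1 (0 means unlabeled or background), and `label_recursive` is a hypothetical name. The recursion depth can grow with the size of a region, so large regions may exceed Python's default recursion limit (about 1000 frames).

```python
N4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]
N8 = N4 + [(-1, -1), (-1, 1), (1, -1), (1, 1)]

def label_recursive(grid, offsets=N8, foreground=1):
    """Label connected foreground regions 1, 2, 3, ...; 0 marks unlabeled/background."""
    h, w = len(grid), len(grid[0])
    labels = [[0] * w for _ in range(h)]

    def flood(r, c, region):
        # Mark this pixel, then recurse into every unlabeled foreground neighbor.
        labels[r][c] = region
        for dr, dc in offsets:
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w and grid[nr][nc] == foreground
                    and labels[nr][nc] == 0):
                flood(nr, nc, region)

    region = 0
    for r in range(h):
        for c in range(w):
            if grid[r][c] == foreground and labels[r][c] == 0:
                region += 1              # a new region starts at this pixel
                flood(r, c, region)
    return labels, region

grid = [
    [1, 1, 0, 0],
    [0, 1, 0, 1],
    [0, 0, 0, 1],
    [1, 0, 0, 0],
]
labels, n = label_recursive(grid, N4)
print(n)  # 3
for row in labels:
    print(row)
# [1, 1, 0, 0]
# [0, 1, 0, 2]
# [0, 0, 0, 2]
# [3, 0, 0, 0]
```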

The recursive algorithm works, but it consumes a lot of resources: the recursion depth grows with the size of a region, so large regions can exhaust the call stack. Here is a more advanced method for handling the connectivity of pixel regions:
