By Gao Xiangyang (a Senior R&D Engineer at Zhuanzhuan.com)

As people expect ever faster delivery, market demand for intra-city express delivery keeps growing. On Valentine's Day, Teachers' Day, the Mid-Autumn Festival, and other festivals, intra-city express delivery platforms are flooded with orders, so stable services are required.

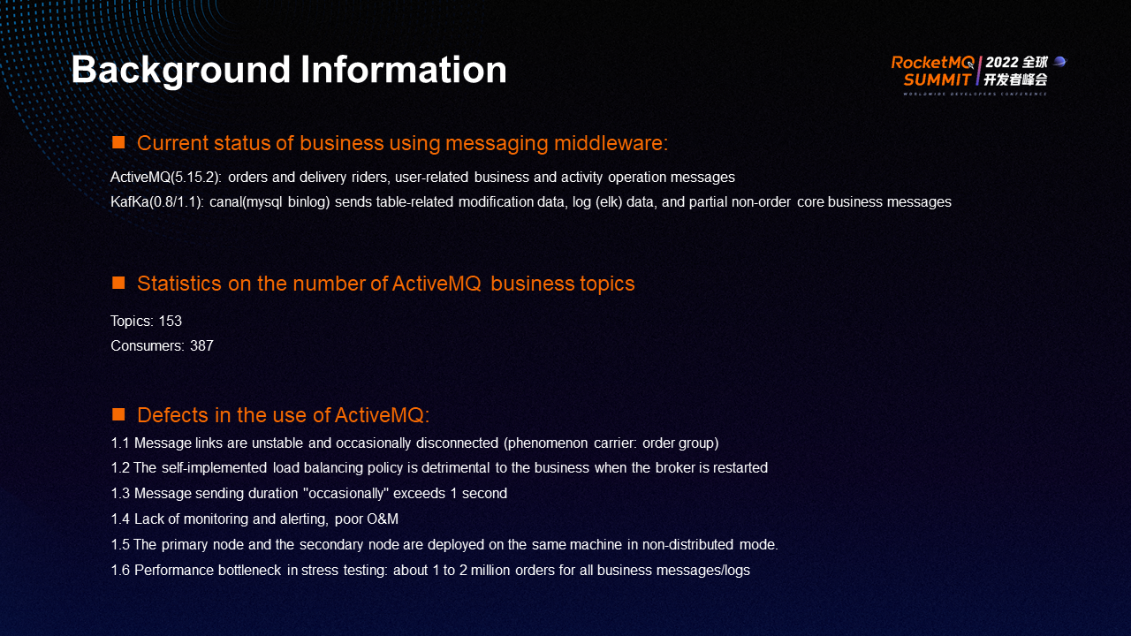

Previously, ActiveMQ carried all core business messages of the intra-city express service (orders, delivery riders, users, and other core data and logs), while Kafka carried non-core business messages. There were 153 topics and 387 consumers. At this scale, a migration would require a large number of business changes, which would slow the iteration of business lines and consume a lot of R&D resources. A large development workload also means a large testing workload that is hard to control.

There are many defects in the use of ActiveMQ:

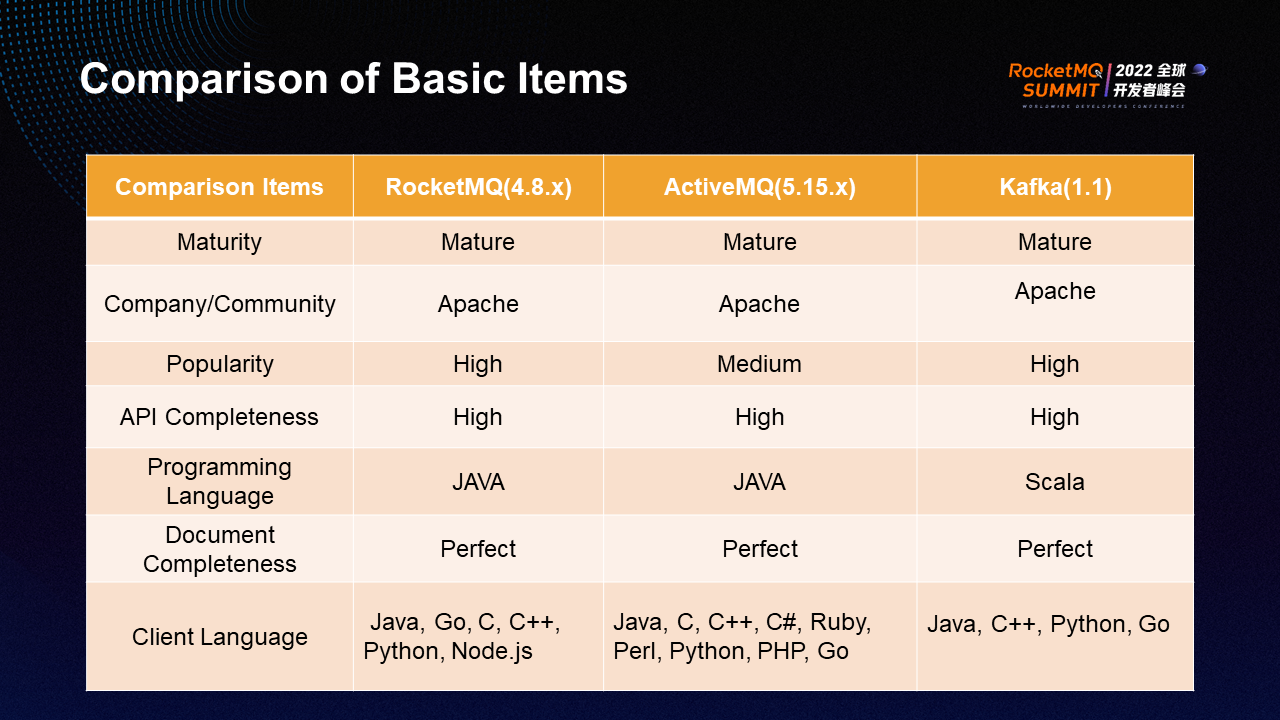

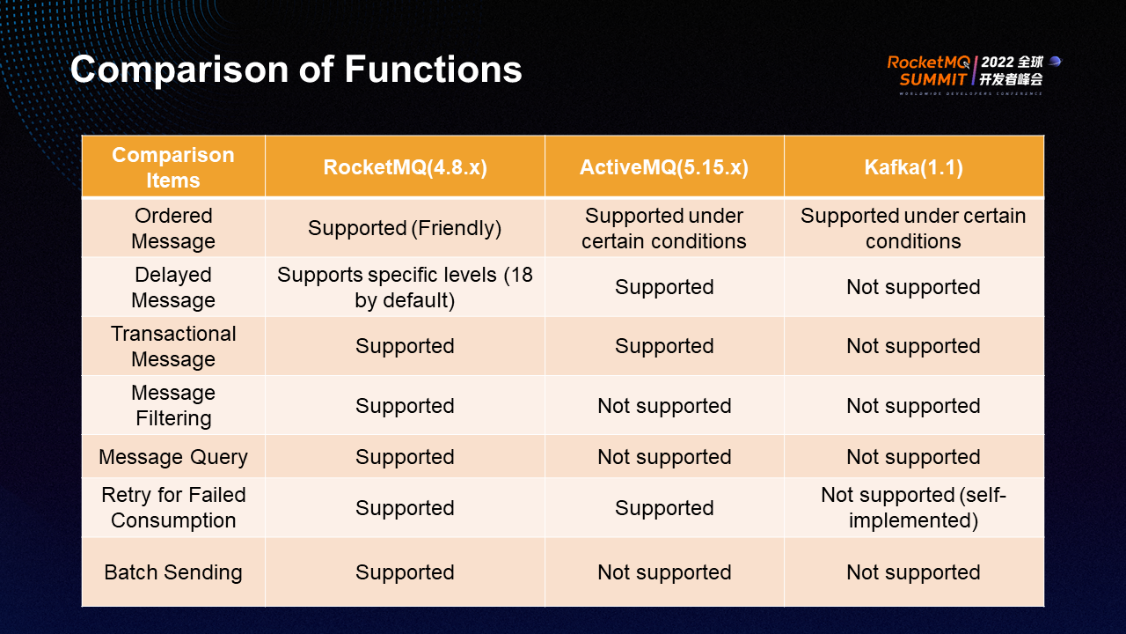

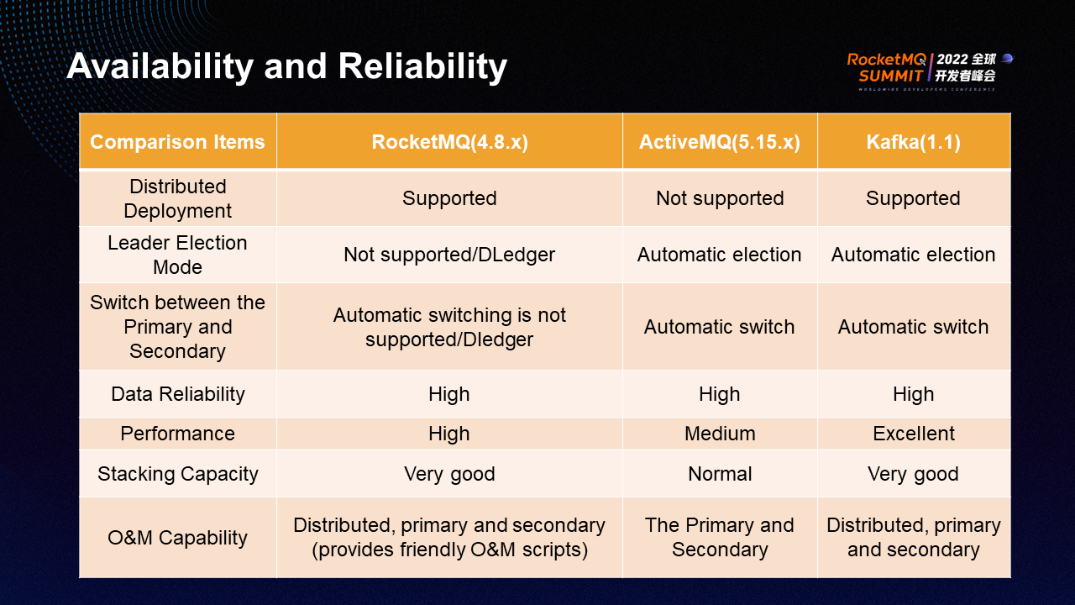

We compared the candidate MQ components along five main dimensions: basic items, supporting functions, availability, reliability, and O&M capabilities. We also analyzed the choice from the perspective of the company and of the business R&D partners. The company's perspective covers technology costs (such as the costs of the current technology stack, servers, MQ expansion, later maintenance, and labor). The business R&D partners' perspective covers the difficulty of adapting business services and compatibility with the current service framework.

The preceding figure compares the basic items of RocketMQ, ActiveMQ, and Kafka (including maturity, company/community, popularity, API perfection, programming language, document completeness, and client language). ActiveMQ's popularity is slightly lower than the other two.

The preceding figure compares the functions (including ordered messages, delayed messages, transactional messages, message filtering, message query, retry for failed consumption, and batch sending). In terms of functions, RocketMQ has great advantages.

The preceding figure compares availability, reliability, and O&M capabilities. RocketMQ 4.5.0 introduced DLedger mode to provide automatic failover within a broker group, and in version 4.8.0 the community comprehensively improved the performance, stability, and functionality of DLedger mode. In terms of O&M capabilities, RocketMQ provides relatively complete support for service scaling, service migration, upgrading management platforms, and monitoring and alerting platforms, which makes it easier for the company to do secondary development based on its business characteristics.

In summary, we chose RocketMQ to replace ActiveMQ.

The migration solution is divided into the following six parts: topic statistics, making a migration time plan and determining the test scope, extension solution, SDK client design and usage mode, smooth migration solution, and arbitrary message delay solution.

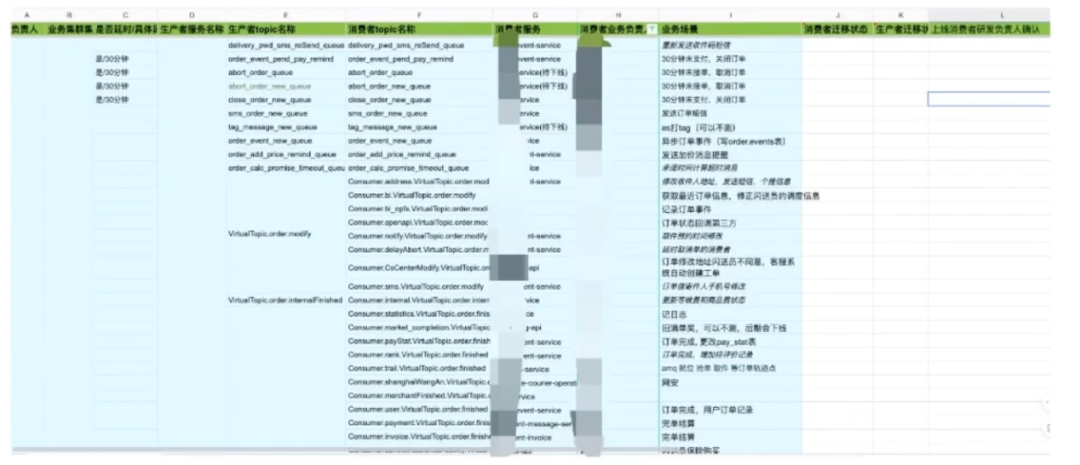

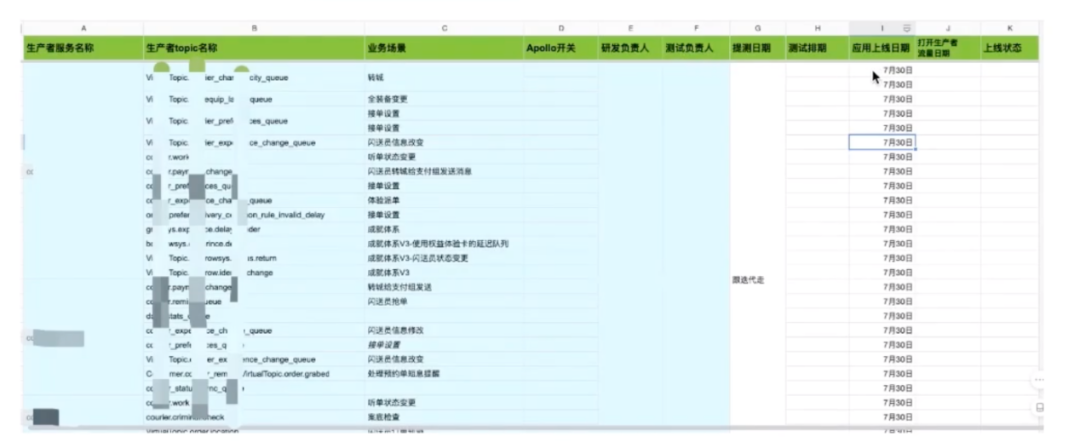

First, export a list of all the topics and the corresponding consumers for each topic. The statistical items of the consumer include the owner, business cluster name, latency, producer service name, producer topic name, consumer topic name, consumer service, consumer business owner, business scenario, migration status, and launch leader.

The statistical items of the producer business include the producer service name, producer topic name, Apollo switch, R&D owner, test owner, and launch date.

Our migration principle is to keep business changes to a minimum and avoid affecting normal business iterations. The goal is a smooth, lossless migration of all of the company's businesses.

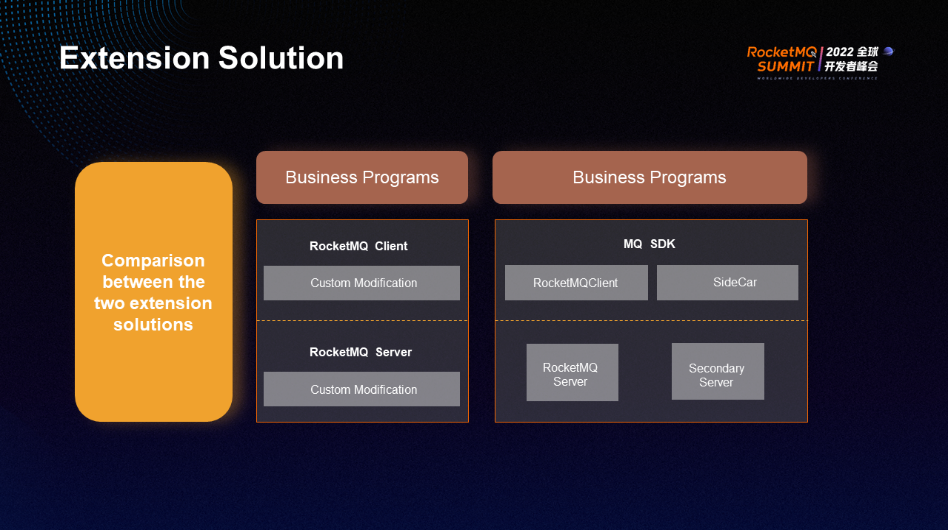

There are inevitably differences between the two products before and after the migration, so secondary development is required. Generally, there are two ways:

(1) Make in-depth modifications directly on native products to achieve the customized functions required by the company. This method is relatively simple to implement, but it will drift away from the open-source version of the community, and subsequent upgrades are more difficult.

(2) Do not modify the original product, but do encapsulation on its periphery to meet the specific needs of the company.

We chose the second method for secondary development so that we can continue to enjoy the benefits brought by the open-source version of the community (such as the optimization of synchronous replication delay in version 4.7 and the overall improvement of DLedger mode in version 4.8).

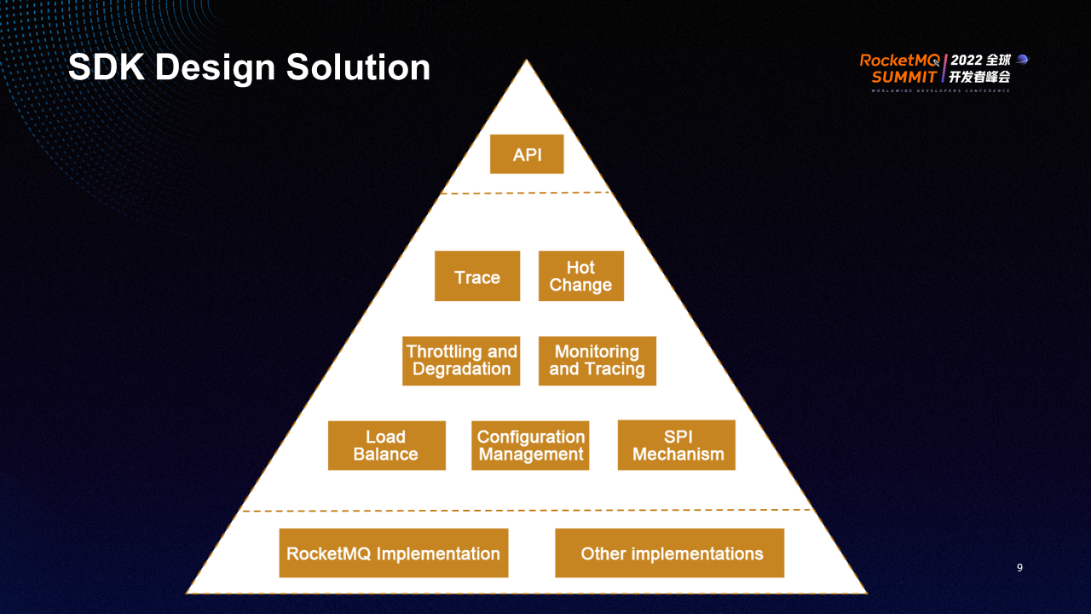

The SDK design solution adopts a pyramid structure, which is divided into three layers. The top layer is the API layer, which provides the most basic APIs. All access must go through APIs. The API is like an iceberg. Everything below (except for the simple external interface) can be upgraded and replaced without breaking compatibility. Its business development is also relatively simple. You only need to provide the topic, group, and server name for production and consumption.

The second layer is the universal layer. It aggregates the company-specific requirements we currently have (such as trace, log change, monitoring, configuration, and load balancing). Some special business scenarios need to extend these functions, so an SPI mechanism is also provided.

The third layer is the MQ implementation layer, which facilitates the expansion of more MQ products in the later stage and shields the differences between middleware products.

The SDK already followed this pyramid design when we were using ActiveMQ, so the business development workload of the migration is small. This also shows that when an open-source product is introduced within a company, appropriate encapsulation makes later maintenance and expansion easier.
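The three layers can be illustrated with a minimal sketch. It is not the company's SDK: the class names (`MQBackend`, `InMemoryBackend`, `MQClient`) and the hook-based extension point are assumptions made for illustration, and an in-memory backend stands in for the real ActiveMQ and RocketMQ clients so that the example runs without a cluster.

```python
from abc import ABC, abstractmethod
from collections import defaultdict
from typing import Callable, Dict, List, Tuple


class MQBackend(ABC):
    """MQ implementation layer: one subclass per middleware product."""

    @abstractmethod
    def send(self, topic: str, body: str) -> None: ...

    @abstractmethod
    def poll(self, topic: str, group: str) -> List[str]: ...


class InMemoryBackend(MQBackend):
    """Hypothetical stand-in for a real broker client."""

    def __init__(self) -> None:
        self._log: Dict[str, List[str]] = defaultdict(list)
        self._offsets: Dict[Tuple[str, str], int] = defaultdict(int)

    def send(self, topic: str, body: str) -> None:
        self._log[topic].append(body)

    def poll(self, topic: str, group: str) -> List[str]:
        # Each consumer group keeps its own offset into the topic log.
        start = self._offsets[(topic, group)]
        messages = self._log[topic][start:]
        self._offsets[(topic, group)] = len(self._log[topic])
        return messages


# Universal layer: hooks (trace, monitoring, ...) run around every send.
Hook = Callable[[str, str, str], None]  # (server_name, topic, body)


class MQClient:
    """API layer: business code supplies only topic, group, and server name."""

    def __init__(self, backends: Dict[str, MQBackend]) -> None:
        self._backends = backends
        self._send_hooks: List[Hook] = []

    def register_send_hook(self, hook: Hook) -> None:
        # Extension point that plays the role of the SPI mechanism here.
        self._send_hooks.append(hook)

    def send(self, server_name: str, topic: str, body: str) -> None:
        for hook in self._send_hooks:
            hook(server_name, topic, body)
        self._backends[server_name].send(topic, body)

    def consume(self, server_name: str, topic: str, group: str) -> List[str]:
        return self._backends[server_name].poll(topic, group)


client = MQClient({"earth": InMemoryBackend()})
client.register_send_hook(lambda server, topic, body: print("trace:", server, topic))
client.send("earth", "order_created", "order-1")
print(client.consume("earth", "order_created", "billing"))  # ['order-1']
```

Because business code only touches `MQClient`, swapping the backend registered under a server name does not change any calling code, which is the property that keeps the migration workload small.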

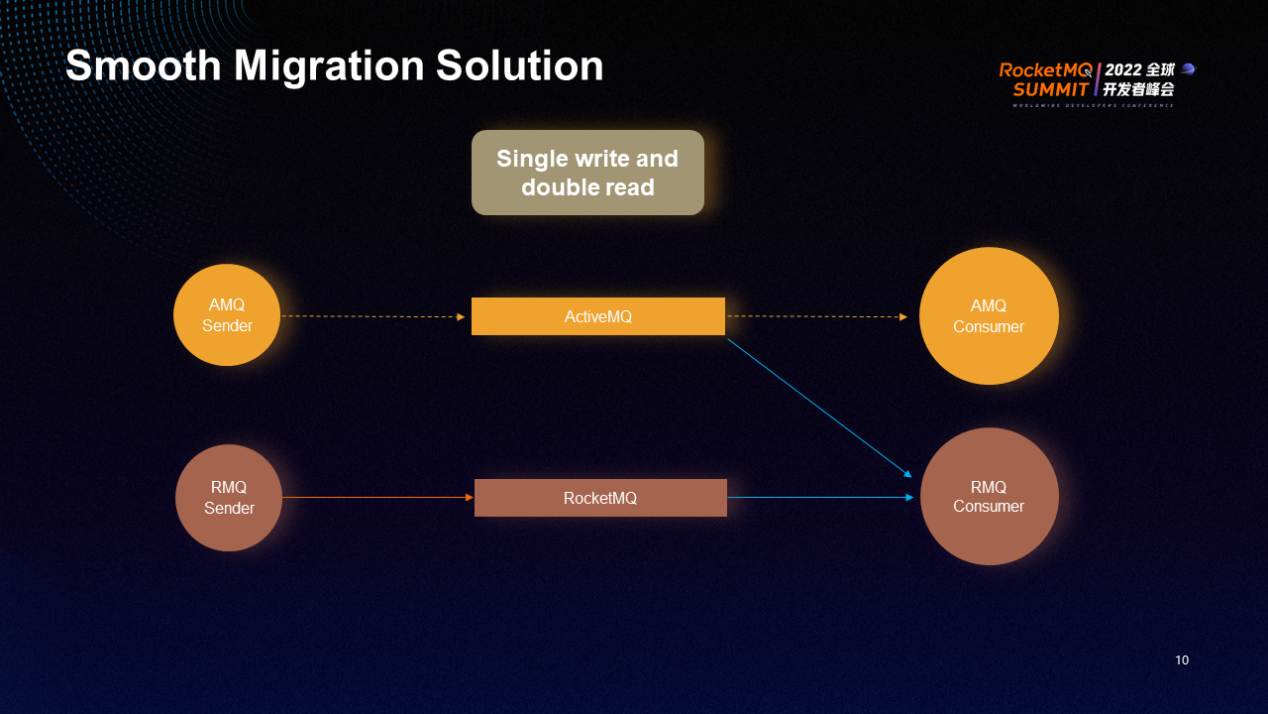

The core of the smooth migration solution is single write and double read: single write for producers and double read for consumers. Double read refers to reading both ActiveMQ and RocketMQ.

In the preceding figure, the yellow part is the production and consumption process of ActiveMQ, and the brown part is the production and consumption process of RocketMQ. The blue line represents the consumer. After the SDK is upgraded, a two-way link has been established for the consumer to perform the double read. The producer also upgrades the SDK to complete the transition from ActiveMQ to RocketMQ. Here, the SDK upgrade is based on the topic level rather than the overall service upgrade.

The business process is also divided into two steps. First, consumers go online with double read and consume from both ActiveMQ and RocketMQ, so that no messages are lost during the migration. The producer follows. Because a topic may have multiple consumers, the producer is migrated only after all of its consumers are online and confirmed error-free.
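The ordering constraint can be seen in a small simulation. This is a sketch under stated assumptions, not the SDK's code: `Cluster`, `DoubleReadConsumer`, `SingleWriteProducer`, and `switch_topic` are hypothetical names, and both clusters are in-memory queues.

```python
from collections import defaultdict
from typing import Dict, List


class Cluster:
    """In-memory stand-in for one MQ cluster (ActiveMQ or RocketMQ)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._queues: Dict[str, List[str]] = defaultdict(list)

    def send(self, topic: str, body: str) -> None:
        self._queues[topic].append(body)

    def drain(self, topic: str) -> List[str]:
        messages, self._queues[topic] = self._queues[topic], []
        return messages


class DoubleReadConsumer:
    """Consumer side: reads the same topic from every configured cluster."""

    def __init__(self, clusters: List[Cluster]) -> None:
        self._clusters = clusters

    def poll(self, topic: str) -> List[str]:
        received: List[str] = []
        for cluster in self._clusters:
            received.extend(cluster.drain(topic))
        return received


class SingleWriteProducer:
    """Producer side: each topic is written to exactly one cluster."""

    def __init__(self, clusters: Dict[str, Cluster], default: str) -> None:
        self._clusters = clusters
        self._default = default
        self._topic_target: Dict[str, str] = {}

    def switch_topic(self, topic: str, cluster_name: str) -> None:
        # Migration happens per topic, not per service.
        self._topic_target[topic] = cluster_name

    def send(self, topic: str, body: str) -> None:
        target = self._topic_target.get(topic, self._default)
        self._clusters[target].send(topic, body)


activemq, rocketmq = Cluster("mar"), Cluster("earth")
consumer = DoubleReadConsumer([activemq, rocketmq])    # step 1: consumers first
producer = SingleWriteProducer({"mar": activemq, "earth": rocketmq}, default="mar")

producer.send("order_created", "before-switch")        # still written to ActiveMQ
producer.switch_topic("order_created", "earth")        # step 2: move the producer
producer.send("order_created", "after-switch")         # now written to RocketMQ

print(consumer.poll("order_created"))  # ['before-switch', 'after-switch']
```

If the producer were switched before a consumer had enabled double read, that consumer would never see the messages written to the new cluster, which is why consumers go first.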

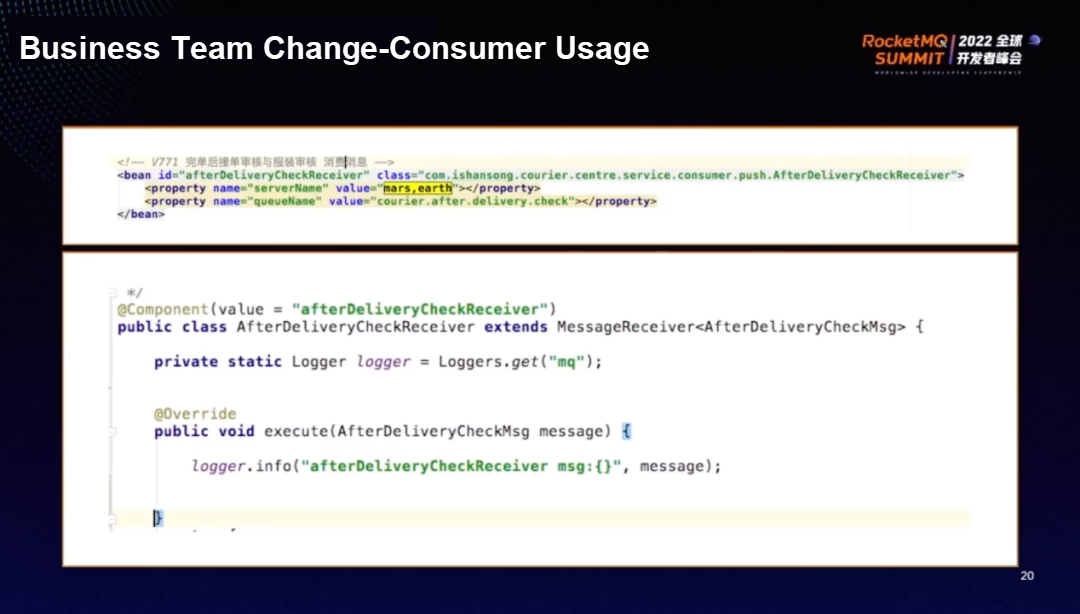

Changing the consumer takes only a few lines of code, as shown in the yellow part above. Here, "mar" is an ActiveMQ cluster, and the newly added "earth" is a RocketMQ cluster.

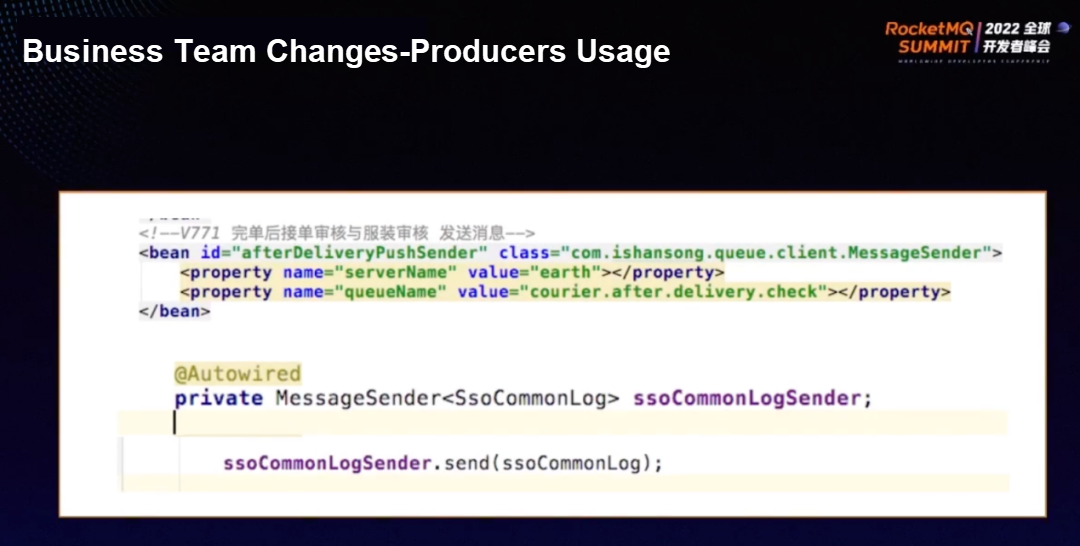

Since the producer does single write, you only need to change the server name from the original ActiveMQ cluster name to the RocketMQ cluster name.

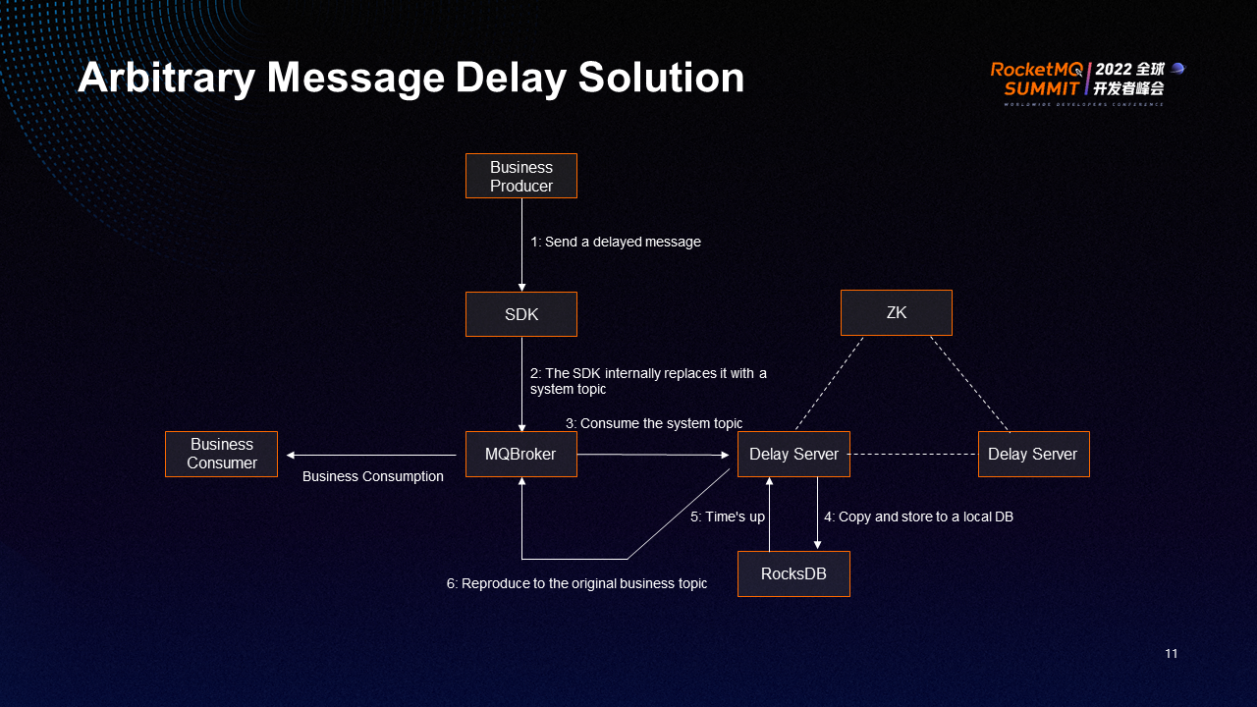

The delayed message is a function commonly used in businesses. However, the built-in delayed message of RocketMQ only supports a few fixed delay levels. Although it supports manual configuration, the configuration requires a restart of the broker. Therefore, we separately developed a delay service to schedule delayed messages.

The preceding figure shows the overall process of delayed messages. The producer sends a delayed message, the SDK redirects it to a global delayed message topic, the MQBroker cluster stores it, and the Delay Server consumes the delay topic and stores the message in its local RocksDB. When the delay expires, the message is pushed to the topic of the original business, which makes delays of arbitrary duration possible.

Instead of sending messages directly to the delay service, the topics are replaced and stored in RocketMQ. The advantage is that the existing message sending interface and all the extension capabilities on it can be reused. You only need to specify the delay time when constructing the message for the business, and everything else remains the same.
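The flow can be sketched as follows. This is an illustration under stated assumptions rather than the actual delay service: the topic name `GLOBAL_DELAY_TOPIC`, the message fields, and the `sdk_send` and `DelayServer.tick` functions are hypothetical, the broker is an in-memory dictionary, and a heap ordered by delivery time stands in for the local RocksDB store.

```python
import heapq
import itertools
from collections import defaultdict
from typing import Dict, List, Tuple

DELAY_TOPIC = "GLOBAL_DELAY_TOPIC"  # hypothetical name of the shared delay topic


class Broker:
    """In-memory stand-in for the MQ broker cluster."""

    def __init__(self) -> None:
        self.topics: Dict[str, List[dict]] = defaultdict(list)

    def send(self, topic: str, message: dict) -> None:
        self.topics[topic].append(message)

    def drain(self, topic: str) -> List[dict]:
        messages, self.topics[topic] = self.topics[topic], []
        return messages


def sdk_send(broker: Broker, topic: str, body: str, now: float,
             delay_seconds: float = 0.0) -> None:
    """SDK side: a delayed message is redirected to the delay topic."""
    if delay_seconds <= 0:
        broker.send(topic, {"body": body})
        return
    broker.send(DELAY_TOPIC, {
        "body": body,
        "origin_topic": topic,              # remembered so it can be restored
        "deliver_at": now + delay_seconds,  # any duration, not a fixed level
    })


class DelayServer:
    """Consumes the delay topic and re-publishes messages once they are due."""

    def __init__(self, broker: Broker) -> None:
        self._broker = broker
        self._pending: List[Tuple[float, int, dict]] = []  # stands in for RocksDB
        self._seq = itertools.count()  # tie-breaker for equal timestamps

    def tick(self, now: float) -> int:
        """Pull new delayed messages, then deliver everything that is due."""
        for message in self._broker.drain(DELAY_TOPIC):
            entry = (message["deliver_at"], next(self._seq), message)
            heapq.heappush(self._pending, entry)
        delivered = 0
        while self._pending and self._pending[0][0] <= now:
            _, _, message = heapq.heappop(self._pending)
            self._broker.send(message["origin_topic"], {"body": message["body"]})
            delivered += 1
        return delivered


broker = Broker()
server = DelayServer(broker)
sdk_send(broker, "order_timeout", "order-1", now=0.0, delay_seconds=37.0)
server.tick(now=10.0)   # not due yet, nothing is delivered
server.tick(now=37.0)   # due: pushed to the original topic
print(broker.drain("order_timeout"))  # [{'body': 'order-1'}]
```

Passing `now` explicitly keeps the sketch deterministic. A real service would read the clock, persist pending messages durably, and handle restarts, none of which is modeled here.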

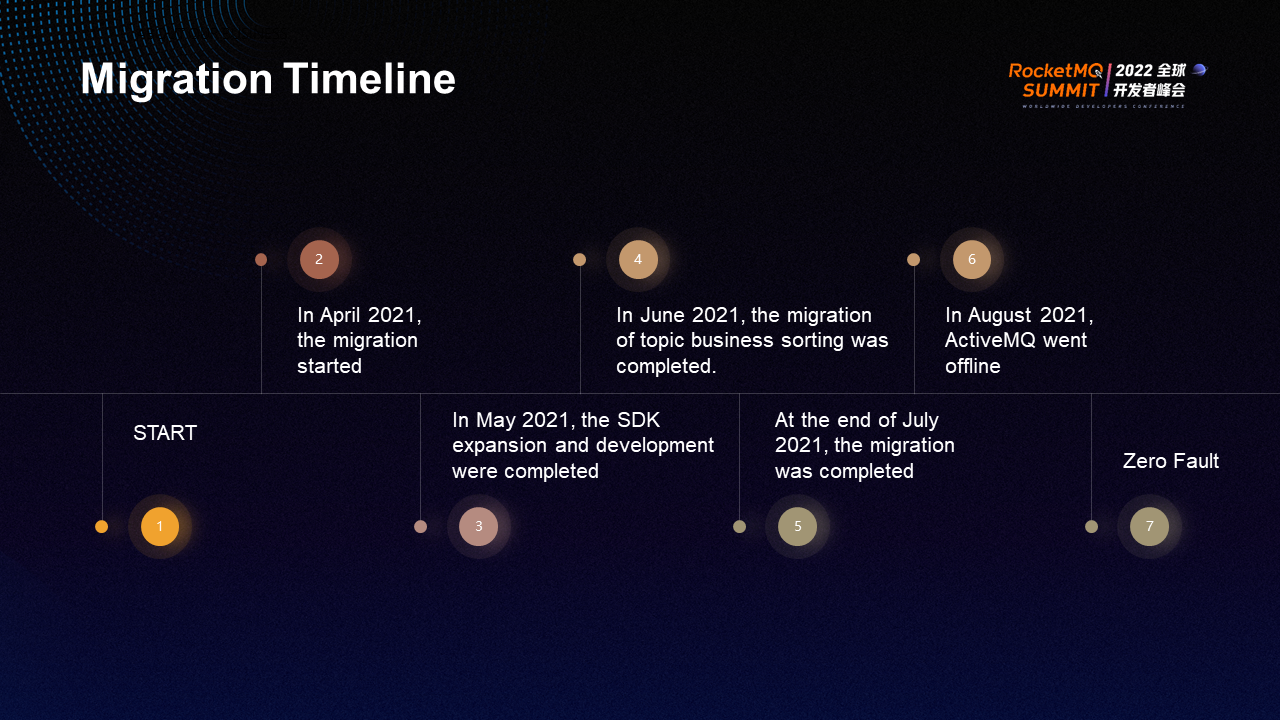

The migration started in April 2021, SDK expansion and development were completed in May, topic business sorting was completed in June, migration was completed in July, and ActiveMQ went offline in August. The migration ended perfectly.

After observing the system for the first half year after migration, we found that most of the pain points caused by ActiveMQ had been resolved.

A distributed message governance platform covers clusters, topics, and consumer groups. A cluster consists of NameServers and Brokers. Therefore, the core issue that the distributed message governance platform needs to consider is the cluster monitoring design of RocketMQ.

There are two roles: administrator (O&M) and business party (R&D). The O&M side focuses on the health status of the cluster, the sender focuses on the topic, and the consumer focuses on the consumer group.

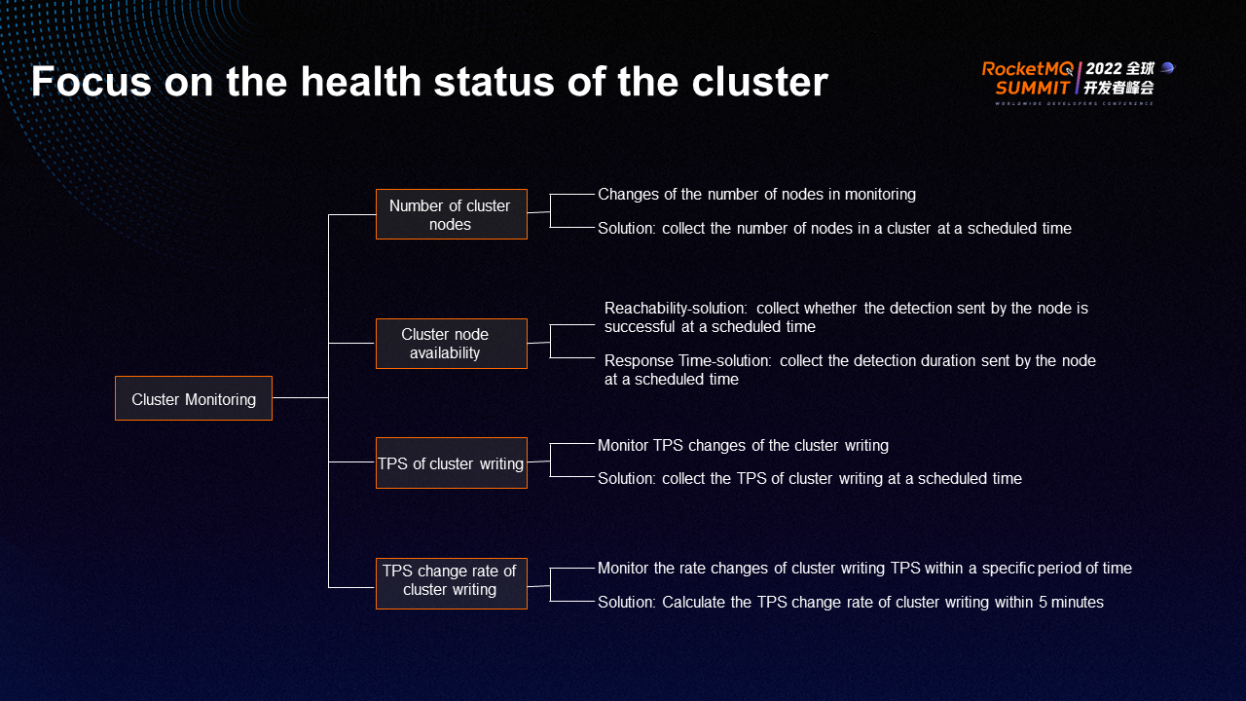

Cluster monitoring can be divided into four parts: the number of cluster nodes, cluster node availability, TPS of cluster writing, and TPS change rate of cluster writing.
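As a rough sketch of how these metrics relate, the following code derives write TPS and its change rate from periodic samples of a cumulative message counter. The `Sample` fields, function names, and thresholds are assumptions for illustration, not the platform's actual data model, and node count and node availability are collapsed into a single `alive_nodes` value for brevity.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Sample:
    timestamp: float        # seconds
    total_messages_in: int  # cumulative messages written to the cluster
    alive_nodes: int        # nodes that answered the health check


def write_tps(prev: Sample, curr: Sample) -> float:
    """Average write TPS between two samples."""
    elapsed = curr.timestamp - prev.timestamp
    if elapsed <= 0:
        raise ValueError("samples must be in increasing time order")
    return (curr.total_messages_in - prev.total_messages_in) / elapsed


def change_rate(prev_tps: float, curr_tps: float) -> Optional[float]:
    """Relative change of TPS; None when there is no baseline to compare to."""
    if prev_tps == 0:
        return None
    return (curr_tps - prev_tps) / prev_tps


def check_cluster(samples: List[Sample], expected_nodes: int,
                  max_change: float) -> List[str]:
    """Return alert messages based on the latest three samples."""
    s0, s1, s2 = samples[-3:]
    alerts = []
    if s2.alive_nodes < expected_nodes:
        alerts.append(f"nodes: {s2.alive_nodes}/{expected_nodes} alive")
    rate = change_rate(write_tps(s0, s1), write_tps(s1, s2))
    if rate is not None and abs(rate) > max_change:
        alerts.append(f"write TPS changed by {rate:+.0%}")
    return alerts


history = [
    Sample(timestamp=0, total_messages_in=0, alive_nodes=4),
    Sample(timestamp=60, total_messages_in=60_000, alive_nodes=4),   # 1000 TPS
    Sample(timestamp=120, total_messages_in=90_000, alive_nodes=3),  # 500 TPS
]
print(check_cluster(history, expected_nodes=4, max_change=0.3))
# ['nodes: 3/4 alive', 'write TPS changed by -50%']
```

Alerting on the change rate rather than on absolute TPS catches sudden drops or spikes even when normal traffic differs widely between festivals and ordinary days.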

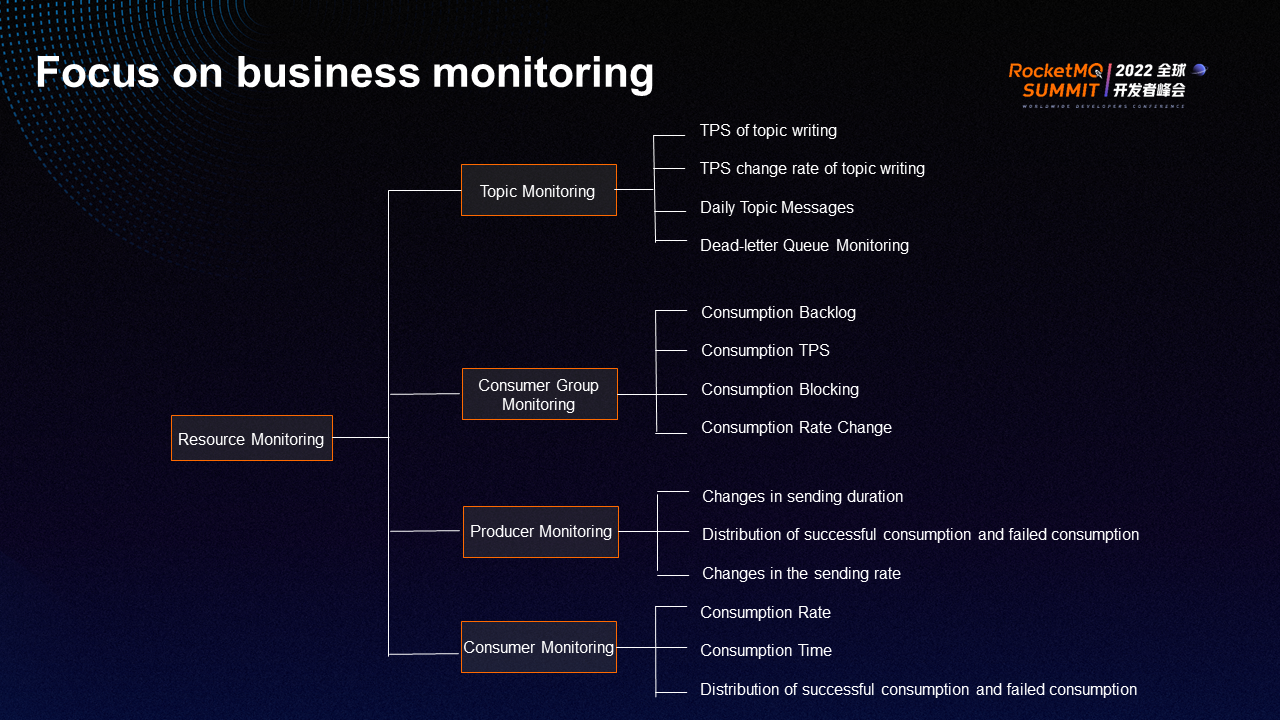

The R&D side focuses on topic monitoring, consumer group monitoring, producer monitoring, and consumer monitoring.

Join the Apache RocketMQ Community: https://github.com/apache/rocketmq
