Text To Speech (TTS) is the component of a human-machine dialogue system that allows the machine to speak. It draws on both linguistics and psychology. With the help of a built-in chip and a neural network design, it converts text into a natural-sounding voice stream.

Text To Speech (TTS) technology turns text into speech in real time, with conversion times measured in seconds. An intelligent voice controller keeps the rhythm of the spoken output smooth, so the listener hears something closer to natural speech than the flat, choppy delivery of a typical computer voice.

Text To Speech (TTS) is a speech synthesis application that converts text stored on a computer, such as help files or web pages, into natural speech output. It not only helps visually impaired people read on-screen information, but also makes text documents easier to consume. Voice-driven mail and voice-response systems are examples of Text To Speech applications, which are frequently combined with speech recognition.

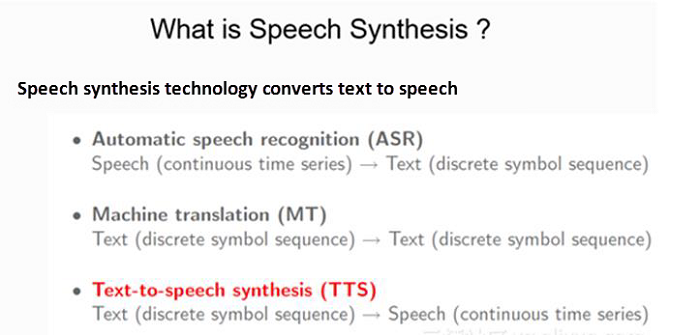

Text-to-speech conversion is possible thanks to speech synthesis technology, a must-have component for human-computer interaction. Speech recognition technology lets computers "listen" to human speech and convert audio signals into words. Speech synthesis technology does the reverse: it lets computers "say" the words we type into them by transforming text into speech.

Application Scenarios and Research Scope of Speech Synthesis Technology

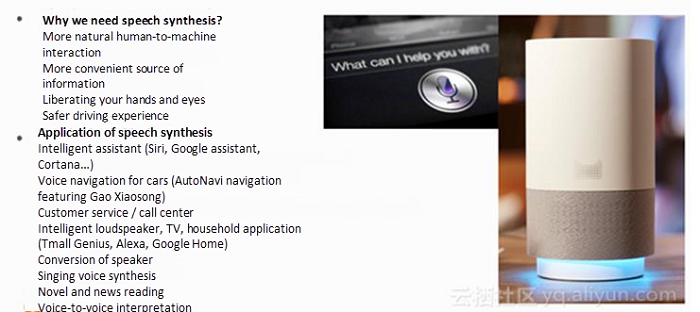

For human-to-computer interaction, speech synthesis technology is a must-have component. It's widely used in a variety of scenarios, including map navigation apps (like AutoNavi's voice navigation with Gao Xiaosong), voice assistants (Siri, Google Assistant, Cortana), novels and news readers (Shuqi.com, Baidu Novels), smart speakers (Alexa, Tmall Genie), real-time voice interpretation, and even airport, subway, and bus announcements.

Aside from text-to-speech conversion, speech synthesis technology includes, but is not limited to, speaker conversion (as seen in James Bond films), speech bandwidth expansion, singing voice synthesis (e.g., Hatsune Miku, a Japanese virtual pop star), whisper synthesis, dialect synthesis (dialects of Sichuan and Guangdong Provinces, articulation of ancient Chinese), animal sound synthesis, and so on.

A Typical Speech Synthesis System Flow-process Diagram

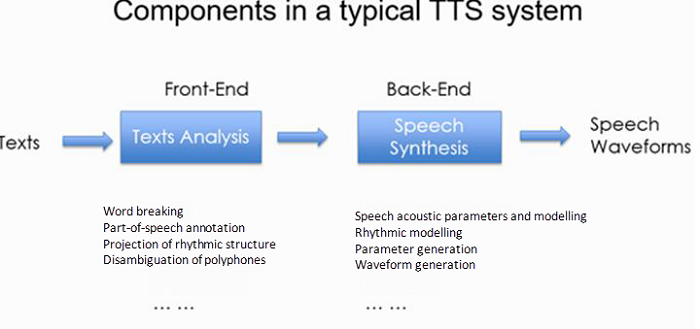

As shown in the diagram above, a typical speech synthesis system consists of two components: a front end and a back end.

The front-end component is responsible for analyzing the text input and extracting the information the back end needs for modeling. This includes word segmentation (deciding where word boundaries fall), part-of-speech tagging (e.g., noun, verb, adjective), prediction of prosodic structure (e.g., whether a position is a prosodic phrase boundary), and disambiguation of polyphones (words or characters with more than one pronunciation).
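The front-end steps above can be illustrated with a small sketch. Everything in it is an assumption made for illustration: the tiny `POS_LEXICON`, the `HETERONYMS` table with ARPAbet-style pronunciations, and the rule that a word following a determiner or adjective is a noun. A real front end would rely on trained models rather than lookup tables and hand-written rules.

```python
import re

# Hypothetical toy lexicon: word -> part-of-speech tag.
POS_LEXICON = {"i": "PRON", "will": "AUX", "the": "DET", "a": "DET",
               "new": "ADJ", "song": "NOUN", "then": "ADV", "play": "VERB"}

# Words whose pronunciation depends on the part of speech (illustrative entries).
HETERONYMS = {"record": {"NOUN": "R EH1 K ER0 D", "VERB": "R IH0 K AO1 R D"}}


def analyze(text):
    """Segment text into words, tag them, resolve heteronyms, mark phrase ends."""
    tokens = re.findall(r"[A-Za-z']+|[.,!?;]", text)
    words = []
    prev_tag = None
    for tok in tokens:
        if tok in ".,!?;":
            # Treat punctuation as a prosodic phrase boundary after the previous word.
            if words:
                words[-1]["phrase_end"] = True
            prev_tag = None
            continue
        word = tok.lower()
        if word in HETERONYMS:
            # Crude context rule: after a determiner or adjective, assume a noun.
            tag = "NOUN" if prev_tag in ("DET", "ADJ") else "VERB"
        else:
            tag = POS_LEXICON.get(word, "UNK")
        words.append({"word": word, "pos": tag,
                      "pron": HETERONYMS.get(word, {}).get(tag),
                      "phrase_end": False})
        prev_tag = tag
    return words


for item in analyze("I will record the song, then play the record."):
    print(item)
```

In this sentence the first "record" follows an auxiliary verb and is tagged as a verb, while the second follows "the" and is tagged as a noun, so the two occurrences receive different pronunciations.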

The back-end component takes the results of the front-end analysis and models the relationship between the speech and text data. During synthesis, the back end uses the analyzed text input and the trained acoustic models to generate the output speech signal.

Speech Production Process

When a person speaks, airflow from the lungs passes the vocal cords, is shaped by the oral cavity, and is radiated from the lips. When a person whispers or produces unvoiced sounds, the airflow does not make the vocal cords vibrate, and the sound source can be modeled as a white noise signal. When a person produces vowels and voiced consonants, the vocal cords vibrate periodically, and the sound source can be modeled as an impulse train. The frequency at which the vocal cords vibrate is called the fundamental frequency (f0). The shape of the oral cavity determines the timbre of the speech and which sound is heard. Simply put, human speech is the result of an excitation signal (airflow) being modified by a filter (oral cavity shape) and radiated from the lips.
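The source-filter view described above can be sketched in a few lines. This is a minimal illustration, not a usable synthesizer: the single two-pole resonator stands in for the whole oral cavity, and the sample rate, the 100 Hz f0, and the 700 Hz resonance with 100 Hz bandwidth are arbitrary values chosen for the example.

```python
import math
import random


def excitation(n_samples, sample_rate, f0=None, seed=0):
    """Impulse train at f0 Hz for voiced sounds, white noise when f0 is None."""
    if f0 is None:
        rng = random.Random(seed)
        return [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]
    period = round(sample_rate / f0)  # samples between vocal-cord pulses
    return [1.0 if i % period == 0 else 0.0 for i in range(n_samples)]


def resonator(signal, sample_rate, center_hz, bandwidth_hz):
    """Two-pole resonant filter, a crude stand-in for one vocal-tract resonance."""
    r = math.exp(-math.pi * bandwidth_hz / sample_rate)  # pole radius (< 1, stable)
    theta = 2.0 * math.pi * center_hz / sample_rate      # pole angle
    a1, a2 = 2.0 * r * math.cos(theta), -r * r
    out = []
    y1 = y2 = 0.0
    for x in signal:
        # y[n] = x[n] + a1 * y[n-1] + a2 * y[n-2]
        y = x + a1 * y1 + a2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


SAMPLE_RATE = 16000  # assumed sample rate in Hz
voiced = resonator(excitation(1600, SAMPLE_RATE, f0=100.0), SAMPLE_RATE, 700.0, 100.0)
whisper = resonator(excitation(1600, SAMPLE_RATE), SAMPLE_RATE, 700.0, 100.0)
```

Both signals pass through the same filter; only the excitation differs, which is the distinction the paragraph draws between voiced and whispered speech.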

Existing speech synthesis systems are classified into three types based on the different methods and frameworks adopted:

Parametric speech synthesis system

Splicing speech synthesis system

Waveform-based statistical speech synthesis system (WaveNet)

Currently, the first two are the mainstream systems used by big companies, while the WaveNet method is the most active area of research. Let's look at each of these systems in detail:

Parametric Speech Synthesis System

A parametric speech synthesis system works in three steps. In the analysis step, a vocoder built around the characteristics of speech production converts speech waveforms into acoustic and prosodic parameters, such as the frequency spectrum, fundamental frequency, and duration. In the modeling step, models are trained for these parameters. In the synthesis step, a vocoder reconstructs the time-domain speech signal from the parameters predicted by the models.
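As an illustration of the analysis step, the fundamental frequency of a short frame can be estimated from its autocorrelation peak. This is a textbook method shown as a sketch; it is not necessarily what any particular vocoder does, and the search range of 60 to 400 Hz and the synthetic 200 Hz test tone are assumptions for the example.

```python
import math


def estimate_f0(frame, sample_rate, f0_min=60.0, f0_max=400.0):
    """Estimate f0 in Hz as sample_rate divided by the best autocorrelation lag."""
    min_lag = int(sample_rate / f0_max)  # shortest period considered
    max_lag = int(sample_rate / f0_min)  # longest period considered
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the frame and a copy of itself shifted by `lag`.
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


SAMPLE_RATE = 8000  # assumed sample rate in Hz
# Synthetic stand-in for a voiced frame: a pure 200 Hz tone, 50 ms long.
frame = [math.sin(2.0 * math.pi * 200.0 * i / SAMPLE_RATE) for i in range(400)]
print(estimate_f0(frame, SAMPLE_RATE))
```

Real speech is noisier than a pure tone, so practical estimators add steps such as voicing detection and smoothing across frames, which this sketch leaves out.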

The advantages of a parametric speech synthesis system are smaller models, easy adjustment of model parameters (for speaker conversion, or for raising or lowering the pitch), and stable synthesis. The drawback is that parameterization loses information, so the synthesized speech has lower acoustic quality than the original recording.

Splicing Speech Synthesis System

In this approach, also known as concatenative synthesis, the original recording is not parameterized; instead, it is cut into basic units and stored. During synthesis, algorithms or models compute the target cost and splicing (concatenation) cost of each candidate unit, the Viterbi algorithm selects the sequence of units with the lowest total cost, and the selected units are "spliced" together using signal processing methods such as PSOLA (Pitch Synchronous Overlap and Add) and WSOLA (Waveform Similarity based Overlap-Add).
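The unit selection step can be sketched as a Viterbi search over candidate units. The cost functions here are hypothetical stand-ins: each unit is reduced to a name and a pitch value, the target cost is the distance from the desired pitch, and the splicing cost is half the pitch jump between neighboring units. Real systems use much richer features and learned costs.

```python
def select_units(targets, candidates, target_cost, join_cost):
    """Choose one candidate per target so the summed cost is minimal (Viterbi)."""
    # best[t][j] = (lowest total cost of a path ending in candidate j, backpointer)
    best = [[(target_cost(targets[0], c), None) for c in candidates[0]]]
    for t in range(1, len(targets)):
        row = []
        for c in candidates[t]:
            costs = [best[t - 1][k][0] + join_cost(p, c)
                     for k, p in enumerate(candidates[t - 1])]
            k_best = min(range(len(costs)), key=costs.__getitem__)
            row.append((costs[k_best] + target_cost(targets[t], c), k_best))
        best.append(row)
    # Trace back from the cheapest final candidate.
    j = min(range(len(best[-1])), key=lambda k: best[-1][k][0])
    total = best[-1][j][0]
    path = []
    for t in range(len(targets) - 1, -1, -1):
        path.append(candidates[t][j])
        j = best[t][j][1]
    return path[::-1], total


# Toy data: desired pitch per position, and (name, pitch) candidate units.
targets = [100, 120, 110]
candidates = [
    [("a1", 100), ("a2", 130)],
    [("b1", 150), ("b2", 118)],
    [("c1", 111), ("c2", 90)],
]
path, total = select_units(
    targets,
    candidates,
    target_cost=lambda want, unit: abs(want - unit[1]),
    join_cost=lambda prev, cur: 0.5 * abs(prev[1] - cur[1]),
)
print([name for name, _ in path], total)  # prints ['a1', 'b2', 'c1'] 15.5
```

The search cost grows with the number of positions times the square of the candidates per position, which is one reason large unit databases make this approach expensive.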

The benefit of splicing speech synthesis is acoustic quality: because the speech units are not parameterized, none of the quality of the original recording is lost. The drawback is that when the database is small and lacks suitable speech units, the synthesized speech may contain glitches, or its rhythm or timbre may be inconsistent. Avoiding this requires a larger unit database and therefore more storage.

Waveform-Based Statistical Speech Synthesis System (WaveNet)

DeepMind was the first to introduce WaveNet, whose basic building block is the dilated CNN (dilated convolutional neural network). In this method the speech signal is not parameterized; instead, the neural network predicts each sampling point in the time domain to generate the speech waveform. The advantage is that acoustic fidelity is better than with parametric synthesis, although quality is slightly lower than with splicing synthesis. It is, however, more stable than the splicing method. The drawback is that it needs more computing power, and synthesis is slower because every sampling point must be predicted in turn. WaveNet showed that speech signals can be predicted directly in the time domain, which had previously been considered infeasible. It is currently one of the most active topics in speech synthesis research.
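Two ideas from this section can be shown with a small sketch: how stacked dilated causal convolutions widen the context seen by each output sample, and why sample-by-sample generation is slow. It is only an illustration. It omits the gated activations, residual connections, and output distribution of the real model, and the all-ones weights and the `predict_next` stand-in are assumptions made for the example.

```python
def receptive_field(dilations, kernel_size=2):
    """Number of input samples that can influence one output sample."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


def dilated_causal_conv(signal, weights, dilation):
    """y[t] = sum_k weights[k] * signal[t - k * dilation], using only past samples."""
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:  # samples before the start count as zero
                acc += w * signal[idx]
        out.append(acc)
    return out


def generate(n_samples, predict_next, context):
    """Autoregressive loop: each new sample depends on all earlier ones."""
    samples = list(context)
    for _ in range(n_samples):
        samples.append(predict_next(samples))
    return samples[len(context):]


# Doubling dilations 1, 2, 4, ..., 512 give a receptive field of 1024 samples.
print(receptive_field([2 ** i for i in range(10)]))

# Push a single impulse through three stacked layers with dilations 1, 2, 4.
x = [1.0] + [0.0] * 15
for d in (1, 2, 4):
    x = dilated_causal_conv(x, [1.0, 1.0], d)
print(sum(1 for v in x if v != 0.0))  # 8 samples respond, matching receptive_field([1, 2, 4])
```

Because `generate` cannot compute sample t + 1 before sample t exists, one second of 16 kHz audio takes 16,000 sequential network evaluations, which is the cost the paragraph refers to.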
