By Alibaba Cloud Storage Team

The performance of Non-Volatile Memory Express solid-state drives (NVMe SSDs) can be hard to predict. To understand it, we need to look inside the SSD and analyze the factors that affect its performance from multiple perspectives. We also need to consider how storage software can optimize NVMe SSD performance and thereby promote the use of flash memory in Internet data centers (IDCs). This article briefly introduces SSDs, analyzes the factors that influence NVMe SSD performance, and then offers some thoughts on flash storage design.

The storage industry has undergone dramatic changes in recent years with the arrival of semiconductor storage. Semiconductor storage media have inherent advantages over traditional magnetic disks: they offer much better reliability, performance, and power consumption. NVMe SSDs are among the most commonly used semiconductor storage devices today. They use the PCIe interface to communicate with the host, which greatly improves read/write performance and lets the storage medium deliver its full potential. Internally, NVMe SSDs usually store data on NAND flash. NAND flash has drawbacks such as read/write asymmetry and limited endurance. To hide these drawbacks, NVMe SSDs run a Flash Translation Layer (FTL) internally, which presents upper-layer applications with the same interface and usage model as a conventional disk.

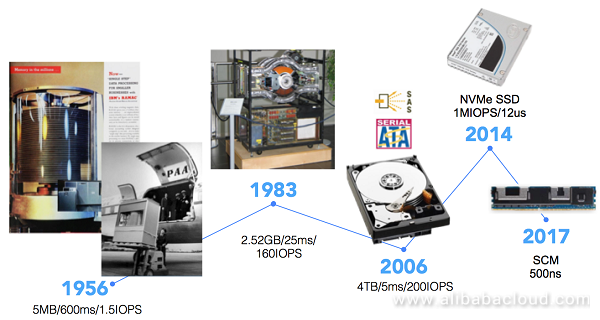

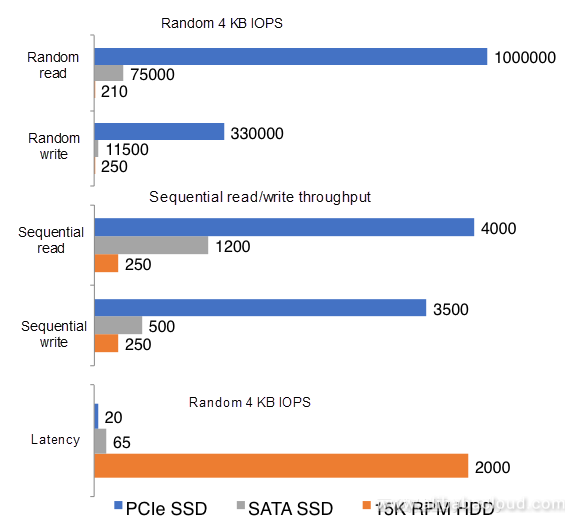

As shown in the figure above, the development of semiconductor storage media has greatly improved the I/O performance of computer systems. There has long been a widening performance gap between magnetic disks and the CPU. As storage media continue to evolve, this gap will disappear. From the perspective of the whole system, the I/O bottleneck is shifting from the backend disk to the CPU and the network. As shown in the following figure, at a 4 KB access granularity, the random read IOPS of an NVMe SSD is about 5,000 times that of a 15k rpm HDD, and its random write IOPS is more than 1,000 times higher. As non-volatile storage media develop further, semiconductor storage will offer even better performance and I/O QoS.
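To see where ratios like these come from, the random IOPS of a 15k rpm HDD can be estimated from its mechanics. This is a back-of-envelope sketch, not measured data: the 3.5 ms average seek time is an assumed typical value rather than a figure from this article, the function name `hdd_random_iops` is hypothetical, and the 4 KB transfer time is ignored as negligible.

```python
# Back-of-envelope estimate of random 4 KB IOPS for a 15k rpm HDD.
# ASSUMPTION: the 3.5 ms average seek time is a hypothetical typical value,
# not a figure from the article; 4 KB transfer time is ignored as negligible.

def hdd_random_iops(rpm: float, avg_seek_ms: float) -> float:
    """Estimate random IOPS as 1 / (average seek + average rotational latency)."""
    # On average the platter turns half a revolution before the target sector arrives.
    rotational_latency_ms = 60_000 / rpm / 2
    service_time_ms = avg_seek_ms + rotational_latency_ms
    return 1000 / service_time_ms


hdd_iops = hdd_random_iops(rpm=15_000, avg_seek_ms=3.5)
# Apply the article's ratio: NVMe random read IOPS is about 5,000x the HDD's.
implied_nvme_read_iops = hdd_iops * 5_000

print(f"HDD random IOPS        ~ {hdd_iops:.0f}")
print(f"Implied NVMe read IOPS ~ {implied_nvme_read_iops:,.0f}")
```

Under these assumptions a 15k rpm disk spends about 5.5 ms per random I/O and manages only a couple of hundred IOPS, so the 5,000x ratio implies an NVMe SSD in the high hundreds of thousands of random read IOPS.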

The storage media revolution creates opportunities to improve storage system performance, but it also poses many challenges for storage system design. Disk-oriented storage systems are no longer suitable for new storage media. We need to redesign the storage software stack for these media so that it unleashes their performance and avoids the new issues they bring. Rebuilding storage software stacks and storage systems for new storage media has been a hot topic in the storage field in recent years.

When designing an NVMe SSD-based storage system, we must first be familiar with the features of NVMe SSDs, and understand the factors that affect SSD performance. In the design process, we need to use software to optimize the storage system based on the SSD features.

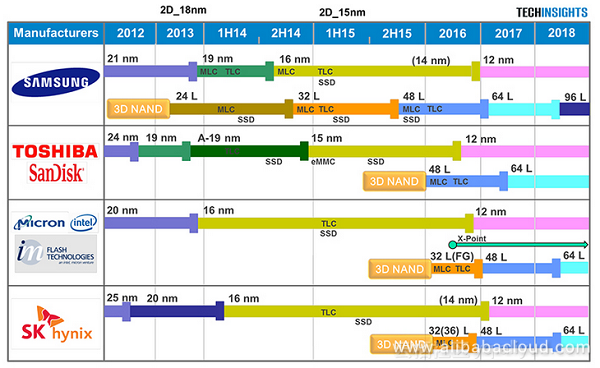

At present, mainstream NVMe SSDs use NAND flash as the storage medium. NAND flash technology has developed rapidly in recent years, mainly in two directions: increasing storage density through 3D stacking, and increasing the number of bits stored per cell. 3D NAND flash has become the standard for SSDs, and all mainstream SSDs now use it. Triple-level cell (TLC) NAND flash stores three bits per cell. This year, per-cell density increased by 33% to four bits per cell with quad-level cell (QLC) NAND flash. The continuous evolution of NAND flash has steadily increased SSD capacity. Today, a single 3.5-inch SSD can hold up to 128 TB, far more than a conventional disk. The following figure shows how NAND flash technology has developed and evolved in recent years.
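The 33% figure follows directly from bits-per-cell arithmetic: going from 3 to 4 bits is a one-third increase. The sketch also shows the cost. An n-bit cell must distinguish 2^n charge levels, so a QLC cell needs 16 levels where a TLC cell needs 8, which narrows the margin between adjacent levels. The dictionary and function names are illustrative only.

```python
# Bits per cell vs. the number of charge (threshold-voltage) levels a cell must distinguish.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def voltage_states(bits_per_cell: int) -> int:
    """An n-bit cell needs 2**n distinguishable charge levels."""
    return 2 ** bits_per_cell


def density_gain(old_bits: int, new_bits: int) -> float:
    """Relative increase in per-cell density when moving from old_bits to new_bits."""
    return (new_bits - old_bits) / old_bits


for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s)/cell, {voltage_states(bits)} levels")

print(f"TLC -> QLC density gain: {density_gain(3, 4):.0%}")  # 33%
```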

As the figure above shows, new non-volatile memory technologies have emerged alongside NAND flash. Intel has already launched Apache Pass (AEP) persistent memory. The future is expected to be an era of semiconductor storage in which non-volatile memory and flash memory coexist.

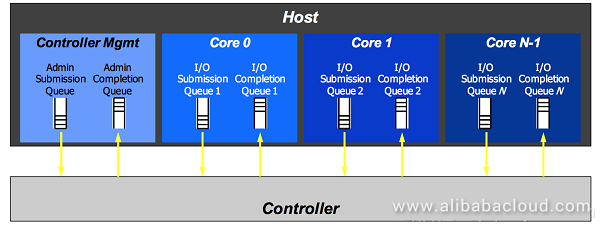

From an interface perspective, NVMe SSDs are not much different from conventional disks: both appear as standard block devices in Linux. Because NVMe SSDs use the newer NVMe protocol, their software stack is significantly simpler. A major difference between traditional SATA/SAS and NVMe is that NVMe introduces a multi-queue mechanism, as shown in the following figure.

What is multi-queue technology? A host (an x86 server) and an SSD exchange data through producer-consumer queues. The Advanced Host Controller Interface (AHCI) protocol defines only one command queue, so a host can interact with an HDD through only one queue, even when the host has a multi-core CPU. In the disk era, one queue was sufficient because disks were slow. All CPU cores shared one queue to interact with the disk, which could cause contention between cores, but the overhead of this contention was negligible compared to disk latency. The single-queue model also has an advantage: a single I/O scheduler can reorder the requests in that queue to optimize their I/O order.
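The single-queue model can be pictured as one lock-protected FIFO that every core feeds. This is a minimal Python sketch under stated assumptions, not AHCI itself: `SingleSharedQueue` and `core_workload` are hypothetical names, threads stand in for CPU cores, and sorting by LBA is a simplified stand-in for the I/O scheduler that reorders requests in the shared queue.

```python
import threading
from collections import deque


class SingleSharedQueue:
    """Hypothetical model of a single submission queue shared by all cores.

    Every core (modeled as a thread) takes the same lock to enqueue,
    which is where cross-core contention comes from.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._requests: deque = deque()  # entries are (core_id, lba)

    def submit(self, core_id: int, lba: int) -> None:
        with self._lock:  # all cores serialize on this one lock
            self._requests.append((core_id, lba))

    def dispatch_sorted(self) -> list:
        """Drain the queue, letting one scheduler order requests by LBA.

        Sorting by LBA is a simplified stand-in for elevator-style
        scheduling that reduces disk head movement.
        """
        with self._lock:
            batch = sorted(self._requests, key=lambda req: req[1])
            self._requests.clear()
        return batch


def core_workload(queue: SingleSharedQueue, core_id: int, lbas: list) -> None:
    for lba in lbas:
        queue.submit(core_id, lba)


queue = SingleSharedQueue()
workloads = {0: [900, 10], 1: [500, 40], 2: [70, 300]}  # hypothetical LBAs per core
threads = [threading.Thread(target=core_workload, args=(queue, cid, lbas))
           for cid, lbas in workloads.items()]
for t in threads:
    t.start()
for t in threads:
    t.join()

print([lba for _, lba in queue.dispatch_sorted()])  # [10, 40, 70, 300, 500, 900]
```

For a slow disk, the lock is cheap relative to a multi-millisecond seek, and one global view of all pending requests is what makes the reordering possible.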

Semiconductor storage media are far faster than disks, so the assumptions behind the AHCI protocol no longer hold. The NVMe protocol was created in this context to replace AHCI. The command set has been redefined at the software level, and the SCSI/ATA command set is no longer used. In the NVMe era, peripherals sit closer to the CPU, and connection-oriented storage networks such as Serial Attached SCSI (SAS) are no longer needed. Unlike earlier protocols such as AHCI and SAS, NVMe is a streamlined specification designed for new storage media. With NVMe, storage peripherals connect directly to the CPU's local bus, which greatly improves performance. NVMe also uses multiple queues between the host and the SSD to match the trend toward multi-core CPUs, so that each CPU core can interact with the SSD through its own independent hardware queue pair.

From a software perspective, each CPU core can create a queue pair to interact with the SSD. A queue pair consists of a submission queue and a completion queue. The CPU places commands into a submission queue, and the SSD places completions into the associated completion queue. The SSD hardware and host driver software control the head and tail pointers of queues to complete the data interaction.
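The queue-pair handshake can be modeled as two ring buffers with producer-owned tails and consumer-owned heads. This Python sketch is a simplified, hypothetical model: `RingQueue`, `QueuePair`, and their methods are illustrative names, and the SSD side is simulated in software. It keeps the NVMe convention that a queue is full when the head is one slot ahead of the tail, but omits real details such as memory-mapped doorbell registers, the completion-queue phase tag, and interrupts.

```python
from dataclasses import dataclass, field


@dataclass
class RingQueue:
    """Fixed-size circular queue: the producer advances tail, the consumer advances head.

    One slot stays unused: empty when head == tail, full when (tail + 1) % size == head.
    """
    size: int
    head: int = 0
    tail: int = 0
    slots: list = field(default_factory=list)

    def __post_init__(self) -> None:
        self.slots = [None] * self.size

    def is_empty(self) -> bool:
        return self.head == self.tail

    def is_full(self) -> bool:
        return (self.tail + 1) % self.size == self.head

    def push(self, entry) -> None:  # producer side
        if self.is_full():
            raise RuntimeError("queue full")
        self.slots[self.tail] = entry
        self.tail = (self.tail + 1) % self.size

    def pop(self):  # consumer side
        if self.is_empty():
            raise RuntimeError("queue empty")
        entry = self.slots[self.head]
        self.head = (self.head + 1) % self.size
        return entry


class QueuePair:
    """Hypothetical per-core queue pair: host -> SQ -> SSD -> CQ -> host."""

    def __init__(self, depth: int) -> None:
        self.sq = RingQueue(depth)  # host produces commands, SSD consumes them
        self.cq = RingQueue(depth)  # SSD produces completions, host consumes them

    def host_submit(self, command_id: int, opcode: str, lba: int) -> None:
        self.sq.push((command_id, opcode, lba))
        # A real driver would now write the new SQ tail to a doorbell register.

    def host_reap(self) -> list:
        done = []
        while not self.cq.is_empty():
            done.append(self.cq.pop())
        # A real driver would now write the new CQ head to a doorbell register.
        return done

    def device_process(self) -> None:
        """Simulated controller: consume every queued command and post a completion."""
        while not self.sq.is_empty():
            command_id, _opcode, _lba = self.sq.pop()
            self.cq.push((command_id, "success"))


# One queue pair per CPU core, so cores never share (or lock) a queue.
pairs = {core: QueuePair(depth=8) for core in range(4)}
pairs[0].host_submit(1, "read", 100)
pairs[0].host_submit(2, "write", 200)
pairs[0].device_process()
print(pairs[0].host_reap())  # [(1, 'success'), (2, 'success')]
```

Because each core owns its queue pair, the submit path in this model needs no lock shared with other cores, which is the point of the multi-queue design.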

Compared to a disk, the biggest change in an NVMe SSD is the storage medium itself. NVMe SSDs currently use 3D NAND flash. NAND flash consists of an array of memory cells, each of which uses a floating gate or a charge trap to store charge, and the amount of stored charge encodes the data. Because of capacitive effects, wear, aging, and operating-voltage disturbance, NAND flash inherently leaks charge, which can change the stored data. NAND flash is therefore an inherently unreliable medium that is prone to bit flips. SSDs turn unreliable NAND flash into a reliable storage medium through their controllers and firmware.

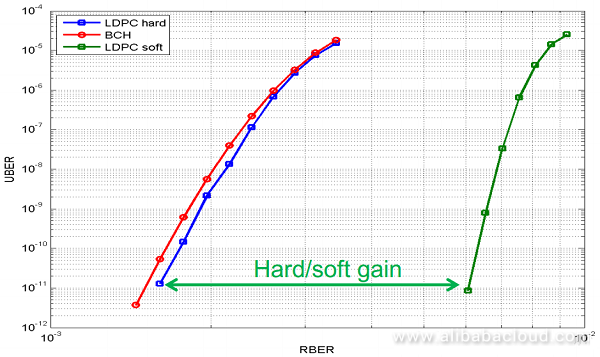

To build reliable storage on such an unreliable medium, the SSD does a great deal of work internally. At the hardware level, the SSD handles frequent bit flips with an error-correcting code (ECC) hardware unit. Each time data is written, the ECC unit computes an ECC for it; when the data is read back, the ECC unit uses that code to correct flipped bits. The ECC unit is integrated into the SSD controller, and its correction strength is a key measure of the controller's capability. In the MLC era, Bose-Chaudhuri-Hocquenghem (BCH) coding could correct 100 bit flips per 4 KB of data. In the TLC era, however, bit flips are far more frequent, so SSDs need low-density parity-check (LDPC) coding, which has a stronger error correction capability. LDPC hard-decision decoding can recover data with up to 550 bit flips per 4 KB. The following figure compares the capabilities of LDPC hard-decision decoding, BCH, and LDPC soft-decision decoding. LDPC soft-decision decoding corrects even more errors, so it is usually used when hard-decision decoding fails. Its drawback is higher I/O latency.
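The bit-flip figures above translate into a tolerable raw bit error rate once they are divided by the 32,768 data bits in 4 KB. The decoder-selection function is a hypothetical simplification of the hard-then-soft read path: real LDPC decoding succeeds or fails probabilistically rather than at a fixed bit-flip count, and no soft-decision limit is modeled because the text gives none. Constant and function names are illustrative.

```python
# Tolerable raw bit error rate implied by the article's figures:
# BCH corrects about 100 flipped bits per 4 KB, LDPC hard decision about 550.
BITS_PER_4KB = 4 * 1024 * 8  # 32,768 data bits

BCH_LIMIT = 100
LDPC_HARD_LIMIT = 550


def correctable_rber(max_bit_flips: int) -> float:
    """Fraction of bits in a 4 KB chunk that may flip and still be corrected."""
    return max_bit_flips / BITS_PER_4KB


def choose_decoder(bit_flips: int) -> str:
    """Hypothetical read path: fast hard-decision decoding first,
    slower soft-decision decoding only as a fallback.

    Simplification: treats the hard-decision limit as a fixed threshold.
    """
    if bit_flips <= LDPC_HARD_LIMIT:
        return "LDPC hard decision"
    # Soft decision needs extra reads to gather reliability information,
    # which is why it adds I/O latency.
    return "LDPC soft decision (fallback, higher latency)"


print(f"BCH:       {correctable_rber(BCH_LIMIT):.3%}")        # 0.305%
print(f"LDPC hard: {correctable_rber(LDPC_HARD_LIMIT):.3%}")  # 1.678%
print(choose_decoder(300))
print(choose_decoder(800))
```

The jump from 100 to 550 correctable bits is a 5.5-fold increase in tolerable errors, which is what makes TLC and QLC media usable.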

At the software level, the SSD runs an FTL. The FTL is designed much like a log-structured file system: it writes data by appending it to a log and records where each piece of data lives in an LBA-to-PBA (logical-to-physical block address) mapping table. One of the biggest issues with any log-structured system is garbage collection (GC). Inside an SSD, GC consumes part of the NAND flash's I/O capacity, so the high raw performance of NAND flash is not fully available to the host, and GC also causes serious I/O QoS issues in today's standard NVMe SSDs. SSDs use the FTL to work around the fact that NAND flash cannot be overwritten in place, wear leveling algorithms to address uneven wear, data retention algorithms to handle long-term charge leakage, and data migration to deal with read disturb. The FTL is the core technology that makes large-scale use of NAND flash possible and is a key component of every SSD.
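The log-structured idea can be illustrated with a toy page-level FTL. This is a minimal sketch under stated assumptions, not how any particular SSD firmware works: `SimpleFTL` and its methods are hypothetical names, every write is one page, GC uses a simple greedy policy (erase the block with the fewest valid pages), and the logical capacity must stay below the physical capacity minus two blocks so GC always finds garbage. It shows out-of-place writes, the LBA-to-PBA table, and the extra page copies GC adds.

```python
class SimpleFTL:
    """Toy page-mapping FTL: out-of-place writes, an LBA-to-PBA table, greedy GC.

    A physical page address (PPA) is block * pages_per_block + page_in_block.
    """

    def __init__(self, num_blocks: int, pages_per_block: int, logical_pages: int) -> None:
        # Over-provisioning: keep enough spare space that GC always finds garbage.
        if num_blocks < 3 or logical_pages >= (num_blocks - 2) * pages_per_block:
            raise ValueError("logical capacity must be below (num_blocks - 2) blocks")
        self.num_blocks = num_blocks
        self.ppb = pages_per_block
        self.logical_pages = logical_pages
        self.l2p: dict = {}                     # LBA -> PPA: the mapping table
        self.p2l: dict = {}                     # PPA -> LBA, valid pages only
        self.free_blocks = list(range(1, num_blocks))
        self.active = 0                         # block the log is appended to
        self.next_page = 0                      # next unwritten page in the active block
        self.erase_counts = [0] * num_blocks    # per-block wear
        self.gc_copies = 0                      # pages rewritten by GC (write amplification)

    def _append(self, lba: int) -> int:
        """Write one page at the log head; pages are never overwritten in place."""
        if self.next_page == self.ppb:          # active block full: open an erased block
            self.active = self.free_blocks.pop(0)
            self.next_page = 0
        ppa = self.active * self.ppb + self.next_page
        self.next_page += 1
        old = self.l2p.get(lba)
        if old is not None:
            del self.p2l[old]                   # the old copy becomes garbage
        self.l2p[lba] = ppa
        self.p2l[ppa] = lba
        return ppa

    def write(self, lba: int) -> int:
        if not 0 <= lba < self.logical_pages:
            raise IndexError("LBA out of range")
        # Reclaim space before the last spare block would be consumed.
        if self.next_page == self.ppb and len(self.free_blocks) <= 1:
            self._collect_garbage()
        return self._append(lba)

    def read(self, lba: int) -> int:
        return self.l2p[lba]                    # KeyError if the LBA was never written

    def _valid_pages(self, block: int) -> list:
        first = block * self.ppb
        return [ppa for ppa in range(first, first + self.ppb) if ppa in self.p2l]

    def _collect_garbage(self) -> None:
        """Greedy GC: erase the full block with the fewest valid pages."""
        candidates = [b for b in range(self.num_blocks)
                      if b != self.active and b not in self.free_blocks]
        victim = min(candidates, key=lambda b: len(self._valid_pages(b)))
        for ppa in self._valid_pages(victim):
            self._append(self.p2l[ppa])         # migrate still-valid data
            self.gc_copies += 1
        self.erase_counts[victim] += 1          # a block is erased as a whole
        self.free_blocks.append(victim)


ftl = SimpleFTL(num_blocks=4, pages_per_block=4, logical_pages=6)
for lba in range(6):
    ftl.write(lba)                              # cold data written once
for i in range(200):
    ftl.write(i % 3)                            # hot data overwritten repeatedly
print("GC page copies:", ftl.gc_copies, "erase counts:", ftl.erase_counts)
```

Here `gc_copies` counts pages GC rewrote on top of the host's writes, which is the write amplification that eats into NAND bandwidth, and `erase_counts` is the per-block wear that a wear leveling algorithm would try to even out.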

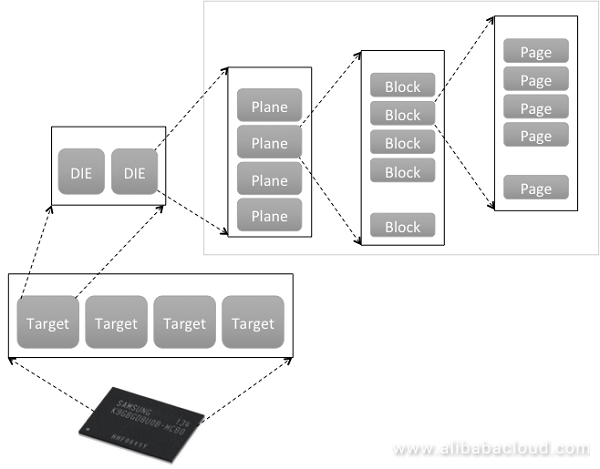

NAND flash contains many units that can operate in parallel. As shown in the figure above, a NAND flash chip consists of multiple targets, each containing multiple dies. Each die is an independent storage unit consisting of multiple planes. The planes in a die share the same operation bus and can be combined for multi-plane concurrent operations. Each plane consists of many blocks. A block is the unit of erasure, and the block size determines the GC granularity of the SSD software. Each block consists of multiple pages. A page is the smallest unit of writing (programming) and is usually 16 KB. The SSD firmware must make full use of these parallel units to build a high-performance drive.
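A small geometry model makes the hierarchy and its parallelism concrete. All counts except the 16 KB page size are hypothetical, as is the `NandGeometry` class. The `stripe` method shows one simple way firmware could spread consecutive pages round-robin over planes, dies, and targets so a sequential write keeps every parallel unit busy; real firmware layouts vary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NandGeometry:
    """Hypothetical NAND array geometry; only the 16 KB page size comes from the text."""
    targets: int = 2
    dies_per_target: int = 2
    planes_per_die: int = 2
    blocks_per_plane: int = 1024
    pages_per_block: int = 256
    page_bytes: int = 16 * 1024

    @property
    def parallel_units(self) -> int:
        """Number of planes, i.e. units that can be programmed concurrently."""
        return self.targets * self.dies_per_target * self.planes_per_die

    @property
    def capacity_bytes(self) -> int:
        return (self.parallel_units * self.blocks_per_plane
                * self.pages_per_block * self.page_bytes)

    def stripe(self, logical_page: int) -> tuple:
        """Map consecutive pages round-robin: planes first, then dies, then targets.

        Returns (target, die, plane, block, page).
        """
        row, unit = divmod(logical_page, self.parallel_units)
        plane = unit % self.planes_per_die
        die = (unit // self.planes_per_die) % self.dies_per_target
        target = unit // (self.planes_per_die * self.dies_per_target)
        block, page = divmod(row, self.pages_per_block)
        if block >= self.blocks_per_plane:
            raise IndexError("logical page beyond device capacity")
        return target, die, plane, block, page


geo = NandGeometry()
print(geo.parallel_units, geo.capacity_bytes // 1024**3, "GiB")  # 8 32 GiB
for lp in range(5):
    print(lp, geo.stripe(lp))
```

With this ordering, consecutive pages fill the planes of one die at the same block and page offset before moving to the next die, which is the shape multi-plane operations commonly expect.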

Physically, a typical NVMe SSD is simple: it consists of a large number of NAND flash chips managed by an SSD controller system-on-chip (SoC). The FTL software runs inside the SoC, which communicates with the host over PCIe using multiple queues. For better performance, enterprise SSDs need on-board dynamic random-access memory (DRAM), which caches data to improve write performance and holds the FTL mapping tables. Enterprise SSDs usually use flat mapping for performance, which requires DRAM equal to about 0.1% of the drive capacity. DRAM capacity therefore limits how large NVMe SSDs can grow. A practical way around this limit is to increase the sector size. A standard NVMe SSD uses 4 KB sectors; to further expand capacity, some vendors have adopted 16 KB sectors. Wider adoption of 16 KB sectors will accelerate the spread of large-capacity NVMe SSDs.
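The 0.1% figure follows from simple arithmetic if each flat-mapping entry is a 4-byte physical address (an assumption; entry sizes vary by design): 4 bytes per 4 KB sector is about 0.1%. The sketch uses a hypothetical 16 TiB drive and an illustrative function name to show how moving to 16 KB sectors cuts the table to a quarter.

```python
# DRAM needed by a flat (page-level) FTL mapping table.
# ASSUMPTION: each entry is a 4-byte physical address; real designs may differ.
ENTRY_BYTES = 4
GiB = 1024 ** 3
TiB = 1024 ** 4


def mapping_table_bytes(capacity_bytes: int, sector_bytes: int,
                        entry_bytes: int = ENTRY_BYTES) -> int:
    """One entry per mapped sector: (capacity / sector size) * entry size."""
    return capacity_bytes // sector_bytes * entry_bytes


DRIVE = 16 * TiB  # hypothetical drive capacity
for sector in (4 * 1024, 16 * 1024):
    table = mapping_table_bytes(DRIVE, sector)
    print(f"{sector // 1024} KB sectors: {table / GiB:.0f} GiB DRAM "
          f"({table / DRIVE:.3%} of capacity)")
# 4 KB sectors: 16 GiB DRAM (0.098% of capacity)
# 16 KB sectors: 4 GiB DRAM (0.024% of capacity)
```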

Continue reading Part 2 to learn more about NVMe SSD Performance Factors and Analysis.
