By Chuheng

Although service mesh is no longer a new concept, it is still a popular area of exploration. This article describes the core concepts of service mesh, the reasons why we need it, and its main implementations. I hope this article can help you better understand the underlying mechanism and trends of service mesh.

With the advent of the cloud-native era, the microservices architecture and the container deployment model have become increasingly popular, gradually evolving from buzzwords into the technological benchmarks for modern IT enterprises. The giant monolithic application that was once taken for granted is carefully split by architects into small but independent microservices, packaged by engineers into Docker images with their own dependencies, and finally deployed and run in the well-known Kubernetes system through the mysterious DevOps pipeline.

This all sounds easy enough. But there is no such thing as a free lunch. Every coin has two sides, and microservices are no exception.

Does this mean microservices only look good on the outside, while causing headaches for developers and operators? This is obviously not the case. Those "lazy" programmers have a lot of tricks to overcome these difficulties. For example, the DevOps and containerization advocated by cloud native are an almost perfect solution to the first problem. Multiple applications can be integrated, built, and deployed quickly through the automated CI/CD pipeline. In addition, resource scheduling and O&M are facilitated through Docker images and Kubernetes orchestration. As for the second problem, let's look at how service mesh ensures communication between microservices.

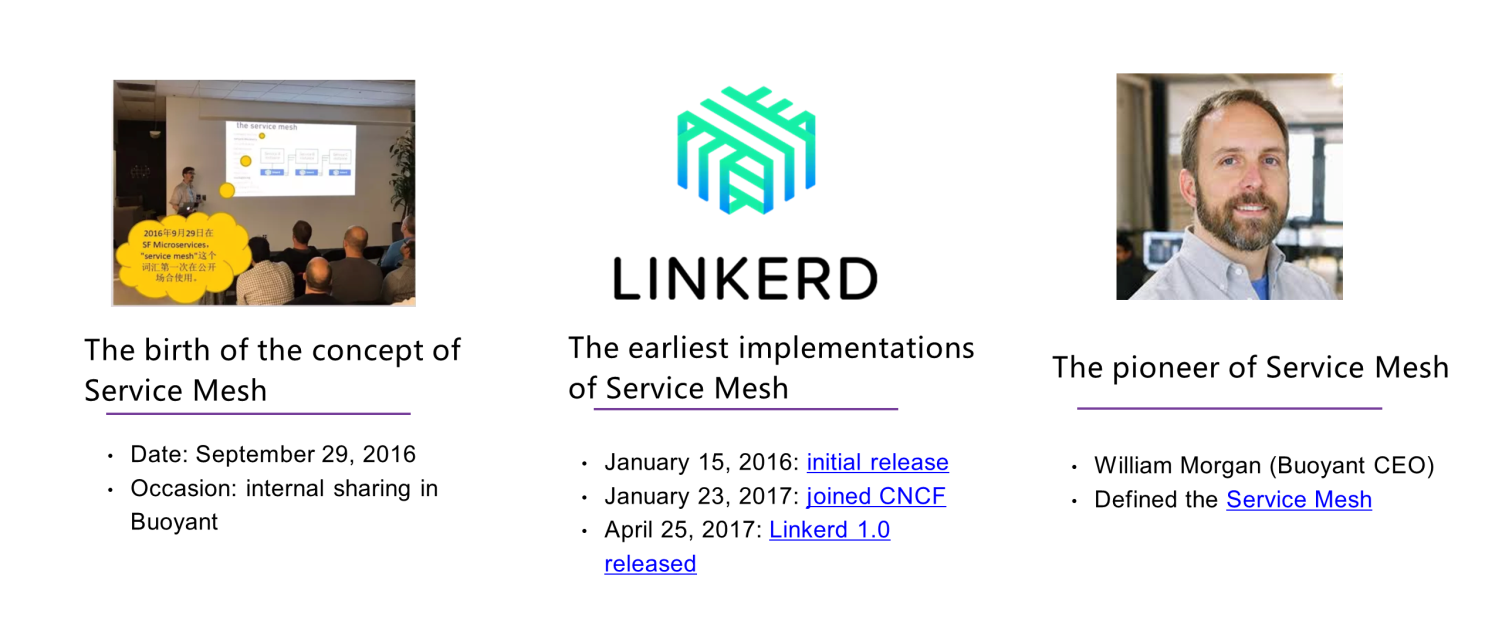

From concept to implementation? No, from implementation to concept.

On September 29, 2016, we were about to take a holiday. At an internal sharing session on microservices at Buoyant, "Service Mesh", the buzzword that would dominate the cloud-native field over the next few years, was coined.

Naming is really an art. Microservices -> Service Mesh is a link between past and future and allows development to take its course. Just from the name, you can understand what it does: it connects the various service nodes of microservices through a mesh. In this way, microservices that were split into small parts are closely connected by a service mesh. Though separated into different processes, the microservices are connected as if they were still inside a monolithic application, making communication easier.

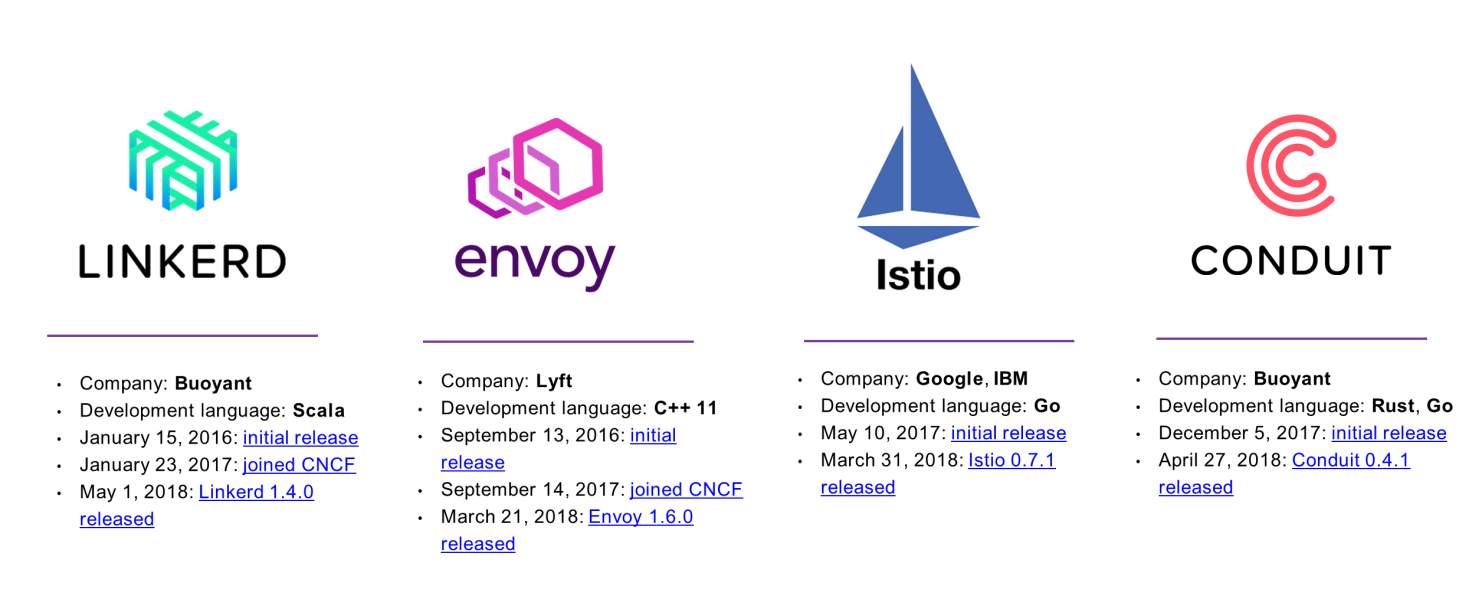

Unlike most concepts, this one had substance. On January 15, 2016, the first service mesh implementation, Linkerd[1], was initially released. On January 23, 2017, Linkerd joined the Cloud Native Computing Foundation (CNCF), and Linkerd 1.0 was released on April 25 of the same year. For Buoyant, this may have been a small step, but it was a big step towards maturity in the cloud-native field. Today, the concept of service mesh has taken root with a variety of production-level implementations and large-scale practices. But we must remember the hero behind all of this, William Morgan, the CEO of Buoyant and the pioneer of the service mesh, and his definition of and ideas about service mesh. His thoughts are presented in the article: What is a service mesh? And why do I need one?[2]

Service mesh can be defined in one sentence as follows:

A service mesh is a dedicated infrastructure layer for handling service-to-service communication. It is responsible for the reliable delivery of requests through the complex topology of services that comprise a modern and cloud native application. In practice, the service mesh is typically implemented as an array of lightweight network proxies that are deployed alongside application code, without the application needing to be aware.

This is how the CEO of Buoyant defined the service mesh. Let's look at some key phrases.

The Chinese have a saying: "Building the road is the first step to become rich". However, in today's world, the roads we build must be for cars, not the horses of the past.

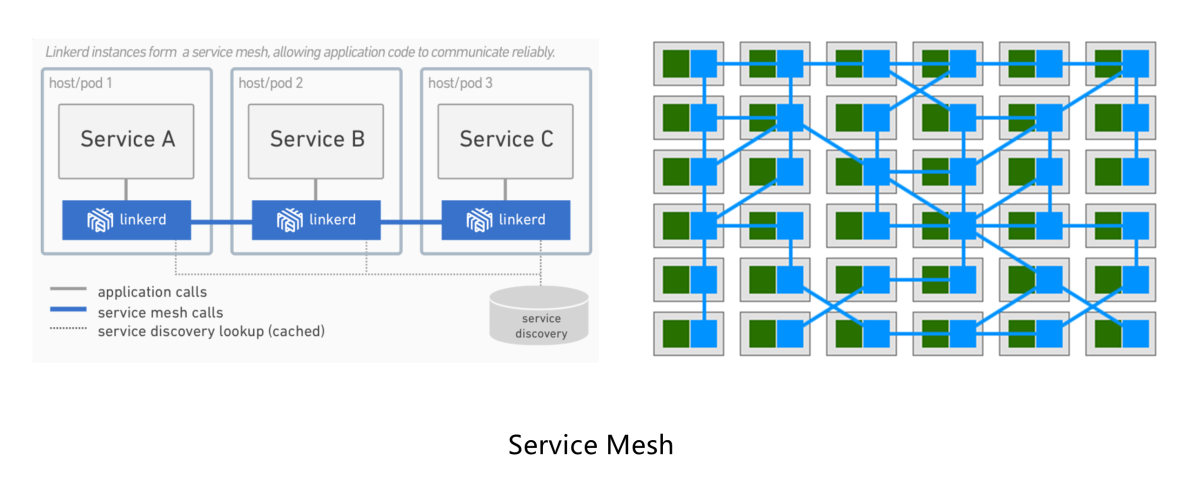

The figure on the left shows the deployment of Linkerd. On the host or pod where each microservice is located, a Linkerd proxy is deployed for RPCs between microservice application instances. The application is not aware of any of this. It initiates its own RPCs as usual and does not need to know the address of the peer server because the service discovery is handled by the proxy node.
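The proxy-side lookup described above can be sketched in a few lines of Python. This is a minimal illustration, not Linkerd's implementation: the `SidecarProxy` class, the in-memory registry, and the addresses are all hypothetical, and a real proxy would forward the request over the network instead of returning a string.

```python
class SidecarProxy:
    """Hypothetical local proxy that hides service discovery from the application."""

    def __init__(self, registry):
        # registry maps a logical service name to a list of instance addresses
        self._registry = registry
        self._next_index = {}

    def resolve(self, service):
        """Pick the next instance of a service in round-robin order."""
        instances = self._registry.get(service, [])
        if not instances:
            raise LookupError(f"no known instance for service {service!r}")
        index = self._next_index.get(service, 0) % len(instances)
        self._next_index[service] = index + 1
        return instances[index]

    def call(self, service, request):
        """The application only names the service; the proxy chooses the peer."""
        address = self.resolve(service)
        # Stand-in for the real network hop to the chosen instance.
        return f"forwarded {request} to {service} at {address}"
```

The application would call something like `proxy.call("orders", "GET /orders/42")` and never see an IP address, which is the point of the design: instance lists can change without touching application code.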

On the right is a higher-dimension abstract figure to help us better understand the logic of a service mesh. Imagine that this is a large-scale microservices cluster at the production level, where hundreds of service instances and corresponding proxy nodes of the service mesh are deployed. Service-to-service communication relies on these dense proxy nodes, which together form the pattern of a modern traffic grid.

The chaos after the Big Bang.

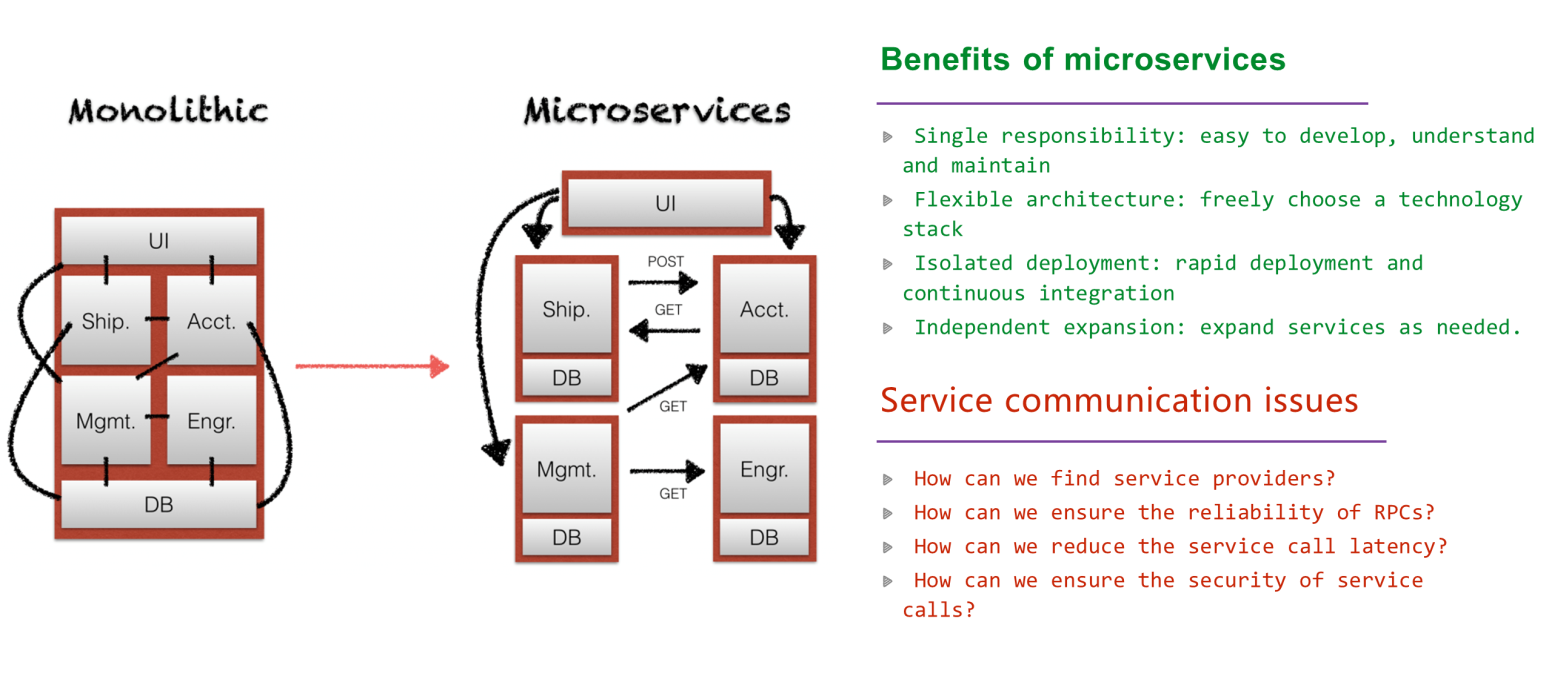

Most of us grew up in the era of the monolithic application. Monolithic means that all components are packed into the same application. Therefore, these components are naturally and closely linked. They are developed on the same technology stack, access shared databases, and are deployed, operated, and scaled together. Moreover, the communication among these components tends to be frequent and tightly coupled, taking the form of function calls. There was nothing wrong with this architecture. After all, the software systems of that time were relatively simple. A monolithic application with 20,000 lines of code could easily handle all business scenarios.

Those long divided, must unite; those long united, must divide. Thus it has ever been. The inherent limitations of monolithic applications begin to be exposed with the increasing complexity of modern software systems and more and more collaborators. Just like the singularity before the Big Bang, monolithic applications began to expand at an accelerated rate and exploded with a "bang" when they reached the critical point a few years ago. In this way, the advent of the microservices has made software development "small but beautiful" again.

However, microservices are not a solution to every problem. Although the Big Bang ended the hegemony of monolithic applications, the era that followed was a chaotic period in which various architectures existed together in competition. Developers from the era of monolithic applications were forced to adapt to the changes brought about by microservices. The biggest change is in service-to-service communication.

Microservice communication must be implemented through RPCs. Calls over HTTP and REST are essentially RPCs as well. When one application needs to consume the services of another application, it cannot obtain service instances through a simple in-process mechanism (such as Spring dependency injection) as in monolithic applications. It does not even know whether such a service provider exists.

RPCs must rely on the IP network. As we all know, the network (compared with computing and storage) is the most unreliable thing in the world. Despite the transmission control protocol (TCP), frequent packet loss, exchange failure, and even cable damage often occur. Even if the network connection is strong, what if the other machine goes down or the process is overloaded?
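One common way to cope with such transient failures is to retry the call with a growing delay between attempts. The sketch below is an assumption about how a caller (or a proxy acting on its behalf) might do this; `call_with_retries` and its parameters are hypothetical names, not part of any library mentioned in this article.

```python
import time


def call_with_retries(send, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call send() and retry on ConnectionError with exponential backoff.

    send       -- zero-argument function that performs one RPC attempt
    attempts   -- maximum number of attempts (must be at least 1)
    base_delay -- delay in seconds before the first retry; doubles each time
    sleep      -- injectable so the waiting can be replaced in tests
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return send()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last failure
            sleep(base_delay * 2 ** attempt)
```

Retrying only helps with failures that are temporary, and it is only safe for requests that can be repeated without side effects, so real systems usually combine it with timeouts and a cap on total retries.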

Network communication suffers from latency in addition to being unreliable. Microservice applications in the same system are usually deployed together, resulting in low latency from calls in the same data center. However, for complex service links, it is very common for one service access to involve dozens of RPCs, which results in severe latency.

In addition to unreliability and latency, network communication is not secure. In the Internet age, you never know who you are actually communicating with. Similarly, if you simply adopt a bare communication protocol for service-to-service communication, you will never know whether the peer is authentic or if communication is monitored by a man-in-the-middle.

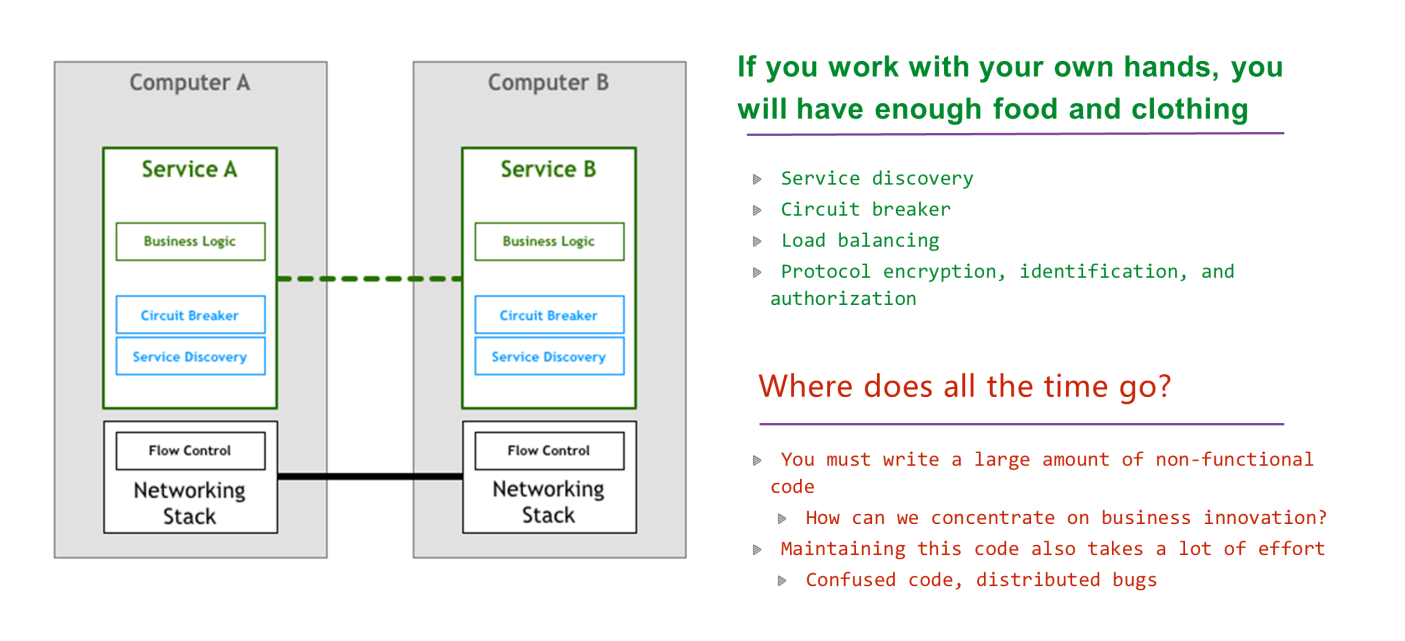

Chairman Mao once said, "If you work with your own hands, you will have enough food and clothing."

To introduce the preceding microservice architecture, the earliest engineers each had to reinvent the wheel themselves.

Programmers are used to writing code to solve problems. This is their job. But, where does all their time go?

Sharing and reuse is key.

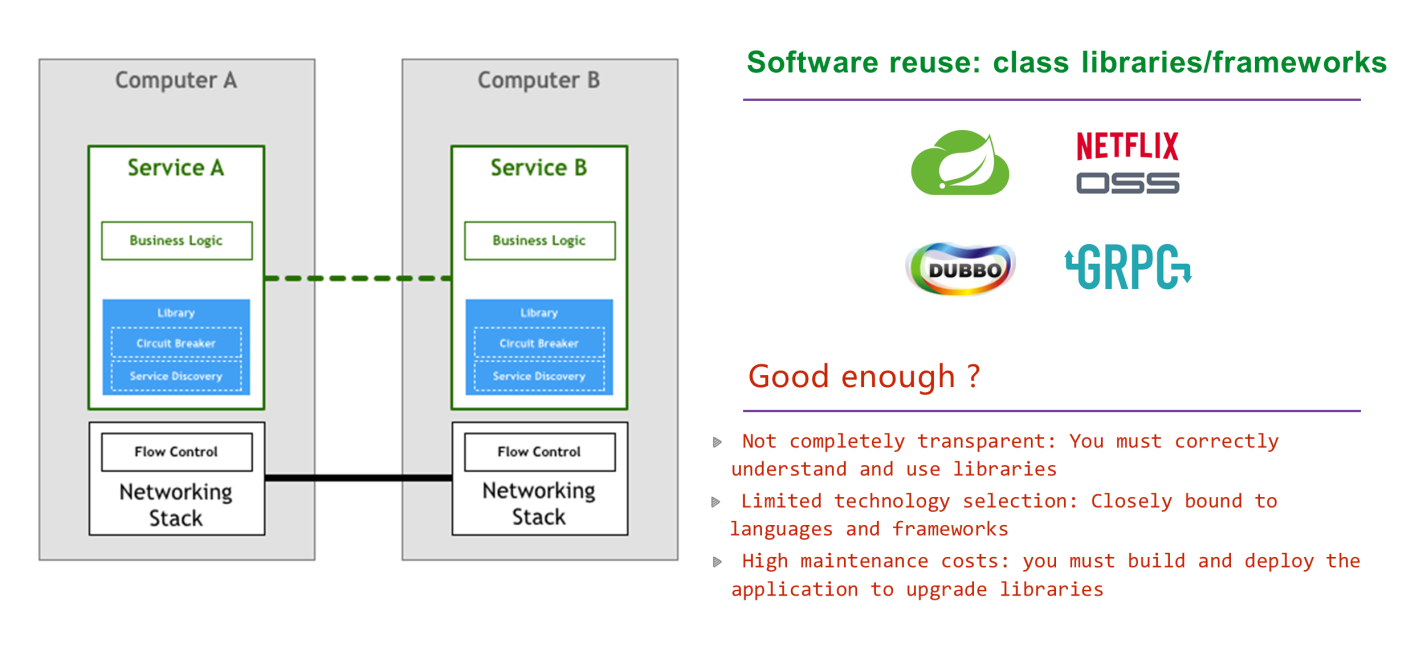

The more conscientious engineers cannot sit still. They think you are violating the principle of sharing and reuse. How can you face the pioneers of GNU? As a result, a variety of high-quality, standardized, and universal products, including Apache Dubbo, Spring Cloud, Netflix OSS, and gRPC, were developed.

These reusable class libraries and frameworks have greatly improved quality and efficiency, but are they good enough? Not quite. These have the following problems:

Service Mesh is just a porter.

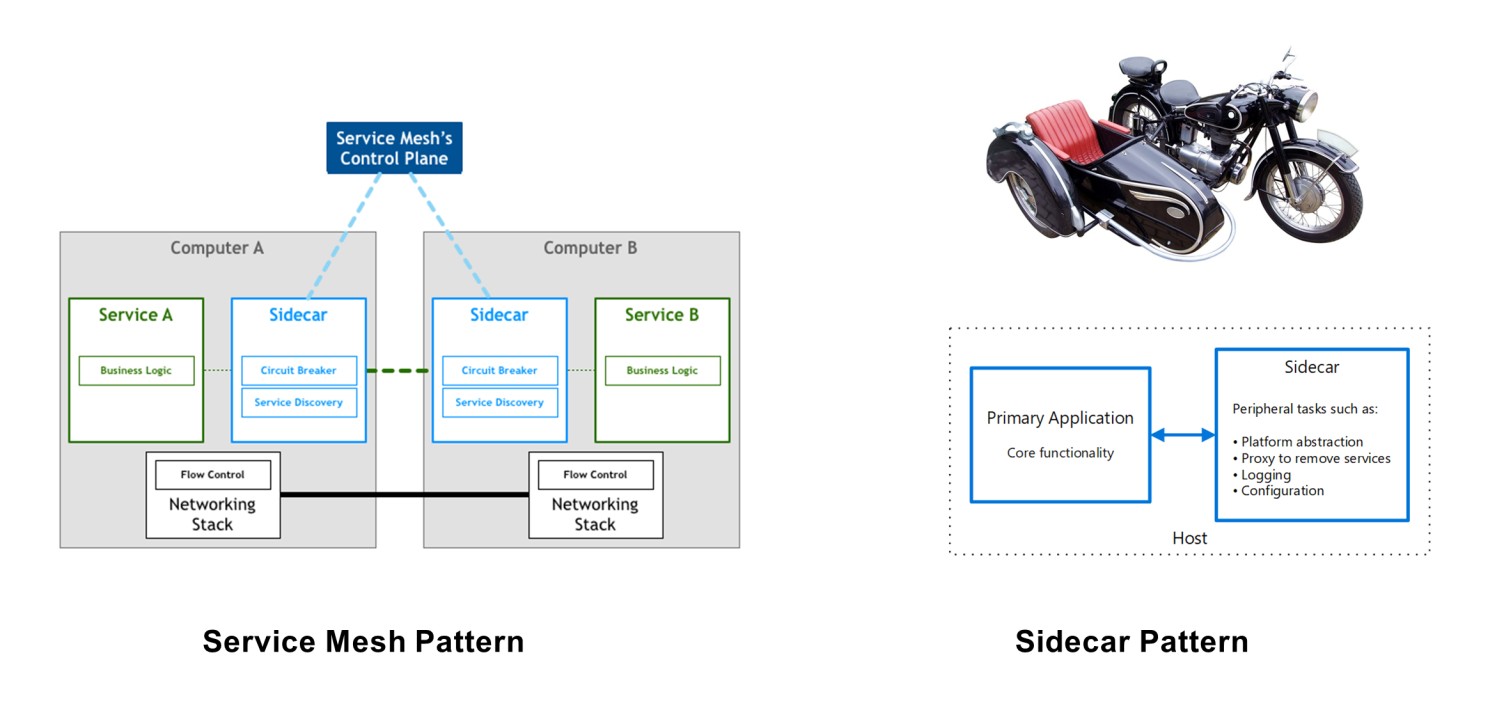

The service mesh solves all of the preceding problems. It sounds amazing. How is it done? In short, the service mesh strips the functions of class libraries and frameworks out of the application by introducing the sidecar pattern[3] and sinks these functions into the infrastructure layer. This follows the ideas of abstraction and layering found in operating systems (applications do not need to select a specific network protocol stack) and the "as a service" idea of modern cloud computing platforms, where services are managed from the bottom up (IaaS -> CaaS -> PaaS -> SaaS).

The diagrams of service mesh evolution used above were taken from Service Mesh Pattern[4], which you can refer to for more information.

Note: The following contents are collected for reference only. For further study, see the latest authoritative materials.

Mainstream implementations:

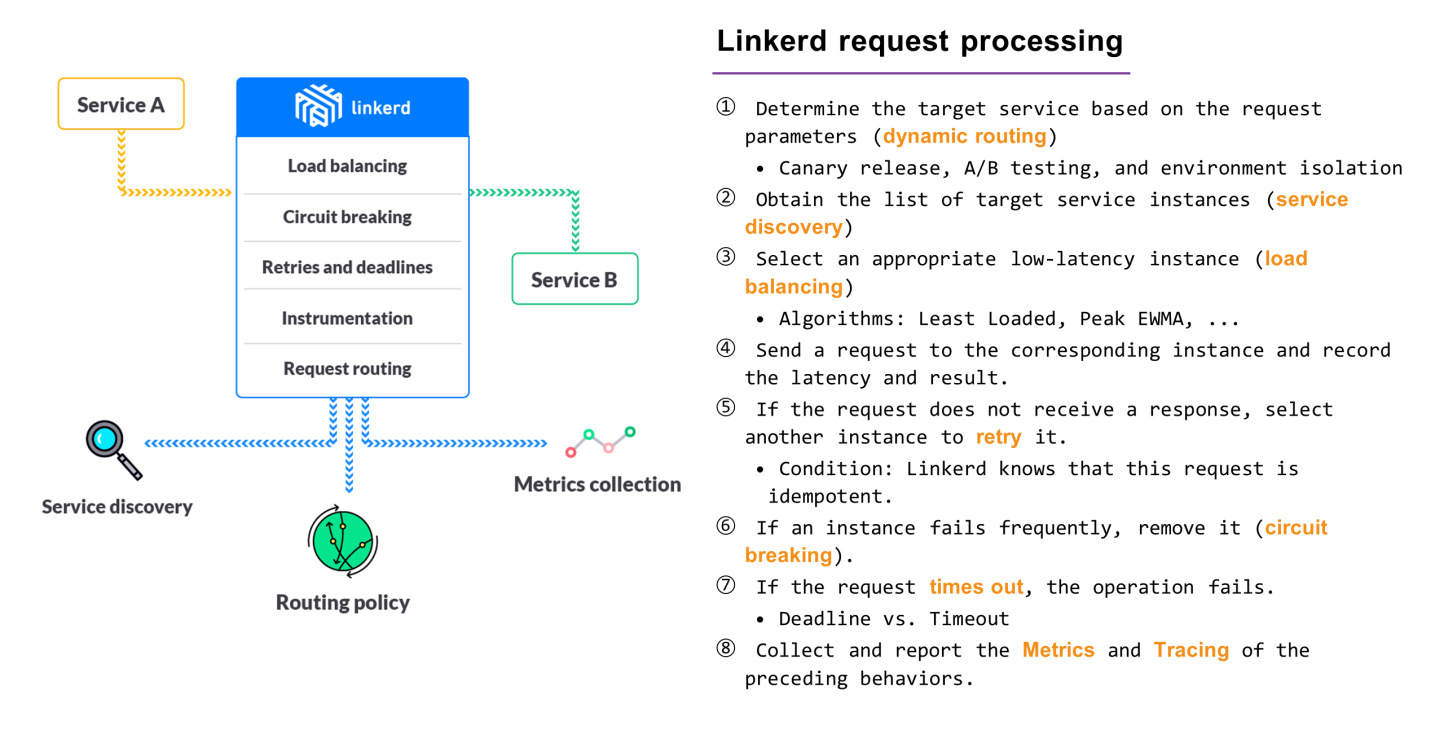

The core component of Linkerd is a service proxy. Therefore, as long as we understand its request processing flow, we can master its core logic.

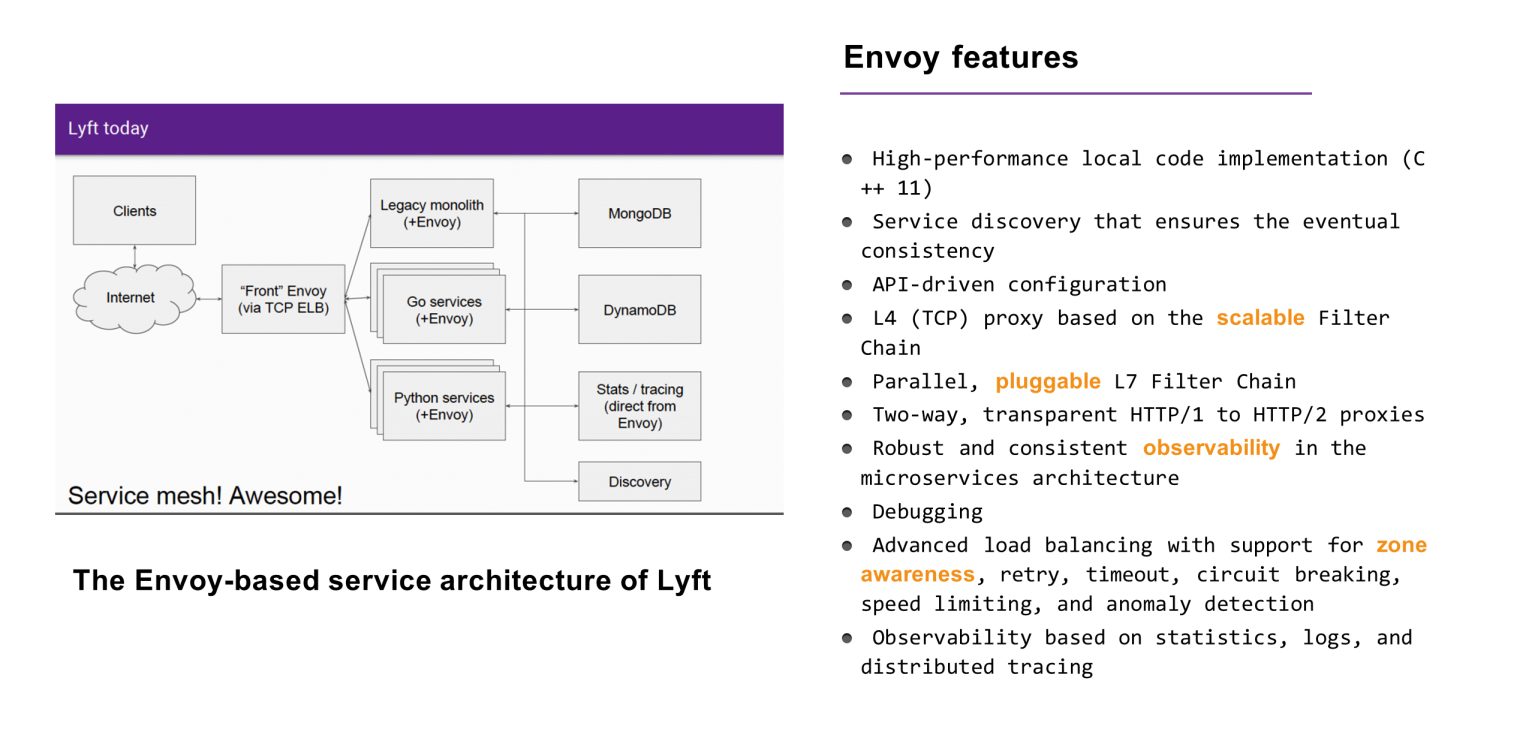

Envoy is a type of high-performance service mesh software with the following features:

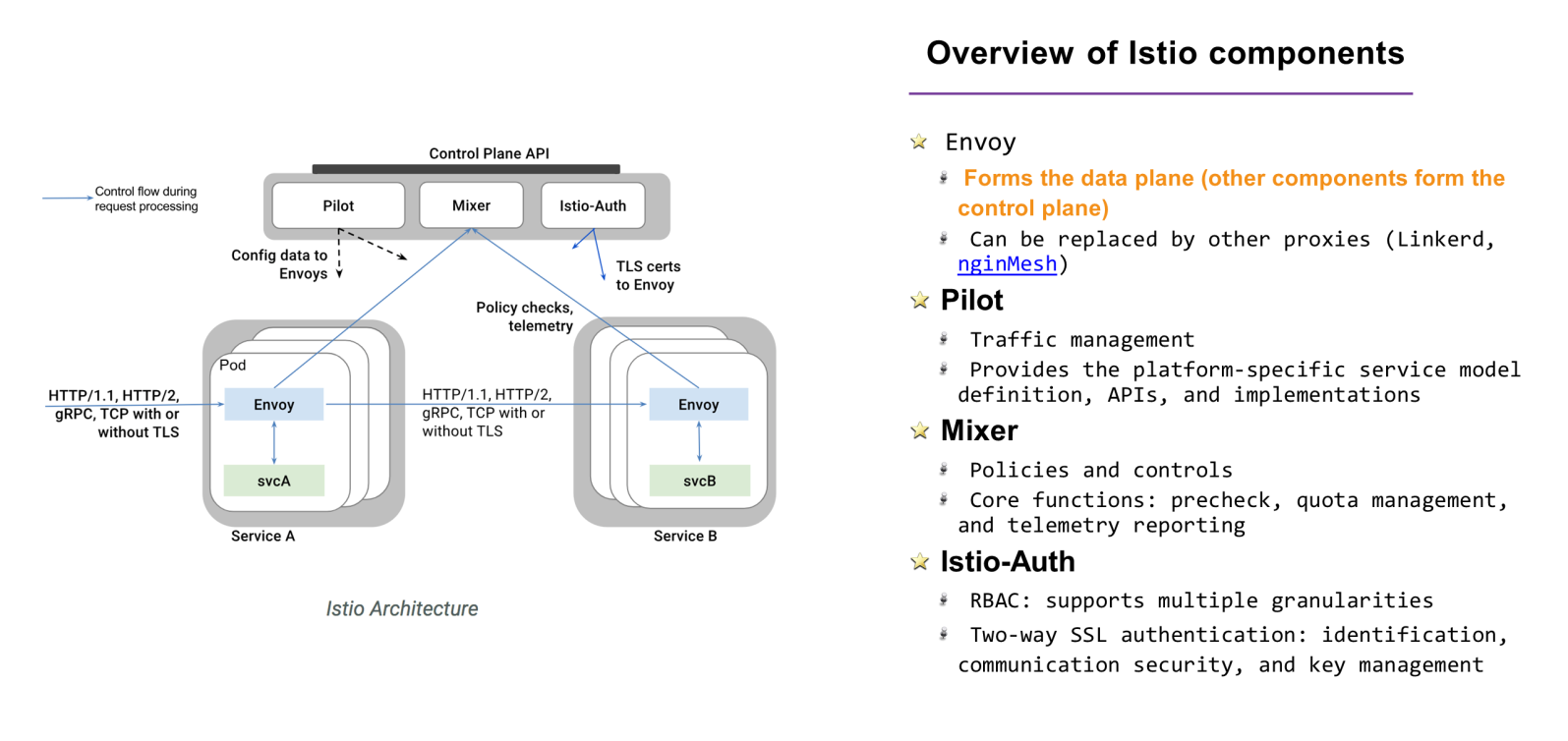

Istio is a complete service mesh suite that separates the control plane and data plane, and consists of the following components.

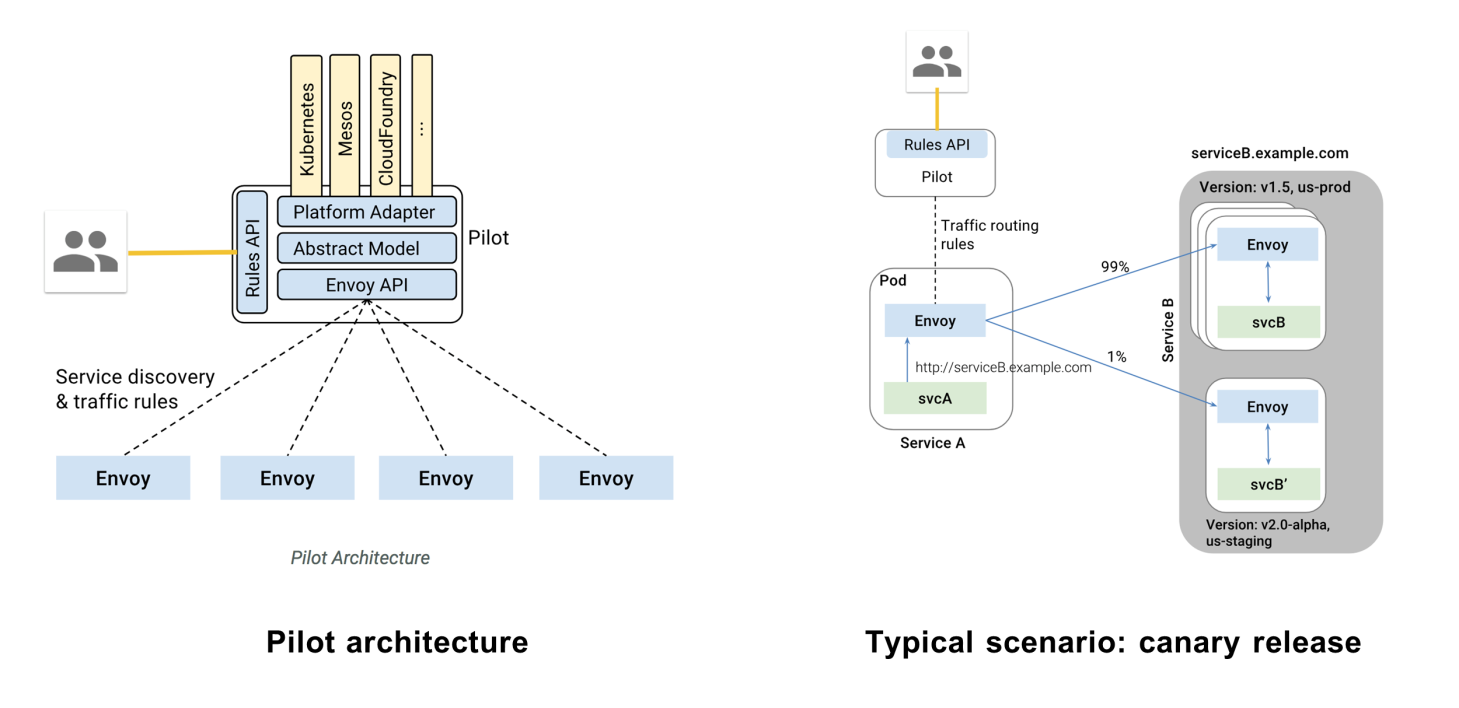

Pilot is the navigator in the Istio service mesh. It manages the traffic rules and service discovery on the data plane. A typical application scenario is canary release or blue-green deployment. Based on the rule API provided by Pilot, developers issue traffic routing rules to the Envoy proxy on the data plane, accurately allocating multi-version traffic (such as by allocating 1% of traffic to the new service).
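The idea of allocating a small share of traffic to a new version can be illustrated with a weighted random choice. This is only a sketch of the concept, not Pilot's rule API: the `pick_version` function, the version names, and the weights are hypothetical.

```python
import random


def pick_version(weights, rng=random):
    """Choose a service version in proportion to its traffic weight.

    weights -- dict mapping version name to a non-negative weight
    rng     -- random module or random.Random instance (injectable for tests)
    """
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical canary rule: 99% of requests stay on v1, 1% go to v2.
CANARY_RULE = {"v1": 99, "v2": 1}
```

Calling `pick_version(CANARY_RULE)` once per request sends roughly one request in a hundred to `v2`. Raising the weight of `v2` step by step is what turns a canary into a full rollout.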

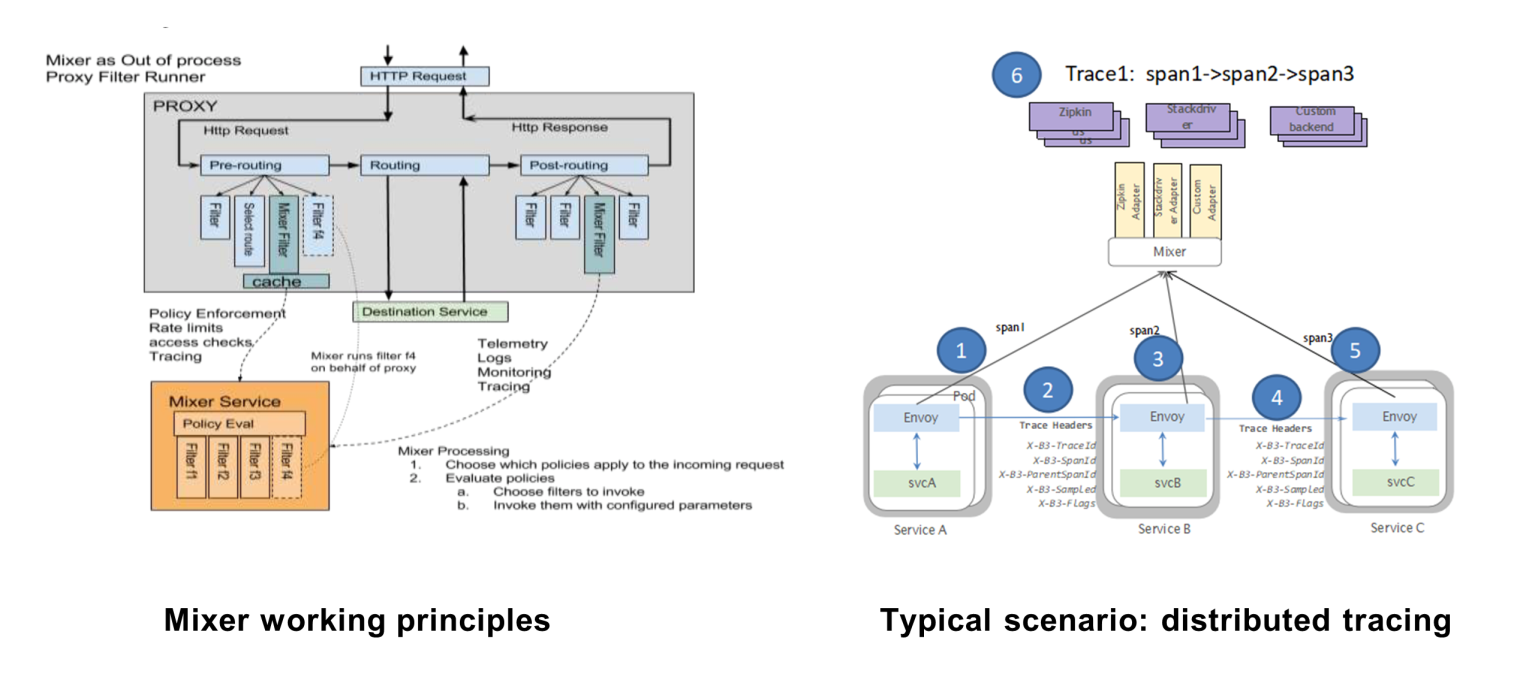

Mixer is the tuner in the Istio service mesh. It implements traffic policies (such as access control and rate limiting) and observes and analyzes the traffic based on logs, monitoring, and tracing. These features are achieved by the Envoy Filter Chain mentioned earlier. Mixer mounts its own filters to the pre-routing extension point and the post-routing extension point.
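A filter chain with pre-routing and post-routing hooks can be sketched as follows. This does not reproduce the Envoy or Mixer interfaces: the class names, the `before_routing` and `after_routing` hooks, and the status strings are all hypothetical, chosen only to show where a policy check and an observation step would sit.

```python
class RateLimitFilter:
    """Policy filter: rejects requests once a fixed budget is used up."""

    def __init__(self, max_requests):
        self.max_requests = max_requests
        self.seen = 0

    def before_routing(self, request):
        self.seen += 1
        if self.seen > self.max_requests:
            return "429 rate limit exceeded"  # short-circuit: do not route
        return None  # let the request continue

    def after_routing(self, request, response):
        pass  # nothing to do once the response is known


class AccessLogFilter:
    """Observation filter: records every request together with its outcome."""

    def __init__(self):
        self.records = []

    def before_routing(self, request):
        return None

    def after_routing(self, request, response):
        self.records.append((request, response))


def handle(request, filters, route):
    """Run pre-routing hooks, route unless rejected, then run post-routing hooks."""
    response = None
    for f in filters:
        response = f.before_routing(request)
        if response is not None:
            break
    if response is None:
        response = route(request)
    for f in filters:
        f.after_routing(request, response)
    return response
```

Because both filters plug into the same two hooks, policy and telemetry can be added or removed without changing the routing function, which is the appeal of the filter-chain design.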

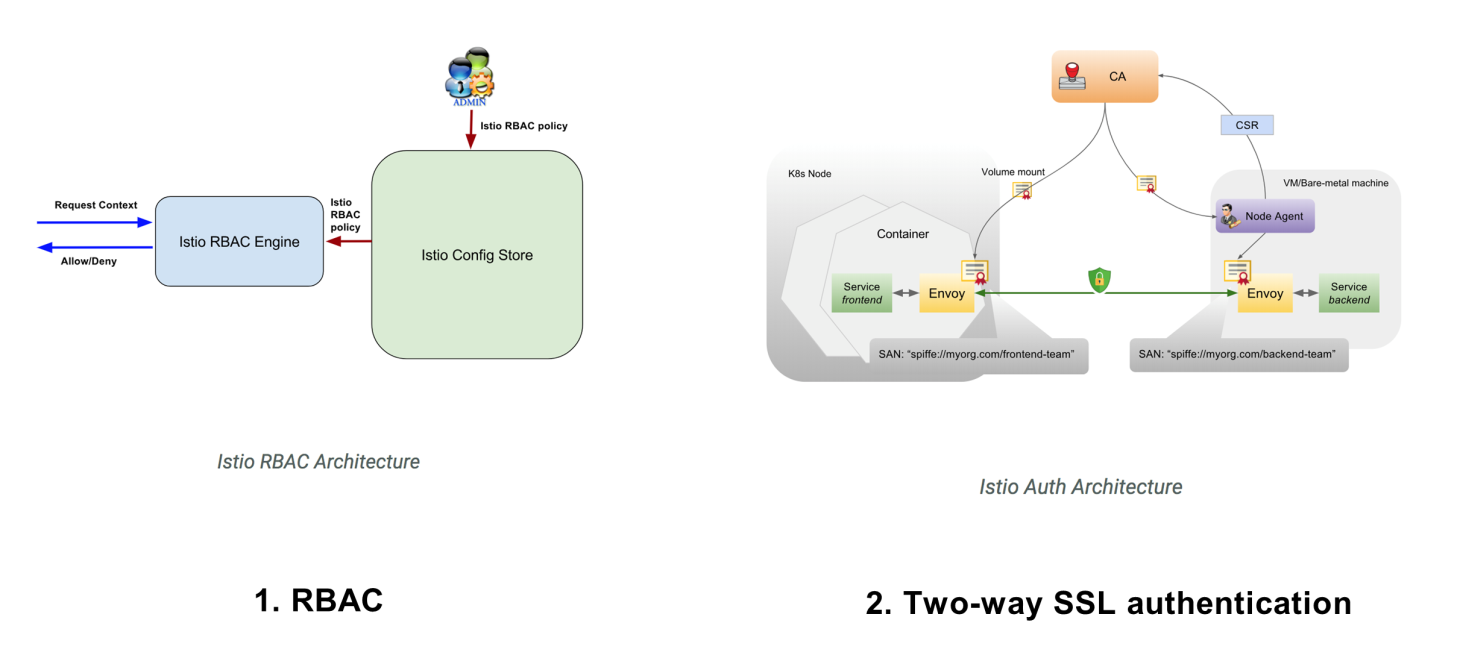

Auth is the security officer in the Istio service mesh. It authenticates and authorizes the communication between service nodes. For authentication, Auth supports mutual TLS (two-way SSL) authentication between services, which allows both sides of the communication to verify each other's identity. For authorization, Auth supports the popular role-based access control (RBAC) model, which enables convenient and fine-grained multi-level access control based on users, roles, and permissions.
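The RBAC model of users, roles, and permissions can be illustrated with two small lookup tables. This is a conceptual sketch, not Istio's policy format: the service names, roles, and the `is_allowed` function are hypothetical, and the caller's identity is assumed to have already been verified by mutual TLS.

```python
# Hypothetical tables: which roles each caller holds, and what each role may do.
CALLER_ROLES = {
    "frontend": {"order-reader"},
    "billing": {"order-reader", "order-writer"},
}
ROLE_PERMISSIONS = {
    "order-reader": {("orders", "GET")},
    "order-writer": {("orders", "POST")},
}


def is_allowed(caller, target, method):
    """Return True if any role held by the caller grants (target, method)."""
    for role in CALLER_ROLES.get(caller, set()):
        if (target, method) in ROLE_PERMISSIONS.get(role, set()):
            return True
    return False  # deny by default, including for unknown callers
```

Here `is_allowed("frontend", "orders", "POST")` returns `False` because the `frontend` caller only holds the read role, while the same call for `billing` returns `True`.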

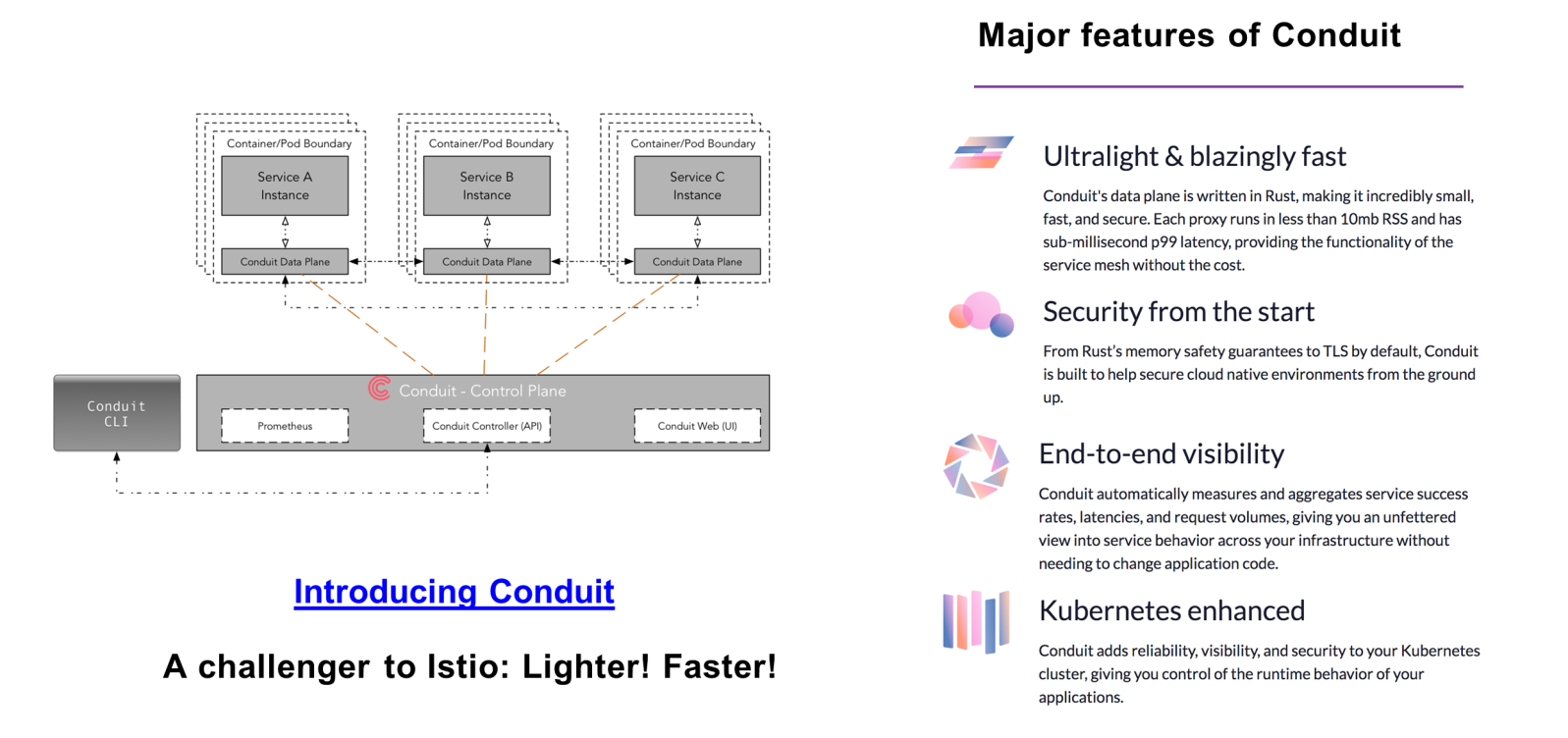

Conduit is a next-generation service mesh produced by Buoyant. A challenger to Istio, Conduit retains an overall architecture similar to Istio, which clearly distinguishes the control plane and the data plane. In addition, it has the following key features.

Starting from a consideration of microservice communication in the cloud-native era, this article describes the origin, development, and current status of service mesh in the hope of giving you a basic understanding of it.
