By Wang Wei (Yanchen), Senior Data Engineer in the Fliggy Technology Department of Alibaba

Released by Hologres

During the 2020 Double 11 Global Shopping Festival, Alibaba's cloud-native real-time data warehouse was implemented in core data scenarios for the first time. The data warehouse is built on Hologres and Realtime Compute for Apache Flink, and it set a new record for the big data platform. This article describes how Hologres helped Fliggy's real-time data big screen start up within 3 seconds and run with no faults during 2020 Double 11.

Compared with previous Double 11 Global Shopping Festivals, the biggest change in 2020 was that the activities were divided into two periods, which naturally produced two traffic peaks. As a result, big screen and marketing data had to be collected over a longer period, and there were more metrics. For the first time, the procedure data of Fliggy’s big screen was also reused for Alibaba's GMV media big screen. Ensuring the real-time accuracy and stability of Alibaba's GMV media big screen, Fliggy’s data big screen, and the Double 11 Global Shopping Festival overall was a huge challenge.

During 2020 Double 11, Fliggy’s real-time big screen started up within three seconds at midnight and ran with no faults. Alibaba Group’s media big screen delivered accurate metrics, stable service, and real-time feedback.

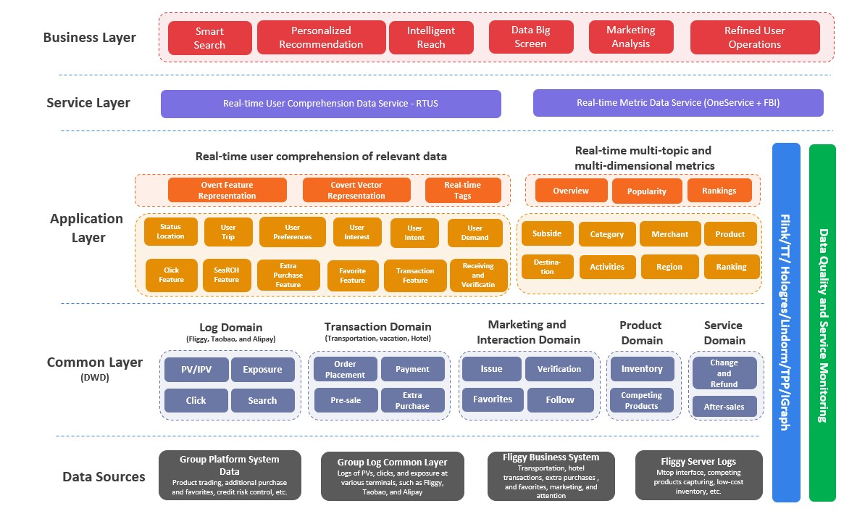

None of this could have been achieved without technical improvements across the full procedure. The Fliggy real-time data architecture is shown below:

The following sections describe the business upgrades and optimizations made across the full real-time data procedure, which together produced a fast, accurate, and stable real-time data big screen during Double 11.

The DWD layer is the first to face the peak traffic during Double 11. After nearly two years of iteration and improvement, the multi-terminal, multi-source DWD layer now fully covers the log, transaction, marketing interaction, and service domains. Job throughput and resource efficiency have also improved continuously. Multiple full-procedure stress tests and further reinforcement were carried out to handle the traffic peaks of 2020 Double 11.

Dimension tables are a core logical and physical dependency of the DWD layer, and hotspot dimension tables can become risks and bottlenecks for services during a big promotion. The Fliggy commodity table was the Apache HBase dimension table that real-time jobs depended on the most, including the DWD layer jobs for Fliggy on Taobao, whose traffic surges during promotions. In 2019, the daily QPS of this dimension table was reduced from hundreds of thousands to tens of thousands by optimizing the deep logic of Taobao PV traffic extraction. However, as the click DWD layer was built and other businesses added dependencies, the daily QPS quickly climbed back to more than 50,000, and it soared to more than 100,000 during stress testing for the big promotion. The HBase cluster that hosted the dimension table was an outdated public cluster, which meant high stability risks during the promotion. Therefore, in 2020, the Fliggy commodity table and other heavy-traffic dimension tables were migrated to a Lindorm cluster with better performance. The original HBase cluster was retained for the dimension tables of other non-core application layers. In this way, the pressure that traffic peaks brought to the dimension tables was dispersed.

The resource consumption of real-time jobs also follows the 80/20 principle: most of the computing resources are consumed by a small number of jobs. Fliggy's exposure jobs require at least 1,000 CUs during Double 11, the PV DWD layer jobs require 600 CUs, and the nine jobs at the traffic DWD layer require at least half of the cluster resources. Large jobs are therefore distributed across different resource queues, so that several large jobs do not share one queue and jointly exceed its allocated resources during heavy traffic. Similarly, the tasks at the transaction DWD layer of all categories are scattered across the resource queues. This prevents a sudden extreme abnormality in a single cluster from dropping metric data to zero.
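One way to picture the queue distribution described above is a greedy placement: take the jobs from largest to smallest and always put the next one into the queue that currently carries the least load. The following is a minimal Python sketch under stated assumptions, not Fliggy's actual scheduler. The queue names and most of the job list are hypothetical; only the 1,000 CU and 600 CU figures come from the text.

```python
import heapq

def spread_jobs(jobs, queue_names):
    """Greedy placement: largest job first, always into the least-loaded queue.

    jobs: dict of job name -> required CUs (hypothetical values).
    Returns dict of queue name -> list of job names.
    """
    # Min-heap of (current load in CUs, queue name).
    heap = [(0, name) for name in queue_names]
    heapq.heapify(heap)
    placement = {name: [] for name in queue_names}
    for job, cus in sorted(jobs.items(), key=lambda kv: -kv[1]):
        load, queue = heapq.heappop(heap)
        placement[queue].append(job)
        heapq.heappush(heap, (load + cus, queue))
    return placement

# Hypothetical job list; only the 1,000 CU and 600 CU figures come from the text.
jobs = {"exposure": 1000, "pv_dwd": 600, "click_dwd": 300, "trade_dwd": 100}
placement = spread_jobs(jobs, ["queue_a", "queue_b", "queue_c"])
```

With these inputs the two largest jobs end up alone in separate queues, and the small jobs share the third queue.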

During 2020 Double 11, the real-time DWD layer successfully withstood 250 times the daily transaction traffic and three times the log traffic in Taobao. The entire traffic DWD layer withstood peak traffic of tens of millions of records per second. There was no task latency at the transaction DWD layer, and there was only minute-level latency at the traffic DWD layer that faded very quickly.

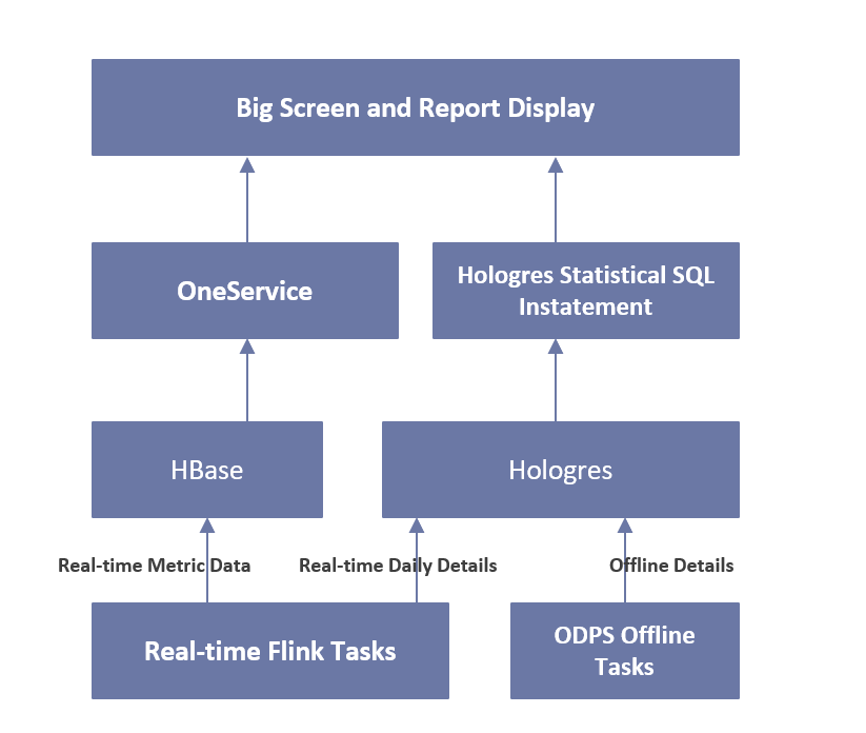

The three core stages of Double 11 are pre-sale, pre-heating, and sales, and the most important part of the sales stage is balance payment. The big change on the business side of 2020 Double 11 was that the balance payment period was extended from one day to three days, so the balance payment marketing data from the previous year could not be reused. The previous multi-dimensional balance payment metrics, such as those by market, category, day, and hour, needed to be retained, and balance payment metrics on the product and merchant sides needed to be added. Because the balance payment period was longer, data from more dimensions also had to be added temporarily to run balance payment operations more efficiently. On the last day of the balance payment, a demand for collecting the details of orders with an unpaid balance was raised. The challenges of 2020 Double 11 therefore lay in long-period, multi-dimensional, and changeable balance payment metrics. To solve these challenges, the data architecture of the big screen and marketing data was upgraded. The original pre-computing mode was replaced by a hybrid mode that combines pre-computing with instant queries based on stream-batch unification. As a result, the overall development efficiency doubled, and the architecture can adapt easily to changes in demand.

Stream-Batch Unification:

Based on Hologres and Apache Flink, the architecture uses the partition tables and the instant query capability of Hologres. The real-time detail data at the DWD layer is written into the current day's partition. The next day, the offline detail data at the DWD layer is imported directly from MaxCompute to overwrite that partition in Hologres. For scenarios with lower accuracy and stability requirements, the offline merge step can be omitted. Primary-key overwrite should also be configured for writes, so that data can be replayed if a task fails. Metrics for each dimension can then be aggregated directly in Hologres with SQL statements, and query results are returned instantly, which makes it easy to handle changes in statistical metrics.
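The write pattern described above can be illustrated with a toy in-memory model. This is a Python sketch only, not the Hologres API: the `PartitionedTable` class, its method names, and the sample orders are all hypothetical. It shows why primary-key overwrite makes a replay harmless, and how the offline import replaces the real-time partition the next day.

```python
from collections import defaultdict

class PartitionedTable:
    """Toy stand-in for a partitioned table with primary-key overwrite."""

    def __init__(self):
        # partition (stat_date) -> {primary key -> row}
        self.partitions = defaultdict(dict)

    def upsert(self, stat_date, order_id, row):
        # Writing the same primary key again replaces the row, so replaying
        # a failed real-time job does not double count.
        self.partitions[stat_date][order_id] = row

    def overwrite_partition(self, stat_date, rows):
        # Offline import: replace the whole partition with the batch result.
        self.partitions[stat_date] = {r["order_id"]: r for r in rows}

    def gmv_by_hour(self, stat_date):
        # Instant-query style aggregation, computed at read time.
        totals = defaultdict(int)
        for row in self.partitions[stat_date].values():
            totals[row["stat_hour"]] += row["gmv"]
        return dict(totals)

table = PartitionedTable()
events = [
    {"order_id": 1, "stat_hour": "00", "gmv": 100},
    {"order_id": 2, "stat_hour": "00", "gmv": 50},
    {"order_id": 3, "stat_hour": "01", "gmv": 70},
]
for e in events:
    table.upsert("20201101", e["order_id"], e)
# Replay after a simulated job failure: same keys, same result.
for e in events:
    table.upsert("20201101", e["order_id"], e)
realtime_result = table.gmv_by_hour("20201101")

# Next day: the offline batch result replaces the real-time partition.
offline_rows = events + [{"order_id": 4, "stat_hour": "01", "gmv": 30}]
table.overwrite_partition("20201101", offline_rows)
offline_result = table.gmv_by_hour("20201101")
```

Because the aggregation runs at query time, adding a new dimension only means changing the read-side grouping, not rebuilding a pre-computed result.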

Pre-Computing:

The architecture retains the previous mature computing, storage, and service architecture of Apache Flink + Apache HBase + OneService. Apache Flink aggregates metrics in real time and writes them to HBase, and OneService handles queries and procedure switching. In scenarios that require high availability and stability, primary and secondary procedures are built. Offline metric data may also need to be written back to fix possible abnormalities and errors in the real-time procedure.

The instant query service built on Hologres has a simple and efficient architecture and makes metric computing extremely simple. It also improves the development efficiency of real-time metric data.

For balance payment, some common metrics are tricky to build with Apache Flink SQL. One example is the paid sum or payment rate of the balance accumulated hour by hour from 0:00. The GROUP BY statement in Apache Flink is essentially a group aggregation. It can aggregate the data of each hour, or the data accumulated from 0:00 to the current hour. It cannot, however, maintain a separate accumulated value for each of the ranges 0:00-2:00, 0:00-3:00, 0:00-4:00, and so on. This limitation causes a problem: if the data needs to be refreshed after an error occurs, only the last hour's data is updated, while the accumulated data of the other hours is not.
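To make the metric concrete, the sketch below computes the value accumulated from 0:00 for every hour from per-hour totals. It is a plain Python illustration with made-up numbers, not Flink or Hologres code. It also shows why refreshing only the latest hour is not enough: a correction to an early hour changes every later accumulated value.

```python
from itertools import accumulate

def cumulative_by_hour(hourly):
    """hourly: dict of hour (0-23) -> value for that hour only.

    Returns dict of hour -> total accumulated from 0:00 through that hour.
    """
    hours = sorted(hourly)
    running = accumulate(hourly[h] for h in hours)
    return dict(zip(hours, running))

hourly_gmv = {0: 120, 1: 80, 2: 50}  # hypothetical per-hour GMV
acc = cumulative_by_hour(hourly_gmv)

# Correcting one early hour changes every later accumulated value.
hourly_gmv[0] = 100
acc_fixed = cumulative_by_hour(hourly_gmv)
```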

With the Hologres instant query engine, it is only necessary to add a window function on top of the hourly aggregation, so a single SQL statement computes the metric. This improves development efficiency substantially. An example is listed below:

    select stat_date, stat_hour, cnt, gmv -- Hourly data
         , sum(cnt) over (partition by stat_date order by stat_hour asc) as acc_cnt -- Accumulated hourly data
         , sum(gmv) over (partition by stat_date order by stat_hour asc) as acc_gmv
    from (
        select stat_date, stat_hour, count(*) cnt, sum(gmv) as gmv
        from dwd_trip_xxxx_pay_ri
        where stat_date in ('20201101', '20201102')
        group by stat_date, stat_hour
    ) a;

The GMV media big screen of Alibaba Group has always been independently managed by the DT Team. The big screen for 2020 Double 11 reused the Fliggy real-time procedure data for the first time to ensure the consistency and integrity of the standard. Therefore, it posed a greater challenge in terms of screen metric computing, stability, and timeliness of procedures.

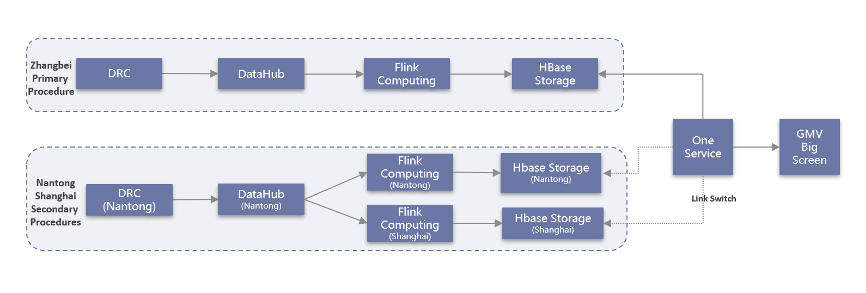

To ensure high system availability, transactions of each category are synchronized from the DRC of the source database to the transaction details DWD layer. Primary and secondary procedures are built in the Zhangbei and Nantong clusters, respectively. For the GMV statistics tasks and the HBase result storage at the application layer, a backup procedure in the Shanghai cluster is also added. The following figure shows the overall procedure architecture:

In addition, with the monitoring and alarms of exceptions in full-procedure real-time tasks, the procedure can be switched in seconds if an exception occurs, and the system SLA reaches more than 99.99%.
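The switching logic and the SLA figure can be sketched as follows. This is a hypothetical Python illustration, not the OneService implementation: the function names, the health states, and the priority order of the clusters are assumptions. The second function only does the arithmetic behind a 99.99% target, which leaves a downtime budget of about 8.64 seconds per day and is why switching has to happen in seconds.

```python
def pick_procedure(health, priority):
    """Return the first healthy procedure in priority order.

    health: dict of procedure name -> bool (True means healthy).
    priority: procedure names from most to least preferred.
    """
    for name in priority:
        if health.get(name, False):
            return name
    raise RuntimeError("no healthy procedure available")

def allowed_downtime_seconds(sla, period_seconds=24 * 3600):
    """Downtime budget implied by an availability target over a period."""
    return (1 - sla) * period_seconds

# Cluster names follow the text; the order and health states are made up.
priority = ["zhangbei", "nantong", "shanghai"]
health = {"zhangbei": False, "nantong": True, "shanghai": True}
active = pick_procedure(health, priority)
budget = allowed_downtime_seconds(0.9999)
```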

To do this, the full-procedure data processing details of tasks are optimized.

For the "source" part, the writing of binlogs to DataHub (TT) after DRC synchronization is optimized: the multiple queues of the source TT are reduced to a single queue, which reduces data latency. In the early development stage, the transaction data traffic of the various categories was not evaluated correctly, so the number of TT queues was set too high and the traffic in each queue was very small. With the default cache size and collection frequency of TT, this caused large data latency, which in turn lengthened the latency of the entire procedure. After the number of TT queues was reduced, the data latency dropped to seconds.
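The effect of the queue count on latency can be reasoned about with a simple model. The Python sketch below assumes that each queue flushes its buffer when a fixed number of records has accumulated or when a fixed interval has passed, whichever comes first. This flush rule and all the numbers are assumptions made for illustration; the text does not describe the internals of TT.

```python
def worst_case_wait(total_rate, num_queues, batch_size, flush_interval):
    """Longest time a record may sit in a queue's buffer before a flush.

    Assumed model: each queue flushes when `batch_size` records are buffered
    or `flush_interval` seconds have passed, whichever happens first.
    """
    per_queue_rate = total_rate / num_queues  # records per second per queue
    time_to_fill = batch_size / per_queue_rate
    return min(time_to_fill, flush_interval)

# Hypothetical numbers: 200 records/s overall, flush at 1,000 records or 60 s.
many_queues = worst_case_wait(200, 16, 1000, 60)  # 12.5 records/s per queue
single_queue = worst_case_wait(200, 1, 1000, 60)  # 200 records/s in one queue
```

Under this model, spreading low traffic over many queues means each buffer fills slowly and the wait is bounded only by the flush interval, while a single queue fills its buffer quickly.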

In the middle part, the processing logic of the DWD layer for the various transactions is optimized to reduce logic processing latency. The initial DWD layer of TTP transactions (such as international air tickets and train tickets) fully mirrored the offline DWD layer for multi-dimensional reuse. It joined complex and time-consuming route segment data, which caused more than three seconds of processing latency for the whole task. To balance latency against reusability, the original multi-stream join mode was replaced by a multi-level join mode with unified output. As a result, GMV processing latency was reduced to less than 3 seconds. The overall process is listed below:

For the task node, the parameter configuration is adjusted to reduce buffering and I/O processing latency. For the DWD layer and GMV statistics, parameters such as the allowLatency and cache size of miniBatch, the flush interval of the TT output, and the flushsize of the HBase output are all adjusted.

TopN has always been a common statistical scenario in real-time marketing analysis. Because TopN is by nature a hotspot statistic, it is prone to data skew and to performance and stability issues. After the Double 11 pre-sale started, the TopN jobs for the exposure traffic of venues, merchants, and commodities began to show backpressure one after another. Checkpoints failed because of timeouts, latency grew so long that failovers became more frequent, and the statistics were nearly unavailable. At first, this looked like the result of rising traffic and insufficient job throughput, but scaling out resources and increasing concurrency did not help: the backpressure remained concentrated on the rank node even though resources were sufficient. The execution algorithm of the rank node had fallen back to the RetractRank algorithm, which performs poorly. The original logic applied row_number() in descending order after a GROUP BY operation and then selected the top N values. It could not be automatically optimized into the UnaryUpdateRank algorithm, which had an accuracy risk and was deprecated in Realtime Compute for Apache Flink 3.7.3, so performance dropped dramatically. After several adjustments and tests, it was determined that the problem could not be solved through configuration tuning, and it was solved through multiple logic optimizations instead.

The venue category exposure task and the merchant and product task were logically split into two tasks. This prevents data skew on the rank nodes for products or merchants and helps avoid data output failures.

First, level-1 aggregation was performed for UV to reduce the volume of data to be sorted. Then, level-2 aggregation was performed, and the original algorithm was replaced by the UpdateFastRank algorithm. As a result, checkpoints completed in seconds, and it took only ten minutes to backtrack and aggregate a full day of exposure data.
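The idea behind the two aggregation levels can be sketched in Python. This illustrates the data reduction only and is not the UpdateFastRank algorithm or Flink code. The function name and the sample exposure records are hypothetical.

```python
import heapq
from collections import defaultdict

def top_n_by_uv(exposures, n):
    """exposures: iterable of (item_id, user_id) exposure records.

    Level 1: collapse repeated (item, user) pairs so each user counts once.
    Level 2: count distinct users per item and keep the n largest.
    """
    users_per_item = defaultdict(set)
    for item_id, user_id in exposures:
        users_per_item[item_id].add(user_id)  # level-1 dedup
    uv = {item: len(users) for item, users in users_per_item.items()}
    # Level 2 ranks one row per item instead of one row per exposure.
    return heapq.nlargest(n, uv.items(), key=lambda kv: (kv[1], kv[0]))

exposures = [
    ("hotel", "u1"), ("hotel", "u1"), ("hotel", "u2"),
    ("flight", "u1"), ("flight", "u2"), ("flight", "u3"),
    ("train", "u4"),
]
top2 = top_n_by_uv(exposures, 2)
```

The ranking step sees three rows here instead of seven, which is the reduction in sorting volume that the first aggregation level is meant to provide.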

Another strategy is a two-level TopN. First, a "Hash Group By" on the IDs of products or merchants produces the top N values within each hash group. Then, the overall TopN is computed from those results.
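A two-level TopN of this kind can be sketched in Python as follows. It is a minimal illustration, not the Flink job itself: the function name, the use of integer IDs with a modulo as the hash, and the sample scores are assumptions. Because every ID falls into exactly one bucket, any row in the overall top N must also be in the top N of its own bucket, so merging the local winners gives the same result as a single global sort.

```python
import heapq

def two_level_top_n(scores, n, num_buckets):
    """scores: dict of integer ID -> metric value (for example UV).

    Level 1: hash IDs into buckets and keep a local top n per bucket.
    Level 2: merge the local winners and take the overall top n.
    """
    buckets = [[] for _ in range(num_buckets)]
    for item_id, value in scores.items():
        buckets[item_id % num_buckets].append((value, item_id))
    local_winners = []
    for bucket in buckets:
        local_winners.extend(heapq.nlargest(n, bucket))
    return heapq.nlargest(n, local_winners)

scores = {101: 40, 102: 75, 103: 12, 104: 90, 105: 75, 106: 5}
result = two_level_top_n(scores, 3, num_buckets=4)
# Reference answer from a single global sort, for comparison.
expected = sorted(((v, k) for k, v in scores.items()), reverse=True)[:3]
```

Spreading the first level over hash buckets keeps any single rank node from receiving all the hot keys, at the cost of one extra merge step.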

The data big screen always represents the highest requirements among real-time scenarios. Every test and challenge brought by Double 11 drives breakthroughs in the entire real-time data system and its procedures. Fliggy real-time data technology not only empowers the media big screens but also enhances marketing analysis and venue operations. The real-time data system consists of the real-time DWD layer and feature layer, real-time marketing analysis, real-time tags, and the RTUS service. It empowers core businesses, such as search, recommendation, marketing, customer engagement, and user operation, in an all-around and multi-dimensional way.
