This article is based on a talk given by Zhaoqian Lan of Alibaba Cloud at FFA 2023 (Flink Forward Asia 2023). It covers research into and practice of storage-computing separation for Flink 2.0 state management, and is divided into the following four parts:

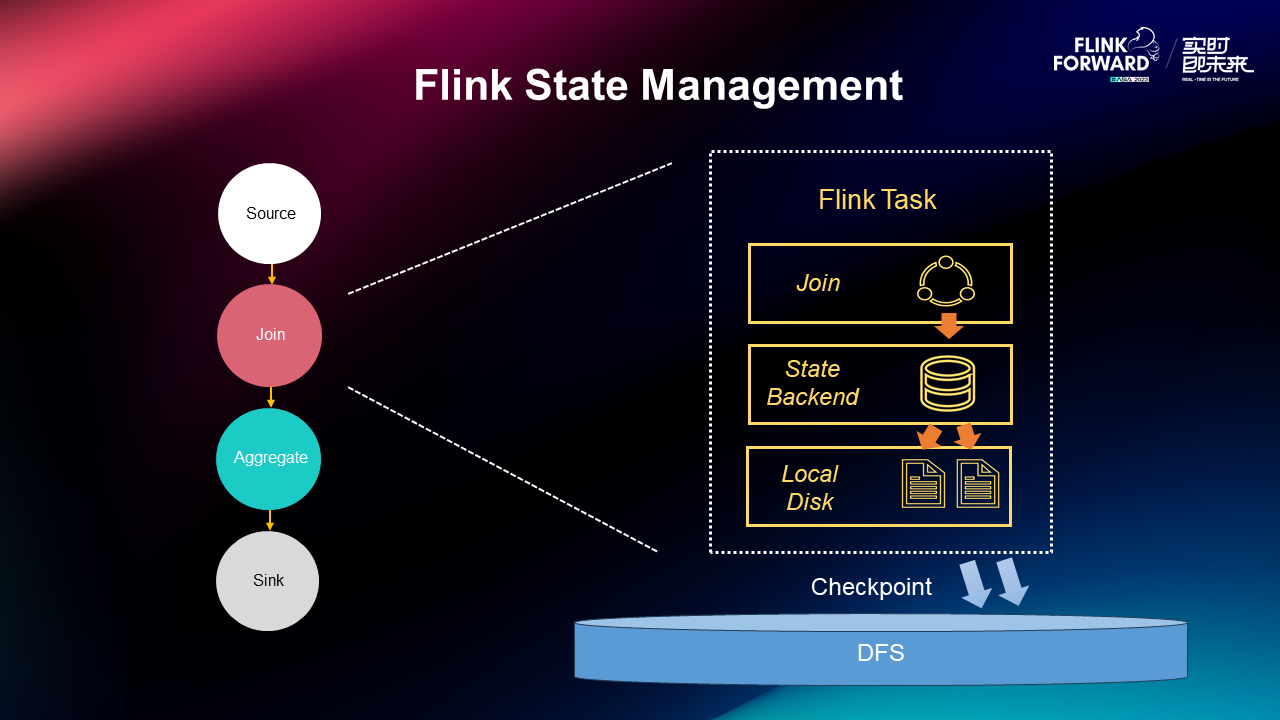

State management is at the core of stateful stream computing. Currently, the most commonly used state backend in Flink production environments is the RocksDB-based implementation. It is a state management system built on local disks: state files are stored locally and periodically written to DFS during checkpoints. This integrated storage-computing architecture is simple, ensures stability and efficiency for small-state jobs, and meets the needs of most scenarios. However, as Flink continues to evolve, business scenarios are becoming increasingly complex, and large-state jobs are becoming more common. As a result, many practical issues related to state management have emerged under the integrated storage-computing architecture.

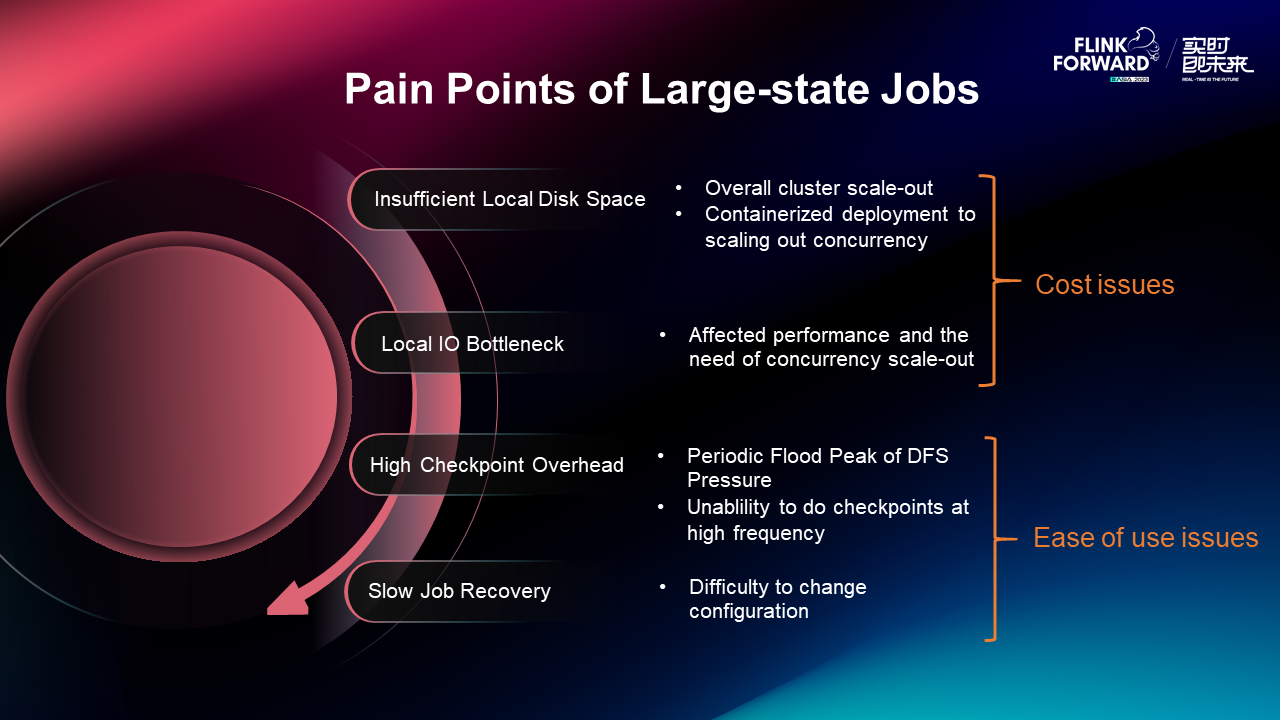

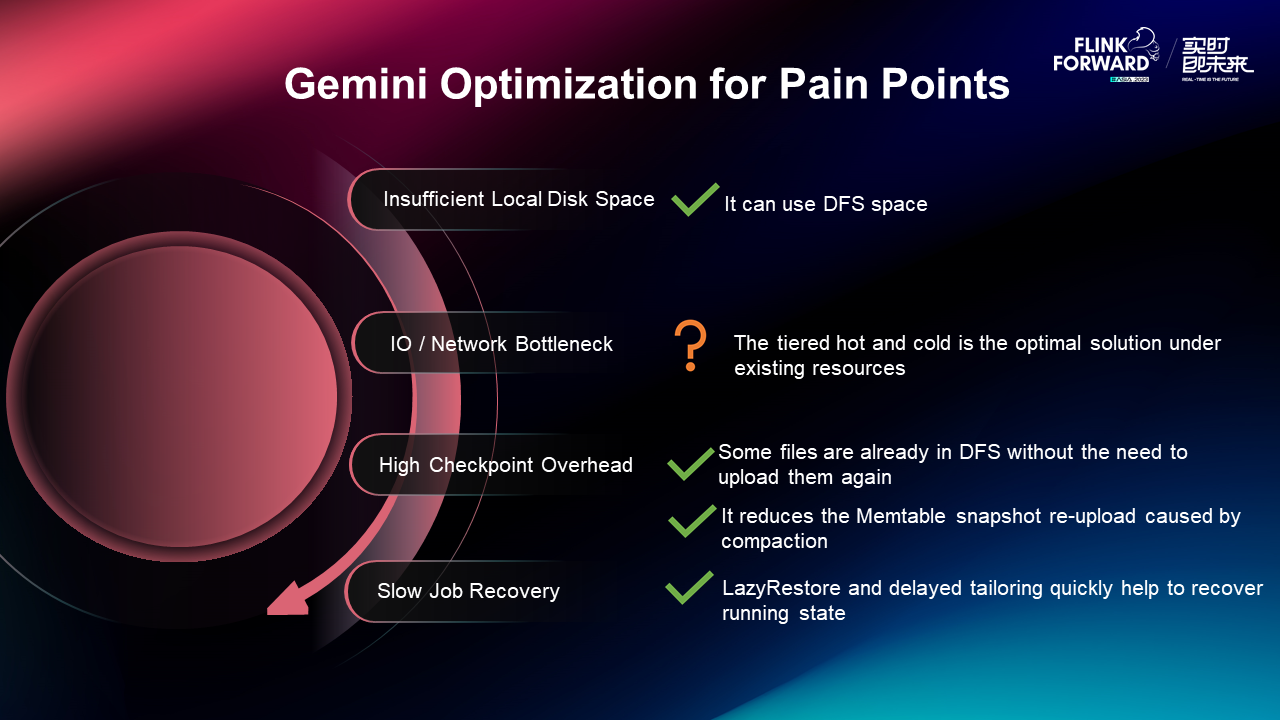

In large-state jobs, state management based on RocksDB, with storage and computing integrated on local disks, mainly encounters the following four problems:

• Local disks may run out of space, which is usually addressed by scaling out. In current cluster or cloud-native deployment modes, it is inconvenient to expand the local disk on its own. Users therefore generally increase job parallelism, which scales storage and computing together and wastes more computing resources.

• Normal state access while the job is running may also hit local disk I/O bottlenecks. This degrades overall performance and again forces users to scale out by increasing parallelism.

• Checkpoints can be costly, especially with large states, resulting in peaks in remote storage access during the checkpoint process.

• Job recovery is slow because full files need to be transferred from remote storage back to local, which can be a lengthy process.

The first two points are cost-related issues affecting users, while the checkpoint overhead and recovery speed are key concerns affecting the usability of Flink.

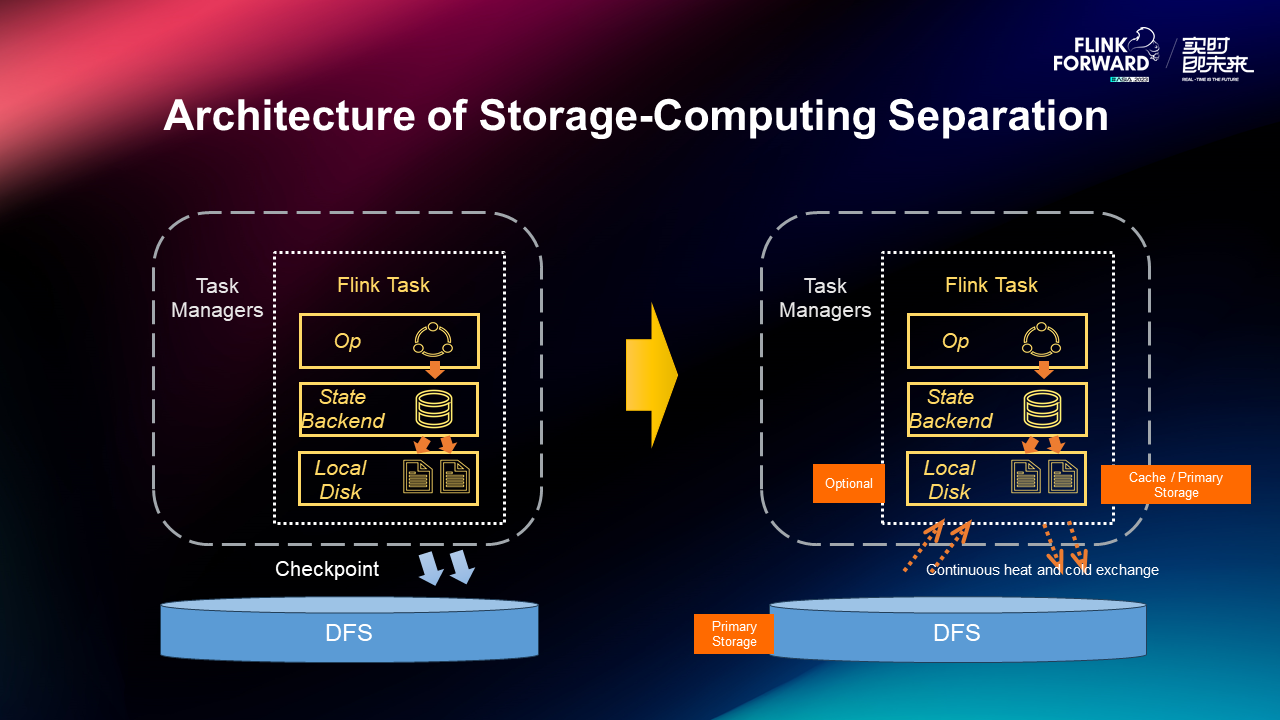

To solve the above problems, we propose a storage-computing separation architecture. Storage-computing separation removes the limitation of local disks: remote storage (DFS) serves as the primary storage, and any spare local disk is used as a cache. Users can still select the local disk as the primary storage and run in the original mode. The significant advantage is that, on the one hand, insufficient disk space and I/O performance no longer affect computing resources, and on the other hand, state checkpoints and recovery can be done directly on the remote side, which makes them more lightweight. The architecture is designed to solve the problems faced by large-state jobs.

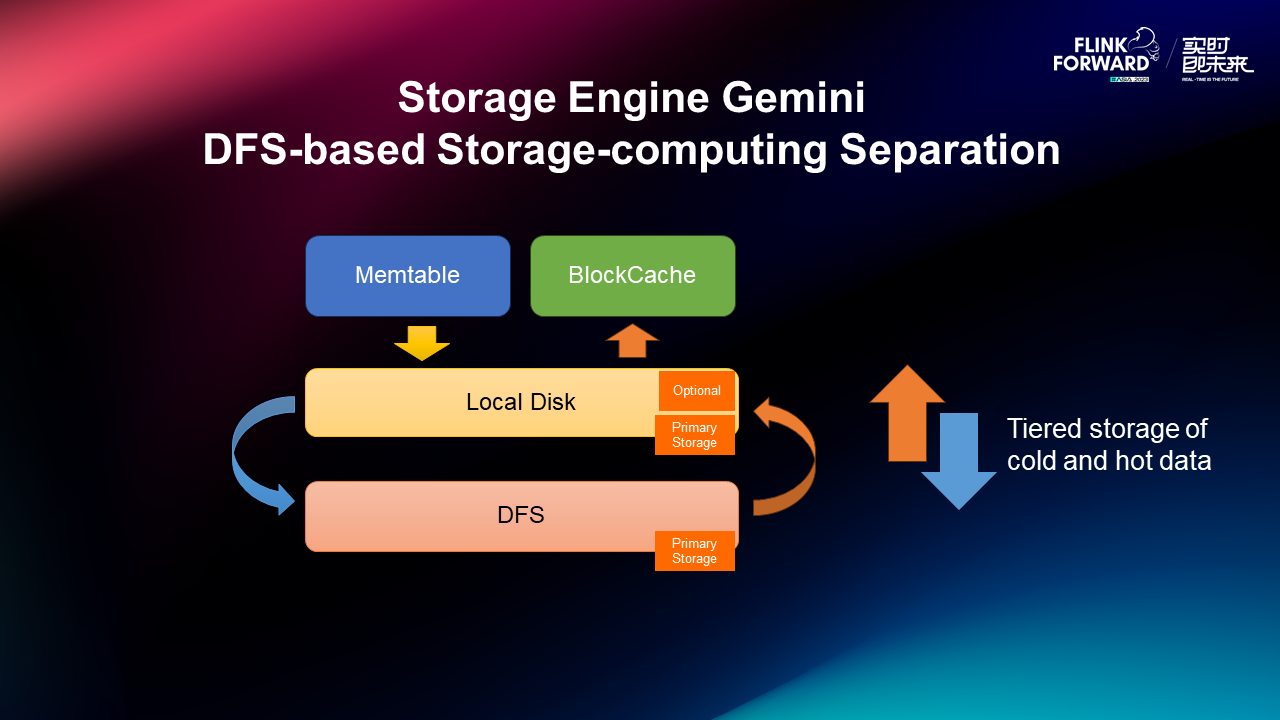

Before discussing the storage-computing separation architecture, I would like to start with Gemini, the enterprise-level state storage developed by Alibaba Cloud, and look at its practice in storage-computing separation. This practice mainly includes the following three aspects:

Gemini can use remote storage as part of the primary state storage. It first stores state files on the local disk, and if the local disk is insufficient, it moves files to remote storage. Files with a high access probability are kept on the local disk, while those with a low access probability are stored remotely. The two parts together constitute the primary storage, and data is divided into hot and cold tiers on this basis to ensure efficient service under the given resource conditions. This hierarchical file management mode frees Gemini from the limitation of local disk space. Theoretically, the local space can be configured to zero to achieve the effect of pure remote storage.
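The hot and cold placement described above can be illustrated with a minimal sketch. It is not Gemini's implementation: the `StateFile` type, the `access_score` field, and the greedy policy are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class StateFile:
    name: str
    size: int            # file size in bytes
    access_score: float  # hypothetical estimate of access probability


def place_files(files, local_capacity):
    """Greedily keep the hottest files on local disk; spill the rest to remote storage."""
    local, remote, used = [], [], 0
    for f in sorted(files, key=lambda f: f.access_score, reverse=True):
        if used + f.size <= local_capacity:
            local.append(f.name)
            used += f.size
        else:
            remote.append(f.name)
    return local, remote


files = [
    StateFile("a.sst", 40, 0.9),
    StateFile("b.sst", 40, 0.2),
    StateFile("c.sst", 30, 0.6),
]
print(place_files(files, local_capacity=80))  # hottest files that fit stay local
print(place_files(files, local_capacity=0))   # zero local space: everything is remote
```

Setting `local_capacity` to zero corresponds to the pure remote storage case mentioned in the text.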

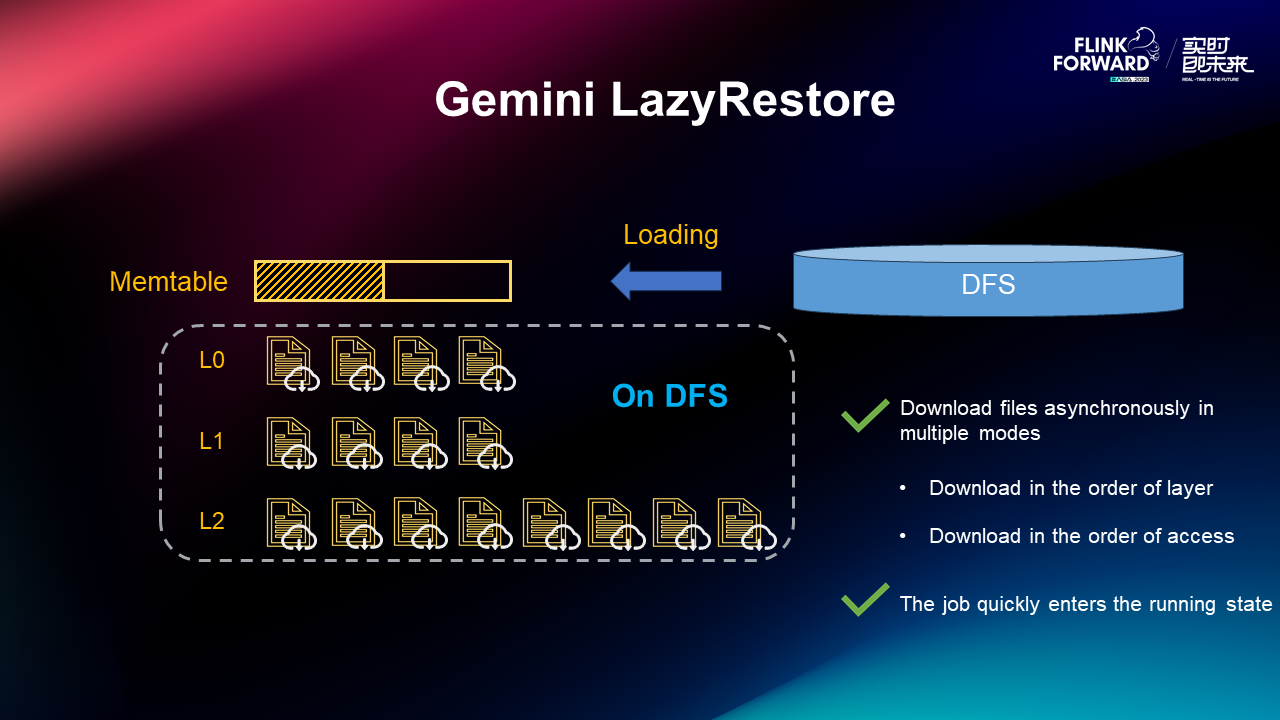

Gemini can serve state directly from remote files. In the scenario of job recovery, the store can therefore start serving without loading data from the remote files back to the local disk, so that the user's job enters the running state. This feature is called LazyRestore. During actual recovery, Gemini only needs to load the metadata and a small amount of in-memory data from remote storage, after which the entire store can be rebuilt and started.

Although the job has been started from remote files, reading remote files incurs higher I/O latency, so performance is still unsatisfactory. In this case, memory and local disks are needed for acceleration. Gemini uses a background thread to download data files asynchronously, gradually transferring the files that have not yet been downloaded to the local disk. The download process supports multiple strategies, such as downloading in the order of LSM-tree levels or in the order of actual access. These strategies can further shorten the time from LazyRestore to full-speed performance in different scenarios.
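As a rough illustration of the two download strategies, the sketch below orders the files that are still remote-only. The function name, the `(name, lsm_level)` representation, and the choice to fetch upper levels (L0) first are assumptions; the text does not specify how Gemini orders levels.

```python
def download_order(files, strategy, access_log=()):
    """Return file names in the order a background thread would fetch them.

    files: list of (name, lsm_level) pairs that are still remote-only.
    access_log: names of files in the order reads touched them.
    """
    names = [name for name, _ in files]
    if strategy == "level":
        # Assumption: upper levels (L0 first) are fetched before lower ones.
        return [name for name, _ in sorted(files, key=lambda f: f[1])]
    if strategy == "access":
        # Files that were actually read come first, in first-access order.
        accessed = [n for n in dict.fromkeys(access_log) if n in names]
        return accessed + [n for n in names if n not in accessed]
    raise ValueError(f"unknown strategy: {strategy}")


remote_files = [("f3.sst", 2), ("f1.sst", 0), ("f2.sst", 1)]
print(download_order(remote_files, "level"))
print(download_order(remote_files, "access", access_log=["f2.sst", "f3.sst", "f2.sst"]))
```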

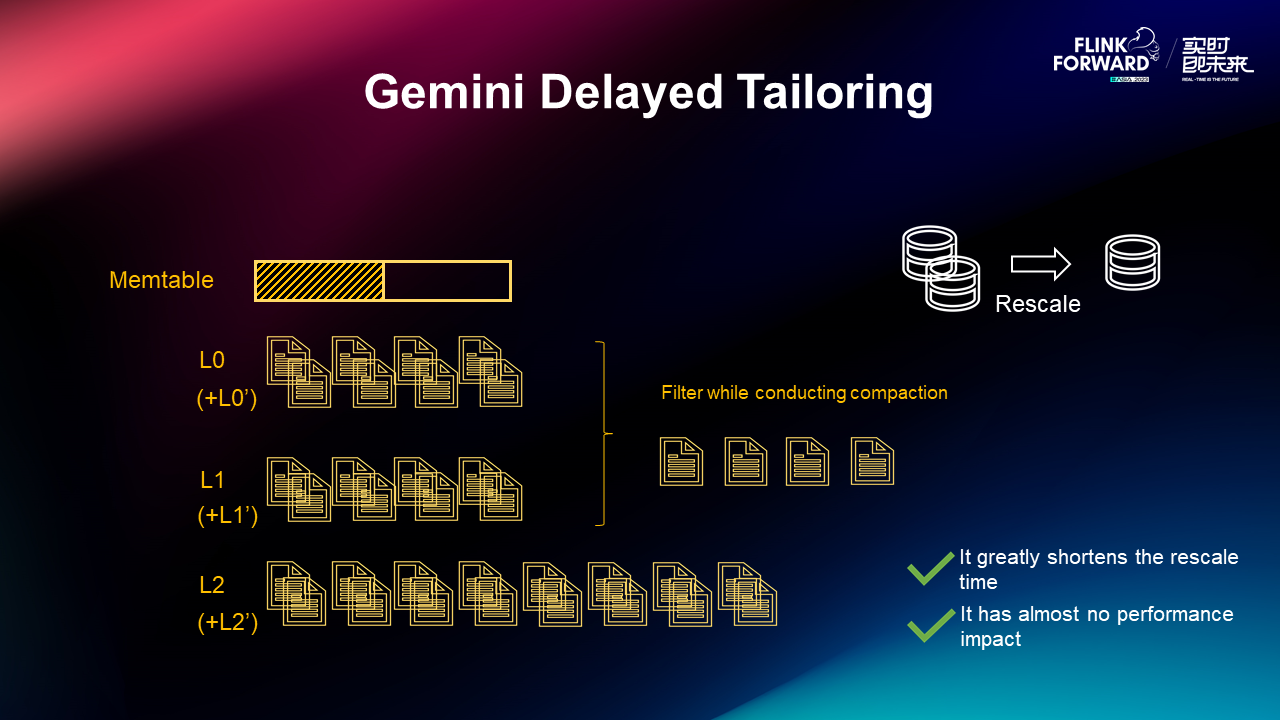

When the parallelism of a job is changed (rescaling), for example, when the state data of two subtasks is merged into one, RocksDB currently downloads both sets of data and then merges them. This involves tailoring (trimming) the redundant data and rebuilding the data files, which is relatively slow. The community has made many targeted optimizations for this process, but downloading the data files still cannot be avoided. Gemini, however, only needs to load the metadata of the two parts and combine them into a special LSM-tree structure to start serving. This process is called delayed tailoring.

After reconstruction, the LSM-tree has more layers than in normal cases. For example, in the figure, there are two L0 layers, two L1 layers, and two L2 layers. However, Flink partitions data by KeyGroup, so a read for a given key only needs to consult the part that covers its key group. The additional layers therefore do not lengthen the read path. Because the data is not actually tailored, some redundant data remains and is gradually cleaned up by subsequent compactions. Delayed tailoring eliminates the need to download and merge the data itself, which can greatly shorten state recovery time.
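The idea can be sketched as follows, under heavy simplification. `RestoredTree` and `MergedStore` are hypothetical names, a dictionary stands in for the LSM levels, and key-group ranges are plain Python `range` objects; none of this reflects Gemini's actual data structures.

```python
class RestoredTree:
    """Metadata-only view of one old subtask's LSM-tree (files remain remote)."""

    def __init__(self, key_groups, data):
        self.key_groups = key_groups  # key-group range covered by this tree's files
        self.data = data              # {(key_group, key): value}, stand-in for L0..Ln


class MergedStore:
    """Stacks several restored trees without rewriting any data file."""

    def __init__(self, owned_key_groups, trees):
        self.owned = owned_key_groups
        self.trees = trees

    def get(self, key_group, key):
        if key_group not in self.owned:
            return None  # redundant data: still in the files, dropped later by compaction
        for tree in self.trees:
            if key_group in tree.key_groups:  # only one tree is consulted per key
                return tree.data.get((key_group, key))
        return None


# Scale-in: two old subtasks (key groups 0-63 and 64-127) merged into one new subtask.
t0 = RestoredTree(range(0, 64), {(5, "a"): 1})
t1 = RestoredTree(range(64, 128), {(70, "b"): 2})
merged = MergedStore(range(0, 128), [t0, t1])
print(merged.get(5, "a"), merged.get(70, "b"))

# Scale-out: a new subtask owns only key groups 0-31 of an old tree covering 0-63.
half = MergedStore(range(0, 32), [RestoredTree(range(0, 64), {(5, "a"): 1, (40, "c"): 3})])
print(half.get(5, "a"), half.get(40, "c"))
```

In the scale-out case, the entry for key group 40 is still physically present but is never returned, which mirrors the redundant data that compaction removes later.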

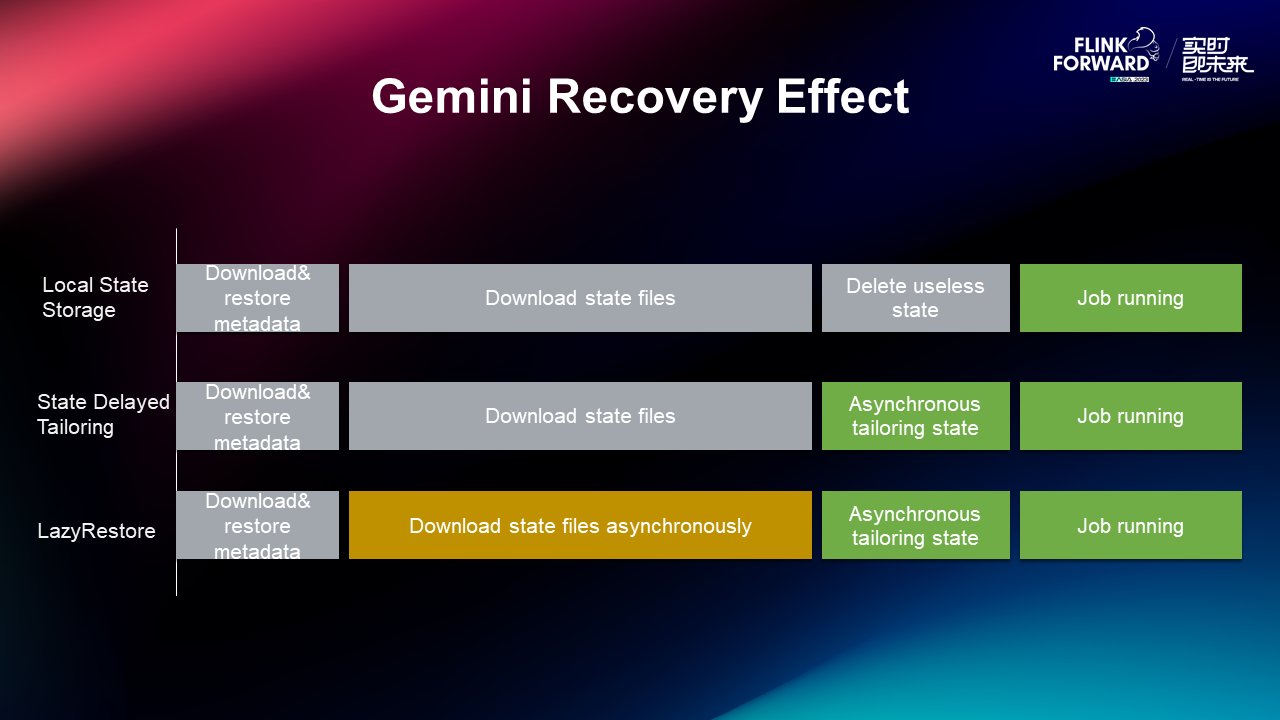

With asynchronous (delayed) state tailoring and LazyRestore, Gemini's recovery time, that is, the time it takes the job to go from INITIALIZING to RUNNING, becomes very short, which is a great improvement over the local state storage scheme.

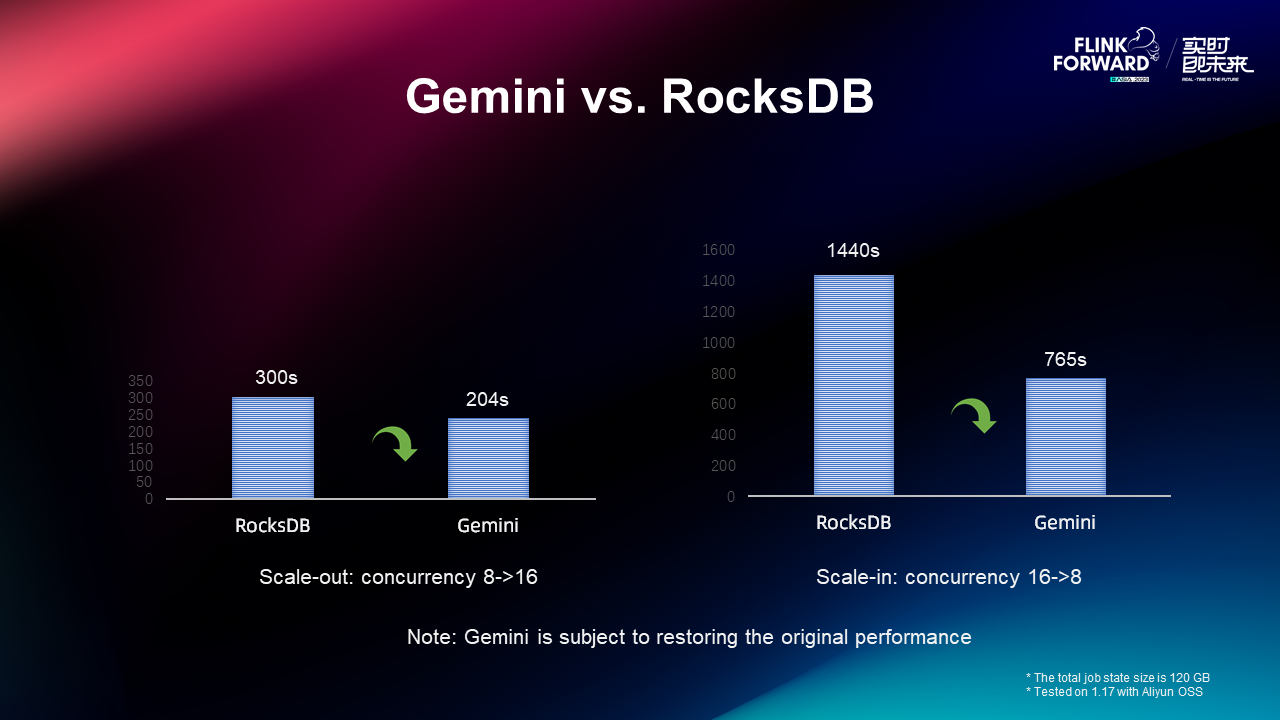

We have evaluated how long rescaling takes with Gemini and with RocksDB. The evaluation metric is the time from the start of the job until it recovers its original performance, which includes the time Gemini needs to download files asynchronously. The results of the above experiment show that Gemini is significantly better than RocksDB in both scaling-in and scaling-out scenarios.

Gemini's optimizations for storage-computing separation address the above-mentioned problems in large-state job scenarios. Insufficient local space is solved by using remote space. Checkpoint overhead is reduced because some files are already stored remotely and do not need to be uploaded again. Slow job recovery is addressed by LazyRestore and delayed tailoring, which allow the job to resume execution quickly.

Another feature is the snapshot of the Memtable. When Gemini performs checkpointing, it uploads the Memtable as it is to the remote storage, which does not affect the Memtable flush process or the internal compaction. Its effect is similar to that of a changelog for generic incremental snapshots. Both mitigate peaks in CPU overhead and DFS I/O at checkpoints.

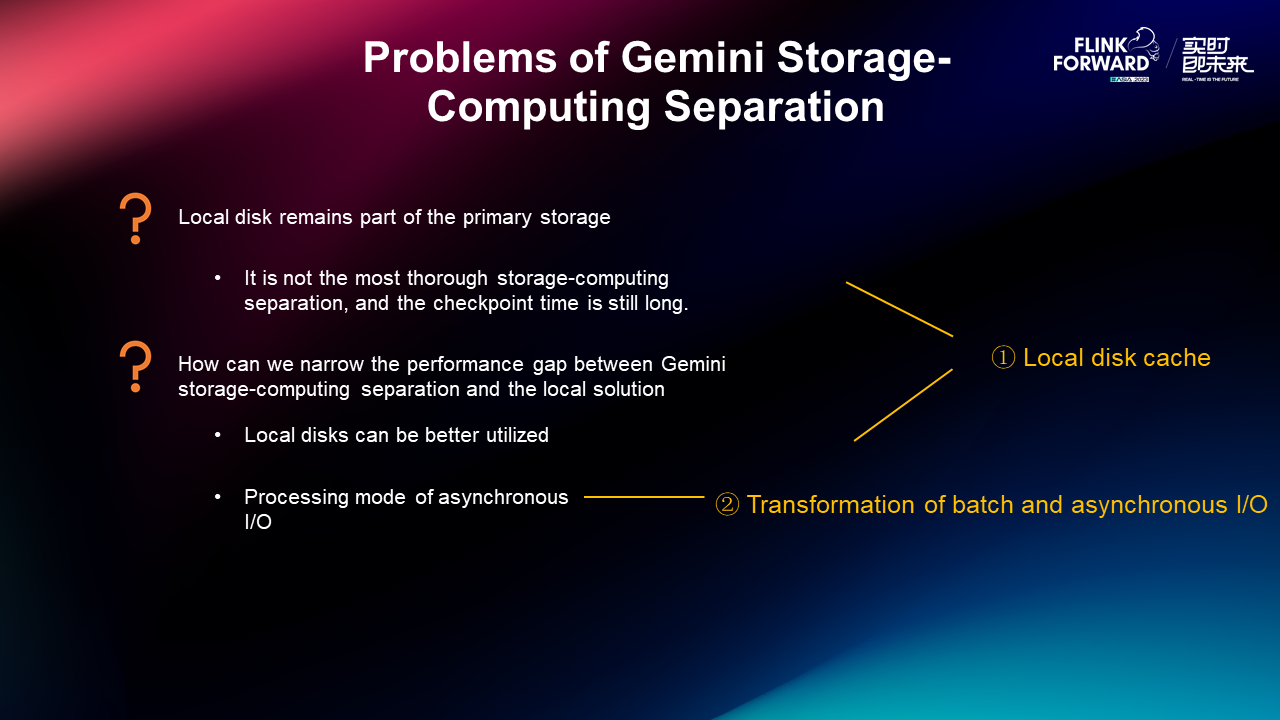

Gemini has put storage-computing separation into practice and achieved good results in large-state job scenarios. But it still has some problems:

First, Gemini still uses the local disk as part of the primary storage, and state files are written to the local disk first, which is not the most thorough form of storage-computing separation. As a result, a large number of files still have to be uploaded during checkpointing, which takes a long time and makes very lightweight checkpoints impossible.

Second, all storage-computing separation solutions face the same problem: a performance gap remains compared with the local solution. In the current solution, Gemini already uses the local disk, but it does not use it as efficiently as it could. If more requests can be served from memory or the local disk, the number of remote I/O requests decreases and overall job performance improves. In addition, asynchronous I/O is an optimization that many storage systems adopt. It increases job throughput by raising I/O parallelism, and it is the next optimization direction worth trying.

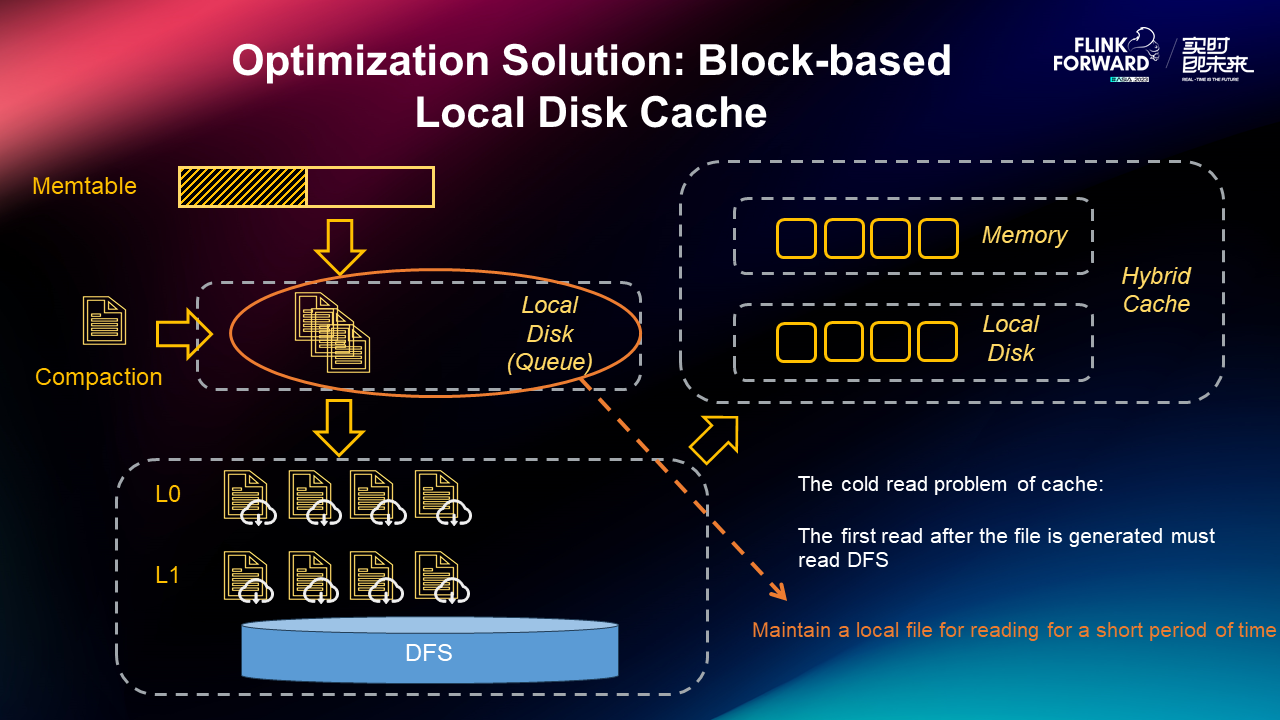

To solve these problems, we have carried out some simple explorations. First, we have implemented a thorough storage-computing separation that writes directly to remote storage and uses local disks purely as a cache. On this basis, we have tried different forms of cache. Second, we have implemented a simple asynchronous I/O PoC to verify its performance improvement in the storage-computing separation scenario.

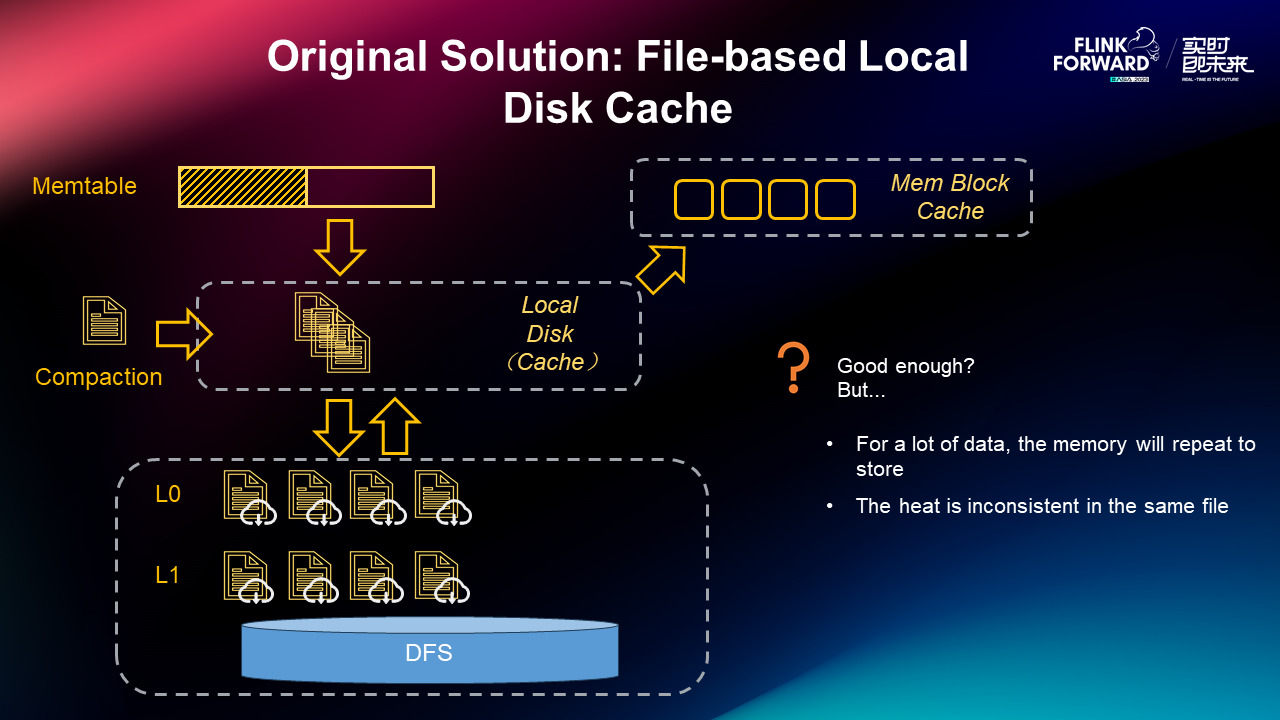

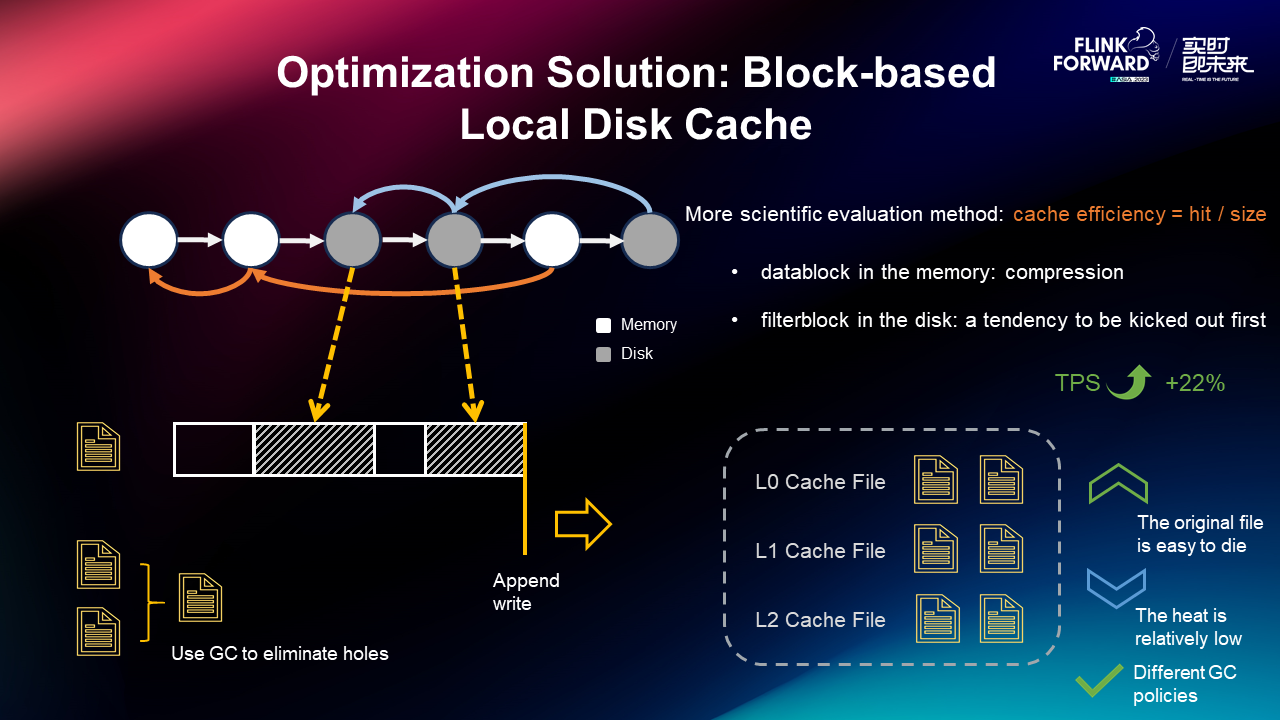

We will not elaborate on the changes needed to use remote storage as the primary storage. Here, we mainly discuss the forms and optimization of the cache. The simplest architecture is a file-based cache: when a remote file is accessed, it is loaded into the local disk cache. The memory cache still exists and still takes the form of a BlockCache. This architecture is very simple but efficient. However, the in-memory BlockCache and the local disk file cache hold a large amount of duplicate data, which wastes a lot of space. Besides, file granularity is relatively coarse, and different blocks of the same file have different access probabilities. As a result, some cold blocks are kept on disk, which reduces the hit ratio of the local disk. In response to these two problems, we have designed a new form of local disk cache.

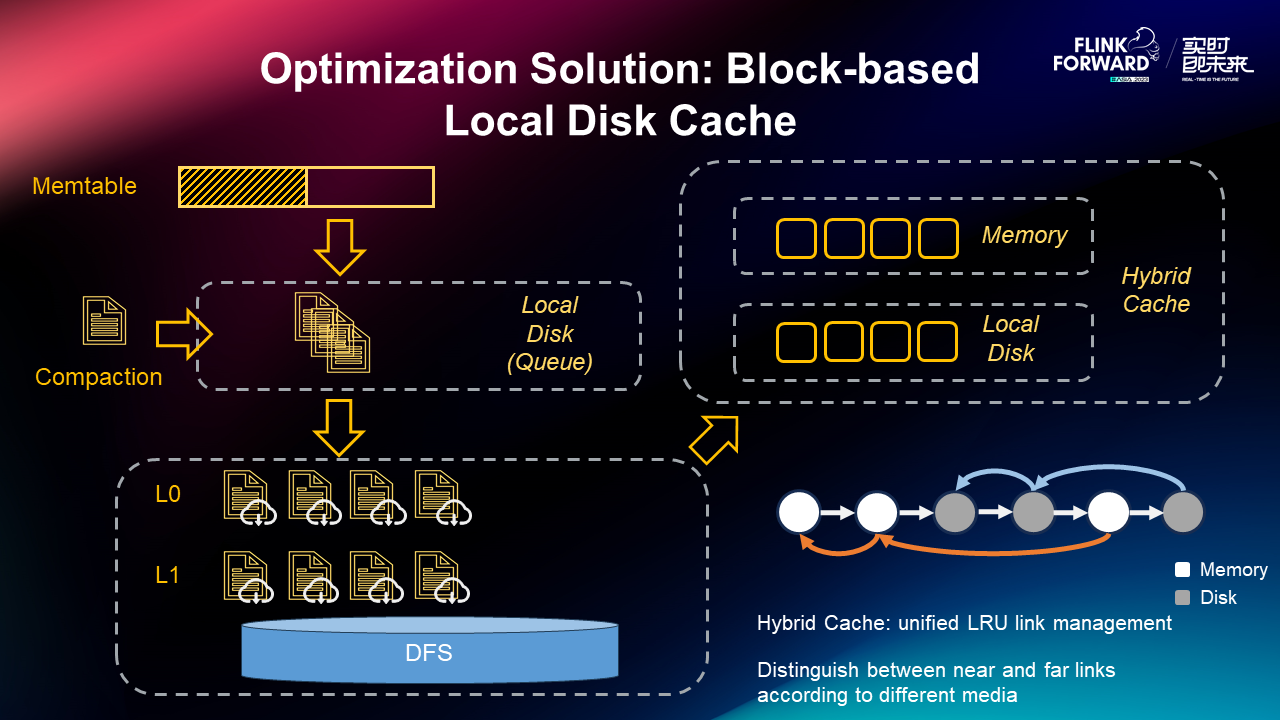

We propose combining local disks with memory to form a hybrid cache with block granularity. A single overall LRU manages all blocks, and blocks differ only in the medium they reside on. Relatively cold blocks in memory are asynchronously flushed to the local disk, and disk blocks are appended sequentially to an underlying file. When disk blocks are evicted by the LRU policy, they inevitably leave holes in the underlying file. We therefore use space recycling to keep the cache space effective. Space recycling is a trade-off between space and CPU overhead.
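A minimal sketch of a unified LRU spanning two media is shown below. It is an illustration under stated assumptions, not the actual design: capacities are counted in blocks rather than bytes, demotion is synchronous rather than asynchronous, no real disk I/O takes place, and the holes and space recycling are not modeled. The class and method names are hypothetical.

```python
from collections import OrderedDict


class HybridBlockCache:
    """One LRU list over all cached blocks; each block lives in memory or on disk."""

    def __init__(self, mem_blocks, disk_blocks):
        self.mem_blocks = mem_blocks    # how many blocks fit in memory
        self.disk_blocks = disk_blocks  # how many blocks fit on local disk
        self.lru = OrderedDict()        # block_id -> "mem" | "disk", coldest first

    def _count(self, medium):
        return sum(1 for m in self.lru.values() if m == medium)

    def access(self, block_id):
        """Return the medium that served the hit, or None on a miss (remote read)."""
        hit = self.lru.pop(block_id, None)
        self.lru[block_id] = "mem"  # the accessed block becomes the hottest, in memory
        # Demote the coldest in-memory blocks to disk while memory is over budget.
        while self._count("mem") > self.mem_blocks:
            coldest = next(b for b, m in self.lru.items() if m == "mem")
            self.lru[coldest] = "disk"
        # Evict the coldest on-disk blocks while the disk is over budget.
        while self._count("disk") > self.disk_blocks:
            victim = next(b for b, m in self.lru.items() if m == "disk")
            del self.lru[victim]
        return hit


cache = HybridBlockCache(mem_blocks=2, disk_blocks=2)
for block in [1, 2, 3, 4, 5]:
    cache.access(block)
print(cache.access(2))  # block 2 was demoted to disk earlier
print(cache.access(1))  # block 1 was evicted entirely
```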

Files at different layers, such as L0, L1, and L2 files, have different life cycles. An L0 file has a relatively short life cycle but is relatively hot. An L2 file survives longer but is relatively cold. Based on these characteristics, we can apply different space recycling strategies. Blocks from files at different layers are cached in different underlying files, and each underlying file can use its own threshold and frequency for space recycling, which helps maximize space recycling efficiency.

In addition, we have also proposed an optimization of the block eviction strategy. The basic LRU is managed by hits: if a block is not hit for a period of time, it is evicted. This strategy does not take into account the space cost of caching a block. In other words, caching one block may mean that several other blocks cannot be cached. Therefore, a new metric called cache efficiency is introduced here: the number of hits in a period of time divided by the block size, which better judges whether a block deserves to be cached. The disadvantage of this metric is its relatively high overhead. A basic LRU supports O(1) operations, whereas scoring by cache efficiency requires a priority queue, which is considerably less efficient. Therefore, the idea here is to keep LRU as the main management policy and add special handling for blocks with abnormal cache efficiency.

At present, abnormal cache efficiency has been found in two kinds of blocks. The first is the data block in memory. Its hit ratio in memory is relatively low, yet it can account for 50% of the memory. The current strategy is to compress it. The cost is that each access involves decompression, but this overhead is much smaller than that of an I/O. The second is the filter block on disk. Although it gets hits, it is relatively large, so its cache efficiency is low. A policy that prefers to evict filter blocks on disk first is implemented here, so that data from relatively upper layers can be cached. In the test job scenario, combining these two special rules with LRU increases overall TPS by 22% compared with LRU alone, which is a significant effect.
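The cache efficiency metric (hits in a time window divided by block size) can be written down directly. The block names and numbers below are hypothetical and chosen only to show that a block with more hits can still score lower.

```python
def cache_efficiency(hits, size_bytes):
    """Hits in the observation window divided by block size."""
    return hits / size_bytes


def pick_victim(blocks):
    """blocks: {name: (hits, size_bytes)}. Evict the block with the lowest efficiency."""
    return min(blocks, key=lambda name: cache_efficiency(*blocks[name]))


# Hypothetical numbers: the filter block has more hits but is far larger.
blocks = {"data_block": (8, 4096), "filter_block": (20, 65536)}
for name, (hits, size) in blocks.items():
    print(name, cache_efficiency(hits, size))
print(pick_victim(blocks))
```

Scanning all blocks with `min` is linear in the number of blocks, which hints at why a pure efficiency-ordered cache needs a priority queue and costs more than an O(1) LRU.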

However, writing directly to remote storage causes a cold-read problem for remote files: the first read after a file is generated still involves remote I/O. We have made a small optimization for this. The local disk holds a queue of files being uploaded to remote storage, and the files in it are allowed to stay cached for a period of time. The period is not long, roughly 20 to 30 seconds, during which reads of the queued files are served by local I/O instead of remote I/O. This approach greatly alleviates the cold-read problem for remote files.
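A minimal sketch of such a retention window follows. The `UploadQueue` class and its methods are hypothetical, the upload itself is not modeled, and time is passed in explicitly so the behavior is deterministic; the 30-second default follows the 20 to 30 second range given in the text.

```python
class UploadQueue:
    """Files queued for upload stay readable from local disk for a short retention window."""

    def __init__(self, retention_seconds=30.0):
        self.retention = retention_seconds
        self.enqueued_at = {}  # file name -> time the file entered the queue

    def enqueue(self, name, now):
        self.enqueued_at[name] = now

    def read_source(self, name, now):
        """Return where a read of `name` at time `now` would be served from."""
        enqueued = self.enqueued_at.get(name)
        if enqueued is not None and now - enqueued < self.retention:
            return "local"
        self.enqueued_at.pop(name, None)  # retention expired: drop the local copy
        return "remote"


queue = UploadQueue(retention_seconds=30.0)
queue.enqueue("new-file.sst", now=0.0)
print(queue.read_source("new-file.sst", now=10.0))
print(queue.read_source("new-file.sst", now=31.0))
```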

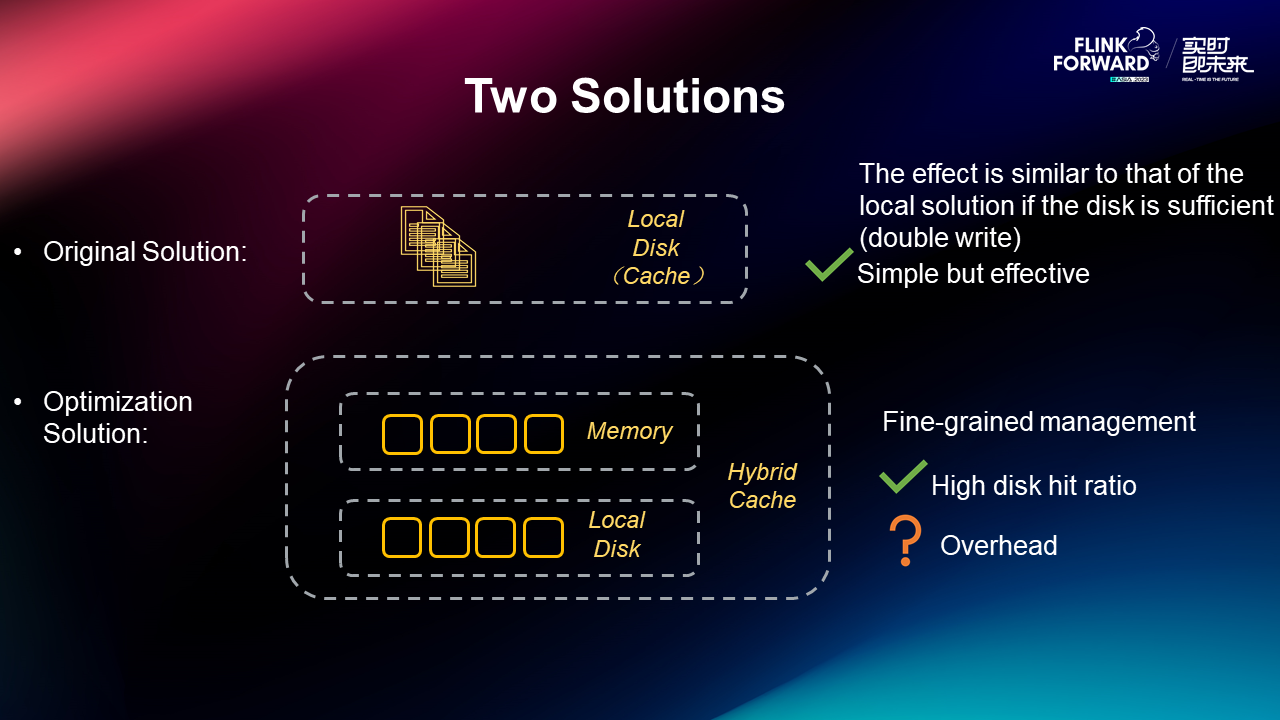

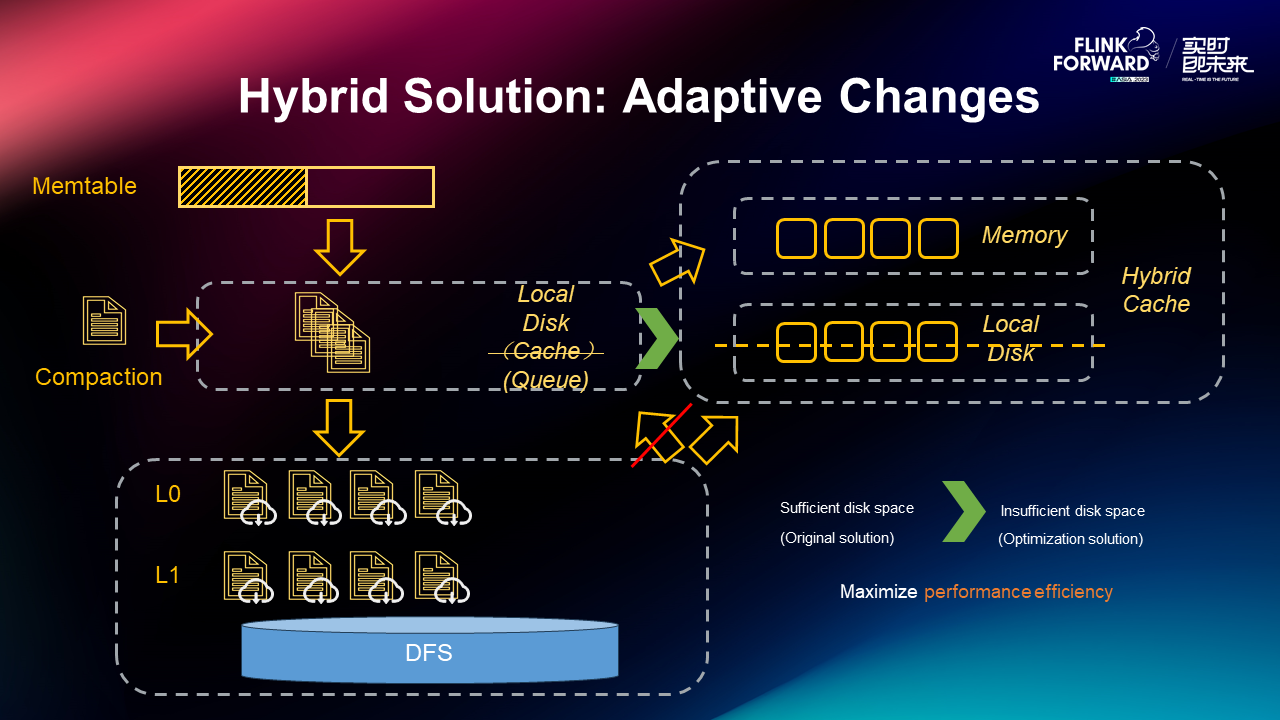

So far, we have two cache schemes for storage-computing separation. The first is the file-based local disk cache, which has the advantage of being very simple and effective. When disk space is sufficient, it performs similarly to the local scheme because the local disk can cache all files. The second is the hybrid block cache, which is a very good solution when local disk space is insufficient because it improves the cache hit ratio. However, it also brings a relatively large management overhead. What should we do if we want one common scheme that adapts to all scenarios?

Combining the two schemes, we have designed an adaptive hybrid scheme. When disk space is sufficient, the file-based cache is used. When disk space is insufficient, the local disk and memory are automatically combined into a hybrid block cache. Integrating the two solutions merges their respective advantages to maximize performance and efficiency in all scenarios.

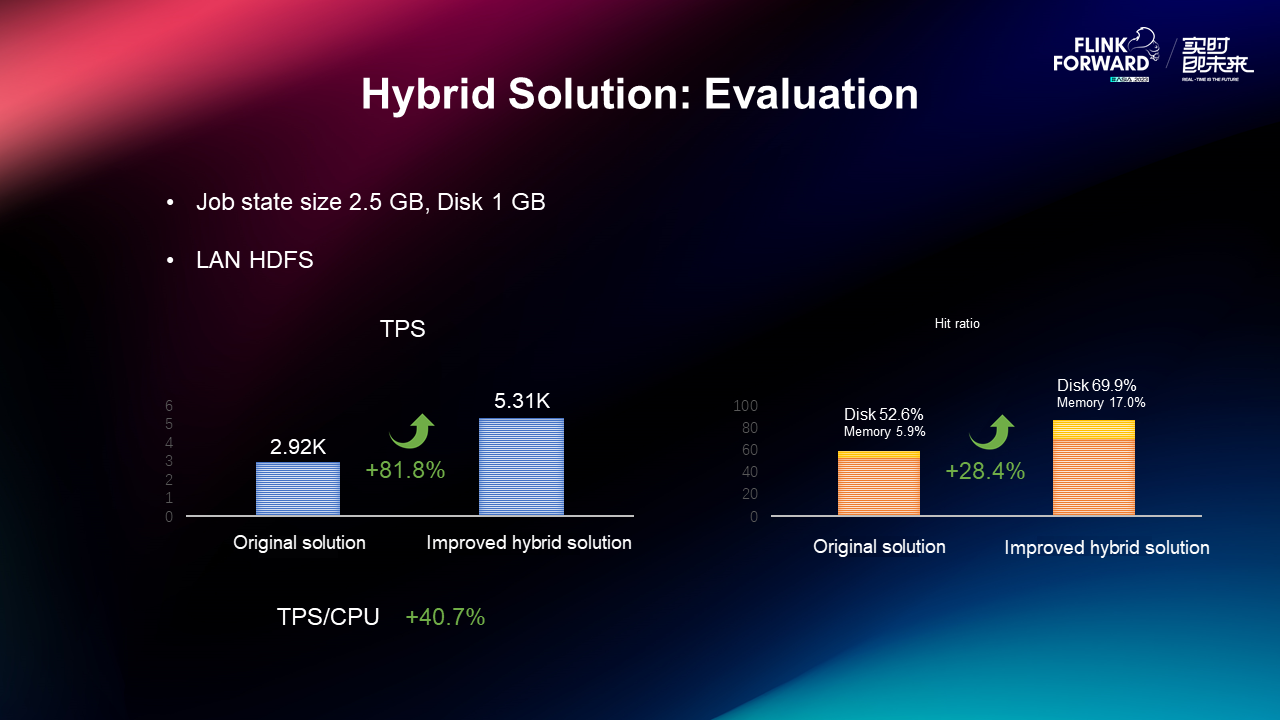

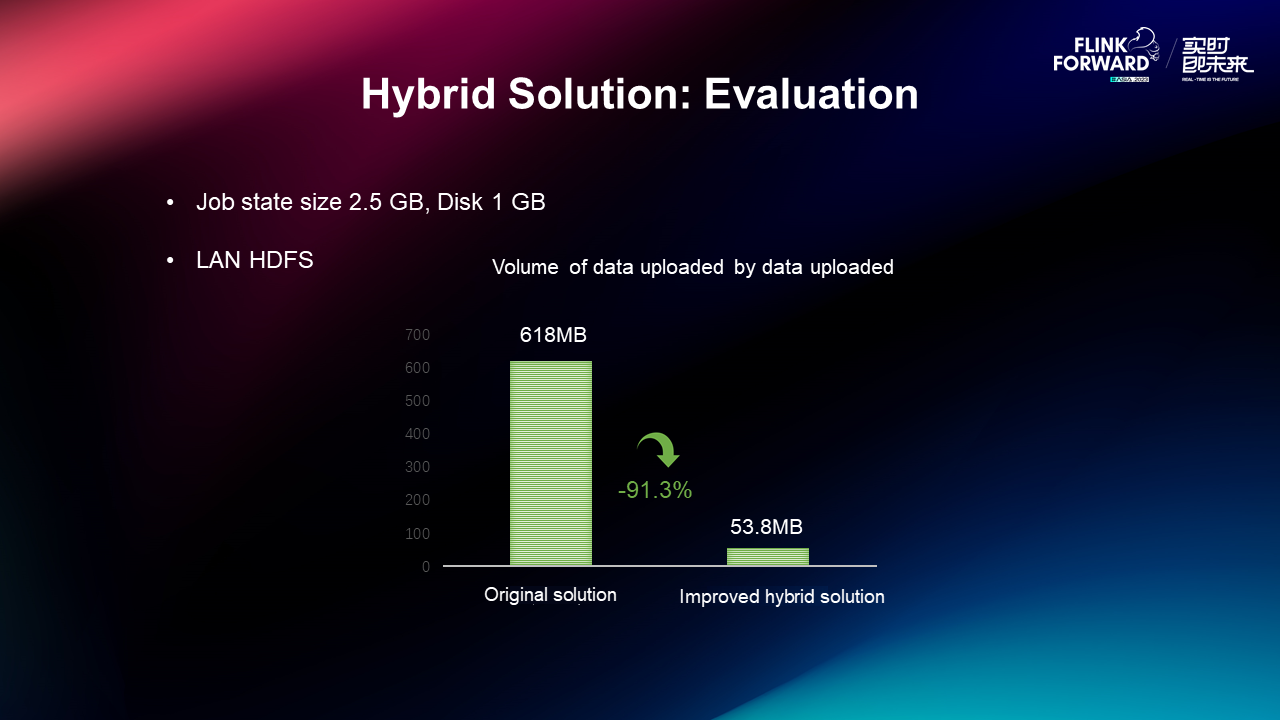

We have used test jobs to evaluate the proposed hybrid solution. As you can see, the new solution has an 80% improvement in TPS compared with the original cache solution with file granularity. At the same time, it also brings some CPU overhead. Using CPU efficiency (TPS/CPU) as the criterion, the new solution also has a 40% improvement. The increase in cache hit ratio is a major source of TPS improvement.
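The two figures together imply how much extra CPU the new solution consumes. The short calculation below assumes both improvements are measured against the same baseline and that "80% improvement" means 1.8 times the baseline; it is a reading of the numbers, not an additional measurement.

```python
tps_ratio = 1.80         # new TPS / old TPS (80% improvement, from the text)
efficiency_ratio = 1.40  # new (TPS/CPU) / old (TPS/CPU) (40% improvement, from the text)

# efficiency = TPS / CPU  =>  CPU = TPS / efficiency
cpu_ratio = tps_ratio / efficiency_ratio
print(f"implied CPU usage: {cpu_ratio:.2f}x the baseline")
```

Under these assumptions, CPU usage rises to roughly 1.29 times the baseline, which is the CPU overhead the text refers to.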

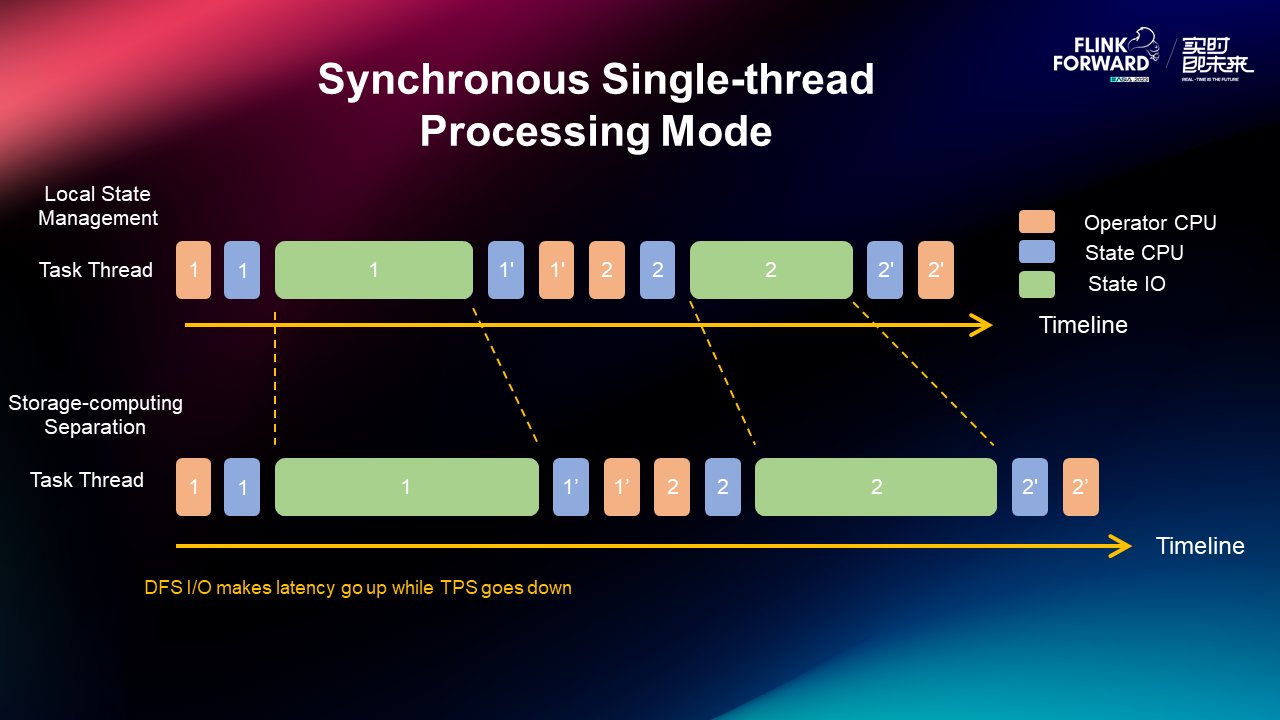

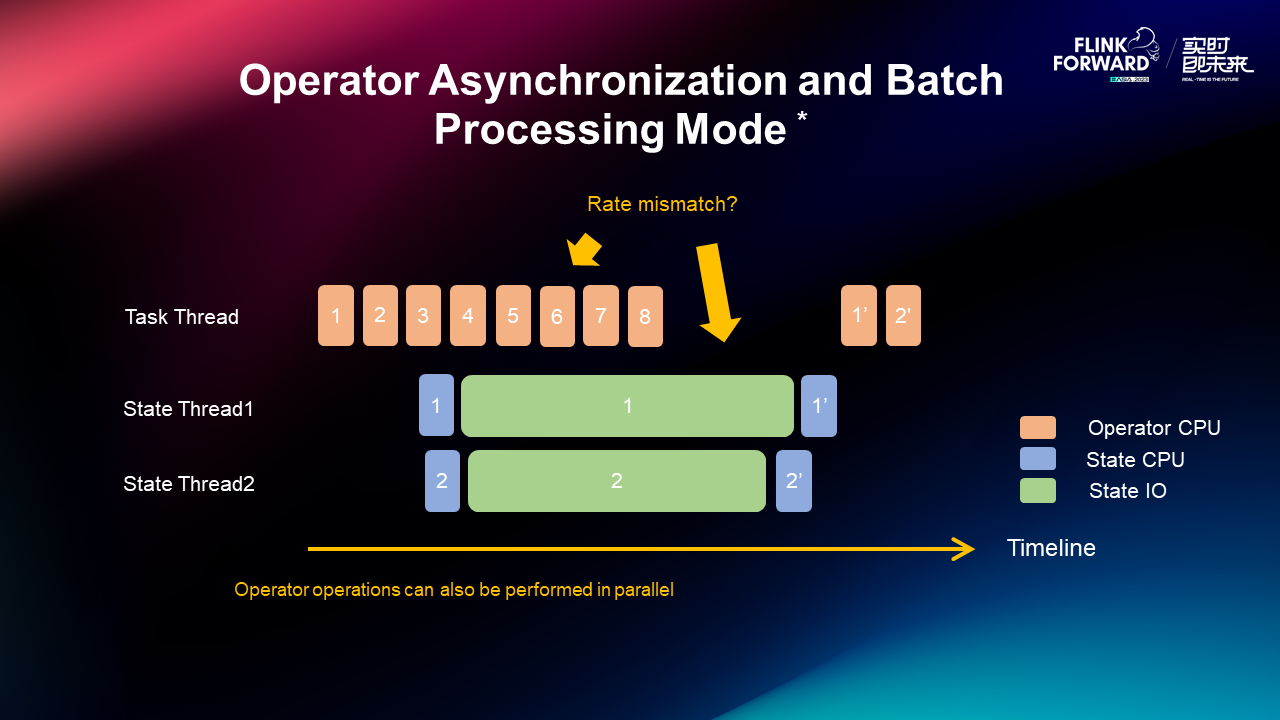

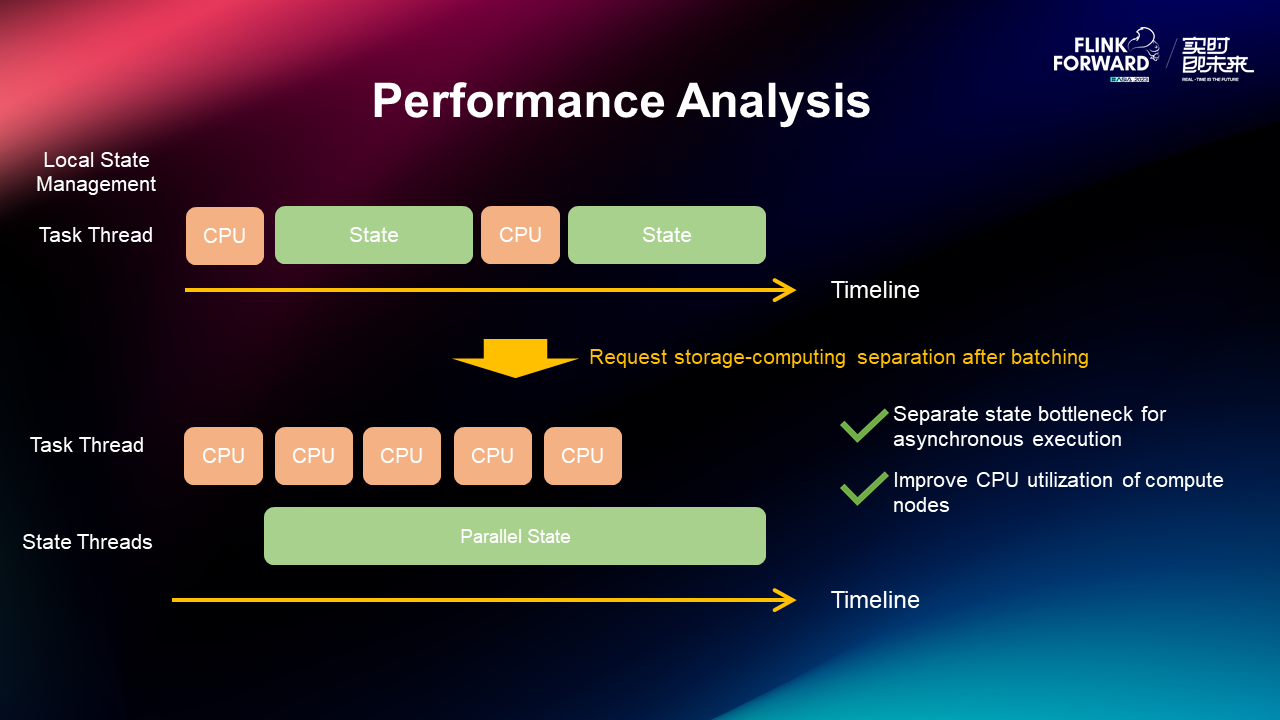

The second exploration is the asynchronous I/O transformation and testing of Flink. The following figure shows the single-thread processing model of Flink. On the task thread, all records are processed in sequence. For each record, the cost is divided into the CPU overhead of the operator, the CPU overhead of state access, and the I/O time required for state access, of which I/O is the largest. With storage-computing separation, the I/O latency of remote storage is higher than that of local storage. As a result, the overall TPS drops significantly.
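The effect of I/O latency on a strictly sequential task thread can be seen from a simple model in which TPS is the inverse of the per-record cost. All latency values below are hypothetical and serve only to show the shape of the relationship.

```python
def tps(op_cpu_us, state_cpu_us, state_io_us):
    """Records per second when every record is processed strictly in sequence."""
    return 1_000_000 / (op_cpu_us + state_cpu_us + state_io_us)


# Hypothetical per-record costs in microseconds.
local_tps = tps(op_cpu_us=20, state_cpu_us=10, state_io_us=70)
remote_tps = tps(op_cpu_us=20, state_cpu_us=10, state_io_us=970)
print(local_tps, remote_tps)
```

Because the three costs simply add up on one thread, any increase in I/O latency translates directly into lower throughput.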

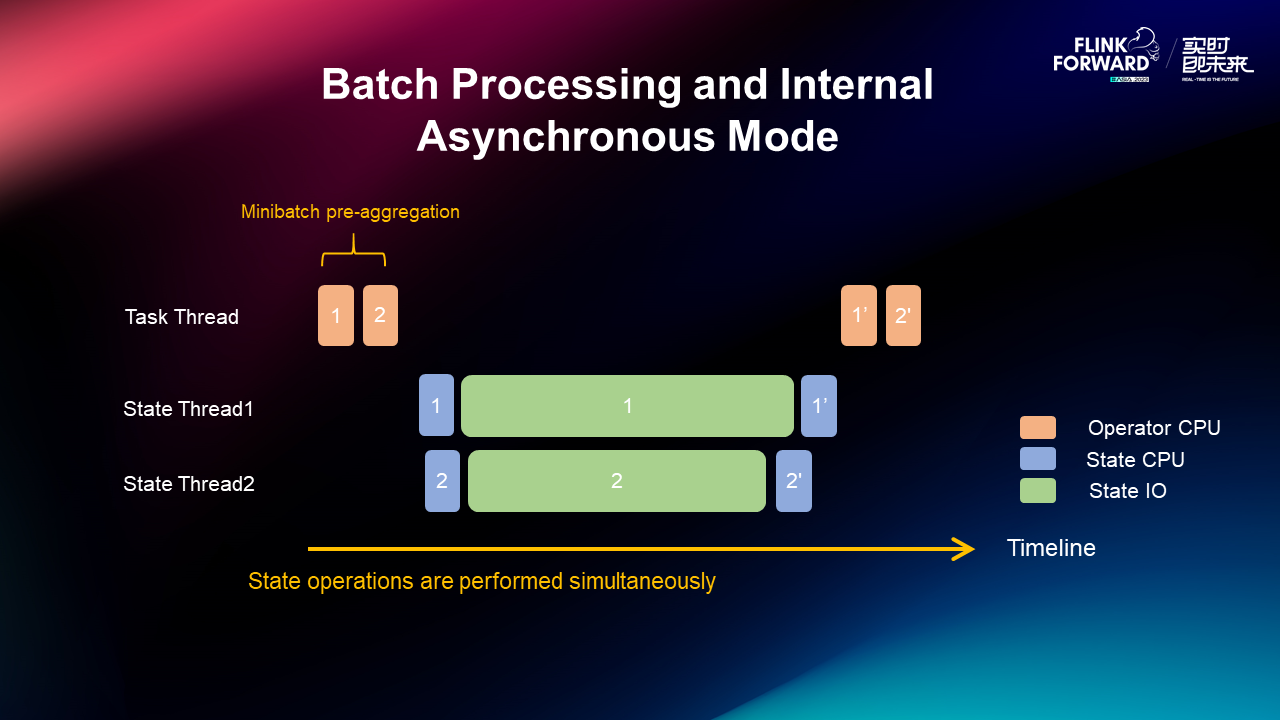

We have changed this model so that state operations can be performed in parallel. From the task thread's point of view, the overall time is reduced once state access is parallelized, so TPS improves. To make this possible, the task thread needs to batch records in advance, which is very similar to what micro-batch does. Likewise, pre-aggregation can be used to reduce the number of state accesses and further improve TPS.

In addition, on top of asynchronous state access, we can go further and make the operator itself asynchronous. That is, once a state access has started executing asynchronously, the task thread can continue to perform other CPU work on the data. However, there is a problem: the I/O of state access usually takes a long time. Although the task thread can do some other data processing while it would otherwise be idle, there will eventually be a rate mismatch. The bottleneck ends up on state access again, and the job degenerates to the situation without this optimization.

After weighing the trade-offs, we believe that batching alone, with asynchronous I/O used for state access within each batch, is the more balanced solution.
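The batch-plus-asynchronous-I/O idea can be sketched with a thread pool. This is not the PoC described in the article: `remote_get` is a stand-in that sleeps to mimic remote latency, and the function names, latency, and pool size are assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def remote_get(key, latency=0.01):
    """Stand-in for one remote state read; sleeps to mimic I/O latency."""
    time.sleep(latency)
    return key, f"value-of-{key}"


def process_sequential(keys):
    """One blocking state access per record, as in the single-thread model."""
    return [remote_get(k)[1] for k in keys]


def process_batch_async(keys, pool):
    """Collect a batch, deduplicate its keys, then issue the reads in parallel."""
    unique = list(dict.fromkeys(keys))           # pre-aggregation: one read per key
    values = dict(pool.map(remote_get, unique))  # reads overlap inside the pool
    return [values[k] for k in keys]


batch = ["k1", "k2", "k1", "k3", "k2", "k4"]
with ThreadPoolExecutor(max_workers=4) as pool:
    start = time.perf_counter()
    seq = process_sequential(batch)
    mid = time.perf_counter()
    par = process_batch_async(batch, pool)
    end = time.perf_counter()

print(seq == par)
print(f"sequential: {mid - start:.3f}s, batched async: {end - mid:.3f}s")
```

Both paths return the same values in record order; the batched path issues fewer reads and overlaps them, so it spends less wall-clock time waiting on I/O.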

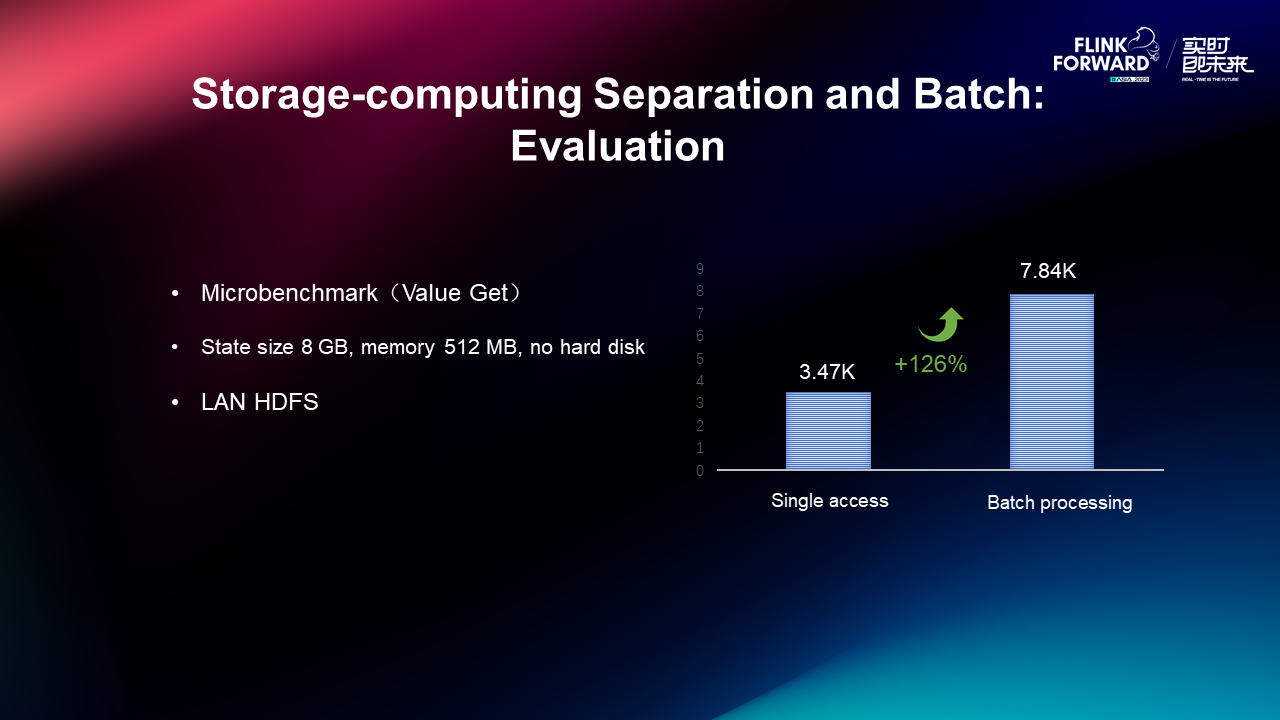

We have built a simple state backend that supports batch asynchronous interfaces and run a simple test with the community micro-benchmark. So far, only the value get scenario has been covered. The comparison shows that batch execution combined with asynchronous I/O brings a large improvement in the storage-computing separation scenario.

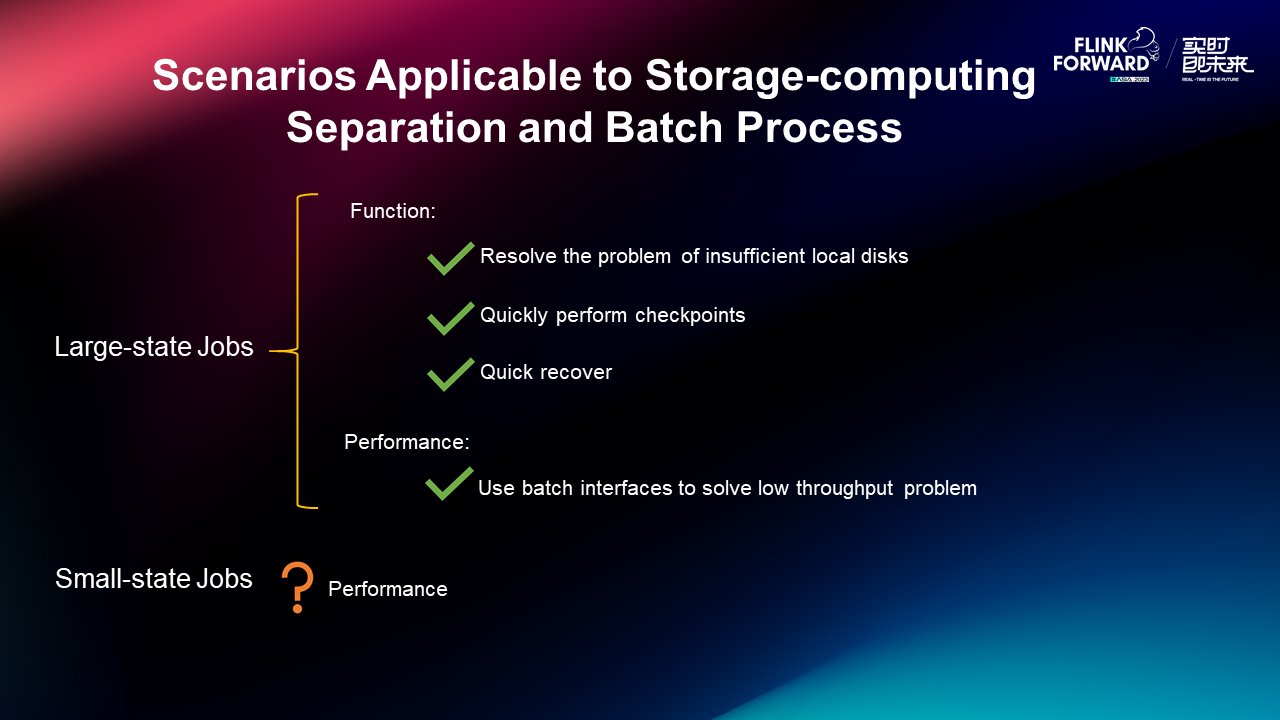

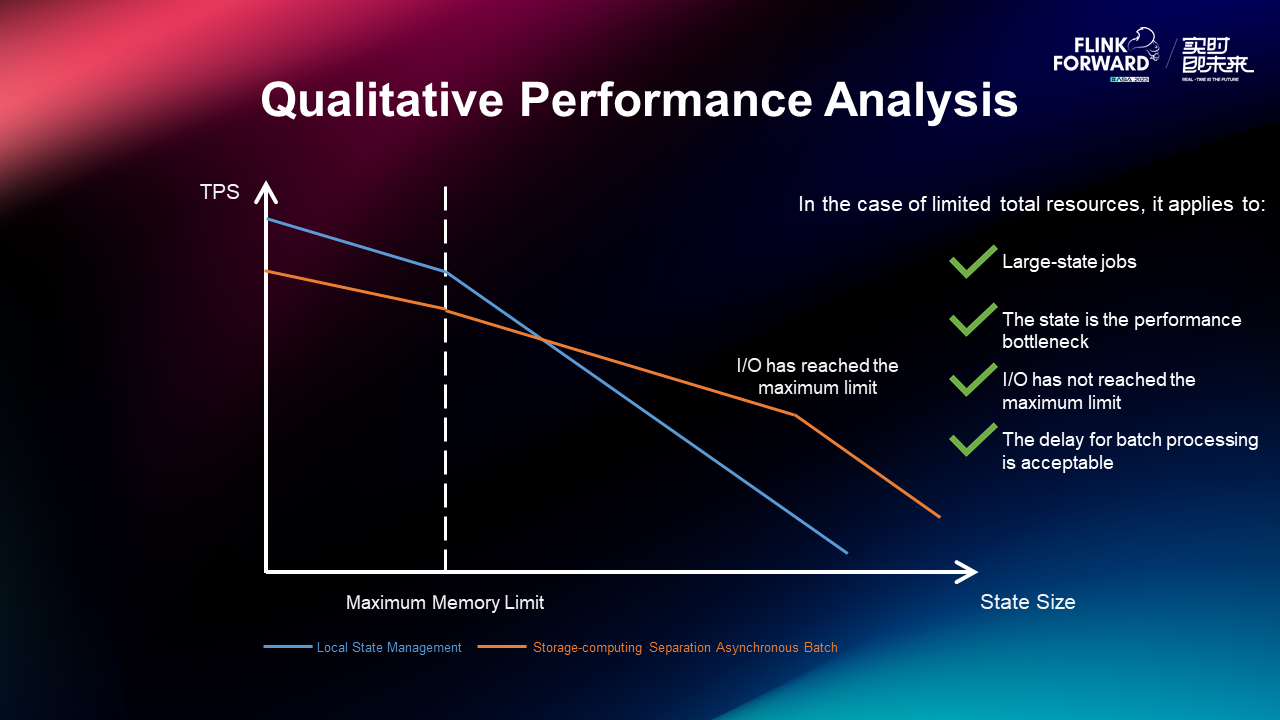

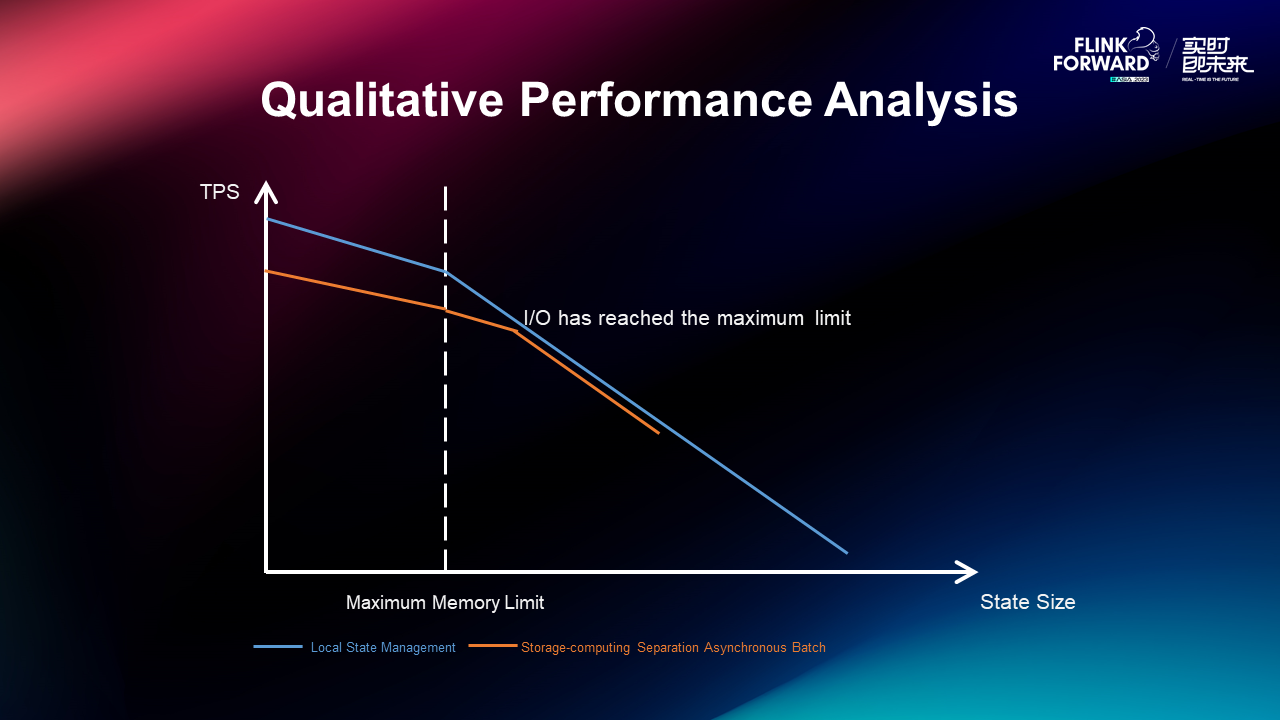

The batch execution of state access under storage-computing separation explored above has its own application scenarios. For large-state jobs, storage-computing separation solves the problems mentioned at the beginning, and batch interfaces are used to make up for its lower performance.

The performance source of this solution is that state access is parallelized within a batch, which reduces the time of state access and improves the CPU utilization of compute nodes. This solution is useful for improving the performance of large-state jobs.

In the case of small-state jobs, state access can be achieved very quickly. The improvement in separating state access from task threads is small, and the overhead of interaction between threads is introduced. Therefore, in small-state scenarios, this batch asynchronous state access solution may not be as good as the original local state management solution.

As the state size gradually increases, the state I/O overhead gradually increases and becomes a bottleneck. Asynchronous I/O amortizes the time consumed by each I/O. As a result, the red line (asynchronous I/O) drops more slowly, while the blue line (local state management) drops faster. Beyond a certain state size, the asynchronous I/O solution performs significantly better. This solution consumes I/O bandwidth, however. If state access has reached the I/O limit, asynchronous I/O cannot reduce the total I/O time, so its slope becomes similar to that of local state management.

If the I/O limit is reached when the state is still very small, parallel execution will not be effective, as shown in the preceding figure.

To summarize, batching and asynchronously performing state access have advantages under the following conditions:

• State access is the bottleneck in jobs with large states.

• I/O has not reached the bottleneck (not at full capacity).

• The business can tolerate the delay (ranging from sub-second to seconds) for batch processing.

In most storage-computing separation scenarios, I/O performance is provided by storage clusters, which can sustain a relatively large volume of I/O and can be scaled flexibly. In general, the I/O bottleneck is not reached early, so asynchronous I/O can be used effectively to optimize storage-computing separation scenarios.

The preceding content highlights our efforts and progress in storage-computing separation. We aim to use the release of Flink 2.0 to contribute this work to the community. This includes support for both a purely remote storage-computing separation solution and a state backend with a hybrid cache. Additionally, we are exploring the introduction of asynchronous I/O to ensure high-performance data processing in the storage-computing separation mode.

