
By Yi Li, Senior Technical Expert of Alibaba Cloud and Director of Alibaba Container Service

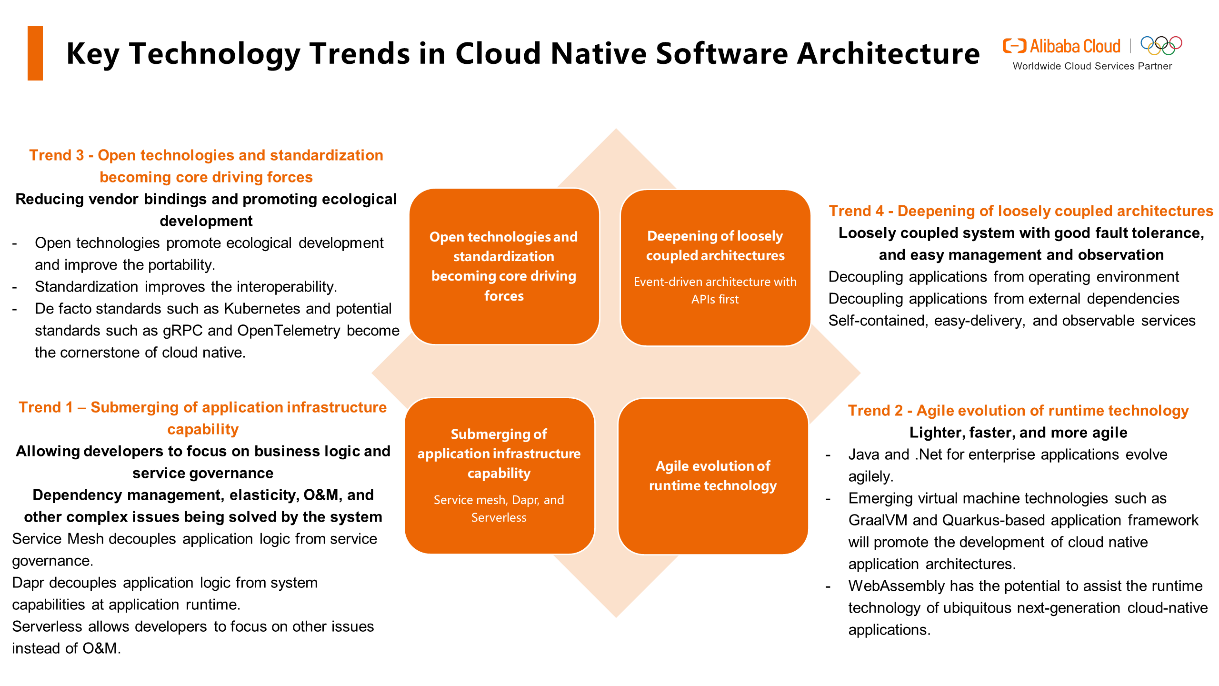

Cloud native computing generally includes the following three dimensions: cloud native infrastructure, software architecture, and delivery and O&M systems. This article will focus on software architecture.

"Software architecture refers to the fundamental structures of a software system and the discipline of creating such structures and systems." — From Wikipedia.

In my understanding, the main goal of software architecture is to solve these challenges:

The cloud native application architecture aims to build a loosely coupled, elastic, and resilient distributed application architecture. This allows us to better adapt to the needs of changing and developing business and ensure system stability. In this article, I'd like to share my observations and reflections in this field.

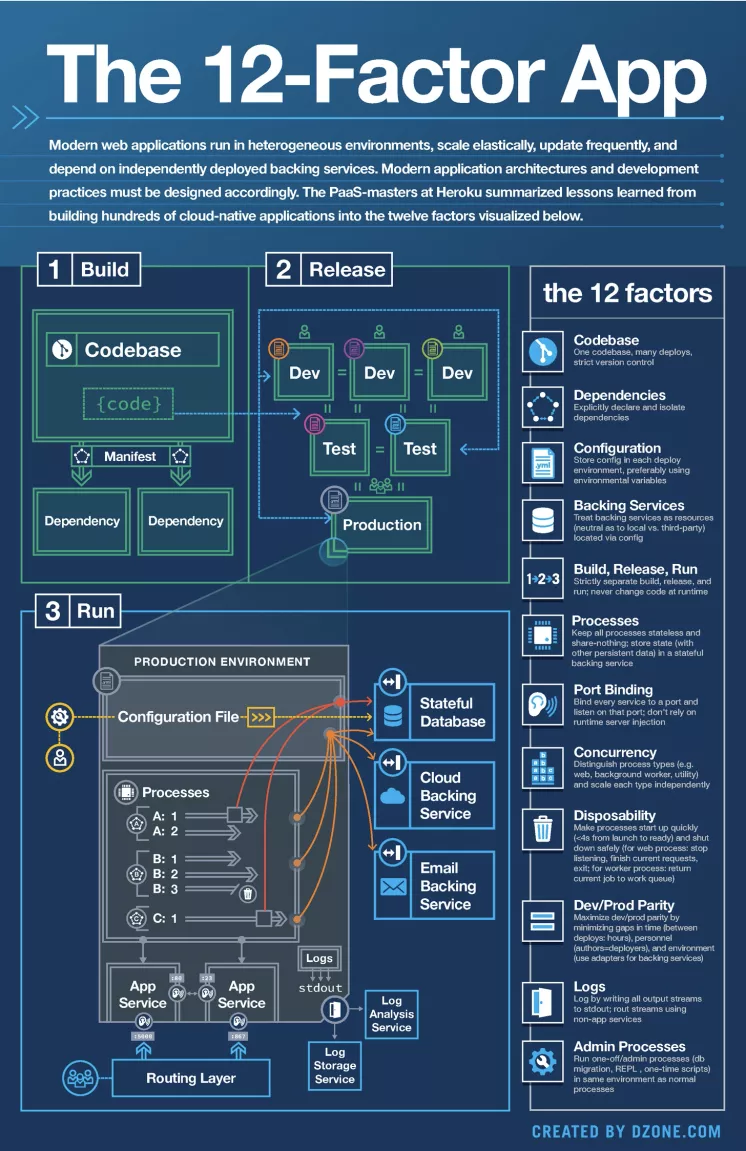

In 2012, Adam Wiggins, a co-founder of Heroku, published "The Twelve-Factor App". It defines basic principles and a methodology for building elegant Internet applications, and it has influenced many microservices application architectures. The Twelve-Factor App focuses on the healthy growth of applications, effective collaboration among developers, and avoiding the decay of software architecture. It is still worth studying today.

Picture source: https://12factor.net/zh_cn/

The Twelve-Factor App provides good architecture guides and helps us:

The core idea of microservices is that each service in the system can be independently developed, deployed, and upgraded, and that each service is loosely coupled. The cloud native application architecture further emphasizes loose coupling in the architecture to reduce dependency between services.

In object-oriented software architectures, the most important thing is to define objects and their interface contracts. The SOLID principles are the most widely recognized design principles.

Together, these five principles are called the SOLID principles. They help us build application architectures with high cohesion, low coupling, and flexibility. In the distributed microservices application architecture, API First design is the extension of Contract First.

APIs should be designed first. User requirements are complex and changeable. For example, the presentation and operation flow of an application may differ from one client to another, such as a mobile app. However, the conceptual model and service interactions of the business logic are relatively stable. APIs are therefore more stable, while their implementations can be iterated on and changed continuously. A well-defined API helps ensure the quality of the application system.

APIs should be declarative and describable or self-describing. With standardized descriptions, developers can easily communicate about, understand, and verify APIs, which simplifies collaborative development. APIs should support concurrent development by service consumers and providers, which shortens the development cycle. They should also support implementations in different technology stacks. For example, for the same API, developers can use Java to implement the service, JavaScript to support the front-end application, and Golang to make service calls in server-side applications. This allows development teams to flexibly choose the right technology based on their own technology stacks and system requirements.

APIs should come with SLAs. As the integration interface between services, APIs are closely related to the stability of the system. The SLA should be considered as part of API design rather than added after deployment. Stability risks are ubiquitous in distributed systems. With the API-first design approach, we can carry out stability architecture design and capacity planning for each independent service. We can also perform fault injection and stability tests to eliminate systemic stability risks.
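As a minimal sketch of the contract-first idea described above, the following Python snippet declares a contract with `typing.Protocol` and lets a consumer be written against an in-memory stub before any real provider exists. The names `OrderApi`, `Order`, `InMemoryOrderService`, and `order_summary` are hypothetical and only serve as an illustration.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Order:
    order_id: str
    amount_cents: int


class OrderApi(Protocol):
    """The contract: consumers depend only on this interface."""

    def get_order(self, order_id: str) -> Order: ...


class InMemoryOrderService:
    """A stub provider, so consumers can be built before the real service exists."""

    def __init__(self) -> None:
        self._orders = {"o-1": Order("o-1", 1999)}

    def get_order(self, order_id: str) -> Order:
        return self._orders[order_id]


def order_summary(api: OrderApi, order_id: str) -> str:
    """Consumer code written against the contract, not against an implementation."""
    order = api.get_order(order_id)
    return f"{order.order_id}: {order.amount_cents / 100:.2f}"
```

Because `order_summary` only knows the contract, the stub can later be swapped for a real remote client without changing the consumer.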

In the API field, the most important trend is the rise of standardization technology. gRPC is an open-source, high-performance, platform-independent, general-purpose RPC framework developed by Google. Its design consists of multiple layers. The data exchange format is based on Protobuf (Protocol Buffers), which provides excellent serialization and deserialization efficiency and supports multiple development languages. As its transport layer protocol, gRPC uses HTTP/2, which greatly improves transport efficiency compared with HTTP/1.1. As a mature open standard, HTTP/2 also has various security and flow control capabilities as well as good interoperability. In addition to calling server-side services, gRPC supports interactions between back-end services and browsers, mobile apps, and IoT devices. gRPC already offers complete RPC functionality and also provides an extension mechanism to support new functions.

In the cloud native trend, the demand for interoperability across platforms, vendors, and environments will inevitably lead to RPC technology based on open standards. In line with this trend, gRPC has been widely adopted. In the field of microservices, Dubbo 3.0 announced its support for the gRPC protocol. In the future, we will see more microservices architectures built on the gRPC protocol with support for multiple programming languages. In addition, gRPC has become an excellent choice in the data service field. For more information, read this article on Alluxio.

In addition, in the API field, open standards such as the OpenAPI Specification (formerly the Swagger Specification) and GraphQL deserve attention. You can choose one of them based on your business needs. This article will not go into the details of these standards.

Before talking about Event Driven Architecture (EDA), let's first understand what an event is. An event is a record of something that has occurred or of a status change. Events are immutable, which means that they cannot be changed or deleted, and they are ordered by creation time. Interested parties can be notified of these status changes by subscribing to published events, and then apply their own business logic to act on the information obtained.

EDA is an architecture that builds loosely coupled microservices systems. Microservices interact with each other through asynchronous event communication.

EDA enables complete decoupling between event producers and consumers. Producers do not need to care how an event is consumed, and consumers do not need to care how the event is produced. We can dynamically add more consumers without affecting producers. By introducing message-oriented middleware, we can dynamically route and transform events. This also means that event producers and consumers are decoupled in time. Even if messages cannot be processed in time due to application downtime, the application can continue to fetch and process these events from the message queue after recovery. Such a loosely coupled architecture provides greater agility, flexibility, and robustness for the software architecture.

Another important advantage of EDA is improved system scalability. Event producers are not blocked while waiting for events to be consumed. The publish-subscribe pattern also allows multiple consumers to process events in parallel.
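The publish-subscribe relationship described above can be sketched with a small in-memory event bus. This is only an illustration under simplifying assumptions: the `EventBus` class, the topic name `order.created`, and the synchronous dispatch are hypothetical, whereas real message-oriented middleware delivers events asynchronously and durably.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List


@dataclass(frozen=True)  # frozen: an event is an immutable record
class Event:
    topic: str
    payload: str


class EventBus:
    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Event], None]]] = defaultdict(list)
        self.log: List[Event] = []  # append-only, ordered by publication time

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, event: Event) -> None:
        # The producer only knows the bus, never the consumers.
        self.log.append(event)
        for handler in self._subscribers[event.topic]:
            handler(event)


# Two independent consumers are added without touching the producer.
bus = EventBus()
emails: List[str] = []
audit: List[str] = []
bus.subscribe("order.created", lambda e: emails.append(e.payload))
bus.subscribe("order.created", lambda e: audit.append(e.payload))
bus.publish(Event("order.created", "o-1"))
```

Adding a third consumer is another `subscribe` call; the code that publishes the event stays unchanged.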

In addition, EDA integrates naturally with Function as a Service (FaaS). Event-triggered functions execute business logic, and glue code that integrates multiple services can be written as functions. Thus, event-driven applications can be constructed easily and efficiently.

However, EDA still faces many challenges as follows:

With these advantages, EDA has promising prospects in scenarios such as Internet application architectures, data-driven and intelligent business, and IoT. The details of EDA will not be discussed here.

In the cloud native software architecture, in addition to focusing on how the software is built, we also need to design and implement the software so that it can be delivered and operated well.

The Twelve-Factor App proposed the idea of decoupling applications from their operating environments. The emergence of Docker containers further strengthened this idea. A container is a lightweight virtualization technology for applications. Docker containers share the operating system kernel with each other and can start within seconds. A Docker image is a self-contained application packaging format. It packages the application and its related files, such as system libraries and configuration files, to ensure consistent deployment in different environments.

Containers can serve as the foundation for Immutable Infrastructure to enhance the stability of application delivery. Immutable Infrastructure was proposed by Chad Fowler in 2013. In this model, any infrastructure instance, including software and hardware such as servers and containers, becomes read-only once it is created. In other words, no modification can be made to it. To modify or upgrade an instance, developers create a new instance to replace it. This model reduces the burden of configuration management, ensures that system configuration changes and upgrades can be executed reliably and repeatedly, and avoids troublesome configuration drift. Immutable Infrastructure makes it easy to eliminate differences between deployment environments, enabling smoother continuous integration and deployment. It also supports better version management and allows quick rollback in case of deployment errors.

As a distributed orchestration and scheduling system for containers, Kubernetes further improves the portability of containerized applications. Through abstractions such as the LoadBalancer Service, Ingress, CNI, and CSI, Kubernetes shields applications from the implementation differences of the underlying infrastructure, so that they can be migrated flexibly. This allows us to dynamically migrate workloads among data centers, edge computing, and cloud environments.

In the application architecture, application logic should not be coupled with static environment information, such as IP and MAC addresses. In the microservices architecture, ZooKeeper and Nacos can be used for service registration and discovery. In Kubernetes, the dependence on the IP addresses of service endpoints can be reduced through Service and Service Mesh. In addition, application state should be persisted through distributed storage or cloud services, which can greatly improve the scalability and self-recovery capabilities of the application architecture.
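As a small sketch of keeping static environment information out of application logic, the snippet below resolves a dependency's endpoint from configuration and falls back to a stable service name instead of an IP address. The variable name `ORDERS_SERVICE_URL`, the service name `orders`, and the port are hypothetical.

```python
import os
from typing import Mapping


def orders_endpoint(env: Mapping[str, str] = os.environ) -> str:
    """Resolve the endpoint from configuration; never hard-code an IP address.

    The fallback uses a service name, which a platform such as Kubernetes
    can resolve through its cluster DNS when a Service with that name exists.
    """
    return env.get("ORDERS_SERVICE_URL", "http://orders:8080")
```

The same build can then run unchanged in development, staging, and production, with only the injected configuration differing.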

Observability is one of the biggest challenges for distributed systems. Observability can help us understand the current state of the system, and can be the basis for application self-recovery, elastic scaling, and intelligent O&M.

In the cloud-native architecture, self-contained microservices applications should be observable so that they can be easily managed and inspected by the system. First, an application should expose its own health status.

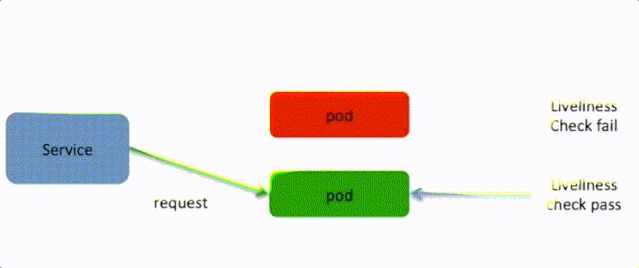

Kubernetes provides the liveness probe to check whether an application is still healthy, through TCP, HTTP, or a command. For an HTTP probe, Kubernetes regularly accesses the configured address. If the return code is not greater than or equal to 200 and less than 400, the container is considered unhealthy, and it is killed and restarted.

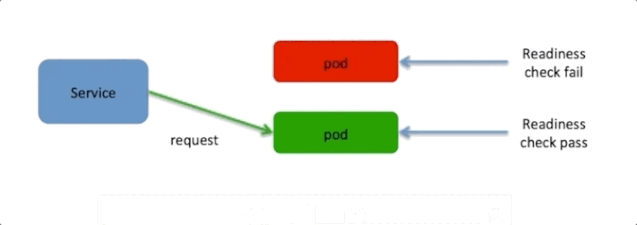

For slow-starting applications, Kubernetes supports a readiness probe provided by the business container, which prevents traffic from being routed to the application before it has finished starting. For an HTTP probe, Kubernetes regularly accesses the configured address. If the return code is not greater than or equal to 200 and less than 400, the container is considered unable to provide services, and requests are not routed to it.
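The two kinds of probes can be sketched from the application side with Python's standard library. The paths `/healthz` and `/ready`, the `AppState` class, and the port are assumptions chosen for illustration; the actual paths are whatever the probe configuration of the container points to.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class AppState:
    """Health flags that the application updates as it starts up or degrades."""

    def __init__(self) -> None:
        self.alive = True    # False signals that the container should be restarted
        self.ready = False   # False keeps traffic away until startup has finished


def probe_status(path: str, state: AppState) -> int:
    """Map a probe path to the HTTP status code the application reports."""
    if path == "/healthz":
        return 200 if state.alive else 500
    if path == "/ready":
        return 200 if state.ready else 503
    return 404


def probe_succeeded(status: int) -> bool:
    """An HTTP probe succeeds for any status code >= 200 and < 400."""
    return 200 <= status < 400


def make_handler(state: AppState):
    class ProbeHandler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            self.send_response(probe_status(self.path, state))
            self.end_headers()

    return ProbeHandler


def serve(state: AppState, port: int = 8080) -> None:
    """Blocking call; run it in a background thread next to the real application."""
    HTTPServer(("", port), make_handler(state)).serve_forever()
```

A slow-starting application would set `state.ready = True` only after its caches and connections are initialized, so the liveness check passes from the start while the readiness check holds traffic back.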

Meanwhile, newer microservices frameworks have built-in support for these probes. For example, Spring Boot 2.3 introduced two actuator endpoints: /actuator/health/liveness and /actuator/health/readiness. The former is used as the liveness probe, whereas the latter is used as the readiness probe. Business applications can read, subscribe to, and modify the Liveness State and Readiness State through the application event mechanism of Spring. This allows Kubernetes to perform more accurate self-recovery and traffic management.

For more information, see this article

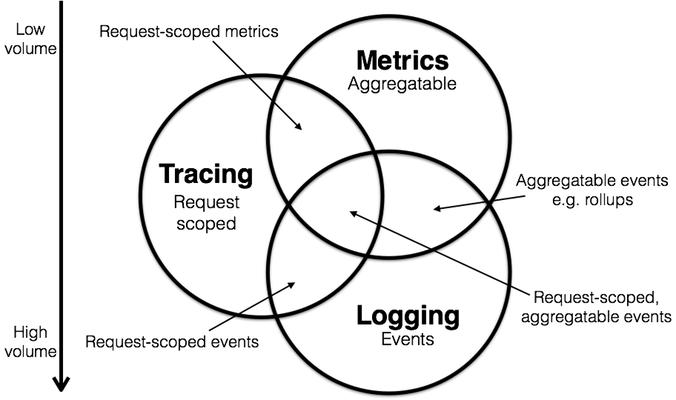

In addition, application observability consists of three key capabilities: logging, metrics, and tracing.
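As a rough sketch of how the three capabilities relate, the decorator below emits a log line, updates a counter, and records a span for each call, all tied together by a trace ID. The names `observed`, `metrics`, `spans`, and `place_order` are hypothetical, and everything is kept in memory; a production system would export this data through tools such as Prometheus or OpenTelemetry instead.

```python
import logging
import time
import uuid
from collections import Counter
from typing import Callable, Dict, List

logger = logging.getLogger("orders")   # logging: discrete, human-readable records
metrics: Counter = Counter()           # metrics: aggregated numbers
spans: List[Dict[str, object]] = []    # tracing: one timed record per unit of work


def observed(name: str) -> Callable:
    def decorator(func: Callable) -> Callable:
        def wrapper(*args, trace_id: str = "", **kwargs):
            # Reuse the caller's trace ID if one was passed, else start a new trace.
            trace_id = trace_id or uuid.uuid4().hex
            start = time.perf_counter()
            try:
                result = func(*args, **kwargs)
                metrics[f"{name}.success"] += 1
                return result
            except Exception:
                metrics[f"{name}.error"] += 1
                raise
            finally:
                elapsed = time.perf_counter() - start
                spans.append({"trace_id": trace_id, "name": name, "seconds": elapsed})
                logger.info("op=%s trace_id=%s seconds=%.6f", name, trace_id, elapsed)
        return wrapper
    return decorator


@observed("place_order")
def place_order(order_id: str) -> str:
    return f"accepted {order_id}"
```

Calling `place_order("o-1", trace_id="t-1")` produces one log line, one counter increment, and one span that all share the trace ID `t-1`, which is what makes the three data sources correlatable.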

In a distributed system, stability, performance, and security problems can occur anywhere. These problems require end-to-end observability that covers different layers, such as the infrastructure layer, PaaS layer, and application layer. In addition, observability data from different systems should be correlated, aggregated, queried, and analyzed together.

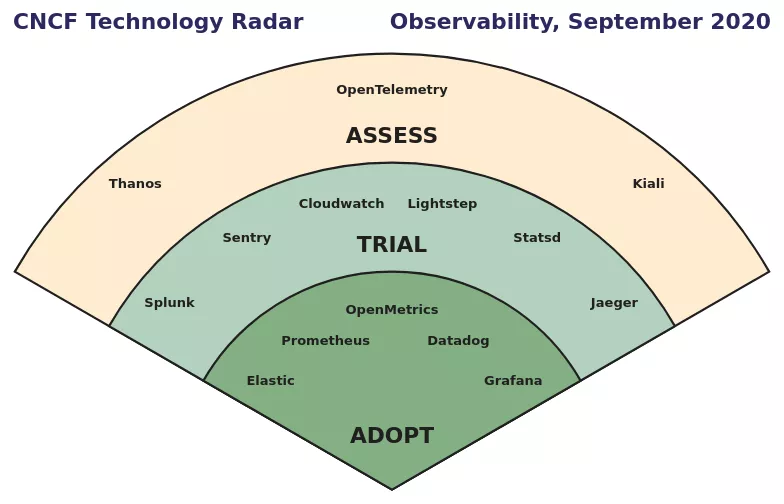

The observability field of software architecture has broad prospects, and many technological innovations have emerged in it. In September 2020, CNCF released its Technology Radar for cloud native observability.

In the Technology Radar, Prometheus has become one of the open-source monitoring tools that enterprises prefer for cloud native applications. Prometheus has built an active community of developers and users. In the Spring Boot application architecture, adding the micrometer-registry-prometheus dependency allows Prometheus to collect application monitoring metrics. For more information, see this documentation.

In the field of distributed tracing, OpenTracing is an open-source project of CNCF. It is a technology-neutral standard for distributed tracing. It provides a unified interface that makes it convenient for developers to integrate one or more distributed tracing implementations into their own services. Jaeger is an open-source distributed tracing system from Uber. It is compatible with the OpenTracing standard and has been accepted as a CNCF project. In addition, OpenTelemetry is a potential standard that tries to merge OpenTracing and OpenCensus into a unified technical standard.

Many legacy business systems are not fully observable. The emerging Service Mesh technology offers a new way to improve the observability of these systems. By intercepting requests in the data plane, the mesh can obtain performance metrics for inter-service calls. In addition, the calling service only needs to forward the relevant message headers, and complete tracing information can then be obtained in the Service Mesh. This greatly simplifies building observability and allows existing applications to integrate into cloud-native observability systems at a low cost.

Alibaba Cloud offers a wide range of observability capabilities. Among them, XTrace supports the OpenTracing and OpenTelemetry standards. Application Real-time Monitoring Service (ARMS) provides a managed Prometheus service, which frees developers from the high availability and capacity challenges of the monitoring system. Observability is the foundation of Algorithmic IT Operations (AIOps) and will play an increasingly important role in enterprises' IT application architectures in the future.

"Murphy's Law" says that "Anything that can go wrong will go wrong". A distributed system may be affected by hardware and software factors, or be damaged by human actions from inside or outside. Cloud computing provides infrastructure with higher SLAs and better security than self-built data centers. However, we still need to pay close attention to system availability and potential "Black Swan" risks during application architecture design.

To achieve systematic stability, developers need to consider several aspects as a whole, such as software architecture, the O&M system, and organizational guarantees. In terms of architecture, the Alibaba economy has rich experience in defensive design, rate limiting and degradation, and fault isolation. It has also contributed excellent open-source projects such as Sentinel and ChaosBlade to the community.

In this article, I will talk about several aspects that can be further discussed in the cloud native era. I summarized my reflections as: "Failures can and will happen, anytime, anywhere. Fail fast, fail small, fail often and recover quickly."

First, "Failures can and will happen". Therefore, we need to make servers more replaceable. There is a very popular metaphor in the industry: "Pets vs. Cattle". Should we treat servers like pets, caring for them carefully to avoid downtime and rescuing them at all costs? Or should we treat servers like cattle, which can be abandoned and replaced when problems occur? The cloud native architecture suggests that each server and component can afford to fail, provided that the failure does not affect the overall system and that servers and components are capable of self-recovery. This principle is based on decoupling application configuration and persistent state from the specific operating environment. The automated O&M system of Kubernetes makes server replacement simpler.

Second, "Fail fast, fail small, and recover quickly". "Fail fast" is a very counter-intuitive design principle. Because failures cannot be avoided, the earlier problems are exposed, the easier it is to recover and the fewer problems reach the production environment. After adopting the fail-fast policy, our focus shifts from trying to eliminate every problem in the system to quickly finding and gracefully handling failures. In the R&D process, integration tests can be used to detect application problems as early as possible. At the application layer, patterns such as Circuit Breaker can be used to prevent local faults in a dependent service from causing system-wide problems. In addition, Kubernetes health checks and observability can detect application faults. The circuit breaker function of the Service Mesh moves fault discovery, traffic switching, and fast self-recovery out of the application implementation, so that they are guaranteed by system capabilities. The essence of "Fail small" is to limit the blast radius of failures. This principle requires constant attention in architecture design and service design.
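The Circuit Breaker pattern mentioned above can be sketched in a few lines. This is a simplified, single-threaded illustration, not the implementation used by Sentinel or any Service Mesh; the class name, the thresholds, and the injectable `clock` parameter are assumptions made for the example.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected without reaching the dependency."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                # Open: fail fast instead of waiting on a broken dependency.
                raise CircuitOpenError("dependency unavailable, failing fast")
            # Half-open: the cool-down has elapsed, so let a trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self.max_failures:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            raise
        # A success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open, callers get an immediate `CircuitOpenError` and can fall back to a degraded response, which keeps a local fault from tying up threads and cascading through the system.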

Third, "Fail often". Chaos engineering is the practice of periodically introducing faults into the production environment to verify how effectively the system defends against unexpected failures. Netflix introduced chaos engineering to address the stability challenges of its microservices architecture, and the practice is now widely used by many Internet companies. In the cloud native era, more new approaches are available. For example, Kubernetes allows us to easily inject faults, shut down pods, and simulate application failure and self-recovery. With Service Mesh, we can perform more complex fault injection on inter-service traffic. For example, Istio can simulate fault scenarios such as slow responses and failed service calls, helping us verify the coupling between services and improve the stability of systems.
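The two fault scenarios named above, slow responses and failed calls, can be imitated at the function level with a small wrapper. This is only a sketch for local experiments; `with_faults`, `InjectedFault`, and the parameters are hypothetical and unrelated to the Istio or ChaosBlade APIs, which inject faults at the network or platform level.

```python
import random
import time
from typing import Callable, Optional


class InjectedFault(RuntimeError):
    """Marks a failure introduced on purpose by the experiment."""


def with_faults(func: Callable, error_rate: float = 0.0, delay_seconds: float = 0.0,
                rng: Optional[random.Random] = None,
                sleep: Callable[[float], None] = time.sleep) -> Callable:
    """Wrap func so that calls are delayed and a fraction of them fail."""
    chooser = rng if rng is not None else random.Random()

    def wrapper(*args, **kwargs):
        if delay_seconds > 0:
            sleep(delay_seconds)              # simulate a slow response
        if chooser.random() < error_rate:     # simulate a failed service call
            raise InjectedFault("injected call failure")
        return func(*args, **kwargs)

    return wrapper
```

Wrapping a client call with, for example, `error_rate=0.1` shows quickly whether callers have timeouts, retries, and fallbacks in place, or whether one unreliable dependency takes the whole request down.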

I will discuss stability in architecture delivery and O&M further in the next article.

The cloud native software architecture aims to drive developers to focus on business logic and enable the platform to handle system complexity. Cloud native computing redefines the boundary between application and application infrastructure, further improving development efficiency and reducing the complexity of distributed application development.

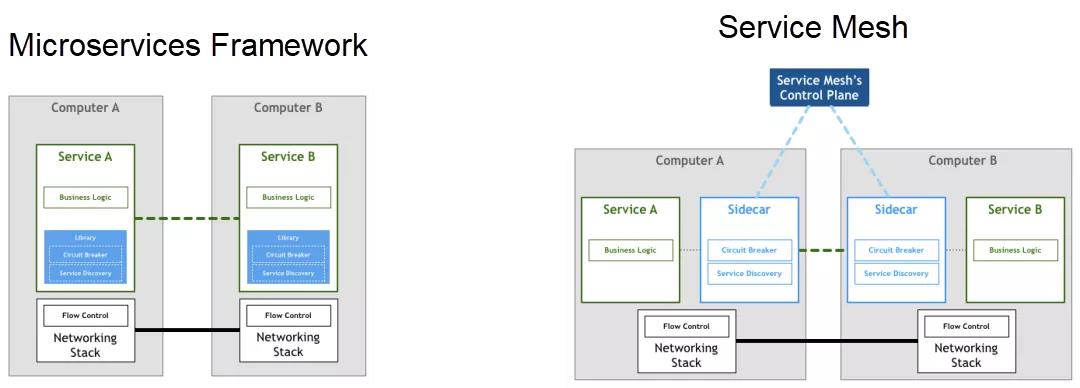

In the microservices era, application frameworks such as Spring Cloud and Apache Dubbo have achieved great success. Through code libraries, they provide service communication, service discovery, and service governance capabilities, such as traffic shifting, circuit breaking, rate limiting, and end-to-end tracing. These code libraries are built into applications and are released and maintained along with them. Therefore, this architecture has some unavoidable challenges:

Image source: https://philcalcado.com/2017/08/03/pattern_service_mesh.html

To solve these challenges, the community proposed the Service Mesh architecture. It decouples business logic from service governance capabilities. By sinking service governance into the infrastructure, it can be deployed independently on both the service consumer and provider sides. In this way, decentralization is achieved, and the scalability of the system is guaranteed. Because service governance is decoupled from business logic, the two can evolve independently without interfering with each other, which makes the evolution of the overall architecture more flexible. At the same time, the Service Mesh architecture is less intrusive to business logic and reduces the complexity of polyglot support.

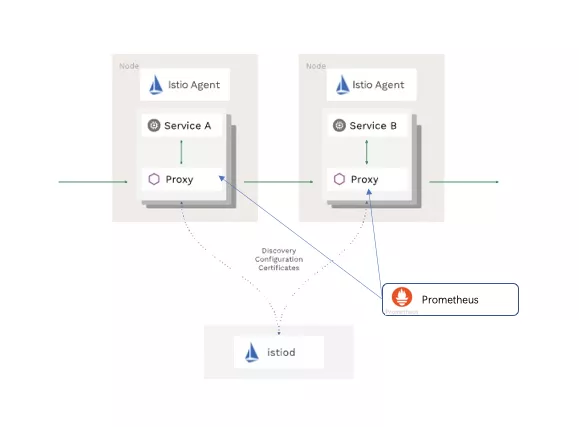

The Istio project, led by Google, IBM, and Lyft, is a typical implementation of the Service Mesh architecture and has become a phenomenally popular project.

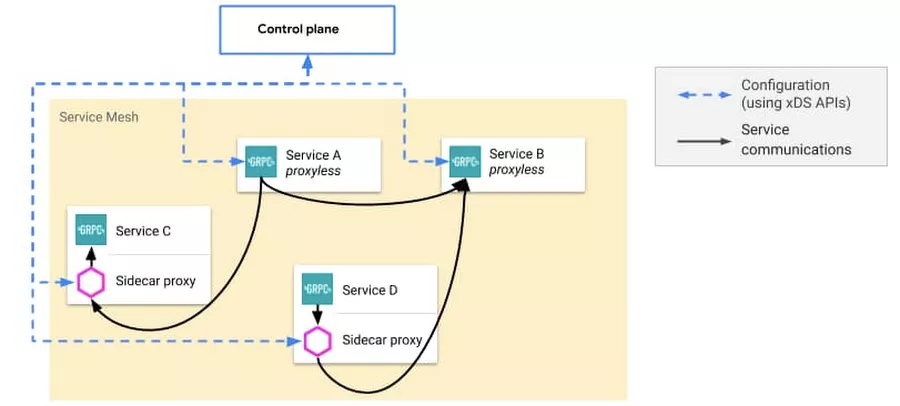

The preceding picture shows the architecture of Istio, which is logically divided into the data plane and the control plane. The data plane is responsible for data communication between services. Each application is paired with the intelligent proxy Envoy, which is deployed in sidecar mode. Envoy intercepts and forwards the network traffic of the application, collects telemetry data, and executes service governance policies. In the latest architecture, istiod, as the control plane of Istio, is responsible for configuration management, configuration delivery, and certificate management. Istio provides a series of general service governance capabilities, such as service discovery, load balancing, progressive delivery (gray release), chaos injection and analysis, end-to-end tracing, and zero-trust network security. These capabilities can be orchestrated into the IT architectures and release systems of upper-layer business systems.

The Service Mesh separates the data plane from the control plane, which makes it an elegant architecture. Enterprise customers have diverse requirements for the data plane, such as support for various protocols like Dubbo, customized security policies, and observability integration. The capabilities of the control plane also change rapidly, including basic service governance, observability, security systems, and stability assurance. However, the APIs between the control plane and the data plane are relatively stable.

CNCF established the Universal Data Plane API Working Group (UDPA-WG) to develop standard APIs for the data plane. The Universal Data Plane API (UDPA) aims to provide standardized and implementation-independent APIs for L4 and L7 data plane configuration, similar to the role that OpenFlow plays for L2, L3, and L4 in SDN. UDPA covers service discovery, load balancing, route discovery, listener configuration, secret discovery, load reporting, and health check delegation.

UDPA is being developed incrementally on the basis of the existing Envoy xDS APIs. Currently, in addition to Envoy, UDPA supports client-side load balancing, such as gRPC-LB, as well as more data plane proxies, hardware load balancers, and mobile apps.

We know that Service Mesh is not a silver bullet. Its architecture adds a service proxy in exchange for architectural flexibility and system evolvability. However, it also increases deployment complexity (sidecar management) and incurs a performance cost (two additional forwarding hops). The standardization and development of UDPA will bring new changes to the Service Mesh architecture.

The latest version of gRPC has started to support UDPA-based load balancing.

The concept of "Proxyless Service Mesh" has been created. The following figure shows the diagram of the concept:

As shown above, gRPC applications obtain service governance policies directly from the control plane, and they can communicate with each other directly without any additional proxy. This reflects the ambition of open Service Mesh technology to evolve into a cross-language service governance framework that balances standardization, flexibility, and operational efficiency. Google's managed Service Mesh products have taken the lead in supporting "proxyless" gRPC applications.

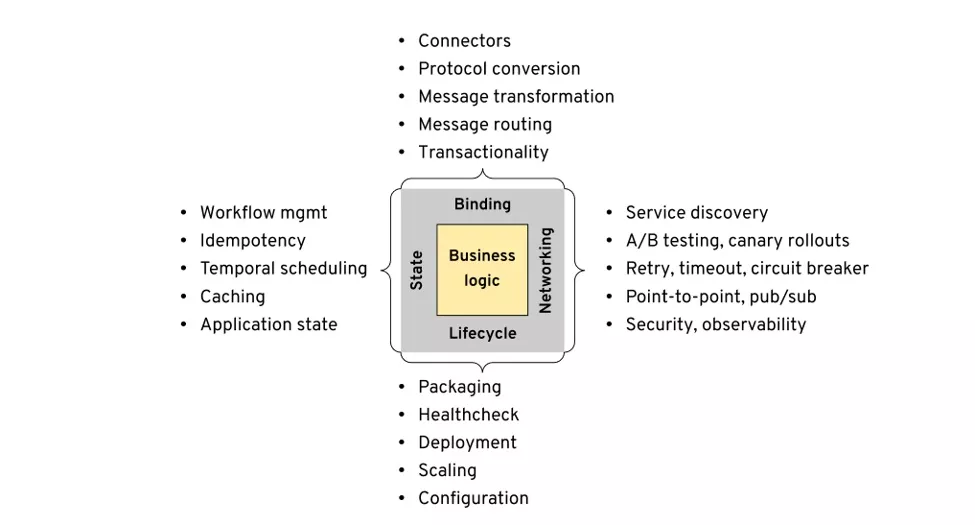

For distributed applications, Bilgin Ibryam analyzes and summarizes four typical types of requirements in the article Multi-Runtime Microservices Architecture.

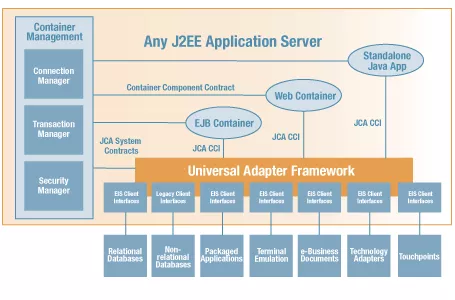

Those who are familiar with traditional enterprise architectures may find that the traditional Java EE (now renamed as Jakarta EE) application server also aims to solve similar problems. The architecture of a typical Java EE application server is shown in the following figure. The application lifecycle is managed by various application containers, such as Web container and EJB container. Application security management, transaction management, and connection management are all completed by the application server. The application can access external enterprise middleware, such as databases and message queues, through standard APIs, like JDBC and JMS.

Different external middleware can be plugged into the application server through the Java Connector Architecture specification. The application is dynamically bound to specific resources through JNDI at runtime. Java EE handles the cross-cutting concerns of the system in the application server. Thus, Java EE allows developers to focus only on the business logic of the application, which improves development efficiency. At the same time, the application's dependence on the environment and middleware is reduced. For example, ActiveMQ used in the development environment can be replaced by IBM MQ in the production environment without modifying the application logic.

In terms of architecture, Java EE is a large monolithic application platform. Its architecture evolves so slowly that it cannot keep up with changes in architecture technologies. Due to its complexity and inflexibility, Java EE has been forgotten by most developers since the rise of microservices.

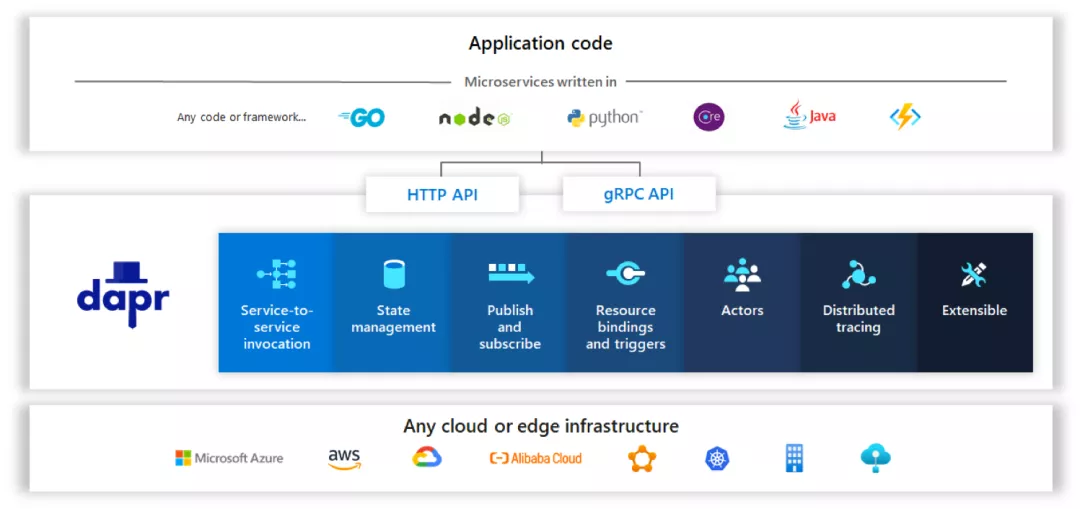

Microsoft offers a solution called Dapr. Dapr is an event-driven, portable runtime for building microservices applications. It supports deploying applications in the cloud or at the edge, and it supports a variety of programming languages and frameworks. Dapr adopts the sidecar pattern to separate and abstract some cross-cutting requirements from the application logic. This decouples the application from the runtime environment and external dependencies, including dependencies among services.

The preceding figure shows the functions and positioning of Dapr:

Although Dapr is similar to Service Mesh in architecture and service governance, the two are essentially quite different. For applications, Service Mesh is a transparent infrastructure, while Dapr provides abstractions for state management, service invocation, fault handling, resource bindings, publish-subscribe, and distributed tracing. Applications call Dapr explicitly, through an SDK or through its HTTP or gRPC APIs. Dapr is a developer-oriented development framework.
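To illustrate what calling Dapr explicitly over HTTP can look like, the sketch below builds requests for the state management and service invocation endpoints of a local sidecar. It assumes a sidecar listening on Dapr's default HTTP port 3500 (or the port given in `DAPR_HTTP_PORT`); the state store name `statestore`, the application ID `orders`, and the helper function names are hypothetical. The requests are only constructed here, so nothing is sent unless they are passed to `urllib.request.urlopen`.

```python
import json
import os
import urllib.request

# Assumption: a Dapr sidecar runs next to the application on localhost.
DAPR_BASE = f"http://localhost:{os.environ.get('DAPR_HTTP_PORT', '3500')}/v1.0"


def save_state_request(store: str, key: str, value: object) -> urllib.request.Request:
    """Build a request for the state API: POST /v1.0/state/<store>."""
    body = json.dumps([{"key": key, "value": value}]).encode("utf-8")
    return urllib.request.Request(
        f"{DAPR_BASE}/state/{store}",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def invoke_request(app_id: str, method: str) -> urllib.request.Request:
    """Build a request for service invocation: /v1.0/invoke/<app-id>/method/<method>."""
    return urllib.request.Request(
        f"{DAPR_BASE}/invoke/{app_id}/method/{method}", method="GET"
    )
```

The application addresses a logical store name and a logical application ID only. Which database backs the store and where the target service runs are decided by the sidecar's configuration, not by the application code.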

Dapr is still very young and is undergoing rapid iteration, so there is still a long way to go before it is widely supported by developers and third-party vendors. However, Dapr has revealed a new direction for us. By separating concerns, developers can focus only on the business logic, while the concerns of distributed architectures sink into the infrastructure. Business logic should be decoupled from external services to avoid vendor lock-in. In addition, the application and the application runtime should be two independent processes that interact through standard APIs. Their lifecycles should be decoupled to facilitate upgrades and iterations.

In the previous article, I have introduced Serverless application infrastructures, such as FaaS and Serverless container. In this article, I'd like to discuss some thoughts on the architecture of the FaaS application.

The core principle of FaaS is that developers do not have to focus on infrastructure O&M, capacity planning, or scaling. They only pay for the cloud resources and services they use. In this way, developers are freed from infrastructure O&M and can reuse existing cloud service capabilities as much as possible. This helps developers reallocate development time to things that have more value and a direct impact on users, such as sound business logic, attractive user interfaces, and responsive and reliable APIs.

At the software architecture level, FaaS splits complex business logic into a series of fine-grained functions, and calls these functions in event-driven mode. Since functions are loosely coupled, they can be combined and coordinated together in the following two modes:

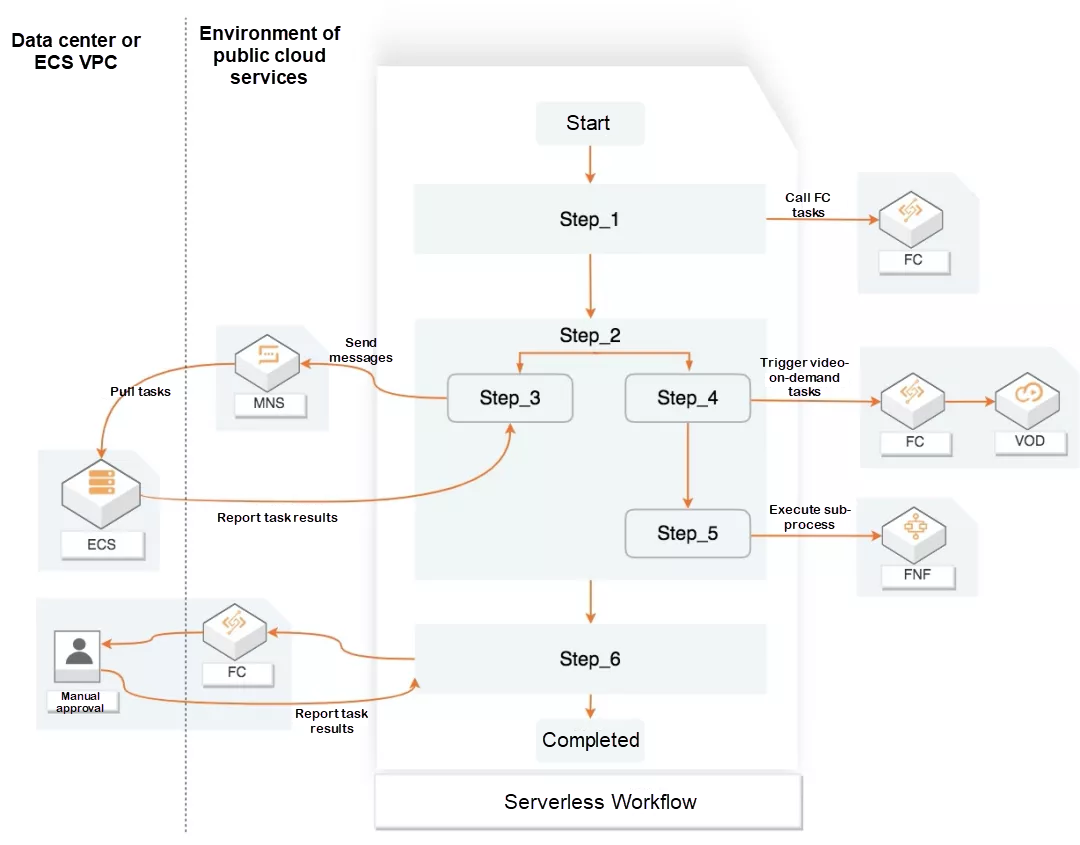

Workflow Orchestration: Take Alibaba Cloud Serverless Workflow as an example. Tasks can be orchestrated through a declarative business process. This simplifies the complex operations required to develop and run a business process, such as task coordination, state management, and fault handling. In this way, developers can focus only on business logic development.
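The orchestration mode can be sketched as a workflow that is declared as data and executed by a small engine that handles ordering, state passing, and retries. This is only an illustration of the idea, not the definition language of Alibaba Cloud Serverless Workflow; the task names, the `WORKFLOW` structure, and `run_workflow` are hypothetical.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Task = Callable[[Context], Context]


def reserve_stock(ctx: Context) -> Context:
    return {**ctx, "reserved": True}


def charge_payment(ctx: Context) -> Context:
    return {**ctx, "charged": ctx["amount"]}


def send_receipt(ctx: Context) -> Context:
    return {**ctx, "receipt_sent": True}


TASKS: Dict[str, Task] = {
    "reserve_stock": reserve_stock,
    "charge_payment": charge_payment,
    "send_receipt": send_receipt,
}

# The declarative part: the flow is data, not control flow hidden inside functions.
WORKFLOW: List[Dict[str, Any]] = [
    {"task": "reserve_stock", "retries": 0},
    {"task": "charge_payment", "retries": 2},
    {"task": "send_receipt", "retries": 1},
]


def run_workflow(steps: List[Dict[str, Any]], tasks: Dict[str, Task], ctx: Context) -> Context:
    """Run steps in order, passing state along and retrying failed steps."""
    for step in steps:
        attempts = step["retries"] + 1
        for attempt in range(attempts):
            try:
                ctx = tasks[step["task"]](ctx)
                break
            except Exception:
                if attempt == attempts - 1:
                    raise  # retries exhausted: surface the failure
    return ctx
```

Calling `run_workflow(WORKFLOW, TASKS, {"amount": 42})` runs the three tasks in sequence. The functions themselves contain no coordination or retry code, which is the point of explicit orchestration.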

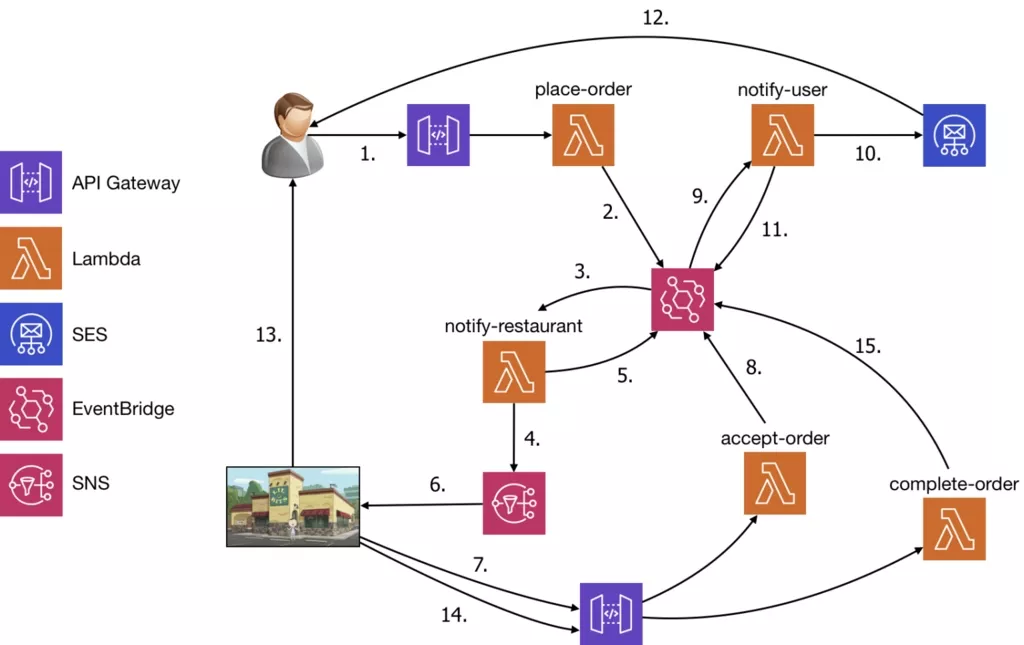

Event Choreography: Function services exchange messages through events. Message-oriented middleware, such as an event bus, forwards events and triggers function execution. The following is an example of a scenario where EventBridge connects several function-based pieces of business logic, including place-order, notify-user, notify-restaurant, accept-order, and complete-order. This mode is more flexible, and system performance is better. However, it lacks explicit modeling, and development and maintenance are relatively complicated.
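The choreography mode can be sketched with the five functions named above, each reacting to an event and optionally emitting follow-up events. The event names and the order in which the functions trigger each other are assumptions made for this example, and the in-memory `dispatch` loop merely stands in for an event bus such as EventBridge.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Tuple

Event = Tuple[str, str]                 # (event type, order ID)
Handler = Callable[[str], List[Event]]  # returns the follow-up events to emit

handlers: Dict[str, List[Handler]] = defaultdict(list)
trace: List[str] = []                   # records which function ran, in order


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Subscribe a function to an event type."""
    def register(func: Handler) -> Handler:
        handlers[event_type].append(func)
        return func
    return register


def place_order(order_id: str) -> List[Event]:
    trace.append(f"place-order {order_id}")
    return [("order.placed", order_id)]


@on("order.placed")
def notify_user(order_id: str) -> List[Event]:
    trace.append(f"notify-user {order_id}")
    return []


@on("order.placed")
def notify_restaurant(order_id: str) -> List[Event]:
    trace.append(f"notify-restaurant {order_id}")
    return [("restaurant.notified", order_id)]


@on("restaurant.notified")
def accept_order(order_id: str) -> List[Event]:
    trace.append(f"accept-order {order_id}")
    return [("order.accepted", order_id)]


@on("order.accepted")
def complete_order(order_id: str) -> List[Event]:
    trace.append(f"complete-order {order_id}")
    return []


def dispatch(initial: List[Event]) -> None:
    """Deliver events to subscribers until no follow-up events remain."""
    queue: Deque[Event] = deque(initial)
    while queue:
        event_type, order_id = queue.popleft()
        for handler in handlers[event_type]:
            queue.extend(handler(order_id))
```

No function calls another directly, and the overall flow exists only implicitly in the subscriptions. That is where the flexibility comes from, and also why the process is harder to see and maintain than an explicit workflow definition.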

Serverless has many advantages, such as reducing O&M costs, improving system security and R&D efficiency, and accelerating business delivery. However, Serverless still has some unavoidable problems in the following aspects:

Cost management: One of the weaknesses of the "pay-as-you-go" model is that the exact cost is difficult to predict in advance. This conflicts with the budget management methods of many organizations.

Vendor lock-in: Although Serverless applications are based on open languages and frameworks, most of them rely on non-standard Backend as a Service (BaaS) offerings, such as object storage, key-value databases, authentication, logging, and monitoring.

Debugging and monitoring: Compared with traditional application development, Serverless applications lack mature debugging and monitoring tools. Good observability is an important aid for Serverless computing.

Architecture complexity: Serverless developers do not need to focus on the complexity of underlying infrastructures, but the complexity of the application architecture requires special attention. Event-driven architecture and fine-grained function microservices are very different from traditional development methods. Developers need to apply them in appropriate scenarios based on business needs and technical capabilities, and then gradually expand their application scopes.

For more information about typical Serverless application architectures, read this article

The technical report, Cloud Programming Simplified: A Berkeley View on Serverless Computing, is also a good reference for further understanding of Serverless computing.

Faster, lighter, and more agile application runtime technologies are what cloud native computing is continuously pursuing.

Smaller size: For distributed microservices architectures, a smaller size means lower download bandwidth and faster distribution.

Faster booting: For traditional monolith applications, booting speed is not a key metric compared to operating efficiency. The reason is that these applications are rebooted and released at a relatively low frequency. For microservices applications that require rapid iteration and horizontal scaling, however, faster booting means higher delivery efficiency, quicker rollback, and faster fault recovery.

Fewer resources: Lower resource usage at runtime means higher deployment density and lower computing costs.

For those reasons, the number of developers using languages such as Golang, Node.js, and Python continues to climb. There are several technologies that deserve your attention:

In the Java field, GraalVM has gradually matured. It is an enhanced cross-language full-stack virtual machine based on HotSpot and supports multiple programming languages, including Java, Scala, Groovy, Kotlin, JavaScript, Ruby, Python, C, and C++. GraalVM also allows developers to compile programs ahead of time into native executables.

Compared with the classic Java VM, programs generated by GraalVM start faster and use less memory at runtime. As next-generation Java frameworks tailored for cloud native computing, Quarkus and Micronaut achieve very short startup times and low resource usage. For more analysis, see cloud native evolution of Java.

WebAssembly is another exciting technology. WebAssembly is a secure, portable, and efficient virtual machine sandbox designed for modern CPU architectures. It can be used anywhere (like servers, browsers, and IoT devices), running applications safely on any platform with different operating systems or CPU architectures. WebAssembly System Interface (WASI) is used to standardize interaction abstractions of WebAssembly applications and system resources, such as file system access, memory management, and network connection. WASI provides standard APIs similar to POSIX.

Platform developers can implement the WASI APIs differently according to specific operating systems and operating environments, so that cross-platform WebAssembly applications can run on different devices and operating systems. This decouples application execution from the specific platform environment, gradually realizing "Build Once, Run Anywhere". Although WebAssembly has expanded beyond the browser, its development is still at an early stage. We look forward to the community coming together to further develop WebAssembly. If you are interested, check out this link for the combination of WebAssembly and Kubernetes:

Cloud native software architectures are developing rapidly, and they involve a wide range of content. The above-mentioned content is more of a personal summary, understanding, and judgment. So, I am looking forward to having an in-depth communication and discussion with everyone.
