There are various approaches to accelerate computing.

(1) Software/hardware: Fully utilize hardware performance through software optimization or enhance the hardware frequency.

(2) Direction: Scale horizontally to enhance concurrent processing capability or scale vertically to improve single-point performance.

(3) Concurrent processing capabilities: When categorized by granularity, these capabilities include machine-level concurrency, where multiple machines perform the same operation; thread-level concurrency, where multiple threads run concurrently on different cores for parallel processing; and instruction-level concurrency, where a single instruction operates on multiple data.

Among them, concurrent processing is more commonly used. So what is instruction-level concurrency? In the von Neumann architecture, the CPU fetches instructions and data from the storage system, executes the instructions, and then stores the results back into the storage system. Typically, one instruction operates on one piece of data, generating one result. SIMD (Single Instruction, Multiple Data) is a specialized type of CPU instruction that enables the execution of a single instruction on multiple pieces of data simultaneously.

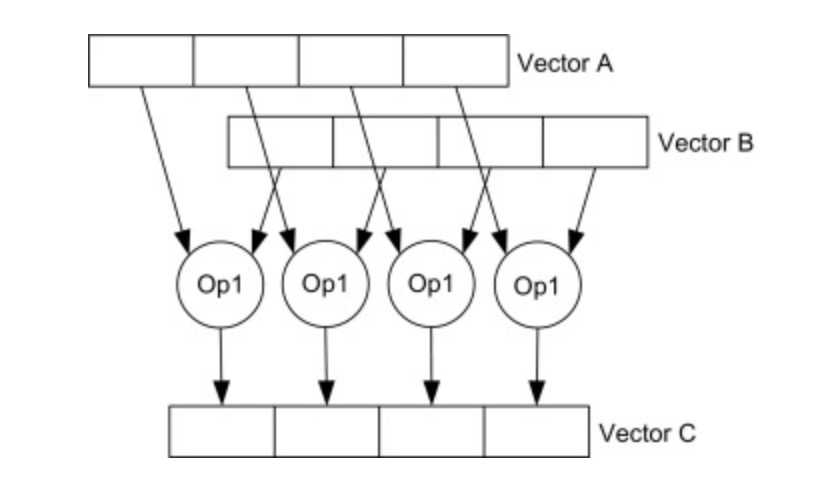

SIMD instructions facilitate vectorized execution, where one instruction operates on multiple pieces of data in one or more arrays, as opposed to processing one piece of data at a time. The term vectorized can be better understood as array-based execution since it involves vectors, which represent magnitude and direction. When an SIMD instruction is executed, multiple data elements are loaded from memory into a wide register simultaneously, and they are computed in parallel through a parallel instruction. For instance, an instruction that operates on 32 bytes (256 bits) can be executed on 8 variables of the INT type simultaneously, resulting in an 8-fold acceleration in computation. SIMD also reduces the number of loops used. This significantly reduces loop jump instructions, further enhancing computation speed. An SIMD instruction can have 0, 1, or 2 array parameters. With one array parameter, the instruction computes every element in the array and writes the results to the corresponding positions. With two parameters, the instruction operates on each corresponding position of the two arrays and writes the results to the corresponding positions.

The principle behind compiler acceleration through SIMD is to reduce the number of loop iterations by expanding the loop. By expanding the loop, the compiler minimizes jump statements, preserving the pipeline structure. The pipeline can preload instructions, reducing CPU pauses. Therefore, reducing jump instructions enhances the pipeline's efficiency.

In the preceding figure, the SIMD instruction is executed on 4 pairs of numbers in Vectors A and B simultaneously. It generates 4 results and stores them in Vector C.

The following code is an example to calculate the square of four floats.

void squre( float* ptr )

{

for( int i = 0; i < 4; i++ )

{

const float f = ptr[ i ];

ptr[ i ] = f * f;

}

}If the preceding code is converted into SIMD instructions, the loop can be deleted and the calculation can be completed with three instructions, which are loading the floats into the register, calculating the square root, and writing the results back to memory.

void squre(float * ptr)

{

__m128 f = _mm_loadu_ps( ptr );

f = _mm_mul_ps( f, f );

_mm_storeu_ps( ptr, f );

}When executing an SIMD instruction, a group of data is loaded into a wide register (128-bit, 256-bit, or 512-bit), the computation is performed, and the results are then stored in another wide register.

SIMD instructions rely on hardware support for MMX series, SSE (Streaming SIMD Extensions) series, and AVX (Advanced Vector Extensions) series of extended instruction sets. SSE1, SSE2, SSE3, SSE4.1, and SSE4.2 operate on 16-byte registers. AVX and AVX2 introduce 32-byte registers, while AVX512 introduces 64-byte registers. Currently, most CPUs support AVX2, while AVX512 is only supported by the latest CPUs.

The availability of instruction sets depends on CPU hardware support. Below is a list of CPUs that support different instruction sets.

ARM also introduces SIMD extended instructions. Typical SIMD operations include arithmetic operations (+-*/), abs, and sqrt. For comprehensive instruction sets, view this documentation

Then, how to generate an SIMD instruction? The following are several methods.

Compiler auto-vectorization

A compiler needs to meet the following conditions to perform auto-vectorization.

(1) The code follows a certain paradigm. Various cases will be introduced in detail later.

(2) For commonly used compilers such as GCC and Clang, add -O3 to the compilation options to enable vectorization.

During compilation, add -O3 or-mavx2 -march=native -ftree-vectorize to the compilation options to enable vectorization.

Only later versions of the compiler support vectorization. Tests indicate that GCC 4.9.2 and earlier versions do not support vectorization, while GCC 9.2.1 does. GCC is more suitable for vectorization. Clang cannot convert some code to vectorized code, but in some cases, the vectorized code generated by Clang performs better than that generated by GCC, as Clang instructions use wider registers. Therefore, it is recommended to write standard code to test the performance of the two compilers separately.

res[i] = tmpBitPtr[i] & opBitPtr[i]; // Use subscripts to access the address. Both Clang and GCC support this feature.

*(res + i) = *(tmpBitPtr + i) & *(opBitPtr + i); // Use address arithmetic to access the memory. Clang does not support this feature, while GCC does.In programming, there are some best practices to guide the compiler to generate vectorized code more efficiently.

The initial and end values of the loop variables must be fixed. For example,

for (int i = 0;i < n ;++i ) // The total number of iterations is countable, and the code can be vectorized.

for (int i = 0;i != n;++i) // The total number of iterations is uncountable, and the code cannot be vectorized.Only include in calculation simple mathematical operations (+-*/) or simple logical operations such as AND and NOT. Do not include statements such as switch, if, and return.

Exceptions are that some trigonometric functions such as sin and cos, or arithmetic functions such as pow and log. They can be automatically vectorized because lib provides a built-in vectorization implementation for them.

Only the innermost loop can be vectorized.

The calculating parameters and results of the function must be stored in contiguous space and loaded into registers from memory through a single SIMD instruction.

for (int i=0; i<SIZE; i+=2) b[i] += a[i] * x[i]; // Access contiguous space, and the code can be vectorized.

for (int i=0; i<SIZE; i+=2) b[i] += a[i] * x[index[i]] // Access discontiguous space, and the code cannot be vectorized.This is the most important practice, because in parallel computing, numbers on which multiple independent instructions belonging to the same parallel instructions are executed cannot be related. Otherwise, it cannot be parallelized and can only be computed serially.

The following are several scenarios for data dependency.

for (j=1; j<MAX; j++) A[j]=A[j-1]+1;// Case 1: Write before reading, and the code cannot be vectorized.

for (j=1; j<MAX; j++) A[j-1]=A[j]+1;// Case 2: Read before writing, and the code cannot be vectorized.

for (j=1; j<MAX; j++) A[j-4]=A[j]+1;// Case 3: Write before reading, but the code can be vectorized. There is no dependency in the same group of data if four groups of data form a vector. Therefore, the code can be vectorized.

// Case 4: Write before writing, and the code cannot be vectorized. No example here.

for (j=1; j<MAX; j++) B[j]=A[j]+A[j-1]+1;// Case 5: Read before reading, and the code can be vectorized. As there is no write operation, the vectorization is not affected.

for (j=1; j<MAX; j++) sum = sum + A[j]*B[j] // Case 6: The code can be vectorized. Though the same variable is read and another variable is written in the loop, the wide register can represent the sum temporarily. Data is calculated separately and added to the wide register after the loop ends.

for (i = 0; i < size; i++) {

c[i] = a[i] * b[i];

}// Case 7: It depends on whether there is an intersection between the memory space of Vector C and that of Vectors A and B. If C is an alias of A or B, for example, c=a + 1, then c[i] = a[i +1], then Vector A and C have a memory intersection. In the above cases, Case 3, 5, and 6 can be vectorized, which are exceptional cases. In general, it is advisable to write code with no dependencies. If dependencies are present and vectorization is still desired, manual SIMD code can be written.

Although pointers can achieve similar effects to arrays, the latter can reduce the likelihood of unexpected dependencies. When using pointers, even the compiler cannot always determine if the code can be vectorized in certain scenarios. However, when using arrays, this concern is eliminated as the compiler can easily vectorize the code.

Using the loop counter as an array subscript simplifies the understanding for compilers. If additional values are used as subscripts, it becomes difficult to confirm if the code can be vectorized. For example,

for(int i = 0;i < 10;i++) a[i] = b[i] // The code can be vectorized.

for(int i =0,index=0;i < 10;i++) a[index++]=b[index] // The code cannot be vectorized.Data should be 16-byte or 32-byte aligned. The elements of an array should be of the basic type instead of the struct or class type. In a complex structure, the same elements of each object in one array are not stored in adjacent locations.

In some earlier compilers, the number of iterations in a loop needs to be an integer multiple of the instruction width. For example, for a 128-bit instruction that is executed on 4-byte variables of the INT type, the number of iterations in the loop needs to be an integer multiple of 4. Therefore, when writing code, you need to write two parts of loops. The first is an integer multiple of 4, and the second is the small amount of data left at the end of the loop.

The latest compilers can automate this process. You can write code according to the normal logic, and there is no need to split it into two parts; the code generated by the compiler will automatically generate two parts of logic.

Compilers can translate simple logic into SIMD instructions. This requires us to carefully consider the code style to avoid hindering vectorization. However, for some complex logic, compilers cannot perform auto-vectorization. Nevertheless, we humans know that the logic inside is that each operand is calculated separately without interfering with each other, and the code can be vectorized. In this case, we can write an SIMD code. A typical example is to convert a string to all lowercase.

const static char not_case_lower_bound = 'A';

const static char not_case_upper_bound= 'Z';

static void lowerStrWithSIMD(const char * src, const char * src_end, char * dst)

{

const auto flip_case_mask = 'A' ^ 'a';

#ifdef __SSE2__

const auto bytes_sse = sizeof(__m128i);

const auto * src_end_sse = src_end - (src_end - src) % bytes_sse;

const auto v_not_case_lower_bound = _mm_set1_epi8(not_case_lower_bound - 1);

const auto v_not_case_upper_bound = _mm_set1_epi8(not_case_upper_bound + 1);

const auto v_flip_case_mask = _mm_set1_epi8(flip_case_mask);

for (; src < src_end_sse; src += bytes_sse, dst += bytes_sse)

{

/// load 16 sequential 8-bit characters

const auto chars = _mm_loadu_si128(reinterpret_cast<const __m128i *>(src));

/// find which 8-bit sequences belong to range [case_lower_bound, case_upper_bound]

const auto is_not_case

= _mm_and_si128(_mm_cmpgt_epi8(chars, v_not_case_lower_bound), _mm_cmplt_epi8(chars, v_not_case_upper_bound));

/// keep lip_case_mask _mm_and_si128(v_flip_case_mask, is_not_case);

/// flip case by applying calculated mask

const auto xor_mask = _mm_and_si128(v_flip_case_mask, is_not_case);

const auto cased_chars = _mm_xor_si128(chars, xor_mask);

/// store result back to destination

_mm_storeu_si128(reinterpret_cast<__m128i *>(dst), cased_chars);

}

#endif

for (; src < src_end; ++src, ++dst)

if (*src >= not_case_lower_bound && *src <= not_case_upper_bound)

*dst = *src ^ flip_case_mask;

else

*dst = *src;

}

static void lowerStr(const char * src, const char * src_end, char * dst)

{

const auto flip_case_mask = 'A' ^ 'a';

for (; src < src_end; ++src, ++dst)

if (*src >= not_case_lower_bound && *src <= not_case_upper_bound)

*dst = *src ^ flip_case_mask;

else

*dst = *src;

}The preceding two functions are used to convert uppercase letters in strings to lowercase letters. The first adopts SIMD implementation, and the second adopts normal practice. The first uses a 128-bit instruction (16 bytes), which is theoretically 16 times faster than a non-vectorized instruction. However, because the second code is very clear in structure, it can also be automatically vectorized. In this example, we test the compilation performance of different compilers (G 9.3.0 and Clang 12.0.0).

| Compiler | SIMD/normal> | Interpretation (If the latency ratio is less than 1, SIMD auto-vectorization prevails; if greater than 1, normal practice prevails.) |

| g++> | 1.9 | The compiler generates 256-bit instructions through auto-vectorization. Compared with 128-bit instructions, the performance is doubled. |

| g++> | 0.99 | The two are similar in performance. The compiler generates 128-bit instructions through auto-vectorization. |

| g++> | 0.09 | -O2 cannot auto-vectorize. |

| clang++> | 3.1 | The compiler generates 512-bit instructions through auto-vectorization. Compared with 128-bit instructions, the performance is improved by three times. |

| clang++> | 1.6 | The compiler generates 256-bit instructions through auto-vectorization. |

| clang++> | 0.93 | The compiler automatically generates 128-bit instructions. |

| clang++> | 0.09 | -O1 cannot vectorize. |

Conclusion: At the same optimization level, Clang generates wider instructions and performs better.

The following is the simplest SIMD instruction, which implements the addition of two numbers.

const __m128i dst = _mm_add_epi32(left,right);It adds four groups of numbers of the INT type and fills the results. __m128i represents a 128-bit wide register that stores variables of the INT type (4 bytes and 32 bits). It can store 4 variables of the INT type. _mm_add_epi32 is an SIMD instruction. The prefix _mm represents a 128-bit register, add represents addition, and epi32 represents a 32-bit integer. A standard name for a SIMD instruction contains the following information: register width, operation type, and parameter width.

Register width:

The following table shows the naming of registers of various widths with various data types.

| 16 bytes | 32 bytes | 64 bytes | |

| 32-bit float | __m128 | __m256 | __m512 |

| 64-bit float | __m128d | __m256d | __m512d |

| Integer | __m128i | __m256i | __m512i |

The following table shows the mapping relationship between the register width and the instruction prefix. For example, the name of a 128-bit register begins with _mm.

| Instruction prefix | Number of register bits |

| _mm | 128 |

| _mm256 | 256 |

| _mm512 | 512 |

Operation type: XOR, AND, INTERSECT, etc.

Parameter width: The number of bits of a single data entry in the parameter, which is included in the suffix of the instruction. For example, if a floating-point number is 32 bits and a double-precision floating-point number is 64 bits, you can enter four floating-point numbers or two double-precision floating-point numbers on a 128-bit register. Instructions with no input parameters have no parameter width information. The following table shows the details of parameter widths. For example, epi16 represents a 16-bit integer.

| Instruction suffix | Number of bits of a single data entry | Data type |

| epi8 | 8 | int |

| epi16 | 16 | int |

| pi16 | 16 | int |

| epi32 | 32 | int |

| pi32 | 32 | int |

| epi64 | 64 | int |

| pu8 | 8 | unsigned> |

| epu8 | 8 | unsigned> |

| epu16 | 16 | unsigned> |

| epu32 | 32 | unsigned> |

| ps | 32 | float |

| pd | 64 | double |

Take the function __m128 _mm_div_ps (__m128 a, __m128 b) as an example, according to the prefix __mm in the instruction name, the register is 128 bits. The div represents division, and the suffix ps represents that the operation parameter is a 32-bit floating point number. That means the instruction loads two arrays simultaneously, each array containing four 32-bit single-precision floating-point numbers. After it completes the division operation of the numbers at the corresponding positions of the two arrays, the instruction returns four division results.

Usually, the width of the results of the instruction is the same as the parameter width, but there are exceptions.

When two vectors execute the SIMD instruction, it is executed on the data in the corresponding positions of the two vectors separately. However, there are some exceptions. For example, the execution of the instruction on adjacent data of the same vector is called horizontal operation. Take the instruction __m128i _mm_hadd_epi16 (__m128i a, __m128i b) as an example, h represents horizontal, referring to adding the adjacent data of vectors A and B in turn. If the value of Vector A is [1,2,3,4] and the value of Vector B is [5,6,7,8], then the result is [1+2,3+4,5+6,7+8].

All data in the two vectors is involved in the calculation. However, there are exceptions. The mask is used to control the involvement of data in the calculation. If a certain bit of the mask is 1, the bit is involved in the calculation. Take the function __m128i _mm_mask_add_epi16 (__m128i src, __mmask8 k, __m128i a, __m128i b) as an example, which uses k as the mask. If a certain bit is 1, it returns the sum of bitness in the corresponding positions of Vector A and B. If a certain bit is 0, it returns the number corresponding to src.

Features of SIMD instruction sets include arithmetic, comparison, encryption, bit operations, logical operations, statistics and probability, displacement, memory loading and storage, and shuffle.

1. SIMD memory operation instructions

SIMD memory operations refer to loading data into registers and returning the corresponding SIMD type. The instruction _mm_load_si128 is used to load 16-bit data, and _mm256_load_ps to load 64-bit data. These two instructions require the data to be aligned. For data that is not aligned, instructions _mm_loadu_si128 and _mm256_loadu_ps are used.

2. SIMD register initialization instructions

Initialize the register to 0. Instructions _mm_setzero_ps and _mm256_setzero_si256 initialize the register to 0, and the operations have no dependencies.

Initialize the register to a specific value. The instruction _mm[256]_set_XXX initializes each point to a different value, and _mm[256]_set1_XXX to the same value. [256] represents whether 256 appears. The instruction _mm_set_epi32(1,2,3,4) initializes the register to sequential integers [1,2,3,4]. For reverse-order initialization, use the instruction _mm_setr_epi32(1,2,3,4).

3. Bitwise operation instructions

There are multiple bitwise operation instructions for floats and ints, including AND, OR, and XOR. The fastest way to execute a NOT instruction is to perform an XOR operation on a number with all 1 bits, and the fastest way to get all 1 bits is to compare two numbers with all 0 bits. The following code is an example.

__m128i bitwiseNot(__m128i x)

{

const __m128i zero = _mm_setzero_si128();

const __128i one = _mm_cmpeq_epi32(zero, zero);

return _mm_xor_si128(x, one);

}4. Floating-point instructions

Floating-point instructions support the basic operations +-*/ and the extended operation sqrt. Some of the useful functions include _mm_min_ss(a,b). For 32-bit floating-point numbers, if 1/x is to be completed, the corresponding SIMD instruction is _mm_rcp_ps, and the corresponding SIMD instruction  is

is _mm_rsqrt_ps. SIMD instructions can complete the operation faster with only one instruction.

If you want to add two arrays, for example, [a,b,c,d]+[e,f,g,h]=[a + e,b + f,c + g,d + h], the corresponding SIMD instructions are _mm_hadd_ps, _mm_hadd_pd, _mm256_hadd_pd, and _mm256_hadd_ps.

5. Non-parallel instructions with the acceleration effect

Some instructions can only be executed on one piece of data, but they can also achieve the effect of acceleration. For example, the instruction _mm_min_ss is used to take the minimum value of two floating-point numbers. It can complete the calculation with one instruction to avoid jumping through branch instructions. Similarly, the instruction _mm_max_sd is used to take the maximum value.

Writing SIMD instructions may seem impressive, but a major drawback is the lack of portability. If you write a 512-bit wide instruction and try to run it on a machine that does not support AVX, issues will arise. Therefore, the best solution is to write code that adheres to the compiler's specifications and let the compiler handle the vectorization. Modern compilers are capable of resolving these problems for us.

Modern compilers are intelligent enough to automate vectorization. In addition to keeping the compiler versions up to date, developers also need to enhance their coding skills to write code that meets the specifications mentioned earlier, enabling the compilers to generate efficient execution code for us.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.

ApsaraDB - November 14, 2024

Apache Flink Community - March 26, 2025

Data Geek - November 4, 2024

ApsaraDB - July 9, 2025

Alibaba EMR - May 20, 2022

Alibaba Cloud Big Data and AI - October 27, 2025

YiDA Low-code Development Platform

YiDA Low-code Development Platform

A low-code development platform to make work easier

Learn More Phone Number Verification Service

Phone Number Verification Service

A one-stop, multi-channel verification solution

Learn More