What are the general process and methods of stress testing? Which data metrics are important? How can you estimate the QPS that the backend must support? This article shares and analyzes the problems that need attention during stress testing. Comments and feedback are welcome.

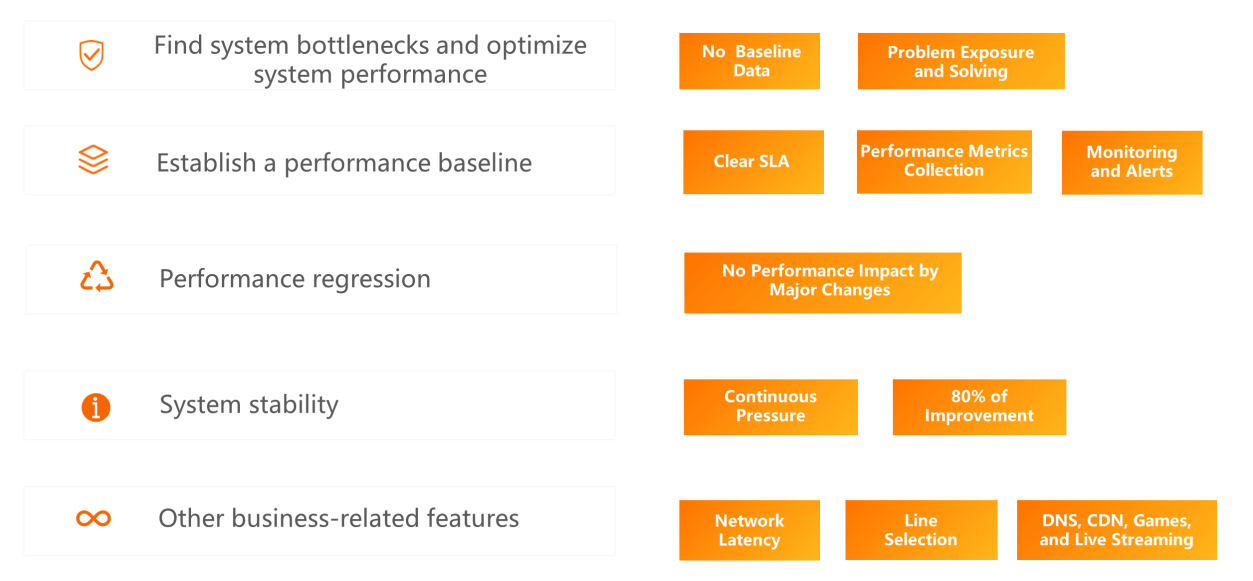

Before planning stress tests, we must first clarify the goals. The ultimate goal is always to optimize system performance, but different specific goals may require different approaches. In general, goals may include:

Baseline performance data is usually insufficient when a new system is launched. In this case, target QPS and RT are generally not specified for the stress test. Instead, constant stress is applied to expose and fix system problems, pushing the system toward its limit.

This goal aims to collect the current maximum performance indicators of the system. Based on business characteristics, the tolerance for RT and error rate is determined first. Then, the maximum QPS and concurrency that can be supported are calculated through stress testing.

At the same time, you can establish a reasonable alert mechanism by combining the performance indicators with monitoring data. Alert items for the system load level (water level), traffic limiting thresholds, and elastic scaling policies can also be established.

The system capabilities and SLA can be quantified (for example, so that they can be cited in bidding).
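As a minimal sketch of how the maximum supported QPS might be read off stress test results once the RT and error-rate tolerances are fixed, consider the following. The `StepResult` type, the `max_supported` helper, and all sample numbers are hypothetical and only illustrate the selection logic.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StepResult:
    """One stress level of a stepped test (all values are made-up samples)."""
    concurrency: int
    qps: float
    rt_ms: float
    error_rate: float  # fraction of failed requests, 0.0 to 1.0


def max_supported(steps: List[StepResult],
                  max_rt_ms: float,
                  max_error_rate: float) -> Optional[StepResult]:
    """Return the highest-QPS step that stays within both tolerances."""
    acceptable = [s for s in steps
                  if s.rt_ms <= max_rt_ms and s.error_rate <= max_error_rate]
    return max(acceptable, key=lambda s: s.qps) if acceptable else None


# Hypothetical results from four stress levels.
steps = [
    StepResult(concurrency=10, qps=95.0, rt_ms=105.0, error_rate=0.0),
    StepResult(concurrency=50, qps=430.0, rt_ms=116.0, error_rate=0.001),
    StepResult(concurrency=100, qps=610.0, rt_ms=164.0, error_rate=0.004),
    StepResult(concurrency=200, qps=640.0, rt_ms=312.0, error_rate=0.03),
]

# Assumed tolerances: RT up to 200 ms, error rate up to 1%.
best = max_supported(steps, max_rt_ms=200.0, max_error_rate=0.01)
print(best)
```

With these sample values, the 200-concurrency step has the highest raw QPS but violates both tolerances, so the 100-concurrency step is reported as the supported maximum.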

For online systems or systems with specific performance requirements, the QPS and RT that the system needs to support can be determined from online operation data. Regression verification can then be conducted before any change that may affect performance is released, ensuring that performance still meets expectations.

This goal focuses on whether the system can stably meet its SLA for a long time under a certain level of stress. Generally, you can set the stress value to 80% of the service's peak traffic and apply it continuously.

Network quality is critical in some business scenarios, such as DNS resolution, CDN services, multi-player real-time online games, and high-frequency trading. These scenarios require extremely low network latency. Differences between network lines (for example, China Telecom, China Unicom, and the Education Network) are especially important.

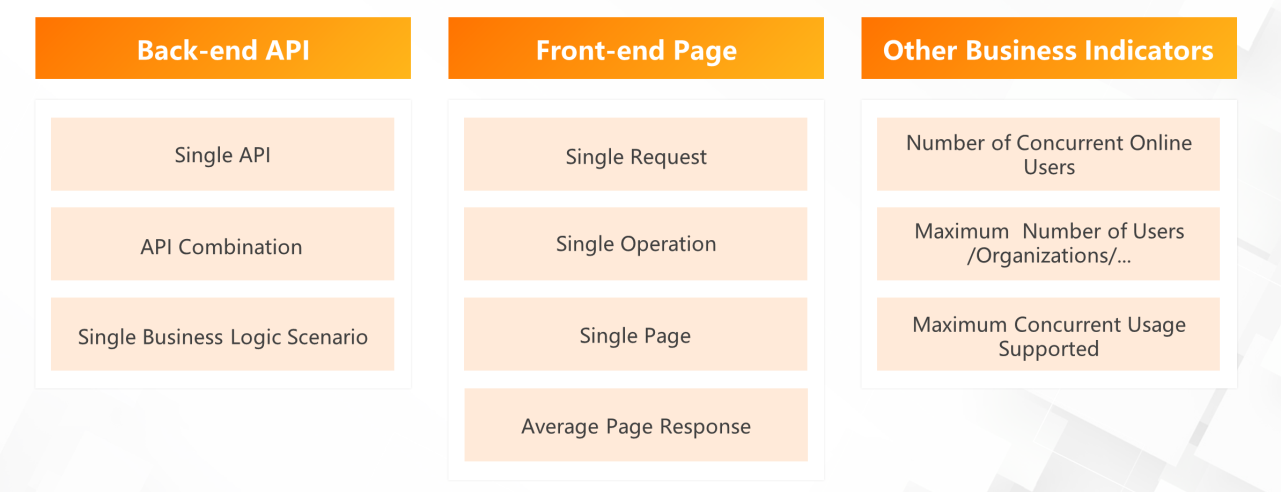

After clarifying the testing goal, you need to determine what needs to be tested. Generally, you can divide stress testing objects like this:

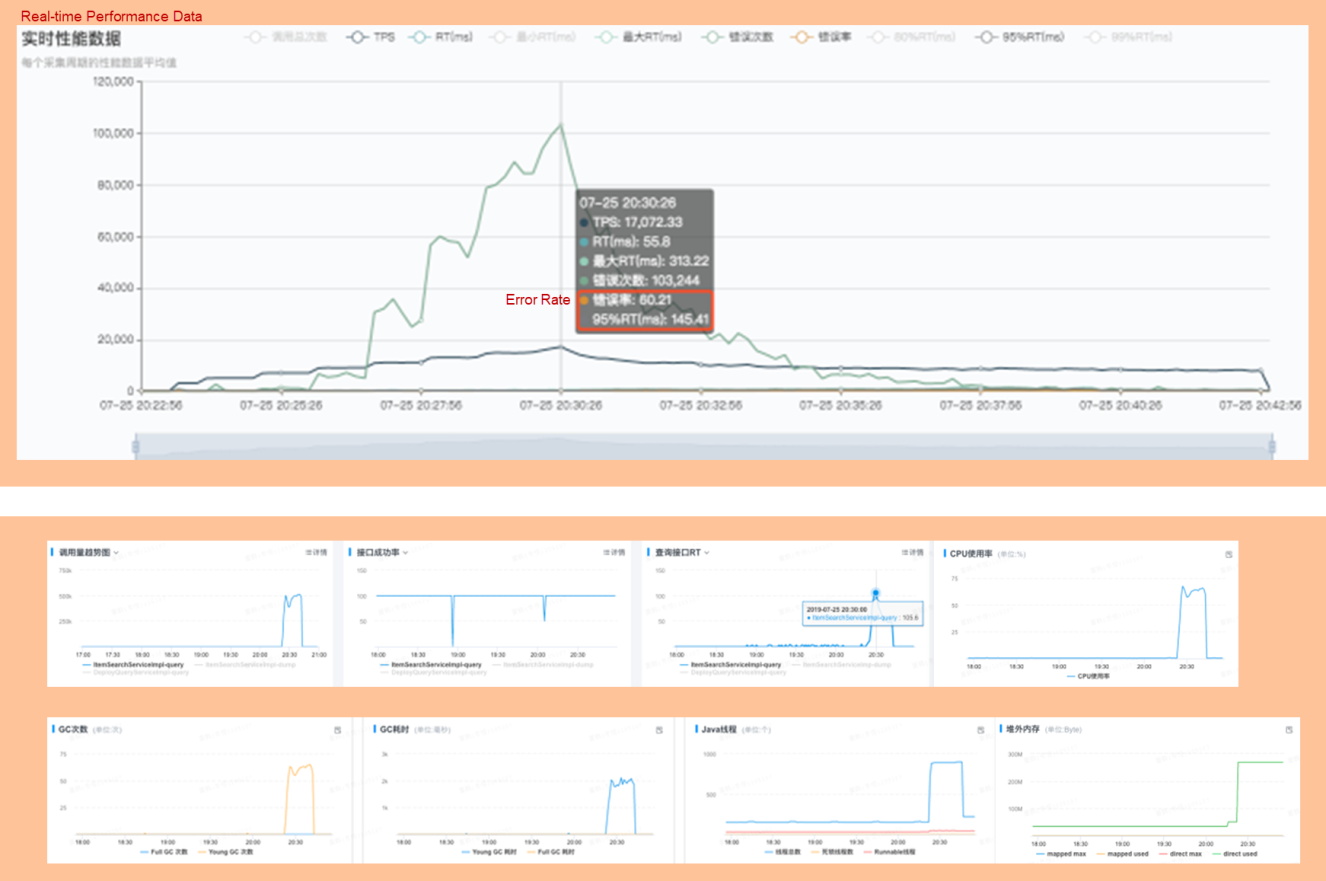

During stress testing, you need to focus on the following data indicators:

The three most important indicators are QPS, RT, and success rate. Other indicators include:

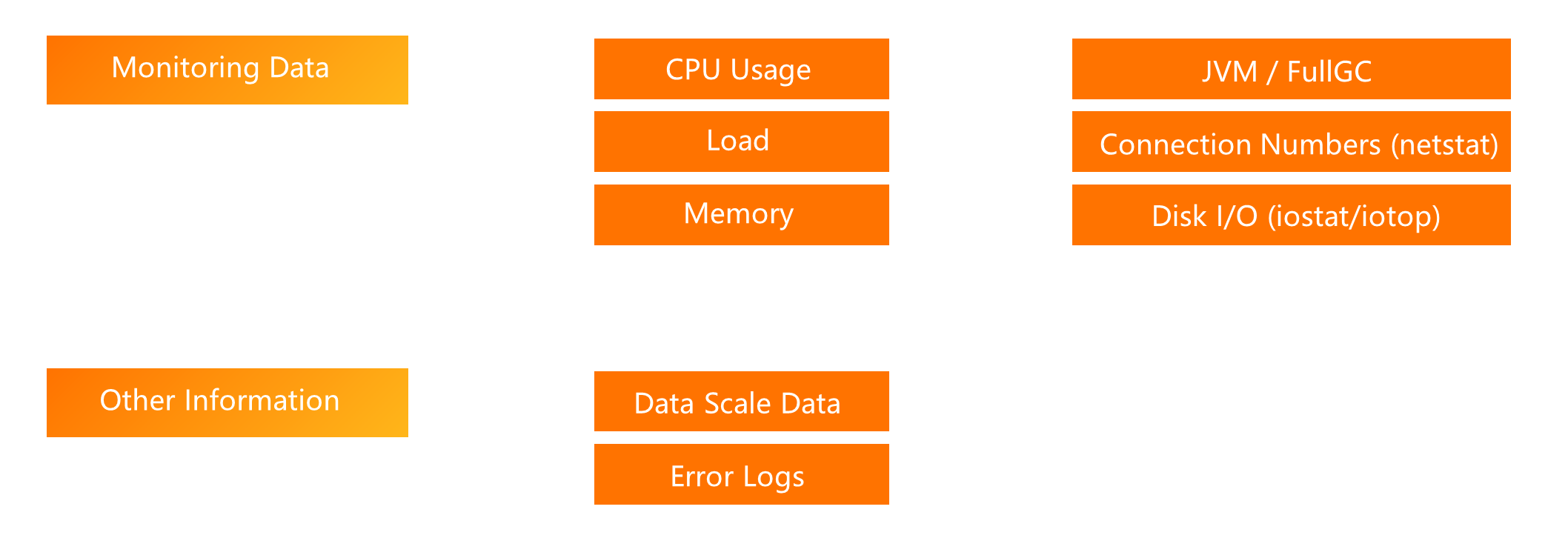

Monitoring data is a key component, including:

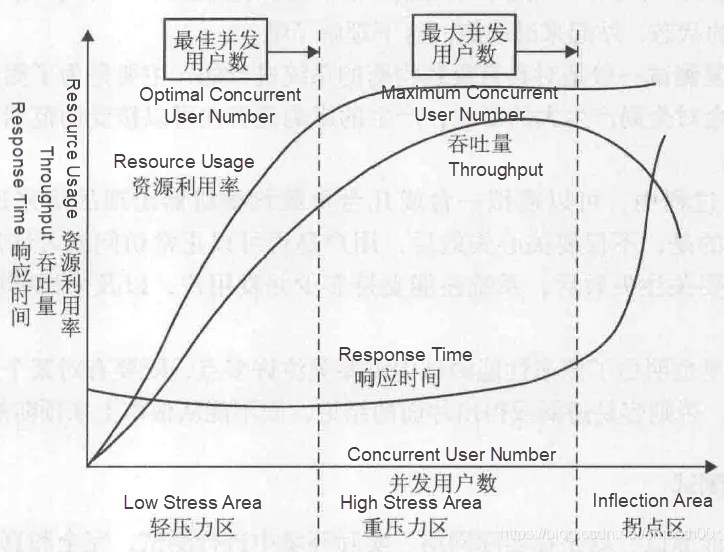

Generally, as stress increases (that is, as the number of concurrent requests grows), you examine the relationship among QPS, RT, and success rate to find the balance point of the system. The analysis is more reliable if server-side monitoring data is included as well.
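One way to reason about this relationship is Little's law, which for a closed-loop test without think time gives roughly QPS = concurrency / RT. The sketch below applies it and also looks for the point where adding concurrency stops yielding meaningful QPS gains. The `find_knee` helper, the 5% gain threshold, and the sample data are assumptions for illustration, not a method prescribed by this article.

```python
from typing import List, Tuple


def expected_qps(concurrency: int, rt_seconds: float) -> float:
    """Little's law for a closed loop with no think time: QPS = N / RT."""
    return concurrency / rt_seconds


def find_knee(samples: List[Tuple[int, float]], min_gain: float = 0.05) -> int:
    """Return the concurrency after which the relative QPS gain of the next
    step falls below min_gain. samples must be sorted by concurrency."""
    for (c_prev, q_prev), (_, q_next) in zip(samples, samples[1:]):
        if (q_next - q_prev) / q_prev < min_gain:
            return c_prev
    return samples[-1][0]  # QPS was still growing at the last step


# Hypothetical (concurrency, measured QPS) pairs from a stepped test.
samples = [(10, 95.0), (50, 430.0), (100, 610.0), (200, 640.0)]

print(f"expected QPS at 100 threads, 164 ms RT: {expected_qps(100, 0.164):.0f}")
print(f"balance point near concurrency {find_knee(samples)}")
```

If measured QPS falls well short of the value Little's law predicts for the observed RT, the load generator itself or think time in the script may be the limiting factor rather than the server.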

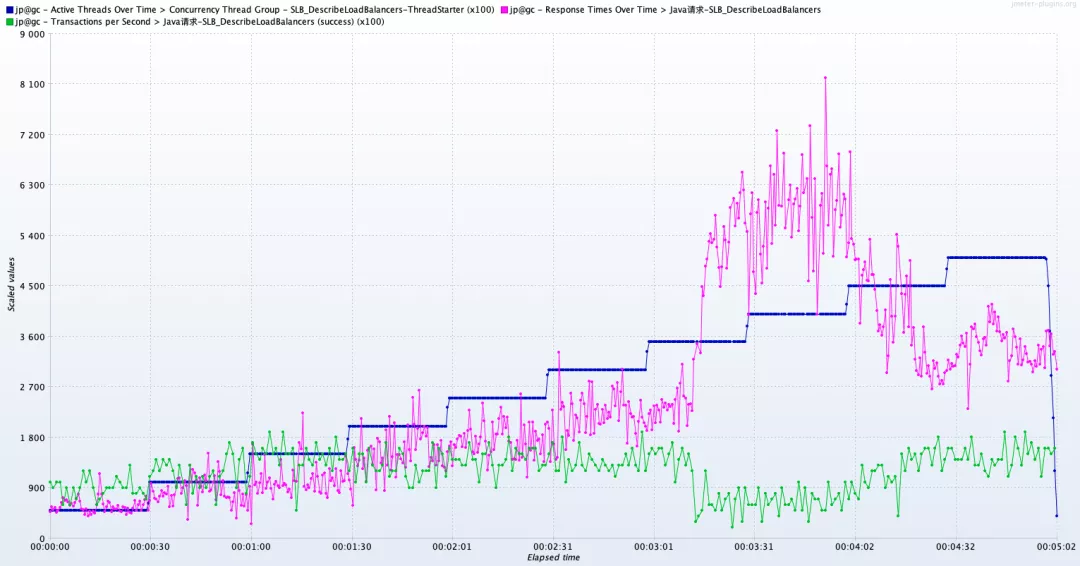

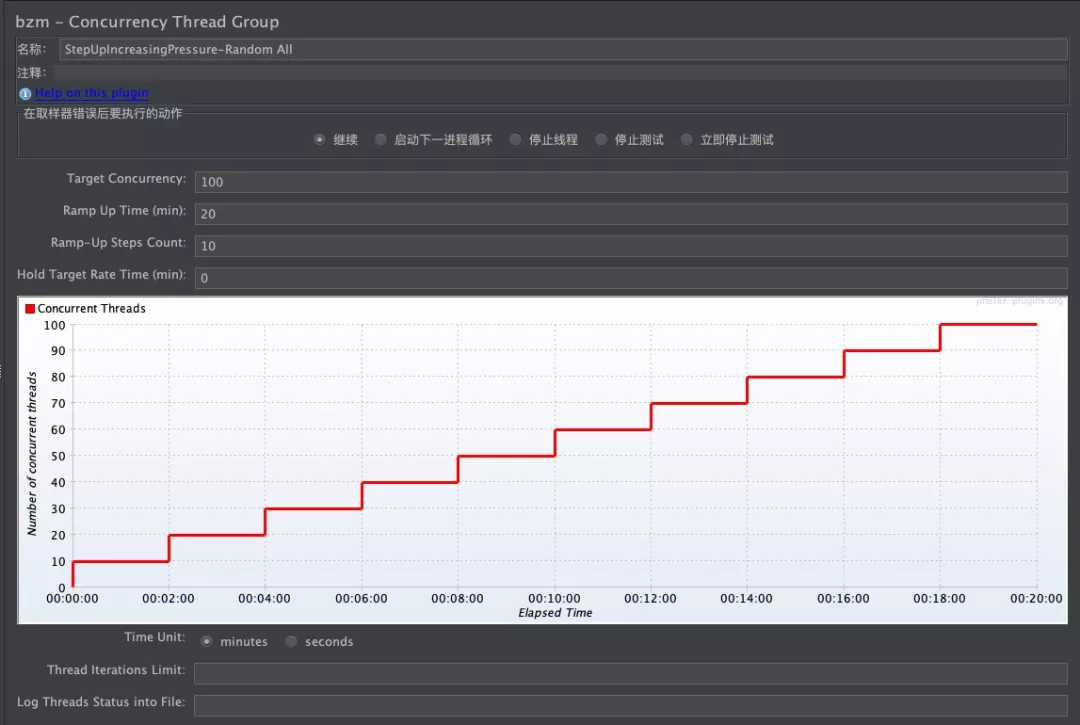

Concurrency Thread Group

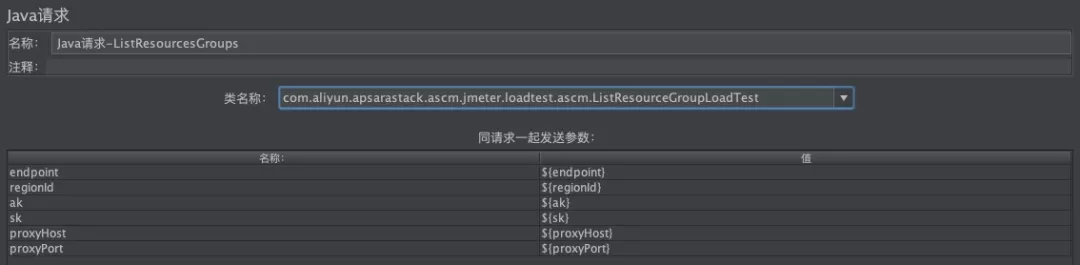

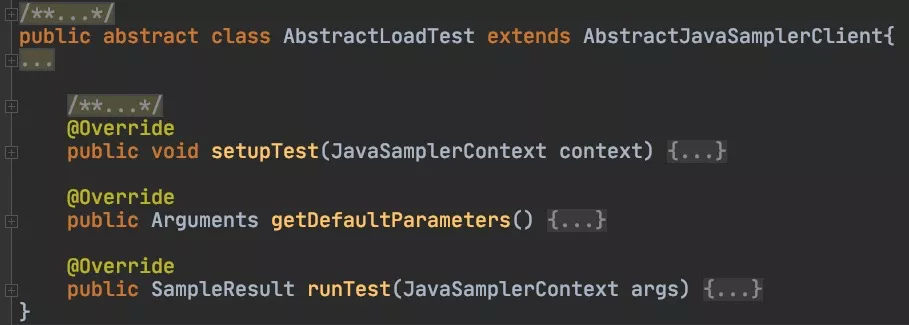

Java Sampler

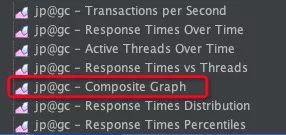

Composite Chart

You can merge multiple charts into one chart. The coordinate system automatically scales to display the results on one chart.

The preceding indicators are all system-oriented data, which users either do not perceive or do not find meaningful. So, what kind of figures are meaningful to them?

If you are providing an online web service, users may care about how many of them can use your system at the same time without noticing lag. The system SLA may tolerate occasional page errors that require a retry.

If you are providing a settlement system, users may be concerned about settlement performance. Provided that transactions remain valid, users care about the number of orders that can be processed per second. Settlement errors are not allowed, but the system SLA can tolerate occasional timeouts that require a retry.

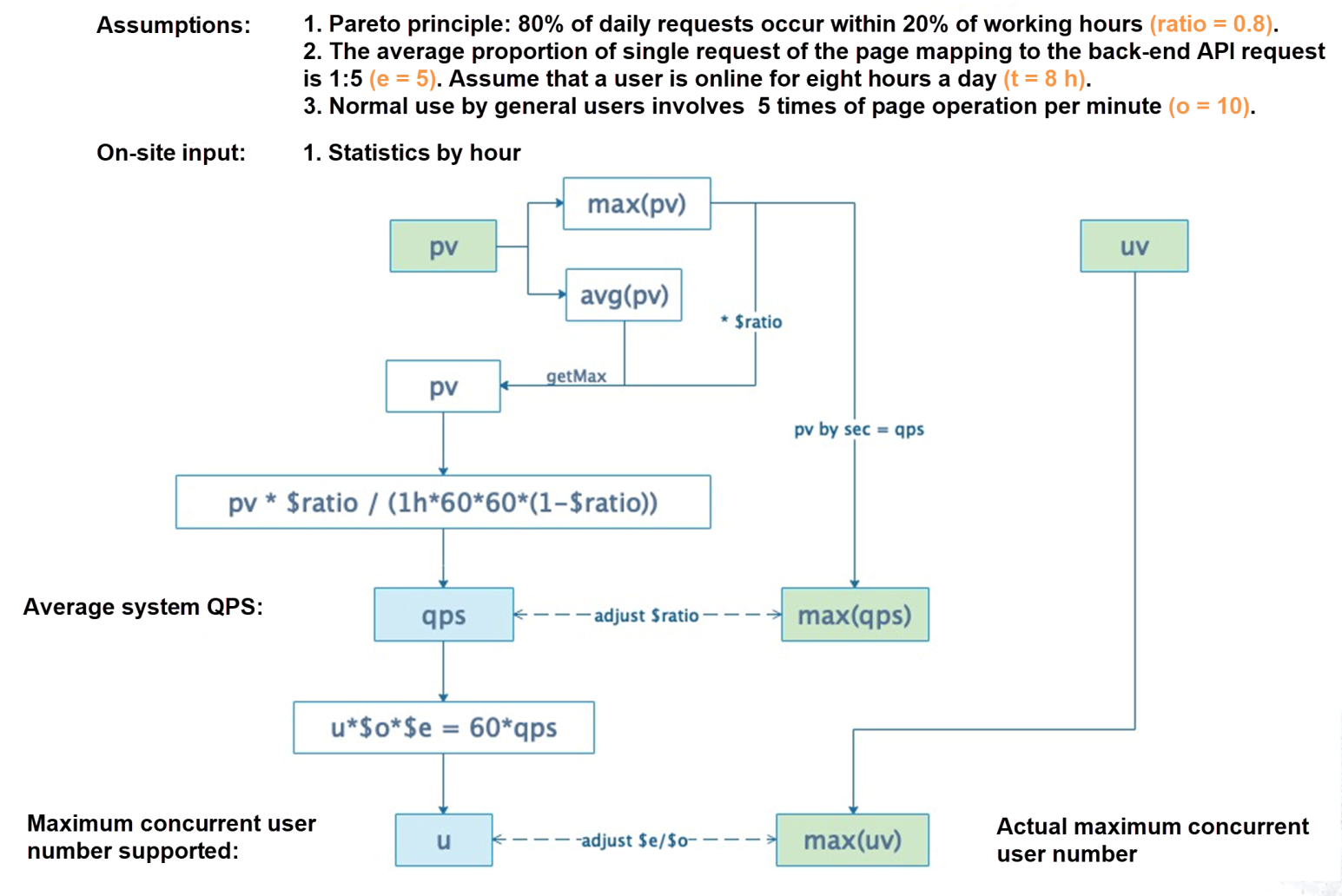

Let's take a look at the analysis on the diagram below:

Here, page views (PV) means the number of backend API calls counted in the backend log. If PV is instead counted by the frontend in the usual sense, the same principle applies after conversion: PV * x-ratio = number of backend calls.

1. Obtain the average and peak values of the daily on-site AS API PV and UV.

2. Take max(peak PV * 0.8, average daily PV) as the target PV'. Take the UV during the PV' period as the reference number of concurrent users, N'. Calculate PV' / perMinute / N' and take the result as O', the number of API calls that each user's operations trigger per minute.

3. Calculate the QPS that the backend must support according to the following rules:

4. Based on the QPS measured in stress testing, the maximum number of concurrent users is calculated like this:

Suppose the on-site data shows that 50 people logged into the system during the peak period from 9:00 to 10:00 and that the PV for that period was 10,000. According to step 3.1, the system can operate normally with the current number of users only when the overall QPS is greater than or equal to 11.
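The arithmetic behind this example can be sketched as follows. The rule referenced as step 3.1 is not reproduced in the text above, so this sketch assumes the widely used 80/20 rule of thumb (80% of the traffic arrives in 20% of the time), which yields about 11 QPS for these inputs. The function names are illustrative.

```python
def calls_per_user_per_minute(pv: float, minutes: float, users: int) -> float:
    """O' from step 2: PV' divided by the minutes in the period and by N'."""
    return pv / minutes / users


def peak_qps_80_20(pv: float, period_seconds: float) -> float:
    """Assumed rule of thumb, not taken from the article:
    80% of the traffic is served in 20% of the period."""
    return (pv * 0.8) / (period_seconds * 0.2)


pv_peak_hour = 10_000  # PV between 9:00 and 10:00
users = 50             # users logged in during that hour (N')

o_prime = calls_per_user_per_minute(pv_peak_hour, minutes=60, users=users)
required_qps = peak_qps_80_20(pv_peak_hour, period_seconds=3600)

print(f"O' = {o_prime:.2f} API calls per user per minute")  # O' = 3.33
print(f"required QPS = {required_qps:.1f}")                 # required QPS = 11.1
```

A plain average over the hour would give only about 2.8 QPS (10,000 / 3,600), which is why a peak-weighted estimate is normally used for the target.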

If stress test results from the in-house environment show that the QPS for randomly selected API calls is 30, then under the same assumptions and following step 4.2, the system can support simultaneous operations from 18 users during peak hours.

The methods above assume that every API is called with equal probability (1:1), but real calls are unevenly distributed. The distribution of API calls can be collected from on-site data, and the stress test should simulate a call distribution as close as possible to the actual one.
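A minimal sketch of building a request plan that follows an observed call distribution, rather than a uniform one, is shown below. The API paths and call counts are hypothetical placeholders for on-site data, and `build_call_plan` is an illustrative helper rather than part of any stress testing tool.

```python
import random
from collections import Counter
from typing import Dict, List, Optional


def build_call_plan(api_counts: Dict[str, int],
                    total_requests: int,
                    seed: Optional[int] = None) -> List[str]:
    """Sample API names in proportion to their observed call counts."""
    rng = random.Random(seed)
    apis = list(api_counts)
    weights = [api_counts[name] for name in apis]
    return rng.choices(apis, weights=weights, k=total_requests)


# Hypothetical call counts taken from a backend log.
observed = {"/api/list": 6000, "/api/detail": 3000, "/api/submit": 1000}

plan = build_call_plan(observed, total_requests=10_000, seed=42)
share = Counter(plan)
for name in observed:
    print(f"{name}: {share[name] / len(plan):.1%}")
```

The resulting plan can be fed to whatever load generator is in use, for example as a data file that drives which request each virtual user sends next.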

This approach estimates, from the number of page operations per minute, the ratio by which each frontend request expands into backend API calls. You can make an approximate calculation based on such a model, but it is less accurate than a direct calculation based on on-site data.

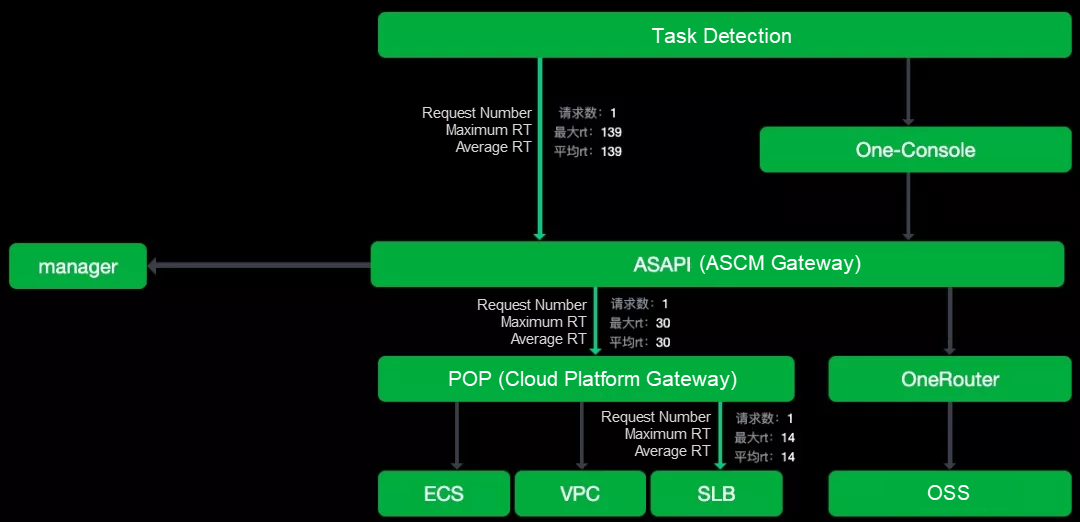

