The OceanBase Optimizer Team, led by Xifeng (OceanBase Senior Technical Expert) and Shanwen (Technical Expert), is dedicated to building the world's leading distributed query optimizer.

Many developers will recognize the following situation.

When we first write a program, we often care only about whether the results are correct.

After the system has been running for a while, we start to feel powerless.

A series of questions emerges.

Why is my program so slow?

Why is the program stuck?

Which program is causing the blocking?

If we only monitor slow SQL by various means and optimize passively after problems appear, we are inefficient.

If we ignore the problem, the program may become paralyzed, hang, or crash.

What should we do?

In previous articles about query rewrite, we introduced several subquery-related rewrite strategies. This article focuses on Group By and explores which equivalent transformations are possible for it. In some analysis scenarios, Group By is used to meet the statistical analysis requirements of a business, for example, counting the number of people in each age group or computing the average salary of each age group. A typical statistical analysis query first joins data from multiple tables, then groups the join results by certain dimensions, and finally performs aggregation in each group, such as sum, count, or minimum and maximum. In this type of query, the original tables are usually large. The join itself has to process a large amount of data, and a multi-table join may enlarge the data further. As a result, the grouping operation also has to process a large amount of data.

An effective optimization strategy for such statistical analysis queries is to adjust the execution order of the join and grouping operations. First, pre-aggregate the data of the original table. Then perform the multi-table join and the final grouping and aggregation. This optimization strategy is called grouping down.

Consider the following statistical analysis query Q1. It computes the total box office of each movie in a theater since the movie was released. Assume that since the cinema opened, it has held 3,000 screenings of 100 films (i.e., there are 3,000 records in the PLAY table and movie_name has 100 distinct values) and sold 200,000 movie tickets (i.e., there are 200,000 records in the TICKETS table).

-- Schedule table

PLAY(play_id, movie_name, time, price, seats)

-- Ticket table

TICKETS(play_id, real_price, sale_date);

Q1:

SELECT P.movie_name,

SUM(T.real_price)

FROM PLAY P,

TICKETS T

WHERE P.play_id = T.play_id

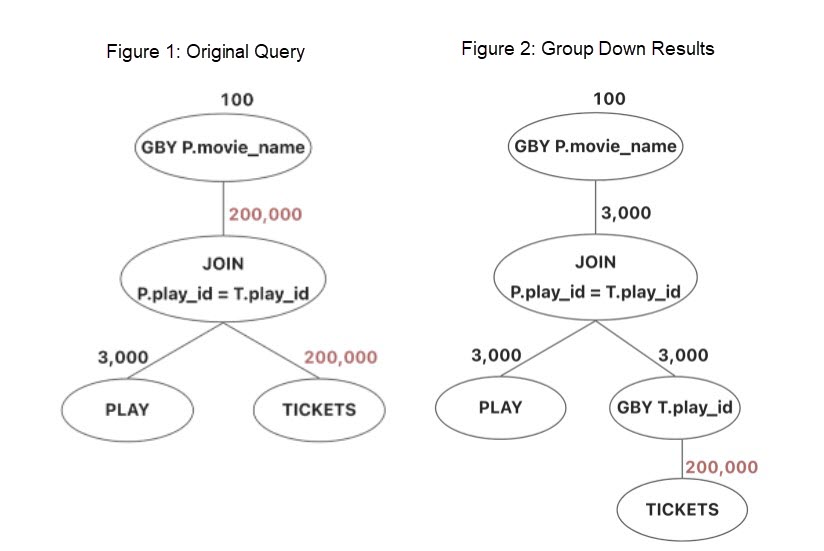

GROUP BY P.movie_name;Figure 1 shows the logical execution plan tree of Q1. First of all, we need to join P and T together. Each movie ticket uniquely corresponds to a schedule, so the join between P and T will generate 200,000 records. Finally, we need to group the join results again. As you can see, 200,000 records need to be processed for both join and grouping. The overall cost is high.

OceanBase applies the grouping down optimization to Q1 so that a grouping operation runs before the join. This yields query Q2, which has the same semantics as Q1.

Q2:

SELECT P.movie_name,

SUM(V.total)

FROM PLAY P,

(SELECT T.play_id,

SUM(T.real_price) total

FROM TICKETS T

GROUP BY T.play_id) V

WHERE P.play_id = V.play_id

GROUP BY P.movie_name;

The key observation behind this rewrite is that the TICKETS table contains a large number of records with the same play_id value, and all of them end up in the same movie group. We can therefore pre-aggregate the tickets that share a play_id to obtain the box office of each screening, and then compute the total box office of each movie from the box office of its screenings.

In Q2, view V groups TICKETS by play_id and yields the box office of each screening. This round of Group By reduces the data volume from 200,000 records to at most 3,000. The grouping result is then joined with the screening records in the PLAY table on P.play_id = V.play_id. Finally, the join result is grouped by movie_name to obtain the total box office of each movie.

Figure 2 shows the logical execution plan tree of Q2. As you can see, after grouping down, the join and the final grouping each process about 3,000 records instead of 200,000, which improves the execution performance of the query.
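The equivalence of Q1 and Q2 can be checked on synthetic data. The following is a minimal sketch that uses Python's built-in `sqlite3` module rather than OceanBase. The data generator, the integer prices, the trimmed-down table columns, and the added `ORDER BY` (only there to make the two result sets directly comparable) are all assumptions made for illustration. Q2 is written with `GROUP BY P.movie_name`, matching the column in its SELECT list.

```python
import sqlite3

MOVIES, SCREENINGS, TICKETS_SOLD = 100, 3_000, 200_000

conn = sqlite3.connect(":memory:")
# Only the columns used by Q1 and Q2 are created.
conn.executescript("""
    CREATE TABLE PLAY(play_id INTEGER PRIMARY KEY, movie_name TEXT);
    CREATE TABLE TICKETS(play_id INTEGER, real_price INTEGER);
""")
# Synthetic data (an assumption): screenings are spread evenly over movies,
# tickets evenly over screenings, and prices are whole numbers so SUM is exact.
conn.executemany(
    "INSERT INTO PLAY VALUES (?, ?)",
    ((p, f"movie_{p % MOVIES:03d}") for p in range(1, SCREENINGS + 1)),
)
conn.executemany(
    "INSERT INTO TICKETS VALUES (?, ?)",
    ((t % SCREENINGS + 1, 30 + t % 7) for t in range(TICKETS_SOLD)),
)

Q1 = """
    SELECT P.movie_name, SUM(T.real_price)
    FROM PLAY P, TICKETS T
    WHERE P.play_id = T.play_id
    GROUP BY P.movie_name
    ORDER BY P.movie_name
"""
Q2 = """
    SELECT P.movie_name, SUM(V.total)
    FROM PLAY P,
         (SELECT T.play_id, SUM(T.real_price) AS total
          FROM TICKETS T
          GROUP BY T.play_id) V
    WHERE P.play_id = V.play_id
    GROUP BY P.movie_name
    ORDER BY P.movie_name
"""

q1_rows = conn.execute(Q1).fetchall()
q2_rows = conn.execute(Q2).fetchall()

# Rows produced by the join in Q1 versus rows produced by the pre-aggregation in Q2.
join_rows_q1 = conn.execute(
    "SELECT COUNT(*) FROM PLAY P, TICKETS T WHERE P.play_id = T.play_id"
).fetchone()[0]
preagg_rows_q2 = conn.execute(
    "SELECT COUNT(*) FROM (SELECT play_id FROM TICKETS GROUP BY play_id)"
).fetchone()[0]

print("same result:", q1_rows == q2_rows)
print("Q1 join output rows:", join_rows_q1)
print("Q2 pre-aggregated rows:", preagg_rows_q2)
```

The rewrite is valid here because SUM can be computed in two stages: summing per play_id and then summing those partial sums per movie_name gives the same total as summing all tickets per movie_name directly.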

In the example above, grouping down reduces the amount of data that the subsequent join and grouping operations have to process. Does grouping down always produce better execution performance? The answer is no. Which of Q1 and Q2 is better depends on the scenario and has to be judged from the actual data scale. Let’s consider two scenarios:

Scenario 1: The theater has many large screening rooms, and most seats are sold. In this case, the TICKETS table contains many ticket records with the same play_id. Q1 and Q2 behave as in the example given above, and Q2 performs better, so we do not repeat the analysis here.

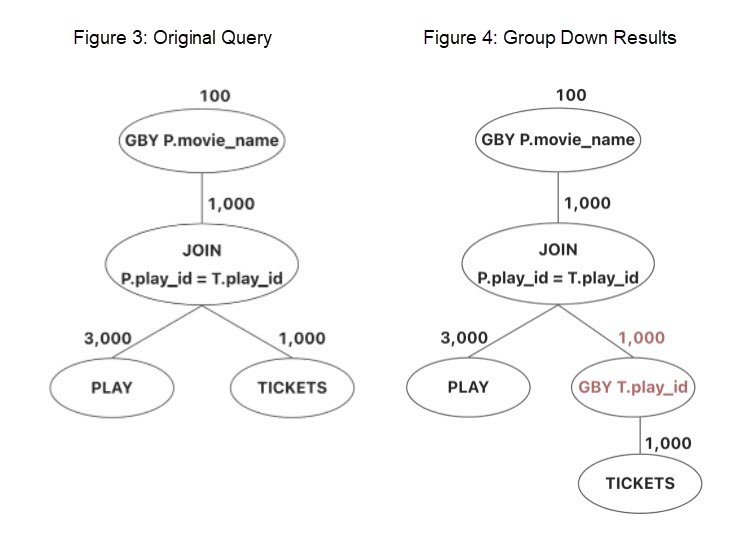

Scenario 2: The theater has mostly small screening rooms. Affected by the pandemic, ticket sales are poor. There are many vacant seats, and some films are shown without any audience. The TICKETS table is relatively small, and only a few ticket records share the same play_id. Assume that the TICKETS table contains 1,000 records. Figures 3 and 4 show the logical execution plan trees of Q1 and Q2 under this data distribution.

In Figure 4, pre-aggregation cannot significantly reduce the data size of the TICKETS table, so the amount of data processed by the subsequent join and grouping operations barely changes. Compared with Figure 3, Figure 4 also pays for an additional round of pre-aggregation. Overall, the execution performance of the query deteriorates if grouping down is applied.

As the two scenarios show, Q2 does not always perform better than Q1. Grouping down is therefore implemented as a cost-based query rewrite strategy, so that query performance does not deteriorate after rewriting. After the rewrite is generated, the physical optimizer estimates the cost of the execution plans before and after the rewrite based on the actual data distribution. The rewrite is applied only when the cost is reduced. Applied under this cost check, grouping down reduces the data scale processed by join operations and improves query efficiency in scenarios where groups are computed after multiple tables are joined.
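The cost-based decision can be illustrated with a toy model. The sketch below is not OceanBase's cost model: it simply assumes that the cost of a plan is the total number of rows read by its operators, and the function names are hypothetical. The figure of 900 distinct play_id values for Scenario 2 is also an assumed value, since the article only states that few tickets share a play_id.

```python
def plan_costs(play_rows, ticket_rows, distinct_play_ids):
    """Toy cost: total rows read by every operator in each plan (an assumption)."""
    # Q1: join PLAY with TICKETS, then group the join output.
    # Each ticket matches exactly one screening, so the join emits ticket_rows.
    q1 = (play_rows + ticket_rows) + ticket_rows
    # Q2: pre-aggregate TICKETS by play_id, join the result with PLAY,
    # then group the join output (one row per screening that sold tickets).
    q2 = ticket_rows + (play_rows + distinct_play_ids) + distinct_play_ids
    return q1, q2


def should_push_down(play_rows, ticket_rows, distinct_play_ids):
    """Apply the rewrite only when the estimated cost decreases."""
    q1, q2 = plan_costs(play_rows, ticket_rows, distinct_play_ids)
    return q2 < q1


# Scenario 1: 200,000 tickets spread over 3,000 screenings.
scenario_1 = should_push_down(3_000, 200_000, 3_000)
# Scenario 2: 1,000 tickets; 900 distinct play_id values is an assumed figure.
scenario_2 = should_push_down(3_000, 1_000, 900)

print("Scenario 1 costs (Q1, Q2):", plan_costs(3_000, 200_000, 3_000))
print("Scenario 1 rewrite applied:", scenario_1)
print("Scenario 2 costs (Q1, Q2):", plan_costs(3_000, 1_000, 900))
print("Scenario 2 rewrite applied:", scenario_2)
```

Under these assumptions the rewrite pays off only when pre-aggregation shrinks TICKETS enough to offset the extra grouping pass, which mirrors the reasoning behind the two scenarios.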

This article describes the query rewrite strategy of grouping down. Query performance is improved by performing a grouping operation before the join, pushing it down to a single table to reduce the data set. In some scenarios, grouping down could cause query performance degradation, so this strategy is a cost-based rewrite: it is applied only when the evaluated cost after the rewrite is lower. The grouping down described in this article moves the grouping operation ahead of the join operation. As the preceding discussion shows, in some scenarios we instead want to perform the join first and then the grouping. This reverse optimization strategy will be described in subsequent articles.
