By Daoyuan Wang, technical expert for Alibaba Cloud Elastic MapReduce (EMR).

The Relational Cache function of Spark on Elastic MapReduce (EMR) can accelerate Spark SQL through precomputing algorithms and efficient data storing models. All of this makes using Spark SQL to query massive data in real time that much more feasible. When Relational Cache works as a Materialized View, it analyzes SQL statements when users submit them, choosing the available results from the precomputed algorithm to accelerate query speeds. To efficiently reuse precomputed results, the precomputed cache results are usually quite general in nature. So, for user queries, additional computing beyond this is often required to obtain the final results. Given this, it is of particular importance to be able to find the matching cache and build an accurate new execution plan quickly.

The Materialized View supported in Hive 3.x uses Apache Calcite to rewrite the execution plan, that is the result of the query optimizer's attempt to calculate the most efficient way to implement a query request. However, given that Spark SQL uses Catalyst to optimize the execution plan, and the introduction of Calcite can often be rather heavy loaded, therefore the Spark on EMR Relational Cache implements its own Catalyst rules to rewrite the execution plan. Following this, this article goes through the process of rewriting the execution plan in the Spark on EMR Relational Cache.

Spark works by analyzing user query statements and converting them to into an Unresolved Logical Plan, Resolved Logical Plan, Optimized Logical Plan, and Physical Plan in sequence. Unoptimized logical plans can vary greatly depending on the user query statement to which they relate. As a part of the optimization process, we can obtain the user query plan from a logical plan. Next, to match the logical plan in optimization, we can choose to place the rewriting process in the later step of the Spark optimizer. At the same time, we can analyze the logical plan of the Relational Cache in advance to obtain the optimized cache plan, reducing the complexity of matching. In this way, we only need to match the two logical plans after optimizations, such as predicate push-down and predicate merge.

When matching, we want to match computing and I/O operations as much as possible. Therefore, we will traverse the target plan in the forward order, perform matching in sequence and try to find the most matching nodes. When determining whether the two nodes match, we adopt the post-order traversal method, hoping to detect a mismatch as soon as possible and reduce the execution time of plan matching. Then, we rewrite the plan (including such things as further Filter, Project, Sort, and the Aggregate operations on the cached data) based on the matching results, to make it fully equivalent to the matching node. Then, we update the reference binding of the logical plan node and seamlessly replace it into the logical plan, so that the final rewritten plan can be easily obtained.

The Join operations in Spark are all binary operations, and the actual Join order may vary greatly according to some policies. Therefore, special processing is required for Join nodes. We first process the logical plan, and rearrange the Join according to the Join order of the cache plan. This step is performed before tree matching to avoid wasting time on repeated Join rearrangements. The rearranged Join can be matched with a greater probability.

To achieve the universality of cache, we have introduced the Record Preserve concept based on the characteristics of the Star data model. This is similar to the relation between the Primary Key (PK) and the Foreign Key (FK) in traditional databases. When a table with a PK and a table pointed to by a non-null FK are joined on a FK, the number of records will not change, records will not expand, and records will not be lost. The semantics of PK and FK are often missing in big data processing frameworks. We have introduced a new DDL to allow users to customize the relation of Record Preserve Join. When you define "A Inner Join B" as the Record Preserve for Table A, we will also match the relation between "A Inner Join B" and Table A. With the help of PK and FK, the number of successful matches can be greatly increased, and a Relational Cache can be shared by more queries that seem to differ greatly, which can effectively save additional storage and precomputing costs for users.

Generally, Aggregate matching is relatively simple. The Grouping Set operation supported by Spark will build an Expand logical plan node, which is equivalent to converting one record into multiple records marked with Grouping IDs. The child nodes of Expand are shared by all Grouping cases, so here we only match the child nodes once, and then match the Grouping attribute and Aggregate attribute respectively above. It is mainly to verify that all the attributes or aggregate functions required for the target aggregation can be computed from the aggregation result corresponding to a certain Grouping ID. For example, the coarse-grained Sum can perform the second Sum for the fine-grained Sum, the coarse-grained Count should also perform the second Sum for the fine-grained Count, and the coarse-grained Average cannot be restored only from the fine-grained Average.

After the matched logical plan is found, it's time to rewrite the logical plan. For logical plans that do not require secondary aggregation, you can directly choose the required columns from the Relation of the cached data based on the schema of the cached data, and perform subsequent operations after filtering based on the conditions. For logical plans that still need secondary aggregation, you need to keep all the columns to be used external, the columns required for aggregation, and the data required by the aggregate function when choosing the required columns. The aggregate function that performs secondary aggregation needs to be rewritten according to the actual situation, to ensure that it can use the results that have been initially aggregated in the Relational Cache. It is necessary to determine whether secondary aggregation can be performed based on the semantics of aggregation. If it is an aggregation of Grouping Set, you need to choose the correct Grouping ID for filtering before the secondary aggregation. After secondary aggregation, the steps are basically the same as general rewriting. You only need to replace them into the target plan.

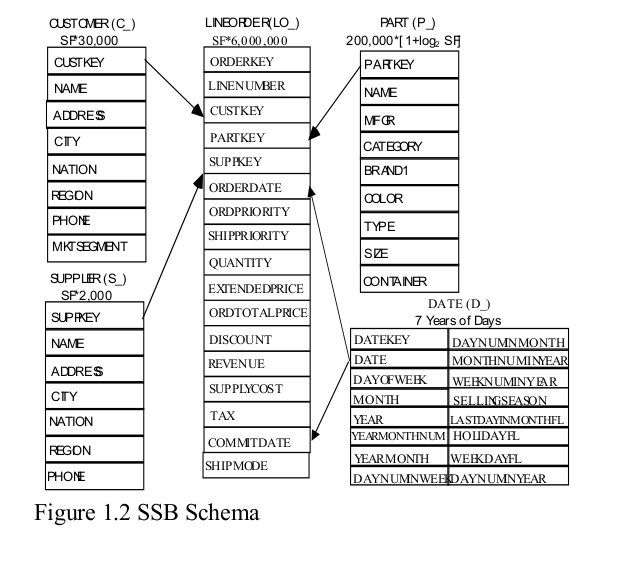

Here is an example to illustrate the result of rewriting the logical plan. Star Schema Benchmark is a standard benchmark for data analysis of Star models, and its structure definition is shown in the following figure:

The SQL statement for building a Relational Cache is as follows:

SELECT GROUPING_ID() AS grouping_id, lo_discount, s_city, c_city, p_category, d_year, lo_quantity, d_weeknuminyear, s_nation, s_region, p_mfgr, c_region, d_yearmonth, p_brand, c_nation, d_yearmonthnum, SUM(lo_revenue) AS lo_revenue_SUM, SUM(lo_supplycost) AS lo_supplycost_SUM, SUM(V_REVENUE) AS V_REVENUE_SUM

FROM supplier, p_lineorder, dates, customer, part

WHERE lo_orderdate = d_datekey AND lo_custkey = c_custkey AND lo_suppkey = s_suppkey AND lo_partkey = p_partkey

GROUP BY lo_discount, s_city, c_city, p_category, d_year, lo_quantity, d_weeknuminyear, s_nation, s_region, p_mfgr, c_region, d_yearmonth, p_brand, c_nation, d_yearmonthnum GROUPING SETS ((d_year, d_weeknuminyear, lo_discount, lo_quantity), (d_year, lo_discount, lo_quantity), (lo_discount, lo_quantity), (d_yearmonthnum, lo_discount, lo_quantity), (d_year, p_category, p_brand, s_region), (d_year, p_category, s_region), (d_year, s_region), (d_year, s_region, c_region, s_nation, c_nation), (d_year, s_city, c_city, s_nation, c_nation), (d_year, s_city, c_city), (d_year, d_yearmonth, s_city, c_city), (d_year, s_region, c_region, c_nation, p_mfgr), (d_year, s_region, s_nation, c_region, p_mfgr, p_category), (d_year, s_nation, s_city, c_region, p_brand, p_category, p_brand), (d_year, s_nation, s_city, c_region, p_brand, p_category), (d_year, s_nation, s_city, c_region, p_category, p_brand))You should choose a query as an example. The Specific query statement is as follows:

select c_city, s_city, d_year, sum(lo_revenue) as revenue

from customer, lineorder, supplier, dates

where lo_custkey = c_custkey

and lo_suppkey = s_suppkey

and lo_orderdate = d_datekey

and c_nation = 'UNITED KINGDOM'

and (c_city='UNITED KI1' or c_city='UNITED KI5')

and (s_city='UNITED KI1' or s_city='UNITED KI5')

and s_nation = 'UNITED KINGDOM'

and d_yearmonth = 'Dec1997'

group by c_city, s_city, d_year

order by d_year asc, revenue descThe original logical plan is as follows:

Sort [d_year#39 ASC NULLS FIRST, revenue#0L DESC NULLS LAST], true

+- Aggregate [c_city#6, s_city#31, d_year#39], [c_city#6, s_city#31, d_year#39, sum(lo_revenue#23L) AS revenue#0L]

+- Project [c_city#6, lo_revenue#23L, s_city#31, d_year#39]

+- Join Inner, (lo_orderdate#16 = d_datekey#35)

:- Project [c_city#6, lo_orderdate#16, lo_revenue#23L, s_city#31]

: +- Join Inner, (lo_suppkey#15 = s_suppkey#28)

: :- Project [c_city#6, lo_suppkey#15, lo_orderdate#16, lo_revenue#23L]

: : +- Join Inner, (lo_custkey#13 = c_custkey#3)

: : :- Project [c_custkey#3, c_city#6]

: : : +- Filter (((isnotnull(c_nation#7) && (c_nation#7 = UNITED KINGDOM)) && ((c_city#6 = UNITED KI1) || (c_city#6 = UNITED KI5))) && isnotnull(c_custkey#3))

: : : +- HiveTableRelation `ssb`.`customer`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [c_custkey#3, c_name#4, c_address#5, c_city#6, c_nation#7, c_region#8, c_phone#9, c_mktsegment#10]

: : +- Project [lo_custkey#13, lo_suppkey#15, lo_orderdate#16, lo_revenue#23L]

: : +- Filter ((isnotnull(lo_custkey#13) && isnotnull(lo_suppkey#15)) && isnotnull(lo_orderdate#16))

: : +- HiveTableRelation `ssb`.`lineorder`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [lo_orderkey#11L, lo_linenumber#12L, lo_custkey#13, lo_partkey#14, lo_suppkey#15, lo_orderdate#16, lo_orderpriotity#17, lo_shippriotity#18, lo_quantity#19L, lo_extendedprice#20L, lo_ordtotalprice#21L, lo_discount#22L, lo_revenue#23L, lo_supplycost#24L, lo_tax#25L, lo_commitdate#26, lo_shipmode#27]

: +- Project [s_suppkey#28, s_city#31]

: +- Filter (((isnotnull(s_nation#32) && ((s_city#31 = UNITED KI1) || (s_city#31 = UNITED KI5))) && (s_nation#32 = UNITED KINGDOM)) && isnotnull(s_suppkey#28))

: +- HiveTableRelation `ssb`.`supplier`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [s_suppkey#28, s_name#29, s_address#30, s_city#31, s_nation#32, s_region#33, s_phone#34]

+- Project [d_datekey#35, d_year#39]

+- Filter ((isnotnull(d_yearmonth#41) && (d_yearmonth#41 = Dec1997)) && isnotnull(d_datekey#35))

+- HiveTableRelation `ssb`.`dates`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [d_datekey#35, d_date#36, d_dayofweek#37, d_month#38, d_year#39, d_yearmonthnum#40, d_yearmonth#41, d_daynuminweek#42, d_daynuminmonth#43, d_daynuminyear#44, d_monthnuminyear#45, d_weeknuminyear#46, d_sellingseason#47, d_lastdayinweekfl#48, d_lastdayinmonthfl#49, d_holidayfl#50, d_weekdayfl#51]The rewritten logical plan is as follows:

Sort [d_year#47 ASC NULLS FIRST, revenue#558L DESC NULLS LAST], true

+- Aggregate [c_city#22, s_city#39, d_year#47], [c_city#22, s_city#39, d_year#47, sum(cast(lo_revenue_SUM#773L as bigint)) AS revenue#558L]

+- Filter ((((((((isnotnull(s_nation#40) && ((s_city#39 = UNITED KI1) || (s_city#39 = UNITED KI5))) && (s_nation#40 = UNITED KINGDOM)) && isnotnull(d_yearmonth#49)) && (d_yearmonth#49 = Dec1997)) && isnotnull(c_nation#23)) && (c_nation#23 = UNITED KINGDOM)) && ((c_city#22 = UNITED KI1) || (c_city#22 = UNITED KI5))) && (grouping_id#662 = 19322))

+- Relation[grouping_id#662,lo_discount#759,s_city#39,c_city#22,p_category#762,lo_quantity#763,d_weeknuminyear#764,s_nation#40,s_region#766,p_mfgr#767,c_region#768,p_brand1#769,c_nation#23,d_yearmonthnum#771,d_yearmonth#49,lo_revenue_SUM#773L,lo_supplycost_SUM#774L,V_REVENUE_SUM#775L,d_year#47] parquetFrom this, we can see that the execution plan is greatly simplified, and we can respond to users' hit queries in sub-seconds.

During the actual test, we found that the matching time increases linearly when multiple Relational Caches exist. Since we store Cache SQL statements in Metastore, the time taken to fetch SQL statements and re-analyze them should not be underestimated, which leads to a significant increase in the matching process, and deviates from our original intention of pursuing a sub-second level response. Therefore, we have built a logical plan cache in Spark to cache the analyzed Relational Cache plan in the memory. For each Relational Cache, only one copy is cached, and the plan itself occupies limited space, so we can cache the optimized logical plans of almost all the Relational Caches, so that after the first query, queries will no longer suffer from latency due to fetching SQL statements and re-analyze them. After such optimization, the matching time is greatly reduced to the order of 100 ms.

The Relational Cache provides a cache-based optimization solution, which enables Spark SQL to be used in real-time queries to meet the demand of users for second-level queries on massive data. By dynamically rewriting user queries, the cache utilization can be greatly improved, the cache hit scenarios can be extended, and the query performance can be effectively improved. The existing solution also has many aspects to be optimized. For example, the time complexity of repeated backtracking traversal is high, and it is better to update and maintain the matching information within the logical plan node. Considering the intrusion to Spark, we have chosen the existing solution for the moment. In the future, we will further optimize the logical plan rewriting process based on actual usage. In addition, for rewriting a logical plan, this also involves different rewriting methods based on different Relational Cache plans. As for how to choose the best rewriting solution according to the execution cost in these rewriting results, this will be revealed in subsequent articles.

Alibaba EMR - August 28, 2019

Alibaba EMR - December 2, 2019

Alibaba Clouder - July 21, 2020

Alibaba EMR - March 16, 2021

Alibaba EMR - April 21, 2022

Alibaba EMR - May 20, 2022

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More ApsaraVideo Media Processing

ApsaraVideo Media Processing

Transcode multimedia data into media files in various resolutions, bitrates, and formats that are suitable for playback on PCs, TVs, and mobile devices.

Learn More EventBridge

EventBridge

EventBridge is a serverless event bus service that connects to Alibaba Cloud services, custom applications, and SaaS applications as a centralized hub.

Learn More