By Keqing

The seventh article of this series (Understand the Implementation Principles of Database Index) introduced the index build process of OceanBase and walked through the code related to index construction.

This eighth article explains how transaction logs are submitted and played back. OceanBase logs (clogs) are similar to the REDO logs of traditional databases. The clog module persists transaction data during transaction commit and implements a distributed consistency protocol based on Multi_Paxos.

Compared with the traditional RDS log module, OceanBase's Log Service faces the following challenges:

Multi_Paxos replaces the traditional primary/secondary synchronization mechanism to achieve high availability of the system and high reliability of data. The log module of OceanBase implements a standard Multi_Paxos to ensure that all committed data can be recovered as long as a majority of replicas have not failed permanently.

It also implements out-of-order log commit so that transactions do not depend on one another. The following introduction to OceanBase's implementation of Multi_Paxos assumes that readers already understand the core idea of Multi_Paxos. To learn more about Multi_Paxos, see the question and answer section of the community.
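To make the majority rule concrete, here is a minimal C++ sketch of a leader-side sliding window. It is not OceanBase code: `SlidingWindow`, `LogTask`, `submit`, `receive_ack`, and `in_flight` are hypothetical names, while `log_task`, `ack_list`, `flush_cb`, and `on_success` are borrowed in simplified form from the walk-through later in this article. The sketch assumes that the leader's own disk flush counts as one vote and that logs leave the window strictly in `log_id` order, even when acknowledgements arrive out of order.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <functional>
#include <set>
#include <utility>

// Hypothetical, simplified stand-in for a clog log_task (one in-flight log).
struct LogTask {
  std::uint64_t log_id = 0;
  bool local_flushed = false;  // set when the leader's own disk write finishes
  std::set<int> ack_list;      // ids of followers that reported persistence
  std::function<void(std::uint64_t)> on_success;  // callback to the submitter
};

class SlidingWindow {
 public:
  explicit SlidingWindow(int replica_count) : replica_count_(replica_count) {
    assert(replica_count > 0);
  }

  // Assign the next log_id and place a new task at the tail of the window.
  std::uint64_t submit(std::function<void(std::uint64_t)> on_success) {
    LogTask task;
    task.log_id = next_log_id_++;
    task.on_success = std::move(on_success);
    window_.push_back(std::move(task));
    return window_.back().log_id;
  }

  // Local disk write finished: mark the task and try to slide.
  void flush_cb(std::uint64_t log_id) {
    if (LogTask* task = find(log_id)) {
      task->local_flushed = true;
      slide();
    }
  }

  // A follower acknowledged persistence: record it and try to slide.
  void receive_ack(std::uint64_t log_id, int follower_id) {
    if (LogTask* task = find(log_id)) {
      task->ack_list.insert(follower_id);
      slide();
    }
  }

  std::size_t in_flight() const { return window_.size(); }

 private:
  bool is_majority(const LogTask& task) const {
    const int votes =
        static_cast<int>(task.ack_list.size()) + (task.local_flushed ? 1 : 0);
    return votes > replica_count_ / 2;
  }

  // Log ids in the window are contiguous, so the offset from the head
  // is the index into the deque.
  LogTask* find(std::uint64_t log_id) {
    if (window_.empty() || log_id < window_.front().log_id) return nullptr;
    const std::uint64_t offset = log_id - window_.front().log_id;
    return offset < window_.size() ? &window_[offset] : nullptr;
  }

  // Slide out confirmed logs from the head only, so callbacks fire in
  // log_id order even if a later log reached a majority first.
  void slide() {
    while (!window_.empty() && is_majority(window_.front())) {
      LogTask done = std::move(window_.front());
      window_.pop_front();
      if (done.on_success) done.on_success(done.log_id);
    }
  }

  int replica_count_;
  std::uint64_t next_log_id_ = 1;
  std::deque<LogTask> window_;
};
```

With three replicas, a log that is flushed locally and acknowledged by one follower has two of three votes and can slide out. If a later log reaches its majority first, it stays in the window until every log ahead of it has been confirmed.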

Let's take a transaction log of a partition as an example. The normal process is shown below:

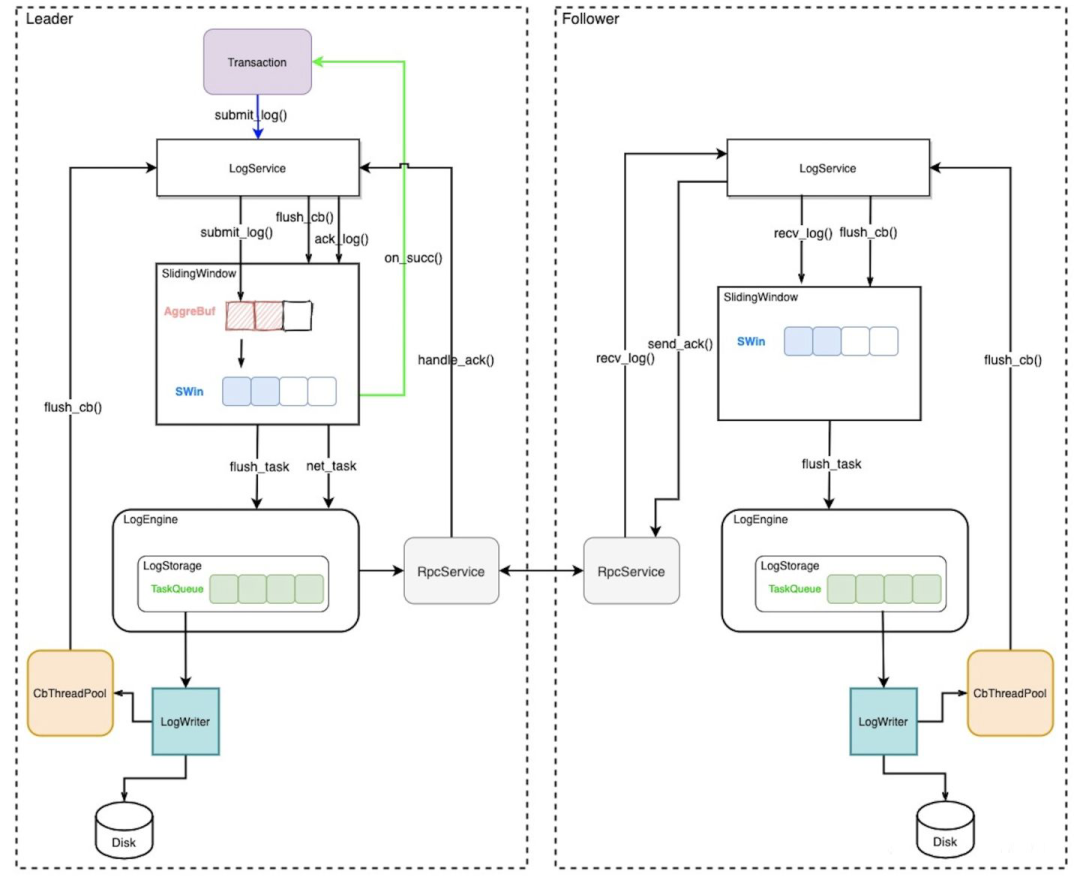

1. The transaction layer calls the log_service->submit_log() interface to commit a log, passing an on_succ_cb callback pointer.
2. clog assigns a log_id and a submit_timestamp, then submits the log to the sliding window, which generates a new log_task. The leader then writes the log to its local disk and synchronizes it to the followers through RPC.
3. When the local disk write completes, log_service->flush_cb() is called to update the log_task status and mark the log as locally persisted.
4. When a follower has written the log to disk, it returns an ACK to the leader.
5. The leader receives the ACK and updates the ack_list of the log_task.
6. The leader counts the majority in steps 4 and 5. Once a majority is reached, the leader calls the log_task->on_success callbacks in order to notify the transactions, sends a confirmed_info message to the followers, and slides the log out of the sliding window.
7. On receiving the confirmed_info message, a follower tries to slide the log out of its sliding window, and commits and plays back the log as part of the slide-out operation.

On the follower, committed logs are played back in real time. The playback process is shown below:

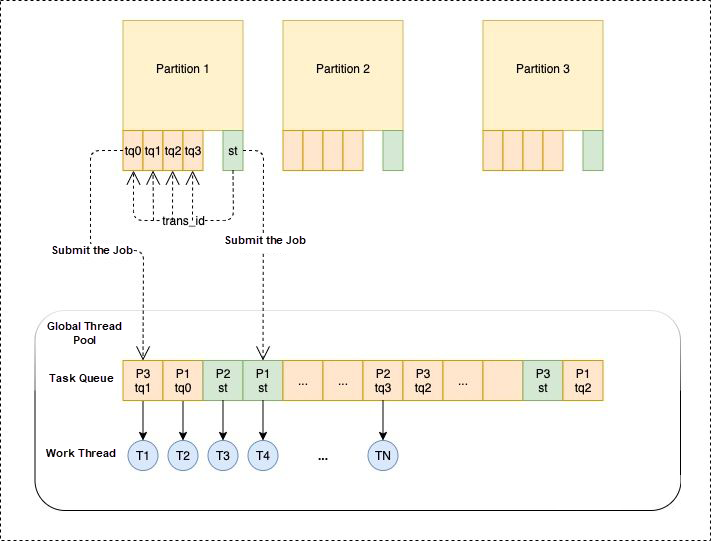

1. When the follower slides a log out of the window, the value in the submit_log_task, which records the playback point of each partition, is advanced. This task is asynchronously submitted to a global thread pool for consumption.

2. When the global thread pool consumes the submit_log_task of a partition, it reads all logs that are waiting to be played back and distributes them to the partition's four playback task queues. Logs are assigned mainly by hashing the trans_id, which ensures that all logs of the same transaction go to the same queue. A queue that receives a new task asynchronously submits itself (the task_queue) as a task to the global thread pool mentioned in step 1.

3. When the global thread pool consumes a task_queue, it traverses all subtasks in the queue in order and executes the application logic that corresponds to each log type. At this point, the log has been synchronized to the follower and can be read there.
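The hash-based distribution described above can be sketched as follows. This is an illustration under stated assumptions, not the OceanBase implementation: `ReplayTask`, `ReplayQueues`, `queue_index`, `dispatch`, and `kReplayQueueCount` are hypothetical names, and `std::hash` stands in for whatever hash function the real code applies to trans_id. Only the queue count of four and the hash-by-trans_id rule come from the article.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

// Hypothetical playback unit: one log entry that belongs to one transaction.
struct ReplayTask {
  std::uint64_t trans_id;
  std::uint64_t log_id;
};

// The article describes four playback task queues per partition.
constexpr std::size_t kReplayQueueCount = 4;

using ReplayQueues = std::array<std::vector<ReplayTask>, kReplayQueueCount>;

// Map a transaction to a queue. The same trans_id always yields the same index.
inline std::size_t queue_index(std::uint64_t trans_id) {
  return std::hash<std::uint64_t>{}(trans_id) % kReplayQueueCount;
}

// Distribute logs in arrival order, so the logs of one transaction stay in
// their original relative order inside a single queue.
inline ReplayQueues dispatch(const std::vector<ReplayTask>& logs) {
  ReplayQueues queues;
  for (const ReplayTask& log : logs) {
    const std::size_t index = queue_index(log.trans_id);
    assert(index < queues.size());
    queues[index].push_back(log);
  }
  return queues;
}
```

Because each transaction's logs land in exactly one queue, the queues can be consumed in parallel while the logs of any single transaction are still applied in order.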

After reading this article, you should have a working understanding of how transaction logs are submitted and played back. The next article in this series covers the OceanBase storage layer code. Stay tuned!
