Enterprises typically derive data from logs. Precise operations will be facilitated if they can extract valuable information from logs to profile users on a platform or website. Yi Xiu, a technical expert at Alibaba Cloud, shared some best practices of Alibaba Cloud in log processing and data warehouse (DW) creation. His talked about DW development and focused on the five steps for profiling users. The following article is based on Yi Xiu's presentation.

MaxCompute is an all-in-one data warehousing solution that supports processing and storing petabytes of data. How can we use MaxCompute to create a DW, cleanse and process data, and extract valuable information? MaxCompute 2.0 provides some new features, such as storing unstructured data in OSS. In earlier versions of MaxCompute, you need to use data integration tools or write some tasks to synchronize unstructured data to MaxCompute periodically or at once. This increases both the development and O&M costs. In MaxCompute 2.0, you can create external tables to connect to data sources and process data directly.

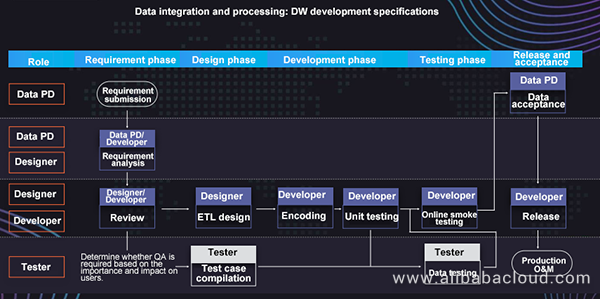

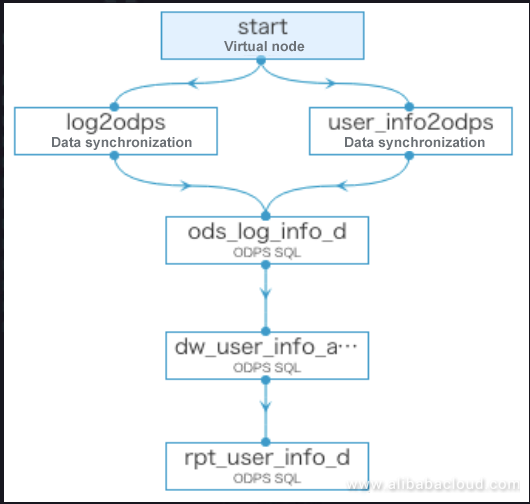

The preceding figure shows the DW development process. First, valuable information is extracted from log data and basic user information. Then, data developers need to perform extract, transform, and load (ETL) design, encode and configure data, and submit tasks online. DataWorks can be used to complete online data O&M.

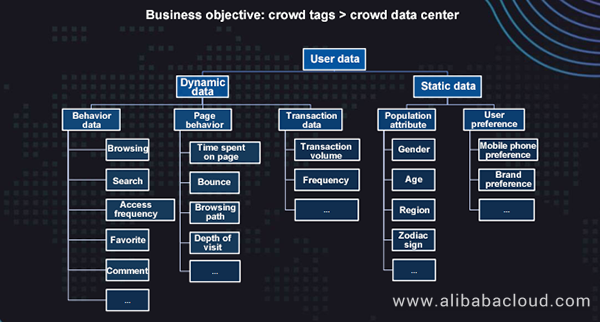

The preceding figure shows a trace diagram used to profile users. User data usually consists of dynamic data and static data. Dynamic data includes user behavior data, page behavior data, and transaction data. For example, the click-through and browsing data of a user on your website can be categorized as dynamic data. When we talk about the depth of visit, the number of pages a user accesses and the amount of time the user spends to view your website both belong to dynamic data. The bounce rate in the entire process from registration to data development is another example of dynamic data. Static data includes users' attributes, such as the name, zodiac sign, age, residence, and client used for accessing your website. Static data may reflect a user's client preference information.

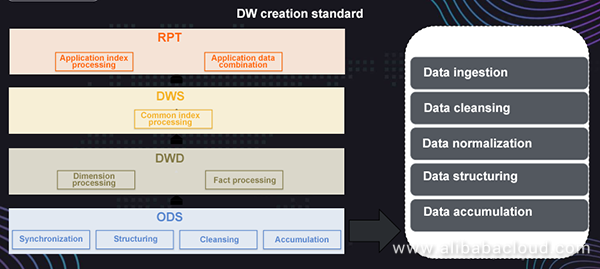

DW layering must be implemented before DW creation. The operational data store (ODS) layer is the underlying layer. It collects raw data to MaxCompute and processes certain unstructured data into structured data, including making the data normalized. The data warehouse detail (DWD) layer contains detailed data. At this layer, data is cleansed, exchanged, and packaged to generate value. At the upper layers, common indexes and application indexes, such as page view (PV), unique visitor (UV), and device access, are processed based on the data.

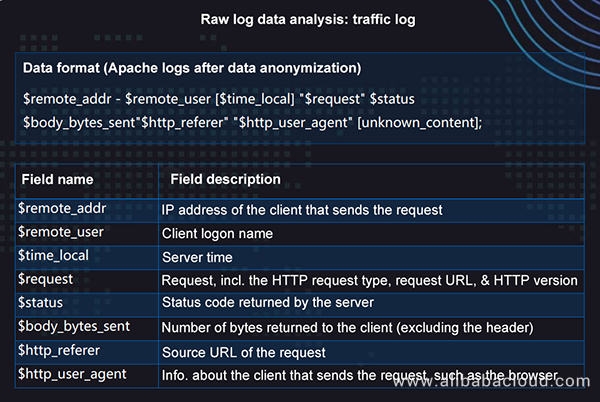

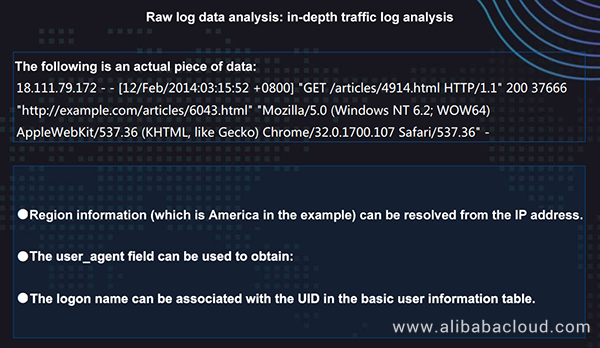

What kind of information can we obtain from fields in raw data? A log contains the IP address of a user who accesses a website or platform and the user's logon name and device information. The preceding figure shows the actual log data of a user, from which you can obtain the user's IP address, access time, access page, browser type, and browser version. For example, the user may use the browser of a mobile phone to gain access. With such information in hand, you can extract more valuable information. For example, you can deduce the city where the user lives through the IP address. Also, you can obtain the device operating system information through the user_agent field. Then, you can structure and abstract the data.

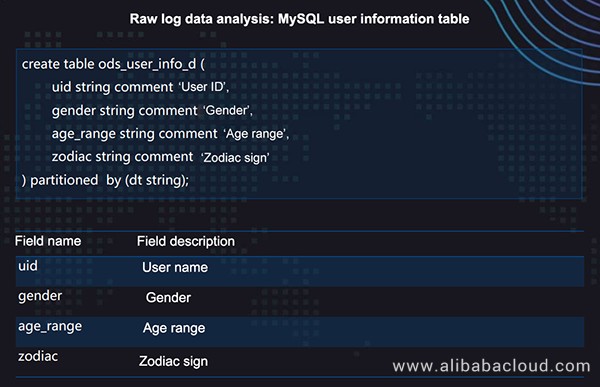

A user information table is a structured two-dimensional table that includes a user's gender, age, zodiac sign, and other information.

With the existing data shown in the red boxes in the preceding figure, you can obtain the website browsing behavior of users, such as the PV and UV of the entire website, most frequently visited page, and access time.

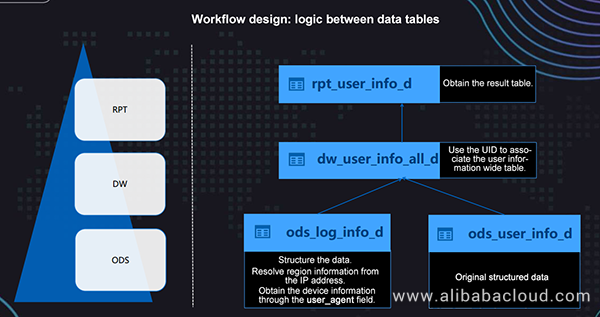

In the data development phase, the logic shown in the preceding figure must be implemented. The ods_log_info_d table on the left stores the log information, which must be structured to resolve users' IP addresses to specific regions. The basic user information in the ods_user_info_d table on the right is structured. The two tables are associated by UID and joined to generate a wide table at the DW layer. Data in the wide table is synchronized to MaxCompute without any modifications. Based on the wide table, the RPT layer generates result tables containing users' PV data. Then, you can obtain the average PV and the optimal depth of visit on the entire website.

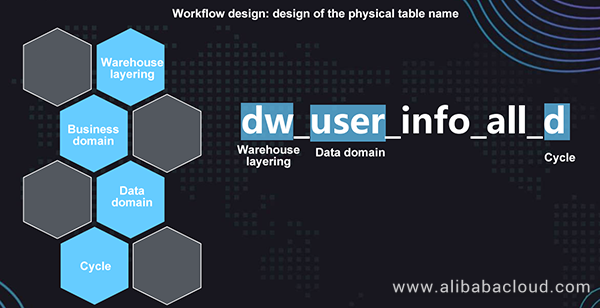

How can we create a more comprehensive table? All workflow tasks, node tasks, and tables have their respective naming rule. If the data volume is large and a great number of tables exist, the naming rule can be used to distinguish the tables with different functions. Typically, the table name consists of the database warehouse layering information, business domain, data domain, and data analysis frequency. As shown in the preceding figure, the dw_user_info_all_d table belongs to the DW layer and records users' basic information. Data in this table is updated on a daily basis. The table name clarifies the business value of the table so that dependent engineers can quickly obtain information about the table, for example, the update frequency and content of the table.

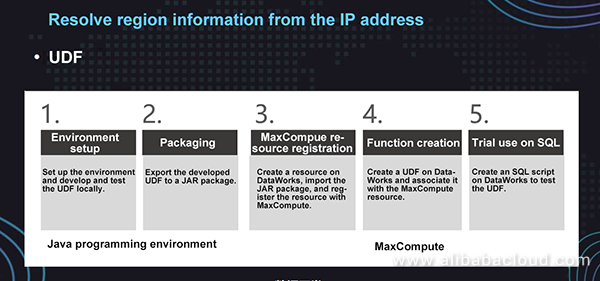

MaxCompute provides some common functions. However, the function used to convert an uppercase letter to a lowercase letter or resolve region information from an IP address is not exposed to external users in the public cloud. Therefore, user-defined functions (UDFs) are required. In most cases, Java UDFs are used. Then, you can use DataWorks to register the functions and resource packages with MaxCompute and then parse the functions.

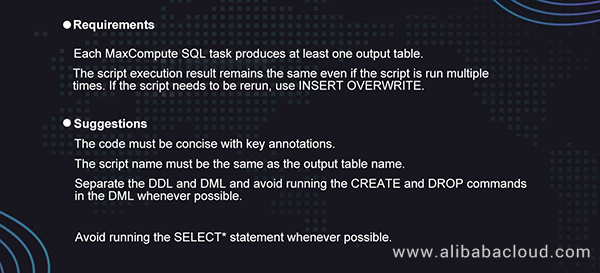

Only one table can be generated on each node. If multiple tables exist on one node and the processing logic of a table is incorrect, the task fails. In this case, you may need to run the entire task again. In addition, the name of the output table must be the same as that of the node. In this way, you can quickly locate the node on which data of the output table is not generated and the tasks that fail in the output O&M process.

The INSERT INTO and INSERT OVERWRITE statements may be involved in MaxCompute. For example, when you test a data task, you may write data into your database, resulting in duplicated data or dirty data. If you run INSERT OVERWRITE to rerun a task or manually pull a failed task, the original table data is cleared. If the original table is a partition table, the table data is cleared, and data is written into partitions in batches. This method eliminates dirty data. Other suggestions include adding annotations to code and explaining the entire SQL logic.

During the operation, we recommend that you avoid running SELECT* whenever possible because this operation increases computing costs. MaxCompute 2.0 has provided the full table scanning function. However, we recommend that you scan tables by partition to save computing costs. Last month we visited a customer who has spent over USD 400 per month on the platform. The customer did not create partitions before scanning the table. The computing costs for scanning the entire table are high.

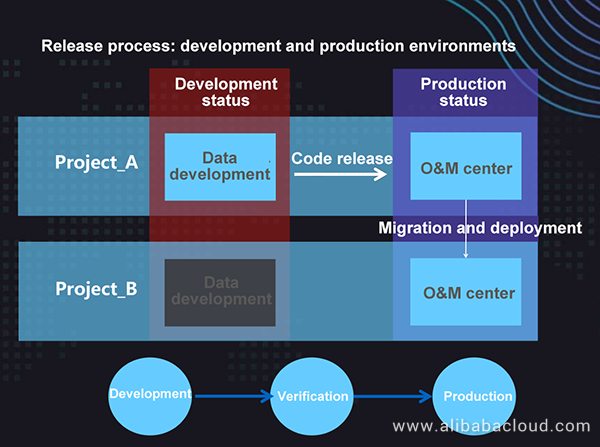

In addition to public cloud services, there are some private services in the public cloud. For example, in the security and finance industries, MaxCompute needs to be deployed locally. In the public cloud, one or two projects are created, including the development project and the production project. The data development team develops and debugs data tasks and releases them in the production environment, where O&M is performed by day, week, or month based on the configured scheduling information. For example, SGCC (Zhejiang) poses a high requirement on the data development process and creates multiple projects, including the projects of development, test, pre-release, and production. In this case, the entire code environment must be operated and maintained in detail. When creating a task, you can debug it in the project configuration. For example, you can disable scheduling in a development project. In this way, after you submit the task, it will not be automatically scheduled every day. After you release the task in a production project, the task will be synchronized each day based on your configuration.

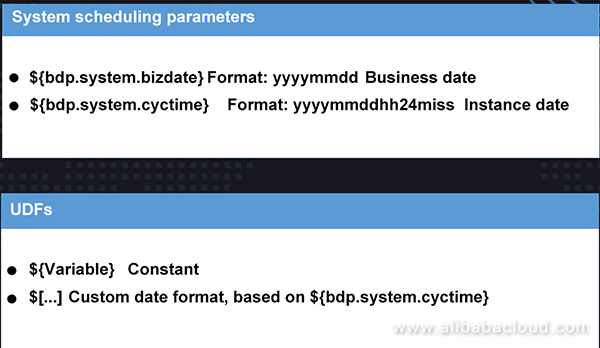

Scheduling parameters can be used to write data into a new partition. For example, you can write data generated on the 24th day of a month to a partition and write data generated on the 25th day of the month to another partition. When you set these system parameters to schedule the system data, the date is automatically switched so that you do not need to manually create partitions.

As shown in the preceding figure, three tables are created and named based on their layers, for example, the ODS layer and DW layer. To synchronize data to MaxCompute, you must create a target table to store the data. Then, you must create a workflow node and a UDF, configure the SQL node, and perform testing.

To learn more about Alibaba Cloud MaxCompute, visit www.alibabacloud.com/product/maxcompute

137 posts | 21 followers

FollowAlibaba Cloud MaxCompute - January 22, 2021

Alibaba Cloud MaxCompute - March 24, 2021

Alibaba Cloud Community - March 4, 2022

ApsaraDB - December 17, 2024

Alibaba Container Service - July 25, 2025

Alibaba Cloud Native - April 16, 2024

137 posts | 21 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn MoreMore Posts by Alibaba Cloud MaxCompute