By Longji

This article provides a technical overview of the cloud-native architecture of RocketMQ and how it supports various scenarios using a unified architecture.

The article is divided into three main parts. Firstly, it introduces the core concepts and architecture of RocketMQ 5.0. Then, it discusses the management and control links, data links, and client-server interactions of RocketMQ from a cluster perspective. Finally, it explores the storage system, which is the most important module of the message queue (MQ), and explains how RocketMQ achieves data storage, high availability, and further improves competitiveness using cloud-native storage.

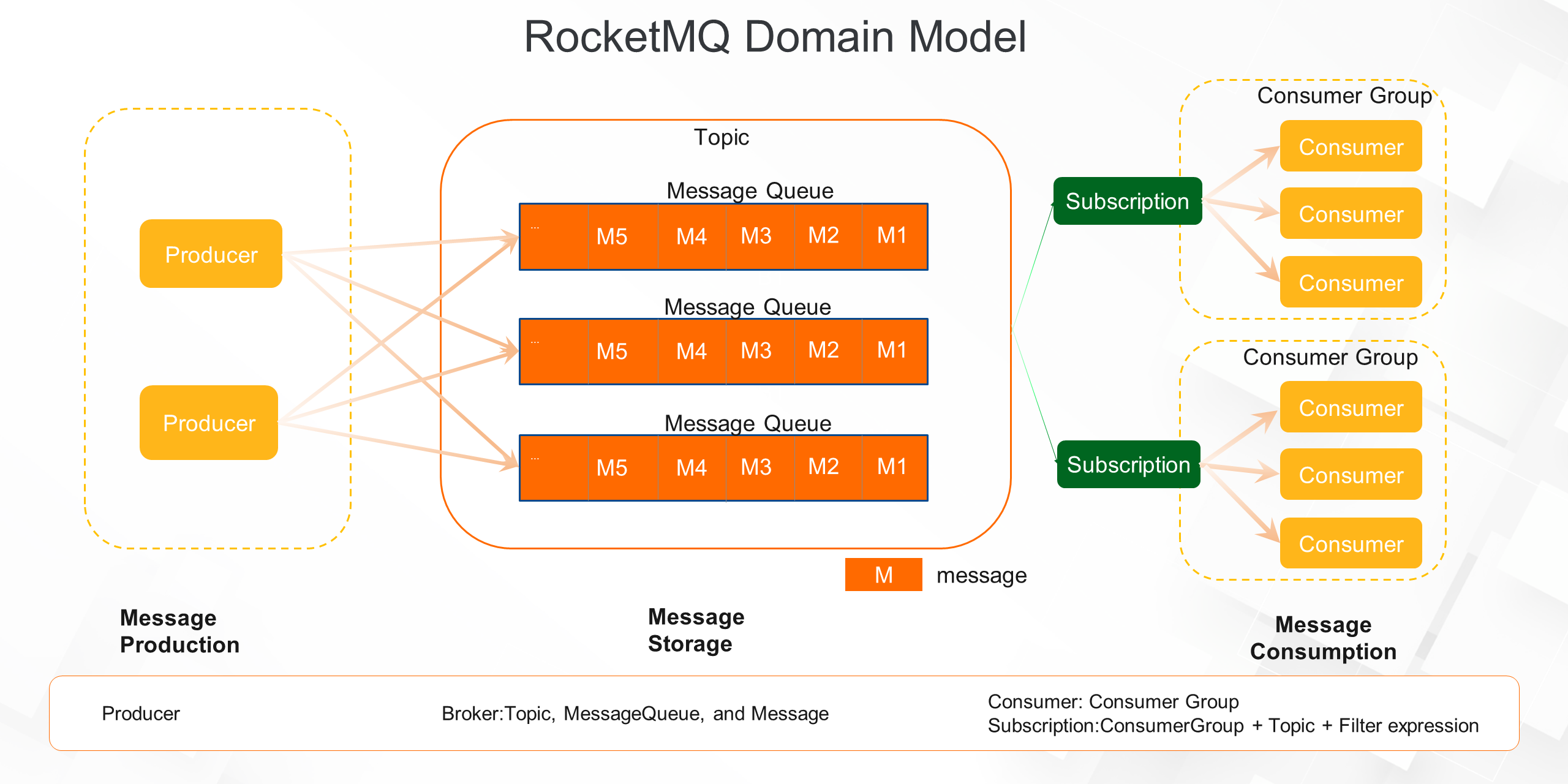

Before diving into the architecture of RocketMQ, let's first understand the core concepts and domain models from a user's perspective. The following diagram illustrates the sequence of message forwarding.

In RocketMQ, a message producer corresponds to an upstream application of a business system. After a business action is triggered, the producer sends a message to a broker. The broker is the core of the message system's data link. Its responsibilities include receiving messages, storing messages, and maintaining message and consumer status. Multiple brokers form a message service cluster, which collectively serves one or more topics.

Producers generate messages and send them to brokers. Messages act as a means of business communication. Each message contains a message ID, message topic, message body content, message attributes, and message business key. Each message belongs to a topic and represents the semantics of a specific business.

For example, at Alibaba, transaction messages are categorized under the topic "Trade" and shopping cart messages under the topic "Cart". The producer application sends messages to the respective topics. Each topic is further divided into MessageQueues, which are the units of load balancing and data sharding for the message service. A topic can have one or more MessageQueues distributed across different brokers.

The overall flow is that producers send messages, brokers store them, and consumers consume them. Consumers generally correspond to downstream applications of a business system, and all instances of the same consumer application share one consumer group. A consumer establishes a subscription relationship with a topic. This relationship is defined by a consumer group, a topic, and a filter expression. Messages that match the subscription relationship are consumed by the corresponding consumer cluster.
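To make the domain model concrete, here is a minimal sketch of a message and a subscription relationship. The class and field names are illustrative assumptions, not the RocketMQ SDK API, and the filter expression is simplified to a set of tags.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    message_id: str
    topic: str
    body: bytes
    tag: str = ""  # hypothetical attribute used for filtering
    keys: list[str] = field(default_factory=list)  # business keys


@dataclass(frozen=True)
class Subscription:
    consumer_group: str
    topic: str
    # Simplified filter expression: an empty set accepts every tag.
    filter_tags: frozenset[str] = frozenset()

    def matches(self, msg: Message) -> bool:
        """A message is delivered only if topic and filter both match."""
        if msg.topic != self.topic:
            return False
        return not self.filter_tags or msg.tag in self.filter_tags


sub = Subscription("order-service", "Trade", frozenset({"paid"}))
paid = Message("m1", "Trade", b"{}", tag="paid")
cart = Message("m2", "Cart", b"{}", tag="paid")
```

In this sketch, `sub.matches(paid)` is true, while `sub.matches(cart)` is false because the topic differs.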

Now, let's delve deeper into the technical implementation of RocketMQ.

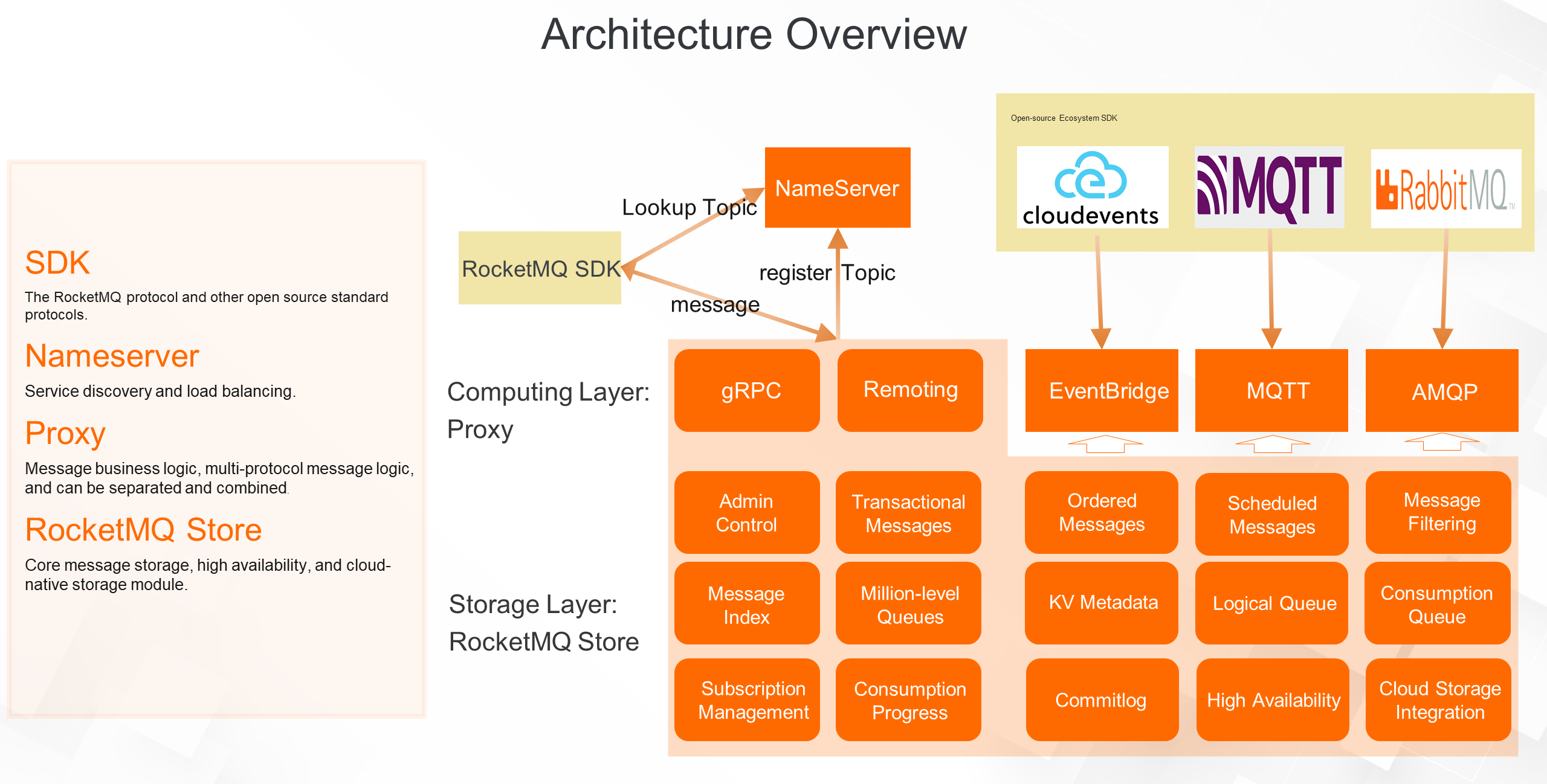

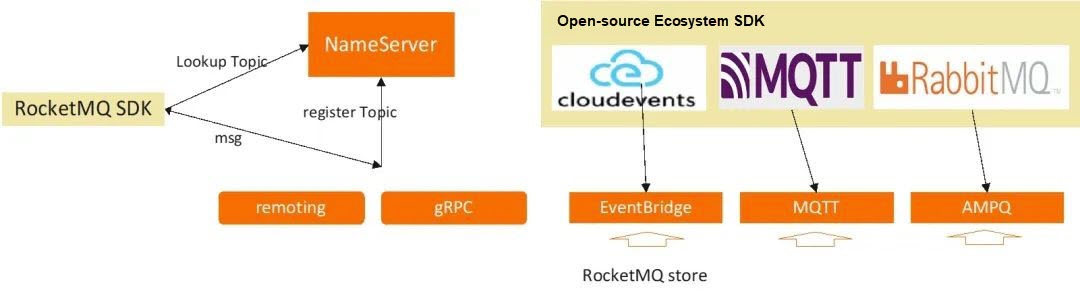

The architecture diagram of RocketMQ 5.0 is shown below. The diagram consists of four layers: SDK, NameServer, Proxy, and RocketMQ Store.

The SDK layer includes the RocketMQ SDK, which exposes the RocketMQ domain model to users. It also includes industry-standard SDKs for specific scenarios. For event-driven scenarios, RocketMQ 5.0 supports CloudEvents SDKs. For IoT scenarios, RocketMQ supports SDKs that speak the MQTT protocol. To facilitate the migration of traditional applications to RocketMQ, the AMQP protocol is also supported and will be open-sourced in the future.

The NameServer layer is responsible for service discovery and load balancing. It allows clients to obtain data shards and service addresses of topics and connect to the message server for sending and receiving messages.

The message service consists of the Proxy layer for computing and the RocketMQ Store layer for storage. RocketMQ 5.0 adopts an architecture that separates storage and computing. This separation is primarily one of modules and responsibilities: the Proxy and RocketMQ Store layers can be deployed together or separately, depending on the business scenario.

The Proxy layer carries the upper-layer business logic of messages, especially for supporting multiple scenarios and protocols, such as the implementation logic and protocol conversion of CloudEvents, MQTT, and AMQP domain models. Depending on the business load, the Proxy layer can also be separately deployed to provide independent scalability. For example, in IoT scenarios, independent deployment allows for auto scaling to handle a large number of IoT device connections and decouples it from storage traffic scaling.

The RocketMQ Store layer is responsible for core message storage, including the commitlog-based storage engine, search indexes, multi-replica technology, and integration with cloud storage. All state of the message system is pushed down to the RocketMQ Store layer, so the components above it are stateless.

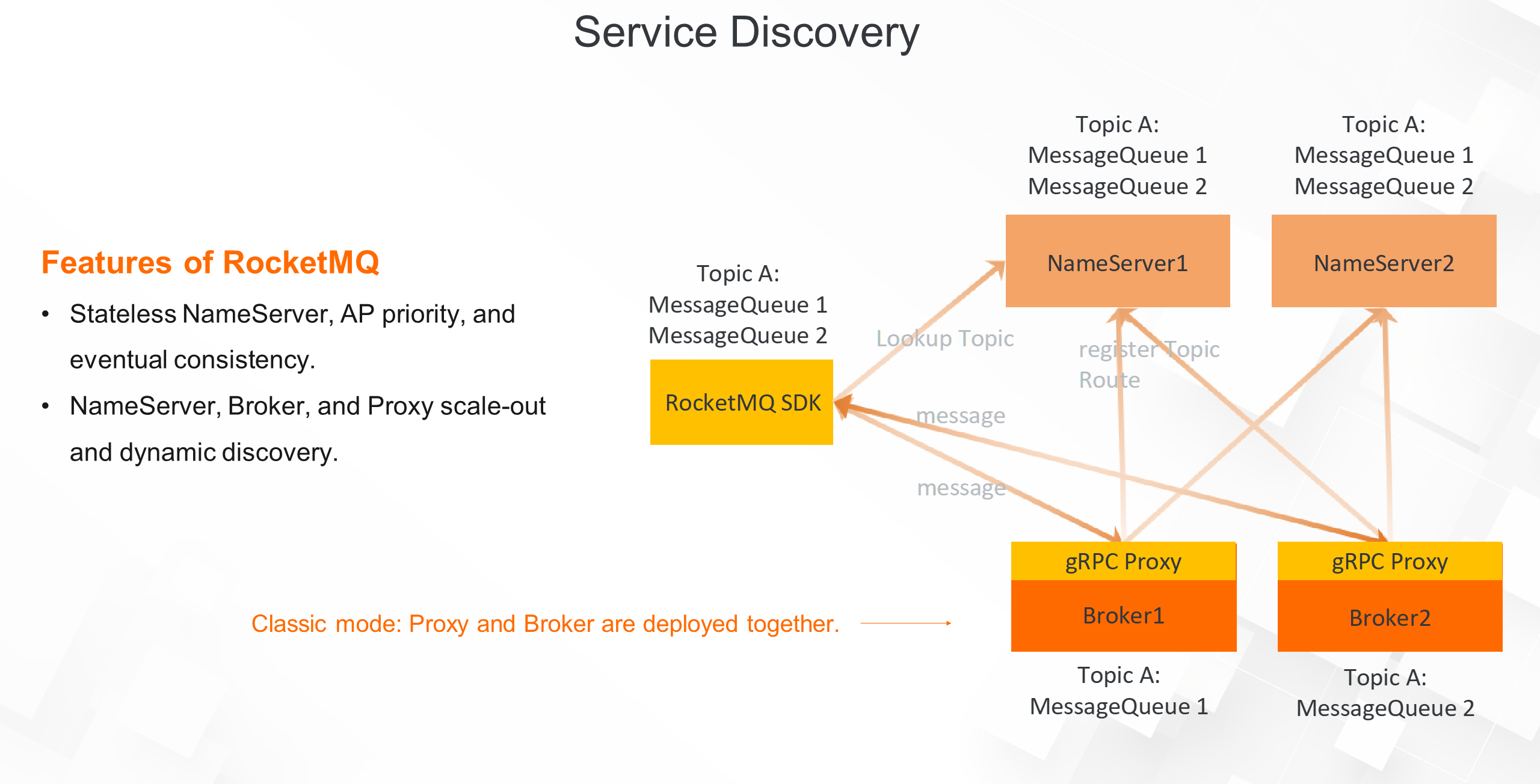

Now let's take a closer look at the service discovery architecture of RocketMQ, as shown in the following figure. The core of RocketMQ's service discovery is the NameServer. The figure shows the combined deployment model of proxies and brokers, which is the most common model for RocketMQ.

Each broker cluster is responsible for specific topic services. Each broker registers the topics it serves with every node in the NameServer (NS) cluster and maintains its lease with each NS through a heartbeat mechanism. The service registration data structure contains topics and topic shards. In this example, broker1 and broker2 each host one shard of Topic A. Each NS node maintains a global view, showing that Topic A has two shards, one on broker1 and one on broker2.

Before sending messages to or receiving messages from Topic A, the RocketMQ SDK accesses a randomly chosen NameServer node to obtain the shards of Topic A, the broker on which each shard is stored, and the address used to connect to that broker.

Most service discovery mechanisms in other projects use strongly consistent distributed coordination components like ZooKeeper and etcd as the registry. RocketMQ has its own characteristics. From the CAP perspective, the registry adopts the AP mode, and the NameServer node is stateless, following a shared-nothing architecture, which provides higher availability.
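The following is a minimal sketch of this AP-style registry, under stated assumptions: the class and function names are hypothetical, the 30-second lease is an arbitrary illustrative value, and the real NameServer protocol carries much more routing data. Each node only keeps soft state rebuilt from heartbeats and never coordinates with its peers.

```python
import random
import time


class NameServer:
    """One registry node; it never talks to other NameServer nodes."""

    def __init__(self, lease_seconds: float = 30.0):  # assumed lease length
        self.lease_seconds = lease_seconds
        self._routes = {}  # (topic, broker) -> (queue_count, last_heartbeat)

    def heartbeat(self, broker, topics, now=None):
        """Register or renew the lease for every topic the broker serves."""
        now = time.monotonic() if now is None else now
        for topic, queue_count in topics.items():
            self._routes[(topic, broker)] = (queue_count, now)

    def lookup(self, topic, now=None):
        """Return {broker: queue_count} for brokers whose lease is valid."""
        now = time.monotonic() if now is None else now
        return {
            broker: count
            for (t, broker), (count, seen) in self._routes.items()
            if t == topic and now - seen <= self.lease_seconds
        }


def register_everywhere(name_servers, broker, topics, now=None):
    # A broker sends its heartbeat to every NameServer node independently.
    for ns in name_servers:
        ns.heartbeat(broker, topics, now)


def client_lookup(name_servers, topic, now=None):
    # A client asks one randomly chosen node; any node can answer.
    return random.choice(name_servers).lookup(topic, now)
```

Because every node receives the same heartbeats, a client gets a usable answer from whichever node it reaches, at the cost of possibly seeing slightly stale routes until a lease expires.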

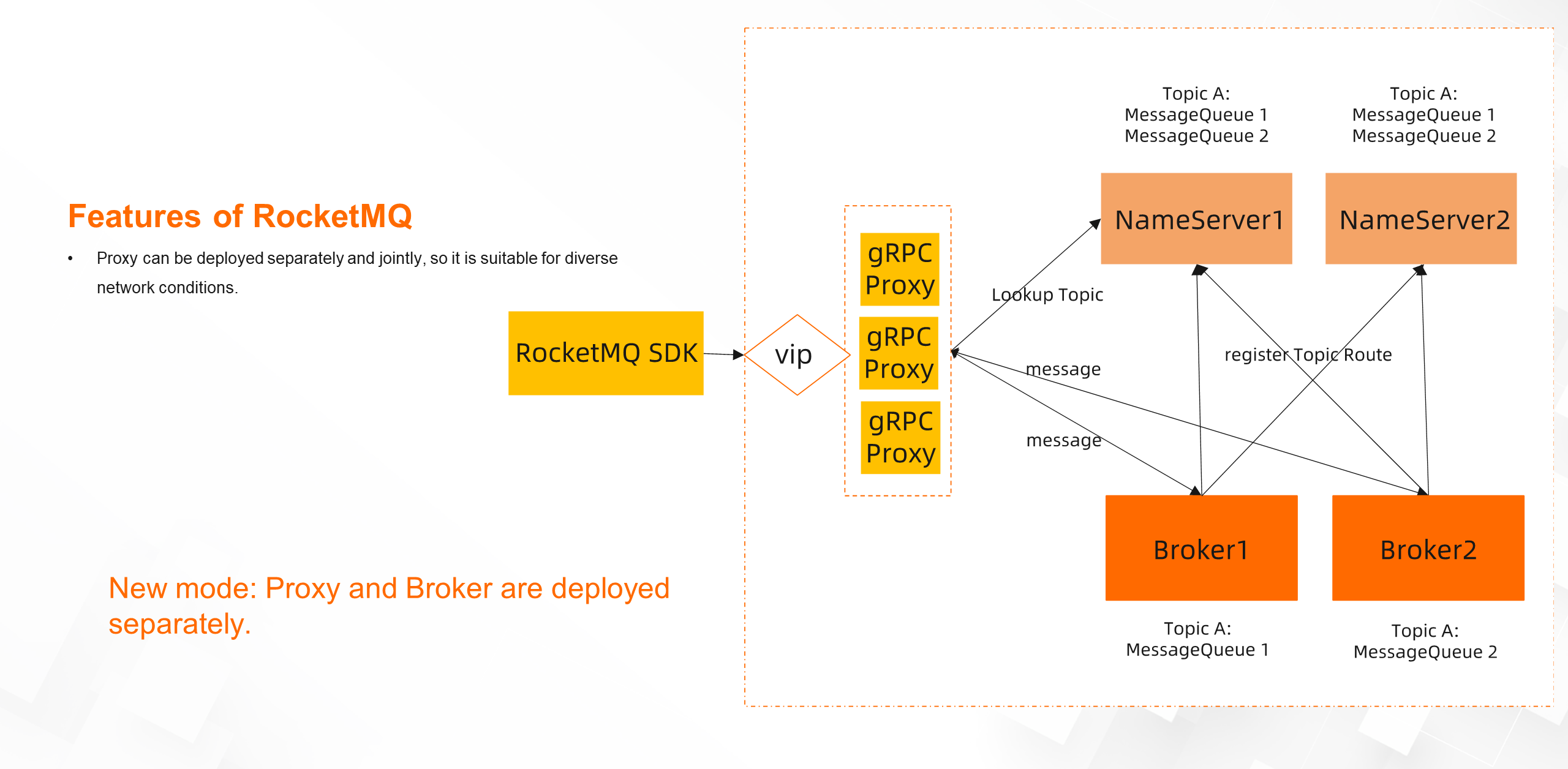

As shown in the figure below, the storage and computing layers of RocketMQ can be deployed separately or together. In the separated deployment mode, the RocketMQ SDK accesses the stateless Proxy cluster directly. This model can handle more complex network environments and supports access from multiple network types, such as the public network, with better security control.

In the entire service discovery mechanism, both the NameServer and the Proxy are stateless, so nodes can be added or removed at any time. Scaling stateful broker nodes in or out relies on the NS registration mechanism, which lets clients discover the change dynamically in real time. During scale-in, the RocketMQ broker can also control the read and write permissions published through service discovery. A node being scaled in can be set to reject writes while still allowing reads. Once all unread messages have been consumed, the node can be shut down gracefully.

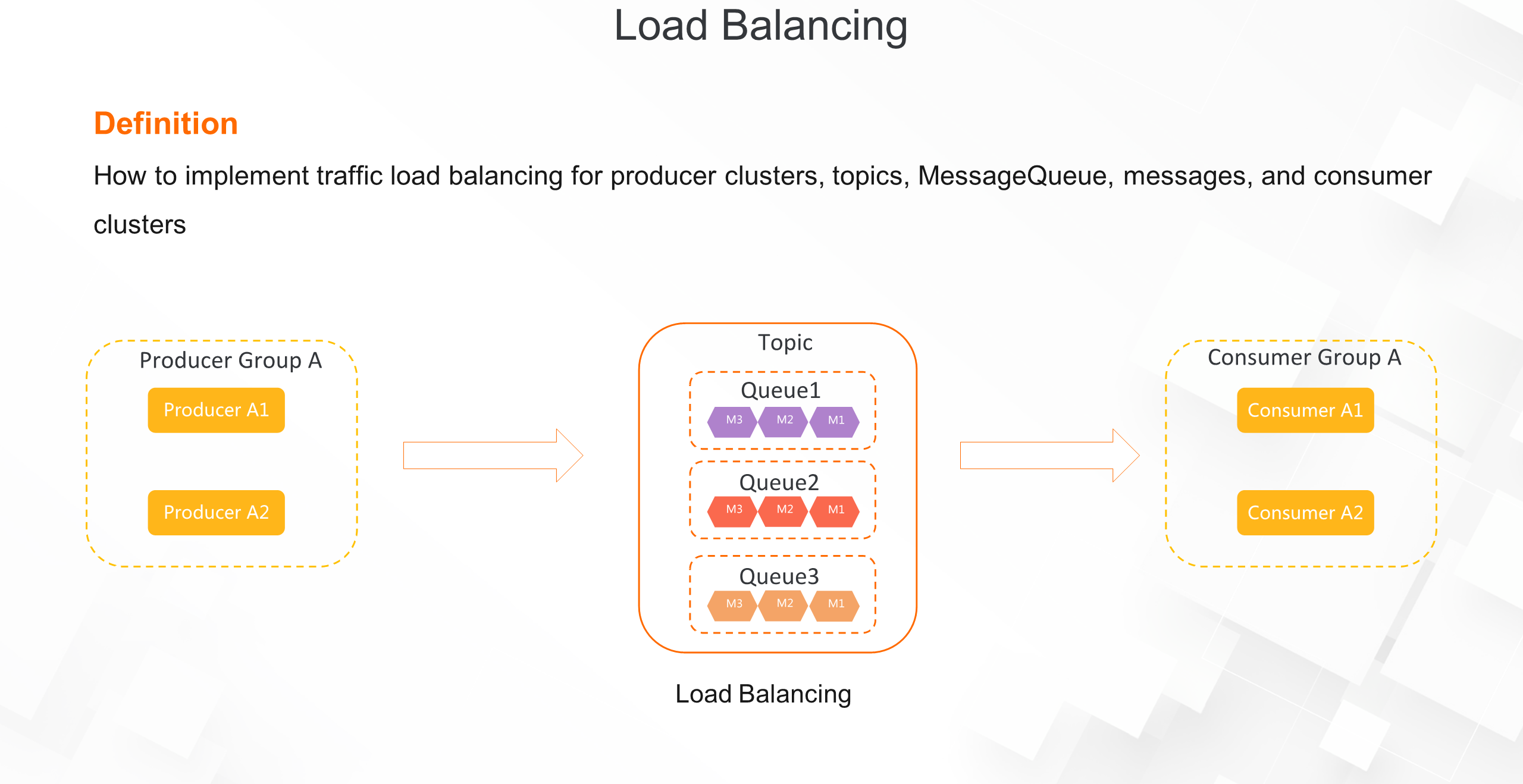

So far, we have seen how the SDK uses the NameServer to discover the MessageQueues of a topic and the broker addresses that host them. Based on this service discovery metadata, we now explain how message traffic is load balanced among producers, RocketMQ brokers, and consumer clusters.

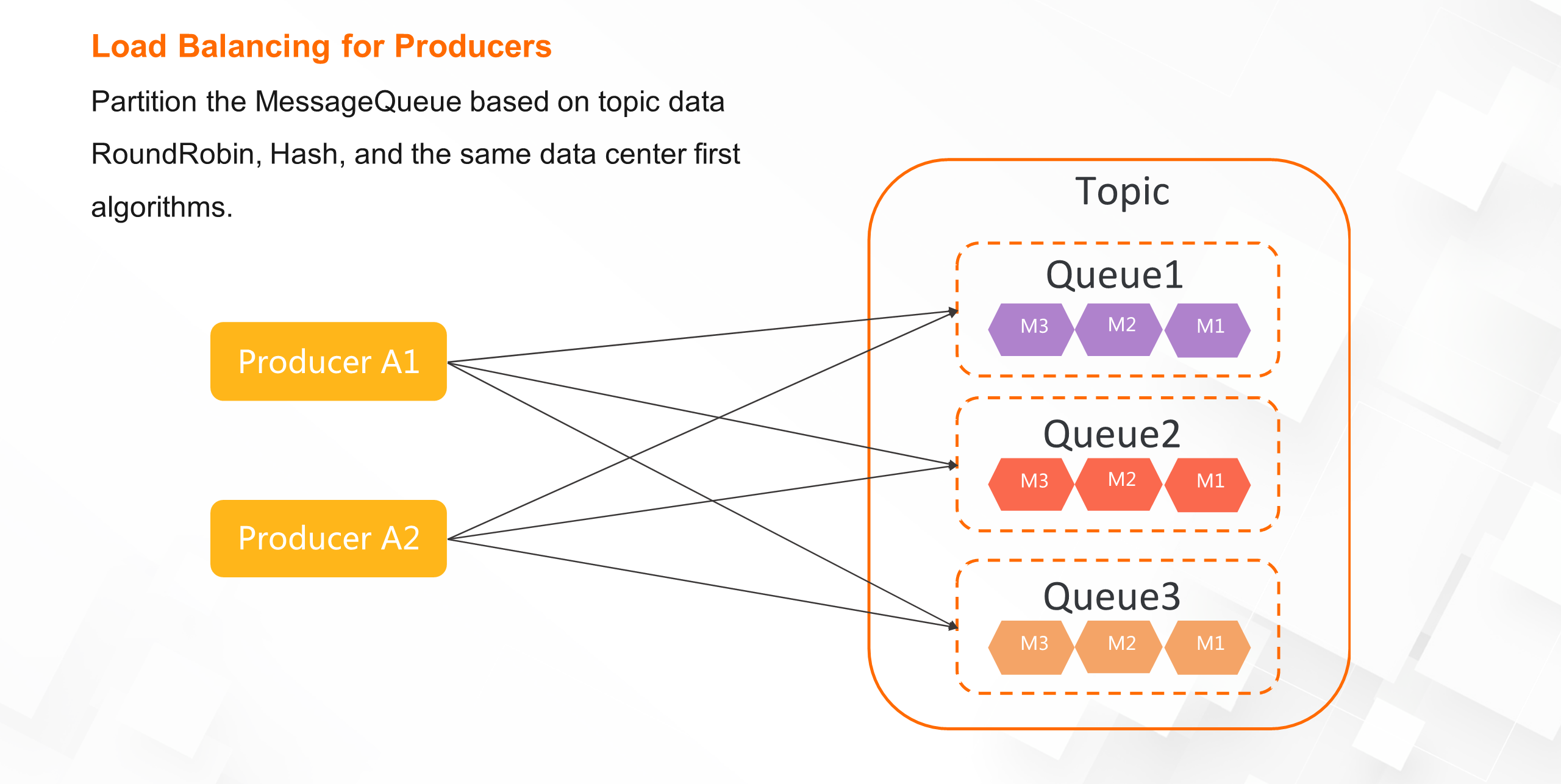

The following diagram illustrates load balancing in the production link. The producer obtains the data shards of the topic and the corresponding broker addresses through the service discovery mechanism. Load balancing on the producer side is relatively simple. By default, the producer uses round-robin to send messages to each queue of the topic in turn, which keeps traffic balanced across the broker cluster. For ordered messages, however, the business primary key of the message is hashed to select a queue, so a hot business primary key can create a hotspot within the broker cluster. In addition, the metadata makes it possible to add further load balancing algorithms as business needs require, such as a same-data-center-first algorithm, which reduces latency and improves performance in multi-data-center deployments.
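The two producer-side strategies can be sketched as follows. This is an illustrative sketch rather than the SDK's implementation: `QueueSelector` and the queue names are hypothetical, and CRC32 is used only as a stand-in for a stable hash function.

```python
import itertools
import zlib


class QueueSelector:
    def __init__(self, queues):
        self.queues = list(queues)
        self._counter = itertools.count()

    def round_robin(self):
        """Default policy: walk the queues in turn to spread traffic evenly."""
        return self.queues[next(self._counter) % len(self.queues)]

    def by_key(self, business_key: str):
        """Ordered messages: the same key always maps to the same queue."""
        # crc32 is stable across processes, unlike Python's built-in hash().
        digest = zlib.crc32(business_key.encode("utf-8"))
        return self.queues[digest % len(self.queues)]


sel = QueueSelector(["broker1-q0", "broker1-q1", "broker2-q0"])
```

Note that `by_key` sends every message with the same key to one queue, which is what preserves ordering and also what creates a hotspot when one key dominates the traffic.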

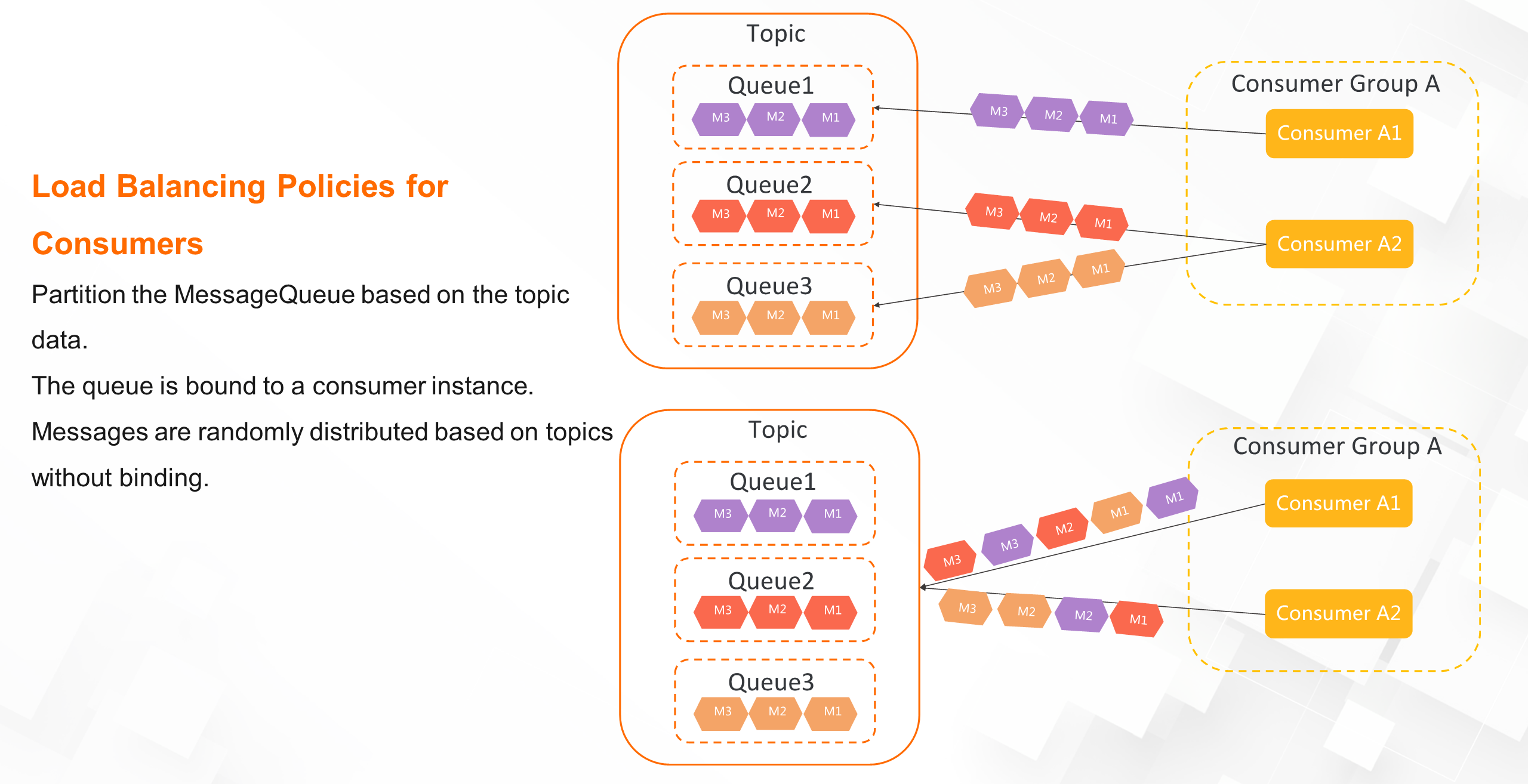

For consumers, there are two load balancing policies: queue-level load balancing and message-granularity load balancing.

The traditional approach is queue-level load balancing. If every consumer knows the total number of queues in a topic and the number of instances in its consumer group, each instance can be assigned specific queues by a unified allocation algorithm, similar to consistent hashing. Each consumer instance then consumes only the messages in its assigned queues. The biggest drawback of this approach is uneven load: some consumer instances are bound to more queues and carry a heavier workload. For example, with three queues and two consumer instances, one consumer has to consume 2/3 of the data. With four consumers, the fourth consumer is assigned no queue at all.

To address this issue, RocketMQ 5.0 introduces a message-granularity load balancing mechanism. Messages are distributed randomly among the consumers in a cluster without being bound to specific queues, which keeps the load balanced across the consumer cluster. This mode also aligns better with the trend toward serverless architecture: the number of broker machines and the number of topic queues are completely decoupled from the number of consumer instances, so each can scale independently.
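The imbalance of queue-level load balancing is easy to reproduce. The sketch below uses a simple contiguous averaging scheme as an assumed allocation algorithm; `allocate_queues` is a hypothetical helper, not the SDK's allocator.

```python
def allocate_queues(queues, consumers):
    """Split queues into contiguous, near-equal slices, one per consumer.

    Every consumer runs the same deterministic function on the same sorted
    inputs, so all instances agree on the result without coordination.
    """
    queues, consumers = sorted(queues), sorted(consumers)
    base, extra = divmod(len(queues), len(consumers))
    assignment, start = {}, 0
    for i, consumer in enumerate(consumers):
        size = base + (1 if i < extra else 0)  # first `extra` get one more
        assignment[consumer] = queues[start:start + size]
        start += size
    return assignment


two = allocate_queues(["q0", "q1", "q2"], ["c1", "c2"])
# two == {"c1": ["q0", "q1"], "c2": ["q2"]}
four = allocate_queues(["q0", "q1", "q2"], ["c1", "c2", "c3", "c4"])
# four == {"c1": ["q0"], "c2": ["q1"], "c3": ["q2"], "c4": []}
```

With two consumers, `c1` handles two of the three queues. With four consumers, `c4` sits idle, which is exactly the limitation that message-granularity load balancing removes.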

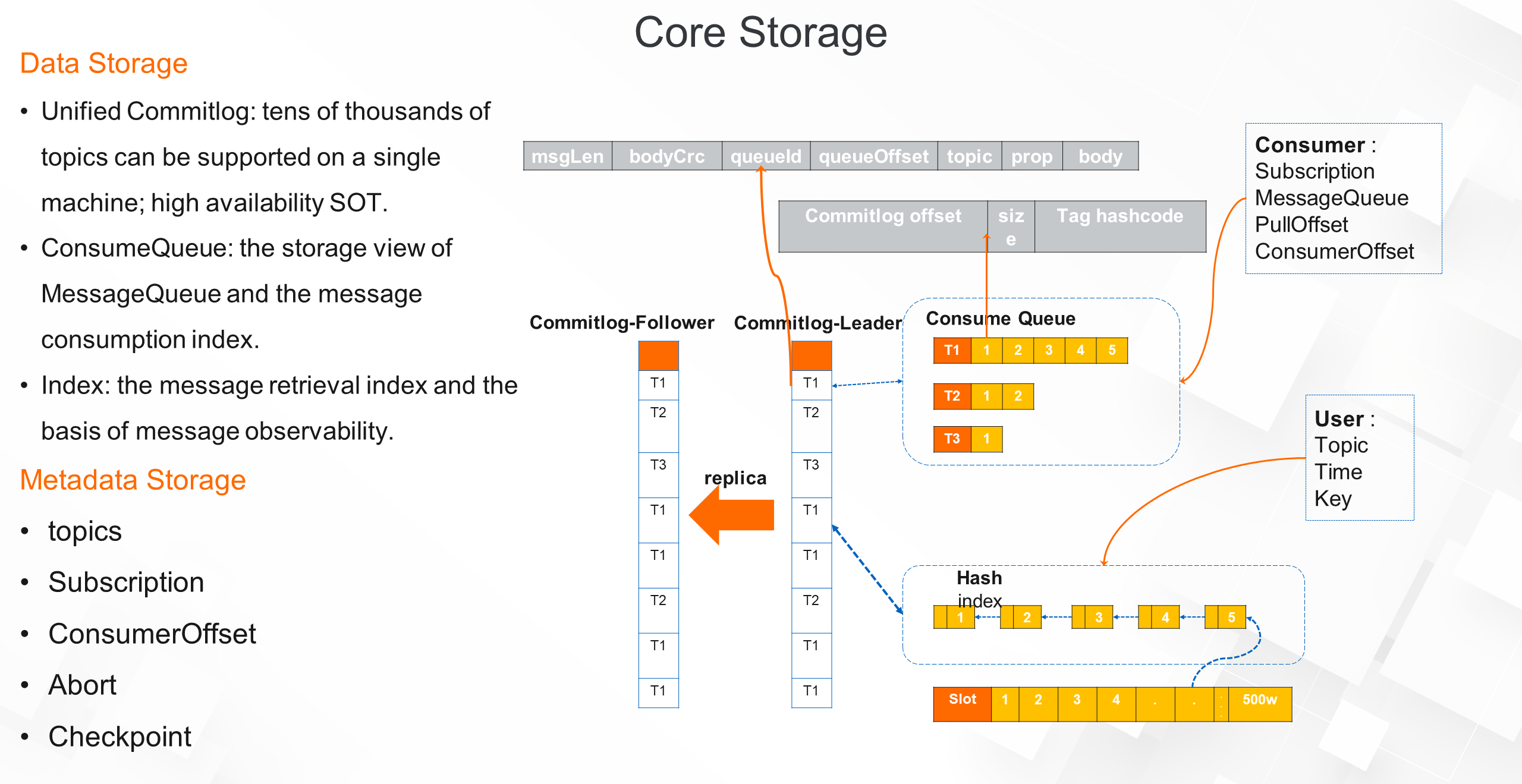

Through the architecture overview and service discovery mechanism sections, we now have a more comprehensive understanding of RocketMQ. Next, we will delve into its storage system. The storage system plays a crucial role in determining the performance, cost, and availability of RocketMQ. The storage core of RocketMQ consists of the commitlog, ConsumeQueue, and index files.

When a message is produced, it is first written to the commitlog, and then flushed to the disk and copied to the slave node for persistence. The commitlog serves as the source of truth for RocketMQ storage and enables the construction of a complete message index.

In contrast to Kafka, RocketMQ writes the data of all topics into the same commitlog files to maximize sequential I/O, which allows a single RocketMQ machine to support tens of thousands of topics.

After a message is written to the commitlog, RocketMQ asynchronously dispatches it to build multiple indexes. The first is the ConsumeQueue index, which corresponds to the MessageQueue. With this index, a message can be located precisely by topic, queue ID, and offset. The message backtracking function is also built on this capability.
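The relationship between the shared commitlog and the per-queue ConsumeQueue index can be sketched as below. This is a simplified in-memory model under stated assumptions: the `Store` class is hypothetical, the index is updated synchronously for brevity (the text above describes it as asynchronous), and real index entries carry more fields.

```python
class Store:
    def __init__(self):
        self.commitlog = bytearray()  # one append-only log shared by all topics
        # (topic, queue_id) -> list of (physical_offset, size) entries
        self.consume_queues = {}

    def append(self, topic, queue_id, body: bytes) -> int:
        """Append to the commitlog, then index it; returns the queue offset."""
        physical_offset = len(self.commitlog)
        self.commitlog += body
        entries = self.consume_queues.setdefault((topic, queue_id), [])
        entries.append((physical_offset, len(body)))
        return len(entries) - 1  # logical offset within this queue

    def read(self, topic, queue_id, queue_offset) -> bytes:
        """Locate a message by topic, queue ID, and offset via the index."""
        physical_offset, size = self.consume_queues[(topic, queue_id)][queue_offset]
        return bytes(self.commitlog[physical_offset:physical_offset + size])


store = Store()
store.append("Trade", 0, b"order-1")
store.append("Cart", 0, b"item-9")
store.append("Trade", 0, b"order-2")
```

Messages from "Trade" and "Cart" are interleaved in one sequential log, yet `store.read("Trade", 0, 1)` returns `b"order-2"` because the index maps the logical queue offset back to a physical position. Backtracking amounts to re-reading from an earlier queue offset.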

Another important index is the hash index, which is the basis for message observability. A persistent hash table supports lookups by message key, and message tracing is built on this capability.

In addition to the messages themselves, the broker stores metadata files. The topic file records which topics the broker serves, along with attributes of each topic such as the number of queues, read and write permissions, and whether it is ordered. The subscription and consumer offset files maintain the subscription relationships of each topic and the consumption progress of each consumer. The abort and checkpoint files are used to recover files after a restart, ensuring data integrity.
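A hash index of this kind can be sketched with a fixed slot table and chained entries. The layout below is an assumption made for illustration (the `KeyIndex` class, slot count, and CRC32 hash are all hypothetical), and it lives in memory rather than in a persistent file.

```python
import zlib


class KeyIndex:
    def __init__(self, slot_count: int = 8):
        self.slots = [-1] * slot_count  # slot -> newest entry index in chain
        self.entries = []  # (key_hash, physical_offset, previous_entry_index)

    @staticmethod
    def _hash(topic: str, key: str) -> int:
        return zlib.crc32(f"{topic}#{key}".encode("utf-8"))

    def put(self, topic: str, key: str, physical_offset: int) -> None:
        key_hash = self._hash(topic, key)
        slot = key_hash % len(self.slots)
        # Link the new entry to the previous head of this slot's chain.
        self.entries.append((key_hash, physical_offset, self.slots[slot]))
        self.slots[slot] = len(self.entries) - 1

    def get(self, topic: str, key: str) -> list:
        """Return candidate commitlog offsets for the key, newest first."""
        key_hash = self._hash(topic, key)
        i = self.slots[key_hash % len(self.slots)]
        offsets = []
        while i != -1:
            entry_hash, offset, prev = self.entries[i]
            if entry_hash == key_hash:
                offsets.append(offset)
            i = prev
        return offsets


index = KeyIndex()
index.put("Trade", "order-1", 0)
index.put("Trade", "order-2", 50)
index.put("Trade", "order-1", 100)
```

Since only hashes are stored, the returned offsets are candidates: a caller would read each message from the commitlog and confirm that its key really matches.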

So far, we have looked at the RocketMQ storage engine, including the commitlog and its indexes, at the functional level and from the perspective of a single machine. Now let's look at the high availability of RocketMQ from the perspective of a cluster.

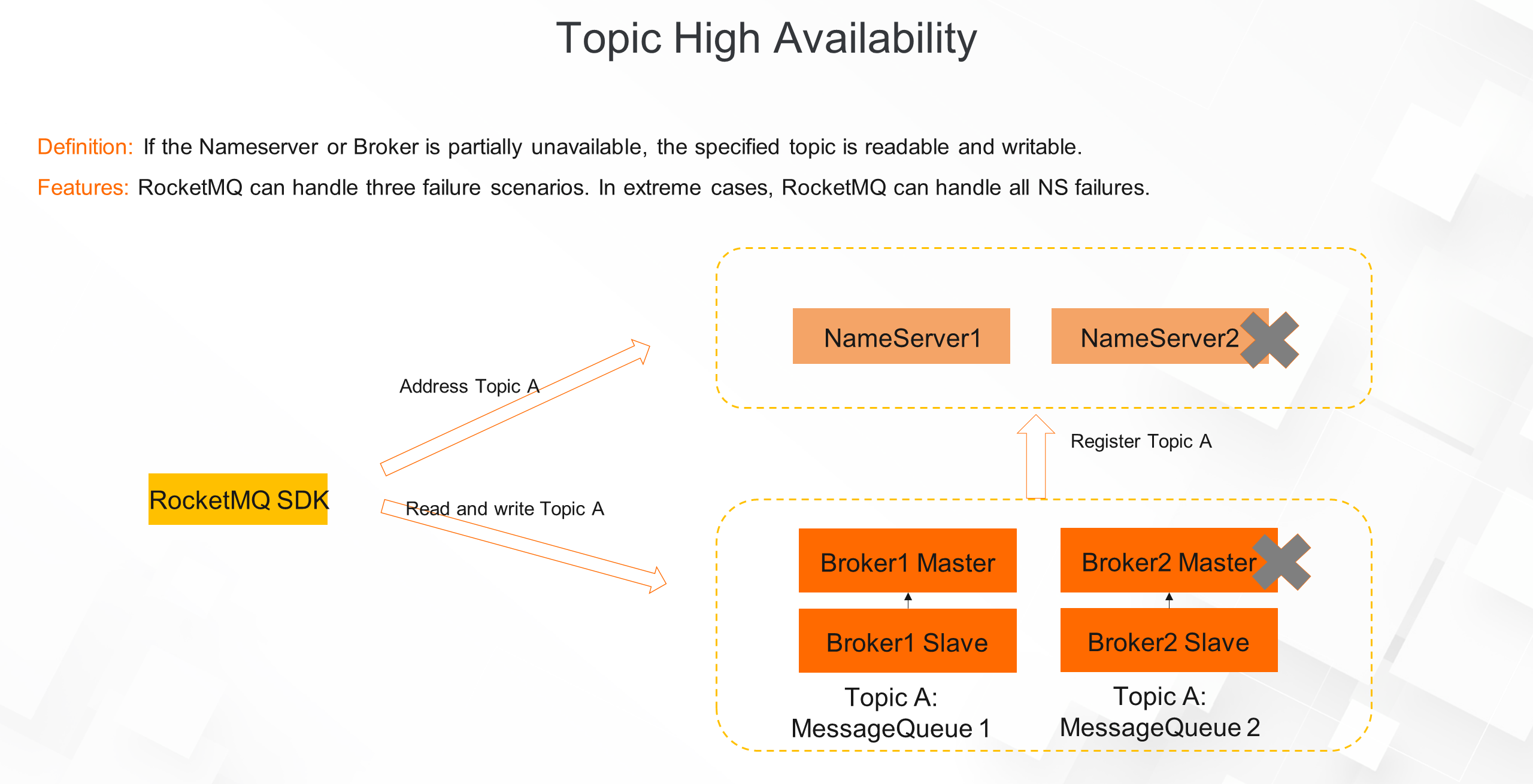

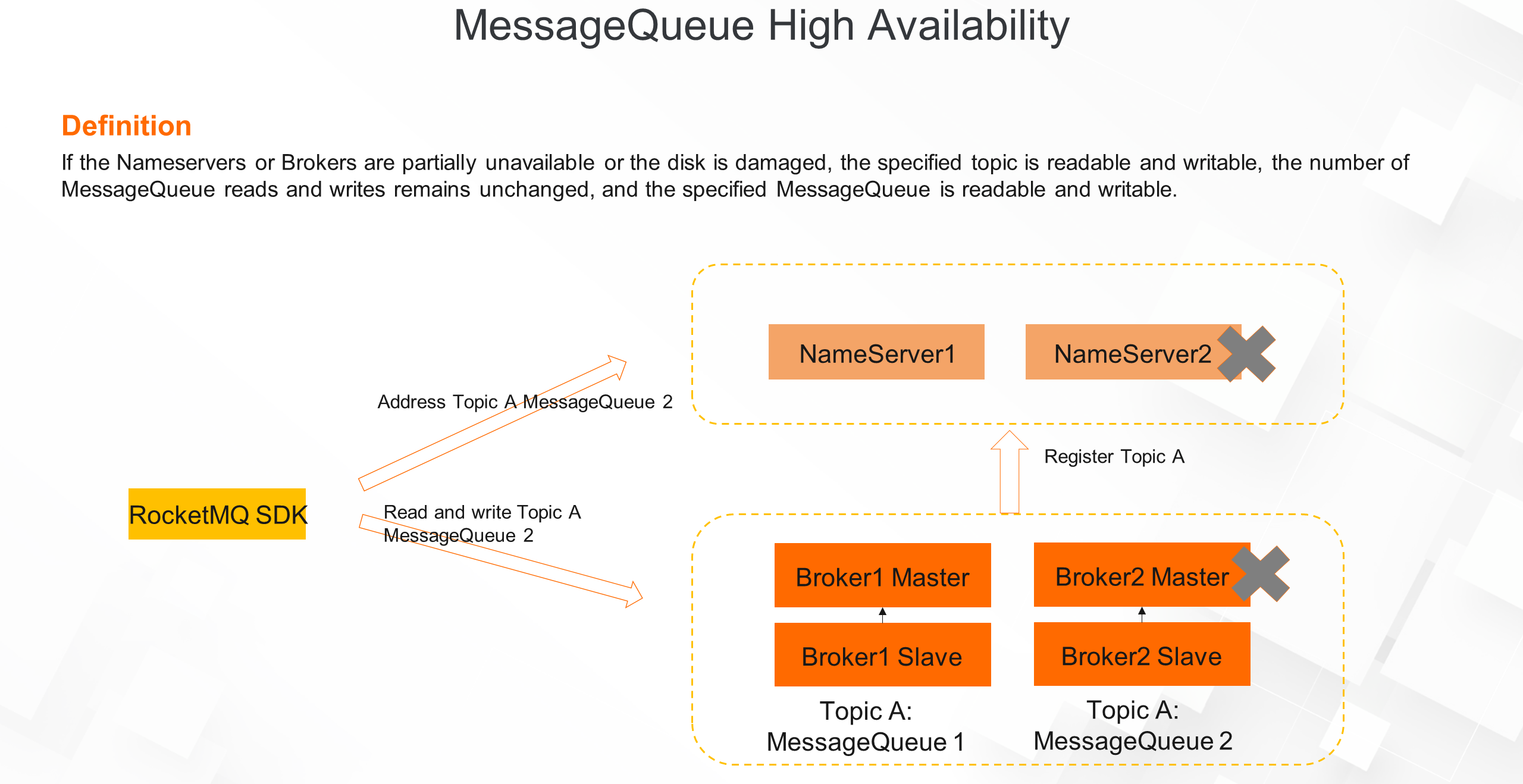

The high availability of RocketMQ means that when the NameServers and brokers are partially unavailable in its clusters, the specified topic is still readable and writable.

RocketMQ can handle three types of failure scenarios.

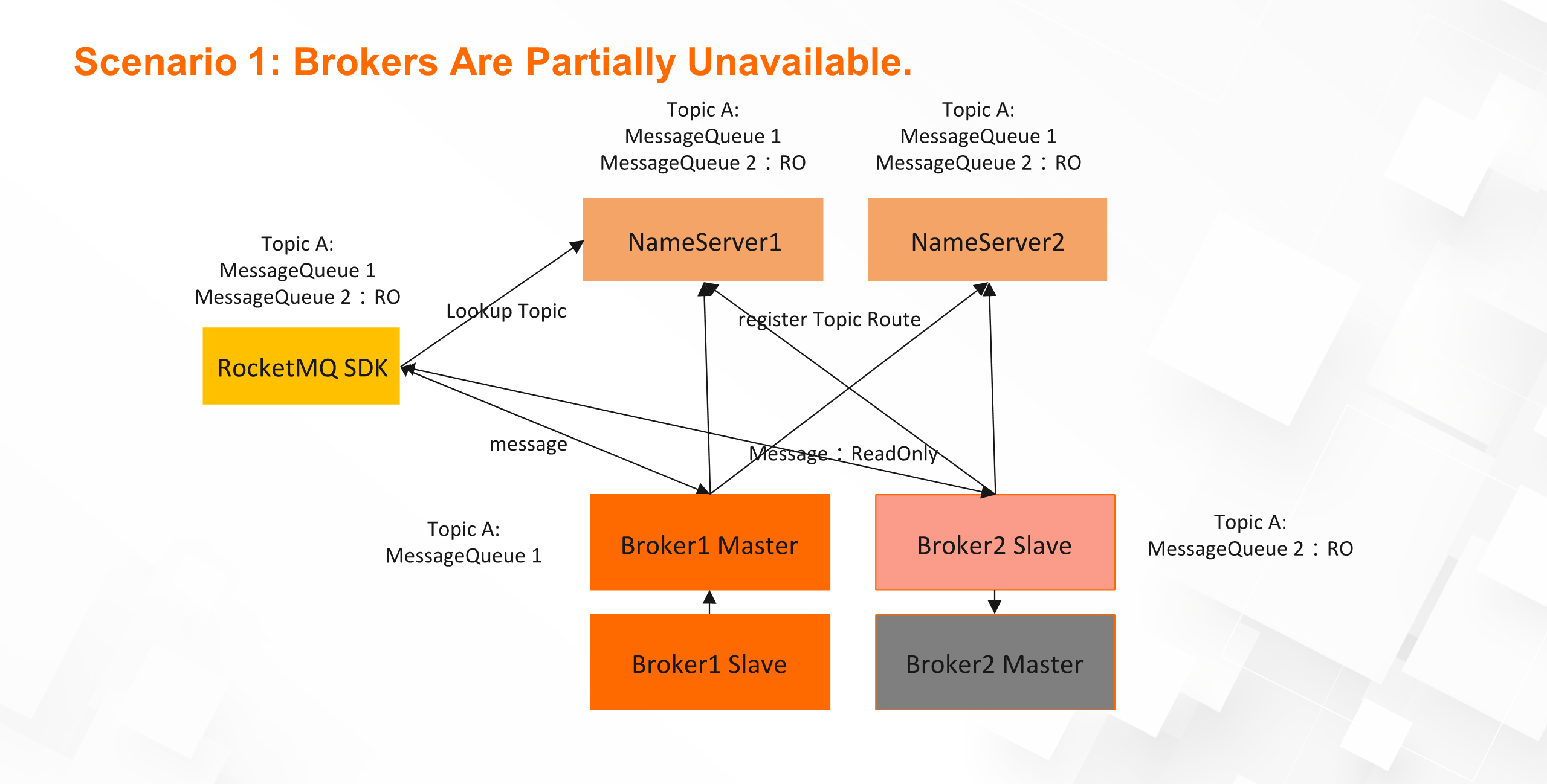

For example, when the primary node of Broker2 is down and its secondary node is available, Topic A is still readable and writable: Shard 1 is readable and writable, while Shard 2 is readable but not writable. The unread messages of Topic A in Shard 2 can still be consumed. In summary, the read-and-write availability of a topic is not affected as long as at least one node in any broker group of the cluster is alive. If all primary and secondary nodes in a broker group are down, reads and writes of new data in the topic are still not affected. Only the unread messages stored on that group are delayed, and they can be consumed once any of its primary or secondary nodes is restarted.
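The availability rules in this scenario can be written down as a small model. It assumes the primary-secondary setup described above, where only a primary accepts writes and either node can serve reads; the function names and the dictionary layout are hypothetical.

```python
def shard_availability(groups):
    """groups maps a broker group to {"primary": bool, "secondary": bool},
    where True means the node is alive."""
    return {
        name: {
            "writable": nodes["primary"],
            "readable": nodes["primary"] or nodes["secondary"],
        }
        for name, nodes in groups.items()
    }


def topic_availability(groups):
    """A topic stays available while any of its shards is available."""
    shards = shard_availability(groups).values()
    return {
        "writable": any(s["writable"] for s in shards),
        "readable": any(s["readable"] for s in shards),
    }


groups = {
    "broker1": {"primary": True, "secondary": True},
    "broker2": {"primary": False, "secondary": True},
}
```

Here `shard_availability(groups)["broker2"]` is readable but not writable, while `topic_availability(groups)` reports the topic as both readable and writable. The number of writable queues has dropped from two to one, though, which matters for ordering-sensitive workloads.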

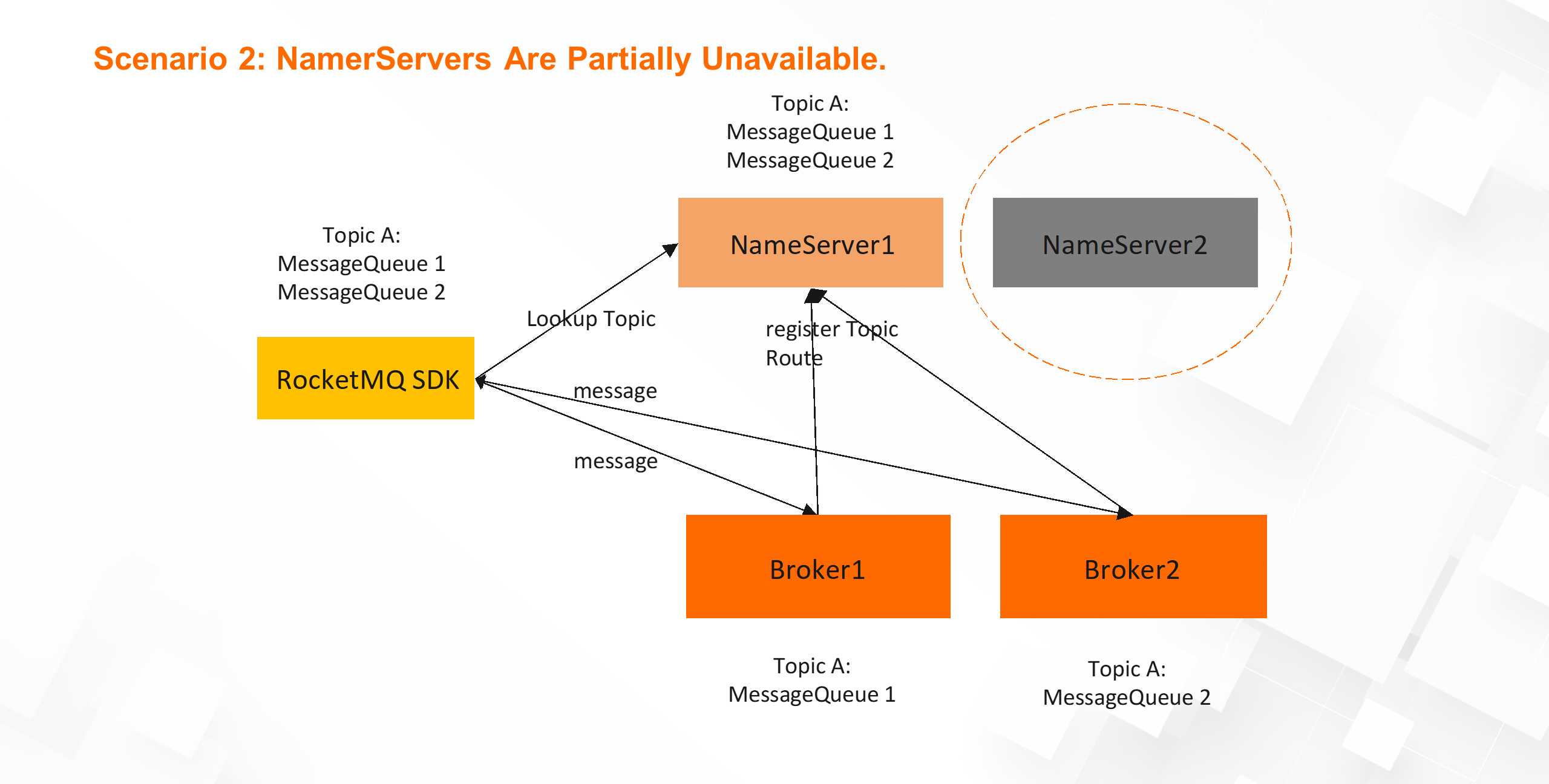

Because the NameServer uses a shared-nothing architecture, each node is stateless and works in AP mode without relying on a majority algorithm. Therefore, as long as one NameServer is alive, the entire service discovery mechanism works normally, and the read-and-write availability of topics is not affected.

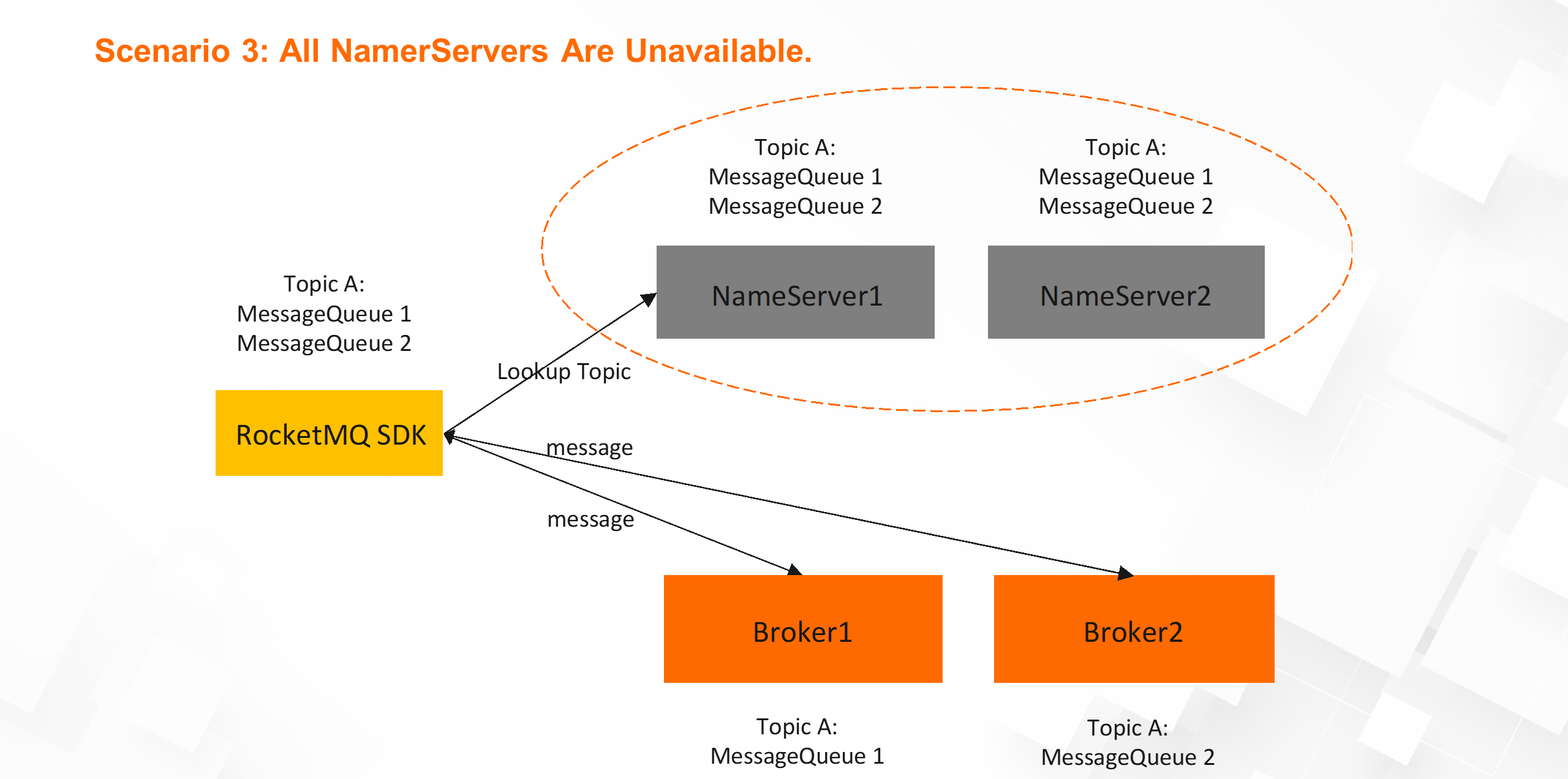

Because the RocketMQ SDK caches service discovery metadata, a client can keep sending and receiving messages based on its current topic metadata even when no NameServer is reachable, as long as the SDK is not restarted.

In the previous section, we discussed the high availability principle of topics. From its implementation, we can see that although topics remain readable and writable, the number of readable and writable queues of a topic changes. Changes in the number of queues affect some data integration businesses. For example, when binary logs are synchronized between heterogeneous databases, the change logs of the same record must go to the same queue. If the number of queues changes, they may be written to different queues and replayed out of order, resulting in dirty data. Therefore, the existing high availability needs to be enhanced further: when some nodes are unavailable, the topic must stay readable and writable, the number of readable and writable queues of the topic must remain unchanged, and each specified queue must also stay readable and writable.

As shown in the following figure, when any single NameServer or broker node is unavailable, Topic A still has two queues, and each queue remains readable and writable.

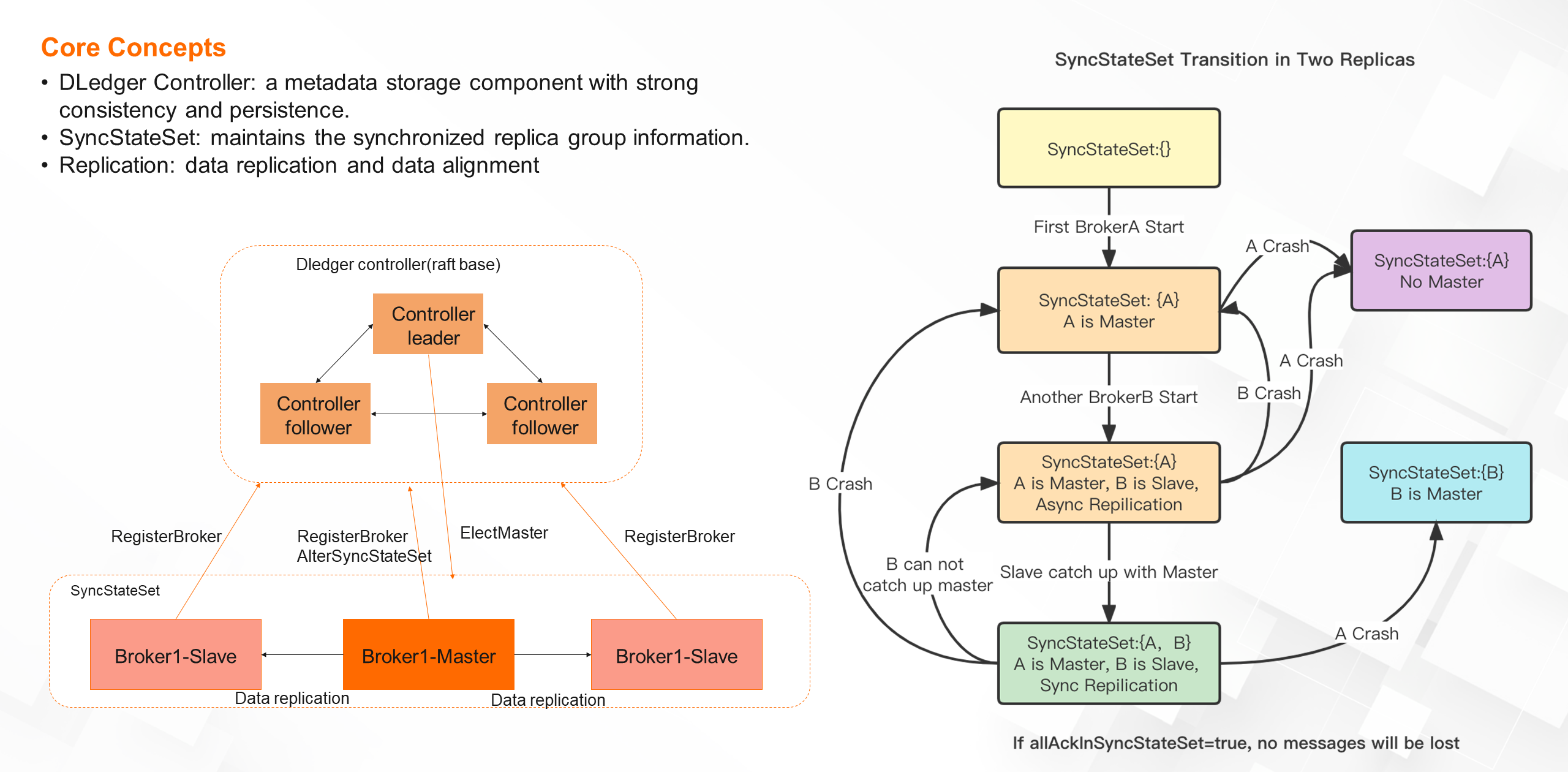

To address the preceding scenarios, RocketMQ 5.0 introduced a new high-availability mechanism. The core concepts are as follows:

• DLedger Controller: a strongly consistent metadata component based on the Raft protocol. It executes the ElectMaster command and maintains state machine information.

• SyncStateSet: maintains the set of replicas in a replica group that are in the synchronized state. All nodes in the set have complete data. After the primary node goes down, a new primary node is selected from the set.

• Replication: used for data replication, data verification, and truncation and alignment between different replicas.
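The role of the SyncStateSet in failover can be sketched as follows. This is a simplified model, not the DLedger Controller's implementation: the `ReplicaGroupState` class, the lexicographic tie-break, and the way the set is updated after an election are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicaGroupState:
    master: str
    master_epoch: int
    sync_state_set: frozenset  # replicas known to hold complete data


def elect_master(state: ReplicaGroupState, alive: set) -> ReplicaGroupState:
    """Pick a new master only from in-sync replicas that are still alive."""
    if state.master in alive:
        return state  # current master is healthy, nothing to do
    candidates = sorted(state.sync_state_set & alive)
    if not candidates:
        return state  # no safe candidate: electing anyone would lose data
    return ReplicaGroupState(
        master=candidates[0],  # assumed tie-break: lexicographic order
        master_epoch=state.master_epoch + 1,  # new epoch marks the new term
        sync_state_set=frozenset(candidates),
    )


state = ReplicaGroupState("broker-a", 1, frozenset({"broker-a", "broker-b"}))
after = elect_master(state, alive={"broker-b", "broker-c"})
```

In this example `broker-c` is alive but not in the SyncStateSet, so it is never considered. `after.master` is `"broker-b"` and the epoch advances from 1 to 2.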

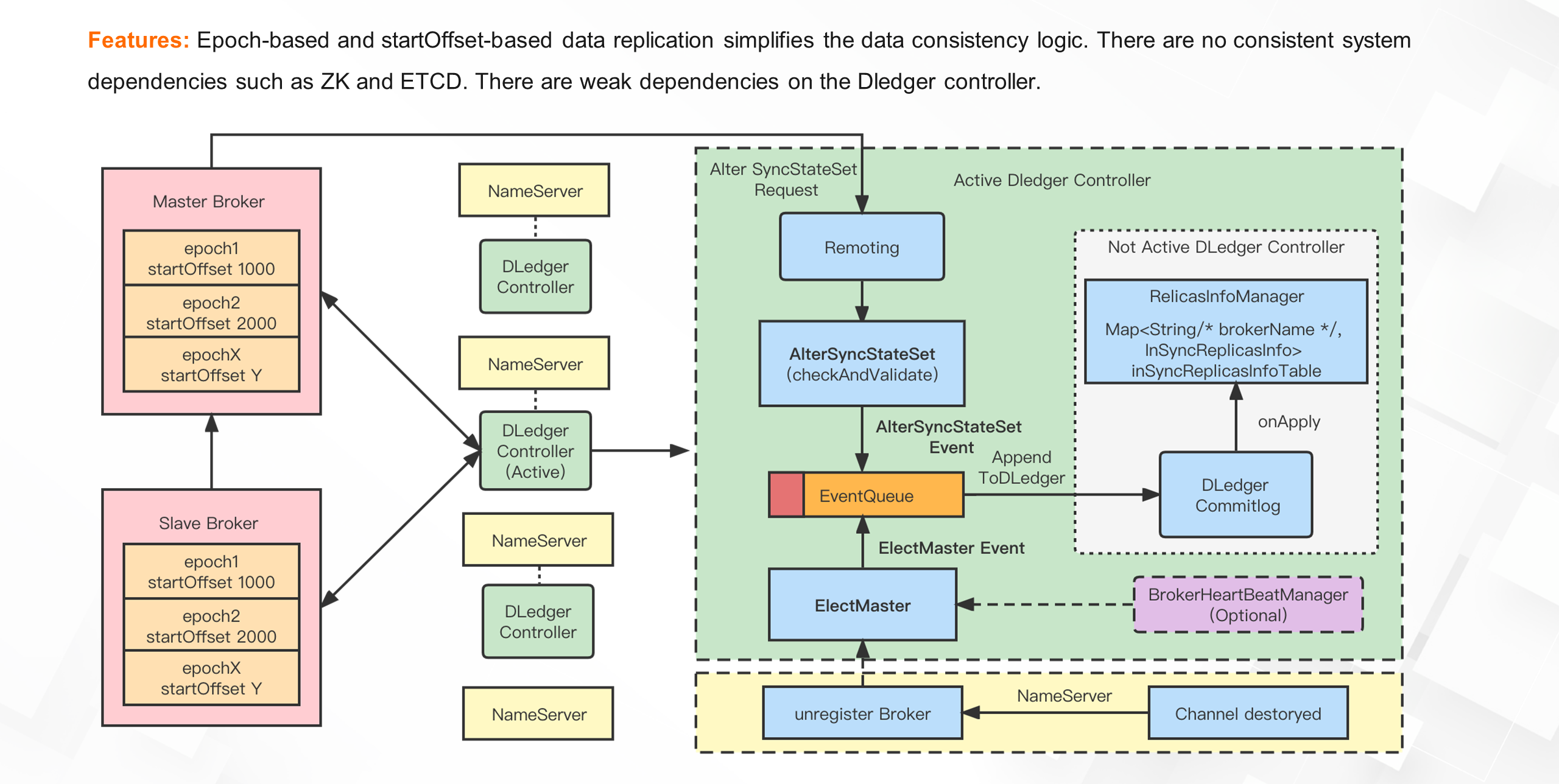

Below is an architectural panorama of 5.0 HA. The new high-availability architecture has multiple advantages.

• An epoch and a start offset are introduced into the message storage. Based on these two values, data verification, truncation, and alignment are completed, and the data consistency logic for building a replica group is simplified.

• With the DLedger Controller, you do not need to introduce external distributed consistency systems such as ZooKeeper and etcd. The DLedger Controller can also be deployed together with the NameServer to simplify O&M and save machine resources.

• RocketMQ depends only weakly on the DLedger Controller. Even if the DLedger Controller is unavailable as a whole, this only affects the election of masters and does not affect normal message sending and receiving.

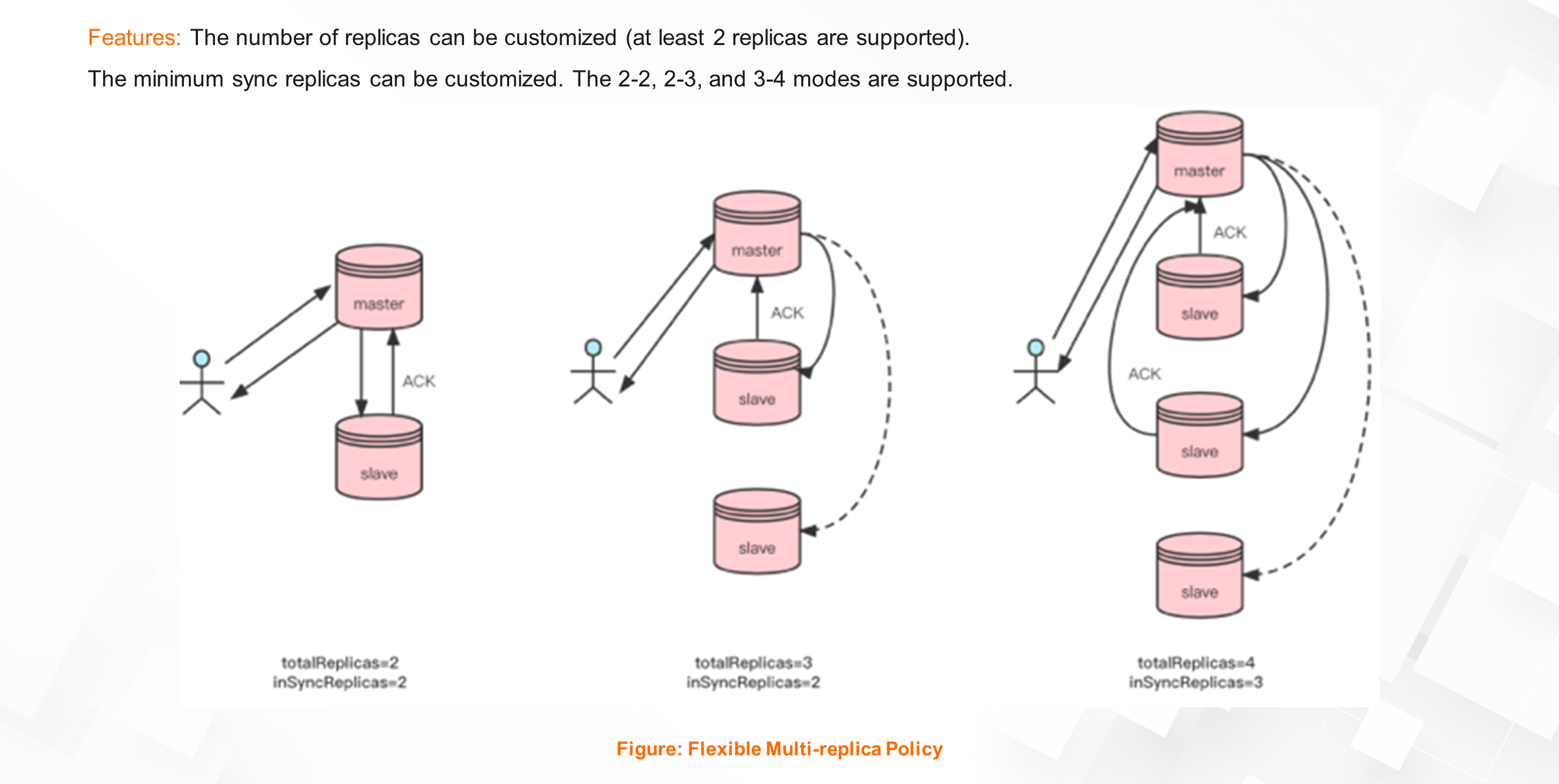

• Customizable. You can trade off data reliability, performance, and cost based on your business requirements. For example, the number of replicas can be 2, 3, or 4, and replicas can be replicated synchronously or asynchronously. The 2-2 mode means two replicas, both replicated synchronously. The 2-3 mode means three replicas, where a message is considered persisted once two replicas have written it. You can also deploy replicas in remote data centers with asynchronous replication to implement disaster recovery. The following figure shows an example.
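The replication modes named above can be expressed as a pair of numbers: how many replicas must acknowledge a write, and how many replicas exist. The sketch below models that rule only; `ReplicationMode` and its fields are hypothetical names, not RocketMQ configuration keys.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicationMode:
    min_acks: int  # replicas that must persist a message before success
    replicas: int  # total replicas in the group

    def __post_init__(self):
        if not 1 <= self.min_acks <= self.replicas:
            raise ValueError("min_acks must be between 1 and replicas")

    def is_persisted(self, acked: int) -> bool:
        """A write succeeds once enough replicas have stored it."""
        return acked >= self.min_acks

    def tolerated_failures_for_writes(self) -> int:
        """Replicas that can be down while writes still succeed."""
        return self.replicas - self.min_acks


mode_2_2 = ReplicationMode(min_acks=2, replicas=2)
mode_2_3 = ReplicationMode(min_acks=2, replicas=3)
```

Under this model, the 2-3 mode keeps accepting writes with one replica down, whereas the 2-2 mode cannot confirm a write if either replica is unavailable. That is the reliability-versus-cost trade-off the bullet describes.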

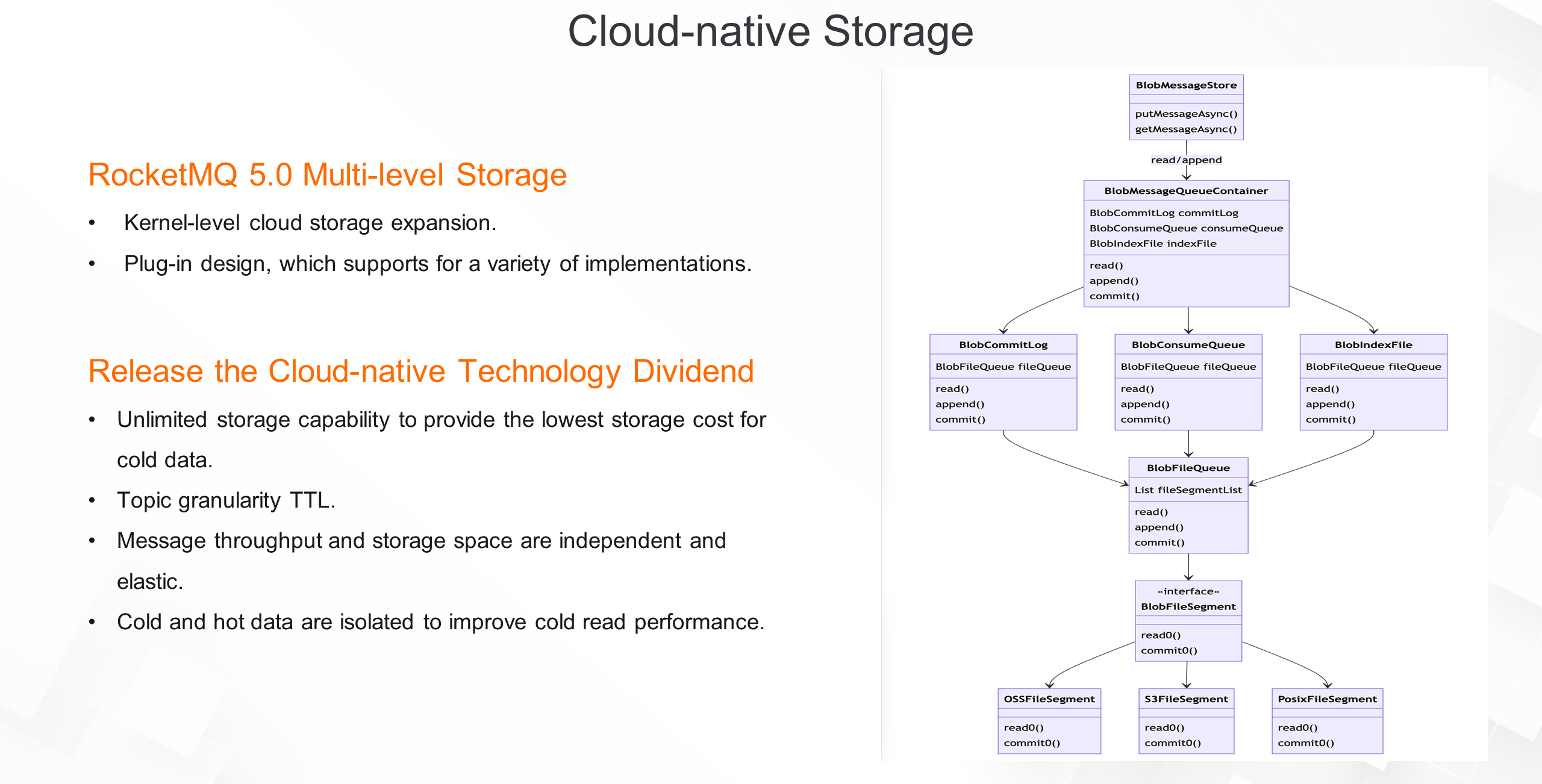

The storage mechanisms described so far are all RocketMQ implementations on top of local file systems. In the cloud-native era, deploying RocketMQ in a cloud environment makes it possible to use cloud-native infrastructure, such as cloud storage, to enhance its storage capabilities. RocketMQ 5.0 introduces a multi-level storage feature, a kernel-level storage extension that extends the commitlog, ConsumeQueue, and IndexFile to object storage. It also adopts a plug-in design, so multi-level storage can be implemented on different backends, such as Object Storage Service (OSS) on Alibaba Cloud or the S3 interface on AWS.

By incorporating cloud-native storage, RocketMQ brings four significant benefits.

Firstly, it provides virtually unlimited storage capacity. The message storage space is no longer limited by local disk space. Previously, data could only be stored for a few days, but now it can be stored for several months or even a year. Object storage is also one of the most cost-effective storage systems in the industry, making it ideal for cold data storage.

Secondly, it introduces the concept of TTL (Time to Live) for topics. Previously, the lifecycle of multiple topics was bound to the Commitlog, and the retention time was uniform. Now, each topic uses a separate object to store the Commitlog file and can have an independent TTL.
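Once each topic has its own objects, retention can be evaluated per topic. The following sketch illustrates that idea only: `Segment`, `expired_segments`, the hour-based timestamps, and the 72-hour default are hypothetical and do not reflect RocketMQ's configuration or file layout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    topic: str
    created_at_hour: int  # hypothetical creation time, in hours


def expired_segments(segments, ttl_hours_by_topic, now_hour, default_ttl_hours=72):
    """Return the segments whose age exceeds the TTL of their own topic."""
    expired = []
    for seg in segments:
        ttl = ttl_hours_by_topic.get(seg.topic, default_ttl_hours)
        if now_hour - seg.created_at_hour > ttl:
            expired.append(seg)
    return expired


segments = [Segment("Trade", 0), Segment("Cart", 0), Segment("Trade", 900)]
ttl = {"Trade": 24 * 30, "Cart": 24}  # Trade kept for 30 days, Cart for 1 day
```

At hour 100, only the "Cart" segment has expired, even though the "Trade" segment created at the same time is just as old. With a single shared commitlog, both would have had to share one retention time.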

Thirdly, it enables further separation of storage and computing within the storage system. This allows for the separation of storage throughput elasticity from storage space elasticity.

Lastly, it isolates hot and cold data, separating the read links for both types of data. This greatly improves cold read performance without impacting online services.

• Overall RocketMQ architecture:

• RocketMQ load balancing: AP priority, separate and combined mode, scale-out, and load granularity.

• RocketMQ storage design: storage engine, high availability, cloud storage.
