By Chen Yingda, Senior Technical Expert at Alibaba Cloud Intelligence

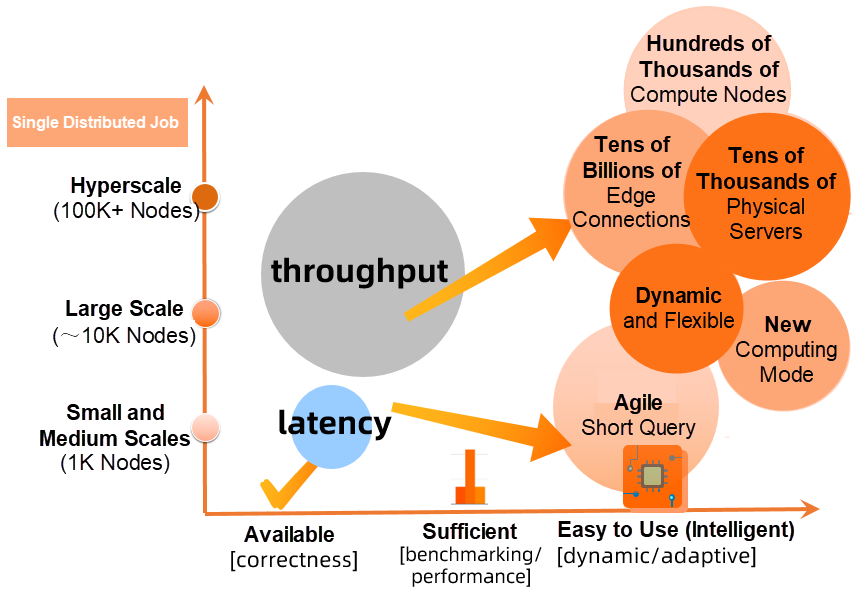

The Job Scheduler and distributed execution system, as the foundation for Alibaba's core big data capabilities, supports most of the big data computing requirements of the Alibaba Group and Alibaba Cloud big data platforms. Various computing engines running on the system, such as MaxCompute and PAI, process users' massive data computing requirements every day. In the Alibaba big data ecosystem, the Job Scheduler system manages multiple physical clusters inside and outside of the Alibaba Group, with over 100,000 physical servers and millions of CPU and GPU cores. The Job Scheduler distributed platform runs more than 10 million jobs and processes exabytes of data every day, which gives it a leading position in the industry. A single job can contain hundreds of thousands of compute nodes and manage tens of billions of edge connections. This job quantity and scale have pushed the Job Scheduler distributed platform to develop continuously over the past 10 years. With more diversified jobs and further development requirements, the Job Scheduler system architecture needs to evolve further, which is a huge challenge but also a great opportunity for the Alibaba Job Scheduler team. This article describes how the Alibaba Job Scheduler team upgraded the core scheduling and distributed execution system over the past two years to build DAG 2.0.

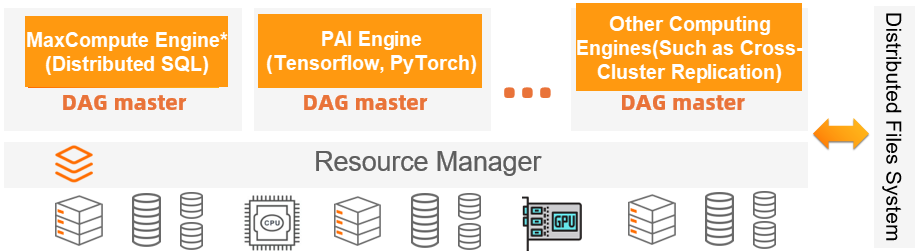

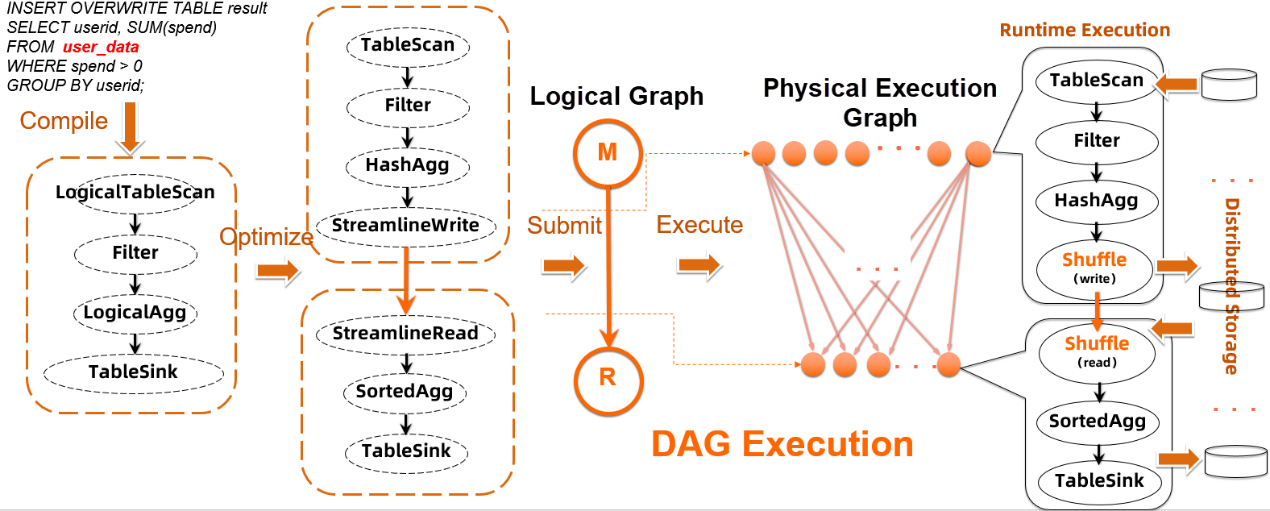

Taking an overall view, the architecture of the distributed system running on physical clusters can be classified into resource management, distributed job scheduling, and multi-compute node running, as shown in the following figure. The directed acyclic graph (DAG) component is the central management node of each distributed job, that is, the application master (AM). The AM is often called the DAG because it coordinates the running of a distributed job, and job running in modern distributed systems can be described using DAG scheduling and data shuffling[1]. Compared with the traditional Map-Reduce[2] model, the DAG model can describe distributed jobs more accurately and is also the architecture design foundation for mainstream big data systems, including Hadoop 2.0+, Spark, Flink, and TensorFlow. The only difference among these systems is whether the DAG semantics are exposed to users or to computing engine developers.

In the entire distributed system stack, the AM has responsibilities in addition to DAG execution. As the central control node of a distributed job, the AM applies for the computing resources required by the distributed job from the downstream Resource Manager, communicates with the upstream computing engine, and reports collected information to the DAG execution process. As the only component that accurately understands the running of each distributed job, the AM also accurately controls and adjusts DAG execution. In the preceding distributed system stack figure, the AM is the only system component that needs to interact with almost all distributed components and plays an important role during job running. AM was called JobMaster (JM) in the previous Job Scheduler system. In this article, we call it DAG or AM.

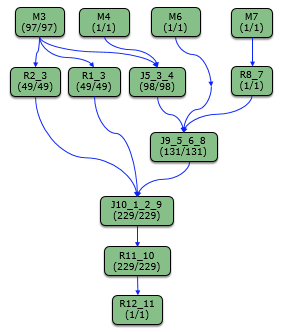

The DAG of a distributed job is expressed using a logical or physical graph. The DAG topology that users can see is usually a logical graph. For example, the logview diagram is a logical graph that describes the job running process even though it contains physical information, that is, the concurrency of each logical node.

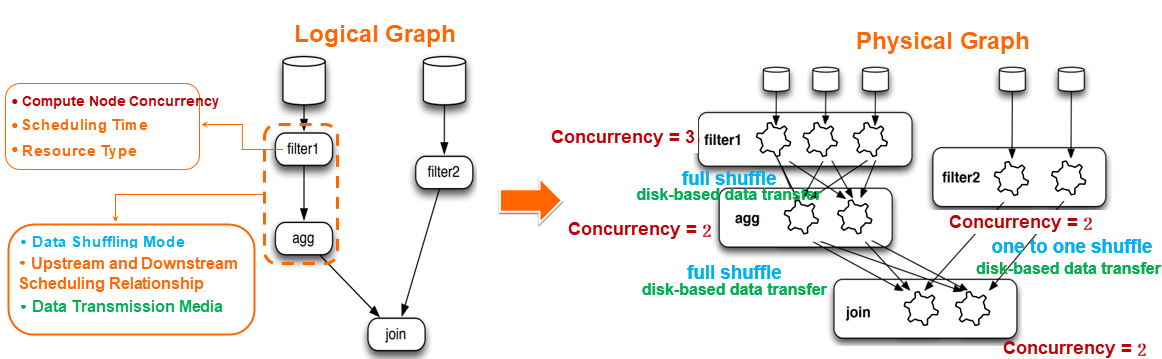

To be specific:

There are many equivalent methods for changing a logical graph to a physical graph. One of the important responsibilities of the DAG is to select a proper method to change the logical graph to a physical graph and flexibly adjust the graph. As shown in the preceding figure, mapping a logical graph to a physical graph is done to answer questions about the physical features of graph nodes and connection edges. After these questions are answered, the physical graph can be executed in the distributed system.
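The mapping described above can be pictured with a minimal sketch. The class and function names here (`LogicalNode`, `LogicalEdge`, `expand_to_physical`) are hypothetical and not part of Job Scheduler. The sketch assumes the only physical features to decide are the concurrency of each node and a full shuffle on every edge, which is just one of the many equivalent mappings the paragraph mentions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogicalNode:
    name: str


@dataclass(frozen=True)
class LogicalEdge:
    src: str
    dst: str


def expand_to_physical(nodes, edges, concurrency):
    """Expand a logical graph into physical task instances and links.

    `concurrency` maps each logical node name to its number of instances.
    Every edge is expanded as a full shuffle (all upstream instances feed
    all downstream instances); this is an assumption of the sketch.
    """
    tasks = {n.name: [f"{n.name}#{i}" for i in range(concurrency[n.name])]
             for n in nodes}
    links = [(u, d)
             for e in edges
             for u in tasks[e.src]
             for d in tasks[e.dst]]
    return tasks, links


nodes = [LogicalNode("M1"), LogicalNode("R2")]
edges = [LogicalEdge("M1", "R2")]
tasks, links = expand_to_physical(nodes, edges, {"M1": 3, "R2": 2})
print(tasks["M1"])  # the physical instances of logical node M1
print(len(links))   # number of physical links created for the M1 -> R2 edge
```

Changing only the `concurrency` argument yields a different physical graph for the same logical graph, which is the degree of freedom the DAG component controls.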

As the Job Scheduler distributed job execution framework, DAG 1.0 was developed when the Alibaba Cloud Apsara system was created. Over the past 10 years, DAG 1.0 has supported Alibaba big data businesses and developed advantages over other frameworks in the industry in terms of the system scale, reliability, and other aspects. Even though DAG 1.0 continuously evolved over the past 10 years, it inherited some features of the Map-Reduce execution framework and does not have a clear stratification between the logical and physical graphs. Therefore, it was difficult for DAG 1.0 to support more dynamic features during DAG execution or various computing modes. Currently, two completely separated distributed execution frameworks are used for the offline job mode and near real-time job mode (smode) for SQL jobs on the MaxCompute platform. In most cases, either the performance or resource utilization is prioritized. Therefore, a good balance cannot be achieved between the two.

With the updates to the MaxCompute and PAI engines and the evolution of new features, upper-layer distributed computing capabilities are constantly increasing. Therefore, we need the AM to be more dynamic and flexible in job management, DAG execution, and other aspects. To support the development of the computing platforms over the next 10 years, the Job Scheduler team started the DAG 2.0 project to completely replace the JobMaster component of DAG 1.0, upgrading its code and features. This would allow it to better support upper-layer computing requirements and leverage the upgrades to the shuffle service and FuxiMaster (Resource Manager) features. To provide enterprise services, a good distributed execution framework needs to provide support for hyperscale and large-throughput jobs in Alibaba as well as various scale and computing mode requirements on the cloud. In addition to developing hyperscale extended system capabilities, we needed to allow big data systems to use Job Scheduler more easily and leverage the intelligent and dynamic capabilities of the system to provide big data enterprise services adaptable to various data scales and processing modes. These were important considerations when designing the DAG 2.0 architecture.

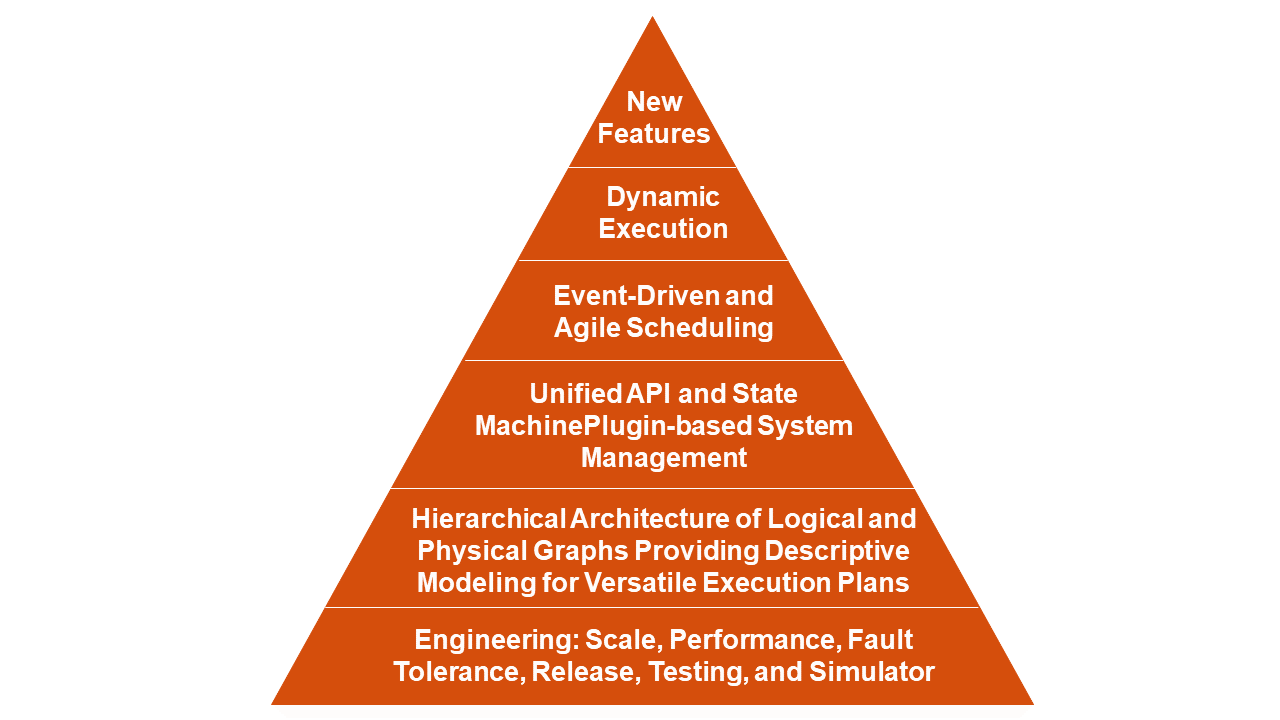

After we investigated the DAG components of various distributed systems in the industry, including Spark, Flink, Dryad, Tez, and TensorFlow, we used the Dryad and Tez frameworks as references in the DAG 2.0 project. Through foundational design, such as the clear stratification of the logical and physical graphs, extendable state machine management, plugin-based system management, and event-driven scheduling policies, DAG 2.0 can centrally manage various computing modes of the computing platform and provide better dynamic adjustment capabilities at different layers during job running.

The execution plan of a traditional distributed job is determined before the job is submitted. For example, an SQL statement generates an execution diagram after being processed by the compiler and optimizer, and the execution diagram is converted to an execution plan in the Job Scheduler distributed system.

This job process is standard in big data systems. However, if DAG execution cannot adapt to dynamic adjustment, the whole execution plan needs to be determined in advance. As a result, there is little opportunity to dynamically adjust running jobs. To convert the DAG execution logical graph to a physical graph, the distributed system must understand the job logic and features of data to process in advance and be able to accurately answer questions about the physical features of nodes and connection edges during job running.

However, many questions related to physical features cannot be answered before the jobs run. For example, before a distributed job runs, only the features of the original input data, such as the data volume, can be obtained. For deep DAG execution, this means that only the physical plan of the root nodes (such as their concurrency) can be chosen properly, and the physical features of downstream nodes and edges can only be guessed at based on certain rules. When the input data has rich statistics, the optimizer can combine the statistics with the features of operators in the execution plan to deduce the features of intermediate data generated in each step of the execution process. However, such a deduction must overcome major challenges during implementation, especially in the actual production environment of Alibaba. These challenges include:

Uncertainty concerning job running can result from various causes and requires a good distributed job running system to dynamically adjust job running based on intermediate data features. However, intermediate data features can be accurately obtained only after intermediate data is generated during execution. Therefore, a lack of dynamic capabilities may cause problems in online operations, including the following:

The DAG or AM, as the unique central node and scheduling control node for distributed jobs, is the only distributed system component that can collect and aggregate related data and dynamically adjust job running based on the data features. This component can adjust simple physical execution graphs (such as dynamic concurrency) as well as the complex shuffling method and data orchestration method. The logical execution graph may also need to be adjusted based on data features. Dynamic adjustment of the logical graph is a new solution that we are exploring in DAG 2.0.

Clear physical and logical stratification of nodes, edges, and graphs, event-based data collection and scheduling management, and plugin-based feature implementation allow DAG 2.0 to collect data during job running and use it to systematically answer the essential questions when converting a logical graph into a physical graph. Both the physical and logical graphs can be dynamic when necessary, and the execution plan can be properly adjusted. In the following implementation scenarios, we will further illustrate how the strong dynamic capabilities of DAG 2.0 help ensure more adaptive and efficient distributed job running.

DAG 2.0 can describe different physical features of nodes and edges in the abstract and hierarchical node, edge, and graph architecture to support different computing modes. The distributed execution frameworks of various distributed data processing engines, including Spark, Flink, Hive, Scope, and TensorFlow, are derived from the DAG model proposed by Dryad[1]. We think that an abstract and hierarchical description of graphs can better describe various models in a DAG system, such as offline, real-time, streaming, and progressive computing. When DAG 2.0 was first implemented, its primary goal was to use the same set of code and architecture systems to unify several computing modes running on the Job Scheduler platform, including MaxCompute offline jobs and near real-time jobs, PAI TensorFlow jobs, and other non-SQL jobs. In the future, we plan to explore more novel computing modes step-by-step.

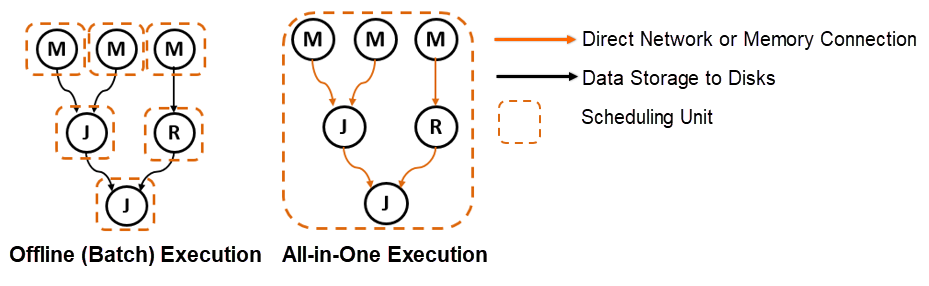

Most jobs on the MaxCompute platform are SQL offline jobs (batch) and near real-time jobs (smode). For historical reasons, the resource management and running of MaxCompute SQL jobs in the offline job and near real-time job modes are implemented based on two completely separate sets of code. Neither the code nor the features of one mode could be reused by the other. As a result, we could not strike a balance between resource utilization and execution performance. In the DAG 2.0 model, these two modes are integrated and unified, as shown in the following figure.

Offline jobs and near real-time jobs can be accurately described after different physical features are mapped to logical nodes and edges.

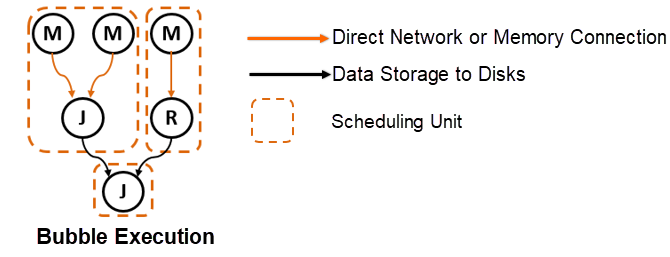

Due to the features of the offline job mode, such as on-demand resource application and intermediate data storage to the disk, offline jobs have obvious advantages in resource utilization, scale, and stability. In near real-time job mode, resident computing resource pools and gang scheduling (greedy resource application) reduce overhead during job running, ensure pipelined data transmission, and accelerate job running. However, because of how it uses resources, this mode cannot support large-scale jobs broadly. DAG 2.0 unifies these two computing modes on the same architecture. This unified description method makes it possible to balance high resource utilization for offline jobs and high performance for near real-time jobs. When the scheduling unit can be adjusted freely, a new hybrid computing mode is possible. We call it the bubble execution mode.

This bubble mode enables DAG users (the developers of upper-layer computing engines like the MaxCompute optimizer) to flexibly cut out bubble subgraphs in the execution plan based on the execution plan features and engine users' sensitivity to resource usage and performance. In the bubble, methods, such as direct network connection and compute node prefetch, are used to improve performance. Nodes that do not use the bubble execution mode still run in the traditional offline job mode. The offline job mode and near real-time job mode can be described as two extreme cases of the bubble mode. In the new unified model, the computing engine and execution framework can select different combinations of resource utilization and performance based on actual requirements. Typical application scenarios include:

We only list two simple policies here. There are many other refined and targeted optimization policies. Flexible bubble-based computing provided at the DAG layer allows upper-layer computing engines to select proper policies in different scenarios to better support various computing requirements.
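To make the idea of cutting bubbles concrete, here is a deliberately simplified sketch. It is not the MaxCompute optimizer's policy: it assumes a linear pipeline of stages in topological order and greedily groups consecutive stages into a bubble until a task cap would be exceeded. The function name `cut_bubbles`, the `cap` parameter, and the stage names are all hypothetical.

```python
def cut_bubbles(stages, cap):
    """Greedily group consecutive stages into bubbles of at most `cap` tasks.

    `stages` is a topologically ordered list of (name, concurrency) pairs.
    A stage larger than `cap` ends up alone in its own group, which in this
    sketch stands for plain offline execution of that stage.
    """
    bubbles, current, size = [], [], 0
    for name, concurrency in stages:
        # Close the current bubble if adding this stage would exceed the cap.
        if current and size + concurrency > cap:
            bubbles.append(current)
            current, size = [], 0
        current.append(name)
        size += concurrency
    if current:
        bubbles.append(current)
    return bubbles


stages = [("M1", 300), ("R2", 150), ("J3", 200), ("R4", 50), ("M5", 800)]
bubbles = cut_bubbles(stages, cap=500)
print(bubbles)
```

With a cap large enough to hold every stage, the whole job becomes one bubble (the near real-time extreme); with a cap of 1, every stage is its own group (the offline extreme).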

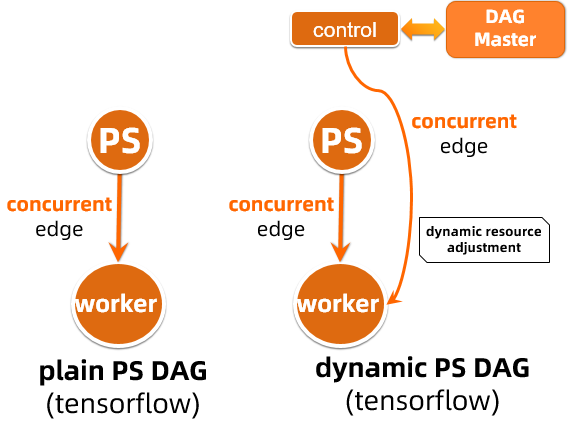

The underlying design of the DAG 1.0 execution framework was deeply influenced by the Map-Reduce mode. Edge connection between nodes mixes various semantics, including the scheduling sequence, running sequence, and data shuffling. In two nodes connected by an edge, the downstream node can be scheduled only after the upstream node runs, exits, and generates data. This is not the case for some new computing modes. For example, in the parameter server computing mode, the parameter server (PS) and worker have the following features in the running process:

In this running mode, the PS and worker have a scheduling dependency relationship. However, the PS and worker must run at the same time, so there is no logic that says the worker can be scheduled only after the PS exits. Therefore, in the DAG 1.0 framework, the PS and worker can only be regarded as two independent stages for scheduling and running. In addition, all PSs and workers can only communicate and coordinate with each other through a direct connection between computing nodes and with the assistance of external entities, such as ZooKeeper or Apsara Name Service and Distributed Lock Synchronization System. As a result, the AM or DAG, as the central management node, does not take effect, and jobs are managed by the computing engine and completed through coordination between compute nodes. This decentralized management method cannot handle complicated scenarios, such as failover.

In the DAG 2.0 framework, to accurately describe the scheduling and running relationships between nodes, we introduced and implemented the concurrent edge feature. Upstream and downstream nodes connected using a concurrent edge can be scheduled sequentially and run simultaneously. The scheduling time can also be flexibly configured. For example, the upstream and downstream nodes can be scheduled synchronously, or the downstream node can be scheduled by triggering an event after the upstream node runs for a certain time. With this flexible description capability, PS jobs can be described using the following DAG, which ensures more accurate descriptions of the relationships between job nodes. This means the AM can understand the job topology to manage jobs efficiently. For example, it can use different processing policies for failovers of different compute nodes.

In addition, the new description model of DAG 2.0 allows more dynamic optimizations for TensorFlow or PS jobs on the PAI platform and new innovative work. In the preceding dynamic PS DAG, a control node is introduced. The node can dynamically adjust the resource application and concurrency of jobs before and after the PS workload runs to optimize job running.

In fact, the concurrent edge describes the physical features of the running or scheduling time of the upstream and downstream nodes. It is also an important extension on the architecture, with clear logical and physical graph stratification. In addition to PS job modes, the concurrent edge is also important for bubble execution mode, which unifies the offline job and near real-time job modes.
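The difference between a traditional edge and a concurrent edge can be sketched as a scheduling predicate. This is an illustration under assumptions, not the DAG 2.0 API: the `EdgeType` enum, the state strings, and `can_schedule` are hypothetical names, and the sketch only models the simplest rule, where a concurrent edge lets the downstream node start as soon as the upstream node is running.

```python
from enum import Enum


class EdgeType(Enum):
    SEQUENTIAL = "sequential"  # downstream waits for upstream to finish
    CONCURRENT = "concurrent"  # downstream may start while upstream runs


def can_schedule(node, edges, state):
    """Return True if every upstream dependency of `node` is satisfied."""
    for src, dst, kind in edges:
        if dst != node:
            continue
        if kind is EdgeType.SEQUENTIAL and state[src] != "finished":
            return False
        if kind is EdgeType.CONCURRENT and state[src] not in ("running", "finished"):
            return False
    return True


state = {"ps": "running", "worker": "waiting"}
concurrent = [("ps", "worker", EdgeType.CONCURRENT)]
sequential = [("ps", "worker", EdgeType.SEQUENTIAL)]
print(can_schedule("worker", concurrent, state))  # PS is running, worker may start
print(can_schedule("worker", sequential, state))  # worker would wait for PS to exit
```

Under the sequential rule a worker could never start, because a parameter server does not exit while training is in progress. That is the mismatch the concurrent edge removes.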

As the foundation for the distributed operation of the computing platform, DAG 2.0 provides more flexible and efficient execution capabilities for various upper-layer computing engines. These capabilities are implemented based on detailed computing scenarios. Next, we will use interworking between DAG 2.0 and various upper-layer computing engines, such as MaxCompute and PAI, to describe how the DAG 2.0 scheduling and execution framework empowers upper-layer computing and applications.

As it must run computing jobs on different platforms, MaxCompute has various computing scenarios, and complex logic for offline jobs in particular, which provides diversified scenarios for implementing dynamic graph capabilities. We will give some examples by looking at dynamic physical and logical graphs.

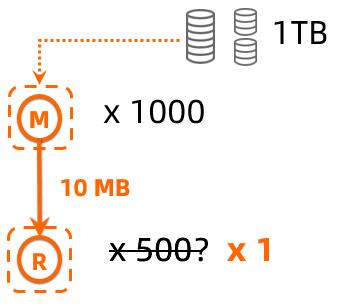

Dynamic concurrency adjustment based on the amount of intermediate data during job running is a basic DAG dynamic adjustment capability. In a traditional static MR job, the mapper concurrency can be accurately determined based on the read data volume. However, the reducer concurrency can only be deduced. As shown in the following figure, when a 1 TB MR job is submitted for processing, the reducer concurrency of 500 is deduced based on the mapper concurrency of 1000. However, if the mappers filter a large amount of data and ultimately generate 10 MB of intermediate data, 500 concurrent reducers represent an obvious waste of resources. The DAG must dynamically adjust the reducer concurrency (500 to 1) based on the actual amount of data generated by mappers.

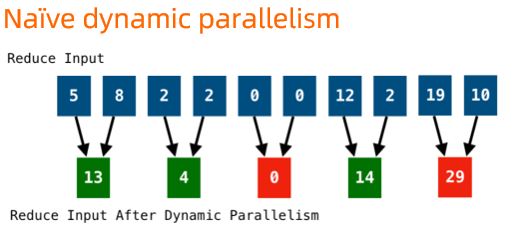

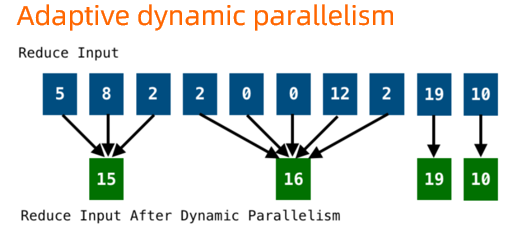
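The 1 TB example above reduces to a small calculation once the actual mapper output size is known. The following sketch assumes a target of 1 GB of intermediate data per reducer; that target, the function name, and its parameters are illustrative assumptions rather than Job Scheduler settings.

```python
import math

MB = 1024 ** 2
GB = 1024 ** 3


def reducer_concurrency(intermediate_bytes, target_bytes_per_reducer, planned):
    """Pick reducer concurrency from the measured intermediate data size.

    The result never exceeds the statically planned concurrency and is
    never lower than one reducer.
    """
    needed = math.ceil(intermediate_bytes / target_bytes_per_reducer)
    return max(1, min(planned, needed))


# Mappers filtered 1 TB of input down to 10 MB of intermediate data.
print(reducer_concurrency(10 * MB, 1 * GB, planned=500))
# With 300 GB of intermediate data, 300 reducers are kept.
print(reducer_concurrency(300 * GB, 1 * GB, planned=500))
```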

In actual implementation, the output partition data of upstream nodes is simply aggregated according to the concurrency adjustment ratio. As shown in the following figure, when the concurrency is adjusted from 10 to 5, unnecessary data skew may occur.

DAG 2.0 dynamic concurrency adjustment based on intermediate data comprehensively considers the possibility of skewed data partitions and optimizes the dynamic adjustment policy to ensure an even data distribution after adjustment. This effectively prevents data skew caused by dynamic adjustments.

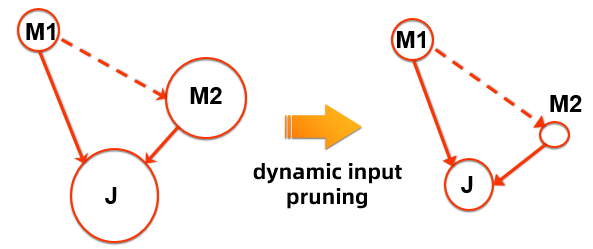
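The skew problem can be reproduced with a few numbers. The sketch below compares ratio-based merging of adjacent partitions with a greedy "largest partition to the lightest group" heuristic. The greedy heuristic is a stand-in chosen for illustration; the article does not state which balancing algorithm DAG 2.0 uses, and the partition sizes are made up.

```python
import heapq


def merge_by_ratio(sizes, target):
    """Naive policy: merge each run of len(sizes) // target adjacent partitions.

    Assumes len(sizes) is a multiple of target.
    """
    step = len(sizes) // target
    return [sum(sizes[i:i + step]) for i in range(0, len(sizes), step)]


def merge_balanced(sizes, target):
    """Greedy policy: assign the largest partitions first to the lightest group."""
    heap = [(0, g) for g in range(target)]
    heapq.heapify(heap)
    loads = [0] * target
    for size in sorted(sizes, reverse=True):
        load, g = heapq.heappop(heap)
        loads[g] = load + size
        heapq.heappush(heap, (loads[g], g))
    return sorted(loads, reverse=True)


# Ten upstream partitions, two of them much larger than the rest.
sizes = [90, 80, 5, 5, 5, 5, 5, 5, 10, 10]
naive = merge_by_ratio(sizes, 5)
balanced = merge_balanced(sizes, 5)
print(naive, max(naive))
print(balanced, max(balanced))
```

Both policies move the same total amount of data, but in this example the heaviest reducer under ratio-based merging receives nearly twice as much as under the balanced policy.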

This downstream node concurrency adjustment, the most common case, is an intuitive presentation of DAG 2.0 dynamic physical graphs. In the DAG 2.0 project, we also explore source data-based dynamic concurrency adjustment based on the data processing features of the computing engine. For example, consider a join of two source tables (M1 join M2 at J), with node size representing the amount of data processed, as shown in the following figure. M1 processes a medium-sized table (for example, M1 concurrency is 10) and M2 processes a large table (M2 concurrency is 1000). The most inefficient scheduling is based on the 10 + 1000 concurrency. In addition, all M2 output needs to be shuffled to J, and therefore the J concurrency is also large (about 1000).

In this computing pattern, M2 only needs to read and process data that can be joined with the M1 output. This means that if the M1 output is much smaller than the data M2 would otherwise process, and the overall execution cost justifies it, we can first schedule M1 for computing, aggregate M1 output data statistics at the AM or DAG, and select only the data that is valid for M2. The selection of data valid for M2 is essentially a predicate pushdown process, which can be jointly determined by the optimizer of the computing engine and the runtime. This means that M2 concurrency adjustment in this scenario is closely associated with upper-layer computing.

For example, if M2 processes a partitioned table with 1000 partitions and the downstream joiner joins on the partition key, theoretically only data in partitions that can be joined with the output of M1 needs to be read. If the M1 output only contains three partition keys of the M2 source table, M2 only needs to schedule three compute nodes to process data from these three partitions. As a result, the M2 concurrency is decreased from the default 1000 to 3. This dramatically reduces computing resource consumption and accelerates job running several times over, while maintaining the same logical computing equivalence and correctness. Optimizations in this scenario include:

As shown in the preceding figure, to ensure the scheduling sequence of M1 to M2, the DAG introduces a dependency edge between M1 and M2. This dependency edge only presents the execution sequence and does not have data shuffling. It is different from the assumption of tightly bound edge connections and data shuffling in the traditional MR or DAG execution framework. It is one of the extensions for the edge in DAG 2.0.
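The partition example above can be sketched as follows. It assumes M1 has already finished (which is what the dependency edge guarantees) and that the AM has aggregated the set of join keys seen in the M1 output. The function name and the `ds=` partition naming are hypothetical.

```python
def plan_m2_tasks(m2_partitions, m1_join_keys):
    """Return the M2 partitions worth scanning: those whose key appears in M1 output."""
    return sorted(p for p in m2_partitions if p in m1_join_keys)


# M2 reads a table with 1000 partitions; M1 output contains only three keys.
m2_partitions = {f"ds={day:04d}" for day in range(1000)}
m1_join_keys = {"ds=0003", "ds=0042", "ds=0999"}

m2_tasks = plan_m2_tasks(m2_partitions, m1_join_keys)
print(len(m2_partitions), "->", len(m2_tasks))
```

One compute node per surviving partition gives the reduction from 1000 to 3 described in the text, with no data shuffled along the M1 to M2 edge.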

The DAG execution engine, as the underlying distributed scheduling execution framework, directly interworks with the development team for upper-layer computing engines. Except for the performance improvement, users cannot directly perceive upgrades of the DAG execution engine. The following example is one that users can perceive. This shows the DAG dynamic execution capabilities and benefits to users. This example is a LIMIT optimization based on DAG dynamic capabilities.

For SQL users, LIMIT is a common operation used to understand data table features for some basic ad hoc operations on data. The following shows a LIMIT example.

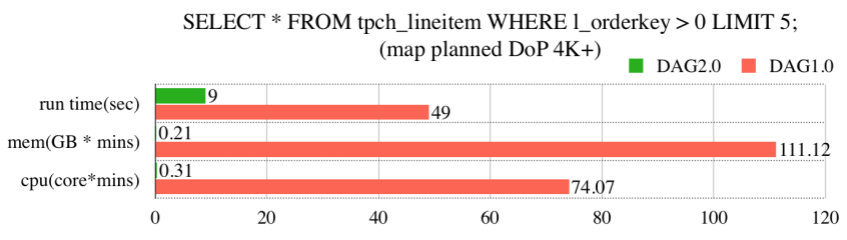

SELECT * FROM tpch_lineitem WHERE l_orderkey > 0 LIMIT 5;

In the distributed execution framework, the execution plan of this operation splits the source table, schedules the required number of mappers to read all data, and aggregates mapper output to the reducer for the final LIMIT operation. If the source table (tpch_lineitem in the preceding example) is large and requires 1000 mappers to read, the scheduling cost for the whole distributed execution process is 1000 mappers and one reducer. In this process, there are some aspects that can be optimized by the upper-layer computing engine. For example, each mapper can exit after it generates the number of records required for LIMIT (LIMIT 5 in the preceding example), so it does not need to process all data shards allocated to it. However, in a static execution framework, the 1001 compute nodes must be scheduled to obtain this simple information. This results in huge overhead for executing ad hoc queries, especially when cluster resources are tight.

To facilitate such LIMIT scenarios, DAG 2.0 has made some optimizations based on the dynamic capabilities of the new execution framework, including:

Flexible and dynamic interaction between the computing engine and DAG in the execution process can greatly reduce resource consumption and accelerate job running. According to offline test results and actual online performance, most jobs can exit in advance after one mapper node is executed, and not all mapper nodes need to be scheduled.
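One way to picture this kind of early exit is incremental scheduling: start a small batch of mappers, check whether enough rows have been produced, and only then schedule more. The sketch below simulates that loop. It is an illustration under assumptions, not the DAG 2.0 implementation; `run_limit_query`, `batch_size`, and the simulated mappers are hypothetical.

```python
def run_limit_query(mappers, limit, batch_size=1):
    """Schedule mappers in batches and stop once `limit` rows are collected.

    `mappers` is a list of callables; calling one simulates running that
    mapper and returns the rows it produced.
    Returns the collected rows and the number of mappers actually scheduled.
    """
    rows, scheduled = [], 0
    for start in range(0, len(mappers), batch_size):
        for mapper in mappers[start:start + batch_size]:
            scheduled += 1
            # A mapper never needs to contribute more than `limit` rows.
            rows.extend(mapper()[:limit])
        if len(rows) >= limit:
            break  # remaining mappers are never scheduled
    return rows[:limit], scheduled


# 1000 simulated mappers, each able to produce 100 matching rows.
dense = [lambda i=i: [(i, r) for r in range(100)] for i in range(1000)]
rows, scheduled = run_limit_query(dense, limit=5)
print(len(rows), scheduled)

# Sparse data: each mapper yields only 2 matching rows.
sparse = [lambda i=i: [(i, 0), (i, 1)] for i in range(1000)]
sparse_rows, sparse_scheduled = run_limit_query(sparse, limit=5)
print(len(sparse_rows), sparse_scheduled)
```

A larger `batch_size` trades some wasted mappers for lower latency when matching rows are rare.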

The following figure shows the differences before and after optimizing the preceding query when the mapper concurrency is 4,000 in an offline test.

After optimization, the execution time is reduced by over 5x, and the consumption of computing resources is reduced hundreds of times over.

As a typical example, this offline test result is something of an ideal situation. To evaluate the actual effect, we selected online jobs to which the LIMIT optimization applied after DAG 2.0 was released. The statistical results after one week were:

The optimization saves computing resources equivalent to 254.5 cores x min CPU + 207.3 GB x min and reduces scheduling by 4,349 computing nodes for each job.

As an optimization for special scenarios, LIMIT execution optimization involves the adjustment of different policies during DAG execution. This segmented optimization intuitively presents the benefits of the DAG 2.0 architecture. The flexible architecture ensures greater dynamic adjustment capabilities during DAG execution and more dedicated optimizations involving computing engines.

Dynamic concurrency adjustment in different scenarios and dynamic adjustment of the scheduling execution policy are only two examples of the dynamic adjustment of graph physical features. Physical feature runtime adjustment has various applications in the DAG 2.0 framework, such as dynamic data orchestration and shuffling for data skew during running. Next, we will introduce some ideas about the dynamic adjustment of logical graphs in DAG 2.0.

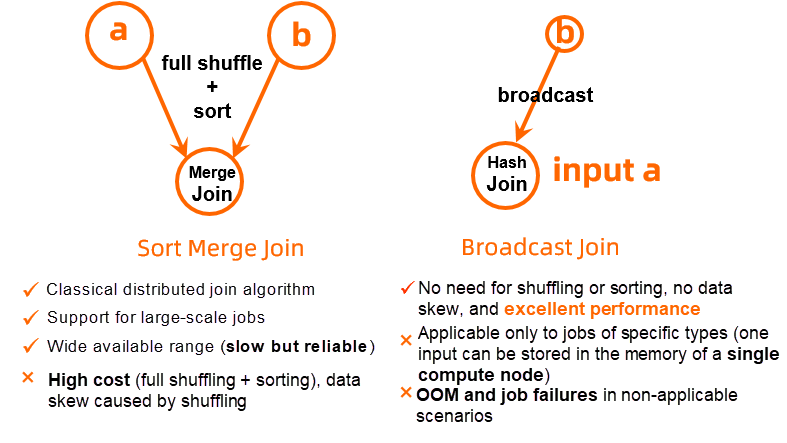

In distributed SQL jobs, map join is a common optimization method. If one of the two tables to be joined is small and its data can be stored in the memory of a single compute node, shuffling does not need to be performed for it. Instead, all its data is broadcast to each distributed compute node that processes the large table, and hash tables are built in memory to complete the join. Map join can dramatically reduce large table shuffling and sorting and improve job running performance. However, if the data of the small table cannot be stored in the memory of a single compute node, an out-of-memory (OOM) error will occur when the hash tables are built. As a result, the whole distributed job will fail and need to run again. Even though map join can greatly improve performance if it is correctly used, in most cases, the optimizer needs explicit map join hints from users to generate the map join plan. In addition, neither the user nor the optimizer can accurately determine the size of a non-source table input because the amount of intermediate data can only be accurately obtained during job running.

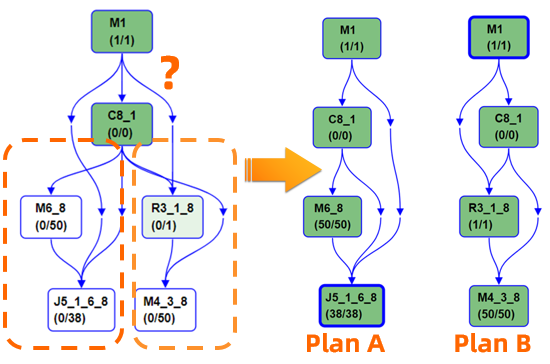

Map join and default sort-merge join are two different optimizer execution plans. At the DAG layer, they correspond to two different logical graphs. To support dynamic optimization based on intermediate data features during job running, the DAG framework needs to have dynamic logical graph execution capabilities. This is exactly what is provided by the conditional join feature developed in DAG 2.0. As shown in the following figure, when the algorithm used for the join operation cannot be determined in advance, the distributed scheduling execution framework allows the optimizer to submit a conditional DAG. This DAG contains the execution plan branches of the two join methods. During execution, the AM dynamically selects a branch (plan A or plan B) to execute based on the upstream data volume. This dynamic logical graph execution process ensures that an optimal execution plan is selected based on generated intermediate data features when a job runs.

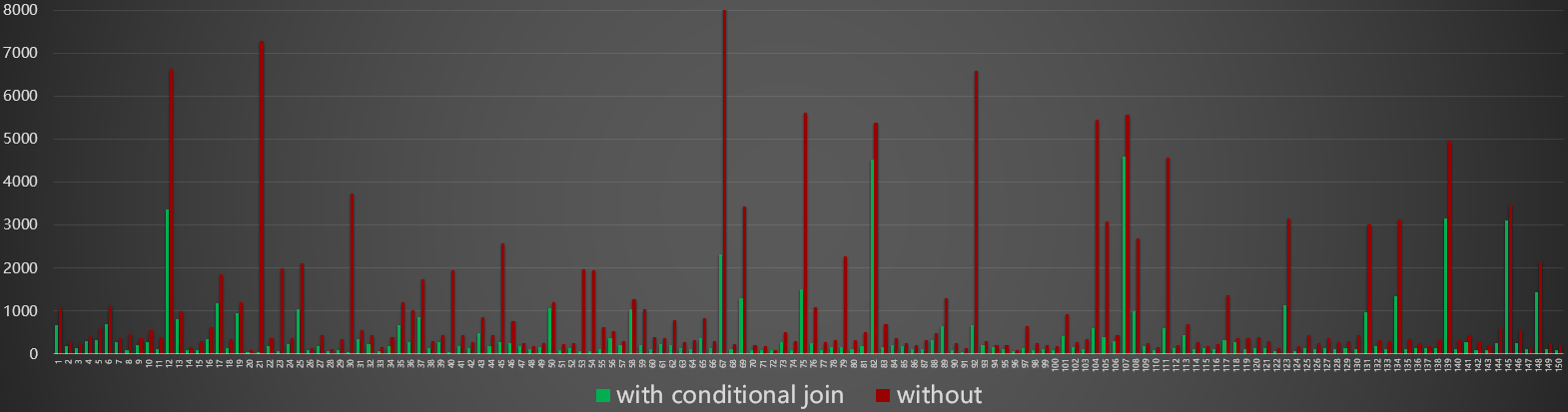
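The branch selection can be sketched as a node that carries both plans and picks one when the upstream size becomes known. The class name, field names, and the 512 MB memory budget are assumptions made for illustration. The article only states that the AM selects a branch based on the upstream data volume.

```python
from dataclasses import dataclass

MB = 1024 ** 2


@dataclass
class ConditionalJoin:
    """Hypothetical conditional DAG node holding both join branches."""
    memory_budget_bytes: int
    plan_a: str = "map_join"         # broadcast the small side, build a hash table
    plan_b: str = "sort_merge_join"  # shuffle and sort both sides

    def select(self, small_side_bytes: int) -> str:
        """Pick a branch once the upstream output size is known at runtime."""
        if small_side_bytes <= self.memory_budget_bytes:
            return self.plan_a
        return self.plan_b


join = ConditionalJoin(memory_budget_bytes=512 * MB)
print(join.select(64 * MB))         # small side fits in memory
print(join.select(8 * 1024 * MB))   # small side would risk OOM
```

Because the decision is deferred to runtime, neither a user hint nor an optimizer estimate of the intermediate data size is needed.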

Conditional join is the first implementation scenario of dynamic logical graphs. When a batch of applicable online jobs is selected for testing, dynamic conditional join improves the performance by about 300% compared with a static execution plan.

The bubble mode is a new job running mode that we have explored in the DAG 2.0 architecture. We can adjust the bubble size and location to obtain different balances between performance and resource utilization. We will give some intuitive examples to help you understand how the bubble mode is applied in distributed jobs.

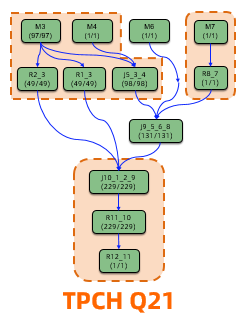

As shown in the preceding figure, the TPC-H Q21 job is cut into three bubbles. Data can be efficiently pipelined between nodes, and scheduling can be accelerated through hot nodes. Resource consumption (CPU x time) is 35% of that of near real-time jobs, while performance is 96% of that of near real-time jobs in all-in-one scheduling mode and about 70% higher than that of offline jobs.

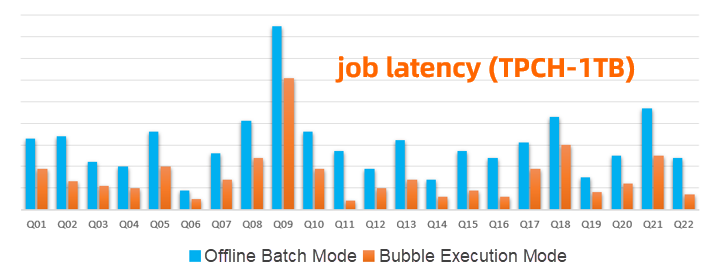

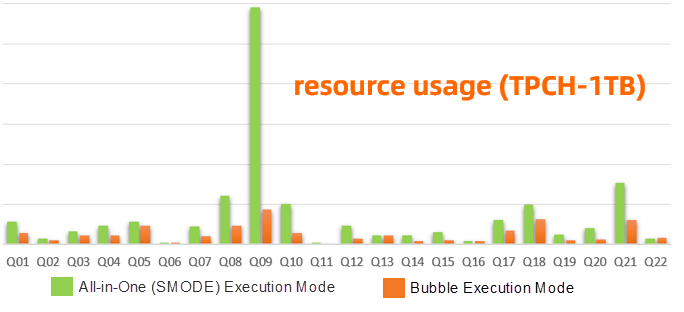

In the standard TPC-H 1 TB test, the bubble mode ensures a better balance between performance and resource utilization than the offline job mode and the near real-time all-in-one mode (gang scheduling). When the greedy bubble policy is selected (bubble size capped at 500), the bubble mode improves the job performance by 200%, with resource consumption increasing by only 17%, when compared with the offline batch mode. The bubble mode can attain 85% of the performance of the near real-time all-in-one mode with less than 40% of the resource consumption (CPU x time). The bubble mode therefore makes efficient use of system resources. The bubble mode has been applied to all online jobs in the Alibaba Group. The actual online job performance is similar to that observed in the TPC-H test.

As mentioned before, the bubble mode supports different cut policies, but only the cut policy is discussed here. Closely combined with the upper-layer computing engine, such as the MaxCompute optimizer, this DAG distributed scheduling bubble execution capability allows us to strike an optimal balance between performance and resource utilization based on the available resources and computing features of the job.

In a traditional distributed job, the type and amount of resources (CPU, GPU, and memory) required by each compute node are determined in advance. However, it is difficult to select a proper compute node type and size before the distributed job runs. Even computing engine developers need some complex rules to estimate the proper configuration. In computing modes in which the configuration is exposed to users, it is even more difficult for users to select a proper configuration.

Here, we will use TensorFlow (TF) jobs on the PAI platform as examples to describe how the dynamic resource configuration feature in DAG 2.0 helps TF jobs select suitable GPU types and improve GPU utilization. Compared with CPUs, GPUs are a new type of computing resource featuring faster hardware updates. Most ordinary users are not familiar with the computing features of GPUs. As a result, users often select improper GPU types. GPUs are scarce resources in online operations. The GPU quantity applied for online always exceeds the total number of GPUs in a cluster. Therefore, users have to queue for a long time to obtain resources. However, the actual GPU utilization in clusters is low, at about 20% on average. The gap between the application quantity and actual use is usually caused by improper GPU resource configurations specified during job configuration.

In the DAG 2.0 framework, an extra computing control node is provided for the GPU resources of PAI TF jobs. For more information, see the information on dynamic PS DAG in section 2.2.2 "Support for New Computing Modes." The node can run the resource prediction algorithm of the PAI platform to determine the GPU type required by the current job and then send a dynamic event to the AM to modify the GPU type applied for by the downstream worker if necessary. Accurate resource usage can be predicted via a dry run on sample data or via history-based optimization (HBO), which predicts proper resource usage given data characteristics, the type of training algorithm, and information collected from historical jobs.

In this scenario, the control node and the worker are connected through a concurrent edge. Scheduling is triggered on the edge when the control node selects a resource type and sends an event. In online functional testing, this dynamic resource configuration during job running improved cluster GPU utilization by more than 40%.
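The interaction described above can be pictured with a toy model. The following is a minimal sketch, not the DAG 2.0 API: the class names (`ApplicationMaster`, `ResourceUpdateEvent`, `Vertex`), the GPU type labels, and the threshold inside `predict_gpu_type` are all hypothetical and stand in for the PAI prediction algorithm and the real event framework.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ResourceSpec:
    gpu_type: str
    gpu_count: int = 1


@dataclass
class Vertex:
    name: str
    resources: ResourceSpec
    scheduled: bool = False


@dataclass
class ResourceUpdateEvent:
    """Hypothetical dynamic event sent from the control node to the AM."""
    target_vertex: str
    gpu_type: str


class ApplicationMaster:
    """Toy AM that owns the vertices and reacts to events (illustrative only)."""

    def __init__(self) -> None:
        self.vertices: Dict[str, Vertex] = {}
        self.log: List[str] = []

    def add_vertex(self, vertex: Vertex) -> None:
        self.vertices[vertex.name] = vertex

    def handle(self, event: ResourceUpdateEvent) -> None:
        worker = self.vertices[event.target_vertex]
        # Rewrite the requested GPU type only if the prediction differs.
        if worker.resources.gpu_type != event.gpu_type:
            self.log.append(
                f"{worker.name}: {worker.resources.gpu_type} -> {event.gpu_type}"
            )
            worker.resources.gpu_type = event.gpu_type
        # The event arriving over the edge is what releases the worker
        # for scheduling in this model.
        worker.scheduled = True


def predict_gpu_type(sample_gpu_mem_gb: float) -> str:
    """Placeholder for a resource prediction step; threshold and labels are invented."""
    return "small-gpu" if sample_gpu_mem_gb <= 8 else "large-gpu"


def run_control_node(
    am: ApplicationMaster, worker_name: str, sample_gpu_mem_gb: float
) -> ResourceUpdateEvent:
    """Control node: predict a GPU type, then notify the AM with an event."""
    event = ResourceUpdateEvent(worker_name, predict_gpu_type(sample_gpu_mem_gb))
    am.handle(event)
    return event


if __name__ == "__main__":
    am = ApplicationMaster()
    am.add_vertex(Vertex("worker", ResourceSpec(gpu_type="large-gpu")))
    run_control_node(am, "worker", sample_gpu_mem_gb=6.0)
    print(am.vertices["worker"], am.log)
```

The point of the sketch is the ordering: the worker is not scheduled until the control node has reported, so the resource request that reaches the resource manager already reflects the prediction.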

Resources are an important dimension of a compute node's physical features, and dynamically adjusting them while a job runs is widely used on the PAI and MaxCompute platforms. In MaxCompute SQL jobs, the CPU or memory size of upstream and downstream nodes can be effectively predicted based on the upstream data features. When an out-of-memory (OOM) error occurs, the memory applied for by the retried compute node can be raised to prevent job failures. These are new features implemented in the DAG 2.0 architecture, and we will not elaborate on them here.
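The OOM retry behavior mentioned above can be illustrated with a short sketch. It assumes a simple multiplicative growth policy with a cap; the function name, the growth factor, and the limits are hypothetical and are not taken from MaxCompute.

```python
from typing import Callable, List, Tuple


class OutOfMemory(Exception):
    """Raised by a task that ran out of memory at the granted size."""


def run_with_memory_escalation(
    task: Callable[[int], str],
    initial_mb: int,
    growth: float = 2.0,
    max_mb: int = 65536,
    max_attempts: int = 4,
) -> Tuple[str, List[int]]:
    """Run task(memory_mb); on OOM, retry with a larger memory request.

    Returns the task result and the list of memory sizes that were tried.
    """
    memory_mb = initial_mb
    attempts: List[int] = []
    for _ in range(max_attempts):
        attempts.append(memory_mb)
        try:
            return task(memory_mb), attempts
        except OutOfMemory:
            next_mb = min(int(memory_mb * growth), max_mb)
            if next_mb == memory_mb:
                break  # already at the cap, a further retry cannot help
            memory_mb = next_mb
    raise RuntimeError(f"task still out of memory after trying {attempts} MB")


if __name__ == "__main__":
    def needs_3000_mb(memory_mb: int) -> str:
        if memory_mb < 3000:
            raise OutOfMemory()
        return "ok"

    print(run_with_memory_escalation(needs_3000_mb, initial_mb=1024))
```

A real scheduler would more likely size the retry from observed peak usage than from a fixed factor; the fixed factor is used here only to keep the example small.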

The dynamic capabilities and flexibility of DAGs are the foundation of a distributed system. Combined with the upper-layer computing engine, they make upper-layer computing more efficient and accurate and significantly improve performance and resource utilization in specific scenarios. These are the new features and computing modes developed in the DAG 2.0 project. Beyond interworking with the computing engine to produce more efficient execution plans, agile scheduling is fundamental to the execution performance of the AM (DAG). DAG 2.0 scheduling policies are implemented on an event-driven framework and a flexible state machine design. The following figures show what DAG 2.0 has achieved in basic engineering quality and performance.

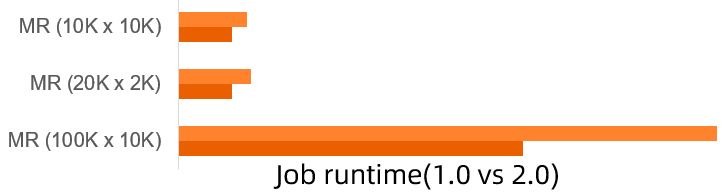

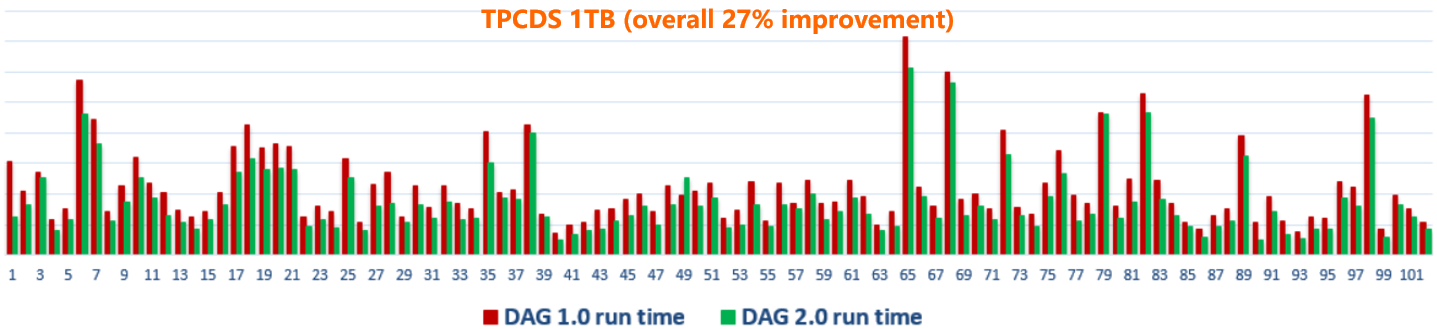

The simplest Map-Reduce (MR) jobs are used as examples. For this type of job, there is little opportunity to optimize the execution plan, so execution speed depends on the scheduling system's agility and its ability to remove blocking throughout the processing procedure. This example therefore highlights the scheduling performance advantages of DAG 2.0, which are especially obvious when the job scale is large. On the TPC-DS benchmark, which better represents the characteristics of production workloads, DAG 2.0 still brings a significant performance improvement thanks to its efficient scheduling, even when features such as dynamic DAG are disabled to allow a fair comparison based on the same execution plan and runtime.

How can a next-generation framework be brought into online scenarios seamlessly so that it can be applied online at large scale? This is an important topic, and it illustrates the difference between upgrading an actual production system and running a small-scale proof of concept for a new system. The Job Scheduler system now supports tens of millions of distributed jobs on big data computing platforms inside and outside the Alibaba Group. To completely replace the core distributed scheduling and execution component (the DAG or AM) of an online distributed production system that has supported our big data businesses for 10 years, while preventing failures in existing scenarios, we need more than just advanced architecture and design. Changing the engine while the system is running, and doing so with high quality, is more challenging than designing a new system architecture. To perform such upgrades, a stable engineering base and a test and release framework are indispensable. Without such a base, upper-layer dynamic features and new computing modes are impossible.

Currently, DAG 2.0 covers all SQL offline jobs and near real-time jobs on the MaxCompute platform, as well as TensorFlow jobs (CPU and GPU) and PyTorch jobs on the PAI platform. It supports tens of millions of distributed jobs every day and met the requirements of Double 11 and Double 12 in 2019. Under the pressure of the data peaks during these events (over 50% higher than in 2018), DAG 2.0 ensured on-time output for Alibaba's important baselines. Jobs of more types, such as cross-cluster copy jobs, are migrating to the DAG 2.0 architecture, and computing job capabilities have been upgraded based on the new architecture. The release of the DAG 2.0 framework lays a solid foundation for the development of new features in various computing scenarios.

The core architecture of Job Scheduler DAG 2.0 aims to consolidate the foundation for the long-term development of Alibaba computing platforms and support the combination of upper-layer computing engines with distributed scheduling to implement various innovations and create a new computing ecosystem. This architecture upgrade is an important first step in this process. To support enterprise-grade and full-spectrum computing platforms of different scales and modes, we need to deeply integrate the capabilities of the new architecture with the upper-layer computing engines and other components of the Job Scheduler system. In Alibaba application scenarios, DAG 2.0 has maintained its leading position in job scale while achieving multiple innovations in architecture and features, including:

In addition, the clear DAG 2.0 system architecture ensures the rapid development of new features and promotes platform and engine innovations. Due to space limitations, we could only introduce certain new functions and computing modes. We have also implemented or are in the process of developing many other innovative features, such as:

Improved core scheduling capabilities can provide enterprise-grade service capabilities to various distributed upper-layer computing engines. The benefits of these improved scheduling capabilities will be passed on to the enterprise customers served through Alibaba Cloud computing engines such as MaxCompute. Over the past 10 years, Alibaba's businesses have driven the construction of the largest cloud distributed platform in the industry. To better serve the Alibaba Group and our enterprise users, we hope that the platform's enterprise-grade service capabilities will keep improving in performance, scale, and intelligent adaptation. This will make distributed computing services easier to use and make big data more inclusive.

For more information, visit the MaxCompute official website
