By Guchang Pan (Chuntan)

For example, my machine has a low-end configuration, so I consider Redis slow only when latency reaches 2 ms. If your hardware is high-end, Redis may already count as slow in your environment at 0.5 ms.

Therefore, you need to know the baseline performance of Redis on your production server. Only then can you judge how high the latency must be before Redis counts as slow.

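To exclude network latency between the application server and the Redis server, run the test directly on the Redis server. The following command measures the intrinsic latency of the machine for 120 seconds (the argument is the test duration in seconds). It does not connect to Redis. Instead, it shows the latency that the kernel, hardware, or hypervisor adds on its own, which is the lower bound for any Redis instance on this host: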

./redis-cli --intrinsic-latency 120

Max latency so far: 17 microseconds.

Max latency so far: 44 microseconds.

Max latency so far: 94 microseconds.

Max latency so far: 110 microseconds.

Max latency so far: 119 microseconds.

36481658 total runs (avg latency: 3.2893 microseconds / 3289.32 nanoseconds per run).

Worst run took 36x longer than the average latency.

As shown in the output, the maximum latency observed within 120 seconds is 119 microseconds (0.119 milliseconds). You can use the following command to view the minimum, maximum, and average access latency of Redis over time:

$ redis-cli -h 127.0.0.1 -p 6379 --latency-history -i 1

min: 0, max: 1, avg: 0.13 (100 samples) -- 1.01 seconds range

min: 0, max: 1, avg: 0.12 (99 samples) -- 1.01 seconds range

min: 0, max: 1, avg: 0.13 (99 samples) -- 1.01 seconds range

min: 0, max: 1, avg: 0.10 (99 samples) -- 1.01 seconds range

min: 0, max: 1, avg: 0.13 (98 samples) -- 1.00 seconds range

min: 0, max: 1, avg: 0.08 (99 samples) -- 1.01 seconds range

The values are in milliseconds, and -i 1 starts a new sampling window every second. If the runtime latency you observe is twice the baseline or more, you can assume that Redis is slowing down.
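You can apply the "twice the baseline" rule mechanically to saved --latency-history output. The Python sketch below parses lines in the format shown above and returns the windows whose average latency reaches a chosen multiple of your baseline. The function names are hypothetical. The baseline you pass in is whatever you measured as normal for your own environment.

```python
import re

# Matches one line of `redis-cli --latency-history` output, e.g.
# "min: 0, max: 1, avg: 0.13 (100 samples) -- 1.01 seconds range"
LINE_RE = re.compile(
    r"min:\s*(?P<min>[\d.]+),\s*max:\s*(?P<max>[\d.]+),\s*"
    r"avg:\s*(?P<avg>[\d.]+)\s*\((?P<samples>\d+) samples\)"
)


def parse_latency_history(text):
    """Return (min_ms, max_ms, avg_ms, samples) for every matching line."""
    rows = []
    for line in text.splitlines():
        m = LINE_RE.search(line)
        if m:
            rows.append((float(m["min"]), float(m["max"]),
                         float(m["avg"]), int(m["samples"])))
    return rows


def slow_windows(rows, baseline_ms, factor=2.0):
    """Indices of windows whose average latency is at least factor x baseline."""
    return [i for i, (_, _, avg, _) in enumerate(rows)
            if avg >= factor * baseline_ms]
```

For example, with a baseline of 0.12 ms, a window is flagged only when its average reaches 0.24 ms.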

A simple way to check how much the network affects Redis performance is to measure the maximum bandwidth between the client and the server with a tool such as iPerf.

Server

# iperf -s -p 12345 -i 1

------------------------------------------------------------

Server listening on TCP port 12345

TCP window size: 4.00 MByte (default)

------------------------------------------------------------

[ 4] local 172.20.0.113 port 12345 connected with 172.20.0.114 port 56796

[ ID] Interval Transfer Bandwidth

[ 4] 0.0- 1.0 sec 614 MBytes 5.15 Gbits/sec

[ 4] 1.0- 2.0 sec 622 MBytes 5.21 Gbits/sec

[ 4] 2.0- 3.0 sec 647 MBytes 5.42 Gbits/sec

[ 4] 3.0- 4.0 sec 644 MBytes 5.40 Gbits/sec

[ 4] 4.0- 5.0 sec 651 MBytes 5.46 Gbits/sec

[ 4] 5.0- 6.0 sec 652 MBytes 5.47 Gbits/sec

[ 4] 6.0- 7.0 sec 669 MBytes 5.61 Gbits/sec

[ 4] 7.0- 8.0 sec 670 MBytes 5.62 Gbits/sec

[ 4] 8.0- 9.0 sec 667 MBytes 5.59 Gbits/sec

[ 4] 9.0-10.0 sec 667 MBytes 5.60 Gbits/sec

[ 4] 0.0-10.0 sec 6.35 GBytes 5.45 Gbits/sec
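You can cross-check the server's per-second and summary lines with a little arithmetic. The Python sketch below recomputes the Bandwidth column from the Transfer column. It assumes that Transfer uses binary prefixes (1 MByte = 2^20 bytes) and Bandwidth uses decimal prefixes (1 Gbit = 10^9 bits). This convention is inferred from the figures above, not taken from iperf documentation.

```python
# Assumption: Transfer uses binary prefixes, Bandwidth uses decimal prefixes.
BYTES_PER_UNIT = {"KBytes": 2**10, "MBytes": 2**20, "GBytes": 2**30}


def bandwidth_gbits(amount, unit, seconds):
    """Average bandwidth in Gbit/s for `amount` units transferred in `seconds`."""
    bits = amount * BYTES_PER_UNIT[unit] * 8
    return bits / seconds / 10**9


# First interval of the server output: 614 MBytes in 1 second.
print(f"{bandwidth_gbits(614, 'MBytes', 1.0):.2f} Gbits/sec")    # 5.15 Gbits/sec
# Summary line: 6.35 GBytes in 10 seconds.
print(f"{bandwidth_gbits(6.35, 'GBytes', 10.0):.2f} Gbits/sec")  # 5.45 Gbits/sec
```

If the traffic your Redis workload needs approaches this measured limit, the network rather than Redis is the more likely bottleneck.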

Client

# iperf -c 172.20.0.113 -p 12345 -i 1 -t 10 -w 20K

------------------------------------------------------------

Client connecting to 172.20.0.113, TCP port 12345

TCP window size: 40.0 KByte (WARNING: requested 20.0 KByte)

------------------------------------------------------------

[ 3] local 172.20.0.114 port 56796 connected with 172.20.0.113 port 12345

[ ID] Interval Transfer Bandwidth

[ 3] 0.0- 1.0 sec 614 MBytes 5.15 Gbits/sec

[ 3] 1.0- 2.0 sec 622 MBytes 5.21 Gbits/sec

[ 3] 2.0- 3.0 sec 646 MBytes 5.42 Gbits/sec

[ 3] 3.0- 4.0 sec 644 MBytes 5.40 Gbits/sec

[ 3] 4.0- 5.0 sec 651 MBytes 5.46 Gbits/sec

[ 3] 5.0- 6.0 sec 652 MBytes 5.47 Gbits/sec

[ 3] 6.0- 7.0 sec 669 MBytes 5.61 Gbits/sec

[ 3] 7.0- 8.0 sec 670 MBytes 5.62 Gbits/sec

[ 3] 8.0- 9.0 sec 667 MBytes 5.59 Gbits/sec

[ 3] 9.0-10.0 sec 668 MBytes 5.60 Gbits/sec

[ 3] 0.0-10.0 sec 6.35 GBytes 5.45 Gbits/sec

First of all, you need to check the Redis slowlog.

Redis provides a slowlog feature that records commands whose execution time exceeds a threshold.

Before you view the slowlog, set its threshold and length. For example, you can record commands that take longer than 5 milliseconds and keep the latest 500 entries:

# Record commands that take longer than 5 milliseconds (the threshold is in microseconds).

CONFIG SET slowlog-log-slower-than 5000

# Only the last 500 slowlogs are retained.

CONFIG SET slowlog-max-len 500

You can then list the recorded entries with SLOWLOG GET. Slow commands usually fall into one of two cases. In the first case, the command's time complexity is too high, so Redis spends a lot of CPU operating on in-memory data.

In the second case, the command returns too much data to the client at once, so more time goes into assembling the protocol reply and transmitting it over the network.
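After SLOWLOG GET returns entries, grouping them by command makes it easier to tell these cases apart. In the Python sketch below, each entry is represented by its first four fields as SLOWLOG GET reports them: ID, Unix timestamp, execution time in microseconds, and the command with its arguments. The HIGH_COMPLEXITY set is a hypothetical, incomplete example list, not something Redis defines.

```python
from collections import defaultdict

# Hypothetical, incomplete list of commands whose cost grows with the size of
# the key or of the reply; adjust it for your workload.
HIGH_COMPLEXITY = {"KEYS", "SMEMBERS", "HGETALL", "LRANGE", "ZRANGE",
                   "SORT", "SUNION", "ZUNIONSTORE"}


def summarize_slowlog(entries):
    """Group slowlog entries by command.

    entries: iterable of (entry_id, unix_ts, duration_us, args) tuples, i.e. the
    first four fields of each SLOWLOG GET entry.
    Returns (command, count, total_us, is_high_complexity) rows, slowest first.
    """
    totals = defaultdict(lambda: [0, 0])  # command -> [count, total_us]
    for _entry_id, _ts, duration_us, args in entries:
        command = args[0].upper()
        totals[command][0] += 1
        totals[command][1] += duration_us
    rows = [(cmd, count, total_us, cmd in HIGH_COMPLEXITY)
            for cmd, (count, total_us) in totals.items()]
    return sorted(rows, key=lambda row: row[2], reverse=True)
```

If the slowest rows are dominated by simple commands such as SET (flagged False here), command complexity is probably not the cause.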

You can also analyze the problem from the resource-usage side. If your application sends Redis relatively few operations per second (OPS) but the instance's CPU usage is high, complex commands are the likely cause.

If the slowlog shows that the slow entries are not complex commands but simple ones (for example, SET or DEL), check whether your instance stores bigkeys. Allocating memory when writing a very large value, and freeing it when deleting the key, takes much longer than for a small key.
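Conceptually, a bigkey scan walks the keyspace and remembers the largest key of each type. Strings are measured in bytes and containers in number of elements, which matches the summary format shown below. The Python sketch runs that idea over a hypothetical in-memory dict standing in for the keyspace. It is a simplified model, not the actual redis-cli implementation, which iterates over a live instance with SCAN.

```python
def size_of(key_type, value):
    """Strings are measured in bytes, containers in number of elements."""
    return len(value.encode()) if key_type == "string" else len(value)


def biggest_per_type(keyspace):
    """keyspace: dict mapping key -> (type, value), a stand-in for a real instance.
    Returns {type: (key, size)} with the largest key of each type."""
    biggest = {}
    for key, (key_type, value) in keyspace.items():
        size = size_of(key_type, value)
        if key_type not in biggest or size > biggest[key_type][1]:
            biggest[key_type] = (key, size)
    return biggest
```

Because containers are ranked by element count, the key reported as the biggest is not necessarily the one that uses the most memory.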

redis-cli -h 127.0.0.1 -p 6379 --bigkeys -i 1

-------- summary -------

Sampled 829675 keys in the keyspace!

Total key length in bytes is 10059825 (avg len 12.13)

Biggest string found 'key:291880' has 10 bytes

Biggest list found 'mylist:004' has 40 items

Biggest set found 'myset:2386' has 38 members

Biggest hash found 'myhash:3574' has 37 fields

Biggest zset found 'myzset:2704' has 42 members

36313 strings with 363130 bytes (04.38% of keys, avg size 10.00)

787393 lists with 896540 items (94.90% of keys, avg size 1.14)

1994 sets with 40052 members (00.24% of keys, avg size 20.09)

1990 hashs with 39632 fields (00.24% of keys, avg size 19.92)

1985 zsets with 39750 members (00.24% of keys, avg size 20.03)

Let me remind you about something. When executing this command, pay attention to two problems:

Suppose Redis latency is normally low, but a wave of latency suddenly appears at certain moments, and these slow moments follow a regular pattern (for example, at a fixed time or at a fixed interval).

If this is the case, check whether your business code makes a large number of keys expire at the same time.

If many keys expire at a fixed point in time, requests that reach Redis at that moment may see higher latency.
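One way to check for concentrated expiration is to bucket the keys' absolute expiry times by second and look for spikes. The Python sketch below assumes you can obtain each key's expiry timestamp, for example the value your code passes to EXPIREAT. The function name and the threshold are hypothetical and would need tuning for your workload.

```python
from collections import Counter


def expiry_hotspots(expire_at, threshold):
    """expire_at: mapping key -> absolute expiry time (Unix seconds, int or float).
    Returns {second: count} for every second in which more than `threshold`
    keys expire, in chronological order."""
    per_second = Counter(int(ts) for ts in expire_at.values())
    return {sec: n for sec, n in sorted(per_second.items()) if n > threshold}
```

A single second holding thousands of expirations is the pattern the text warns about.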

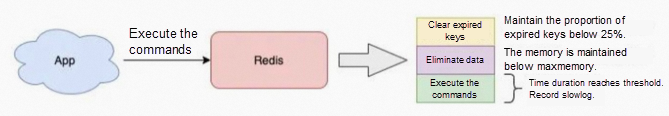

Redis uses two policies to delete expired keys: passive expiration (an expired key is deleted when it is accessed) and active expiration (a scheduled task periodically samples keys and deletes the expired ones).

Note: The scheduled active expiration task runs in the Redis main thread.

In other words, suppose the active expiration task has a large number of expired keys to delete when the application accesses Redis. The request must wait for the task to finish before Redis can serve it.

If a bigkey has to be deleted because it has expired, this takes even longer. Moreover, the delayed commands are not recorded in the slowlog.

This is because the slowlog records only the time a command spends operating on memory data, whereas active expiration runs before the command is executed.
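Active expiration can stall requests because it is a loop running on the main thread. It samples keys that have a TTL, deletes the expired ones, and repeats while a large share of each sample turns out to be expired, until a time budget runs out. The Python sketch below models that loop. The sample size of 20 and the 25% repeat threshold come from older Redis documentation and are assumptions here. Newer versions tune these values (for example, via active-expire-effort in Redis 6.0 and later), so treat this as an illustration of the behavior, not as Redis's code.

```python
import random
import time

SAMPLE_SIZE = 20     # keys sampled per round (assumption, from older Redis docs)
REPEAT_RATIO = 0.25  # repeat while more than 25% of a sample was expired (assumption)


def active_expire_cycle(expires, now, time_budget_s, rng=random):
    """Simplified model of one active-expiration cycle.

    expires: dict mapping key -> absolute expiry time; expired keys are
    deleted from it in place. Returns the number of keys deleted.
    While this loop runs, a real Redis main thread serves no client requests.
    """
    deadline = time.monotonic() + time_budget_s
    deleted = 0
    while expires:
        # Copying the keys each round is O(N); acceptable for a sketch.
        sample = rng.sample(list(expires), min(SAMPLE_SIZE, len(expires)))
        expired = [k for k in sample if expires[k] <= now]
        for k in expired:
            del expires[k]
        deleted += len(expired)
        # Stop when few sampled keys were expired or the time budget is spent.
        if len(expired) <= REPEAT_RATIO * len(sample) or time.monotonic() >= deadline:
            break
    return deleted
```

When thousands of keys share the same expiry time, almost every sample is fully expired, so the loop keeps going and the main thread stays busy.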

When we use Redis as a cache, we usually set maxmemory for the instance and then choose an eviction policy (maxmemory-policy).

When the memory used by a Redis instance reaches maxmemory, Redis must evict some data to bring memory back below maxmemory before new data can be written.

Evicting old data takes time, and how long depends on the eviction policy you configure.

The most commonly used policies are allkeys-lru and volatile-lru. Each time, they randomly sample a batch of keys from the instance (the batch size is set by maxmemory-samples) and evict the key that has gone unaccessed the longest. They keep the remaining candidates in a pool, sample another batch, compare it with the keys in the pool, and again evict the least recently used key. This repeats until the instance's memory drops below maxmemory.

Note that, like the deletion of expired keys, eviction runs in the main thread before the command is executed. It therefore adds latency to Redis operations, and the higher the write OPS, the more obvious the latency becomes.

If your Redis instance also stores bigkeys, evicting one of them to free memory takes a long time.
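The sample-and-pool eviction loop described above can be sketched in Python as follows. The samples parameter plays the role of maxmemory-samples (default 5). The pool size of 16 is an assumption based on the Redis source. The sketch also uses exact access timestamps, whereas Redis keeps only an approximate per-key access clock. It illustrates approximate LRU and is not Redis's implementation.

```python
import random

POOL_SIZE = 16  # candidate pool size (assumption; see the lead-in)


def evict_until_below(keys, used, maxmemory, now, samples=5, rng=random):
    """Approximate-LRU eviction in the spirit of allkeys-lru.

    keys: dict mapping key -> (last_access_time, size_bytes), mutated in place.
    samples plays the role of maxmemory-samples.
    Returns (used_after, evicted_keys_in_order).
    """
    pool = []
    evicted = []
    while used > maxmemory and keys:
        sampled = rng.sample(list(keys), min(samples, len(keys)))
        # Merge fresh samples with candidates still alive in the pool,
        # then keep only the POOL_SIZE keys that have been idle longest.
        candidates = {k for k in pool if k in keys} | set(sampled)
        pool = sorted(candidates, key=lambda k: now - keys[k][0], reverse=True)[:POOL_SIZE]
        victim = pool.pop(0)  # the most idle candidate is evicted
        used -= keys.pop(victim)[1]
        evicted.append(victim)
    return used, evicted
```

Each iteration evicts one key and re-checks memory. Evicting a bigkey frees a lot at once but takes correspondingly longer to release.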

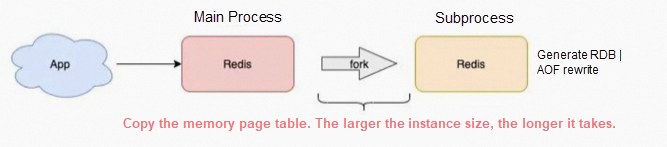

When Redis performs a background RDB save or AOF rewrite, the main process must create a child process to persist the data.

To create the child process, the main process calls the fork function provided by the operating system.

During fork, the main process must copy its memory page table to the child process. If the instance is large, this copy is time-consuming.

Moreover, this fork process consumes a large amount of CPU resources. Before the fork is completed, the entire Redis instance is blocked and cannot process any client requests.

If your CPU resources are tight at this time, the fork will take longer, even seconds, which will affect the performance of Redis.

How do you confirm that the Redis latency is increased due to the time-consuming fork?

You can run the INFO command on Redis and check the latest_fork_usec field, which is in microseconds.

# The time consumed by the last fork. Unit: microseconds.

latest_fork_usec:59477

This is how long the main process spent forking the child process (about 59 ms here). During this time, the entire instance was blocked from processing client requests.

If you find that this takes a long time, you should be alert. It means that during this period, your entire Redis instance is in an unavailable state.
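If you collect INFO output regularly, a small parser can convert latest_fork_usec into milliseconds and compare it with an alert threshold. The Python sketch below works on the raw text returned by INFO (for example, captured with redis-cli INFO). The function names and the threshold are hypothetical choices, not part of Redis.

```python
def parse_info(text):
    """Parse INFO output ("field:value" lines, "# Section" headers) into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        name, _, value = line.partition(":")
        fields[name] = value
    return fields


def fork_blocked_ms(info_text):
    """How long the last fork blocked the main thread, in milliseconds."""
    return int(parse_info(info_text)["latest_fork_usec"]) / 1000


def fork_is_slow(info_text, limit_ms):
    """limit_ms is your own alert threshold; Redis does not define one."""
    return fork_blocked_ms(info_text) > limit_ms
```

Tracking this value alongside the instance's memory size shows whether fork time grows as the dataset grows.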

In addition to data persistence, when the master node establishes data synchronization for the first time, the master node creates a child process to generate an RDB and then sends it to the slave node for full synchronization. Therefore, this process also affects the performance of Redis.

The fork latency of the RDB and AOF rewrite child processes described above is not the only concern. Another factor can also cause performance problems: whether the operating system has enabled huge pages.

When an application requests memory from the operating system, the memory is allocated in units of pages, and a regular page is 4 KB.

Starting from Linux 2.6.38, the kernel supports Transparent Huge Pages, which allow applications to obtain memory from the operating system in 2 MB pages.

Because each request covers a larger unit of memory, each request also takes longer.

When Redis performs a background RDB save, it forks a child process to do the work. After the fork, however, the main process can still receive write requests, and those writes operate on memory in a Copy On Write manner.

In other words, after the fork, the parent and child share the same physical memory pages. When the main process modifies data, the operating system does not let it change the shared page in place. Instead, the page is copied first, and the write goes to the new copy. This is called Copy On Write.

Put simply, whichever process needs to write copies the page first and then modifies the copy.

The advantage is that writes by the parent process do not affect the data the child process is persisting. The child only needs to persist the data as it was at the moment of the fork and does not care about later changes, because all it needs is a memory snapshot to write to disk.

However, when the main process copies memory data, new memory must be allocated. If the operating system has enabled huge pages, then even if the client modifies only 10 bytes of data, a whole 2 MB page is allocated and copied. This makes each allocation slower, increases the latency of every write request, and hurts Redis performance.

Similarly, if the write request targets a bigkey, the main process must allocate more memory and spends longer copying the bigkey's memory. Once again, bigkeys hurt performance.
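A rough model shows why huge pages amplify copy-on-write. The kernel copies whole pages, so even a tiny write costs one full page the first time that page is touched after the fork. The Python sketch below counts the bytes copied for a set of write offsets under 4 KB and 2 MB pages. The offsets are hypothetical, and the model ignores writes that straddle page boundaries.

```python
PAGE_4K = 4 * 1024
PAGE_2M = 2 * 1024 * 1024


def bytes_copied(write_offsets, page_size):
    """Bytes copied by copy-on-write after fork, in a simplified model.

    Each write is assumed to fall inside a single page; every distinct page
    the parent writes to is copied once (later writes to it are free).
    """
    touched_pages = {offset // page_size for offset in write_offsets}
    return len(touched_pages) * page_size


one_small_write = [123_456]  # a single 10-byte write at a hypothetical offset
print(bytes_copied(one_small_write, PAGE_4K))  # 4096
print(bytes_copied(one_small_write, PAGE_2M))  # 2097152, i.e. 512 times more
```

Writes that are spread across memory make the gap smaller per write, since several 4 KB pages may fall inside one 2 MB page. However, each individual copy with huge pages remains 512 times larger.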

Earlier, we analyzed the impact of RDB and AOF rewrite on Redis performance and focused on the fork.

Data persistence involves other factors that affect Redis performance as well. This time, we focus on AOF persistence.

If your AOF configuration is unreasonable, it may still cause performance problems.

After AOF is enabled for Redis, the way it works is listed below:

Redis provides three disk flushing policies (appendfsync always, everysec, and no) that trade performance against the safety of AOF data.

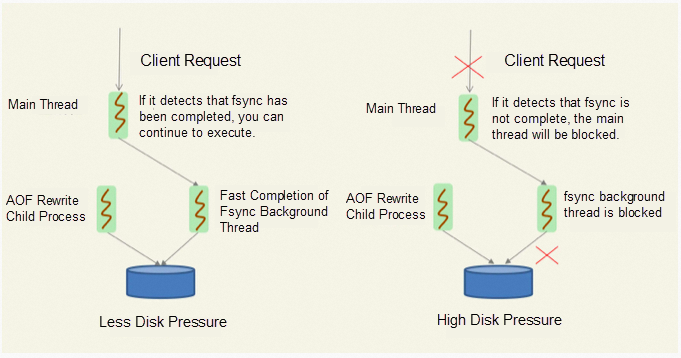

Is it OK to choose a compromise solution, appendfsync everysec?

The advantage of this option is that the Redis main thread returns as soon as it has written the data to memory. The actual disk flush (fsync) is executed by a background thread, which flushes the data from memory to disk once per second.

This solution takes performance into account and ensures data security as much as possible.

Is it perfect?

The answer is no. You should be on guard when using this scheme because this scheme still causes Redis latency to increase and block the entire Redis.

You can imagine a situation when the Redis background thread is executing AOF file flushing. If the I/O load of the disk is high at this time, the background thread will be blocked when executing the flushing operation (fsync system call).

Meanwhile, the main thread keeps receiving write requests and writes their data into the file's memory buffer (the write system call). Each time the main thread hands a new fsync to the background thread, it checks whether the previous fsync has finished. If it has not, the main thread may end up blocking. So if the background fsync blocks frequently (for example, because an AOF rewrite is consuming a large share of disk I/O bandwidth), the main thread blocks too, and Redis slows down.

In this process, the main thread still has the risk of blocking.
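The blocking behavior can be modeled as a small decision rule. The Python sketch below is based on flushAppendOnlyFile in the Redis source (aof.c). With everysec, if the previous background fsync is still running, the main thread postpones writing the AOF buffer, but for no more than about 2 seconds. After that, it writes anyway, and that write may block behind the pending fsync. Each such event increments the aof_delayed_fsync counter reported by INFO. Details vary between versions, so treat this as an illustration, not as Redis's code.

```python
def everysec_write_decision(fsync_in_progress, postponed_since, now,
                            max_postpone_s=2.0):
    """Simplified model of the main thread's choice under appendfsync everysec.

    Returns (action, postponed_since):
      "write"        - no fsync pending, write the AOF buffer normally
      "postpone"     - fsync pending, skip this write and try again later
      "write_anyway" - postponed too long; the write may now block
    """
    if not fsync_in_progress:
        return "write", None
    if postponed_since is None:
        return "postpone", now  # start the postponement clock
    if now - postponed_since < max_postpone_s:
        return "postpone", postponed_since
    return "write_anyway", None
```

A steadily rising aof_delayed_fsync value is a sign that the disk cannot keep up with background fsync.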

Therefore, although your AOF is configured as appendfsync everysec, you should not take it lightly. You should be alert to Redis performance problems caused by excessive disk pressure.

Under what circumstances will the disk I/O load be too large? How can we solve this problem?

I have summarized the following situations for reference:

In case 1, the AOF background thread's fsync collides with the AOF rewrite performed by the child process, which is writing heavily to disk.

When we deploy a service, a common way to improve performance is to bind the process to specific CPUs. This reduces the cost of context switching as the application moves between CPU cores.

As we all know, modern servers generally have multiple CPUs, and each CPU contains multiple physical cores. Each physical core is divided into multiple logical cores, and the logical cores under each physical core share L1/L2 Cache.

In addition to the main thread serving client requests, the Redis server creates child processes and sub-threads.

The child process is used for data persistence, while the child thread is used to perform some time-consuming operations, such as the asynchronous release of fd, asynchronous AOF disk flushing, asynchronous lazy-free, etc.

Suppose the Redis process is bound to a single logical CPU core. When Redis performs data persistence, the forked child process inherits the parent's CPU affinity.

The child process then consumes a lot of CPU for data persistence (it scans all the data in the instance), which causes CPU contention between the child process and the main process. This interferes with the main process's handling of client requests and increases access latency.

This is the performance problem caused by binding Redis to a CPU.
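On Linux, a child created by fork inherits its parent's CPU affinity mask. This is why a Redis main process pinned to one core brings its persistence child onto that same core. The Python sketch below demonstrates the inheritance with os.sched_setaffinity and os.fork. It is Linux-only, and the helper name is hypothetical.

```python
import os


def child_affinity_after_fork(cpus):
    """Pin this process to `cpus`, fork, and return the CPU set the child
    reports. The parent's original affinity is restored afterwards."""
    original = os.sched_getaffinity(0)
    os.sched_setaffinity(0, cpus)
    try:
        read_fd, write_fd = os.pipe()
        pid = os.fork()
        if pid == 0:  # child process
            try:
                os.close(read_fd)
                cpus_seen = sorted(os.sched_getaffinity(0))
                os.write(write_fd, ",".join(map(str, cpus_seen)).encode())
            finally:
                os._exit(0)  # never return into the parent's code path
        os.close(write_fd)
        with os.fdopen(read_fd) as reader:  # reads until the child exits
            data = reader.read()
        os.waitpid(pid, 0)
    finally:
        os.sched_setaffinity(0, original)
    return {int(cpu) for cpu in data.split(",")}
```

Pinning the parent to a single core therefore leaves the child on that same core, which is the contention described above.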

If Redis suddenly becomes slow and each operation takes hundreds of milliseconds or even seconds, check whether Redis is using Swap. Once Redis relies on Swap, it can basically no longer provide high-performance service.

If you know something about operating systems, you know that the operating system can soften the impact of insufficient memory on applications by moving part of the memory data to disk, freeing memory for other uses. The area on disk that holds this swapped-out data is Swap.

The problem is that once data has been swapped out to disk, Redis must read it back from disk the next time it accesses it, and disk access is hundreds of times slower than memory access!

This latency is unacceptable, especially for Redis, which is expected to be fast and is extremely sensitive to latency.

At this point, you need to check the memory usage of the Redis machine to see if Swap is used.

You can check whether the Redis process uses Swap in the following ways:

# Find the Redis process ID first.

$ ps aux | grep redis-server

# View Redis Swap usage (replace $pid with the process ID found above).

$ grep -E '^(Swap|Size):' /proc/$pid/smaps

The following result is returned:

Size: 1256 kB

Swap: 0 kB

Size: 4 kB

Swap: 0 kB

Size: 132 kB

Swap: 0 kB

Size: 63488 kB

Swap: 0 kB

Size: 132 kB

Swap: 0 kB

Size: 65404 kB

Swap: 0 kB

Size: 1921024 kB

Swap: 0 kB

Each Size line shows the size of one memory region used by Redis, and the Swap line below it shows how much of that region has been swapped out to disk. If the two values are equal, the entire region has been swapped out.

If only a small amount of data is swapped out, for example when each Swap value is a small fraction of its Size, the impact is minor. If hundreds of megabytes or even gigabytes of memory have been swapped out to disk, be alert: Redis performance will drop sharply.
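With many memory regions, it is easier to total the Size and Swap fields than to read them one by one. The Python sketch below sums both fields from smaps text. On newer kernels, smaps also contains SwapPss lines, which a pattern without the trailing colon would also match. The sketch therefore matches the exact field names. The function name is hypothetical.

```python
import re

FIELD_RE = re.compile(r"^(Size|Swap):\s+(\d+) kB")


def swap_summary(smaps_text):
    """Total Size and Swap (in kB) over all regions in /proc/<pid>/smaps text."""
    totals = {"Size": 0, "Swap": 0}
    for line in smaps_text.splitlines():
        m = FIELD_RE.match(line)
        if m:  # SwapPss and other fields do not match the exact names
            totals[m.group(1)] += int(m.group(2))
    return totals["Size"], totals["Swap"]


# Usage on Linux, with pid taken from the ps output above:
#   with open(f"/proc/{pid}/smaps") as f:
#       size_kb, swap_kb = swap_summary(f.read())
```

Comparing swap_kb with size_kb gives the overall share of Redis memory that lives on disk.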

Redis data is stored in memory. If your application frequently modifies the data in Redis, Redis may generate memory fragmentation.

Memory fragmentation lowers the memory utilization of a Redis instance. You can run the INFO command to obtain the instance's memory fragmentation ratio:

# Memory

used_memory:5709194824

used_memory_human:5.32G

used_memory_rss:8264855552

used_memory_rss_human:7.70G

...

mem_fragmentation_ratio:1.45

mem_fragmentation_ratio = used_memory_rss / used_memory

used_memory indicates the memory size of Redis data storage, and used_memory_rss indicates the actual memory size allocated by the operating system to the Redis process.

If mem_fragmentation_ratio is greater than 1.5, memory fragmentation exceeds 50%, and we need to take measures to reduce it.
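Applying the formula to the INFO values above takes only a few lines. The Python sketch below computes the ratio and the gap between RSS and used_memory, which is the memory the ratio attributes to fragmentation. The function name is hypothetical, and 1.5 is the rule of thumb from the text, not a Redis setting.

```python
def fragmentation(used_memory, used_memory_rss):
    """Return (mem_fragmentation_ratio, bytes of RSS beyond the data size)."""
    ratio = used_memory_rss / used_memory
    return ratio, used_memory_rss - used_memory


# Values from the INFO output above.
ratio, extra = fragmentation(used_memory=5709194824, used_memory_rss=8264855552)
print(f"ratio={ratio:.2f}, extra={extra / 2**30:.2f} GiB")  # ratio=1.45, extra=2.38 GiB
print("needs attention" if ratio > 1.5 else "below the 1.5 rule of thumb")
```

Here the instance is just under the threshold, even though about 2.38 GiB of its RSS is not holding data.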

The general solution is listed below:

However, if you enable memory defragmentation, the performance of Redis may deteriorate.

The reason is that the defragmentation of Redis is performed in the main thread. When the defragmentation is performed, CPU resources are consumed, which is time-consuming and affects client requests.

Therefore, when you need to enable this feature, it is best to test and evaluate its impact on Redis in advance.

The Redis defragmentation parameters are configured as follows:

# Enable automatic memory defragmentation (master switch).

activedefrag yes

# Do not defragment if the memory usage is less than 100MB.

active-defrag-ignore-bytes 100mb

# Start defragmentation if the memory fragmentation rate exceeds 10%.

active-defrag-threshold-lower 10

# When memory fragmentation rate exceeds 100%, do your best to defragment.

active-defrag-threshold-upper 100

# Minimum percentage of CPU resources occupied by memory defragmentation.

active-defrag-cycle-min 1

# Maximum percentage of CPU resources occupied by memory defragmentation.

active-defrag-cycle-max 25

# The number of elements of the List, Set, Hash, or ZSet type that are to be scanned at a time during defragmentation.

active-defrag-max-scan-fields 1000
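Taken together, these thresholds decide when defragmentation starts and how much CPU it may use. The Python sketch below models that relationship. Defragmentation does not start below the lower threshold or below active-defrag-ignore-bytes. Above them, the CPU effort scales linearly from active-defrag-cycle-min to active-defrag-cycle-max as fragmentation rises from the lower to the upper threshold. The model is based on the Redis source, which measures fragmentation from allocator statistics rather than from mem_fragmentation_ratio itself. Treat it as an approximation, not as the exact implementation.

```python
MB = 2**20


def defrag_cpu_percent(frag_pct, frag_bytes, ignore_bytes=100 * MB,
                       lower=10, upper=100, cycle_min=1, cycle_max=25):
    """CPU percentage active defrag would aim for, in a simplified model.

    frag_pct: fragmentation in percent (e.g. 45 for a ratio of about 1.45).
    frag_bytes: fragmented bytes. Defaults mirror the configuration above.
    """
    if frag_pct < lower or frag_bytes < ignore_bytes:
        return 0  # defragmentation does not start
    # Linear interpolation from (lower, cycle_min) to (upper, cycle_max) ...
    effort = cycle_min + (frag_pct - lower) * (cycle_max - cycle_min) / (upper - lower)
    return max(cycle_min, min(cycle_max, effort))  # ... clamped to the allowed range
```

With these settings, 55% fragmentation on a large instance would use about 13% CPU, and anything at or above 100% would be capped at 25%.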
