By Taosu

A distributed system is a system in which hardware or software components are distributed on different network computers and communicate and coordinate with each other only through message passing.

• Ingress-level load balancing

• Single-application architecture

• Primary/secondary database read/write splitting

• Split context and microservices

• Consistency (2PC, 3PC, Paxos, and Raft)

Strong consistency: database consistency reduces performance

• Availability (multi-level cache, read/write splitting)

BASE (Basically Available): throttling slows down responses, and degradation hurts the user experience

• Partition tolerance (consistent hashing solves the scaling problem)
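To make the scaling point concrete, here is a minimal sketch of a hash ring with virtual nodes. The class name `HashRing`, the `vnodes` parameter, and the choice of MD5 are illustrative assumptions, not something the article prescribes.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Map a string to a point on the ring (MD5 is an arbitrary stable choice)."""
    return int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Hypothetical hash ring: each physical node owns `vnodes` points."""

    def __init__(self, nodes=(), vnodes: int = 100):
        self.vnodes = vnodes
        self._points = []  # sorted hash points on the ring
        self._owner = {}   # hash point -> physical node name
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        for i in range(self.vnodes):
            point = _hash(f"{node}#{i}")
            bisect.insort(self._points, point)
            self._owner[point] = node

    def remove(self, node: str) -> None:
        for i in range(self.vnodes):
            point = _hash(f"{node}#{i}")
            self._points.remove(point)
            del self._owner[point]

    def lookup(self, key: str) -> str:
        """Return the owner of the first point clockwise from the key's hash."""
        idx = bisect.bisect(self._points, _hash(key)) % len(self._points)
        return self._owner[self._points[idx]]
```

When a node is added, only the keys that now fall on the new node's points change owner; all other keys stay where they were, which is why scaling out does not reshuffle the whole key space.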

2PC Protocol: Two-phase Commit Protocol. P refers to the preparation phase, and C refers to the commit phase

• Preparation phase: the coordinator asks every participant whether it can commit; each participant writes its undo and redo logs and replies.

• Commit phase: if every participant agreed, the redo logs are applied to commit; otherwise, the undo logs are applied to roll back.
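The two phases can be sketched in memory as follows. This is a simplified illustration, not a production protocol: the `Participant` class and `two_phase_commit` function are hypothetical names, there is no network, and coordinator failure is not modeled.

```python
class Participant:
    """Hypothetical participant that keeps one value plus undo/redo records."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit  # simulates a Yes or No vote
        self.value = None
        self.state = "init"
        self._undo = None
        self._redo = None

    def prepare(self, new_value) -> bool:
        """Phase 1: record undo and redo information, then vote."""
        if not self.can_commit:
            return False
        self._undo = self.value   # what to restore on rollback
        self._redo = new_value    # what to apply on commit
        self.state = "prepared"
        return True

    def commit(self) -> None:
        """Phase 2 (success path): apply the redo record."""
        self.value = self._redo
        self.state = "committed"

    def rollback(self) -> None:
        """Phase 2 (failure path): restore the undo record if one was written."""
        if self.state == "prepared":
            self.value = self._undo
        self.state = "rolled_back"


def two_phase_commit(participants, new_value) -> bool:
    """Coordinator: commit only if every participant votes Yes."""
    votes = [p.prepare(new_value) for p in participants]
    if all(votes):
        for p in participants:
            p.commit()
        return True
    for p in participants:
        p.rollback()
    return False
```

Note that every participant that voted Yes holds its prepared state until the coordinator decides, which is the resource-locking cost of 2PC.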

3PC Protocol: Three-phase Commit Protocol. It splits the protocol into three phases: CanCommit, PreCommit, and DoCommit

CanCommit: the coordinator sends a canCommit request and waits for the responses.

PreCommit: if every participant answers Yes, the undo and redo logs are written. On a timeout or any No, the transaction is aborted.

DoCommit: execute the redo logs to commit, or the undo logs to roll back.

The difference from 2PC is that, from the second phase on, the participant also has a timeout, so if something fails it can release its resources in time.
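The participant-side timeout can be pictured as a small state machine. The sketch below is an assumption-laden illustration: the class and method names are hypothetical, and the timeout rule follows the common textbook description of 3PC (abort if PreCommit never arrives, commit if DoCommit never arrives after PreCommit), which the article itself does not spell out.

```python
class ThreePhaseParticipant:
    """Hypothetical 3PC participant; states: init, ready, pre_committed,
    committed, aborted."""

    def __init__(self):
        self.state = "init"

    def on_can_commit(self, ok: bool = True) -> bool:
        """CanCommit: answer Yes or No without locking resources yet."""
        self.state = "ready" if ok else "aborted"
        return ok

    def on_pre_commit(self) -> None:
        """PreCommit: write undo/redo logs (omitted here) and acknowledge."""
        if self.state == "ready":
            self.state = "pre_committed"

    def on_do_commit(self) -> None:
        """DoCommit: apply the redo log."""
        if self.state == "pre_committed":
            self.state = "committed"

    def on_abort(self) -> None:
        """Coordinator asked to abort: apply the undo log."""
        if self.state != "committed":
            self.state = "aborted"

    def on_timeout(self) -> None:
        """No message from the coordinator within the timeout."""
        if self.state == "ready":
            # Nothing was pre-committed, so it is safe to give up.
            self.state = "aborted"
        elif self.state == "pre_committed":
            # Everyone voted Yes earlier, so the participant commits by default.
            self.state = "committed"
```

Either way, the participant stops waiting and releases its resources instead of blocking indefinitely on a silent coordinator.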

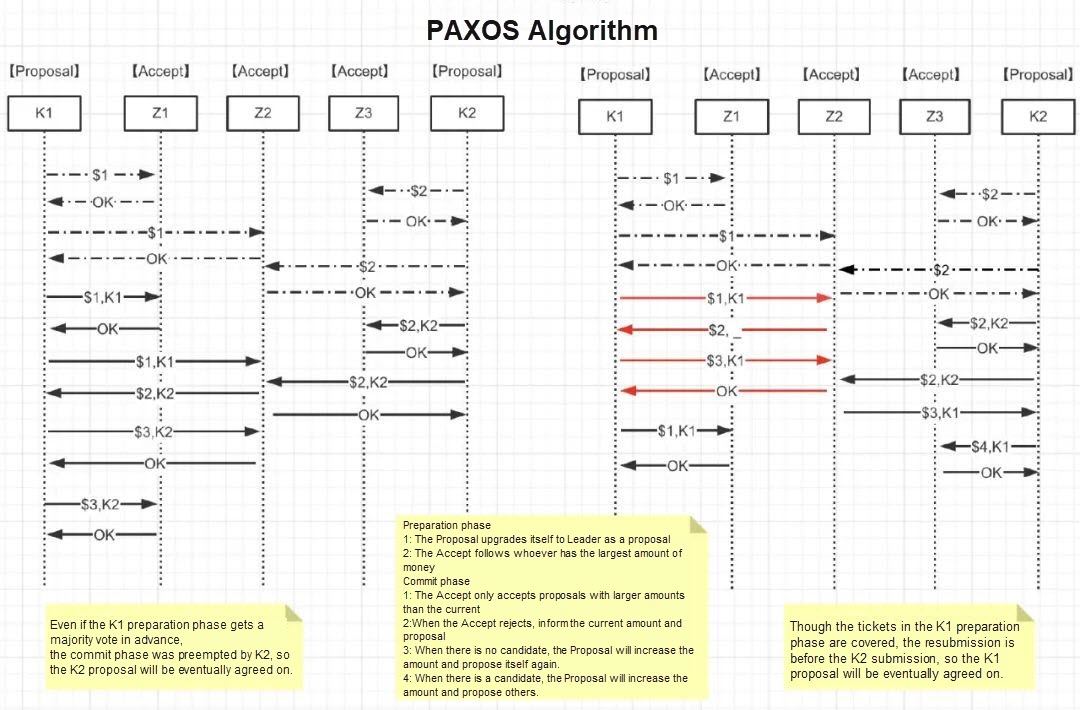

How can the nodes of a cluster quickly and correctly agree on the value of a piece of data in a distributed system where failures occur?

The consistency of participants (such as Kafka) can be guaranteed by a coordinator (such as ZooKeeper), while the consistency of the coordinator itself has to be guaranteed by a consensus algorithm such as Paxos.

Roles in the Paxos algorithm:

• Client: for example, write requests to files in a distributed file server.

• Proposer: the proposal initiator. From the Acceptors' responses, it selects the value V attached to the highest proposal number N and sends [N+1, V].

• Acceptor: the decision maker. Once it has accepted a proposal numbered N, it rejects proposals numbered below N and returns its own [N, V] to the Proposer.

• Learner: learns the final decision. Learners act as replicas for the protocol.

Algorithm constraints:

P1: An Acceptor must accept the first proposal it receives.

Given the majority rule, an Acceptor has to be able to accept multiple proposals, and they must all end up with the same V:

P2a: If a proposal with value V is chosen, then every higher-numbered proposal accepted by any Acceptor must also have value V.

P2b: If a proposal with value V is chosen, then every higher-numbered proposal issued by any Proposer must also have value V.

How can a Proposer still issue higher-numbered proposals after a proposal with value V has been chosen? For any proposal [Mid, Vid], there must be a set S containing more than half of the Acceptors that meets one of the following two conditions: no Acceptor in S has accepted a proposal numbered below Mid; or Vid is the value of the highest-numbered proposal below Mid that the Acceptors in S have accepted.
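The roles and constraints above can be sketched as a single-value Paxos round. This is a minimal in-memory illustration under stated assumptions: the names `Acceptor` and `propose` are hypothetical, calls are synchronous, and message loss, Learners, and multiple concurrent Proposers are not modeled.

```python
class Acceptor:
    """Hypothetical acceptor for one Paxos instance."""

    def __init__(self):
        self.promised_n = 0     # highest proposal number promised so far
        self.accepted_n = 0     # number of the accepted proposal (0 = none)
        self.accepted_v = None  # value of the accepted proposal

    def prepare(self, n):
        """Phase 1: promise to reject proposals numbered below n."""
        if n > self.promised_n:
            self.promised_n = n
            return True, self.accepted_n, self.accepted_v
        return False, self.accepted_n, self.accepted_v

    def accept(self, n, v):
        """Phase 2: accept unless a higher number has been promised."""
        if n >= self.promised_n:
            self.promised_n = n
            self.accepted_n = n
            self.accepted_v = v
            return True
        return False


def propose(acceptors, n, value):
    """Run one round; return the chosen value, or None if the round fails."""
    majority = len(acceptors) // 2 + 1
    replies = [a.prepare(n) for a in acceptors]
    promises = [(an, av) for ok, an, av in replies if ok]
    if len(promises) < majority:
        return None
    # If some acceptor already accepted a value, the proposer must adopt
    # the value of the highest-numbered accepted proposal.
    prev_n, prev_v = max(promises, key=lambda p: p[0])
    if prev_n > 0:
        value = prev_v
    acks = sum(a.accept(n, value) for a in acceptors)
    return value if acks >= majority else None
```

For example, once `propose(acceptors, 1, "A")` succeeds, a later `propose(acceptors, 2, "B")` returns "A": the second Proposer learns the already accepted value in phase 1 and is forced to re-propose it.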

Interview Question: How to Ensure the Liveness of the Paxos Algorithm

Consider an extreme case in which two Proposers take turns issuing proposals with ever-increasing numbers. Each one keeps preempting the other, so the algorithm loops endlessly and no value is ever chosen. There are two ways to avoid this:

• Elect a primary Proposer and allow only the primary Proposer to submit proposals. As long as the primary Proposer can communicate normally with more than half of the Acceptors, any proposal it issues with a higher number will eventually be approved.

• Randomize, within a time window, the moment at which each Proposer submits its proposals. This keeps the proposals from staying stuck in an endless loop.

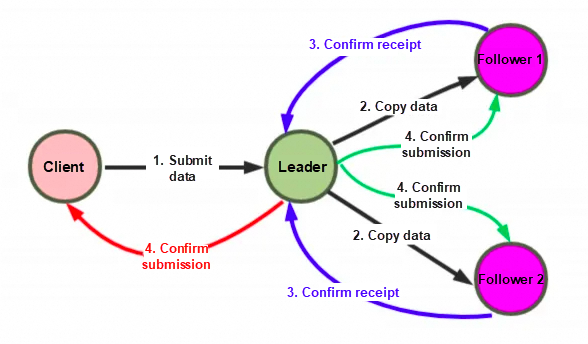

Raft is a consensus algorithm for managing a replicated log.

Raft uses a heartbeat mechanism to trigger elections. When a server starts, its initial state is follower. Each server has a timer with an election timeout (usually 150-300 ms). If the server receives a message from a leader or candidate before the timer expires, the timer restarts. If the timer expires, the server starts an election.

Leader failure: if the leader fails, the followers time out and elect a new leader. After the election completes, the new leader compares its log progress with that of each follower and brings them back in sync.

Follower exception: the system synchronizes data to the current status of the leader after recovery.

Multiple candidates: the election fails, and the system times out and continues the election.
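The timeout-driven election can be sketched as below. This is a deliberately reduced model, and the names `Node` and `run_election` are hypothetical: the node whose randomized timeout is shortest becomes the candidate, and peers grant at most one vote per term. Real Raft also checks that the candidate's log is at least as up to date as the voter's, which is omitted here.

```python
import random


class Node:
    """Hypothetical Raft node reduced to the fields needed for voting."""

    def __init__(self, node_id, rng):
        self.id = node_id
        self.term = 0
        self.voted_for = None
        self.state = "follower"
        # Randomized election timeout in milliseconds (150-300 ms).
        self.timeout_ms = rng.uniform(150, 300)

    def request_vote(self, candidate_id, term) -> bool:
        """Grant at most one vote per term."""
        if term > self.term:
            self.term = term
            self.voted_for = None
            self.state = "follower"
        if term == self.term and self.voted_for in (None, candidate_id):
            self.voted_for = candidate_id
            return True
        return False


def run_election(nodes):
    """The node with the shortest timeout times out first and campaigns."""
    candidate = min(nodes, key=lambda n: n.timeout_ms)
    candidate.state = "candidate"
    candidate.term += 1
    candidate.voted_for = candidate.id
    votes = 1 + sum(
        peer.request_vote(candidate.id, candidate.term)
        for peer in nodes
        if peer is not candidate
    )
    if votes > len(nodes) // 2:
        candidate.state = "leader"
    return candidate
```

Randomizing the timeout makes it unlikely that several followers become candidates at the same instant, which is what keeps split votes rare.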

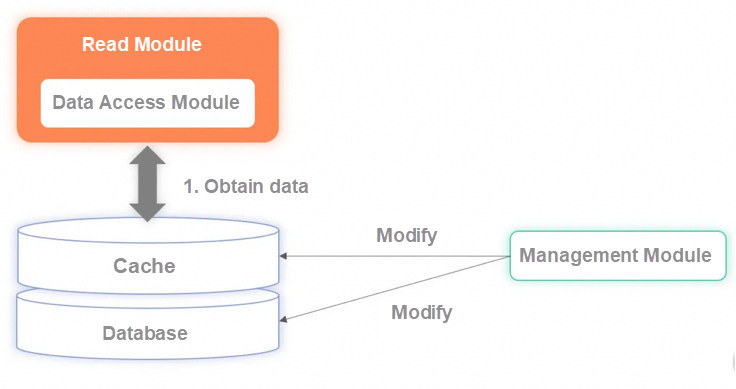

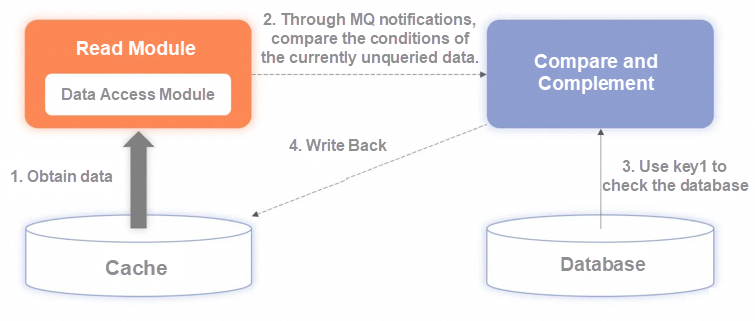

Full cache guarantees efficient reads

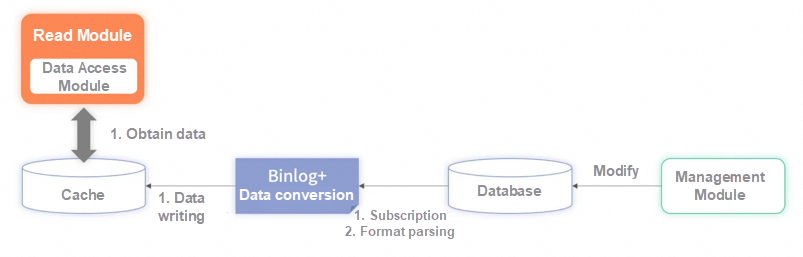

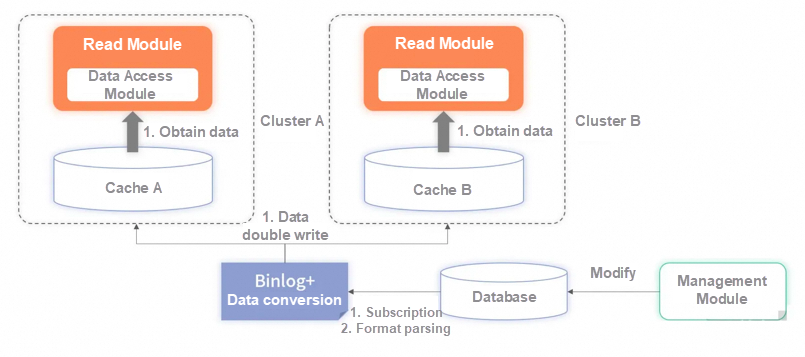

With a full cache, all data is stored in the cache, the read service never falls back to the database during queries, and every request depends entirely on the cache. This removes the latency spikes caused by falling back to the database. However, full caching does not solve the distributed transaction problem during updates; it amplifies it. A full cache places stricter requirements on data updates: both the existing data and every real-time update in all databases must be fully synchronized to the cache. An effective solution is to subscribe to the database binlog and use it to synchronize data.

Many open-source tools (such as Alibaba's Canal) can now emulate the master-slave replication protocol. By emulating the protocol, they read the binlog of the master database and capture every change made to it. They then expose interfaces through which business services can obtain these changes.

After you attach the binlog middleware to the target database, you can obtain all of the database's change data in real time. Once the changed data is parsed, it can be written directly to the cache. There are also other advantages:

• Greatly improve the speed of reading and reduce the delay.

• Binlog master-slave replication is an ACK-based mechanism that solves distributed transaction problems.

If writing to the cache fails, the consumed binlog event is not acknowledged. It is consumed again the next time, and the data is then written to the cache.
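The acknowledge-on-success behavior can be illustrated with an in-memory stand-in. The `BinlogConsumer` class below is hypothetical and does not use Canal's actual client API; it only shows the idea that an event is confirmed after the cache write succeeds, so a failed write is retried on the next poll.

```python
class BinlogConsumer:
    """Hypothetical consumer: `events` stands in for parsed binlog changes,
    `cache_write` for the function that writes one change to the cache."""

    def __init__(self, events, cache_write):
        self.events = events            # list of (key, value) changes
        self.cache_write = cache_write
        self.acked = 0                  # offset of the next unconfirmed event

    def poll_once(self) -> bool:
        """Apply events in order; stop at the first failure without acking it."""
        while self.acked < len(self.events):
            key, value = self.events[self.acked]
            try:
                self.cache_write(key, value)
            except Exception:
                # Not acknowledged: the same event is consumed again next time.
                return False
            self.acked += 1             # confirm only after a successful write
        return True
```

Because an event may be applied more than once after a failure, the cache write should be idempotent, for example a plain overwrite of the key.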

Disadvantages:

This can be complemented by an asynchronous calibration scheme but at the expense of database performance. However, this solution will hide the details of middleware usage errors. In the early stage of the online environment, it is more important to record logs and troubleshoot them before subsequent optimization, instead of putting the cart before the horse.

A heartbeat is a way for a node to report its current status to other nodes at a fixed frequency. If the heartbeat is received, the network and the node are considered healthy. A heartbeat usually also carries some additional status and metadata to make management easier.

Periodic heartbeat detection mechanism: no response is returned after timeout

Cumulative failure detection mechanism: retries exceed the maximum number
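Both detection mechanisms can be combined in one small monitor. The sketch below is an illustration with hypothetical names (`HeartbeatMonitor`, `timeout_s`, `max_misses`); the caller passes the current time explicitly so the logic stays deterministic. With `max_misses=1` it behaves like plain periodic detection.

```python
class HeartbeatMonitor:
    """Hypothetical failure detector driven by heartbeats."""

    def __init__(self, timeout_s: float, max_misses: int):
        self.timeout_s = timeout_s
        self.max_misses = max_misses
        self.last_seen = {}   # node -> time of last heartbeat
        self.misses = {}      # node -> consecutive missed checks
        self.metadata = {}    # node -> extra status carried by the heartbeat

    def heartbeat(self, node, now, metadata=None) -> None:
        """Record a heartbeat and reset the node's miss counter."""
        self.last_seen[node] = now
        self.misses[node] = 0
        self.metadata[node] = metadata or {}

    def check(self, now):
        """Call once per detection period; return the nodes considered failed."""
        failed = []
        for node, seen in self.last_seen.items():
            if now - seen > self.timeout_s:
                self.misses[node] += 1      # timed out in this period
            if self.misses[node] >= self.max_misses:
                failed.append(node)         # too many consecutive misses
        return failed
```

Requiring several consecutive misses trades slower detection for fewer false alarms caused by a single delayed heartbeat.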

Real-time hot standby in multiple data centers

The two sets of cache clusters can be deployed to data centers in different cities. The read service is also deployed to different cities or different partitions accordingly. When receiving requests, read services in different data centers or partitions depend only on cache clusters with the same attributes. This scheme has two benefits.

Although this solution brings improvements in performance and availability, the resource cost is increased.

The ability of distributed systems to tolerate errors.

Enhance system robustness through throttling, degradation, failover policy, retry, and load balancing

When the primary goes down, the standby takes over all of its work. After the primary returns to normal, service is switched back to it automatically (hot standby) or manually (cold standby). This mode is commonly used with MySQL and Redis.

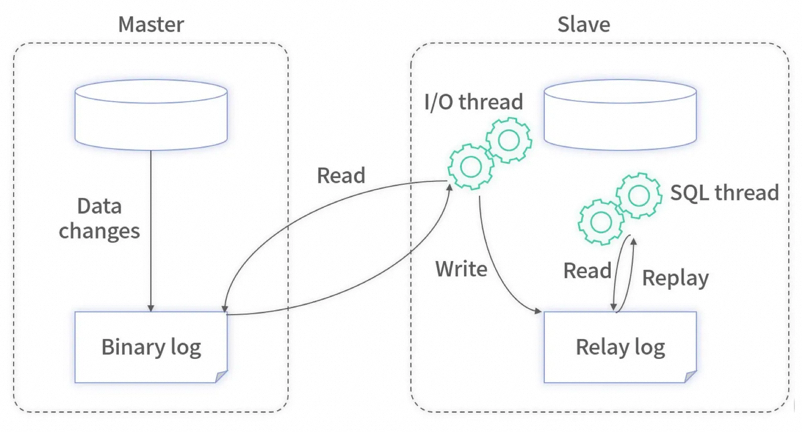

Binary log files are the basis of data replication between MySQL servers. Every operation on the master database is recorded in the binary log as an "event". Each slave keeps an I/O thread connected to the master and watches the master's binary log for changes. When changes appear, the I/O thread copies them into the slave's own relay log. A SQL thread on the slave then replays those events against its own database, which keeps the slave consistent with the master and implements master-slave replication.

Two hosts run their own services at the same time and monitor each other. For database high availability, the common mutual-backup setup is the MM (Multi-Master) mode: the system has multiple masters, each master can both read and write, and versions are merged based on timestamps or business logic.

Multiple nodes run at the same time and share service requests through the master node. An example is ZooKeeper. The cluster mode needs to solve the high availability problem of the master node itself. Generally, the active/standby mode is used.
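Timestamp-based merging is often implemented as "last write wins". The function below is a minimal sketch of that idea, assuming each master keeps a map from key to a `(timestamp, value)` pair; the function name and data layout are illustrative, and real systems must also deal with clock skew and with ties.

```python
def merge_last_write_wins(local: dict, remote: dict) -> dict:
    """Merge two replicas; for each key the entry with the newer timestamp wins.

    Both arguments map key -> (timestamp, value). On equal timestamps the
    local entry is kept, which is an arbitrary tie-breaking choice.
    """
    merged = dict(local)
    for key, (ts, value) in remote.items():
        if key not in merged or ts > merged[key][0]:
            merged[key] = (ts, value)
    return merged
```

When the newest write is not necessarily the correct one (for example, account balances), the merge has to be driven by business logic instead of timestamps.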

• Resources are locked during the preparation phase, which causes performance problems and, in severe cases, deadlocks.

• If a network failure occurs after the commit request is sent, only some participants receive and execute it, which causes consistency problems.

• Try phase: each service checks its resources and locks or reserves them.

• Confirm phase: each service executes the actual operation.

• Cancel phase: if the business method of any service fails, the operations that have already been executed are compensated and rolled back.
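The three TCC phases can be sketched with an account that freezes funds in Try. Everything here is a hypothetical illustration (`AccountService`, `run_tcc`, the transaction id `tx_id`); a real implementation would persist the frozen amounts and retry Confirm and Cancel until they succeed.

```python
class AccountService:
    """Hypothetical service exposing Try / Confirm / Cancel for a debit."""

    def __init__(self, balance: int):
        self.balance = balance
        self.frozen = {}  # tx_id -> amount reserved by Try

    def try_reserve(self, tx_id: str, amount: int) -> bool:
        """Try: check the resource and reserve it."""
        if self.balance < amount:
            return False
        self.balance -= amount
        self.frozen[tx_id] = amount
        return True

    def confirm(self, tx_id: str) -> None:
        """Confirm: make the reservation final (idempotent)."""
        self.frozen.pop(tx_id, None)

    def cancel(self, tx_id: str) -> None:
        """Cancel: release the reservation and restore the balance (idempotent)."""
        self.balance += self.frozen.pop(tx_id, 0)


def run_tcc(tx_id: str, steps) -> bool:
    """steps is a list of (service, amount); cancel everything if any Try fails."""
    tried = []
    for service, amount in steps:
        if service.try_reserve(tx_id, amount):
            tried.append(service)
        else:
            for s in tried:
                s.cancel(tx_id)
            return False
    for s in tried:
        s.confirm(tx_id)
    return True
```

Confirm and Cancel are written to be idempotent because a coordinator that is unsure whether a call succeeded will simply call it again.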

Transactional Compensation / Long Transactions

• Long process, many processes, and calling third-party business.

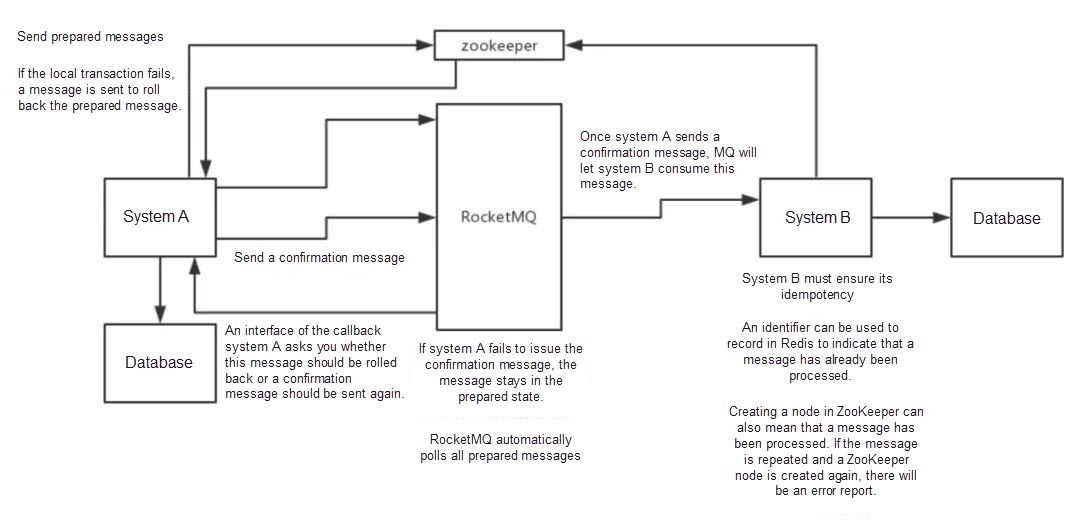

For example, ApsaraMQ for RocketMQ supports transactional messages (core ideas: acknowledgment on both the producer and the consumer side, idempotent retries, and so on).

If your scenario has strict requirements on the accuracy of funds, you can choose the TCC scheme.
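Retry idempotence on the consumer side usually comes down to deduplicating by message ID. The sketch below shows only that idea with a hypothetical `IdempotentConsumer` class; it does not use the RocketMQ client API, and a real consumer would store the processed IDs durably and update them in the same local transaction as the business data.

```python
class IdempotentConsumer:
    """Hypothetical consumer that applies each message at most once."""

    def __init__(self):
        self.processed = set()  # IDs of messages already applied
        self.points = 0         # example business state (e.g. credit points)

    def handle(self, message_id: str, delta: int) -> bool:
        """Apply the message once; return False for a redelivered duplicate."""
        if message_id in self.processed:
            return False        # acknowledged again, but not applied twice
        self.points += delta
        self.processed.add(message_id)
        return True
```

With this in place, the broker can safely redeliver a message whose acknowledgment was lost without the business effect being applied twice.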

For general distributed transaction scenarios, such as credit data, you can use the reliable message-based eventual consistency scheme.

If inconsistency is allowed in the distributed scenarios, you can use the best effort notification scheme.

• JWT-based Token obtains its data from the cache or database

• Tomcat-based Redis configures the conf file

• Spring-based Redis supports SpringCloud and Springboot.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
