By Taosu

A thread is the smallest unit of execution scheduled by the CPU. Each thread has its own program counter, virtual machine stack, and native method stack.

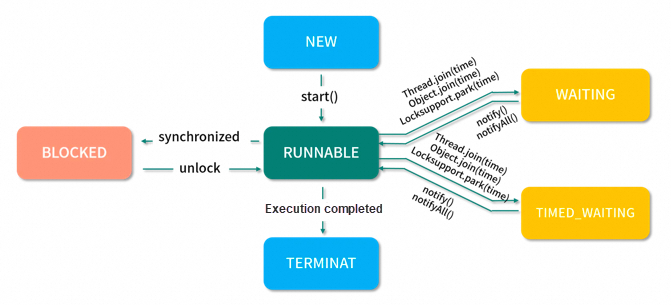

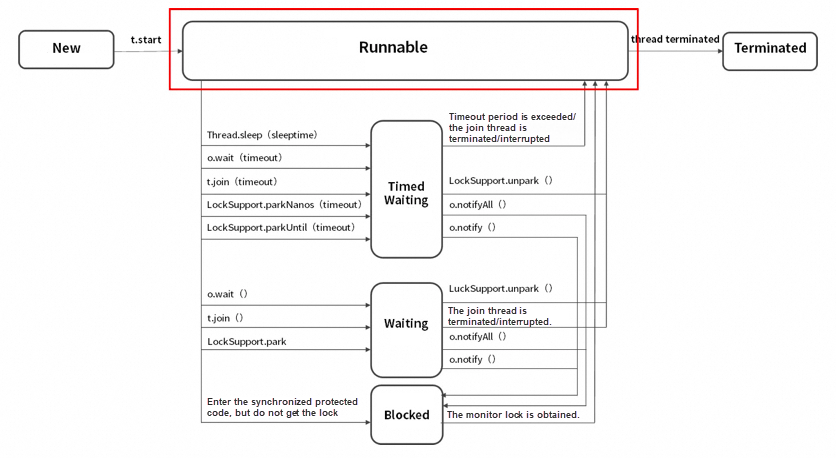

Thread states: new (created), ready, running, blocked, and dead (terminated)

| Method | Description | Notes |
|---|---|---|
| start | Starts the thread; the virtual machine schedules it and calls its run() method | The thread enters the ready state |
| run | Contains the thread's logic; executed when the JVM schedules the thread | The thread is in the running state |
| sleep | Pauses the currently executing thread for the specified time | Does not release the lock |
| wait | Makes the current thread wait | Releases the synchronization lock |
| notify | Wakes up a single thread waiting on this object's monitor | Wakes up one thread |
| notifyAll | Wakes up all threads waiting on this object's monitor | Wakes up all waiting threads |
| yield | Hints to the scheduler that the current thread is willing to give up the CPU so that other threads of the same priority can run | Static method, called on the Thread class |
| join | Makes the current thread wait until the thread whose join() was called terminates | Called on a Thread object |
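The following is a minimal sketch of start(), run(), sleep(), and join() that uses only the JDK. Thread.getState() reports the JVM's own Thread.State values (NEW, RUNNABLE, BLOCKED, WAITING, TIMED_WAITING, TERMINATED), not the five textbook states listed above. The class LifecycleDemo and its method names are hypothetical.

```java
import java.util.concurrent.atomic.AtomicReference;

class LifecycleDemo {
    // Records the worker's state before start() and after join().
    static String startAndJoin() {
        Thread worker = new Thread(() -> {
            try {
                Thread.sleep(20); // pause the worker; it holds no monitor here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        String before = worker.getState().toString(); // NEW: created, not started
        worker.start();                                 // the JVM schedules run() on a new thread
        try {
            worker.join();                              // caller waits until the worker terminates
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return before + " " + worker.getState();        // TERMINATED after join()
    }

    // Calling run() directly is an ordinary method call: no new thread is created.
    static boolean runExecutesOnCaller() {
        AtomicReference<Thread> ranOn = new AtomicReference<>();
        Thread t = new Thread(() -> ranOn.set(Thread.currentThread()));
        t.run();
        return ranOn.get() == Thread.currentThread();
    }

    public static void main(String[] args) {
        System.out.println(startAndJoin());        // NEW TERMINATED
        System.out.println(runExecutesOnCaller()); // true
    }
}
```

The second method shows why start(), not run(), is used to launch a thread. run() only executes the body on whichever thread calls it.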

Calling any of the three wait() overloads (wait(), wait(long timeout), and wait(long timeout, int nanos)) blocks the current thread. The thread places itself in the object's wait set and relinquishes all of its synchronization claims on the object. It is then disabled for thread scheduling until one of the following occurs:

• Another thread calls notify() on the object, and this thread is the one chosen to be woken up.

• Another thread calls notifyAll() on the object.

• Another thread interrupts this thread.

• The specified timeout, if any, elapses.

The thread is then removed from the wait set and becomes schedulable again. It competes with other threads for the object's lock in the usual way. Once it regains the lock, all of its synchronization claims on the object are restored to what they were when wait() was called, and the thread continues by returning from wait().

Why must wait() be called inside a synchronized block?

wait() is an instance method of Object, and calling it forcibly releases the lock on that object. The calling thread must therefore already hold the object's monitor; otherwise, wait() throws IllegalMonitorStateException. That is why wait() is called inside a synchronized method or code block.

Differences between wait() and sleep():

• wait() must be called in code protected by synchronized; sleep() has no such requirement.

• wait() automatically releases the monitor lock, whereas calling sleep() inside synchronized code does not release it.

• wait() without a timeout waits indefinitely and resumes only when the thread is woken up (notified) or interrupted; it never resumes on its own. sleep() takes a duration, and the thread resumes automatically once it elapses.

• wait() and notify() are methods of the Object class, while sleep() is a static method of the Thread class.
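To make these differences concrete, here is a small sketch of the classic guarded wait/notify pattern. The Mailbox class is hypothetical. The consumer waits inside synchronized methods while the producer sleeps without holding any monitor. A second method shows that wait() fails outside a synchronized block.

```java
import java.util.ArrayDeque;
import java.util.Queue;

class Mailbox {
    private final Queue<String> items = new ArrayDeque<>();

    // Consumer: wait() is only legal while holding this object's monitor.
    synchronized String take() throws InterruptedException {
        while (items.isEmpty()) { // re-check the condition after every wake-up
            wait();               // releases the monitor while waiting
        }
        return items.poll();
    }

    // Producer: notifyAll() also requires holding the monitor.
    synchronized void put(String item) {
        items.add(item);
        notifyAll();
    }

    // A producer thread sleeps briefly, then hands one message to the waiting caller.
    static String handOff() {
        Mailbox box = new Mailbox();
        Thread producer = new Thread(() -> {
            try {
                Thread.sleep(50); // sleep() needs no monitor
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            box.put("hello");
        });
        producer.start();
        try {
            String received = box.take(); // blocks until put() is called
            producer.join();
            return received;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    // Calling wait() without holding the monitor fails immediately.
    static boolean waitWithoutMonitorFails() {
        Object lock = new Object();
        try {
            lock.wait();
            return false;
        } catch (IllegalMonitorStateException e) {
            return true;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

The while loop around wait() matters because a thread can wake up without the condition being true, for example when notifyAll() wakes several consumers for a single item.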

Implement the Runnable interface (preferred)

```java
public class RunnableThread implements Runnable {
    @Override
    public void run() {
        System.out.println("Implement the thread with the implementation of Runnable");
    }
}
```

Implement the Callable interface (it can return a value and throw a checked exception)

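```java
import java.util.Random;
import java.util.concurrent.Callable;

class CallableTask implements Callable<Integer> {
    @Override
    public Integer call() throws Exception {
        return new Random().nextInt();
    }
}
```

Inherit from the Thread class (less flexible, because Java does not support multiple inheritance of classes)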

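```java
public class ExtendsThread extends Thread {
    @Override
    public void run() {
        System.out.println("Implement the thread with the Thread class");
    }
}
```

Use a thread pool (under the hood, the pool still creates its threads through a ThreadFactory; the JDK's default factory from java.util.concurrent.Executors is shown below)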

```java
// Nested class from java.util.concurrent.Executors (JDK 8)
static class DefaultThreadFactory implements ThreadFactory {
    private static final AtomicInteger poolNumber = new AtomicInteger(1);
    private final ThreadGroup group;
    private final AtomicInteger threadNumber = new AtomicInteger(1);
    private final String namePrefix;

    DefaultThreadFactory() {
        SecurityManager s = System.getSecurityManager();
        group = (s != null) ? s.getThreadGroup() : Thread.currentThread().getThreadGroup();
        namePrefix = "pool-" + poolNumber.getAndIncrement() + "-thread-";
    }

    public Thread newThread(Runnable r) {
        Thread t = new Thread(group, r, namePrefix + threadNumber.getAndIncrement(), 0);
        if (t.isDaemon())                            // pool threads are never daemon threads
            t.setDaemon(false);
        if (t.getPriority() != Thread.NORM_PRIORITY) // reset to normal thread priority
            t.setPriority(Thread.NORM_PRIORITY);
        return t;
    }
}
```

Benefits: reusing threads that have already been created reduces resource consumption, idle threads can pick up queued tasks immediately (faster response), and threads can be monitored and managed centrally.
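The sketch below runs one task with each of the four approaches and counts how many ran. The class and method names are hypothetical. For the Callable case, it assumes the task is wrapped in a FutureTask, which is one common way to hand a Callable to a plain Thread.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.FutureTask;
import java.util.concurrent.atomic.AtomicInteger;

class ThreadCreationDemo {
    // Runs one task with each approach and returns how many tasks ran.
    static int runAllFour() {
        AtomicInteger ran = new AtomicInteger();
        try {
            // 1. A Runnable handed to a Thread
            Thread viaRunnable = new Thread(() -> ran.incrementAndGet());
            // 2. A Thread subclass that overrides run()
            Thread viaSubclass = new Thread() {
                @Override
                public void run() {
                    ran.incrementAndGet();
                }
            };
            viaRunnable.start();
            viaSubclass.start();
            viaRunnable.join();
            viaSubclass.join();

            // 3. A Callable wrapped in FutureTask, which is itself a Runnable
            FutureTask<Integer> task = new FutureTask<>(() -> ran.incrementAndGet());
            new Thread(task).start();
            task.get(); // blocks until call() returns its value

            // 4. A thread pool; its ThreadFactory creates the worker threads
            ExecutorService pool = Executors.newFixedThreadPool(2);
            try {
                Future<?> result = pool.submit(() -> ran.incrementAndGet());
                result.get();
            } finally {
                pool.shutdown();
            }
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
        return ran.get();
    }
}
```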

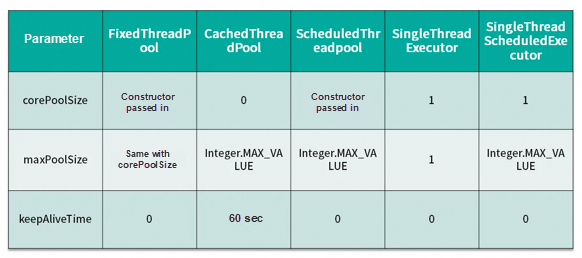

```java
/** The 7 parameters of the ThreadPoolExecutor constructor */
public ThreadPoolExecutor(int corePoolSize,
                          int maximumPoolSize,
                          long keepAliveTime,
                          TimeUnit unit,
                          BlockingQueue<Runnable> workQueue,
                          ThreadFactory threadFactory,
                          RejectedExecutionHandler handler) { /* ... */ }
```

| Parameter | Description |
|---|---|
| corePoolSize | Number of core threads kept in the pool |
| maximumPoolSize | Maximum number of threads allowed in the pool |
| keepAliveTime | Maximum time that idle threads beyond corePoolSize are kept alive |
| unit | Time unit of keepAliveTime |
| workQueue | Blocking queue that holds tasks waiting to be executed |
| threadFactory | Factory used to create new threads |
| handler | The rejection policy (a RejectedExecutionHandler). When all maximumPoolSize threads are busy and workQueue is full, further submitted tasks are passed to this handler. |
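As an illustration, the following sketch passes all seven parameters explicitly. The sizes (2 core, 4 maximum, a queue of 100, a 30-second keep-alive) and the "demo-worker-" naming scheme are arbitrary assumptions chosen for the example, not recommended values.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class ManualPool {
    static ThreadPoolExecutor create() {
        AtomicInteger seq = new AtomicInteger(1);
        // Hypothetical naming scheme; named threads make thread dumps easier to read.
        ThreadFactory namedFactory = r -> new Thread(r, "demo-worker-" + seq.getAndIncrement());
        return new ThreadPoolExecutor(
                2,                                   // corePoolSize
                4,                                   // maximumPoolSize
                30L, TimeUnit.SECONDS,               // keepAliveTime and unit for threads above the core size
                new ArrayBlockingQueue<>(100),       // workQueue: bounded, so a backlog cannot exhaust memory
                namedFactory,                        // threadFactory
                new ThreadPoolExecutor.CallerRunsPolicy()); // handler: overflow runs on the submitting thread
    }

    // Submits n trivial tasks and returns how many of them ran.
    static int runTasks(int n) {
        ThreadPoolExecutor pool = create();
        AtomicInteger done = new AtomicInteger();
        try {
            for (int i = 0; i < n; i++) {
                pool.execute(done::incrementAndGet);
            }
        } finally {
            pool.shutdown();
        }
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return done.get();
    }
}
```

CallerRunsPolicy is used here so that no task is lost when the bounded queue fills up. Tasks that overflow simply run on the submitting thread.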

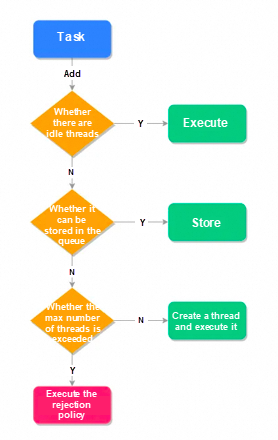

When all of the pool's threads are busy and the work queue is full, the pool cannot accept any new task. A rejection policy is then needed to handle it.

The built-in rejection policies of JDK are as follows:

AbortPolicy: throws a RejectedExecutionException directly, so the submission fails. This is the default policy. The caller can then retry or abandon the submission according to its business logic.

CallerRunsPolicy: as long as the thread pool has not been shut down, runs the rejected task directly in the caller's thread. No task is lost. Because the caller is busy running the task, submissions slow down, which gives the pool time to work through its backlog.

DiscardOldestPolicy: discards the oldest task in the queue (the one that would be executed next), then tries to submit the current task again.

DiscardPolicy: silently discards the task that cannot be handled, without any further processing. This is the best choice if losing tasks is acceptable.
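To compare the four built-in policies, this sketch drives them through the same overflow. It assumes a deliberately tiny pool: one thread and a queue of capacity 1, with the worker held busy by a latch. The first two tasks occupy the thread and the queue slot, so the third goes to the rejection policy. RejectionDemo and thirdTaskFate are hypothetical names.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

class RejectionDemo {
    // Returns what happened to the third task under the given policy.
    static String thirdTaskFate(RejectedExecutionHandler handler) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS, new ArrayBlockingQueue<>(1), handler);
        Runnable blocker = () -> {
            try {
                release.await(); // keep the worker busy until submission is finished
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        Thread caller = Thread.currentThread();
        AtomicReference<String> fate = new AtomicReference<>("discarded");
        try {
            pool.execute(blocker); // occupies the only worker thread
            pool.execute(blocker); // fills the only queue slot
            pool.execute(() -> fate.set(Thread.currentThread() == caller ? "ran on caller" : "ran in pool"));
        } catch (RejectedExecutionException e) {
            fate.set("exception");
        } finally {
            release.countDown();
            pool.shutdown();
        }
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return fate.get();
    }
}
```

Expected outcomes: AbortPolicy gives "exception", CallerRunsPolicy gives "ran on caller", DiscardPolicy gives "discarded". DiscardOldestPolicy gives "ran in pool", because it drops the queued second task to make room for the third.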

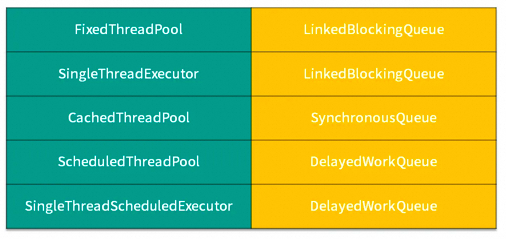

• newSingleThreadExecutor(): a pool with only one thread, so tasks are executed one at a time in submission order. Suitable when tasks must run sequentially.

• newCachedThreadPool(): creates new threads as needed and reuses idle ones; threads that stay idle for 60 seconds are terminated. Suitable for many short-lived asynchronous tasks or lightly loaded services.

• newFixedThreadPool(): a pool with a fixed number of threads. When there are no tasks, the threads stay alive and wait. Suitable for long-running tasks.

• newScheduledThreadPool(): a pool that runs tasks after a delay or periodically.

• newWorkStealingPool(): built on ForkJoinPool. Each worker has its own deque (double-ended queue) of tasks, and idle workers steal tasks from busy ones, which reduces contention and speeds up processing.

These factory methods have drawbacks:

FixedThreadPool and SingleThreadExecutor: the request queue (a LinkedBlockingQueue) can grow to Integer.MAX_VALUE tasks, which can lead to OOM.

CachedThreadPool and ScheduledThreadPool: up to Integer.MAX_VALUE threads can be created, which can lead to OOM.

Therefore, create thread pools manually with ThreadPoolExecutor and a bounded queue such as ArrayBlockingQueue to prevent OOM.

• CPU-intensive tasks (N + 1, where N is the number of CPU cores)

CPU-intensive means the task performs a lot of computation without blocking, so the CPU runs at full speed.

Use as few threads as possible for CPU-intensive tasks. A pool size of the number of CPU cores + 1 is typical.

• I/O-intensive tasks (2N)

Threads running I/O-intensive tasks spend much of their time waiting rather than executing, so you can allocate more threads, for example twice the number of CPU cores.

You can also use the formula: Number of threads = Number of CPU cores × (1 + Average waiting time / Average computing time).
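The three sizing rules translate directly into arithmetic. In this sketch the PoolSizing class is hypothetical, and the 90 ms wait / 10 ms compute profile in main() is an assumed workload used only to show the formula. With 8 cores, that profile gives 8 × (1 + 90/10) = 80 threads.

```java
class PoolSizing {
    // CPU-intensive: N + 1, where N is the number of cores.
    static int cpuBound(int cores) {
        return cores + 1;
    }

    // I/O-intensive rule of thumb: 2N.
    static int ioBound(int cores) {
        return cores * 2;
    }

    // N * (1 + average wait time / average compute time), rounded to the nearest integer.
    static int fromWaitRatio(int cores, double avgWaitMs, double avgComputeMs) {
        return (int) Math.round(cores * (1 + avgWaitMs / avgComputeMs));
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("CPU-bound pool size: " + cpuBound(cores));
        System.out.println("I/O-bound pool size: " + ioBound(cores));
        // Assumed workload: 90 ms waiting on I/O for every 10 ms of computation.
        System.out.println("Formula pool size: " + fromWaitRatio(cores, 90, 10));
    }
}
```

These numbers are starting points. Real pool sizes are normally tuned by measuring throughput and latency under load.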

The optimistic lock is the opposite of the pessimistic lock. It takes an optimistic view: it assumes the data will not be modified by others, so it does not lock the data. Instead, when updating, it checks whether the data has been changed in the meantime. Optimistic locks are generally implemented in one of two ways: a version number mechanism or CAS. They suit scenarios with many reads and few writes, where they improve concurrency. The atomic variable classes in Java's java.util.concurrent.atomic package are implemented with CAS, one implementation of optimistic locking.

Optimistic locks are mostly implemented with a data version mechanism, in which a version identifier is added to the data. In a database, this is usually done by adding a "version" column to the table. When the data is read, its version number is read along with it, and when the data is updated, the version number is incremented by 1. The version of the submitted data is then compared with the current version of the corresponding record in the table. If the submitted version is greater than the current version, the update is applied; otherwise, the submitted data is considered stale.
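Here is a small in-memory sketch of the version check. It assumes a hypothetical VersionedAccount "row" standing in for a database record. The synchronized keyword only models the database executing the single UPDATE statement atomically. Checking that the stored version still equals the version that was read is equivalent to the "submitted version is greater" test above, because the submitted version is always the read version plus one.

```java
class VersionedAccount {
    // Immutable snapshot of a row: the data plus its version number.
    static final class Row {
        final long balance;
        final int version;

        Row(long balance, int version) {
            this.balance = balance;
            this.version = version;
        }
    }

    private Row current = new Row(100, 1);

    synchronized Row read() {
        return current;
    }

    // Stands in for: UPDATE account SET balance = ?, version = version + 1
    //                WHERE id = ? AND version = ?
    synchronized boolean update(Row snapshot, long newBalance) {
        if (current.version != snapshot.version) {
            return false; // another writer got there first: the snapshot is stale
        }
        current = new Row(newBalance, snapshot.version + 1);
        return true;
    }

    // Two "transactions" read the same version; only the first write succeeds.
    static String demo() {
        VersionedAccount account = new VersionedAccount();
        Row a = account.read();
        Row b = account.read();
        boolean aCommitted = account.update(a, a.balance - 30);
        boolean bCommitted = account.update(b, b.balance + 50);
        Row now = account.read();
        return aCommitted + " " + bCommitted + " " + now.balance + " v" + now.version;
    }
}
```

demo() returns "true false 70 v2". Transaction B's update is rejected, and B would normally re-read the row and retry.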

CAS stands for compare-and-swap. It is a well-known lock-free algorithm that synchronizes threads without using locks. Threads that use CAS are never blocked, so it is also called non-blocking synchronization. The CAS algorithm involves three operands:

The memory value V to be read and written; the expected value A to compare against; the new value B to be written

V is updated to B if and only if V equals A; otherwise, nothing happens. The comparison and the swap together form a single atomic operation. CAS is usually used in a spin loop: the operation is retried until it succeeds.
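The spin described above can be written directly with AtomicInteger.compareAndSet. This sketch uses a hypothetical CasCounter class. AtomicInteger.incrementAndGet() already does the equivalent internally, so the explicit loop is shown only to make V, A, and B visible.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasCounter {
    private final AtomicInteger value = new AtomicInteger();

    // Spin: read V, compute B, and write B only if V still equals the value we read (A).
    int increment() {
        while (true) {
            int expected = value.get();   // A
            int next = expected + 1;      // B
            if (value.compareAndSet(expected, next)) {
                return next;              // swap succeeded
            }
            // V changed between the read and the CAS; loop and retry
        }
    }

    int get() {
        return value.get();
    }

    // Four threads increment concurrently without any lock.
    static int demo() {
        CasCounter counter = new CasCounter();
        Thread[] threads = new Thread[4];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> {
                for (int j = 0; j < 10_000; j++) {
                    counter.increment();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter.get(); // 40000: no increment is lost
    }
}
```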

Under high concurrency, conflicts occur frequently. If CAS keeps failing, the thread keeps retrying, which wastes CPU resources.

CAS makes operations on a single variable atomic; in Java it is combined with volatile (the atomic classes keep their value in a volatile field) to ensure visibility. CAS cannot make an operation that spans multiple variables atomic. In addition, CAS relies on hardware support and is not exposed as an ordinary language feature. Application code typically uses it through the classes in the java.util.concurrent.atomic package (or, since Java 9, through VarHandle), which limits its flexibility.

Usage: synchronized is mainly used in three ways:

Modifying an instance method: locks the current object instance. A thread must acquire the lock of the current instance before entering the synchronized code.

Modifying a static method: locks the current class. The lock applies to all instances of the class, because static members belong to the class rather than to any particular instance.

Modifying a code block: locks the specified object. A thread must acquire the lock of that object before entering the synchronized block.

Summary: There are only two types of resources that are locked in synchronized mode: objects and classes.
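All three forms can appear side by side in one class. The SyncForms class and its counters are hypothetical. The comments name the object each form locks: this, the Class object, or an explicit lock object.

```java
class SyncForms {
    private static int staticCount;
    private int instanceCount;
    private int blockCount;
    private final Object lock = new Object();

    // 1. Instance method: the lock is `this`.
    synchronized void incrementInstance() {
        instanceCount++;
    }

    // 2. Static method: the lock is SyncForms.class.
    static synchronized void incrementStatic() {
        staticCount++;
    }

    // 3. Code block: the lock is the object named in parentheses.
    void incrementBlock() {
        synchronized (lock) {
            blockCount++;
        }
    }

    // Four threads each increment all three counters 1,000 times.
    static int[] demo() {
        synchronized (SyncForms.class) {
            staticCount = 0; // reset shared class state under the class lock
        }
        SyncForms s = new SyncForms();
        Thread[] threads = new Thread[4];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> {
                for (int j = 0; j < 1_000; j++) {
                    s.incrementInstance();
                    incrementStatic();
                    s.incrementBlock();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join(); // join() also makes the threads' writes visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return new int[] {s.instanceCount, staticCount, s.blockCount};
    }
}
```

Each counter ends at 4,000. Because the three forms use three different locks, a thread holding one of them does not block threads that need the others.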

The object header is a key point to focus on. It is the basis on which synchronized implements locks, because requesting, acquiring, and releasing a synchronized lock all involve the object header. The main part of the object header is the Mark Word, which stores the object's hashCode, lock information, generational age, GC flags, and other information.

Locks also have different states. Before JDK 6, there were only two: unlocked and locked (a heavyweight lock). JDK 6 optimized synchronized by adding two more states, for four in total: no lock, biased lock, lightweight lock, and heavyweight lock. The type and state of a lock are recorded in the Mark Word of the object header, and the JVM reads the object's Mark Word when acquiring and upgrading the lock. (Biased locking was later disabled by default and deprecated in JDK 15.)

Synchronized code blocks are implemented with the monitorenter and monitorexit bytecode instructions, while synchronized methods are marked with the ACC_SYNCHRONIZED access flag.

ReentrantLock is a mutual exclusion lock provided by the java.util.concurrent.locks package. Compared with synchronized, ReentrantLock offers some advanced features.

As an API-level mutex, it requires explicit calls to lock() and unlock(), with unlock() placed in a finally block so that the lock is always released.

ReentrantLock is built on AQS: a thread tries to acquire the lock with a CAS on a state variable and is parked (blocked) only if that attempt fails. Much of its performance comes from avoiding kernel-mode blocking of threads wherever possible. Keeping threads out of kernel-mode blocking is the key to analyzing and understanding lock design.
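The following sketch shows the lock()/try/finally/unlock() idiom, a timed tryLock, and reentrancy. LockedCounter is a hypothetical class. A fair lock would be created with new ReentrantLock(true); the default constructor used here gives a non-fair lock.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

class LockedCounter {
    private final ReentrantLock lock = new ReentrantLock(); // non-fair by default
    private int count;

    void increment() {
        lock.lock();
        try {
            count++;
        } finally {
            lock.unlock(); // always released, even if the body throws
        }
    }

    // Timed acquisition: give up instead of waiting forever.
    boolean tryIncrement(long timeoutMs) throws InterruptedException {
        if (!lock.tryLock(timeoutMs, TimeUnit.MILLISECONDS)) {
            return false;
        }
        try {
            count++;
            return true;
        } finally {
            lock.unlock();
        }
    }

    // Reentrancy: the owning thread may acquire the lock again; each lock() needs an unlock().
    int nestedHoldCount() {
        lock.lock();
        try {
            lock.lock();
            try {
                return lock.getHoldCount(); // 2
            } finally {
                lock.unlock();
            }
        } finally {
            lock.unlock();
        }
    }

    // While this thread holds the lock, another thread's tryIncrement(50) times out.
    static boolean otherThreadTimesOut() {
        LockedCounter c = new LockedCounter();
        AtomicBoolean acquired = new AtomicBoolean(true);
        c.lock.lock();
        try {
            Thread other = new Thread(() -> {
                try {
                    acquired.set(c.tryIncrement(50));
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            other.start();
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            c.lock.unlock();
        }
        return !acquired.get();
    }
}
```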

A fair lock follows the FIFO (first-in, first-out) principle: threads that arrive first obtain the lock first, and threads that arrive later wait in line.

Advantage: every thread eventually obtains the resource, and no thread starves in the queue. Suitable for large tasks.

Disadvantage: throughput decreases. Every thread except the first in the queue is blocked, and waking blocked threads is expensive for the CPU.

A non-fair lock lets a thread try to acquire the lock directly when it needs it. If the attempt succeeds, the thread holds the lock immediately; if it fails, the thread joins the waiting queue.

Advantage: it reduces the overhead of waking threads and gives higher overall throughput, because the CPU does not have to wake every thread, which reduces the number of wake-ups.

Disadvantage: a thread in the middle of the queue may fail to acquire the lock for a long time, or even never.

Why fair locks are less efficient:

A fair lock maintains a queue. Even when the lock is free, a newly arriving thread must first check whether other threads are waiting. If there are, it is suspended and appended to the tail of the queue, and the thread at the head of the queue is woken up instead. Compared with a non-fair lock, this adds one extra suspend and wake-up.

Thread-switching overhead is the real reason a non-fair lock is more efficient than a fair lock. Because a non-fair lock lowers the probability that a thread is suspended, a newly arriving thread has a chance to avoid the suspension overhead altogether.

Reduce lock holding time: keep code that does not need to be synchronized outside the synchronized block so that the lock is released as soon as possible.

Reduce lock granularity (lock splitting): split one physical lock into multiple logical locks to increase parallelism and reduce lock contention. The idea is to trade space for time. Many Java data structures use this approach to make concurrent operations more efficient:

Before JDK 1.8, ConcurrentHashMap used an array of segments: Segment<K,V>[] segments

Segment extends ReentrantLock, so each Segment is a reentrant lock. Each Segment holds a HashEntry<K,V> array that stores the data. A put operation locks only the Segment that the key maps to, leaving the other Segments unlocked. Therefore, the number of Segments is the number of threads that can write data at the same time, which boosts concurrency.
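A minimal lock-striping sketch in the same spirit follows. It is a hypothetical StripedMap, not the real ConcurrentHashMap code. Each stripe is a plain HashMap guarded by its own ReentrantLock, and a key's hash selects the stripe, so threads writing to different stripes do not contend.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantLock;

class StripedMap<K, V> {
    private final ReentrantLock[] locks;
    private final Map<K, V>[] segments;

    @SuppressWarnings("unchecked")
    StripedMap(int stripes) {
        locks = new ReentrantLock[stripes];
        segments = (Map<K, V>[]) new Map[stripes];
        for (int i = 0; i < stripes; i++) {
            locks[i] = new ReentrantLock();
            segments[i] = new HashMap<>();
        }
    }

    // The key's hash picks the stripe; only that stripe is locked.
    private int stripeFor(Object key) {
        return Math.floorMod(key.hashCode(), locks.length);
    }

    V put(K key, V value) {
        int i = stripeFor(key);
        locks[i].lock();
        try {
            return segments[i].put(key, value);
        } finally {
            locks[i].unlock();
        }
    }

    V get(Object key) {
        int i = stripeFor(key);
        locks[i].lock();
        try {
            return segments[i].get(key);
        } finally {
            locks[i].unlock();
        }
    }

    // A consistent total needs every stripe, so all locks are taken in a fixed order.
    int size() {
        for (ReentrantLock l : locks) l.lock();
        try {
            int total = 0;
            for (Map<K, V> s : segments) total += s.size();
            return total;
        } finally {
            for (ReentrantLock l : locks) l.unlock();
        }
    }

    // Four threads insert disjoint key ranges concurrently.
    static int demo() {
        StripedMap<Integer, Integer> map = new StripedMap<>(16);
        Thread[] threads = new Thread[4];
        for (int t = 0; t < threads.length; t++) {
            final int base = t * 1_000;
            threads[t] = new Thread(() -> {
                for (int k = base; k < base + 1_000; k++) map.put(k, k * 2);
            });
            threads[t].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return map.size();
    }
}
```

The trade-off shows up in size(). Operations that need the whole map must take every lock, which is the price of cheaper single-key operations.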

Lock coarsening: in most cases we want to minimize lock granularity, whereas lock coarsening deliberately increases it.

If every iteration of a loop needs the lock, acquire the lock once outside the loop. Otherwise, every iteration enters and exits the critical section, which is very inefficient.
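The two loop shapes look like this in a hypothetical CoarseningDemo class. The first acquires and releases the monitor on every iteration; the coarsened version acquires it once for the whole loop. HotSpot's JIT compiler can sometimes coarsen adjacent lock regions on its own, but writing the coarse form explicitly does not depend on that.

```java
import java.util.ArrayList;
import java.util.List;

class CoarseningDemo {
    private final List<Integer> shared = new ArrayList<>();

    // Fine-grained: enters and exits the critical section on every iteration.
    void addEachLocked(int n) {
        for (int i = 0; i < n; i++) {
            synchronized (this) {
                shared.add(i * i);
            }
        }
    }

    // Coarsened: one lock acquisition covers the whole loop.
    void addAllLocked(int n) {
        synchronized (this) {
            for (int i = 0; i < n; i++) {
                shared.add(i * i);
            }
        }
    }

    synchronized int size() {
        return shared.size();
    }

    static int demo() {
        CoarseningDemo d = new CoarseningDemo();
        d.addEachLocked(100);
        d.addAllLocked(100);
        return d.size(); // 200
    }
}
```

Coarsening trades a longer hold time for fewer acquisitions. It pays off when the loop body is short, and it should not pull slow, unrelated work into the critical section.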

Read-write lock separation: ReentrantReadWriteLock is a read-write lock. Read operations take the read lock and can run concurrently. Write operations take the write lock, which only one thread can hold at a time.
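A read-mostly cache is the typical use. In this sketch, ReadMostlyCache is hypothetical. The probe method checks the two rules directly: while one thread holds the read lock, another thread can also take the read lock but cannot take the write lock.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class ReadMostlyCache {
    private final Map<String, String> map = new HashMap<>();
    private final ReentrantReadWriteLock rw = new ReentrantReadWriteLock();

    // Many readers may hold the read lock at the same time.
    String get(String key) {
        rw.readLock().lock();
        try {
            return map.get(key);
        } finally {
            rw.readLock().unlock();
        }
    }

    // The write lock is exclusive: no readers or other writers while it is held.
    void put(String key, String value) {
        rw.writeLock().lock();
        try {
            map.put(key, value);
        } finally {
            rw.writeLock().unlock();
        }
    }

    // While this thread holds a read lock, probe what another thread can acquire.
    static String probeWhileReadLocked() {
        ReadMostlyCache c = new ReadMostlyCache();
        AtomicBoolean readOk = new AtomicBoolean();
        AtomicBoolean writeOk = new AtomicBoolean();
        c.rw.readLock().lock();
        try {
            Thread probe = new Thread(() -> {
                if (c.rw.readLock().tryLock()) {  // shared: succeeds
                    readOk.set(true);
                    c.rw.readLock().unlock();
                }
                if (c.rw.writeLock().tryLock()) { // exclusive: blocked by our reader
                    writeOk.set(true);
                    c.rw.writeLock().unlock();
                }
            });
            probe.start();
            probe.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            c.rw.readLock().unlock();
        }
        return "read:" + readOk.get() + " write:" + writeOk.get();
    }
}
```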

Use CAS: if the operation that needs synchronization runs very quickly and thread contention is low, CAS is more efficient, because locking causes thread context switches. When a context switch costs more than the synchronized operation itself and contention for the resource is low, volatile + CAS is a very efficient choice.

Spin lock: spinning avoids the cost of switching between kernel mode and user mode that occurs when a thread waiting for a contended lock is blocked, suspended, and later woken up; the thread simply waits (spins). However, if another thread holds the lock for a long time, the spinning thread keeps consuming CPU, and waiting becomes more expensive than blocking. The default number of spins is 10.

Adaptive spinning: a further optimization of spin locks. The number of spins is no longer fixed. It is determined by the previous spin time on the same lock and the state of the lock owner, which addresses the shortcomings of fixed spinning.

Lock elimination: at runtime, the virtual machine's just-in-time (JIT) compiler removes locks from code that requires synchronization but is found to have no possible contention on shared data. Netty's lock-free pipeline design, in which the ChannelHandlers of a channel run serially on a single thread, applies a similar idea.

Biased lock: if a thread already owns the lock, it can reacquire it by the fastest path, without performing monitor operations. Because most locks are never contended, biased locking can improve performance.

Lightweight lock: under low contention, acquiring the lock with CAS avoids thread context switches and can significantly improve performance.

Heavyweight lock: the cost of locking and unlocking a heavyweight lock is fixed. Heavyweight locks suit scenarios with fierce contention, high concurrency, and long-running synchronized blocks.

Usually, the variables we create can be accessed and modified by any thread. What if each thread needs its own copy of a variable?

How can each thread get its own dedicated local variable? The ThreadLocal class provided in the JDK is designed to solve this problem. It is similar in spirit to the JVM's TLAB (Thread-Local Allocation Buffer).

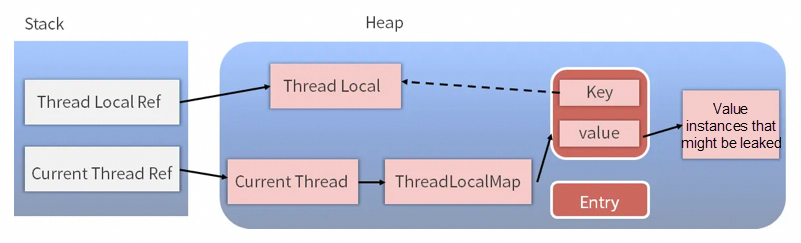

First, ThreadLocal is a generic class, so it can hold objects of any type. Because a thread can use multiple ThreadLocal objects, the values are kept in a map: ThreadLocalMap, a static nested class of ThreadLocal.

The value is ultimately stored in the current thread's ThreadLocalMap, not in the ThreadLocal itself. ThreadLocal can be understood as a wrapper around ThreadLocalMap that passes values in and out.

The get() and set() methods we call actually delegate to the corresponding methods of ThreadLocalMap. Typical use cases include:

1) Store user sessions

```java
// The session type is application-specific; Object stands in for it here.
private static final ThreadLocal<Object> threadSession = new ThreadLocal<>();
```

2) Solve thread-safety problems

```java
// SimpleDateFormat is not thread-safe, so each thread keeps its own instance.
private static final ThreadLocal<SimpleDateFormat> format1 = new ThreadLocal<>();
```

In fact, the key in ThreadLocalMap is a weak reference to the ThreadLocal, while the value is a strong reference. An object that is reachable only through weak references is reclaimed at the next garbage collection.

Therefore, if the ThreadLocal is no longer strongly referenced from outside, it is reclaimed during garbage collection, and the key of its entry in ThreadLocalMap becomes null. The value, however, is strongly referenced and is not reclaimed, so an entry with a null key remains. If nothing is done, that value can never be reclaimed by the GC, and if the thread lives for a long time, the result is a memory leak.

The ThreadLocalMap implementation takes this into account: calls to set(), get(), and remove() clean up entries with null keys. A leak therefore occurs only if, after a null-key entry appears, remove() is not called manually and get(), set(), and remove() are never called again. For this reason, it is best to call remove() manually once you are done with a ThreadLocal.
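The sketch below combines the two use cases with the cleanup advice. It uses ThreadLocal.withInitial to give each thread its own SimpleDateFormat and clears the entry in a finally block, as a task running on a pooled thread should. The ThreadLocalDemo class, the "yyyy-MM-dd" pattern, and the UTC time zone are arbitrary choices for the example.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;
import java.util.concurrent.atomic.AtomicReference;

class ThreadLocalDemo {
    // Each thread lazily gets its own SimpleDateFormat, which is unsafe to share.
    private static final ThreadLocal<SimpleDateFormat> FORMAT = ThreadLocal.withInitial(() -> {
        SimpleDateFormat f = new SimpleDateFormat("yyyy-MM-dd");
        f.setTimeZone(TimeZone.getTimeZone("UTC"));
        return f;
    });

    // Formats a timestamp, then clears this thread's entry so a reused thread leaks nothing.
    static String formatOnce(long epochMillis) {
        try {
            return FORMAT.get().format(new Date(epochMillis));
        } finally {
            FORMAT.remove();
        }
    }

    // A value set in one thread is invisible to another thread.
    static boolean valuesArePerThread() {
        ThreadLocal<String> session = new ThreadLocal<>();
        session.set("main-session");
        AtomicReference<String> seenByOther = new AtomicReference<>("unset");
        Thread other = new Thread(() -> seenByOther.set(session.get())); // its own empty slot: null
        other.start();
        try {
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        boolean isolated = seenByOther.get() == null && "main-session".equals(session.get());
        session.remove();
        return isolated;
    }
}
```

Removing the entry after every call gives up the per-thread caching. In practice, remove() is usually called at the end of a unit of work, such as a request, rather than after each use.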

In JDK 1.7 and earlier, HashMap may enter an infinite loop and drive the CPU to 100%. The main cause is resizing. While entries are transferred into the new, larger table, the transfer logic reverses the order of the nodes in each hash bucket. Because HashMap is not thread-safe, two threads that resize at the same time can interleave these reversals and form a cycle in the linked list, for example node A pointing to node B and node B pointing back to node A. A later lookup for a key in that bucket then traverses the list forever, and the CPU reaches 100%. (JDK 1.8 preserves node order during resizing, which avoids this particular loop, but HashMap is still not thread-safe.)

Therefore, do not share a HashMap between threads. In multithreaded scenarios, use ConcurrentHashMap, which is thread-safe and performs well.
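A common concurrent use is counting. In this sketch (ConcurrentCount is hypothetical), several threads update shared counts through ConcurrentHashMap.merge, which performs the read-modify-write for a key atomically.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class ConcurrentCount {
    // Four threads count the same words; merge() updates each key atomically.
    static Map<String, Integer> countWords(String[] words) {
        Map<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] threads = new Thread[4];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> {
                for (String w : words) {
                    counts.merge(w, 1, Integer::sum);
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counts;
    }
}
```

For the input {"a", "b", "a"}, the result is a=8 and b=4. A separate get() followed by put() would not be atomic, even on a ConcurrentHashMap, and could lose updates.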

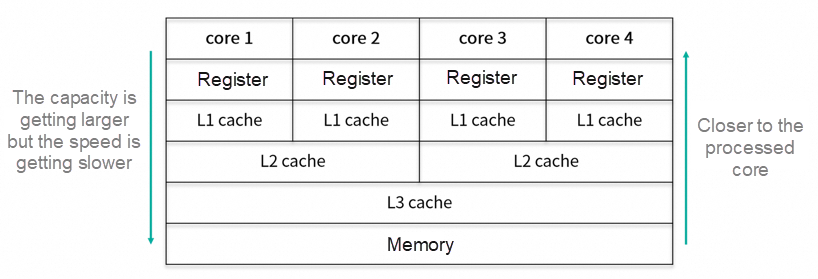

The Java Memory Model (JMM) is a specification that shields the differences in memory access among hardware platforms and operating systems, so that Java programs access memory consistently and produce consistent results on every platform.

The JMM addresses the problems that arise when multiple threads communicate through shared memory: inconsistent data in each thread's local memory, instruction reordering by compilers, and out-of-order execution by processors. Its goal is to guarantee atomicity, visibility, and ordering in concurrent programs.

To guarantee atomicity, Java provides two higher-level bytecode instructions, monitorenter and monitorexit, which correspond to the synchronized keyword. Therefore, synchronized guarantees that the operations inside synchronized methods and code blocks are atomic.

When a volatile variable is modified, the new value is immediately flushed to main memory, and the variable is re-read from main memory before each use. Therefore, volatile guarantees the visibility of variables across threads. Besides volatile, the synchronized and final keywords also provide visibility, although through different mechanisms.

In Java, both synchronized and volatile can guarantee ordering between threads. The difference is that volatile prohibits instruction reordering, whereas synchronized allows only one thread at a time into the protected code.

Ensure data visibility: a volatile variable guarantees that every thread reads its latest value, which avoids reading stale (dirty) data.

Prohibit instruction reordering: in multithreaded code, instruction reordering can lead to inconsistent results.

Comparing the assembly code generated with and without the volatile keyword shows that volatile adds an extra lock-prefixed instruction.

This lock-prefixed instruction acts as a memory barrier (also called a memory fence) with three key properties:

1) It prevents reordering across the barrier: instructions after it cannot be moved before it, and instructions before it cannot be moved after it.

2) It forces modifications in the cache to be written to main memory immediately.

3) For a write, it invalidates the corresponding cache line in other CPUs.
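The visibility guarantee is easiest to see with a stop flag. In this sketch, StopFlag is a hypothetical class. A worker spins on a volatile boolean until another thread clears it. Without volatile, the JIT compiler could legally hoist the read out of the loop, so the worker might never observe the change.

```java
class StopFlag {
    // volatile guarantees the worker eventually sees the write made by another thread.
    private volatile boolean running = true;

    // Starts a busy worker, clears the flag, and reports whether the worker stopped.
    static boolean workerStops() {
        StopFlag flag = new StopFlag();
        Thread worker = new Thread(() -> {
            long iterations = 0;
            while (flag.running) { // re-reads the volatile field on every iteration
                iterations++;
            }
        });
        worker.setDaemon(true); // do not keep the JVM alive if the worker never stops
        worker.start();
        try {
            Thread.sleep(20);
            flag.running = false;   // volatile write: becomes visible to the worker
            worker.join(2_000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }
}
```

volatile fixes visibility here but not atomicity. An increment such as count++ on a volatile int can still lose updates under contention.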

A typical use of volatile is double-checked locking. Here volatile prevents another thread from seeing a non-null instance reference before the object it points to is fully initialized.

```java
class Singleton {
    private static volatile Singleton instance = null; // volatile prohibits instruction reordering

    private Singleton() { }

    public static Singleton getInstance() {
        if (instance == null) {                 // first check: skip locking once initialized
            synchronized (Singleton.class) {
                if (instance == null) {         // second check: another thread may have initialized it
                    instance = new Singleton();
                }
            }
        }
        return instance;
    }
}
```

AQS (AbstractQueuedSynchronizer) is an abstract queued synchronizer: a framework for building locks and synchronizers. It makes it simple and efficient to build widely used synchronizers such as ReentrantLock, CountDownLatch, and Semaphore. (CyclicBarrier is built on ReentrantLock and therefore uses AQS indirectly.) The problems it needs to solve:

Atomic management of the synchronization state, blocking and unblocking of threads, and management of the wait queue

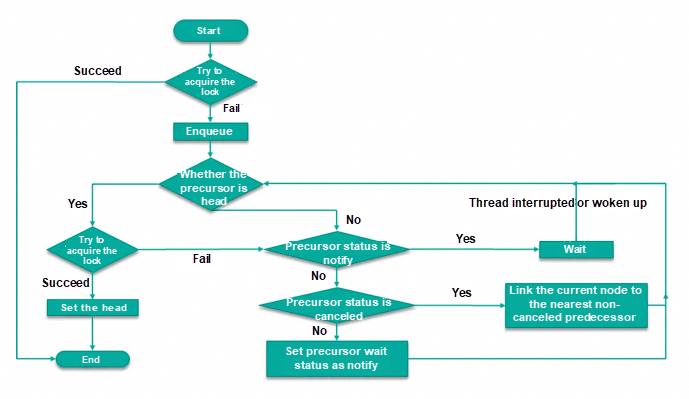

The core idea of AQS is as follows. If the requested shared resource is free, the requesting thread becomes the active worker thread and the resource is set to the locked state. If the resource is occupied, a mechanism is needed to block the waiting threads and to hand them the lock when they are woken up. AQS implements this mechanism with a CLH queue lock (a virtual doubly linked queue): threads that cannot obtain the lock for now are added to the queue.
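To show how little a subclass has to supply, here is a minimal non-reentrant mutex built on AQS. It is modeled on the Mutex example in the AbstractQueuedSynchronizer Javadoc but simplified here. The subclass only defines how to CAS the state (0 = free, 1 = locked). AQS itself handles queuing, parking, and waking threads.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

class Mutex {
    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int acquires) {
            if (compareAndSetState(0, 1)) {   // CAS the state from free to locked
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false;                     // AQS enqueues and parks the caller
        }

        @Override
        protected boolean tryRelease(int releases) {
            if (getState() == 0) {
                throw new IllegalMonitorStateException();
            }
            setExclusiveOwnerThread(null);
            setState(0);                      // volatile write publishes the release
            return true;                      // AQS then unparks the next queued thread
        }

        @Override
        protected boolean isHeldExclusively() {
            return getState() == 1;
        }
    }

    private final Sync sync = new Sync();

    public void lock() {
        sync.acquire(1);
    }

    public void unlock() {
        sync.release(1);
    }

    // Four threads increment a plain int under the mutex.
    static int demo() {
        Mutex m = new Mutex();
        int[] counter = {0};
        Thread[] threads = new Thread[4];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> {
                for (int j = 0; j < 10_000; j++) {
                    m.lock();
                    try {
                        counter[0]++;
                    } finally {
                        m.unlock();
                    }
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter[0]; // 40000
    }
}
```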

ReentrantLock: a reentrant lock that can do everything synchronized can and also offers interruptible lock acquisition (lockInterruptibly()), polling lock requests (tryLock()), and timed lock requests (tryLock(long, TimeUnit)), which help avoid multithreading deadlocks. It is non-fair by default but can be constructed as a fair lock. Locking and unlocking are done with lock() and unlock().

CountDownLatch: uses a counter that counts down to block some threads until other threads complete a series of operations. It is typically used to make a thread wait: the thread calls countDownLatch.await() and proceeds once the count reaches zero. It is mainly used to aggregate information from multiple threads.
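In this sketch of the aggregation pattern, three worker threads stand in for hypothetical platform queries, each producing a made-up result (id × 10). The caller blocks on await() until every worker has counted down. LatchDemo is a hypothetical name.

```java
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CountDownLatch;

class LatchDemo {
    // Three parallel "queries"; the caller waits for all of them, then sums the results.
    static int sumOfResults() {
        int workers = 3;
        CountDownLatch done = new CountDownLatch(workers);
        ConcurrentLinkedQueue<Integer> results = new ConcurrentLinkedQueue<>();
        for (int i = 1; i <= workers; i++) {
            final int id = i;
            new Thread(() -> {
                try {
                    results.add(id * 10);  // pretend result from query `id`
                } finally {
                    done.countDown();      // always count down, even on failure
                }
            }).start();
        }
        try {
            done.await();                  // blocks until the count reaches zero
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        int sum = 0;
        for (int r : results) {
            sum += r;
        }
        return sum; // 10 + 20 + 30 = 60
    }
}
```

Calling countDown() in a finally block prevents one failing worker from leaving the caller waiting forever. Where that risk remains, await(long, TimeUnit) bounds the wait.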

By setting parameters, you can solve the same problem as with CountDownLatch (waiting for responses from multiple platforms) while handling some of the returned results more flexibly.

CyclicBarrier: literally, a barrier that can be reused (cycled). It blocks a group of threads as each one reaches the barrier (also called a synchronization point). The barrier opens only when the last thread arrives, and then all blocked threads continue. Threads wait at the barrier by calling CyclicBarrier's await() method. It can be used, for example, to send message queue messages in batches or for asynchronous throttling.
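The sketch below shows the "cyclic" part: three parties meet at the same barrier twice, and the optional barrier action runs once each time the barrier trips. BarrierDemo and the two-round loop are hypothetical.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

class BarrierDemo {
    // Three parties pass the barrier twice; the barrier action runs once per trip.
    static int trips() {
        int parties = 3;
        AtomicInteger tripCount = new AtomicInteger();
        CyclicBarrier barrier = new CyclicBarrier(parties, tripCount::incrementAndGet);
        Thread[] threads = new Thread[parties];
        for (int i = 0; i < parties; i++) {
            threads[i] = new Thread(() -> {
                try {
                    for (int round = 0; round < 2; round++) {
                        // ... one batch of work per round would go here ...
                        barrier.await(); // wait for all parties; the barrier then resets
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } catch (BrokenBarrierException e) {
                    // another party failed or was interrupted; give up this round
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return tripCount.get(); // 2
    }
}
```

Unlike CountDownLatch, which cannot be reset once its count reaches zero, the same CyclicBarrier instance is reused for the second round.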

Semaphore: semaphores serve two main purposes: mutual exclusion over multiple shared resources, and limiting the number of concurrent threads. This is similar to the throttling idea in Hystrix.
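For the throttling use, this sketch lets ten threads compete for three permits and records the peak number inside the guarded section at once. SemaphoreDemo, the 20 ms simulated work, and the thread and permit counts are assumptions for illustration. The peak can never exceed the permit count; it usually equals it, though the scheduler does not guarantee that.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

class SemaphoreDemo {
    // At most `permits` threads may be inside the guarded section at the same time.
    static int peakConcurrency(int permits, int threadCount) {
        Semaphore semaphore = new Semaphore(permits);
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        Thread[] threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++) {
            threads[i] = new Thread(() -> {
                try {
                    semaphore.acquire(); // blocks while all permits are taken
                    try {
                        peak.accumulateAndGet(inside.incrementAndGet(), Math::max);
                        Thread.sleep(20); // simulated work while holding a permit
                    } finally {
                        inside.decrementAndGet();
                        semaphore.release();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return peak.get();
    }
}
```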

Happens-before: describes visibility. If the first operation happens-before the second, the result of the first operation is visible to the second.

Common happens-before rules involve volatile variables, locks, and the thread lifecycle.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
