By Junyu

With the rise of ChatGPT, Large Language Models (LLMs) have once again come into the spotlight, showing impressive language recognition, understanding, and inference capabilities in the field of Natural Language Processing (NLP). More and more industries, such as medical care, transportation, and shopping, are beginning to explore applications of LLMs.

However, LLMs also have many shortcomings:

• The limitations of knowledge: The depth of model knowledge is heavily dependent on the breadth of the training dataset. Currently, the training sets of most large models on the market are derived from open network datasets, so the models have no way to learn internal data, specific domains, or highly specialized knowledge.

• The lag in knowledge: Model knowledge is acquired through training datasets. Models cannot learn new knowledge that is generated after model training. The cost of large model training is extremely high, so model training cannot be performed frequently to acquire new knowledge.

• Hallucination: The underlying principles of all AI models are based on mathematical probability, and their output is essentially the result of a series of numerical operations. Large models are no exception. Therefore, they sometimes generate incorrect or misleading results, especially in scenarios where the large model itself lacks knowledge or is not proficient in a certain area. This kind of hallucination is difficult to detect, because the user needs knowledge of the corresponding field to recognize it.

• Data security: For enterprises, data security is of paramount importance. No enterprise is willing to risk a data breach by uploading its private domain data to a third-party platform for training. As a result, applications that rely entirely on the capabilities of a general large model have to trade off data security against effectiveness.

To solve the limitations of the purely parametric model, the language model can take a semi-parametric approach, combining the non-parametric corpus and database with the parametric model. This method is called Retrieval-Augmented Generation (RAG).

By retrieving the vast amount of existing knowledge, combined with a powerful generative model, RAG provides a completely new solution to complex Q&A, text summarization, and task generation. However, although RAG has its own unique advantages, it also encounters many challenges in practice.

In a RAG system, the output of the retrieval phase directly affects the input of the generation phase and the final output quality. If the content retrieved from the RAG database contains a large amount of incorrect information, it may lead the model in the wrong direction, and even if much effort is made in the retrieval phase, it may have little impact on the results.

To achieve efficient document retrieval, it is necessary to convert the original text data into numerical vectors, which is also called data vectorization. The purpose of data vectorization is to map text data to a low-dimensional vector space, so that semantically similar texts are relatively close in the vector space, while semantically dissimilar texts are farther away in the vector space. However, data vectorization also leads to a certain degree of information loss, as the complexity and diversity of text data are difficult to fully express with limited vectors. Therefore, data vectorization may ignore the details and features of some text data, thus affecting the accuracy of document retrieval.

In RAG, semantic search refers to retrieving the documents that are most semantically related to the user's question from the document collection, which is also called data recall. The difficulty of semantic search lies in understanding the semantics of users' questions and documents, and in measuring the semantic similarity between them. At present, the mainstream method of semantic search builds on the results of data vectorization and uses distance or similarity in vector space to measure semantic similarity. However, this method also has limitations. For example, distance or similarity in the vector space does not necessarily reflect real semantic similarity, and noise and outliers in the vector space can also interfere with the results. Therefore, the accuracy of semantic search cannot reach 100%.
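As a minimal sketch of how similarity in vector space drives recall, the snippet below ranks documents by cosine similarity to a query vector. The three-dimensional vectors and the document ids are made up for illustration; a real system would obtain vectors from an embedding model and search them in a vector database.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)

def top_k(query_vec, doc_vecs, k=2):
    """Return the ids of the k documents most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id)
              for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored[:k]]

# Hypothetical embeddings, invented for this example.
doc_vecs = {
    "register_account": [0.9, 0.1, 0.0],
    "shipping_fees": [0.1, 0.8, 0.3],
    "reset_password": [0.7, 0.2, 0.1],
}
query_vec = [1.0, 0.0, 0.0]
print(top_k(query_vec, doc_vecs, k=2))
```

Note that `reset_password` is recalled only because its vector happens to point in a similar direction, which is exactly the kind of approximation error described above.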

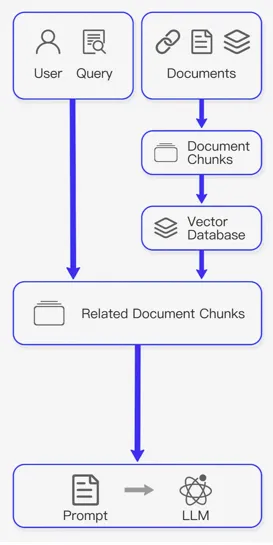

Naive RAG is the earliest research paradigm, which mainly includes the following steps:

Naive RAG mainly faces challenges in three aspects: low retrieval quality, poor generation quality, and difficult enhancement process.

• Low retrieval quality: Long text is used as an index, which does not highlight a topic. When you create an index, the core knowledge is lost in a large amount of useless information. Additionally, the original query is used directly for search, which does not highlight its core requirements. As a result, the user's query cannot be well matched with the knowledge index, resulting in low retrieval quality.

• Poor generation quality: When no knowledge is retrieved or the quality of the retrieved knowledge is poor, the large model is prone to hallucinations when it answers private domain questions on its own, or its answers are misleading and cannot be used directly. In either case, the knowledge base becomes useless.

• Difficult enhancement process: Integrating the retrieved information with different tasks can be challenging, sometimes resulting in inconsistent output. In addition, there is a concern that the generative model may rely too heavily on enhanced information, resulting in the output being merely a retelling of the retrieved content without adding insight or comprehensive information.

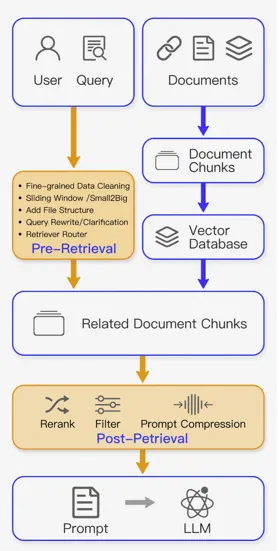

To solve the above problems, some optimization needs to be done before and after retrieval, which leads to the solution of advanced RAG.

Advanced RAG builds on the naive RAG paradigm and is optimized around knowledge retrieval, adding optimization strategies before, during, and after retrieval to solve indexing, retrieval, and generation problems.

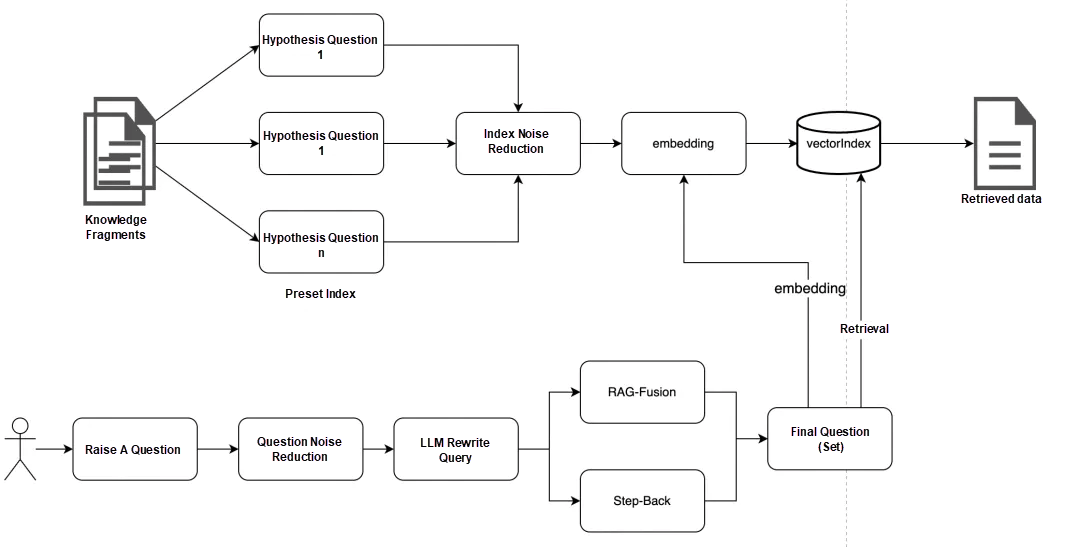

Optimization before retrieval focuses on knowledge segmentation, indexing methods, and query rewriting.

Knowledge segmentation cuts long texts into small pieces based on an analysis of semantic cohesion, which addresses the problems of core knowledge being buried and semantics being truncated.

Index optimization improves retrieval by optimizing how the indexed data is organized. For example, removing invalid data or inserting supplementary data improves index coverage, so that the index matches the user's question more closely.

Query rewriting requires understanding the user's intention and converting the user's original question into a question suitable for knowledge base retrieval, so as to improve the accuracy of retrieval.

The goal of the retrieval phase is to recall the most relevant knowledge in the knowledge base.

Typically, retrieval is based on vector search, which computes the semantic similarity between a query and indexed data. Therefore, most retrieval optimization techniques are based on the embedding model:

Fine-tune the embedding model to tailor the embedding model to the context of a specific domain, especially for domains where terminology is evolving or rare. For example, BAAI/bge is a high-performance embedding model that can be fine-tuned.

Dynamic embeddings adjust according to the context of the word, while static embeddings use a single vector for each word. For example, OpenAI's text-embedding-ada-002 is a sophisticated dynamic embedding model that captures contextual understanding.

In addition to vector search, there are other retrieval techniques, such as hybrid search, which generally refers to the concept of combining vector search with keyword-based search. This search technique is beneficial if your search requires exact keyword matching.
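To illustrate the idea of hybrid search, here is a minimal sketch that blends a vector similarity score with a keyword match score. The weight `alpha`, the simple term-overlap keyword score, and the precomputed vector scores are all assumptions made for this example, not a description of any particular search engine.

```python
def keyword_score(query, text):
    """Fraction of query terms that appear verbatim in the text."""
    query_terms = set(query.lower().split())
    text_terms = set(text.lower().split())
    if not query_terms:
        return 0.0
    return len(query_terms & text_terms) / len(query_terms)

def hybrid_score(vector_score, kw_score, alpha=0.5):
    """Blend both signals: alpha=1.0 is pure vector search, alpha=0.0 is pure keyword search."""
    return alpha * vector_score + (1 - alpha) * kw_score

def rank_hybrid(query, docs, alpha=0.5):
    """docs maps doc_id -> (text, vector_score); returns doc ids, best first."""
    scored = {
        doc_id: hybrid_score(vec_score, keyword_score(query, text), alpha)
        for doc_id, (text, vec_score) in docs.items()
    }
    return sorted(scored, key=lambda doc_id: scored[doc_id], reverse=True)

# Hypothetical documents with made-up vector similarity scores.
docs = {
    "d1": ("return policy for order SKU-1042", 0.62),
    "d2": ("general refund and return guidelines", 0.70),
}
print(rank_hybrid("return SKU-1042", docs))             # keyword match lifts d1
print(rank_hybrid("return SKU-1042", docs, alpha=1.0))  # pure vector search prefers d2
```

The exact identifier `SKU-1042` is the kind of term where keyword matching helps: the vector score alone ranks the generic document higher.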

Additional processing of the retrieved context can help solve some problems, such as exceeding the context window limit or introducing noise that distracts attention from key information. Post-retrieval optimization techniques summarized in the RAG survey include:

Prompt compression: Reduce overall prompt length by removing extraneous content and highlighting important context.

Reranking: Recalculate the relevance scores of the retrieved contexts using a machine learning model.

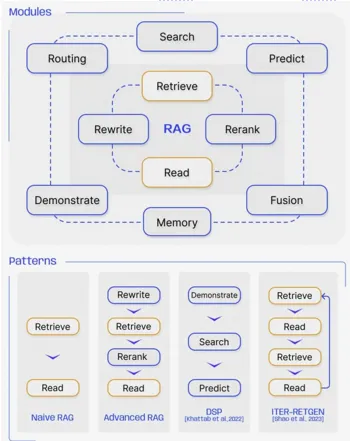

With the further development and evolution of RAG technology, new techniques have broken through the traditional retrieval-generation framework, which gave rise to the concept of modular RAG. It is freer and more flexible in structure, and introduces more specific function modules, such as query search engines and the fusion of multiple answers. Technically, retrieval is integrated with fine-tuning, reinforcement learning, and other technologies. In terms of process, RAG modules are designed and orchestrated, giving rise to a variety of RAG patterns.

However, modular RAG did not appear out of nowhere. The relationship between the three paradigms is one of inheritance and development: advanced RAG is a special case of modular RAG, while naive RAG is a special case of advanced RAG.

• Search module: Unlike similarity retrieval, this part can be applied to specific scenarios and retrieved on some special corpora. Generally, vectors, tokenization, NL2SQL, or NL2Cypher can be used to search for data.

• Predict module: This technology reduces redundancy and noise in user's questions and highlights the user's real intentions. Instead of retrieving directly, the module uses the LLM to generate the necessary context. Content retrieved after LLM generates the context is more likely to contain relevant information than content obtained through direct retrieval.

• Memory module: Multiple rounds of conversation can be retained, so that the questions the user asked before are known in the next conversation.

• Fusion module: RAG-Fusion uses an LLM to expand the user's query into multiple queries. This method not only captures the information that the user needs but also reveals a deeper level of knowledge. The fusion process includes parallel vector search of the original query and the expanded queries, intelligent reranking, and selection of the best search results. This approach keeps the search results closely aligned with the user's explicit and implicit intent, so that deeper and more relevant information can be found.

• Routing module: The retrieval process of the RAG system uses content from various sources, including different fields, languages, and forms. These contents can be modified or combined as needed. The query router also selects the appropriate data store for the query, which may be a vector database, a graph database, a relational database, or a hierarchical index. The developer needs to predefine how the query router makes its decision, and the decision is executed through an LLM call that points the query to the selected index.

• Demonstrate module: You can customize an adapter based on a task.

Based on the above six modules, you can quickly assemble a RAG pipeline for your own business, and each module is highly scalable and flexible.

For example:

The RR (Retrieve-Read) mode builds a traditional naive RAG.

The RRRR (Rewrite-Retrieve-Rerank-Read) mode builds an advanced RAG.

It is also possible to implement a reward and punishment mechanism based on retrieval results and user evaluation, so that user feedback reinforces and corrects the behavior of the retriever.
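The sketch below shows one way such modules could be composed, assuming that RR stands for Retrieve-Read and RRRR for Rewrite-Retrieve-Rerank-Read. Every module here is a hypothetical stand-in: word overlap replaces vector search and the reranking model, a string replacement replaces LLM query rewriting, and returning the top document replaces LLM generation.

```python
KNOWLEDGE = [
    "An account can be closed in settings.",
    "Register an account on the sign-up page.",
    "Shipping takes five days.",
]

def _words(text):
    """Lowercase the text, drop basic punctuation, and return its set of words."""
    return set(text.lower().replace("?", "").replace(".", "").split())

def rewrite(state):
    # Stand-in for LLM query rewriting: drop the leading "how can i".
    state["query"] = state["query"].lower().replace("how can i ", "")
    return state

def retrieve(state):
    # Stand-in for vector search: keep documents sharing a word with the query.
    query_words = _words(state["query"])
    state["docs"] = [doc for doc in KNOWLEDGE if query_words & _words(doc)]
    return state

def rerank(state):
    # Stand-in for a reranking model: documents sharing more words come first.
    query_words = _words(state["query"])
    state["docs"] = sorted(
        state["docs"],
        key=lambda doc: len(query_words & _words(doc)),
        reverse=True,
    )
    return state

def read(state):
    # Stand-in for LLM generation: answer with the top document.
    state["answer"] = state["docs"][0] if state["docs"] else "No knowledge found."
    return state

def run_pipeline(modules, query):
    """Pass a shared state dict through each module in order."""
    state = {"query": query, "docs": [], "answer": None}
    for module in modules:
        state = module(state)
    return state

naive_rag = [retrieve, read]                      # RR
advanced_rag = [rewrite, retrieve, rerank, read]  # RRRR

question = "How can I register an account?"
print(run_pipeline(naive_rag, question)["answer"])
print(run_pipeline(advanced_rag, question)["answer"])
```

Because each pipeline is just a list of callables, swapping, adding, or removing a module does not require changes to the others. In this toy data, the naive pipeline answers with the account-closing document, while the pipeline with rewriting and reranking surfaces the registration document.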

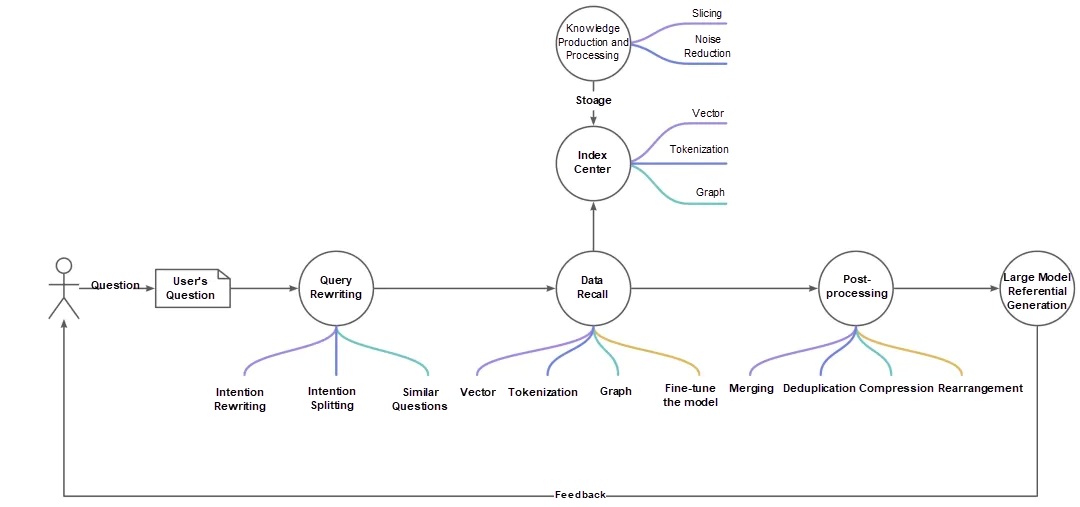

The RAG architecture we use in practice consists of one base and three centers: the data management base, the model center, the multi-engine center, and the recall strategy center.

In terms of engineering architecture, each subsystem is divided into sub-modules according to its capabilities, and scheduling policies are configured in the upper layer, which conforms to the technical specifications of modular RAG.

In terms of retrieval technology, a large number of operations such as index noise reduction, multi-channel recall, knowledge deduplication, and reranking are performed around retrieval, which conforms to the technical specifications of advanced RAG.

The data management base provides data production and knowledge processing capabilities.

Data production includes capabilities such as data versioning, lineage management, and engine synchronization.

Knowledge processing mainly includes data slicing, index optimization, and other capabilities.

The model center mainly contains large generative models and small comprehension models.

Large generative models mainly provide:

• Reference-based generation, which generates answers grounded in the retrieved knowledge and the user's question;

• Query rewriting, which understands the user's real intention and rewrites or generalizes the question into a query suitable for knowledge base retrieval;

• Text2Cypher, which converts knowledge base metadata and user questions into a graph query language to support graph retrieval for upper-layer services;

• NL2SQL, which is available if your business needs to access databases to obtain specific data.

Small comprehension models mainly provide:

• Document slicing, which slices large pieces of knowledge into small fragments;

• Embedding, which converts knowledge base metadata and user questions into vectors;

• Reranking, which re-scores the data returned by multi-channel recall.

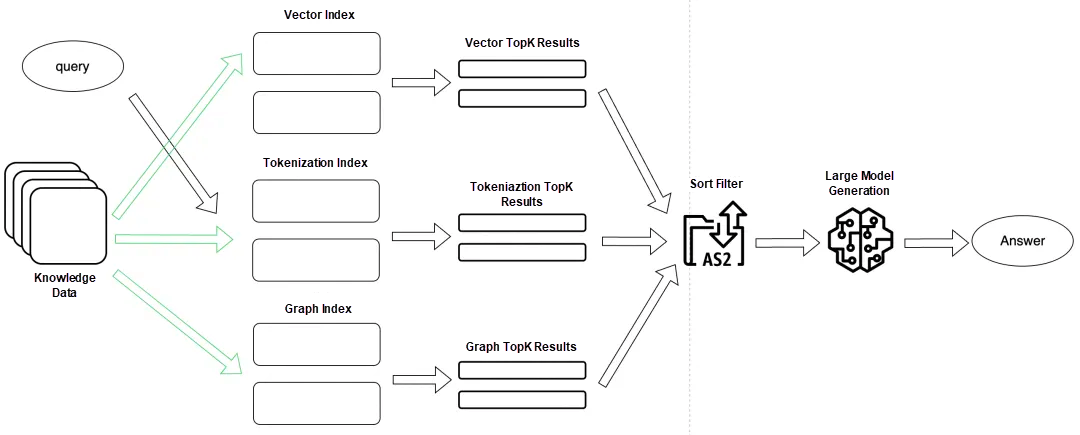

The multi-engine center includes vector, tokenization, and graph engines. It provides multiple retrieval methods to improve the knowledge hit rate.

The recall strategy center plays a scheduling role in the entire RAG pipeline. Query rewriting, multi-channel recall, post-retrieval processing, and reference-based answer generation by the large model are all performed here.

Based on the preceding one-base-three-center architecture, each sub-capability is modularized, and scheduling policies are configured on the upper layer, which complies with the technical specifications of modular RAG.

The overall business link of RAG is divided into five major steps: knowledge production and processing, query rewriting, data recall, post-processing, and large model generation.

The first phase ensures that the system is available.

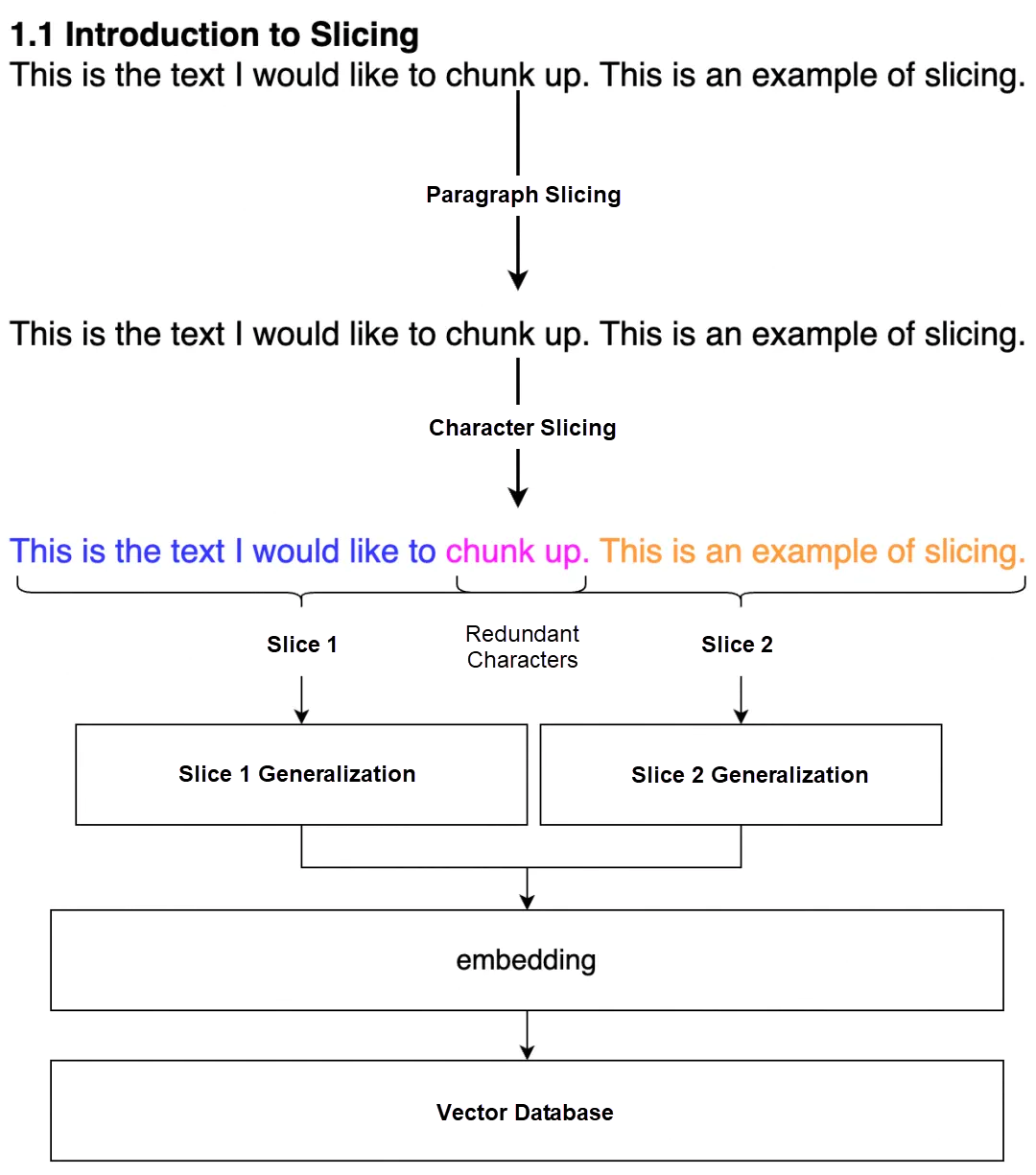

First, split the data based on fixed characters and reserve redundant characters to ensure that the semantics are not truncated.

Next, based on the context, use the understanding ability of large models to highlight the user's intention in order to better answer the user's question.

The first step is to perform vector recall. In multi-channel recall, vector recall has the largest proportion and is also the most critical recall method. You need to find an embedding model and vector database that are more suitable for your business.

Because vector recall is the only recall method at this stage, this step can only rank data by vector similarity scores and filter it with thresholds that meet business expectations. The filtered knowledge data is provided to the large model to generate answers.

The main goal of the second phase is to improve the retrieval results of RAG.

• When knowledge is segmented by fixed characters, the cohesion of knowledge is destroyed even though redundant characters are reserved. At this point, a model that segments knowledge semantically is needed, so that sentences with closely related context are kept in the same piece of knowledge.

• Analyze index noise and specify noise reduction measures based on data retrieval.

• Clarify the user's intentions.

• Explore the RAG-Fusion mode, generate multiple similar queries based on the user's query, and retrieve data with multiple similar queries.

• Multi-task query extraction splits a query task into multiple sub-queries to retrieve data.

• On top of vector retrieval, explore tokenization retrieval and graph retrieval according to business scenarios. Some businesses may even need NL2SQL.

• Data deduplication and merging.

• Build reranking for multi-channel recall results, and set unified ranking and filtering standards.

The main goal of the third phase is to improve scalability in engineering, modularize the design of each business function, and configure the RAG process required by the business through the recall strategy configuration center.

If the document fragment is too long, it will have a great impact on knowledge retrieval. There are mainly two problems:

1) Index confusion: The core keywords are buried in a large amount of invalid information, so core knowledge accounts for a relatively small proportion of the created index. Whether semantic matching, tokenization matching, or graph retrieval is used, it is difficult to accurately hit the key data, which affects the quality of the generated answer;

2) Semantic truncation caused by too many tokens: When knowledge data is embedded, its semantics may be truncated because the fragment contains too many tokens. In addition, after knowledge retrieval is completed, the longer each knowledge fragment is, the fewer fragments can be input into the large model. As a result, the large model cannot obtain enough valuable input, which affects the quality of the generated answer.

Slice knowledge by fixed characters and reserve redundant (overlapping) characters to solve the problem of sentence truncation, so that a complete sentence appears in either the preceding or the following fragment. This method avoids breaking sentences in the middle as much as possible.

This implementation method has the lowest cost and can be used first in the initial stage of business.
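A minimal sketch of fixed-character slicing with redundant characters is shown below. The function name and the default sizes are illustrative assumptions; suitable values depend on the embedding model and the data.

```python
def split_fixed(text, chunk_size=200, overlap=50):
    """Split text into chunks of chunk_size characters.

    Consecutive chunks share `overlap` characters, so a sentence cut at
    the end of one chunk is likely to appear whole in the next one.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks

print(split_fixed("abcdefghij", chunk_size=4, overlap=2))
# ['abcd', 'cdef', 'efgh', 'ghij']
```

A larger overlap lowers the risk of truncating a sentence but stores more duplicated text in the index.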

If knowledge is sliced by fixed characters, fragments with closely related meanings are sometimes split into two pieces of data, resulting in poor data quality. You can instead slice the text with a small semantic comprehension model to ensure the semantic integrity of the sliced knowledge fragments.

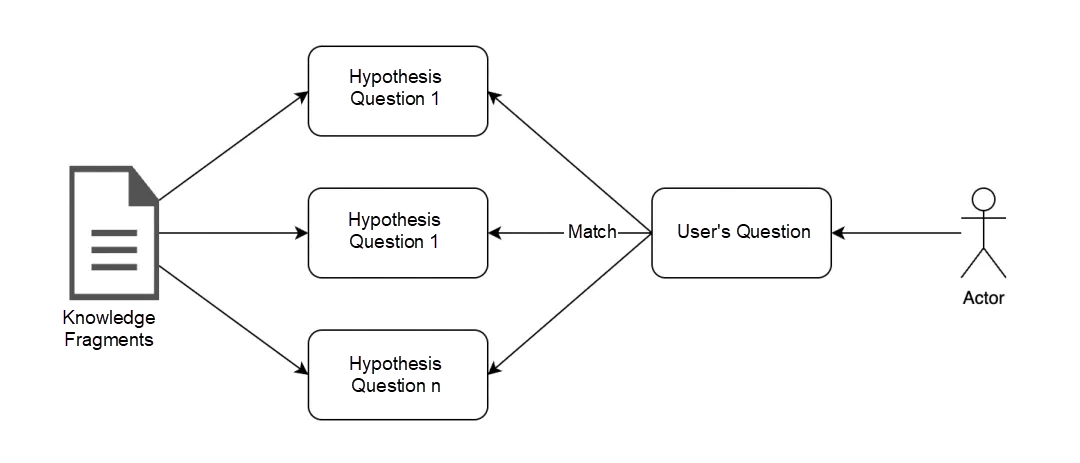

If each original document can only be matched one-to-one against the user's question, the fault tolerance of matching is low: once that single match fails, the knowledge cannot be recalled.

To improve knowledge coverage, a large model can generate associated hypothetical questions for the processed knowledge data in advance. When a user's question hits one of these hypothetical questions, the corresponding knowledge data can be found as well.
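The idea can be sketched as an index that maps each hypothetical question back to its source knowledge. In this sketch, `fake_generate_questions` is a hypothetical stand-in for the large model call, and word overlap stands in for vector matching; the knowledge texts and questions are invented for the example.

```python
def build_question_index(knowledge, generate_questions):
    """Map every hypothetical question to the id of the knowledge it came from."""
    index = {}
    for knowledge_id, text in knowledge.items():
        for question in generate_questions(text):
            index[question] = knowledge_id
    return index

def lookup(query, index):
    """Return the knowledge id whose hypothetical question shares the most words with the query."""
    query_words = set(query.lower().split())
    best_id, best_overlap = None, 0
    for question, knowledge_id in index.items():
        overlap = len(query_words & set(question.split()))
        if overlap > best_overlap:
            best_id, best_overlap = knowledge_id, overlap
    return best_id

def fake_generate_questions(text):
    # Stand-in for an LLM call: returns canned questions for the demo.
    canned = {
        "Sellers sign up on the registration page with a business email.": [
            "how do i register an account",
            "how can i start to sell",
        ],
        "Shipping fees depend on package weight.": [
            "how much does shipping cost",
        ],
    }
    return canned.get(text, [])

knowledge = {
    "k1": "Sellers sign up on the registration page with a business email.",
    "k2": "Shipping fees depend on package weight.",
}
index = build_question_index(knowledge, fake_generate_questions)
print(lookup("how can i start to sell on alibaba", index))
```

The query shares almost no words with the text of `k1`, but it still reaches `k1` through one of its hypothetical questions.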

Index noise reduction mainly removes invalid components in index data based on business characteristics, highlights its core knowledge, and reduces noise interference. The knowledge of QA-pairs and article fragments is handled in a similar way.

QA-pair data generally uses Q as the index column, forming a question-to-question (QQ) search pattern with the user's question. In this way, the matching difficulty during data recall is low. However, if the original Q is used as the index, invalid components cause interference, such as:

| User's query | The query matched |
| --- | --- |
| How can I start to sell on Alibaba? | How can I register an account on Alibaba.com? |

In these two sentences, more than 60% of the components are similar but irrelevant to the core intent, which greatly interferes with index matching.

The Q in the vector index is generalized by the large model to highlight the core keywords, and the topic of the corresponding answer is also extracted by the large model. Both Q and A are then reduced to highlighted keywords:

How can I register an account on Alibaba.com? --> Register an account + answer theme

This highlights the core theme and reduces the interference of invalid data.

Article fragments cannot be matched well because of their length, and their semantics may be quite different from those of the question.

A HyDE-style approach generates hypothetical questions for each fragment to form QA pairs, and then uses the large model to extract core keywords for noise reduction.

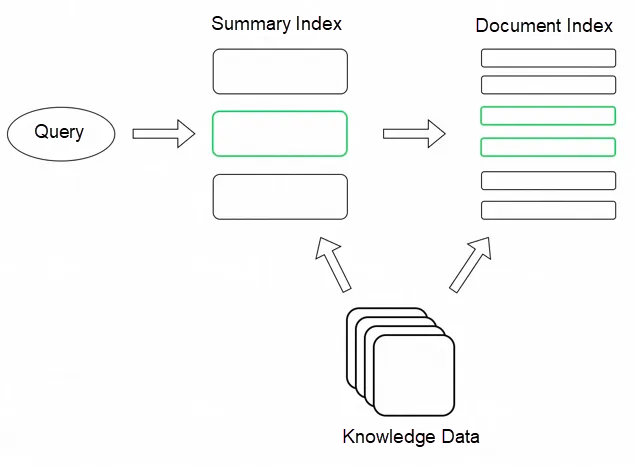

Approximate retrieval is different from traditional database retrieval. Because approximate retrieval builds its index through clustering or HNSW, there is a certain approximation error during retrieval. Searching across a large knowledge base therefore causes accuracy and performance problems. For a large database, an effective method is to create two indexes, one composed of abstracts and the other composed of document chunks, and to search in two steps: first filter out the relevant documents by their abstracts, and then search only within this relevant group.
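The two-step search can be sketched as follows. Word overlap is used here as a stand-in relevance score so that the example runs without an embedding model; the documents, abstracts, and function names are assumptions made for illustration.

```python
def overlap(query, text):
    """Stand-in relevance score: number of shared lowercase words."""
    return len(set(query.lower().split()) & set(text.lower().split()))

def two_stage_search(query, abstracts, chunks, top_docs=1, top_chunks=2):
    """abstracts: {doc_id: abstract}; chunks: list of (doc_id, chunk_text)."""
    # Stage 1: pick the most relevant documents by their abstracts.
    ranked_docs = sorted(
        abstracts,
        key=lambda doc_id: overlap(query, abstracts[doc_id]),
        reverse=True,
    )
    selected = set(ranked_docs[:top_docs])
    # Stage 2: search only the chunks that belong to the selected documents.
    candidates = [(doc_id, text) for doc_id, text in chunks if doc_id in selected]
    candidates.sort(key=lambda chunk: overlap(query, chunk[1]), reverse=True)
    return candidates[:top_chunks]

abstracts = {
    "shipping": "guide to shipping and logistics fees",
    "accounts": "guide to account registration and login",
}
chunks = [
    ("shipping", "shipping fees depend on weight"),
    ("accounts", "account registration requires an email"),
    ("accounts", "login with your email and password"),
    ("shipping", "registration of shipping labels"),
]
for doc_id, text in two_stage_search("account registration email", abstracts, chunks):
    print(doc_id, "|", text)
```

The chunk about shipping labels contains the word "registration", but it is never scored because its document was filtered out in the first stage.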

If you use the original query to retrieve data, the following issues may occur:

1) No single item in the knowledge base answers the question directly, and several pieces of knowledge must be combined to find the answer.

2) When a question involves many details, large models often cannot provide high-quality answers.

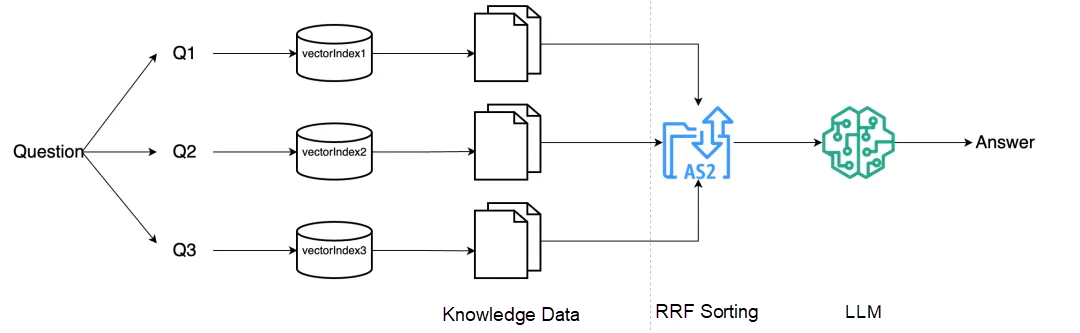

For these issues, we adopt two optimization schemes: RAG-Fusion and Step-Back Prompting.

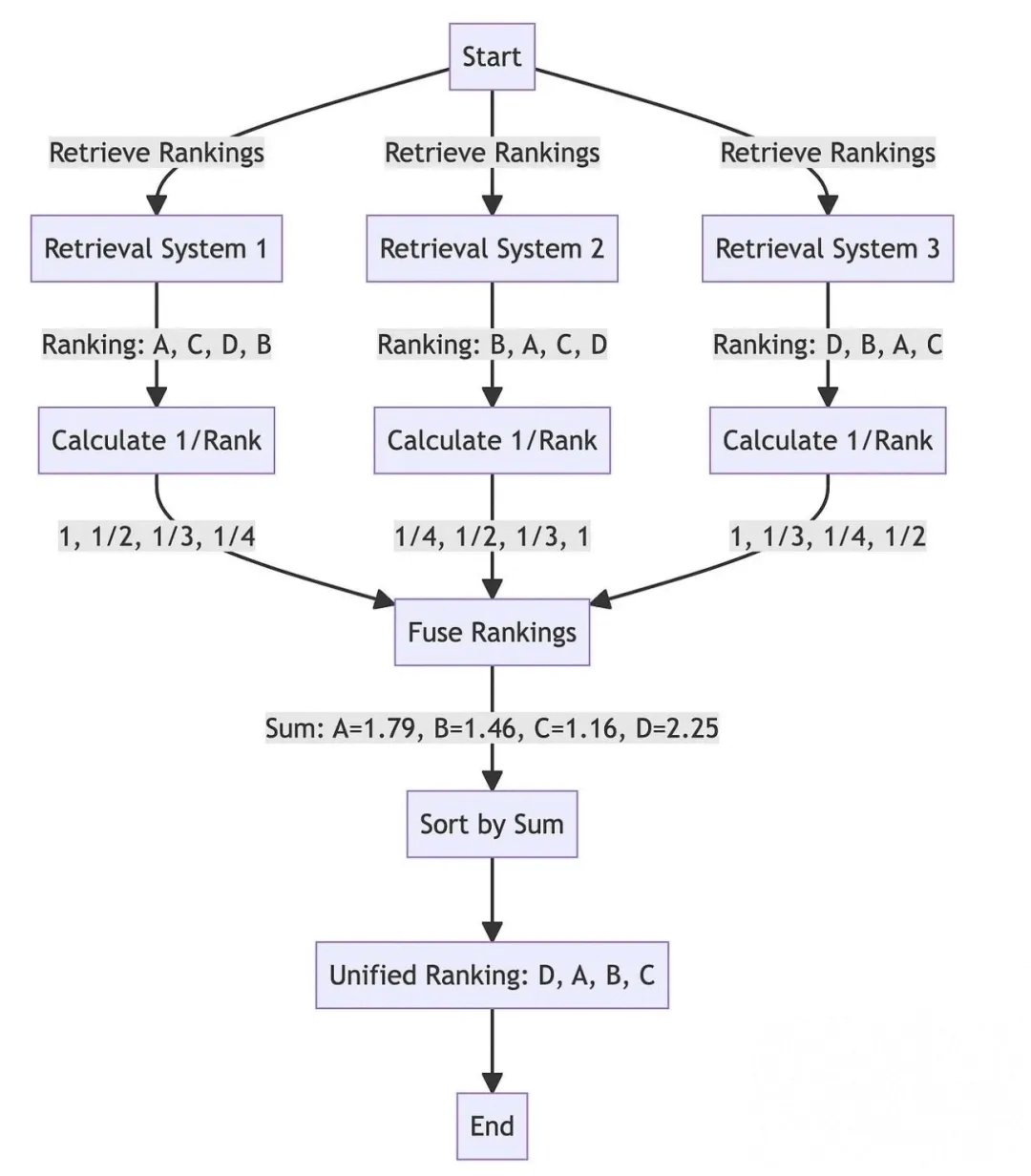

RAG-Fusion can be considered an evolved version of MultiQueryRetriever. RAG-Fusion first generates multiple versions of the original question from different angles to improve the quality of the question. Vector retrieval is then performed for each question. Up to this point, it works the same way as MultiQueryRetriever. The difference is that RAG-Fusion adds a ranking step before feeding the results to the LLM to generate answers.

The following figure shows the main process of RAG-Fusion:

Ranking includes two steps. The first is to rank the content returned for each question independently by similarity, which determines the position of each returned chunk in its own candidate set. The higher the similarity, the higher the ranking. The second is to use Reciprocal Rank Fusion (RRF) to comprehensively rank the content returned by all questions.
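As a minimal sketch of the second step, RRF gives each chunk a fused score of the form score(d) = Σ 1 / (k + rank(d)), summed over every candidate list in which the chunk appears. The constant k = 60 follows the value commonly used for RRF; the document ids and rankings below are made up for illustration.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of doc ids (best first) into one ranking.

    Each appearance of a document at 1-based position `rank` contributes
    1 / (k + rank) to its fused score.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)

# One ranked candidate list per generated question (hypothetical results).
rankings = [
    ["d1", "d2", "d3"],
    ["d2", "d3", "d1"],
    ["d2", "d1", "d4"],
]
print(reciprocal_rank_fusion(rankings))  # ['d2', 'd1', 'd3', 'd4']
```

Because RRF uses only positions, it does not require the similarity scores of different queries to be on a comparable scale.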

By introducing a step-back question, which is usually easier to answer and revolves around a broader concept or principle, large language models can provide more effective inferences.

A typical Step-Back Prompting process contains two steps:

1) Abstraction: The LLM does not immediately attempt to answer the original question. Instead, it raises a more general question about a broader concept or rule, which helps it analyze and search for facts.

2) Inference: After obtaining the answer to the general question, the LLM uses this information to analyze and answer the original question. This is called abstraction-grounded reasoning: it uses information from a higher-level point of view to give a good answer to the original, harder question.

Examples

If a car travels at a speed of 100 km/h, how long does it take to travel 200 kilometers?

At this point, the large model may be confused about mathematical calculations.

Step-Back Prompting: Given speed and distance, what is the basic formula for calculating time?

Input: In order to calculate the time, we use the following formula: time = distance / speed

Applying the formula gives time = 200 km / (100 km/h) = 2 hours.
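The two steps can be sketched as a small function around any LLM callable. The prompt wording is an assumption made for this example, and `fake_llm` is a hypothetical stand-in that replays canned responses matching the example above, so the sketch runs without a model.

```python
def step_back_answer(question, llm):
    """llm is any callable that maps a prompt string to a response string."""
    # Step 1 (abstraction): ask for a more general question, then answer it.
    general_question = llm(
        "Pose a more general question about the principle behind: " + question
    )
    principle = llm(general_question)
    # Step 2 (inference): answer the original question using the principle.
    return llm("Principle: " + principle + "\nUse it to answer: " + question)

calls = []  # records every prompt sent to the stand-in model

def fake_llm(prompt):
    # Stand-in for a real model: replays canned responses in order.
    canned = [
        "Given speed and distance, what is the basic formula for calculating time?",
        "time = distance / speed",
        "time = 200 km / 100 km/h = 2 hours",
    ]
    calls.append(prompt)
    return canned[len(calls) - 1]

answer = step_back_answer(
    "If a car travels at a speed of 100 km/h, how long does it take to travel 200 kilometers?",
    fake_llm,
)
print(answer)
```

The final prompt carries the general formula along with the original question, so the model reasons from the principle instead of guessing the arithmetic directly.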

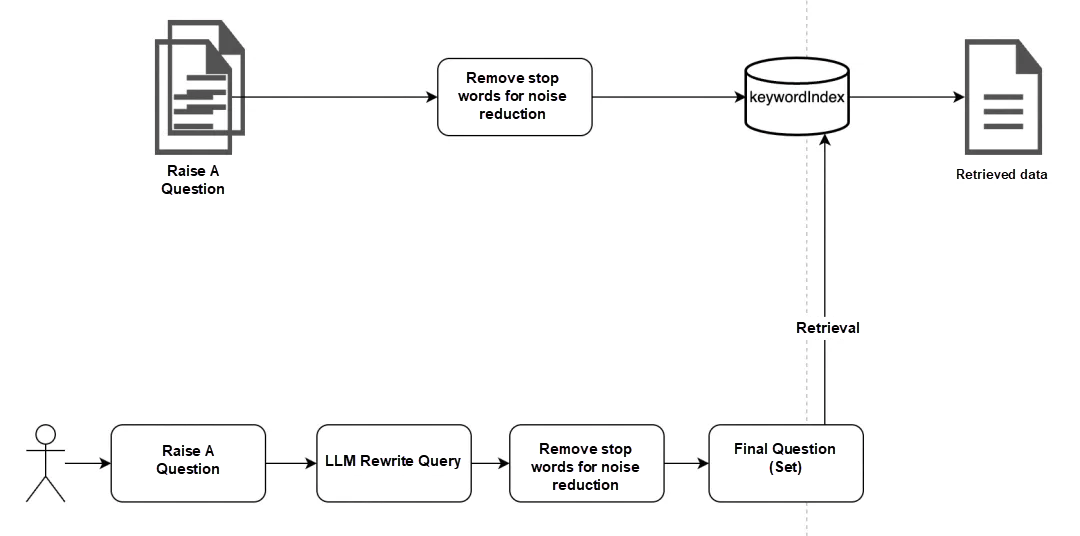

When a user asks a question, some words contribute little to retrieval. Take "How to register an account on Alibaba.com" as an example. The core request is "register an account", and the phrase "How to" adds little to it. Likewise, within Alibaba's foreign trade business, "on Alibaba.com" is not important either, because the foreign trade business system runs on Alibaba.com and the knowledge in the knowledge base is naturally all related to Alibaba.com.

Stop words can be removed from user questions. For example, Elasticsearch (ES) maintains built-in stop word lists that can be used directly. If the solution does not include ES, you can also maintain your own stop word library. Many stop word lists are available in open-source resources such as NLTK, stopwords-iso, Ranks NL, and Common Stop Words in Various Languages, and they can be used as needed.

In the NLP field, vector recall has always been in an irreplaceable position. It is also a popular practice in the industry to convert natural language into low-dimensional vectors and judge semantic similarity based on the similarity of vectors. Combined with the above-mentioned vector index noise reduction, hypothetical questions, and optimization of the user query, relatively good results can be achieved.
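A minimal sketch of maintaining your own stop word list is shown below. The list is deliberately tiny and illustrative; in practice it would come from one of the libraries mentioned above and be extended with business-specific terms.

```python
# A tiny illustrative stop word list, not a complete one.
STOP_WORDS = {"how", "to", "can", "i", "a", "an", "the", "on", "do"}

def remove_stop_words(query, stop_words=STOP_WORDS):
    """Lowercase the query, drop question marks, and remove stop words."""
    tokens = query.lower().replace("?", "").split()
    return " ".join(token for token in tokens if token not in stop_words)

query = "How to register an account on Alibaba.com"
print(remove_stop_words(query))
# register account alibaba.com

# Business-specific terms can be treated as stop words as well.
business_stop_words = STOP_WORDS | {"alibaba.com"}
print(remove_stop_words(query, business_stop_words))
# register account
```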

However, for simple semantic vector recall, if the text vectorization model is not well-trained, the vector recall accuracy will be low. In this case, other recall methods need to be used as supplements.

In addition to vector recall, common recall methods include tokenization recall and graph recall.

Traditional inverted index retrieval tokenizes the text and uses the BM25 scoring and ranking mechanism to find the knowledge data whose terms best match those of the query. Combining it with the stop word removal strategy described above makes the matching more accurate.

Knowledge graphs have unique advantages in knowledge production and relationship extraction. They can generate new knowledge based on existing data and the relationships abstracted from it.
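For reference, BM25 scoring can be sketched in a few lines. The parameters k1 = 1.5 and b = 0.75 are common defaults rather than tuned values, the IDF uses a widely used variant that adds 1 inside the logarithm to keep it non-negative, and whitespace splitting stands in for a real tokenizer. A production system would rely on a search engine's built-in implementation.

```python
import math
from collections import Counter

def bm25_scores(query_tokens, docs_tokens, k1=1.5, b=0.75):
    """Return one BM25 score per document for the given query tokens."""
    if not docs_tokens:
        return []
    n_docs = len(docs_tokens)
    avg_len = sum(len(doc) for doc in docs_tokens) / n_docs
    # Document frequency: the number of documents that contain each term.
    df = Counter(term for doc in docs_tokens for term in set(doc))
    scores = []
    for doc in docs_tokens:
        tf = Counter(doc)
        score = 0.0
        for term in query_tokens:
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # Length normalization: long documents are penalized via b.
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

docs = [
    "register account email".split(),
    "shipping fees weight".split(),
    "account login password".split(),
]
scores = bm25_scores("register account".split(), docs)
print([round(score, 2) for score in scores])
```

The rare term "register" contributes more than "account", which appears in two of the three documents, so the first document scores highest.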

For example, there are now two pieces of knowledge:

1) Alibaba adopts company A's logistics services in China.

2) Alibaba has reached a cooperation with logistics company B to provide customers with more high-quality and convenient logistics services.

The above two pieces of knowledge are extracted by NL2Cypher:

alibaba-logisticsServices-A

alibaba-logisticsServices-B

Based on these two pieces of knowledge, a new piece of knowledge can be generated: alibaba-logisticsServices-A & B

When users ask which logistics services are supported by Alibaba platform, they can directly find A & B.
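The merge in this example can be sketched with plain triples. The sketch assumes the relationships have already been extracted as (subject, relation, object) tuples; a real system would store them in a graph database and query them with a graph query language.

```python
from collections import defaultdict

def build_graph(triples):
    """Group objects by (subject, relation) so related facts are answered together."""
    graph = defaultdict(set)
    for subject, relation, obj in triples:
        graph[(subject, relation)].add(obj)
    return graph

triples = [
    ("alibaba", "logisticsServices", "A"),
    ("alibaba", "logisticsServices", "B"),
]
graph = build_graph(triples)
print(sorted(graph[("alibaba", "logisticsServices")]))  # ['A', 'B']
```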

As noted above, when the text vectorization model is not well trained, the accuracy of vector recall is relatively low, and other recall methods are needed as supplements. In general, multi-channel recall is used to achieve a better recall effect. The results of multi-channel recall are then refined by a model, and the highest-quality results are selected. Which recall strategies to use depends on the business.

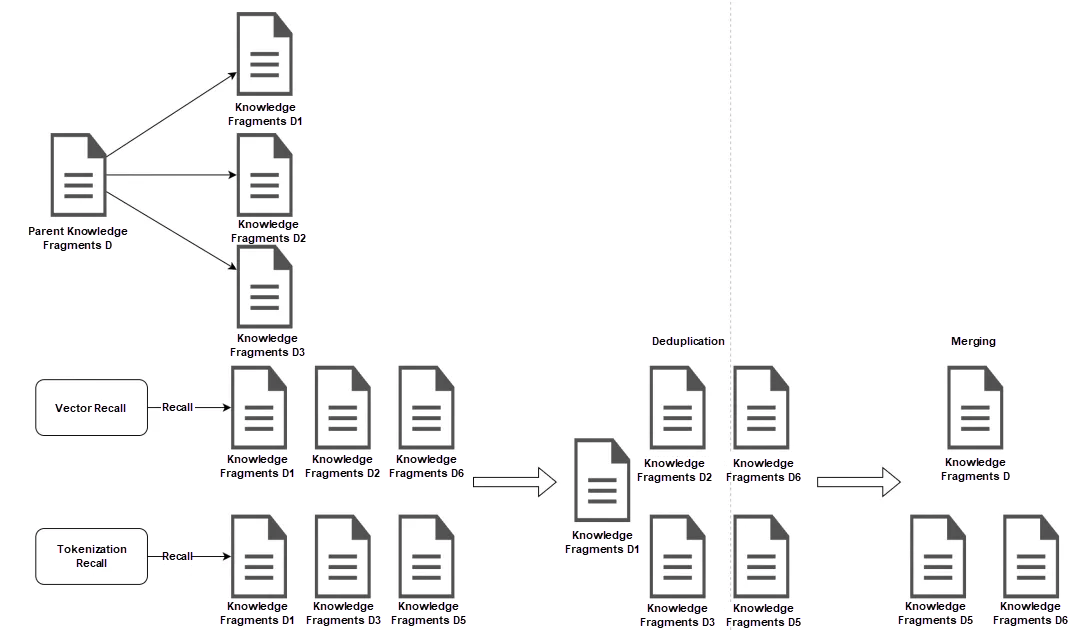

First, multi-channel recall may return the same result through different channels. These duplicates must be removed. Otherwise, they waste the tokens input to the large model.

Second, the deduplicated documents can be merged according to the lineage relationship of data segmentation.

For example, if the retrieved D1, D2, and D3 all come from the same parent knowledge fragment D, D is used to replace D1, D2, and D3 to ensure better knowledge semantic integrity.
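A minimal sketch of deduplication followed by lineage-based merging is shown below. The `min_children` threshold, which decides how many sibling fragments must be retrieved before they are replaced by their parent, is an assumption introduced for this example.

```python
def dedupe_and_merge(hits, parents, min_children=2):
    """hits: chunk ids from all recall channels, possibly repeated.
    parents: {chunk_id: parent_id} describing the segmentation lineage.
    """
    # Step 1: drop duplicates while keeping first-seen order.
    unique = list(dict.fromkeys(hits))
    # Step 2: count how many retrieved chunks share each parent.
    counts = {}
    for chunk_id in unique:
        parent = parents.get(chunk_id)
        if parent is not None:
            counts[parent] = counts.get(parent, 0) + 1
    # Step 3: replace groups of siblings with their parent, once.
    merged = []
    for chunk_id in unique:
        parent = parents.get(chunk_id)
        use_parent = parent is not None and counts[parent] >= min_children
        target = parent if use_parent else chunk_id
        if target not in merged:
            merged.append(target)
    return merged

hits = ["D1", "E1", "D2", "D1", "D3"]  # D1 was recalled by two channels
parents = {"D1": "D", "D2": "D", "D3": "D", "E1": "E"}
print(dedupe_and_merge(hits, parents))  # ['D', 'E1']
```

Here D1, D2, and D3 collapse into their parent D, while E1 stays as it is because none of its siblings was retrieved.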

The ranking and scoring model of each recall strategy is different, and there must be a unified evaluation standard at the final unified data filtering level.

Currently, there are not many reranking models available. One option is the online model provided by Cohere, which can be accessed through an API. In addition, there are some open-source models, such as bge-reranker-base and bge-reranker-large, which are selected according to business needs.

It's easy to perform basic RAG, but excelling at it is quite challenging, as each step can affect the final outcome.

We have also done a lot of exploration in RAG, such as:

In terms of knowledge segmentation, we verified the effect of fixed-character segmentation, analyzed the sources of index noise, and used large models for a large amount of noise reduction.

In terms of query rewriting, we used a large model to extract the user's intention more clearly and explored noise reduction for the user's query.

In terms of data recall, we evaluated embedding models including BGE, Voyage, and Cohere, and explored recall strategies that combine vector and tokenization retrieval.

In terms of post-processing optimization, we have worked on knowledge deduplication and reranking explorations.

RAG is developing rapidly. As long as the issues of knowledge dependence and knowledge renewal remain unresolved, RAG will continue to hold its value and find its place.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
