By Sun Jianbo (nicknamed Tianyuan), Alibaba technical expert

Open Application Model (OAM) is a standard specification for building and delivering cloud native applications, launched jointly by Alibaba and Microsoft in the open source community. It introduces a new model for application definition, O&M, distribution, and delivery, with the goal of moving application management toward lightweight O&M and kicking off a technical revolution in next-generation cloud native DevOps.

Today, I will discuss the value of OAM, that is, why we should use OAM as a standard model for cloud native applications.

In December 2019, AWS released ECS CLI v2, the first major version update in the four years since the release of v1 in 2015. The release of the v2 CLI focuses more on the end-to-end application experience, that is, managing the entire application delivery process from source code development to application deployment.

Based on user feedback received over the years, the AWS team summarized four CLI development principles:

As you may notice, these principles are not so much the development principles of ECS CLI as the demands of users:

Now, let's look at how OAM meets each of these demands and the value it provides.

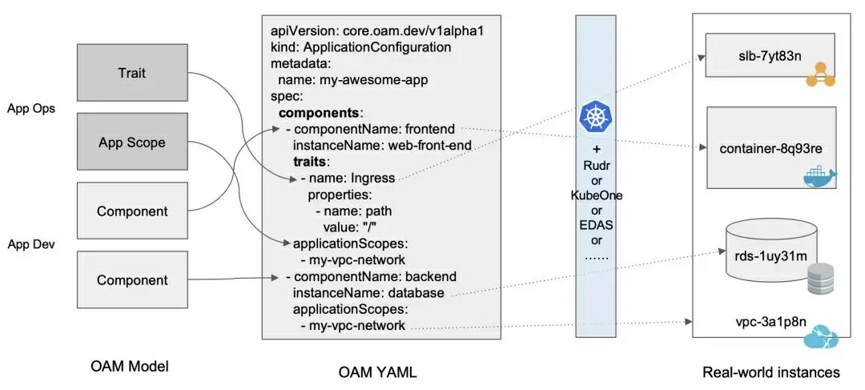

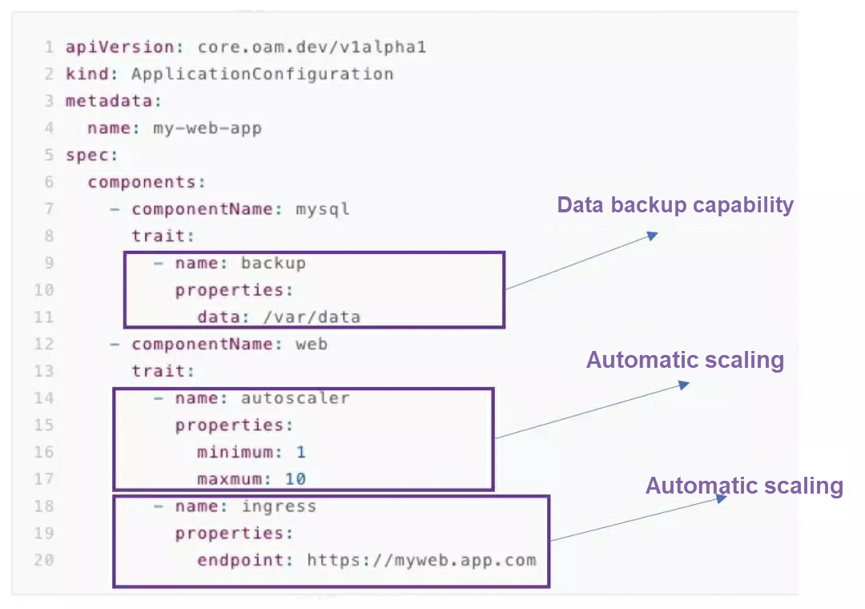

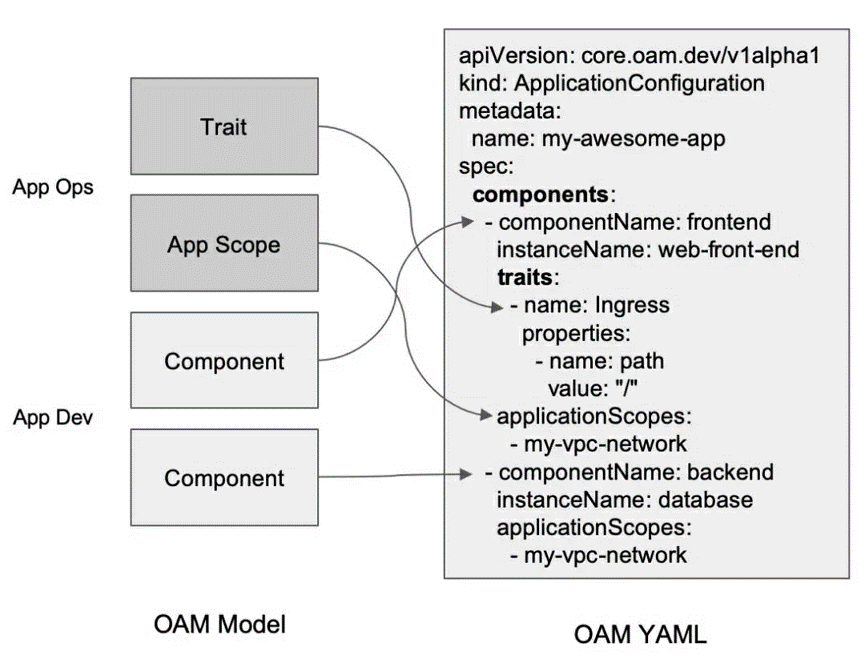

As shown in the following figure, an OAM application configuration follows Kubernetes API conventions, and a single configuration describes all the resources of an application.

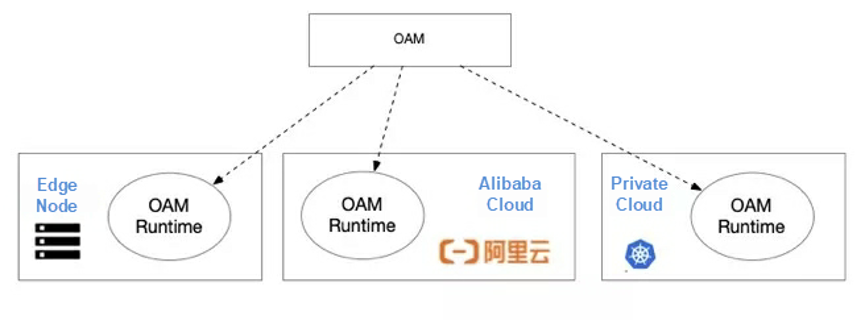

OAM application definitions do not limit your underlying platform and actual runtime. You can run the application on a platform other than Kubernetes, including ECS, Docker, and FaaS (Serverless). This means you are not locked into a single vendor. If your application meets the conditions of a serverless application, the OAM description of the application can naturally run in a serverless runtime environment that supports OAM specifications.

In different environments that support OAM, you can use a unified application description to achieve identical application delivery. As shown in the following figure, users only need to describe the unified application configuration to achieve the same application experience in different environments.

The popularity of cloud native has pushed forward the adoption of infrastructure as code. As an infrastructure platform, Kubernetes uses declarative APIs, so users are accustomed to describing the resources they need in YAML files. This is infrastructure as code in practice. In addition, OAM provides unified definitions for infrastructure resources that native Kubernetes does not cover, so you can use that infrastructure by writing standard OAM YAML (code).

Today, the OAM implementation of the Alibaba Cloud resource orchestration product ROS provides a classic example. You can use OAM configurations to provision infrastructure resources in the cloud.

Let's look at a real-world example. Assume that we want to provision NAS persistent storage, which consists of two ROS resources: NAS FileSystem and NAS MountTarget.

```yaml
apiVersion: core.oam.dev/v1alpha1
kind: ComponentSchematic
metadata:
  name: nasFileSystem
  annotations:
    version: v1.0.0
    description: >
      component schematic that describes the nas filesystem.
spec:
  workloadType: ros.aliyun.com/v1alpha1.NAS_FileSystem
  workloadSettings:
    ProtocolType: NFS
    StorageType: Performance
    Description: xxx
  expose:
    - name: fileSystemOut
---
apiVersion: core.oam.dev/v1alpha1
kind: ComponentSchematic
metadata:
  name: nasMountTarget
  annotations:
    version: v1.0.0
    description: >
      component schematic that describes the nas filesystem.
spec:
  workloadType: ros.aliyun.com/v1alpha1.NAS_MountTarget
  workloadSettings:
    NetworkType: VPC
    AccessGroupName: xxx
    FileSystemId: ${fileSystemOut.FileSystemId}
  consume:
    - name: fileSystemOut
  expose:
    - name: moutTargetOut
---
apiVersion: core.oam.dev/v1alpha1
kind: ApplicationConfiguration
metadata:
  name: nas-demo
spec:
  components:
    - componentName: nasMountTarget
      traits:
        - name: DeletionPolicy
          properties: "Retain"
    - componentName: nasFileSystem
      traits:
        - name: DeletionPolicy
          properties: "Retain"
```

In the definition, you can see that NAS MountTarget uses FileSystemId that is output by NAS FileSystem, and ultimately, this YAML file is translated by ROS OAM Controller into the template file of the ROS resource stack. In this way, the cloud resources are pulled up.

The OAM implementation of ROS will soon be made open source, so stay tuned!

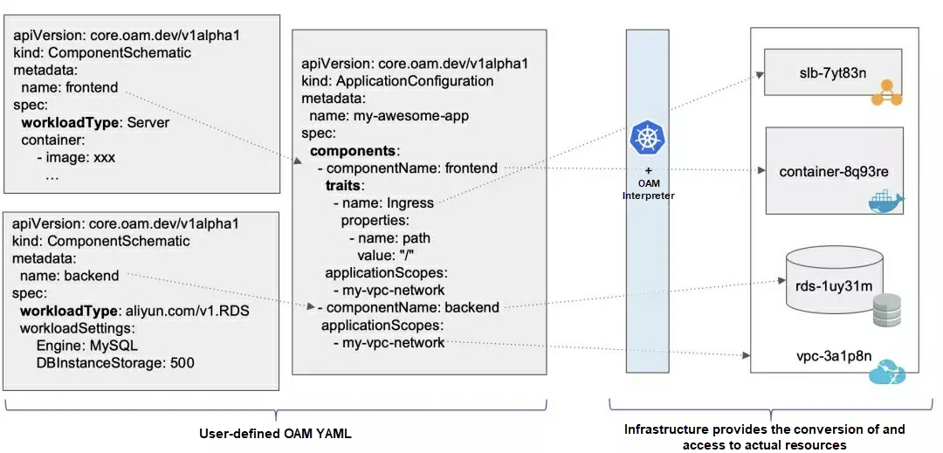

User requirements concern the application architecture, rather than the specific infrastructure resources that are used.

OAM addresses such requirements by describing the WorkloadType of an application to define the application architecture. This WorkloadType can be a simple stateless application "server", which means that the application can be replicated, accessed, and persistently run as a daemon. Also, the WorkloadType can be a database-type application "RDS", which starts an RDS instance in the cloud.

A user-defined Component selects a specific architecture template by specifying its WorkloadType, and multiple Components together constitute a complete architecture.
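To make the idea of selecting an architecture through WorkloadType more concrete, here is a minimal sketch of a stateless web Component, written in the same `core.oam.dev/v1alpha1` style as the NAS example earlier in this article. The component name, image, and port are hypothetical, and the layout of the `containers` field is an assumption based on the v1alpha1 draft of the spec, so check it against the OAM version that your platform supports.

```yaml
# Hypothetical component: the name, image, and port are illustrative only.
apiVersion: core.oam.dev/v1alpha1
kind: ComponentSchematic
metadata:
  name: web-frontend
  annotations:
    version: v1.0.0
    description: >
      component schematic that describes a stateless web service.
spec:
  # "Server" selects the replicable, accessible, long-running architecture.
  workloadType: core.oam.dev/v1alpha1.Server
  # Assumed field layout for container-based workloads.
  containers:
    - name: web
      image: example/web-frontend:1.0.0
      ports:
        - name: http
          containerPort: 8080
          protocol: TCP
```

Moving this component to a different architecture would then mostly mean changing `workloadType` and the settings that go with it, rather than rewriting the description around a particular infrastructure product.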

When using OAM application definitions, users are concerned with the architecture, not the specific infrastructure.

As shown in the following figure, an OAM application description allows you to specify that the application requires public network access, rather than naming a Server Load Balancer (SLB) instance. Similarly, you can specify that a component of the application is a database component.

Users want O&M capabilities to be part of the application lifecycle, and OAM does exactly this. To define the O&M capabilities used by a Component, you can bind a Trait. In this way, O&M capabilities are added to the application description to facilitate the central management of the underlying infrastructure components.

As shown in the following figure, an application contains two components: a web service and a database. The database component is able to back up data, while the web service can be accessed and elastically scaled. These capabilities are managed by the OAM interpreter (the OAM implementation layer) in a centralized manner. This eliminates the need to worry about any O&M capability binding conflicts.

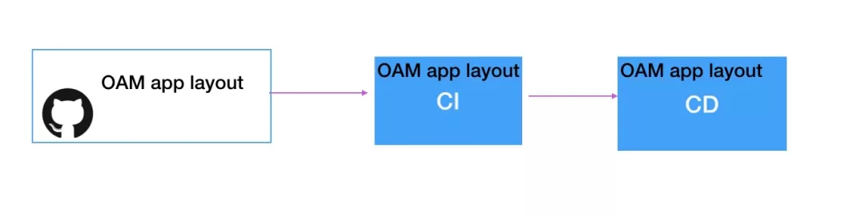
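The binding of O&M capabilities to components could be written roughly as follows. This is only a sketch: the component names, the trait names (`ingress`, `auto-scaler`, `backup`), and every property key are assumptions chosen for illustration, and the traits actually available depend on what your OAM platform provides.

```yaml
# Sketch only: component names, trait names, and property keys are assumptions.
apiVersion: core.oam.dev/v1alpha1
kind: ApplicationConfiguration
metadata:
  name: web-with-database
spec:
  components:
    - componentName: web-frontend
      traits:
        # Makes the web service reachable from outside the cluster.
        - name: ingress
          properties:
            hostname: example.com
            path: /
        # Lets the web service scale elastically between two bounds.
        - name: auto-scaler
          properties:
            minimum: 2
            maximum: 10
    - componentName: database
      traits:
        # Adds scheduled data backup to the database component.
        - name: backup
          properties:
            schedule: "0 3 * * *"
```

Because every trait is declared next to the component it applies to, the OAM implementation layer can see all bindings in one place and validate them before anything is deployed.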

Just as Docker images resolve long-standing inconsistencies between development, testing, and production environments, unified and standardized OAM application descriptions make the integration of different systems transparent and standardized.

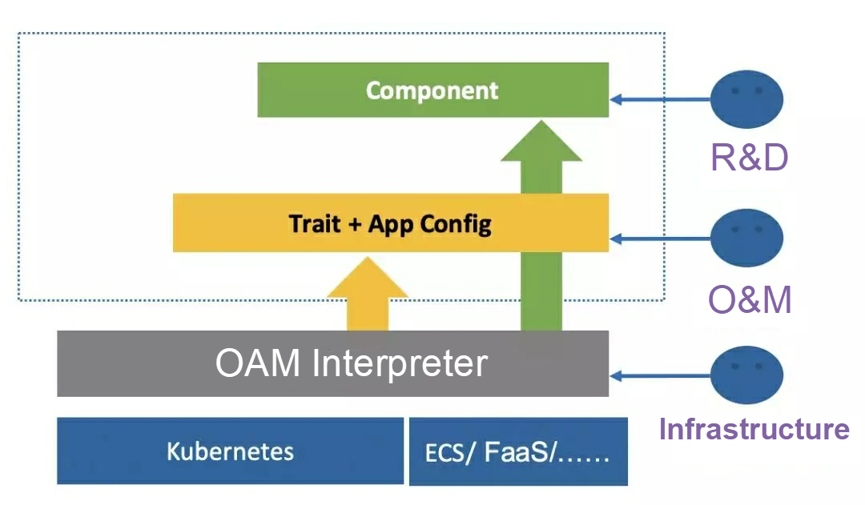

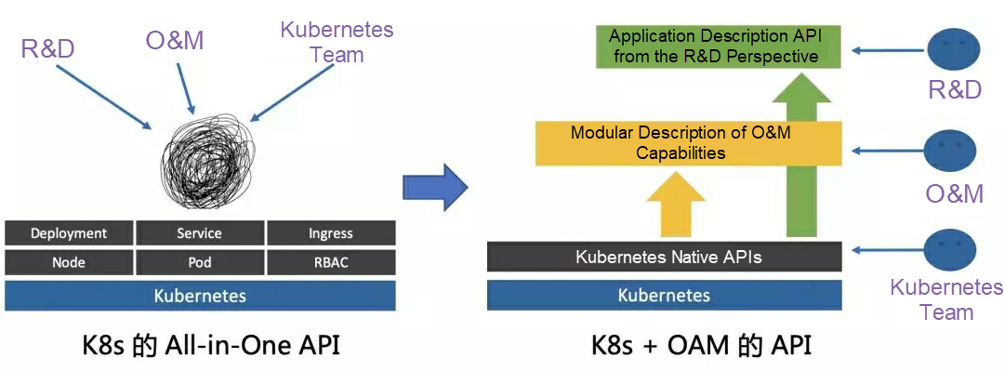

OAM also decouples the originally complex all-in-one Kubernetes APIs to a certain degree and divides responsibilities among three main roles: application R&D (manages Components), application O&M (combines Components and binds Traits in the AppConfig file), and infrastructure provider (maps OAM capabilities to actual infrastructure components). Dividing the work this way simplifies the duties of each role and gives each role greater focus and specialization.

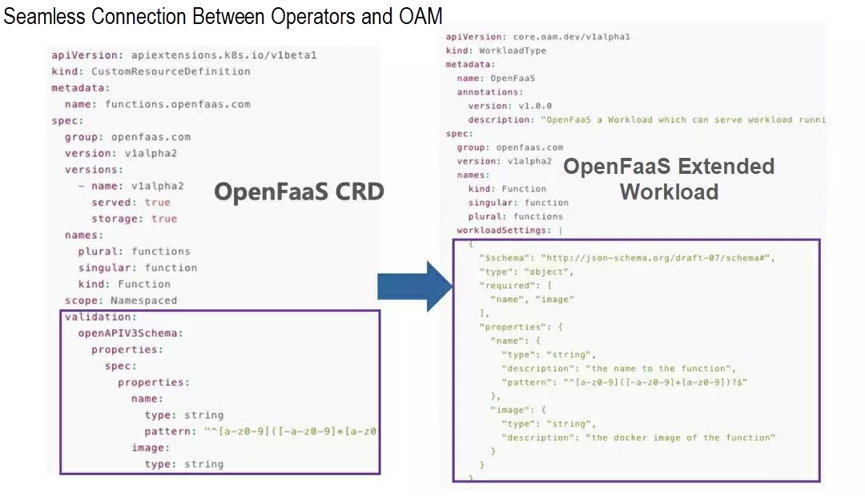

OAM application definitions are flexible and extensible. You can define different types of workloads by extending "Workload". You can also describe your own O&M capabilities by using custom Traits. In addition, both of these objects integrate well with the CRD + Operator extension methods in existing Kubernetes systems.
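As an illustration of a custom Trait, the sketch below declares a hypothetical `backup` trait for database-type workloads. The trait name, the `example.com/v1alpha1.Database` workload type, and the `schedule` property are all invented for this example. The `appliesTo` field and the JSON Schema under `properties` follow the v1alpha1 draft of the spec as far as I recall it, so treat the exact layout as an assumption.

```yaml
# Hypothetical custom trait: the name, workload type, and schema are illustrative only.
apiVersion: core.oam.dev/v1alpha1
kind: Trait
metadata:
  name: backup
  annotations:
    version: v1.0.0
    description: >
      trait that adds scheduled data backup to a component.
spec:
  # Workload types that this trait may be bound to (assumed type name).
  appliesTo:
    - example.com/v1alpha1.Database
  # JSON Schema for the properties that an operator may set on the trait.
  properties: |
    {
      "$schema": "http://json-schema.org/draft-07/schema#",
      "type": "object",
      "required": ["schedule"],
      "properties": {
        "schedule": {
          "type": "string",
          "description": "cron expression that controls when backups run"
        }
      }
    }
```

On a Kubernetes-based platform, a definition like this would typically be backed by a CRD and an Operator that carries out the backup, which is where the CRD + Operator integration comes in.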

Through this separation of concerns, OAM divides applications into three layers: R&D, O&M, and infrastructure. It also lets R&D Workloads and O&M Traits be combined as modules, which significantly improves reusability.

As the number of modular Workloads and Traits increases, a market for these components will be formed. On this market, users will be able to quickly find architectures (Workloads) suitable for their applications and the required O&M capabilities (Traits) in CRD Registry and other similar registries. This makes building applications effortless.

I believe that, in the future, application construction will become increasingly simple and infrastructure choices will be automatically matched to users' architecture requirements. In this way, users will be able to truly enjoy the benefits of cloud platforms and quickly reuse existing modular capabilities. Going forward, OAM will become the inevitable choice for cloud native applications.
