By Song Shuangyong, Wang Chao, Chen Haiqing and Chen Huan

When answering customers' frequently asked questions, a good intelligent customer service robot also needs to provide multi-dimensional, human-like services, including assistance, shopping guidance, voice chat, and entertainment. These capabilities improve customers' overall satisfaction with the robot and may even make customers feel that they are talking to a real person. Emotion analysis technology plays a vital role in giving robots such human-like capabilities.

This article discusses the principles of emotion analysis models, their practical applications, and their performance. Based on the forms of combined human-machine service in intelligent customer service systems, it also summarizes the application scenarios of emotion analysis technology along five dimensions.

Human-machine interaction has long been an important research area in natural language processing. With recent progress in human-machine interaction technologies, dialog systems are increasingly used in real-world applications. Among these, intelligent customer service systems have drawn the attention of many enterprises, especially large and medium-sized ones. Intelligent customer service systems aim to reduce the workload of traditional human customer service staff, so that human staff can provide higher-quality service when handling specific questions or specific users. Combining intelligent and human customer service therefore improves both overall service efficiency and service quality. Many large and medium-sized enterprises have built their own intelligent customer service systems, such as Fujitsu's FRAP, Jingdong's JIMI, and Alibaba's AliMe.

Building an intelligent customer service system requires industry data as well as technologies such as large-scale knowledge processing and natural language understanding. The first intelligent customer service systems were designed to answer frequently asked business questions. This required business experts to carefully curate answers to those questions, and the core technical capability was accurately matching the text of user questions to knowledge points. Newer intelligent customer service systems cover a wider range of business scenarios: in addition to answering frequently asked questions, they must also support intelligent shopping guidance, obstacle prediction, intelligent voice chat, daily life assistance, and entertainment interaction. Emotion analysis is an important human-like capability that has been applied in many of these scenarios, and it plays a vital role in making these systems more human-like.

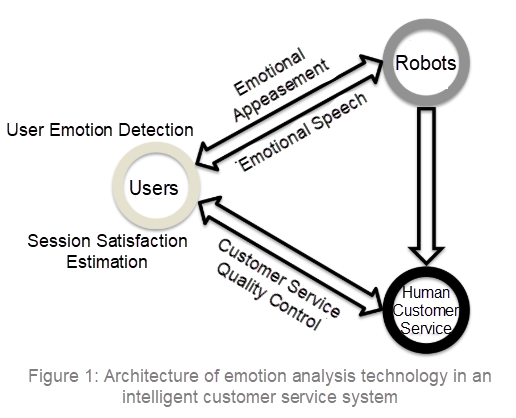

Figure 1 shows the classic intelligent customer service model based on human-machine combination. Users are served by robots or by human customer service staff through dialog. While being served by a robot, a user can be transferred to human staff either on explicit request or when the robot automatically recognizes that a transfer is needed. Throughout the complete customer service model shown in Figure 1, emotion analysis technology supports multi-dimensional capabilities.

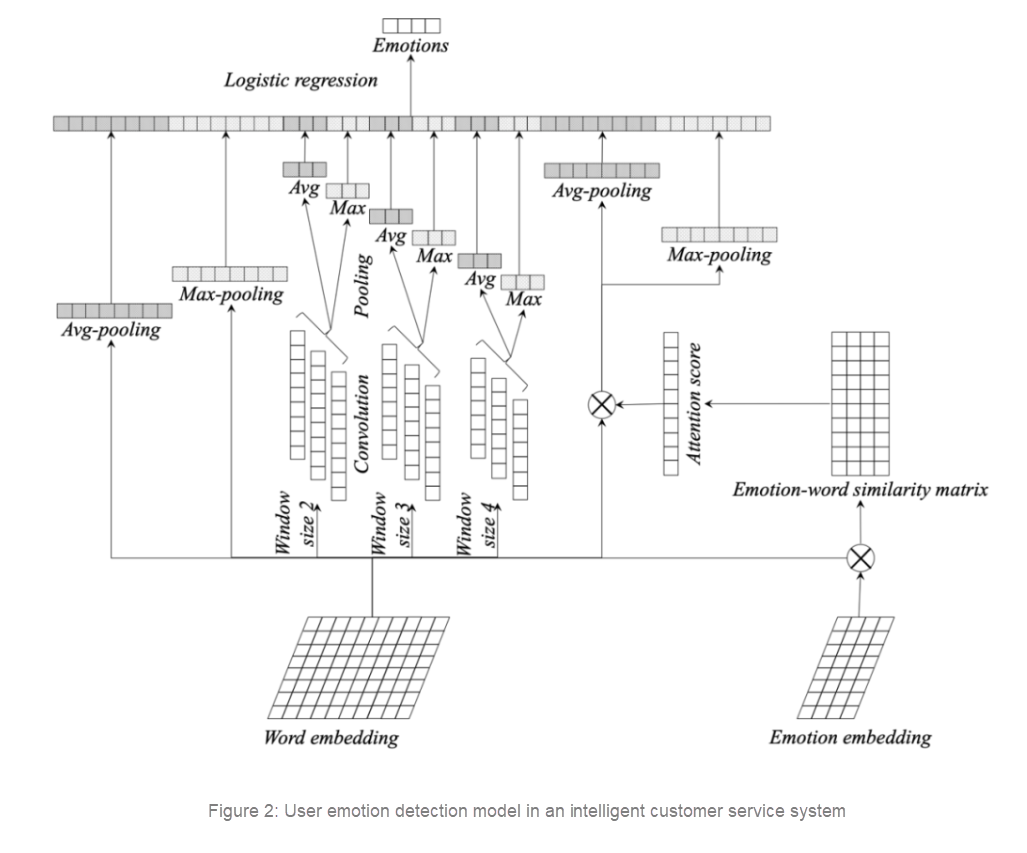

User emotion detection is the foundation and core of many emotion-related applications. This article proposes an emotion classification model that integrates word-level, phrase-level (n-gram), and sentence-level semantic features. The model identifies emotions such as "anxiety", "anger", and "gratitude" in user utterances in the intelligent customer service system. Extracting semantic features at different levels is a common technique in related work, and combining features from different levels effectively improves final emotion identification performance. Figure 2 shows the architecture of the emotion classification model.

Shen et al. [3] proposed SWEM (Simple Word-Embedding-based Models), which applies simple pooling operations to word embeddings to obtain sentence-level semantic features. Classification and text matching models trained on these features perform nearly as well as classic convolutional neural network (CNN) and recurrent neural network (RNN) models.

In our model, we use the feature extraction capability of the SWEM model to obtain sentence semantic features from user questions and use these features in the emotion classification model for user questions.
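The SWEM pooling step can be sketched in a few lines of NumPy. This is a minimal sketch that assumes the SWEM-concat variant (average pooling and max pooling, concatenated); the article does not say which SWEM pooling variant is used, and the function name `swem_features` and the toy embeddings are hypothetical.

```python
import numpy as np

def swem_features(word_vectors: np.ndarray) -> np.ndarray:
    """SWEM-concat sentence features (assumed variant).

    word_vectors: array of shape (num_words, embed_dim) for one sentence.
    Returns a vector of shape (2 * embed_dim,): average pooling, then max pooling.
    """
    avg_pooled = word_vectors.mean(axis=0)  # SWEM-aver: mean of each embedding dimension
    max_pooled = word_vectors.max(axis=0)   # SWEM-max: max of each embedding dimension
    return np.concatenate([avg_pooled, max_pooled])

# Toy sentence: 3 words with random 4-dimensional embeddings (placeholders for trained vectors).
rng = np.random.default_rng(0)
sentence = rng.normal(size=(3, 4))
features = swem_features(sentence)
print(features.shape)  # (8,)
```

Pooling has no trainable parameters of its own, so this sentence-level branch adds almost no cost on top of the word embeddings.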

In many scenarios, a conventional CNN model is used to extract n-gram (phrase-level) semantic features, where n is the convolution window size. Based on experience, we use window sizes of 2, 3, and 4, with 16 convolution kernels for each window size, to extract rich n-gram semantic information from the original word vector matrix.
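A NumPy sketch of the multi-window convolution follows. It uses the window sizes (2, 3, 4) and the 16 kernels per window stated above; the ReLU activation, max-over-time pooling, random weights, and the name `ngram_cnn_features` are assumptions for illustration, since the article does not specify them.

```python
import numpy as np

def ngram_cnn_features(word_vectors, filters_by_window):
    """Extract n-gram features with one convolution bank per window size.

    word_vectors: (num_words, embed_dim) word vector matrix of one sentence.
    filters_by_window: {n: (weights, bias)} with weights of shape
        (num_kernels, n, embed_dim) and bias of shape (num_kernels,).
    Returns the concatenated max-over-time pooled features.
    """
    num_words = word_vectors.shape[0]
    pooled = []
    for n, (weights, bias) in sorted(filters_by_window.items()):
        if num_words < n:
            raise ValueError(f"sentence shorter than window size {n}")
        # All n-word windows: shape (num_windows, n, embed_dim).
        windows = np.stack([word_vectors[i:i + n] for i in range(num_words - n + 1)])
        # Convolution = dot product of each window with each kernel -> (num_windows, num_kernels).
        feature_maps = np.einsum("wne,kne->wk", windows, weights) + bias
        feature_maps = np.maximum(feature_maps, 0.0)  # ReLU (assumed)
        pooled.append(feature_maps.max(axis=0))       # max over time -> (num_kernels,)
    return np.concatenate(pooled)

embed_dim, num_kernels = 4, 16
rng = np.random.default_rng(0)
filters = {n: (rng.normal(size=(num_kernels, n, embed_dim)), np.zeros(num_kernels))
           for n in (2, 3, 4)}
sentence = rng.normal(size=(6, embed_dim))  # a 6-word toy sentence
features = ngram_cnn_features(sentence, filters)
print(features.shape)  # (48,) = 3 window sizes x 16 kernels
```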

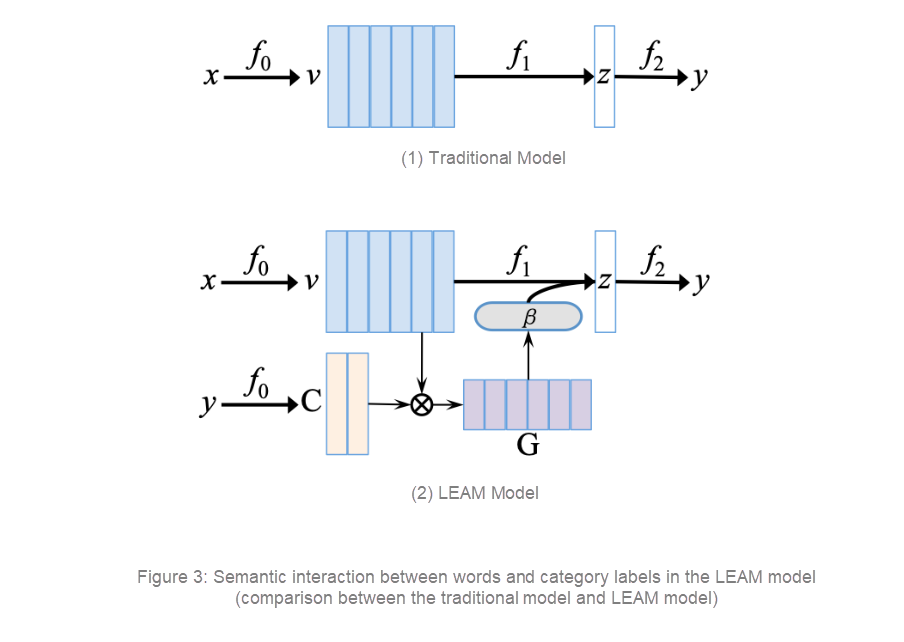

We use LEAM (Label-Embedding Attentive Model) [1] to extract word semantic features. LEAM embeds both words and category labels in the same vector space and classifies text based on this joint representation. By modeling the semantic interaction between words and category labels, LEAM can explore word semantics more deeply. Figure 3 (2) shows the semantic interaction between category labels and words, and compares LEAM with the traditional model.

Finally, the semantic features from the different levels are merged and fed into the last layer of the model, where a logistic regression model is trained for final classification.
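The core idea of word-label interaction can be sketched as attention driven by word-label cosine similarity. This is a simplified, hypothetical sketch: the original LEAM additionally applies a convolution over neighboring words in the compatibility matrix and learns the label embeddings jointly with the classifier, both omitted here; the toy vectors stand in for trained embeddings.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def label_attention(word_vectors, label_vectors):
    """Simplified LEAM-style attention.

    word_vectors:  (num_words, d) word embeddings
    label_vectors: (num_labels, d) label embeddings in the same space
    Returns the attention-weighted text vector (d,) and the word weights.
    """
    # Cosine compatibility between every word and every label: (num_words, num_labels).
    w_norm = word_vectors / np.linalg.norm(word_vectors, axis=1, keepdims=True)
    l_norm = label_vectors / np.linalg.norm(label_vectors, axis=1, keepdims=True)
    compatibility = w_norm @ l_norm.T
    # Score each word by its strongest match to any label.
    word_scores = compatibility.max(axis=1)
    weights = softmax(word_scores)
    return weights @ word_vectors, weights

# Toy example: 3 labels (e.g. "anxiety", "anger", "gratitude") as unit vectors.
labels = np.eye(3)
words = np.array([[1.0, 0.0, 0.0],   # aligned with the first label
                  [1.0, 1.0, 1.0],   # equally related to all labels
                  [0.2, 0.1, 1.0]])  # close to the third label
text_vec, weights = label_attention(words, labels)
```

Words that are semantically close to some label receive larger weights, which is how label information steers the word-level representation.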
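The fusion step can be sketched as concatenation followed by a softmax (multinomial logistic regression) layer. The feature sizes, the number of classes, and the random weights below are placeholders; a trained model would learn `W` and `b`.

```python
import numpy as np

def classify(feature_groups, W, b):
    """Concatenate multi-level features and apply a softmax (logistic regression) layer."""
    x = np.concatenate(feature_groups)
    logits = W @ x + b
    exp = np.exp(logits - logits.max())  # subtract max for numerical stability
    return exp / exp.sum()

rng = np.random.default_rng(0)
sentence_feat = rng.normal(size=8)   # sentence-level features (placeholder size)
ngram_feat = rng.normal(size=48)     # n-gram features (placeholder size)
word_feat = rng.normal(size=4)       # word-level features (placeholder size)
num_classes = 7                      # placeholder number of emotion classes
W = rng.normal(scale=0.1, size=(num_classes, 8 + 48 + 4))
b = np.zeros(num_classes)
probs = classify([sentence_feat, ngram_feat, word_feat], W, b)
predicted_class = int(probs.argmax())
```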

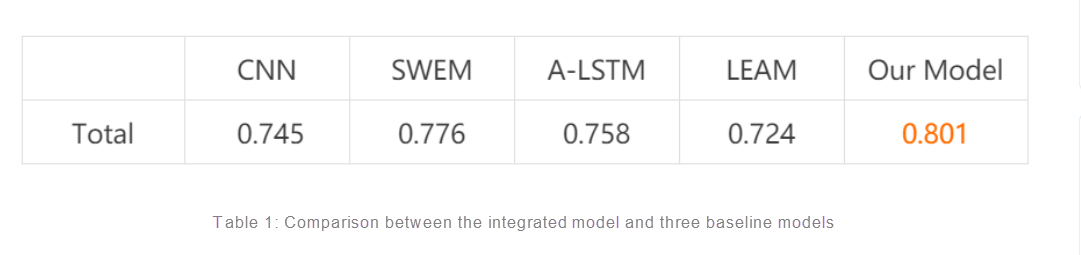

Table 1 compares real online evaluation results of our integrated model and three baseline models, each of which considers only a single level of features.

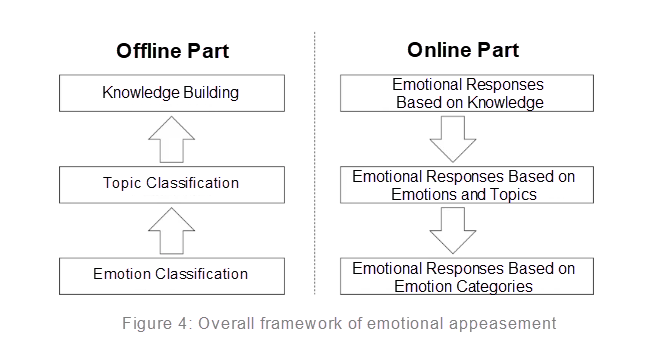

This article proposes an emotional appeasement framework that consists of an offline part and an online part, as shown in Figure 4.

Offline Part

First, user emotions need to be identified. We selected seven common user emotions: fear, abuse, disappointment, grievance, anxiety, anger, and gratitude.

Next, we identify the topics of user questions. We asked business experts to summarize 35 common topics of user expressions, such as "complaints about service quality" and "feedback on slow logistics". The topic identification model uses the same classification model as the one used for emotion identification.

Knowledge building collects frequently asked questions in which users express emotions that require appeasement, so that these expressions can be addressed more precisely. We did not merge these specific user questions into the topic dimension described above, because topic-level processing is relatively coarse-grained. Instead, we answer these frequent, specific questions in a centralized manner to achieve better responses.

Business experts write appeasing responses for three dimensions: emotions, "emotions + topics", and frequently asked questions. In the frequently-asked-question dimension, each Q&A pair is called a piece of knowledge.

Online Part

Knowledge-based appeasement aims to appease users who express specific emotional content. We use a text matching model to evaluate the match between a user's question and the questions in our knowledge base. If our knowledge base contains a question that is similar in meaning to the user's question, the corresponding response is directly returned to the user.

Emotional responses based on emotions and topics consider both the emotional and topic information contained in user expressions and then give users appropriate emotional responses. Compared with knowledge-based appeasement, emotional response based on emotions and topics is a more versatile method.

Emotional responses based on emotion categories consider only the emotional factors in users' expressions and give users appropriate appeasing responses. This method supplements and supports the two preceding methods, and its responses are more general.
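The three online methods can be read as a cascade from the most specific response to the most general one. The sketch below assumes that priority order, a similarity threshold of 0.8, and a toy word-overlap (Jaccard) similarity standing in for the article's text matching model; `AppeasementResources`, `appease`, and the sample responses are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

@dataclass
class AppeasementResources:
    """Hypothetical containers for the expert-written responses."""
    knowledge: List[Tuple[str, str]]              # (FAQ question, response)
    by_emotion_topic: Dict[Tuple[str, str], str]  # (emotion, topic) -> response
    by_emotion: Dict[str, str]                    # emotion -> response

def jaccard(a: str, b: str) -> float:
    """Toy word-overlap similarity standing in for the text matching model."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0

def appease(question: str, emotion: str, topic: str, res: AppeasementResources,
            similarity: Callable[[str, str], float] = jaccard,
            threshold: float = 0.8) -> Optional[str]:
    # 1. Knowledge-based appeasement: reuse the answer of a sufficiently similar FAQ.
    scored = [(similarity(question, faq), answer) for faq, answer in res.knowledge]
    if scored:
        best_score, best_answer = max(scored, key=lambda pair: pair[0])
        if best_score >= threshold:
            return best_answer
    # 2. Response based on emotion + topic.
    if (emotion, topic) in res.by_emotion_topic:
        return res.by_emotion_topic[(emotion, topic)]
    # 3. Emotion-only response (None if no appeasement is defined for this emotion).
    return res.by_emotion.get(emotion)

res = AppeasementResources(
    knowledge=[("why is my parcel still not here", "Sorry for the wait! Let me check the parcel for you.")],
    by_emotion_topic={("anxiety", "slow logistics"): "I understand your worry. I will urge the courier right away."},
    by_emotion={"anger": "I am really sorry for the trouble. Let me help you sort this out."},
)
print(appease("the courier is so slow", "anxiety", "slow logistics", res))
```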

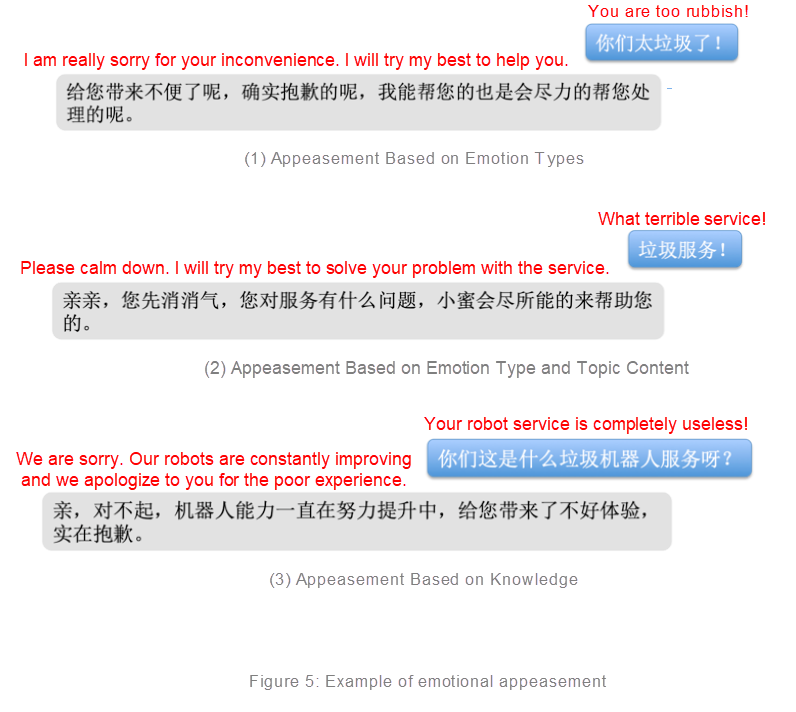

Figure 5 shows three examples of online emotional appeasement, which correspond to the preceding three response methods.

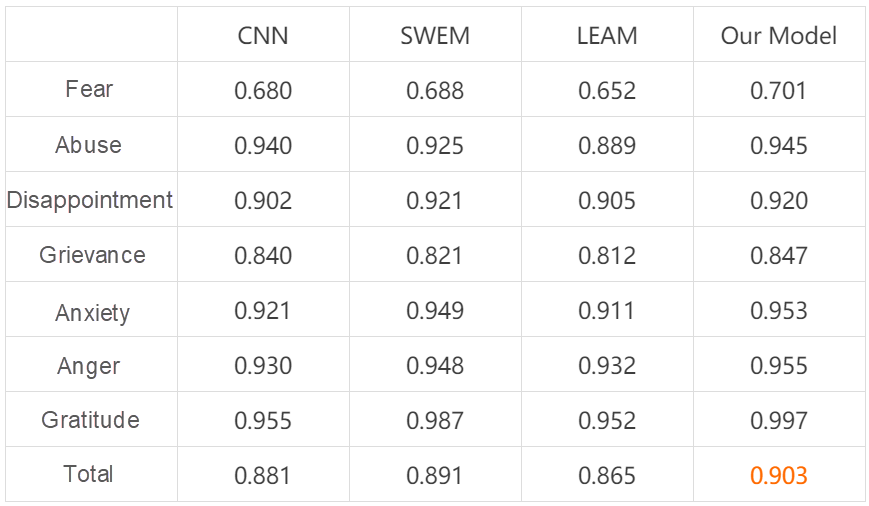

Table 2: Comparison of emotion classification performance

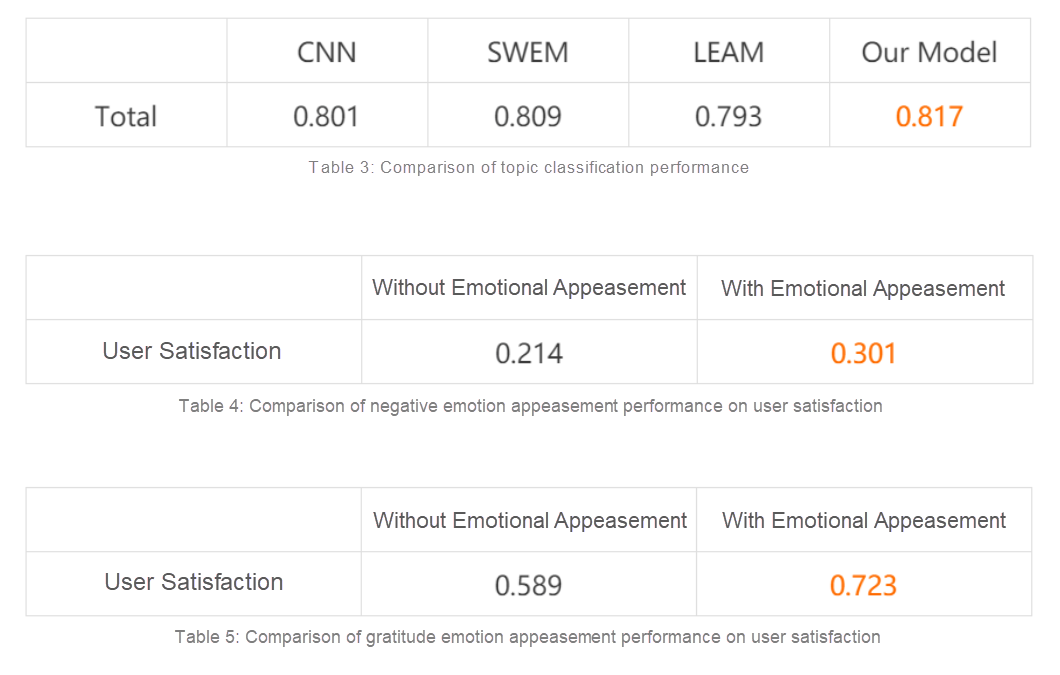

Table 2 compares the performance of the emotion classification models, including the performance for each emotion category and the overall performance. Table 3 compares the performance of the topic classification models. Table 4 compares user satisfaction before and after emotional appeasement is added for several negative emotions. Table 5 makes the same comparison for the gratitude emotion.

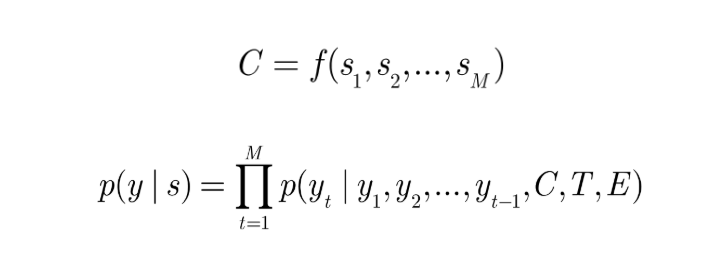

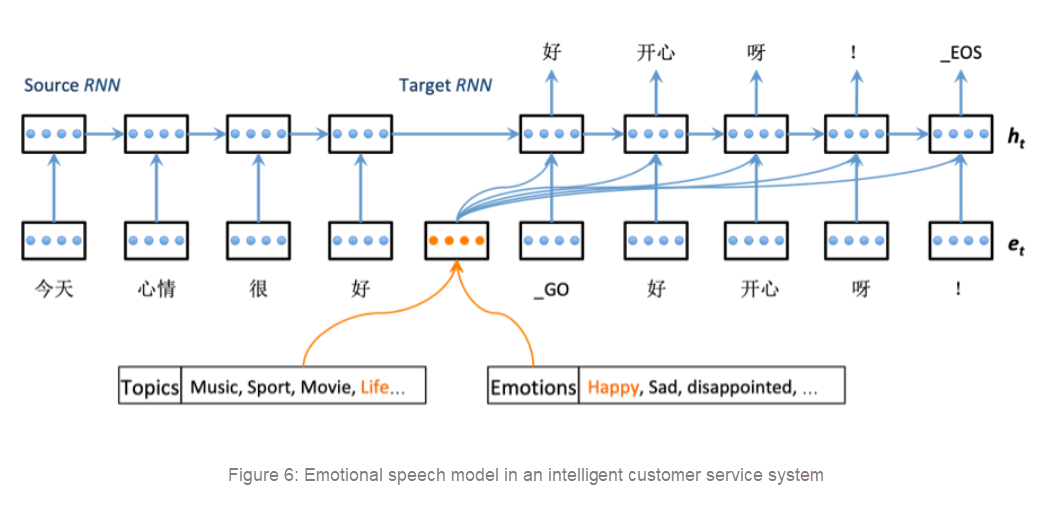

Figure 6 shows the emotional speech model in an intelligent customer service system. The source RNN serves as the encoder, mapping the source sequence (s) to an intermediate semantic vector (C). The target RNN serves as the decoder, generating the target sequence (y) from the semantic encoding (C) together with the emotion representation (E) and topic representation (T) that we set. In Figure 6, s and y are the word sequences of the sentences "I'm in a good mood today" and "I'm so happy", respectively.
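A minimal NumPy sketch of an encoder-decoder whose decoder is conditioned on an emotion vector (E) and a topic vector (T) is shown below. The article does not specify how E and T enter the decoder; this sketch assumes they are concatenated to the decoder input at every step, passes the encoder's last state to the decoder as its initial state, and uses untrained random weights, a hand-written GRU cell, and greedy decoding for a fixed number of steps. All names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class GRUCell:
    """Minimal GRU cell with random weights (illustration only, not trained)."""

    def __init__(self, input_dim, hidden_dim, rng):
        # Index 0: update gate, 1: reset gate, 2: candidate state.
        self.W = rng.normal(scale=0.1, size=(3, hidden_dim, input_dim))
        self.U = rng.normal(scale=0.1, size=(3, hidden_dim, hidden_dim))
        self.b = np.zeros((3, hidden_dim))

    def __call__(self, x, h):
        z = sigmoid(self.W[0] @ x + self.U[0] @ h + self.b[0])
        r = sigmoid(self.W[1] @ x + self.U[1] @ h + self.b[1])
        h_tilde = np.tanh(self.W[2] @ x + self.U[2] @ (r * h) + self.b[2])
        return (1 - z) * h + z * h_tilde

rng = np.random.default_rng(0)
vocab_size, emb_dim, hid_dim, emo_dim, topic_dim = 10, 8, 16, 4, 4
embedding = rng.normal(size=(vocab_size, emb_dim))
encoder = GRUCell(emb_dim, hid_dim, rng)
decoder = GRUCell(emb_dim + emo_dim + topic_dim, hid_dim, rng)
W_out = rng.normal(scale=0.1, size=(vocab_size, hid_dim))

def encode(source_ids):
    """Run the source RNN; its last state is the semantic vector C."""
    h = np.zeros(hid_dim)
    for token in source_ids:
        h = encoder(embedding[token], h)
    return h

def greedy_decode(C, emotion_vec, topic_vec, bos_id=0, max_len=5):
    """Decode max_len tokens, feeding [previous word; E; T] at every step."""
    h, token, output = C, bos_id, []  # the decoder starts from C
    for _ in range(max_len):
        x = np.concatenate([embedding[token], emotion_vec, topic_vec])
        h = decoder(x, h)
        token = int(np.argmax(W_out @ h))  # argmax of logits equals argmax of softmax
        output.append(token)
    return output

C = encode([3, 5, 7])            # toy token ids for a source sentence
E = rng.normal(size=emo_dim)     # placeholder emotion representation
T = rng.normal(size=topic_dim)   # placeholder topic representation
reply_ids = greedy_decode(C, E, T)
```

Because E and T are part of every decoder input, swapping in a different emotion vector can change the generated reply for the same source sentence.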

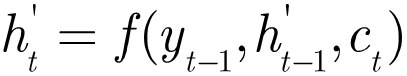

Generally, the last state of the encoder is passed to the decoder as its initial state, so that the decoder retains information from the encoder. In addition, the encoder and decoder often use different RNNs, because questions and answers are expressed differently. The formula is as follows:

Although Seq2Seq-based dialog generation models achieve good results, in practice they may generate safe but meaningless responses. This is because the decoder receives only the last encoder state (C). This mechanism handles long-term dependencies poorly: as new words are generated, the decoder's state gradually weakens and may even lose the source sequence information. An effective remedy is the attention mechanism [2].

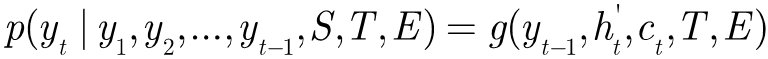

In a Seq2Seq framework that uses the attention mechanism, the probability that the output layer of the decoder predicts a word based on the input is as follows:

Here, ht is the hidden state output by the decoder at time t. ht' is calculated as follows:

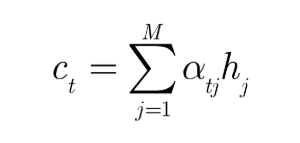

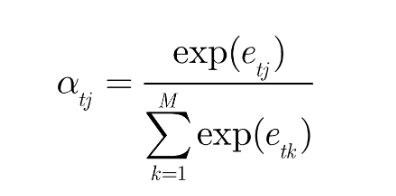

Each hidden state hj output by the encoder is given a different weight, as follows:

The weight atj of each encoder hidden state hj is calculated as follows:

The training objective and the search strategy used for prediction are the same as those of the traditional RNN model.
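A single attention step can be sketched as follows. This assumes the Luong-style formulation with a dot-product score, an attentional state ht' = tanh(Wc[ct; ht]), and a softmax output layer; the article's own formulas (shown as images) may use a different scoring function, and the weights here are random placeholders.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def attention_step(h_t, encoder_states, W_c, W_s):
    """One decoding step with Luong-style dot-product attention.

    h_t:            decoder hidden state at time t, shape (d,)
    encoder_states: encoder hidden states h_j stacked row-wise, shape (src_len, d)
    W_c:            projection for the attentional state, shape (d, 2d)
    W_s:            output projection, shape (vocab_size, d)
    """
    scores = encoder_states @ h_t                          # score(h_t, h_j) = h_t . h_j
    a_t = softmax(scores)                                  # weights a_tj over source positions
    c_t = a_t @ encoder_states                             # context vector: sum_j a_tj * h_j
    h_t_prime = np.tanh(W_c @ np.concatenate([c_t, h_t]))  # attentional state h_t'
    p_t = softmax(W_s @ h_t_prime)                         # p(y_t | y_<t, x)
    return p_t, a_t

rng = np.random.default_rng(0)
d, src_len, vocab_size = 6, 5, 12
encoder_states = rng.normal(size=(src_len, d))
h_t = rng.normal(size=d)
W_c = rng.normal(scale=0.1, size=(d, 2 * d))
W_s = rng.normal(scale=0.1, size=(vocab_size, d))
p_t, a_t = attention_step(h_t, encoder_states, W_c, W_s)
```

The decoder now sees a fresh, weighted summary of all encoder states at every step instead of relying on C alone, which is what addresses the long-term dependency problem described above.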

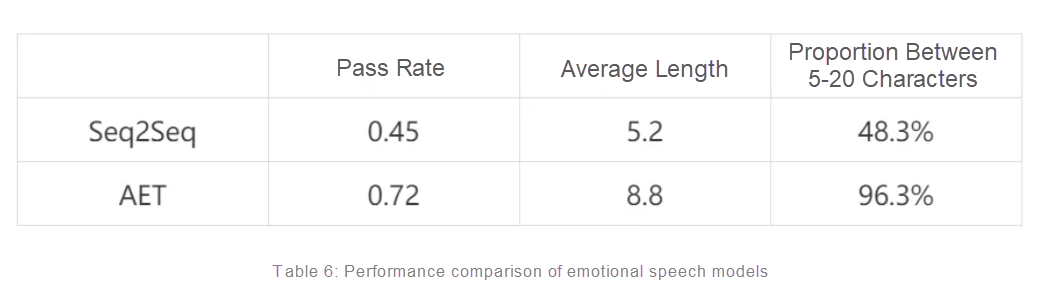

After training, the model was tested on real user questions, and business experts reviewed the results: 72% of the generated answers were judged satisfactory. The average response length was 8.8 characters, which meets AliMe's requirement for response length in voice chat scenarios. Table 6 compares the Attention-based Emotional & Topical (AET) Seq2Seq model proposed in this article with the traditional Seq2Seq model, focusing on the content pass rate and response length. With emotional information added, responses are richer than those of the traditional Seq2Seq model, and a higher proportion of responses fall within the optimal length range for voice chat (5 to 20 characters). Together, these effects significantly increase the overall pass rate of responses.

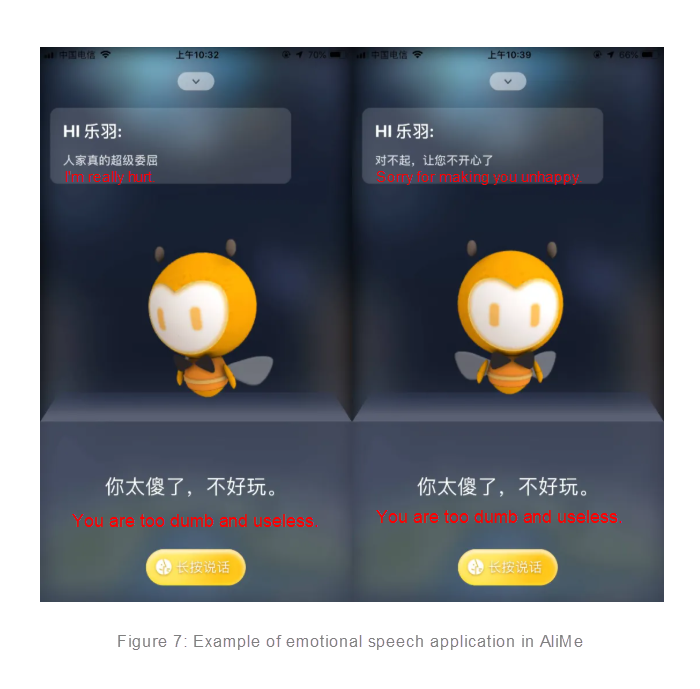

Figure 7 shows an application of the emotional speech model in AliMe. Both responses in Figure 7 are generated by the emotional model. When a user inputs "You are silly. No fun.", the model can generate different responses depending on the topic and emotion, which makes its responses more diverse. The two responses in Figure 7 are generated for the emotions "grievance" and "regret", respectively.

The customer service quality control function described in this article detects service content that may cause problems in dialogues between human customer service staff and customers. It aims to better identify problems in human customer service and help customer service representatives improve, which ultimately improves service quality and customer satisfaction. To the authors' knowledge, there is currently no publicly available AI algorithm model for customer service quality control in customer service systems.

Unlike human-machine dialogues, dialogues between human customer service staff and customers do not follow a one-question-one-answer pattern; either party may send several sentences in a row. Our goal is to detect whether any utterance by human staff has a "negative" or "poor attitude" quality problem.

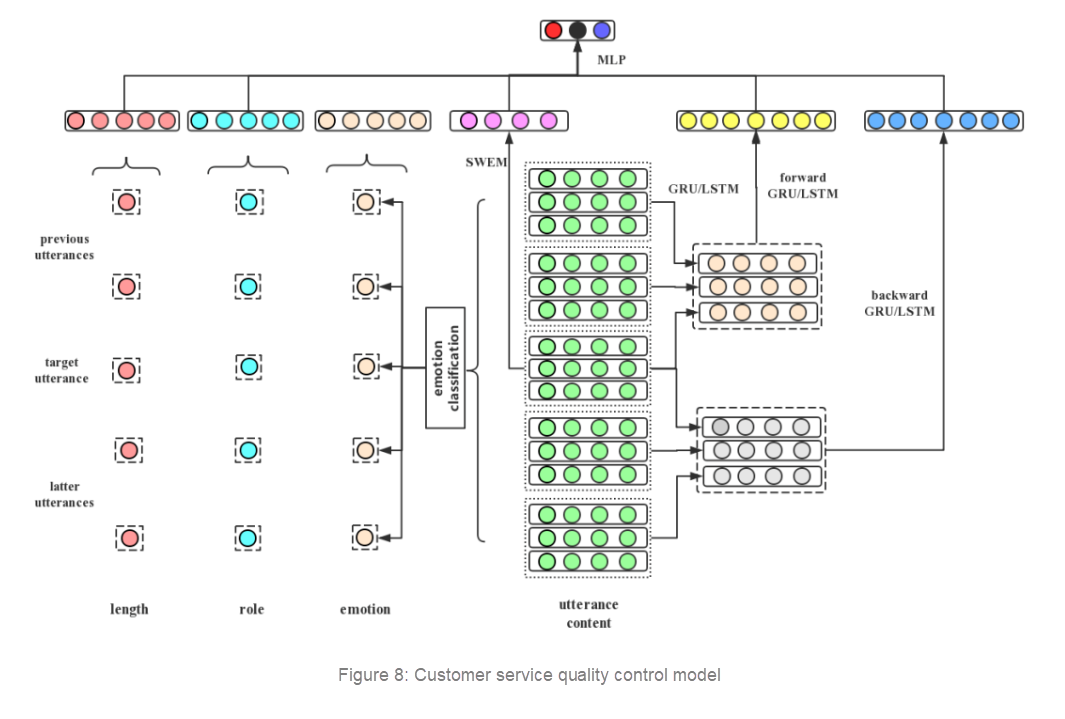

To inspect the quality of customer service, we must consider the context, including users' questions and the language used by human staff, as well as features such as text length, speaker role, and text content. For the text content, we use the SWEM model to extract features from human staff's utterances, and we detect the emotion of each dialogue turn in context. The detected user emotion category and customer service staff emotion category are used as model features. The emotion identification model used here is the same emotion classification model described earlier in this article. We also used two structures (model 1 in Figure 8 and model 2 in Figure 9) to extract semantic features of text sequences from the context.

Model 1 first encodes the current customer service utterance and each sentence in its context with a GRU or LSTM. A forward GRU or LSTM then runs over the encodings of the preceding context followed by the current utterance, and a backward GRU or LSTM runs over the encodings of the following context back to the current utterance. In both passes the current utterance is the last element, so both results better express its semantic information. Figure 8 shows the structure of the customer service quality control model.

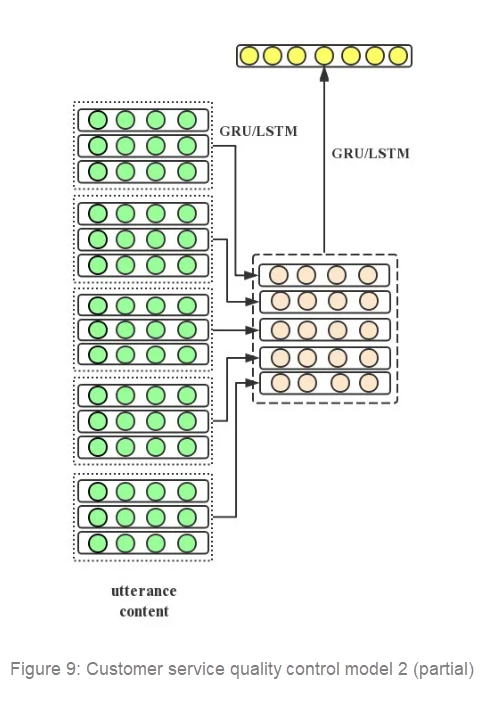

Model 2 runs a forward GRU or LSTM over the encodings of the current customer service utterance and its context in dialogue order, and uses the result as the final semantic feature. Figure 9 shows part of the model structure. Model 1 emphasizes the semantic information of the current customer service utterance, while model 2 produces more sequential semantic information that better describes the overall context.
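The difference between the two context encoders can be sketched with a hand-written GRU over precomputed sentence vectors. This is a minimal sketch under stated assumptions: sentence encodings are given as random vectors, biases are omitted from the GRU, and the names `model1_features` and `model2_features` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def make_gru(input_dim, hidden_dim, rng):
    """Random GRU weights; index 0 = update gate, 1 = reset gate, 2 = candidate."""
    return {"W": rng.normal(scale=0.1, size=(3, hidden_dim, input_dim)),
            "U": rng.normal(scale=0.1, size=(3, hidden_dim, hidden_dim))}

def run_gru(params, inputs):
    """Run a bias-free GRU over a sequence of vectors and return the final state."""
    W, U = params["W"], params["U"]
    h = np.zeros(U.shape[1])
    for x in inputs:
        z = sigmoid(W[0] @ x + U[0] @ h)
        r = sigmoid(W[1] @ x + U[1] @ h)
        n = np.tanh(W[2] @ x + U[2] @ (r * h))
        h = (1 - z) * h + z * n
    return h

def model1_features(sent_vecs, current, fwd, bwd):
    """Model 1: both passes end at the current utterance."""
    left = run_gru(fwd, sent_vecs[: current + 1])    # preceding context -> current
    right = run_gru(bwd, sent_vecs[current:][::-1])  # following context (reversed) -> current
    return np.concatenate([left, right])

def model2_features(sent_vecs, gru):
    """Model 2: one forward pass over the whole window in dialogue order."""
    return run_gru(gru, sent_vecs)

rng = np.random.default_rng(0)
sent_dim, hid = 8, 6
fwd, bwd, uni = (make_gru(sent_dim, hid, rng) for _ in range(3))
sent_vecs = rng.normal(size=(5, sent_dim))  # 5 sentence encodings; index 2 is the staff utterance
f1 = model1_features(sent_vecs, 2, fwd, bwd)
f2 = model2_features(sent_vecs, uni)
```

In model 1 the final state of each pass is computed right after the current utterance is read, so that utterance has the most direct influence on the feature; in model 2 the last context sentence has that position instead.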

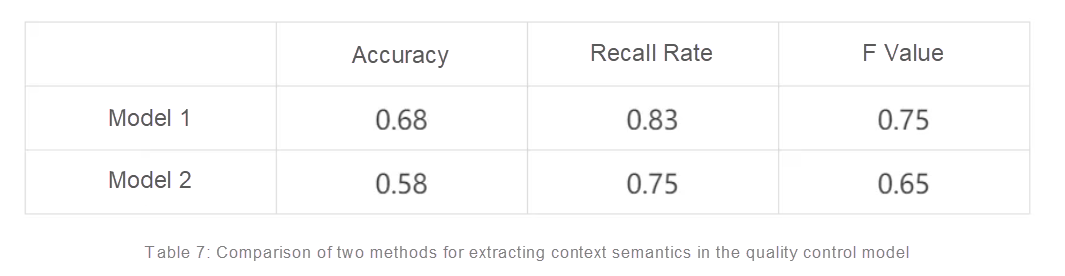

Table 7 compares the performance of the two context semantic extraction models. Model 1 performs better than model 2, which indicates that we should place more weight on the semantic information of the current customer service utterance and use the semantic information of the context to support identification. GRU and LSTM give similar results in actual model training, but GRU is faster, so GRU is used in all model experiments.

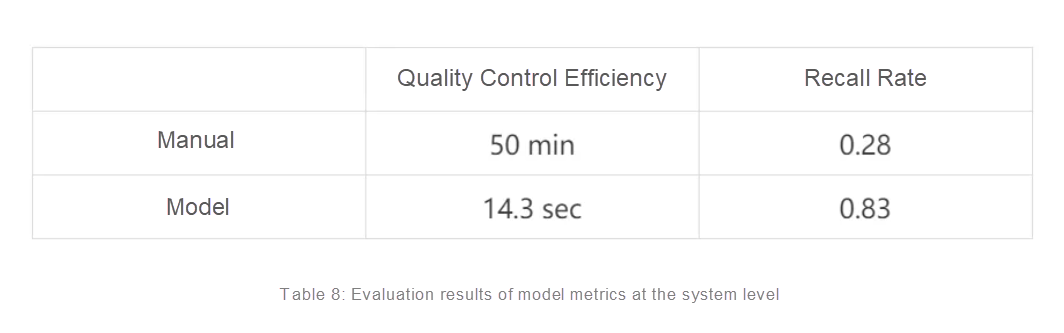

In addition to model-level metrics, we also analyzed system-level metrics: quality inspection efficiency and recall rate. We obtained these two metrics by comparing our model's results with those of previous manual service quality inspections. As shown in Table 8, both quality inspection efficiency and recall rate improve significantly. The recall rate of manual quality inspection is lower because it is impossible to inspect all customer service records manually.

Currently, the most important metric for evaluating the performance of an intelligent customer service system is user session satisfaction. To our knowledge, there are currently no research results concerning the automatic estimation of user session satisfaction in an intelligent customer service system.

To estimate user session satisfaction in an intelligent customer service system, we propose a session satisfaction analysis model that better reflects how satisfied current users are with the intelligent customer service. Because different users apply different evaluation standards, sessions whose content, answer sources, and emotion information are exactly the same often receive inconsistent satisfaction labels. We therefore tried two training methods: one trains a classification model to fit satisfaction categories (satisfied, neutral, and dissatisfied); the other trains a regression model to fit the distribution of satisfaction labels in sessions. Finally, we compared the performance of the two methods.
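The label inconsistency described above can be turned into regression targets by grouping sessions with identical inputs and counting their labels. This sketch assumes a hashable feature key per session (a hypothetical stand-in for the session content, answer source, and emotion information), cross-entropy for the classification target, and mean squared error for the distribution target; the article does not state which loss functions were used.

```python
from collections import Counter, defaultdict

import numpy as np

LABELS = ["satisfied", "neutral", "dissatisfied"]

def build_targets(sessions):
    """Group (feature_key, label) pairs by key.

    Returns {key: (majority_label_index, label_distribution)}: the first element is a
    classification target, the second a regression target.
    """
    counts = defaultdict(Counter)
    for key, label in sessions:
        counts[key][label] += 1
    targets = {}
    for key, counter in counts.items():
        total = sum(counter.values())
        dist = np.array([counter[label] / total for label in LABELS])
        targets[key] = (int(dist.argmax()), dist)
    return targets

def cross_entropy(pred_probs, label_index):
    """Classification loss against a single hard label."""
    return float(-np.log(pred_probs[label_index]))

def mse(pred_dist, target_dist):
    """Regression loss against the empirical label distribution."""
    return float(np.mean((pred_dist - target_dist) ** 2))

# Hypothetical keys: sessions sharing a key have identical content, answer source, and emotions.
sessions = [("key_a", "satisfied")] * 3 + [("key_a", "dissatisfied"), ("key_b", "neutral")]
targets = build_targets(sessions)
majority, dist = targets["key_a"]
```

The hard label for `key_a` discards the one dissatisfied user, while the distribution target keeps that information, which is the motivation for the regression formulation.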

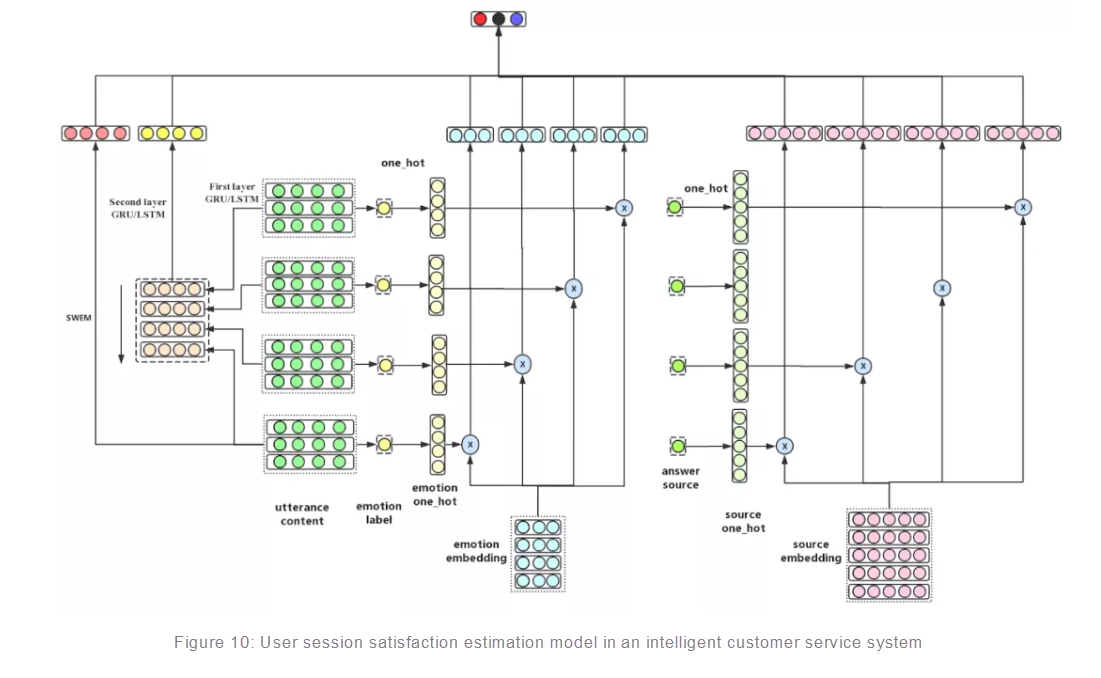

A session satisfaction model considers various dimensions: semantic information (user utterances), emotional information (obtained with the emotion detection model), and answer source information (source of answers in the current utterance).

Semantic information is the content expressed during communication between users and the intelligent customer service system; it lets us infer current user satisfaction from user utterances. The model uses multi-round utterance information from a session. To give the model a fixed number of rounds as input, we use only the last four sentences of each session in the experiments, because session data analysis shows that user semantic information near the end of a session correlates better with overall satisfaction. For example, if a user expresses gratitude at the end of a session, the user is basically satisfied; if the user expresses critical opinions, the user is dissatisfied.

Emotional information is generally an important indicator of user satisfaction. When a user shows extreme emotions such as anger or abuse, the probability that the user reports dissatisfaction is extremely high. Each utterance selected as semantic information is paired one-to-one with its emotion: we identify the emotion of each selected utterance to obtain its emotion category.
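Selecting the last four rounds and keeping each utterance aligned with its emotion label can be sketched as follows. Left-padding sessions shorter than four rounds with a `<pad>` token is an assumption; the article does not say how short sessions are handled, and `last_k_rounds` is a hypothetical name.

```python
from typing import List, Tuple

PAD = "<pad>"

def last_k_rounds(utterances: List[str], emotions: List[str],
                  k: int = 4) -> Tuple[List[str], List[str]]:
    """Keep the last k utterances and their aligned emotion labels; left-pad short sessions."""
    if len(utterances) != len(emotions):
        raise ValueError("each utterance needs exactly one emotion label")
    utts, emos = utterances[-k:], emotions[-k:]
    padding = k - len(utts)
    return [PAD] * padding + utts, [PAD] * padding + emos

session = ["hi", "where is my order", "it has been a week", "this is useless", "forget it", "bye"]
labels = ["none", "anxiety", "anxiety", "anger", "disappointment", "none"]
utts, emos = last_k_rounds(session, labels)
print(utts)  # ['this is useless'... no: last four rounds of the session]
```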

Answer source information can reflect the problems that users encounter. Different answer sources represent different business scenarios, and user satisfaction levels differ significantly in different scenarios. For example, users are more likely to express dissatisfaction in complaint and rights protection scenarios than in consulting scenarios.

This article proposes a session satisfaction estimation model based on semantic, emotional, and answer source features. The model makes full use of the semantic information in sessions and represents emotional and answer source information compactly through learned embeddings. Figure 10 shows the model structure.

Extraction of semantic features: A hierarchical (two-layer) GRU or LSTM extracts semantic information. The first layer produces a sentence representation for each utterance (first-layer GRU/LSTM in Figure 10). The second layer produces a high-order representation of the multi-round utterances from the first layer's sentence representations (second-layer GRU/LSTM in Figure 10).

This makes full use of the sequential information in user utterances. In addition, the SWEM feature of the last utterance is extracted to strengthen the influence of its semantics.
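The hierarchical semantic encoder with the extra SWEM feature of the last utterance can be sketched as follows. The GRU is hand-written without biases, the weights are random, the SWEM variant is assumed to be average plus max pooling, and the function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def make_gru(input_dim, hidden_dim, rng):
    """Random GRU weights; index 0 = update gate, 1 = reset gate, 2 = candidate."""
    return {"W": rng.normal(scale=0.1, size=(3, hidden_dim, input_dim)),
            "U": rng.normal(scale=0.1, size=(3, hidden_dim, hidden_dim))}

def run_gru(params, inputs):
    """Bias-free GRU over a sequence of vectors; returns the final hidden state."""
    W, U = params["W"], params["U"]
    h = np.zeros(U.shape[1])
    for x in inputs:
        z = sigmoid(W[0] @ x + U[0] @ h)        # update gate
        r = sigmoid(W[1] @ x + U[1] @ h)        # reset gate
        n = np.tanh(W[2] @ x + U[2] @ (r * h))  # candidate state
        h = (1 - z) * h + z * n
    return h

def encode_session(utterances, word_gru, utt_gru):
    """utterances: list of (num_words_i, embed_dim) arrays, oldest first."""
    # First layer: one vector per utterance from its word embeddings.
    utt_vecs = [run_gru(word_gru, words) for words in utterances]
    # Second layer: high-order representation of the multi-round sequence.
    session_vec = run_gru(utt_gru, utt_vecs)
    # Extra SWEM (average + max pooling) feature of the last utterance.
    last = utterances[-1]
    swem_last = np.concatenate([last.mean(axis=0), last.max(axis=0)])
    return np.concatenate([session_vec, swem_last])

rng = np.random.default_rng(0)
embed_dim, word_hid, utt_hid = 5, 6, 7
word_gru = make_gru(embed_dim, word_hid, rng)
utt_gru = make_gru(word_hid, utt_hid, rng)
session = [rng.normal(size=(n, embed_dim)) for n in (4, 2, 6, 3)]  # four rounds of toy embeddings
features = encode_session(session, word_gru, utt_gru)
```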

Extraction of emotional features: The raw emotional features are one-hot vectors, which are sparse and cannot express the relationships between emotions. Therefore, we learned emotion embeddings to better represent emotional features.

Extraction of answer source features: The raw answer source features are also one-hot vectors, and because there are more than 50 answer source types, they are very sparse. Therefore, the features must be compressed, and we also learned answer source embeddings to represent them.

Model prediction layer: We tried two prediction targets, satisfaction categories and satisfaction distributions. The former uses a classification model, and the latter uses a regression model.
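Why an embedding table compresses a sparse one-hot feature can be shown in a few lines: multiplying a one-hot vector by the table is the same as looking up one row. The table sizes (8 emotion categories, 52 answer sources) and the embedding dimensions are placeholders, and the tables here are random rather than learned.

```python
import numpy as np

rng = np.random.default_rng(0)
NUM_EMOTIONS, EMOTION_DIM = 8, 4  # placeholder sizes
NUM_SOURCES, SOURCE_DIM = 52, 8   # "more than 50" answer sources; 52 is a placeholder

# In the real model these tables are trained; random values are used here.
emotion_table = rng.normal(size=(NUM_EMOTIONS, EMOTION_DIM))
source_table = rng.normal(size=(NUM_SOURCES, SOURCE_DIM))

def one_hot(index, size):
    vec = np.zeros(size)
    vec[index] = 1.0
    return vec

# A one-hot vector times the table selects one row: 52 sparse dims -> 8 dense dims.
source_id = 17
via_matmul = one_hot(source_id, NUM_SOURCES) @ source_table
via_lookup = source_table[source_id]

# Emotions of the last four utterances, one id per utterance -> (4, EMOTION_DIM) matrix.
emotion_ids = [0, 3, 3, 5]
emotion_features = emotion_table[emotion_ids]
```

Because the embedding rows are trained, related emotions or answer sources can end up close to each other, which a one-hot encoding cannot express.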

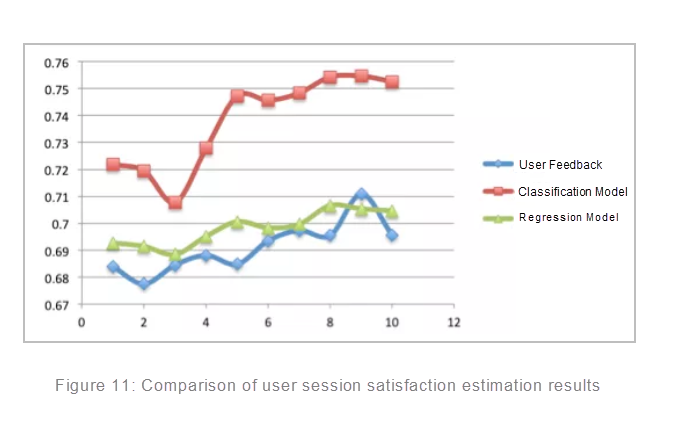

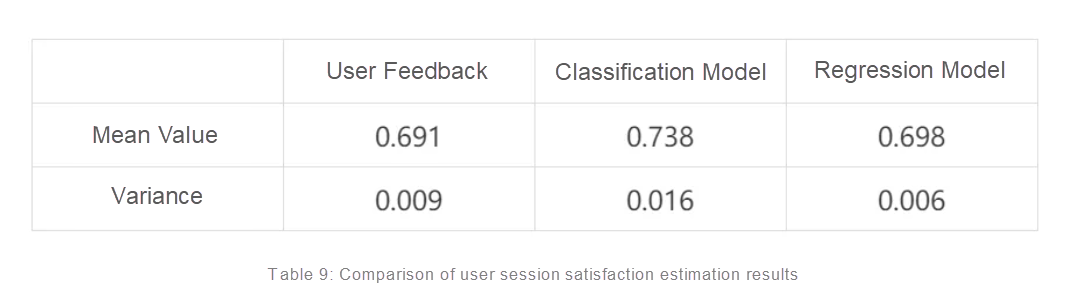

Figure 11 shows the experimental results. The classification model estimates satisfaction poorly: on average, its estimates are more than 4% higher than actual user feedback. The regression model predicts user feedback better and reduces the fluctuations caused by small sample sizes, so it meets our expectations. As shown in Table 9, the mean value from the regression model differs from the user feedback results by only 0.007, and the variance is reduced by one third compared with before, which demonstrates the effectiveness of the regression model.

This article summarizes the applications of emotion analysis in intelligent customer service systems, introduces the relevant models, and presents their performance. Although emotion analysis is already widely used in human-machine dialogues in intelligent customer service systems, this is only a good start. Emotion analysis should play a greater role in giving intelligent customer service systems human-like capabilities.
