In this post, you'll learn how to use Alibaba Cloud's MaxCompute Migration Assist (MMA) to synchronize Hive data to MaxCompute. Alongside a walkthrough of data migration with MMA, we will also look at MMA's features, technical architecture, and working principles.

1.1 MMA Features

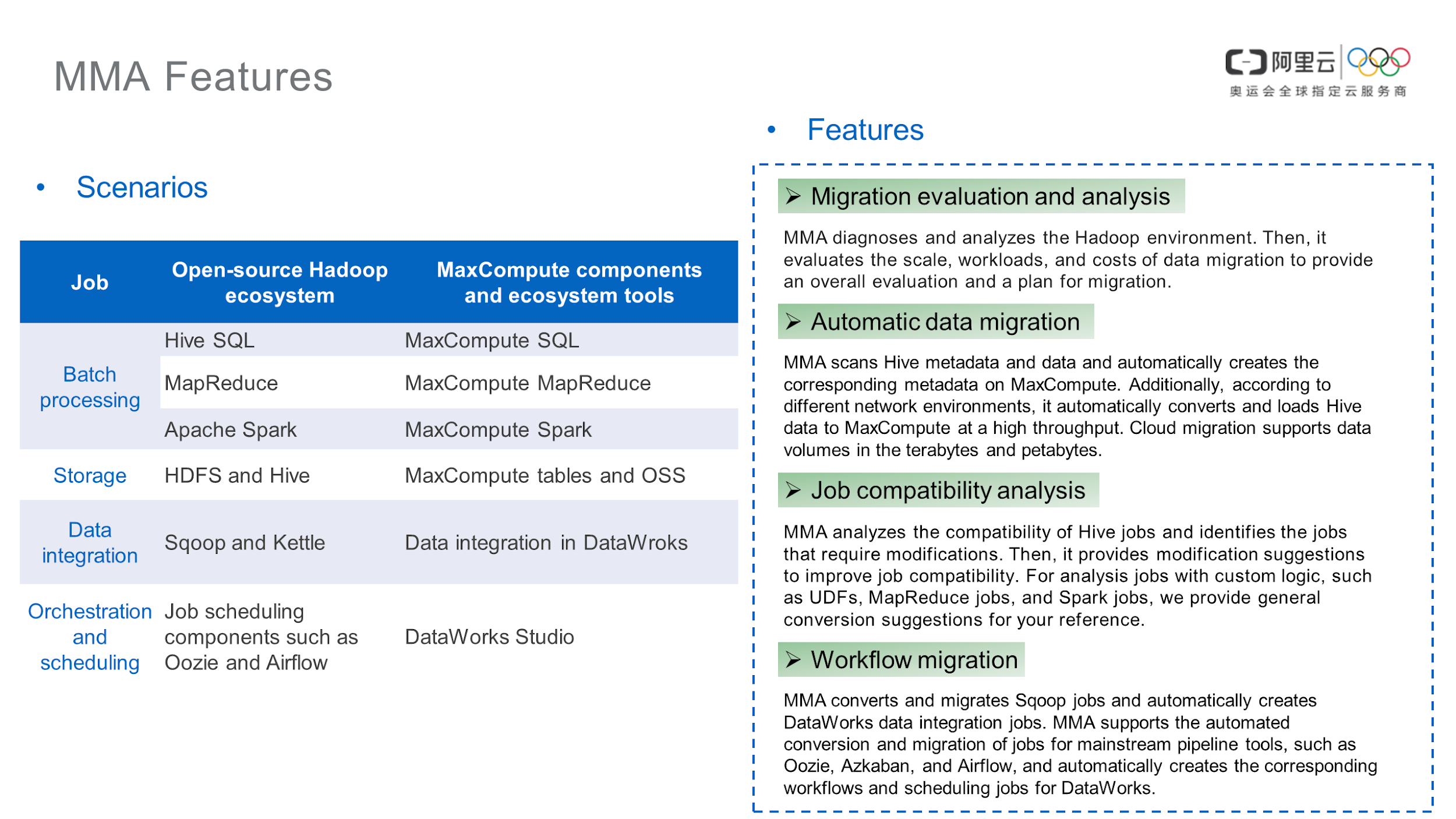

MaxCompute Migration Assist (MMA) is a data migration tool for MaxCompute that covers batch processing, storage, data integration, and job orchestration and scheduling. Its migration evaluation and analysis feature automatically generates migration evaluation reports, which help you identify compatibility issues, such as data type mapping and syntax issues, when synchronizing data from Hive to MaxCompute.

MMA supports automatic data migration, batch table creation, and automatic batch data migration. It also provides a job syntax analysis feature to check whether Hive SQL can be run on MaxCompute. In addition, MMA supports workflow migration, job migration and transformation for the mainstream data integration tool Sqoop, and automatic creation of DataWorks data integration jobs.
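To make the idea of a migration evaluation report more concrete, here is a minimal Python sketch that flags Hive columns whose types may need manual review. It is not MMA's implementation: the `DIRECT_TYPES` set, the `Finding` record, and the `evaluate` function are hypothetical names chosen for illustration, and the list of directly usable types is an assumption that you should check against the MaxCompute data type documentation.

```python
from dataclasses import dataclass

# Assumption for illustration: Hive base types this sketch treats as directly
# usable in MaxCompute. This is NOT MMA's actual mapping table.
DIRECT_TYPES = {
    "tinyint", "smallint", "int", "bigint", "float", "double", "decimal",
    "boolean", "string", "varchar", "char", "date", "timestamp", "binary",
}


@dataclass
class Finding:
    table: str
    column: str
    hive_type: str
    status: str  # "ok" or "review"


def base_type(hive_type: str) -> str:
    """Reduce a type such as decimal(10,2) or array<int> to its base name."""
    name = hive_type.strip().lower()
    for separator in ("(", "<"):
        name = name.split(separator, 1)[0]
    return name


def evaluate(schemas: dict) -> list:
    """Return one Finding per column, marking unknown types for review."""
    findings = []
    for table, columns in schemas.items():
        for column, hive_type in columns.items():
            status = "ok" if base_type(hive_type) in DIRECT_TYPES else "review"
            findings.append(Finding(table, column, hive_type, status))
    return findings


if __name__ == "__main__":
    report = evaluate({"orders": {"id": "bigint", "payload": "uniontype<int,string>"}})
    for finding in report:
        print(finding.table, finding.column, finding.hive_type, finding.status)
```

A report like this only covers data types. The syntax analysis that MMA performs on Hive SQL jobs is a separate check and is not modeled here.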

1.2 MMA Architecture

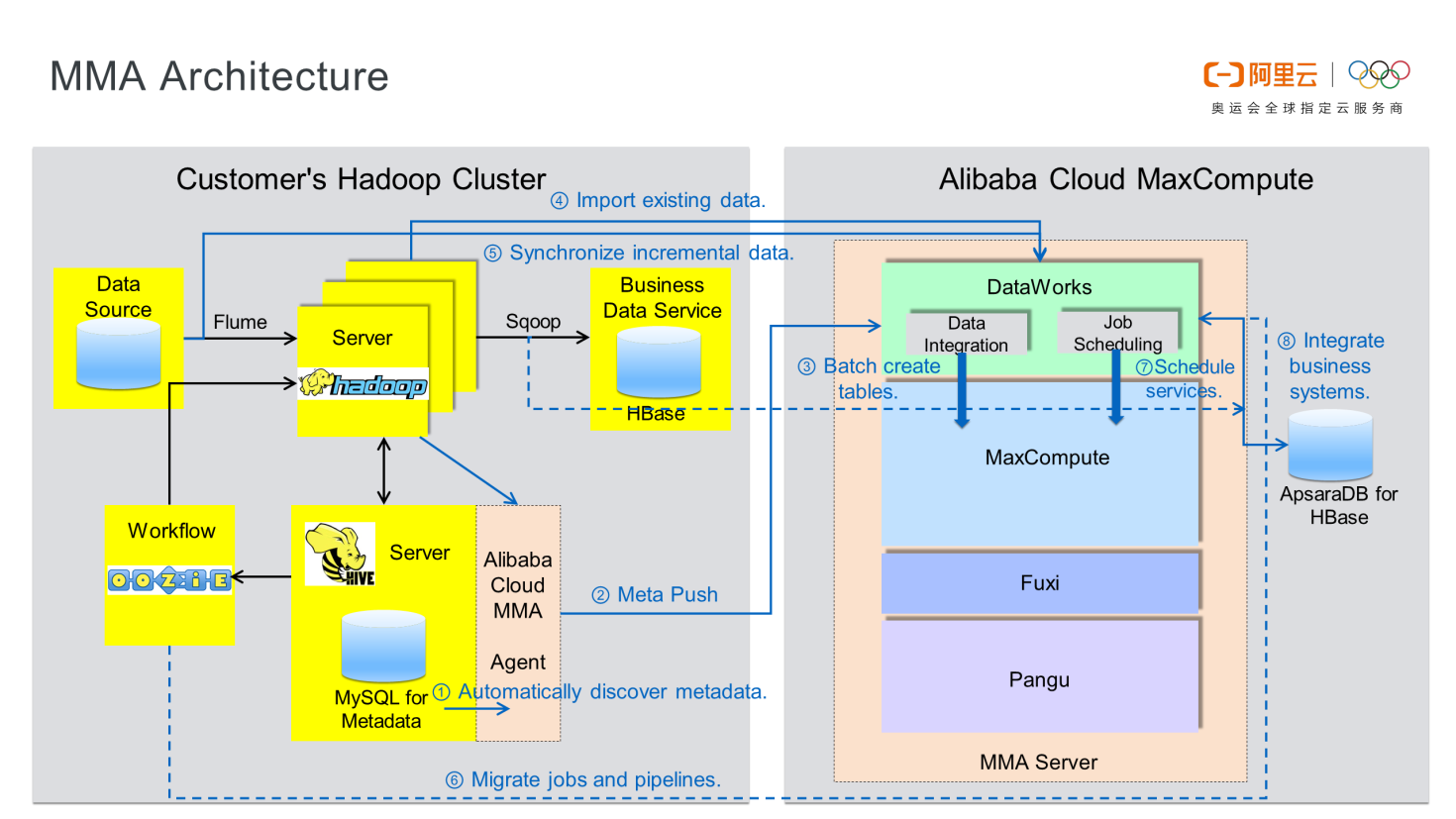

The figure above shows the MMA architecture. The left side shows the customer's Hadoop cluster, and the right side shows Alibaba Cloud big data services, mainly DataWorks and MaxCompute.

MMA runs on your Hadoop cluster, and the host it runs on must be able to access the Hive Server. Once deployed on a host, the MMA client automatically obtains the Hive metadata: it reads the metadata from MySQL and converts it to MaxCompute Data Definition Language (DDL) statements.
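The metadata-to-DDL step can be sketched as follows. This is an illustrative example rather than MMA's code: the function name `to_maxcompute_ddl` and the optional `type_map` override are hypothetical, and by default the sketch simply uppercases the Hive type name, which assumes the type exists under the same name in MaxCompute.

```python
def to_maxcompute_ddl(table, columns, partitions=(), type_map=None):
    """Build a CREATE TABLE statement from (name, hive_type) pairs.

    type_map is a hypothetical hook: a dict from lowercase Hive type to the
    MaxCompute type to emit instead. Unlisted types pass through uppercased.
    """
    type_map = type_map or {}

    def render(cols):
        return ", ".join(
            f"{name} {type_map.get(hive_type.lower(), hive_type.upper())}"
            for name, hive_type in cols
        )

    ddl = f"CREATE TABLE IF NOT EXISTS {table} ({render(columns)})"
    if partitions:
        ddl += f" PARTITIONED BY ({render(partitions)})"
    return ddl + ";"


if __name__ == "__main__":
    # Example metadata as it might be read from the Hive metastore.
    print(to_maxcompute_ddl(
        "orders",
        [("id", "bigint"), ("amount", "double")],
        partitions=[("ds", "string")],
    ))
```

Keeping the type mapping as a parameter makes it easy to adjust individual types after reviewing the evaluation report, without touching the generation logic.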

Next, run the DDL statements to create tables on MaxCompute in batches, start batch synchronization jobs, and submit concurrent Hive SQL jobs to the Hive Server. The Hive SQL jobs call a user-defined function (UDF) that integrates the Tunnel SDK and writes data to MaxCompute tables in batches through Tunnel.

When migrating jobs and workflows, you can check workflow jobs based on the Hive metadata that MMA discovers automatically. This includes batch converting the workflow configurations in workflow components to DataWorks workflow configurations, from which DataWorks workflows are generated. After these steps, the data, jobs, and workflows have been migrated. Once the migration is complete, you need to connect your business systems to the new MaxCompute and DataWorks architecture.
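The pattern of submitting concurrent per-table jobs and collecting their results can be sketched in Python. This is a simplified illustration under stated assumptions: `migrate_all` and the `copy_table` callback are hypothetical names, and the callback stands in for a real Hive SQL job whose UDF writes to MaxCompute through Tunnel. No Hive or Tunnel call is made here.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def migrate_all(tables, copy_table, max_workers=4):
    """Run copy_table(table) concurrently and separate successes from failures.

    copy_table is a caller-supplied stand-in for one synchronization job.
    It should return a result (for example, rows copied) or raise on error.
    """
    succeeded, failed = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(copy_table, table): table for table in tables}
        for future in as_completed(futures):
            table = futures[future]
            try:
                succeeded[table] = future.result()
            except Exception as exc:  # record the failure and keep going
                failed[table] = str(exc)
    return succeeded, failed


if __name__ == "__main__":
    def fake_copy(table):
        # Placeholder job: pretend every table has 100 rows.
        return 100

    done, errors = migrate_all(["orders", "users"], fake_copy)
    print(done, errors)
```

Collecting failures per table instead of aborting on the first error lets you retry only the tables that did not migrate.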

1.3 Technical Architecture and Principles of MMA Agent

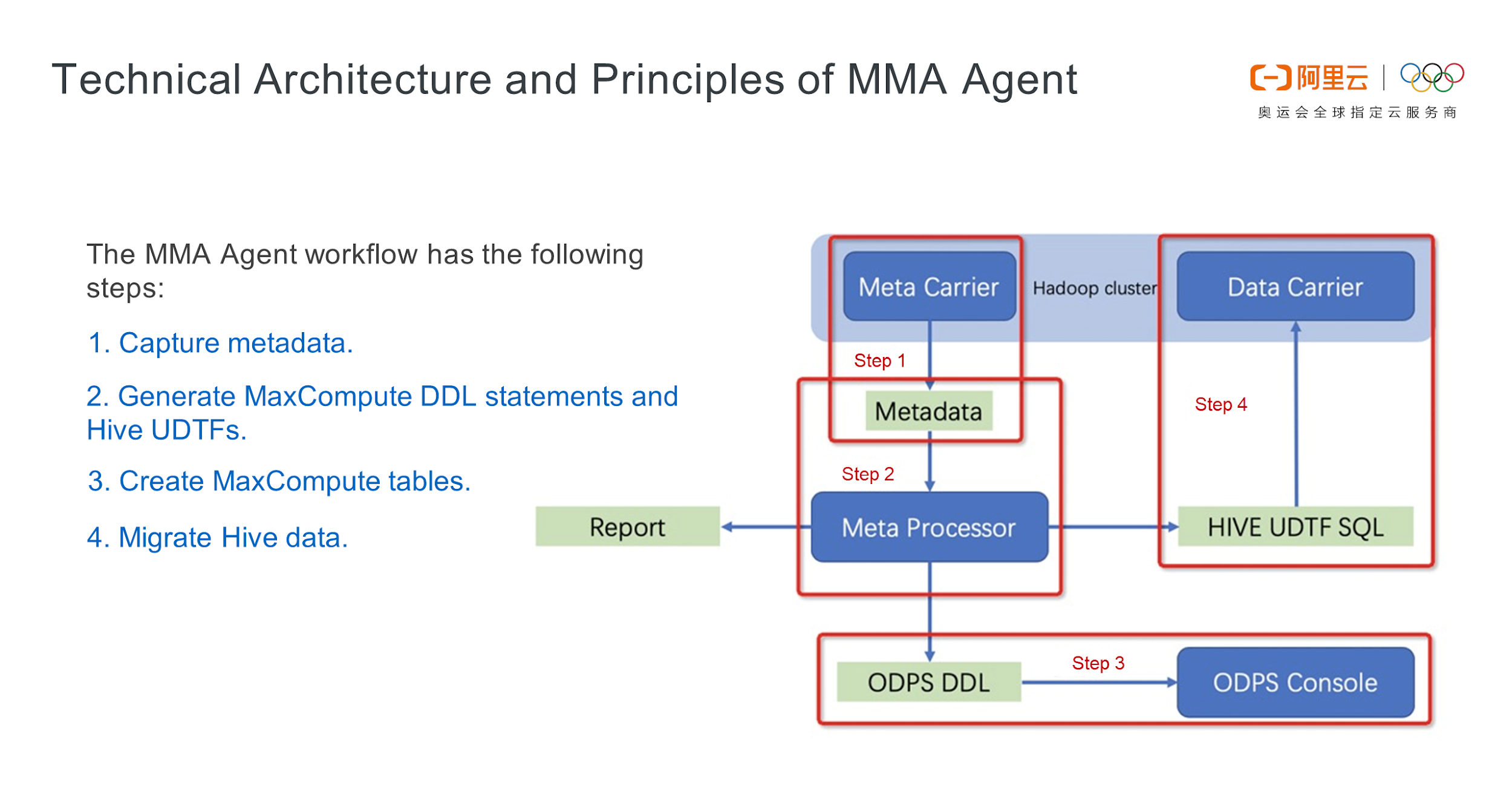

MMA supports the batch migration of data and workflows through the client and server. The MMA client installed on your server provides the following features:

Accordingly, MMA contains four components:

In this post, you'll learn how to synchronize data from Message Queue for Apache Kafka to MaxCompute on Alibaba Cloud and obtain a general understanding of Message Queue for Apache Kafka. We'll also go into the different configuration approaches and the implementation steps involved from conception to launch.

1. Objective

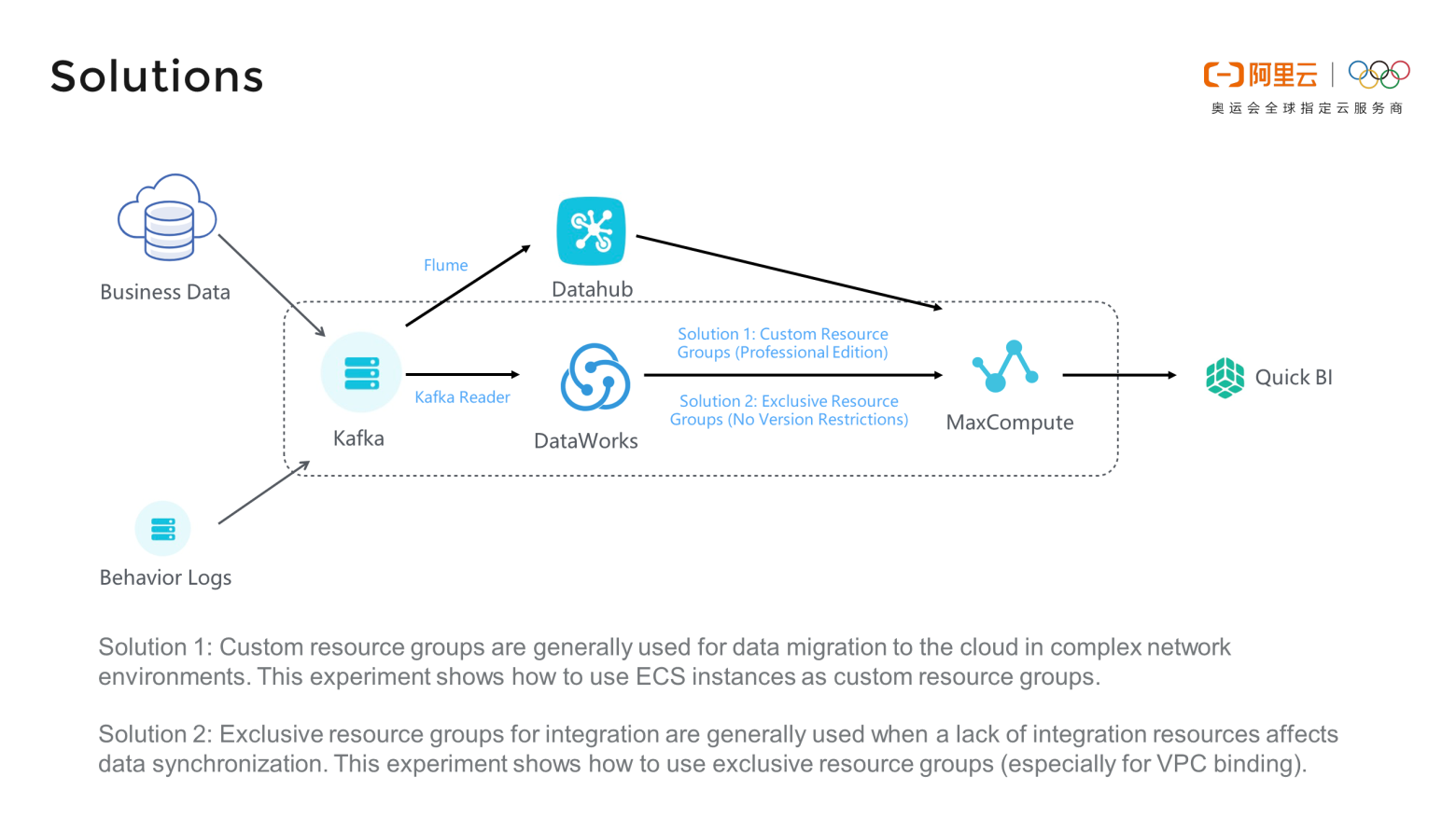

For daily operations, many enterprises use Message Queue for Apache Kafka to collect the behavior logs and business data generated by apps or websites and then process them offline or in real time. Generally, the logs and data are delivered to MaxCompute for modeling and business processing to obtain user features, sales rankings, and regional order distributions, and the results are displayed in data reports.

2. Solutions

There are two ways to synchronize data from Message Queue for Apache Kafka to DataWorks. In one process, business data and behavior logs are uploaded to Datahub through Message Queue for Apache Kafka and Flume, then transferred to MaxCompute, and finally displayed in Quick BI. In the second process, business data and action logs are transferred through Message Queue for Apache Kafka, DataWorks, and MaxCompute, and finally displayed in Quick BI.

In this article, I will use the second process. You can synchronize data from DataWorks to MaxCompute using one of two solutions: custom resource groups or exclusive resource groups. Custom resource groups are used to migrate data to the cloud on complex networks. Exclusive resource groups are used when integrated resources are insufficient.

Alibaba Cloud MaxCompute is a big data processing platform that processes and stores massive amounts of structured data in batches to provide effective data warehousing solutions.

MaxCompute (previously known as ODPS) is a general purpose, fully managed, multi-tenancy data processing platform for large-scale data warehousing. MaxCompute supports various data importing solutions and distributed computing models, enabling users to effectively query massive datasets, reduce production costs, and ensure data security.

MaxCompute provides a variety of tools for you to upload data to or download data from MaxCompute. You can use the tools to migrate data to MaxCompute in different scenarios. This topic describes how to select data transmission tools in three typical scenarios.

This topic describes the best practices for data migration, including migrating business data or log data from other business platforms to MaxCompute or migrating data from MaxCompute to other business platforms.


Alibaba Cloud MaxCompute - April 26, 2020
