By Bin Fu, Data Technical Expert at Alibaba Cloud

In the MaxCompute developer exchange group on DingTalk in 2018, Bin Fu delivered a live speech entitled "Data Conversion Practices of MaxCompute Data Warehouse". This article describes the MaxCompute data architecture and process, three extract, transform, load (ETL) algorithms (update or insert, loading, and full history table), NULL value processing in the OLTP, and detailed ETL knowledge.

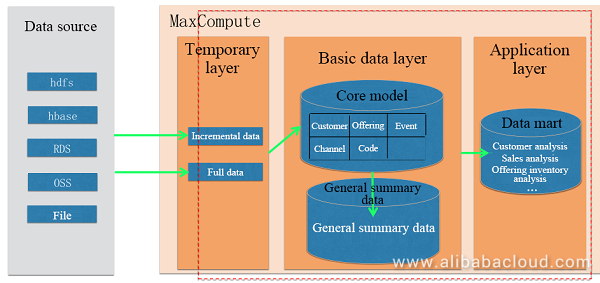

MaxCompute consists of a temporary layer, a basic data layer, and an application layer. After data is transferred to the cloud, the data is first transferred to the temporary layer and processed. Then the data is converted and transferred to the basic data layer, and further aggregated to the application layer for service provisioning. Details about the three layers are as follows:

There are five extract, transform, load (ETL) strategies: full table replace (M1), update or insert (M2), loading (M3), full history table (M4), and incremental history table (M5). The following describes the update or insert (to primary tables) (M2), loading (M3), and full history table (M4) ETL algorithms.

This algorithm applies to keeping the latest-state table. It compares data based on primary keys (or specified fields). If records exist in the target table, the existing records are updated. Otherwise, new records are inserted into the table. Full Outer Join is required to implement this algorithm because MaxCompute does not support "update or delete."

During the implementation by using Full Outer Join, if the Source Table primary key value is NULL and the Target Table FULL primary key value is NOT NULL, the Outer Join selects unmodified data as the result. If the Source Table value is NOT NULL and the Target Table FULL value is NULL, the Outer Join selects new data as the result. If both the Source Table and Target Table FULL values are NOT NULL, the Outer Join selects modified data as the result.

The loading algorithm is used to add incremental data directly to target tables. It applies to incremental, unmodified data such as flows, transactions, events, and CDRs.

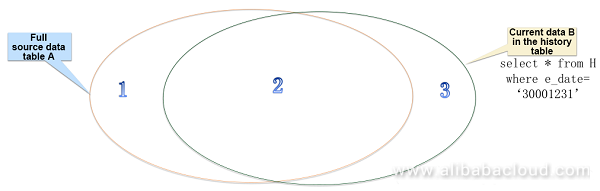

A full history table must contain the s_date (start date) and e_date (end date) fields, which record the data modification track in the table. The s_date field indicates the start date of data in the table. If this field is missing in a service table during initial loading, the earliest date is used. The e_date field indicates the expiration date or renewed effective date of data in the table. During initial loading, this field must be set to the latest date. The full history table algorithm involves two loadings and four data types. The two loadings include initial loading and routine loading. During initial loading, full data is loaded to the history table, in which the start date is the service date or the earliest date and the end date is the latest date. During routine loading, all fields excluding s_date and e_date are compared, and Full Outer Join is used to generate new, expired, and unmodified data. In this way, expired data is found directly in the history table. The four data types include currently new data, currently expired data, currently unmodified data, and expired data. Currently new data indicates data whose start date is the data date and end date is the latest date. Currently expired data indicates data whose start date remains unchanged and end date is the data date. Currently unmodified data indicates data whose start date and end date remain unchanged. Expired data indicates data whose start date and end date remain unchanged but the data itself is invalid. All the preceding types of data can be directly inserted into the new history table.

In the preceding figure, full source data table A is on the left, and current data B in the history table is on the right; 1 indicates new data, 2 indicates currently unmodified data, and 3 indicates currently expired data. A FULL OUTER JOIN B is used to generate new data, currently unmodified data, and currently expired data. These three types of data and the expired data in the old history table formulate the new history table.

NULL is a SQL keyword indicating unknown data or values. It does not specify data types or data features. Upon comparison of any value with NULL, false is returned and the comparison result is empty. In the OLTP system, most table fields are set to NULL. During table association or field convergence involving the NULL value, the result may be different from business personnel's expectations. Except for special cases, we recommend that you convert the NULL value of fields into non-NULL values before saving data to tables in the data warehouse. The NULL issue is related to database technical processing. Because of the NULL value, the computing result may be different from business personnel's expectations. You need to exercise caution when running SQL statements and analyzing data involving the NULL value.

The ETL program reads column schema from the MaxCompute metadata table and generates a unified script based on the column schema. The ETL logic is fixed. You can use the ETL program to generate algorithm scripts and modify the NULL processing content in the scripts. If the Python and Python ODPS plug-in have been installed, name the program scripts_gen.py and set the ODPS configuration file, target table name, source table name, primary keys, and ETL algorithm parameters to generate a SQL script file.

Before developing ETL conversion tasks, you need to sort the mapping between tasks to facilitate task development and progress records. During or after development, you need to record the field-level mapping and conversion in a detailed document to facilitate unified task management and maintenance.

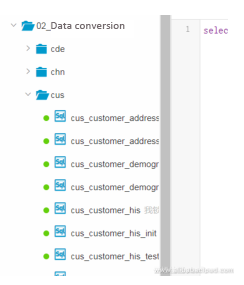

As shown in the preceding figure, we recommend that you name the root directory 02_data conversion and plan subdirectories by subject in the DataWorks task directory structure. The subdirectories need to have task scripts for the subject tables stored in them, with the task name as the table name.

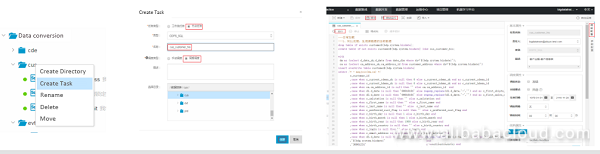

As shown in the preceding figure, the task development procedure is as follows: Right-click a subject directory and choose Create Task from the shortcut menu. Enter the task name and other required information to create the task, and copy and save the conversion script on the task script page that appears. Run or submit the script on the task script page. Test the task running and click Schedule at the upper right corner to set scheduling attributes.

There are three steps in the ETL development procedure: generate an ETL script file, create a DataWorks task, and test and release the script file. The detailed process is as follows:

After the preceding steps, the task runs automatically as scheduled.

In this article, we discussed about the MaxCompute data architecture as well as data conversion practices of MaxCompute data warehouse using three ETL algorithms. We have also seen how to implement ETL on Alibaba Cloud using DataWorks.

Optimizing Alimama's Digital Marketing Solution with MaxCompute's Advanced Features

137 posts | 21 followers

FollowAlibaba Cloud MaxCompute - October 18, 2021

Alibaba Cloud Community - October 17, 2025

Alibaba Cloud MaxCompute - December 8, 2020

Alibaba Cloud MaxCompute - April 25, 2019

Alibaba Cloud MaxCompute - September 18, 2019

Alibaba Cloud MaxCompute - December 22, 2021

137 posts | 21 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn MoreMore Posts by Alibaba Cloud MaxCompute