By Changbie

With the development of IT infrastructure, modern data processing systems need to process more data and support more complex algorithms. Growing data volumes and increasingly complex algorithms create performance challenges for data analysis systems. In recent years, many performance optimization techniques have appeared in areas such as databases, big data systems, and AI platforms, spanning system architecture, compilation techniques, and high-performance computing. This article focuses on one representative compilation optimization technique: code generation based on LLVM (Codegen).

LLVM is a popular open-source compiler framework that supports multiple programming languages and hardware platforms. Developers can build their own compilers on top of it, so that different languages or logic can be compiled into executables that run on many types of hardware. For Codegen, we mainly focus on the format of LLVM IR and the API for generating LLVM IR. The rest of this article introduces LLVM IR, the principles and usage scenarios of Codegen, and typical applications of Codegen in AnalyticDB PostgreSQL, a cloud-native data warehouse from Alibaba Cloud.

IR, short for Intermediate Representation, is a critical component in compiler theory and practice. On its way from an abstract high-level language down to assembly language, a program passes through many compiler passes and takes many different forms. Compilation optimizations are applied at different stages of this process, and the IR is a clear dividing line: optimizations performed on or above the IR do not need to care about hardware details such as the instruction set or the size of the register file, while the stages below the IR must deal with the hardware. LLVM is best known for its IR design. Thanks to this design, LLVM can support many languages at the top and many hardware platforms at the bottom, and all of those languages can reuse the optimization algorithms implemented at the IR layer.

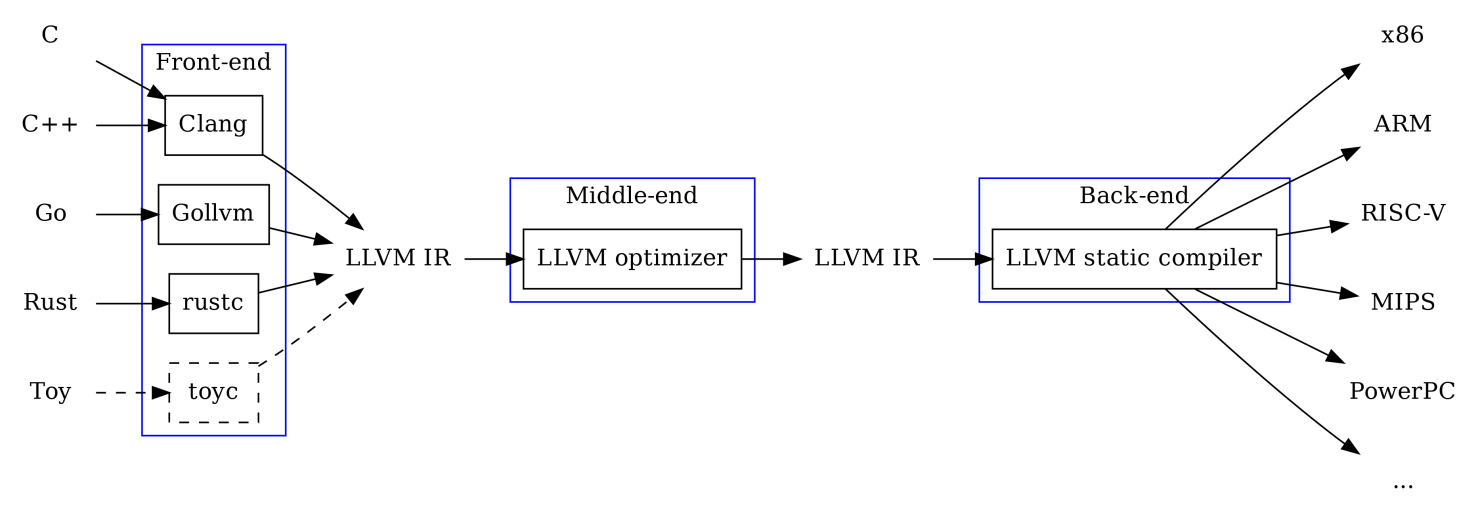

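The figure above shows the framework of LLVM. LLVM divides the entire compilation process into three steps: the frontend translates the source code of a high-level language into LLVM IR; the middle end optimizes the IR independently of both the language and the hardware; and the backend translates the optimized IR into machine code for a specific hardware platform.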

This design makes LLVM highly extensible. For example, if you implement a language called toyc and want to run it on the ARM platform, you only need to write a toyc -> LLVM IR frontend and reuse the existing LLVM components for everything else. If you want to support a new hardware platform, you only need to implement the LLVM IR -> new hardware stage, and the hardware can then run code written in many existing languages. IR is therefore LLVM's core strength, and it is also the starting point for learning LLVM Codegen.
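The three-phase split can be illustrated with a deliberately tiny model in C++. This is a hypothetical sketch, not LLVM's API: Inst, lowerMulAdd, emitX86Like, and emitArmLike are invented names, and the output is assembly-like text, not valid assembly. It only shows the shape of the design: a frontend produces the shared IR once, and every backend can consume it.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical toy IR (not LLVM IR): every instruction is dst = lhs <op> rhs.
enum class Op { Add, Mul };

struct Inst {
    Op op;
    std::string dst, lhs, rhs;
};

// Frontend stage: lowers the source expression "a * b + c" into the shared IR.
// A frontend for any other language would only need to produce the same IR.
std::vector<Inst> lowerMulAdd() {
    return {{Op::Mul, "t0", "a", "b"}, {Op::Add, "t1", "t0", "c"}};
}

// Backend stage for target 1: prints x86-flavoured text (illustrative only).
std::string emitX86Like(const std::vector<Inst>& ir) {
    std::string out;
    for (const Inst& i : ir) {
        out += (i.op == Op::Add ? "add " : "imul ");
        out += i.dst + ", " + i.lhs + ", " + i.rhs + "\n";
    }
    return out;
}

// Backend stage for target 2: prints ARM-flavoured text (illustrative only).
std::string emitArmLike(const std::vector<Inst>& ir) {
    std::string out;
    for (const Inst& i : ir) {
        out += (i.op == Op::Add ? "ADD " : "MUL ");
        out += i.dst + ", " + i.lhs + ", " + i.rhs + "\n";
    }
    return out;
}
```

Supporting a third target would mean writing one more emitter function; no frontend would have to change.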

The LLVM IR format is very similar to assembly, so anyone who has learned assembly language will find LLVM IR programming easy to pick up. If you have not learned assembly before, don't worry: it is not difficult. The hard part of compilers is not learning the concepts but the engineering, because the difficulty of assembly-level development grows exponentially as engineering complexity rises. Next, we look at the three most important aspects of IR: instruction format, Basic Block & CFG, and SSA. For more information about LLVM IR, see the LLVM Language Reference Manual: https://llvm.org/docs/LangRef.html

In the instruction "%6 = add i32 %0, %1", add is the operator, i32 is the type, %0 and %1 are the inputs, and %6 is the result. IR provides a set of basic instructions, and the compiler expresses complicated operations by combining them. For example, the C expression "A * B + C" becomes one multiply instruction and one add instruction in LLVM IR, possibly together with type conversion instructions if the operand types differ. The following function adds its five i32 arguments (the unnamed arguments are %0 to %4 and the unnamed entry block implicitly takes %5, so the first result is %6):

```llvm
define i32 @ir_add(i32, i32, i32, i32, i32) {
  %6 = add i32 %0, %1
  %7 = add i32 %6, %2
  %8 = add i32 %7, %3
  %9 = add i32 %8, %4
  ret i32 %9
}
```
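For readers who think in C, the following C++ sketch computes the same result as @ir_add, with each statement annotated with the IR instruction it corresponds to. The second function shows the "A * B + C" case, assuming 32-bit int operands so that no type conversion is needed. One caveat: LLVM's add without flags wraps on overflow, whereas signed overflow is undefined behavior in C++, so the two agree only for inputs that do not overflow.

```cpp
#include <cassert>
#include <cstdint>

// Same computation as @ir_add: four chained `add i32` instructions.
// (Valid only while the sums do not overflow int32_t.)
int32_t ir_add(int32_t a0, int32_t a1, int32_t a2, int32_t a3, int32_t a4) {
    int32_t v6 = a0 + a1;  // %6 = add i32 %0, %1
    int32_t v7 = v6 + a2;  // %7 = add i32 %6, %2
    int32_t v8 = v7 + a3;  // %8 = add i32 %7, %3
    int32_t v9 = v8 + a4;  // %9 = add i32 %8, %4
    return v9;             // ret i32 %9
}

// A * B + C with i32 operands needs one multiply and one add:
//   %t = mul i32 %a, %b
//   %r = add i32 %t, %c
int32_t mul_add(int32_t a, int32_t b, int32_t c) {
    return a * b + c;
}
```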

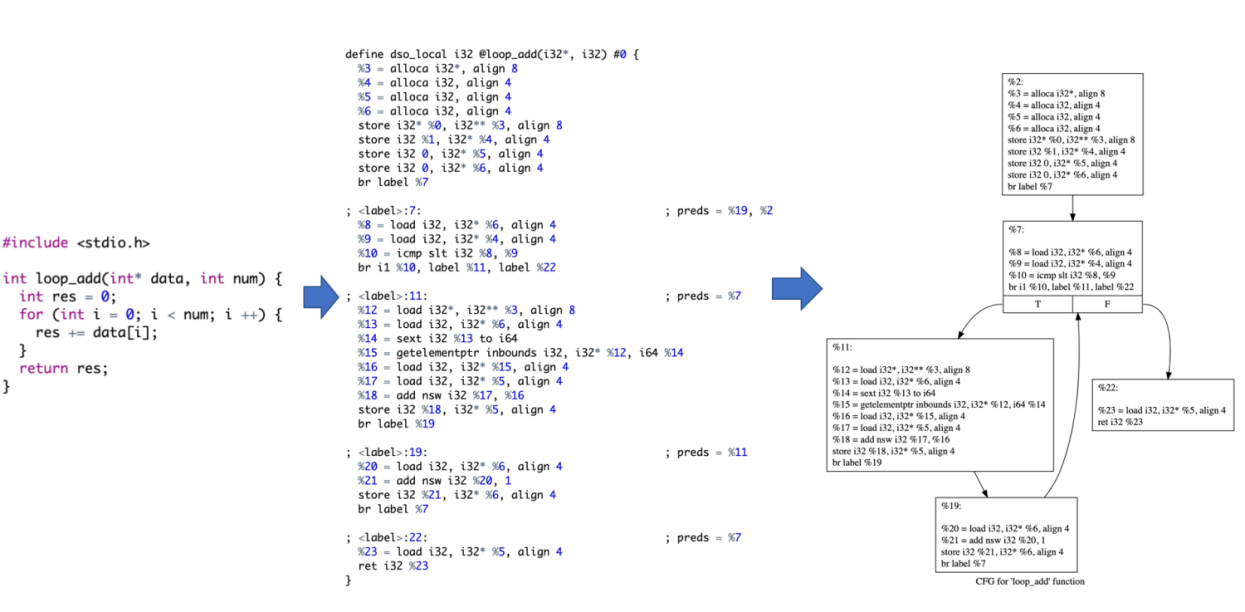

Most high-level languages have many branch and jump statements. In C, for example, the keywords for, while, and if all introduce them, and they let developers run different logic under different conditions. Assembly languages implement this control flow with conditional and unconditional jumps, and LLVM IR does the same. For example, in LLVM IR, "br label %7" always jumps to the label %7, which makes it an unconditional jump. "br i1 %10, label %11, label %22" is a conditional jump: if %10 is true, it jumps to the label %11; otherwise, it jumps to the label %22.
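How a loop is lowered into the two kinds of br can be imitated in C++ with goto. This is only an illustration, not a style anyone should use in production C++, and sum_to_n is a hypothetical example function: each C++ label plays the role of an IR label, a bare goto corresponds to an unconditional "br label %x", and "if (cond) goto A; else goto B;" corresponds to a conditional "br i1 %cond, label %A, label %B".

```cpp
#include <cassert>

// Computes 1 + 2 + ... + n using only jumps, mirroring how a `while`
// loop becomes conditional and unconditional branches in LLVM IR.
int sum_to_n(int n) {
    int i = 1;
    int sum = 0;
    goto loop_head;                 // br label %loop_head (unconditional)

loop_head:
    if (i > n) goto done;           // br i1 %cmp, label %done, label %loop_body
    else goto loop_body;

loop_body:
    sum += i;
    i += 1;
    goto loop_head;                 // br label %loop_head (back edge)

done:
    return sum;                     // ret i32 %sum
}
```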

With jump instructions in mind, we can introduce the Basic Block (BB). A Basic Block is a sequence of instructions that executes serially: only its last instruction may be a jump. The first instruction of a Basic Block is called its leading instruction (leader). Every Basic Block except the first one has a name (label); the first one can also have a name, but it is often unnecessary. For example, the code in the figure above contains five Basic Blocks. Basic Blocks give us a way to handle control logic, because they divide the code into separate units. Some compiler optimizations work within a single Basic Block, while others work across multiple Basic Blocks.
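Splitting a linear instruction stream into Basic Blocks is traditionally done with the "leader" algorithm: the first instruction is a leader, every jump target is a leader, and every instruction immediately after a jump is a leader; each block then runs from one leader up to the next. Here is a minimal C++ sketch with a made-up instruction encoding (Kind, Instr, and findLeaders are hypothetical, not LLVM types):

```cpp
#include <cassert>
#include <set>
#include <vector>

// Hypothetical instruction encoding: a plain instruction, an unconditional
// jump, or a conditional jump.
enum class Kind { Plain, Jump, CondJump };

struct Instr {
    Kind kind;
    int target = -1;  // index of the jump target; unused for Plain
};

// Returns the start index of every Basic Block in ascending order.
// Block k spans [starts[k], starts[k+1]); the last block runs to the end.
std::vector<int> findLeaders(const std::vector<Instr>& code) {
    std::set<int> leaders;
    const int n = static_cast<int>(code.size());
    if (n > 0) leaders.insert(0);               // rule 1: first instruction
    for (int i = 0; i < n; ++i) {
        if (code[i].kind == Kind::Plain) continue;
        leaders.insert(code[i].target);         // rule 2: every jump target
        if (i + 1 < n) leaders.insert(i + 1);   // rule 3: instruction after a jump
    }
    return std::vector<int>(leaders.begin(), leaders.end());
}
```

For the six-instruction stream "plain, conditional jump to 4, plain, jump to 5, plain, plain", the leaders are 0, 2, 4, and 5, which gives four Basic Blocks.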

A Control Flow Graph (CFG) is a graph made up of Basic Blocks and the jump relationships between them. For example, the code shown in the figure above has five Basic Blocks, and the arrows showing the jumps between them form a CFG. If a Basic Block has a single outgoing arrow, it ends with an unconditional jump; if it has two, it ends with a conditional jump. CFG is a simple, basic concept in compiler theory. The Data Flow Graph (DFG) builds on the CFG, and many advanced compiler optimization algorithms are based on DFGs. For Codegen development with LLVM, however, understanding the CFG is enough.
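Once the blocks are known, building the CFG only requires looking at each block's terminator, because in LLVM IR every Basic Block ends with exactly one terminator and there is no implicit fall-through. The sketch below uses a hypothetical encoding (Term, Block, and buildCfg are illustrative names, not LLVM classes) and computes the successor list of each block.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical terminator encoding for an LLVM-style block.
enum class Term { Br, CondBr, Ret };

struct Block {
    Term term;
    int trueSucc = -1;   // target of `br label %x`, or the true edge of a conditional br
    int falseSucc = -1;  // false edge of a conditional br
};

// Successor lists (the CFG's edges), indexed by block number.
std::vector<std::vector<int>> buildCfg(const std::vector<Block>& blocks) {
    std::vector<std::vector<int>> succ(blocks.size());
    for (std::size_t b = 0; b < blocks.size(); ++b) {
        switch (blocks[b].term) {
        case Term::Br:      // one arrow: unconditional jump
            succ[b] = {blocks[b].trueSucc};
            break;
        case Term::CondBr:  // two arrows: conditional jump
            succ[b] = {blocks[b].trueSucc, blocks[b].falseSucc};
            break;
        case Term::Ret:     // no successors
            break;
        }
    }
    return succ;
}
```

For example, the loop-addition function later in this article has four blocks (entry, loop, loop_body, final); encoded as Br to 1, CondBr to 3 or 2, Br to 1, and Ret, its CFG contains the back edge from loop_body to loop.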

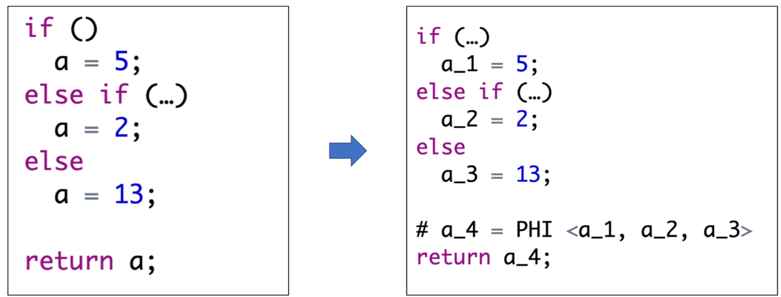

The figure above shows a short piece of C code (left) and its SSA version (right), which is how the compiler sees the code. In C, data is stored in variables, so variables are the core of data operations, and developers need to track the lifetime of each variable and where it is assigned or used. The compiler, however, only cares about data flow, so every assignment produces a new l-value. For example, the code on the left has only one variable a, while the code on the right has four variables, because the data in a has four versions: each assignment creates a new variable, and the final Phi creates one more. In SSA, each variable represents one version of the data. In other words, a high-level language is centered on variables, while SSA is centered on data, and every assignment in SSA produces a new version of the data. When writing IR, always remember that an IR variable is not like a variable in a high-level language: it represents a single version of the data.

The Phi node is an important concept in SSA. In this example, the value of a_4 depends on which branch was executed: if the first branch ran, then a_4 = a_1, and so on. Phi selects the appropriate version of the data according to the Basic Block from which control arrived. LLVM IR requires developers to write Phi nodes themselves. With loops and conditional branches, many Phi nodes often have to be written by hand, which is one of the main logical difficulties of writing LLVM IR.
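For straight-line code without branches, converting to SSA is just versioning: each assignment creates a new name, and each use refers to the most recent version. The helper below (toSsa and Stmt are hypothetical names, and operators are omitted because they do not affect renaming) does exactly that, using the a_1, a_2 naming from the figure. It deliberately leaves out Phi insertion, which becomes necessary once branches merge. In practice, LLVM's mem2reg pass builds SSA form, including Phi nodes, for code that keeps variables in alloca'd stack slots, which is one way to avoid writing Phi nodes by hand.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// One statement: target = some combination of operands.
struct Stmt {
    std::string target;
    std::vector<std::string> operands;
};

// Renames straight-line code into SSA form: "a" becomes "a_1", "a_2", ...
// Each use is rewritten to the version that is current at that point;
// names that were never assigned (inputs, constants) are left unchanged.
std::vector<Stmt> toSsa(const std::vector<Stmt>& code) {
    std::map<std::string, int> version;  // latest version number per variable
    std::vector<Stmt> out;
    for (const Stmt& s : code) {
        Stmt r;
        // Rename uses first, so that `a = a + y` reads the previous version.
        for (const std::string& op : s.operands) {
            auto it = version.find(op);
            r.operands.push_back(it == version.end()
                                     ? op
                                     : op + "_" + std::to_string(it->second));
        }
        int v = ++version[s.target];  // every assignment creates a new version
        r.target = s.target + "_" + std::to_string(v);
        out.push_back(r);
    }
    return out;
}
```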

The best way to get familiar with LLVM IR is to write several programs with IR. We recommend taking 30 minutes to one hour to read the official manual to get familiar with what types of instructions are available before writing. Next, we will familiarize ourselves with the entire LLVM IR programming process through two simple cases.

The following function adds up the first n elements of an i32 array (the array pointer is %0 and n is %1). Besides the unnamed entry block %2, it contains three Basic Blocks: loop, loop_body, and final. loop is the loop header, which checks whether to exit; loop_body accumulates one element per iteration; and final returns the result. The two Phi nodes at the top of loop implement the loop variable %i and the running result %res.
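In C++ terms, the IR function below corresponds to the following loop (a comparison sketch; the name loopadd is ours). The loop-carried variables i and res are exactly what the two Phi nodes model: each is 0 when control arrives from the entry block %2, and takes its updated value (%newi or %new_res) when control comes back from loop_body.

```cpp
#include <cassert>
#include <cstdint>

// Same computation as @ir_loopadd_phi: returns arr[0] + ... + arr[n-1].
int32_t loopadd(const int32_t* arr, int32_t n) {
    int32_t i = 0;           // %i:   0 from %2, %newi from loop_body
    int32_t res = 0;         // %res: 0 from %2, %new_res from loop_body
    while (i < n) {          // loop: exits to final when icmp sge i32 %i, %1 is true
        res = res + arr[i];  // loop_body: getelementptr + load + add (%new_res)
        i = i + 1;           // loop_body: %newi = add i32 %i, 1
    }
    return res;              // final: ret i32 %res
}
```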

```llvm
define i32 @ir_loopadd_phi(i32*, i32) {
  br label %loop

loop:
  %i = phi i32 [0, %2], [%newi, %loop_body]
  %res = phi i32 [0, %2], [%new_res, %loop_body]
  %break_flag = icmp sge i32 %i, %1
  br i1 %break_flag, label %final, label %loop_body

loop_body:
  %addr = getelementptr inbounds i32, i32* %0, i32 %i
  %val = load i32, i32* %addr, align 4
  %new_res = add i32 %res, %val
  %newi = add i32 %i, 1
  br label %loop

final:
  ret i32 %res
}
```

The following function implements bubble sort. It contains two nested loops, which makes it noticeably harder to write in LLVM IR than a single loop. Instead of Phi nodes, it keeps r_flag and j in stack memory allocated with alloca and reads and writes them with load and store. If you can implement bubble sort in LLVM IR, you have essentially grasped the overall logic of LLVM IR.

```llvm
define void @ir_bubble(i32*, i32) {
  %r_flag_addr = alloca i32, align 4
  %j = alloca i32, align 4
  %r_flag_ini = add i32 %1, -1
  store i32 %r_flag_ini, i32* %r_flag_addr, align 4
  br label %out_loop_head

out_loop_head:
  ; check break
  store i32 0, i32* %j, align 4
  %tmp_r_flag = load i32, i32* %r_flag_addr, align 4
  %out_break_flag = icmp sle i32 %tmp_r_flag, 0
  br i1 %out_break_flag, label %final, label %in_loop_head

in_loop_head:
  ; check break
  %tmpj_1 = load i32, i32* %j, align 4
  %in_break_flag = icmp sge i32 %tmpj_1, %tmp_r_flag
  br i1 %in_break_flag, label %out_loop_tail, label %in_loop_body

in_loop_body:
  ; read & swap
  %tmpj_left = load i32, i32* %j, align 4
  %tmpj_right = add i32 %tmpj_left, 1
  %left_addr = getelementptr inbounds i32, i32* %0, i32 %tmpj_left
  %right_addr = getelementptr inbounds i32, i32* %0, i32 %tmpj_right
  %left_val = load i32, i32* %left_addr, align 4
  %right_val = load i32, i32* %right_addr, align 4
  ; swap check
  %swap_flag = icmp sge i32 %left_val, %right_val
  %left_res = select i1 %swap_flag, i32 %right_val, i32 %left_val
  %right_res = select i1 %swap_flag, i32 %left_val, i32 %right_val
  store i32 %left_res, i32* %left_addr, align 4
  store i32 %right_res, i32* %right_addr, align 4
  br label %in_loop_end

in_loop_end:
  ; update j
  %tmpj_2 = load i32, i32* %j, align 4
  %newj = add i32 %tmpj_2, 1
  store i32 %newj, i32* %j, align 4
  br label %in_loop_head

out_loop_tail:
  ; update r_flag
  %tmp_r_flag_1 = load i32, i32* %r_flag_addr, align 4
  %new_r_flag = sub i32 %tmp_r_flag_1, 1
  store i32 %new_r_flag, i32* %r_flag_addr, align 4
  br label %out_loop_head

final:
  ret void
}
```

We can compile the LLVM IR above into an object file with clang and link it with a program written in C, which can then call these functions normally. The examples above only use basic data types such as i32 and pointers to i32. LLVM IR also supports aggregate types such as struct, which make more complex functionality possible.
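For comparison, the following C++ function (the name bubble is ours) follows @ir_bubble block by block. In the IR, r_flag and j live in stack slots (alloca plus load/store), which is why no Phi nodes are needed. The swap is done without branches by two select instructions; the C++ conditional operator plays the role of select here, although a C++ compiler is free to lower it either way.

```cpp
#include <cassert>
#include <cstdint>

// Mirrors @ir_bubble: sorts arr[0..n-1] in ascending order.
void bubble(int32_t* arr, int32_t n) {
    int32_t r_flag = n - 1;                   // %r_flag_ini = add i32 %1, -1
    while (true) {
        int32_t j = 0;                        // out_loop_head: store i32 0, i32* %j
        if (r_flag <= 0) return;              // icmp sle -> final
        while (j < r_flag) {                  // in_loop_head: exit when j >= r_flag
            int32_t left = arr[j];            // in_loop_body: load left
            int32_t right = arr[j + 1];       // in_loop_body: load right
            bool swap = left >= right;        // %swap_flag = icmp sge
            arr[j] = swap ? right : left;     // select -> %left_res
            arr[j + 1] = swap ? left : right; // select -> %right_res
            ++j;                              // in_loop_end: update j
        }
        --r_flag;                             // out_loop_tail: update r_flag
    }
}
```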

A compiler essentially calls a series of APIs to generate code that corresponds to its input, and LLVM Codegen is no exception. In LLVM, functions, Basic Blocks, and individual instructions and values are all represented by C++ classes (Function, BasicBlock, Instruction, Value). Implementing Codegen with the LLVM API means using these internal data structures to build the IR that the task requires.

```cpp
// Builder is an llvm::IRBuilder<> and fooFunc an llvm::Function* created earlier.
// createArith, createBB, createIfElse, ValList and BBList are helper functions
// and types that are not shown here; they are not part of the LLVM API.
Value *constant = Builder.getInt32(16);
Value *Arg1 = fooFunc->arg_begin();            // first argument of fooFunc
Value *val = createArith(Builder, Arg1, constant);
Value *val2 = Builder.getInt32(100);

// Condition: val < 100 (unsigned comparison)
Value *Compare = Builder.CreateICmpULT(val, val2, "cmptmp");
Value *Condition = Builder.CreateICmpNE(Compare, Builder.getInt1(0), "ifcond");

ValList VL;
VL.push_back(Condition);
VL.push_back(Arg1);

// Basic Blocks for the then branch, the else branch, and the merge point
BasicBlock *ThenBB = createBB(fooFunc, "then");
BasicBlock *ElseBB = createBB(fooFunc, "else");
BasicBlock *MergeBB = createBB(fooFunc, "ifcont");

BBList List;
List.push_back(ThenBB);
List.push_back(ElseBB);
List.push_back(MergeBB);

// Helper that emits the if/else control flow across the three blocks
Value *v = createIfElse(Builder, List, VL);
```

The code above is an example of implementing Codegen with the LLVM API, that is, of writing IR from C++. If you know how to write IR, you only need to learn this set of APIs. It provides basic data structures such as instructions, functions, Basic Blocks, and the IR builder, and we call the corresponding functions to create these objects. In general, we first define the function prototype, including the function name, parameter list, and return type. Then, based on what the function does, we decide which Basic Blocks are needed and how they jump to one another, and create those Basic Blocks. Finally, we add instructions to each Basic Block in order. Logically, this process mirrors writing LLVM IR by hand.
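To make the "prototype, then Basic Blocks, then instructions" workflow concrete without requiring an LLVM installation, here is a toy model in plain C++. ToyFunction, ToyBlock, ToyBuilder, print, and buildAdd3 are hypothetical stand-ins that only mimic the roles of LLVM's Function, BasicBlock, and IRBuilder, and they simply produce textual IR. With the real LLVM C++ API, the corresponding steps would use calls such as FunctionType::get and Function::Create for the prototype, BasicBlock::Create for the blocks, and IRBuilder<>::SetInsertPoint, CreateAdd, and CreateRet for the instructions.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical toy classes: not LLVM types, they only store instruction text.
struct ToyBlock {
    std::string label;
    std::vector<std::string> insts;
};

struct ToyFunction {
    std::string name;
    int numArgs;                  // all arguments are i32, named %0, %1, ...
    std::vector<ToyBlock> blocks;
};

// Appends instructions to the current block and hands out fresh value names.
class ToyBuilder {
public:
    explicit ToyBuilder(ToyFunction& f) : f_(f) {}

    // Step 2: create a Basic Block and make it the insertion point.
    void createBlockAndSetInsertPoint(const std::string& label) {
        f_.blocks.push_back(ToyBlock{label, {}});
        cur_ = f_.blocks.size() - 1;
    }

    // Step 3: append instructions; returns the name of the result value.
    std::string createAdd(const std::string& lhs, const std::string& rhs) {
        std::string v = "%tmp" + std::to_string(next_++);
        f_.blocks[cur_].insts.push_back(v + " = add i32 " + lhs + ", " + rhs);
        return v;
    }

    void createRet(const std::string& v) {
        f_.blocks[cur_].insts.push_back("ret i32 " + v);
    }

private:
    ToyFunction& f_;
    std::size_t cur_ = 0;
    int next_ = 0;
};

// Prints the function as LLVM-style textual IR.
std::string print(const ToyFunction& f) {
    std::string out = "define i32 @" + f.name + "(";
    for (int i = 0; i < f.numArgs; ++i) out += (i ? ", i32" : "i32");
    out += ") {\n";
    for (const ToyBlock& b : f.blocks) {
        out += b.label + ":\n";
        for (const std::string& s : b.insts) out += "  " + s + "\n";
    }
    return out + "}\n";
}

// Builds a + b + c following the three steps described in the text.
std::string buildAdd3() {
    ToyFunction f{"add3", 3, {}};              // step 1: prototype (3 x i32 -> i32)
    ToyBuilder b(f);
    b.createBlockAndSetInsertPoint("entry");   // step 2: Basic Blocks
    std::string t0 = b.createAdd("%0", "%1");  // step 3: instructions
    std::string t1 = b.createAdd(t0, "%2");
    b.createRet(t1);
    return print(f);
}
```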

If we generate some simple functions this way and compare them with equivalent versions written in C, we find that the code generated through LLVM IR is not faster than the C version. On the one hand, the computer ultimately executes machine code, and C is already very close to assembly: programmers who understand the underlying hardware can infer what assembly a piece of C code will produce. On the other hand, modern compilers perform many optimizations, some of which significantly reduce the programmer's burden. Therefore, for the same logic, Codegen through LLVM IR performs no better than handwritten C. LLVM Codegen also has drawbacks of its own: you need to be familiar with LLVM's characteristics to make full use of it.

Knowing the disadvantages of LLVM Codegen, we can analyze its advantages and choose suitable scenarios. The following section describes the scenarios in which LLVM Codegen is a good fit during development.

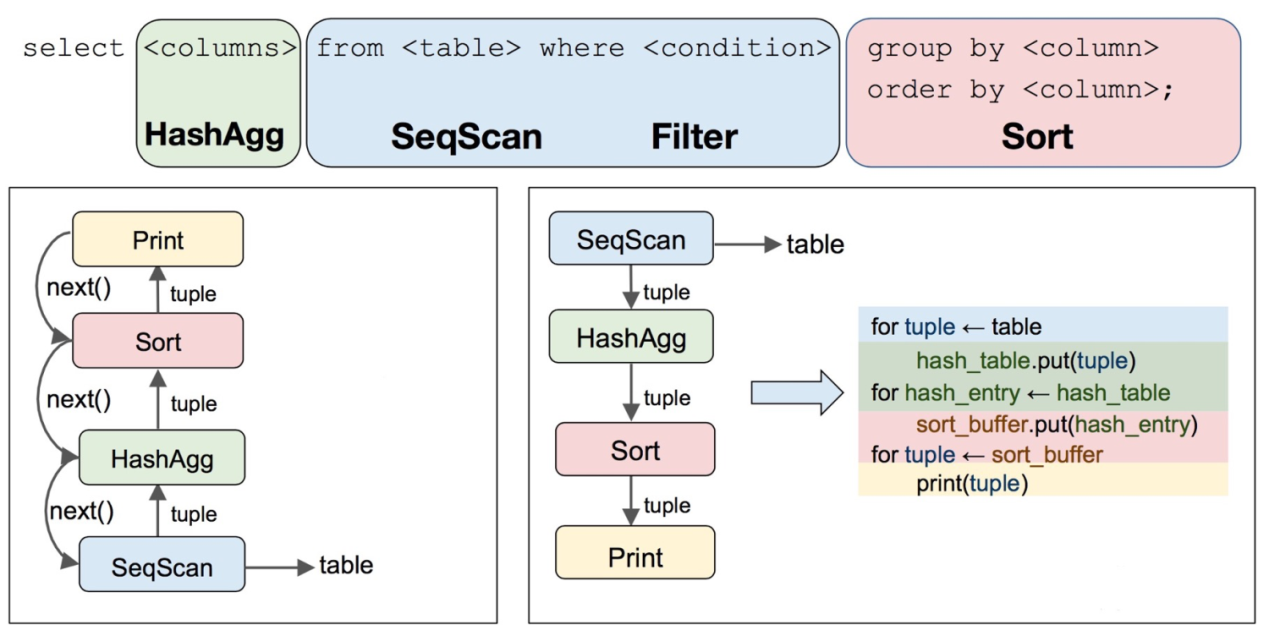

In AnalyticDB PostgreSQL, the team uses LLVM to process expressions. Next, we compare the PostgreSQL database with the cloud-native data warehouse AnalyticDB PostgreSQL to show how LLVM is applied.

PostgreSQL executes expressions interpretively by composing them from functions. It implements a large number of C functions for operations such as addition, subtraction, and number comparison. When the plan is generated, PostgreSQL selects the corresponding functions according to the expression's operators and data types, saves pointers to them, and calls them during execution. For a filter condition such as "a > 10 and b < 5", assuming that a and b are int32, PostgreSQL effectively calls "Int8AndOp(Int32GT(a, 10), Int32LT(b, 5))", assembling the expression like building blocks. This scheme has two performance problems. First, it leads to many function calls, which adds overhead. Second, it requires a uniform function interface, so values must be converted on the way into and out of each function, which is another source of overhead. Odyssey instead uses LLVM Codegen to produce minimal code. After a SQL statement arrives, the database knows the operators in each expression and the types of the input data, so it only needs to select the corresponding IR instructions. For this expression, three IR instructions are enough. The expression is then wrapped in a function that is called during execution, so the multiple function calls collapse into one and the total number of executed instructions drops significantly.

```sql
-- Sample SQL
select count(*) from t where a > 10 and b < 5;
```

```c
// PostgreSQL interpretive execution scheme: multiple function calls
result = Int8AndOp(Int32GT(a, 10), Int32LT(b, 5));
```

```llvm
; AnalyticDB PostgreSQL scheme: use LLVM Codegen to generate minimal code
; (a and b are signed int32, so signed comparisons are used)
%res1 = icmp sgt i32 %a, 10
%res2 = icmp slt i32 %b, 5
%res = and i1 %res1, %res2
```

In the database, expressions mainly appear in a few places. One is filter conditions, which usually appear in WHERE clauses. Another is the output list, which follows SELECT. Some operators, such as join and aggregation, may also contain complex expressions in their conditions. Expression processing therefore appears throughout the database execution engine. In AnalyticDB PostgreSQL, the development team built an expression processing framework that handles these expressions with LLVM Codegen, improving the overall performance of the execution engine.
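The difference between the two execution schemes compared above can be sketched in plain C++. The interpretive version builds the predicate from generic function pointers that share one uniform interface; the "compiled" version shows the shape of the code that Codegen effectively produces, with operators and types fixed and no calls. This is a hypothetical simplification: Datum, Node, eval, int32_gt, and the other names are ours, not PostgreSQL's actual structures, and no LLVM is involved, since the second function is written by hand only to show what generated code amounts to.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Uniform interface: every function returns int64_t, so values are
// converted on the way in and out (one source of interpretive overhead).
using Datum = int64_t;
struct Row { int32_t a, b; };

// A node of the interpreted expression tree: one indirect call per node.
struct Node {
    Datum (*fn)(const Node&, const Row&);
    std::vector<Node> args;   // child expressions
    Datum constant = 0;       // used only by constant nodes
};

Datum eval(const Node& n, const Row& r) { return n.fn(n, r); }
Datum col_a(const Node&, const Row& r) { return r.a; }
Datum col_b(const Node&, const Row& r) { return r.b; }
Datum const_value(const Node& n, const Row&) { return n.constant; }
Datum int32_gt(const Node& n, const Row& r) {
    return static_cast<int32_t>(eval(n.args[0], r)) > static_cast<int32_t>(eval(n.args[1], r));
}
Datum int32_lt(const Node& n, const Row& r) {
    return static_cast<int32_t>(eval(n.args[0], r)) < static_cast<int32_t>(eval(n.args[1], r));
}
Datum bool_and(const Node& n, const Row& r) {
    return eval(n.args[0], r) != 0 && eval(n.args[1], r) != 0;
}

// Interpretive scheme: a > 10 AND b < 5 assembled like building blocks.
Node makeFilter() {
    Node a{col_a, {}}, b{col_b, {}};
    Node ten{const_value, {}, 10}, five{const_value, {}, 5};
    return Node{bool_and, {Node{int32_gt, {a, ten}}, Node{int32_lt, {b, five}}}};
}

bool interpretedFilter(const Node& expr, const Row& r) { return eval(expr, r) != 0; }

// Codegen-style result: one function, two signed comparisons and an AND.
bool compiledFilter(const Row& r) { return r.a > 10 && r.b < 5; }
```

Both functions give the same answer for every row; the difference is that the interpreted version makes several indirect calls and Datum conversions per row, which is the overhead the generated code removes.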

LLVM is a popular open-source compiler framework that has been used in recent years to accelerate databases, AI, and other systems. Because compiler theory has a steep learning curve, LLVM is hard to learn. Moreover, from an engineering perspective, finding suitable acceleration scenarios requires an accurate understanding of LLVM's engineering and performance characteristics. AnalyticDB PostgreSQL, the cloud-native data warehouse of Alibaba Cloud Database, implements a runtime expression processing framework based on LLVM, which improves system performance for complex data analysis.
