By Zisu

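JavaScript is a dynamically typed language, so ahead-of-time (AOT) compilation has little type information to optimize with. Engines therefore rely mainly on just-in-time (JIT) compilation, which optimizes code based on the types it observes at runtime.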

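Mozilla launched asm.js to let engines compile JavaScript more efficiently. Like TypeScript, it carries static type information, but unlike TypeScript its syntax is a strict subset of JavaScript: types are expressed through coercion idioms such as x | 0. An engine that recognizes asm.js can compile it ahead of time, while any other engine still runs it as ordinary JavaScript.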
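To make this concrete, below is the add function used later in this article (it returns a * a + b) written in asm.js style. It is an illustrative sketch, and the module name AsmAddModule is made up. The | 0 coercions act as type annotations telling the engine that the parameters and the result are 32-bit integers, and Math.imul performs 32-bit integer multiplication.

```javascript
// asm.js sketch of add(a, b) = a * a + b.
// "use asm" asks the engine to validate and AOT-compile the module;
// if validation fails, the code still runs as ordinary JavaScript.
function AsmAddModule(stdlib) {
  "use asm";
  var imul = stdlib.Math.imul; // 32-bit integer multiply from the standard library

  function add(a, b) {
    a = a | 0; // parameter annotation: a is a 32-bit int
    b = b | 0; // parameter annotation: b is a 32-bit int
    return (imul(a, a) + b) | 0; // return annotation: 32-bit signed int
  }

  return { add: add };
}

const asmAdd = AsmAddModule(globalThis).add;
console.log(asmAdd(4, 1)); // 17
console.log(asmAdd(65536, 0)); // 0: the product wraps around at 2^32
```

The wrap-around in the second call is the point of the annotations: the engine may treat these values as machine integers rather than generic JavaScript numbers.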

After Mozilla launched asm.js, the other major browser vendors saw the value of the idea and joined forces with Mozilla to design WebAssembly.

WebAssembly is a standardized binary instruction format (bytecode). It is not a native instruction set that a CPU can execute directly, so it needs a virtual machine, such as the one built into the JavaScript engine of every major browser, to run.
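A small sketch of what "bytecode that needs a VM" means in practice, assuming any runtime with the standard WebAssembly JS API (a modern browser or Node.js): every wasm binary starts with the 4-byte magic number \0asm followed by a 4-byte version number. Those 8 bytes alone form a valid, empty module that the engine's VM accepts, while the bytes mean nothing to a CPU by themselves.

```javascript
// The smallest valid wasm binary: magic number "\0asm" + version 1 (little-endian u32).
const emptyModuleBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, // magic: "\0asm"
  0x01, 0x00, 0x00, 0x00, // version: 1
]);

// The engine's wasm VM validates and compiles the bytes.
const isValid = WebAssembly.validate(emptyModuleBytes);
const emptyModule = new WebAssembly.Module(emptyModuleBytes);
console.log(isValid); // true
console.log(WebAssembly.Module.exports(emptyModule).length); // 0: nothing is exported

// Corrupting the magic number makes the VM reject the bytes.
const corrupted = emptyModuleBytes.slice();
corrupted[1] = 0x62;
console.log(WebAssembly.validate(corrupted)); // false
```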

Several mature toolchains are currently available.

Let's take emscripten as an example. Because it is built on LLVM, code in languages that use LLVM as their compiler backend can, in principle, be compiled to wasm.

How does this work? Let's look at LLVM first.

LLVM is a compiler infrastructure. Instead of compiling each language directly into machine code, it uses a language-specific frontend to compile source code into an intermediate representation (IR), and then a target-specific backend to compile that IR into machine code for the target. The advantage of this design is that supporting a new architecture only requires adding a new backend, and every existing frontend benefits from it.

WebAssembly is one of these backend targets, so, in principle, any LLVM-based language can be compiled into WebAssembly.

This means we can also use LLVM directly to compile C to wasm and walk through the whole process step by step.

brew install llvm

brew link --force llvm

llc --version

# Ensure that wasm32 is in the list of registered targets

Let's suppose we have a C file, add.c:

int add(int a, int b) {

    return a * a + b;

}

Step 1: Compile the C file to LLVM IR with clang.

# --target=wasm32: compile for the 32-bit WebAssembly target

# -emit-llvm: generate LLVM IR instead of machine code

# -c: compile only (no linking)

# -S: generate human-readable textual IR (.ll) instead of binary bitcode (.bc)

clang --target=wasm32 -emit-llvm -c -S add.c

We will get an add.ll file, which is LLVM IR and looks like this:

; ModuleID = 'add.c'

source_filename = "add.c"

target datalayout = "e-m:e-p:32:32-p10:8:8-p20:8:8-i64:64-n32:64-S128-ni:1:10:20"

target triple = "wasm32"

; Function Attrs: noinline nounwind optnone

define hidden i32 @add(i32 noundef %0, i32 noundef %1) #0 {

%3 = alloca i32, align 4

%4 = alloca i32, align 4

store i32 %0, ptr %3, align 4

store i32 %1, ptr %4, align 4

%5 = load i32, ptr %3, align 4

%6 = load i32, ptr %3, align 4

%7 = mul nsw i32 %5, %6

%8 = load i32, ptr %4, align 4

%9 = add nsw i32 %7, %8

ret i32 %9

}

attributes #0 = { noinline nounwind optnone "frame-pointer"="none" "min-legal-vector-width"="0" "no-trapping-math"="true" "stack-protector-buffer-size"="8" "target-cpu"="generic" }

!llvm.module.flags = !{!0}

!llvm.ident = !{!1}

!0 = !{i32 1, !"wchar_size", i32 4}

!1 = !{!"Homebrew clang version 15.0.7"}

Step 2: Compile the LLVM IR to an object file:

llc -march=wasm32 -filetype=obj add.ll

This produces add.o, a wasm object file containing all the compiled code for this C file. It cannot be run yet. It is in binary format, so we need tools to inspect it, such as the WebAssembly Binary Toolkit (wabt).

brew install wabt

wasm-objdump -x add.o

The output looks like this:

add.o: file format wasm 0x1

Section Details:

Type[1]:

- type[0](i32, i32) -> i32

Import[2]:

- memory[0] pages: initial=0 <- env.__linear_memory

- global[0] i32 mutable=1 <- env.__stack_pointer

Function[1]:

- func[0] sig=0 <add>

Code[1]:

- func[0] size=44 <add>

Custom:

- name: "linking"

- symbol table [count=2]

- 0: F <add> func=0 [ binding=global vis=hidden ]

- 1: G <env.__stack_pointer> global=0 [ undefined binding=global vis=default ]

Custom:

- name: "reloc.CODE"

- relocations for section: 3 (Code) [1]

- R_WASM_GLOBAL_INDEX_LEB offset=0x000006(file=0x00005e) symbol=1 <env.__stack_pointer>

Custom:

- name: "producers"

The add function is defined here, but the object file also carries other information, such as imports and relocation entries, that is consumed by the next step: linking.
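The import list can also be read from JavaScript. As a sketch, the code below hand-encodes a module that declares the same two imports as add.o (a memory env.__linear_memory and a mutable i32 global env.__stack_pointer) and lists them with the standard WebAssembly.Module.imports function. The helper names encodeULEB128, encodeName, and encodeSection are made up for this example; the byte layout follows the WebAssembly binary format. This is not add.o itself, which also contains code and custom sections.

```javascript
// Unsigned LEB128 encoding, used by the wasm binary format for counts and sizes.
function encodeULEB128(n) {
  const out = [];
  do {
    let byte = n & 0x7f;
    n >>>= 7;
    if (n !== 0) byte |= 0x80; // more bytes follow
    out.push(byte);
  } while (n !== 0);
  return out;
}

// Names are length-prefixed UTF-8 strings.
function encodeName(s) {
  const bytes = [...new TextEncoder().encode(s)];
  return [...encodeULEB128(bytes.length), ...bytes];
}

// A section is: id byte, body size, body.
function encodeSection(id, body) {
  return [id, ...encodeULEB128(body.length), ...body];
}

const importSection = encodeSection(2, [
  ...encodeULEB128(2), // two imports
  ...encodeName("env"), ...encodeName("__linear_memory"), 0x02, 0x00, 0x00, // memory, min 0 pages, no max
  ...encodeName("env"), ...encodeName("__stack_pointer"), 0x03, 0x7f, 0x01, // global, i32, mutable
]);

const moduleBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic "\0asm" + version 1
  ...importSection,
]);

// Compiling is enough to inspect imports; no instantiation is needed.
const compiled = new WebAssembly.Module(moduleBytes);
const declaredImports = WebAssembly.Module.imports(compiled);
for (const { module: moduleName, name: field, kind } of declaredImports) {
  console.log(`${moduleName}.${field} (${kind})`);
}
// env.__linear_memory (memory)
// env.__stack_pointer (global)
```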

Step 3: Linking

In general, a linker combines multiple object files into an executable file.

LLVM's linker is called lld. It comes in several flavors for different targets (for example, ld.lld for ELF and wasm-ld for WebAssembly). We use wasm-ld here:

# --no-entry: add.c has no entry point (no main function); it is a library

# --export-all: export all symbols, not only those explicitly marked for export

wasm-ld --no-entry --export-all -o add.wasm add.o

This gives us the final wasm product, add.wasm.
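add.wasm is just a byte sequence in the wasm binary format. As a self-contained sketch that runs outside the browser (assuming Node.js or any runtime with the WebAssembly JS API), the bytes below are a hand-encoded module that exports only an add function whose body is local.get 0, local.get 0, i32.mul, local.get 1, i32.add, the same instructions the optimized clang build produces later in this article. It is not the exact output of wasm-ld, which also exports memory and several globals.

```javascript
// Hand-encoded module: (func (export "add") (param i32 i32) (result i32) ...)
const addModuleBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic "\0asm" + version 1
  // Type section (id 1): one func type (i32, i32) -> i32
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f,
  // Function section (id 3): one function using type 0
  0x03, 0x02, 0x01, 0x00,
  // Export section (id 7): export function 0 under the name "add"
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00,
  // Code section (id 10): one body of 10 bytes, no locals
  0x0a, 0x0c, 0x01, 0x0a, 0x00,
  0x20, 0x00, // local.get 0
  0x20, 0x00, // local.get 0
  0x6c,       // i32.mul
  0x20, 0x01, // local.get 1
  0x6a,       // i32.add
  0x0b,       // end
]);

// Synchronous compile + instantiate; fine for tiny modules (browsers prefer the async APIs).
const addModule = new WebAssembly.Module(addModuleBytes);
const addInstance = new WebAssembly.Instance(addModule, {}); // no imports needed
console.log(addInstance.exports.add(4, 1)); // 17 (4 * 4 + 1)
```

To run a real add.wasm in Node.js instead, the bytes could come from require("node:fs").readFileSync("add.wasm"); the unoptimized build shown in this article declares no imports, so an empty import object would suffice.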

Step 4: Run the wasm

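We create an HTML file next to add.wasm to run it. The page must be served over HTTP (for example, from a local development server), because WebAssembly.instantiateStreaming requires the response to have the application/wasm MIME type; opening the file directly from disk will not work.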

<!DOCTYPE html>

<script type="module">

async function init() {

const { instance } = await WebAssembly.instantiateStreaming(fetch("./add.wasm"));

console.log(instance.exports.add(4, 1));

}

init(); // logs 17 (4 * 4 + 1)

</script>

The process above is a bit involved, but it shows what happens at each stage. The same operations can be completed in one step:

# -nostdlib: do not link against the C standard library

# -Wl,X: pass option X through to the linker (wasm-ld)

clang --target=wasm32 -nostdlib -Wl,--no-entry -Wl,--export-all -o add.wasm add.c

We can use wasm2wat (included in wabt, mentioned above) to see the module in its S-expression text format (wat):

wasm2wat add.wasm

(module

(type (;0;) (func))

(type (;1;) (func (param i32 i32) (result i32)))

(func $__wasm_call_ctors (type 0))

(func $add (type 1) (param i32 i32) (result i32)

(local i32)

global.get $__stack_pointer

i32.const 16

i32.sub

local.tee 2

local.get 0

i32.store offset=12

local.get 2

local.get 1

i32.store offset=8

local.get 2

i32.load offset=12

local.get 2

i32.load offset=12

i32.mul

local.get 2

i32.load offset=8

i32.add)

(memory (;0;) 2)

(global $__stack_pointer (mut i32) (i32.const 66560))

(global (;1;) i32 (i32.const 1024))

(global (;2;) i32 (i32.const 1024))

(global (;3;) i32 (i32.const 1024))

(global (;4;) i32 (i32.const 66560))

(global (;5;) i32 (i32.const 131072))

(global (;6;) i32 (i32.const 0))

(global (;7;) i32 (i32.const 1))

(export "memory" (memory 0))

(export "__wasm_call_ctors" (func $__wasm_call_ctors))

(export "add" (func $add))

(export "__dso_handle" (global 1))

(export "__data_end" (global 2))

(export "__global_base" (global 3))

(export "__heap_base" (global 4))

(export "__heap_end" (global 5))

(export "__memory_base" (global 6))

(export "__table_base" (global 7)))

The S-expression format is much easier to read. It shows the function definitions, local variables, exports, and the linker-defined globals. However, even this simple add function needs many instructions, because no optimization has been applied yet: the parameters are stored to a stack frame in linear memory and then loaded back. Let's turn on optimization:

# -O3: optimize aggressively

# -flto: emit LLVM bitcode so that the linker can perform link-time optimization (LTO)

# -Wl,--lto-O3: have wasm-ld run LTO at optimization level 3

clang --target=wasm32 -O3 -flto -nostdlib -Wl,--no-entry -Wl,--export-all -Wl,--lto-O3 -o add.wasm add.c

Link-time optimization has little effect here because there is only one source file; it pays off when many files are linked together.

After optimization, the output is much smaller: add no longer touches the stack pointer or linear memory and works directly on its parameters.

(module

(type (;0;) (func))

(type (;1;) (func (param i32 i32) (result i32)))

(func (;0;) (type 0)

nop)

(func (;1;) (type 1) (param i32 i32) (result i32)

local.get 0

local.get 0

i32.mul

local.get 1

i32.add)

(memory (;0;) 2)

(global (;0;) i32 (i32.const 1024))

(global (;1;) i32 (i32.const 1024))

(global (;2;) i32 (i32.const 1024))

(global (;3;) i32 (i32.const 66560))

(global (;4;) i32 (i32.const 131072))

(global (;5;) i32 (i32.const 0))

(global (;6;) i32 (i32.const 1))

(export "memory" (memory 0))

(export "__wasm_call_ctors" (func 0))

(export "add" (func 1))

(export "__dso_handle" (global 0))

(export "__data_end" (global 1))

(export "__global_base" (global 2))

(export "__heap_base" (global 3))

(export "__heap_end" (global 4))

(export "__memory_base" (global 5))

(export "__table_base" (global 6)))

So far, everything has gone well. However, once the C code uses libc, things can become very troublesome.

This is because most libc implementations are written for POSIX environments and implement much of their functionality through syscalls into the OS kernel. A wasm module running alongside JavaScript has no kernel to call, so to make libc work in wasm, these POSIX syscalls have to be implemented in JavaScript and provided to the module as imports. That is difficult and a lot of work. Fortunately, emscripten already does this for us; see the emscripten documentation for details.
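To give a feel for what implementing a syscall in JavaScript involves, here is a sketch of one: a host-side fd_write that a libc write or printf path could be wired to as a wasm import. The signature (fd, iovs, iovs_len, nwritten) -> errno and the errno values follow WASI's fd_write; this is an illustration, not emscripten's actual implementation, and makeFdWrite and sink are hypothetical names. Because wasm can only pass numbers, the function receives pointers and lengths and must read the iovec array and the bytes out of linear memory itself.

```javascript
// WASI errno values used below.
const ERRNO_SUCCESS = 0;
const ERRNO_BADF = 8;

// Hypothetical host implementation of fd_write(fd, iovs, iovs_len, nwritten) -> errno.
// `memory` is the module's WebAssembly.Memory; `sink(fd, text)` receives the output.
function makeFdWrite(memory, sink) {
  const decoder = new TextDecoder();
  return function fd_write(fd, iovs, iovsLen, nwrittenPtr) {
    if (fd !== 1 && fd !== 2) return ERRNO_BADF; // only stdout/stderr in this sketch
    // Re-create the view on every call: memory.buffer changes when memory grows.
    const view = new DataView(memory.buffer);
    let written = 0;
    for (let i = 0; i < iovsLen; i++) {
      // wasm32 iovec: { u32 buf; u32 buf_len }, 8 bytes, little-endian.
      const buf = view.getUint32(iovs + i * 8, true);
      const len = view.getUint32(iovs + i * 8 + 4, true);
      sink(fd, decoder.decode(new Uint8Array(memory.buffer, buf, len)));
      written += len;
    }
    view.setUint32(nwrittenPtr, written, true); // report the byte count back to wasm
    return ERRNO_SUCCESS;
  };
}

// Usage: simulate what compiled C code would place in memory before calling fd_write.
const memory = new WebAssembly.Memory({ initial: 1 });
const output = [];
const fdWrite = makeFdWrite(memory, (fd, text) => output.push(text));

const encoder = new TextEncoder();
new Uint8Array(memory.buffer).set(encoder.encode("hello, "), 100);
new Uint8Array(memory.buffer).set(encoder.encode("wasm\n"), 200);
const setup = new DataView(memory.buffer);
setup.setUint32(16, 100, true); // iovs[0].buf
setup.setUint32(20, 7, true);   // iovs[0].buf_len
setup.setUint32(24, 200, true); // iovs[1].buf
setup.setUint32(28, 5, true);   // iovs[1].buf_len

const errno = fdWrite(1, 16, 2, 32);
console.log(errno, JSON.stringify(output.join(""))); // 0 "hello, wasm\n"
```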

Before looking at how values are passed between JS and wasm, let's look at the wasm memory model.

When wasm is compiled through LLVM as above, its memory layout is decided by the linker, wasm-ld. Everything lives in a single block of linear memory.

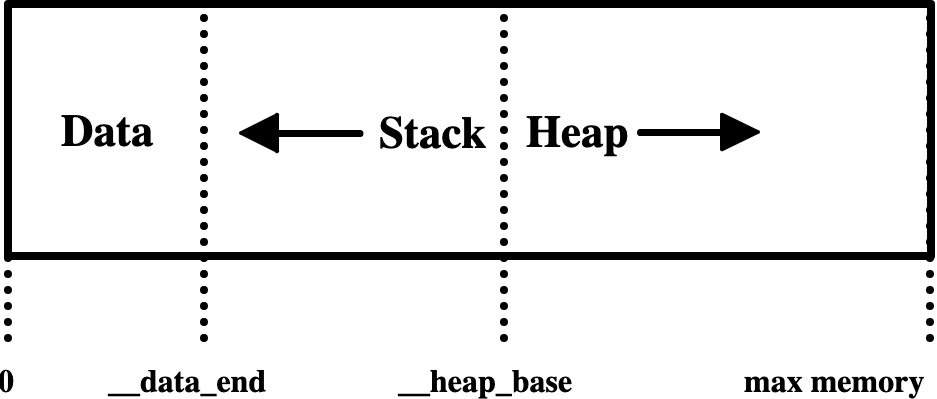

wasm memory layout

We can see that the stack is followed by the heap. The stack grows from high addresses to low, while the heap grows from low to high. The heap is placed at the end because wasm memory can grow dynamically at runtime, and new pages are appended after it.

The figure also shows that the stack size is fixed. It is determined at compile time and can be changed with a linker flag such as -Wl,-z,stack-size=$((8 * 1024 * 1024)) (an 8 MiB stack). Since the stack occupies the region between __data_end and __heap_base, its size is __heap_base - __data_end. Both values can be found in the wat output above.
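Plugging the linker-defined globals from the unoptimized wat output into that formula gives concrete numbers. The sketch below assumes the layout described above (static data, then the stack, then the heap) and uses the standard WebAssembly.Memory API to show the end of memory moving at runtime.

```javascript
// Linker-defined symbols copied from the unoptimized wat output above.
const WASM_PAGE_SIZE = 64 * 1024; // a wasm memory page is 64 KiB
const layout = {
  dataEnd: 1024,   // __data_end: end of static data (add.c has none)
  heapBase: 66560, // __heap_base: top of the stack region, start of the heap
  heapEnd: 131072, // __heap_end: end of the initial memory
};

// The stack occupies [__data_end, __heap_base) and grows downward from __heap_base,
// which is why __stack_pointer starts at 66560.
const stackSize = layout.heapBase - layout.dataEnd;

// (memory (;0;) 2) means two pages of initial memory.
const initialPages = 2;
const initialBytes = initialPages * WASM_PAGE_SIZE;
const initialHeapBytes = layout.heapEnd - layout.heapBase;

console.log(stackSize); // 65536 (64 KiB)
console.log(initialBytes === layout.heapEnd); // true
console.log(initialHeapBytes); // 64512 bytes of heap before memory has to grow

// At runtime, memory can grow page by page; the heap end moves with it.
const memory = new WebAssembly.Memory({ initial: initialPages });
const previousPages = memory.grow(1); // returns the old size in pages
console.log(previousPages, memory.buffer.byteLength); // 2 196608
```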

The wasm heap can grow up to the maximum memory size (the specification limits wasm32 memory to 65536 pages, i.e., 4 GiB). However, if we keep allocating without ever freeing, it will still run out quickly, so we need a memory allocator. Many compilation toolchains ship their own allocator, and there are many open-source allocators, each with advantages and disadvantages. For example, wee_alloc is only about 1 KB in size but is slower.
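To see why an allocator that never recycles memory runs out, here is a deliberately naive bump allocator written in JavaScript over a WebAssembly.Memory. It is a hypothetical illustration, not how wee_alloc or emscripten's allocator works: it hands out addresses upward from __heap_base, grows linear memory when needed, and returns 0 (a null pointer) once a chosen page limit is reached. There is no free at all.

```javascript
const WASM_PAGE = 64 * 1024;

// Hypothetical bump allocator: allocation is a pointer increment, and nothing is ever freed.
function createBumpAllocator(memory, heapBase, maxPages) {
  let next = heapBase; // first free address
  return function malloc(size, align = 8) {
    const ptr = Math.ceil(next / align) * align; // round up to the requested alignment
    const end = ptr + size;
    const currentPages = memory.buffer.byteLength / WASM_PAGE;
    const neededPages = Math.ceil(end / WASM_PAGE) - currentPages;
    if (neededPages > 0) {
      if (currentPages + neededPages > maxPages) return 0; // out of memory: NULL
      memory.grow(neededPages); // the heap sits at the end, so it can expand in place
    }
    next = end;
    return ptr;
  };
}

const heap = new WebAssembly.Memory({ initial: 2, maximum: 4 });
const malloc = createBumpAllocator(heap, 66560, 4); // 66560 = __heap_base from the wat above

const a = malloc(100);    // 66560
const b = malloc(70000);  // 66664 (aligned); forces the memory to grow to 3 pages
const c = malloc(200000); // 0: would need 6 pages, above the 4-page limit
console.log(a, b, c, heap.buffer.byteLength / WASM_PAGE); // 66560 66664 0 3
```

A real allocator keeps track of freed blocks so they can be reused; the trade-offs between code size, speed, and fragmentation in that bookkeeping are what distinguish allocators such as wee_alloc.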

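Wasm linear memory is backed by an ArrayBuffer that both JS and wasm can read and write. It is either created by the module at instantiation and exported (like the memory export above) or created on the JS side and passed in as an import. Sharing memory this way promises zero copy, which sounds nice but is not that simple in practice.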
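Both halves of that sentence can be observed with the standard JS API. In the sketch below, two views over the same memory.buffer see each other's writes without any copy. After memory.grow, however, the old ArrayBuffer is detached, so any view created before the growth becomes empty and has to be re-created from memory.buffer.

```javascript
const memory = new WebAssembly.Memory({ initial: 1 }); // 1 page = 64 KiB

// Two views over the same ArrayBuffer: a write through one is visible through the other.
const bytes = new Uint8Array(memory.buffer);
new DataView(memory.buffer).setUint32(0, 0x04030201, true); // little-endian, like wasm
const seenBeforeGrow = [bytes[0], bytes[3]];
console.log(...seenBeforeGrow); // 1 4: same memory, nothing was copied

// Growing the memory replaces memory.buffer and detaches the old ArrayBuffer.
const oldBuffer = memory.buffer;
memory.grow(1);
console.log(oldBuffer.byteLength, bytes.length); // 0 0: views created earlier are now empty
console.log(memory.buffer === oldBuffer); // false

// Re-create views after anything that may have grown memory; the contents are preserved.
const freshBytes = new Uint8Array(memory.buffer);
console.log(freshBytes[0], freshBytes[3], freshBytes.length); // 1 4 131072
```

Since any call into wasm may call malloc and grow memory, JS code holding typed-array views over wasm memory has to re-create them after such calls.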

Since the parameters and return values of imported and exported wasm functions can only be numbers (apart from the newer reference types), passing a complex JS object requires going through wasm memory, with the help of the module's memory allocator.

The general method is to call malloc (implemented in the wasm code) to allocate a block of memory and get its pointer, fill the block with bytes through a TypedArray, and then pass the pointer, along with the length, to the wasm function.

Example:

// js side (this.module is the emscripten Module object; _malloc, _free, HEAPU8 and ccall must be exported at build time)

const uints = [1, 2, 3, 4];

// Allocate uints.length bytes in the wasm heap and get a pointer to them

const ptr = this.module._malloc(uints.length);

// Create a Uint8Array view over that block of wasm heap memory

const heapBytes = new Uint8Array(this.module.HEAPU8.buffer, ptr, uints.length);

// Fill the block with the values as 8-bit unsigned integers

heapBytes.set(uints);

// ccall is an emscripten runtime helper: ccall(name, returnType, argTypes, args)

this.module.ccall("c_fn", null, ["number", "number"], [ptr, heapBytes.length]);

// Release the block once wasm no longer needs it

this.module._free(ptr);

// c side

#include <stdint.h>

#include <stddef.h>

#include <emscripten.h>

EMSCRIPTEN_KEEPALIVE void c_fn(uint8_t *buf, size_t buf_len) {}

When a JS value is passed into wasm, we allocate a block of the shared memory, serialize the JS value into a TypedArray, and write it into that block. Wasm then reads the block through the pointer and deserializes it into the data structure it needs.

The pipeline is serialize -> copy into memory -> read memory -> deserialize. Passing a value out of wasm goes through the same steps in the opposite direction.

This process is cumbersome and time-consuming because the data structures of the two languages are not interoperable, and converting between them is hard. The common practice is to let both languages use the shared memory and agree on a single memory layout, so that each side writes and reads data in the same format. The prerequisite is that the data can be serialized; data that cannot be serialized needs other approaches.
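As a sketch of agreeing on one memory layout, suppose (hypothetically) the C side declares struct Point { int32_t x; double y; }. Under the usual wasm32 C ABI, x sits at offset 0, y is 8-byte aligned at offset 8, and the struct is 16 bytes. The JS side can then serialize and deserialize with a DataView, always in little-endian order as wasm requires. In real code, ptr would come from the module's malloc; here it is a fixed address for illustration.

```javascript
// Layout of the hypothetical C struct on wasm32:
//   struct Point { int32_t x; /* 4 bytes of padding */ double y; };  // sizeof == 16
const POINT_SIZE = 16;
const X_OFFSET = 0;
const Y_OFFSET = 8;

// Serialize a JS object into linear memory at `ptr` (little-endian, like wasm).
function writePoint(memory, ptr, point) {
  const view = new DataView(memory.buffer, ptr, POINT_SIZE);
  view.setInt32(X_OFFSET, point.x, true);
  view.setFloat64(Y_OFFSET, point.y, true);
}

// Deserialize the bytes at `ptr` back into a JS object.
function readPoint(memory, ptr) {
  const view = new DataView(memory.buffer, ptr, POINT_SIZE);
  return { x: view.getInt32(X_OFFSET, true), y: view.getFloat64(Y_OFFSET, true) };
}

const memory = new WebAssembly.Memory({ initial: 1 });
const ptr = 1024; // stand-in for a pointer returned by the module's malloc
writePoint(memory, ptr, { x: -3, y: 2.5 });

console.log(readPoint(memory, ptr)); // { x: -3, y: 2.5 }
console.log(...Array.from(new Uint8Array(memory.buffer, ptr, 4))); // 253 255 255 255 (-3 as little-endian int32)
```

The padding bytes between x and y are part of the agreed layout; getting an offset or alignment wrong on either side silently corrupts data rather than raising an error.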

Take a DOM Event as an example of such an approach. Create an object in wasm that stands in for the DOM Event (it is not a real DOM Event) and return its pointer. On the JS side, create a real DOM Event instance and record a mapping between it and that pointer. All subsequent operations on the wasm object are then carried out on the JS object it maps to. This is similar to how language bindings are usually implemented.
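A minimal sketch of that mapping on the JS side, with hypothetical names throughout: wasm owns its stand-in object and only hands JS a pointer; JS keeps a Map from that pointer to the real object and exposes small import functions (here event_prevent_default and event_release) that wasm would call with the pointer. A plain object stands in for the DOM Event so the code also runs outside a browser.

```javascript
// Hypothetical JS-side table mapping wasm pointers to real JS objects.
class HandleTable {
  #objects = new Map();

  bind(ptr, obj) {
    if (this.#objects.has(ptr)) throw new Error(`pointer ${ptr} is already bound`);
    this.#objects.set(ptr, obj);
  }

  get(ptr) {
    const obj = this.#objects.get(ptr);
    if (obj === undefined) throw new Error(`no object bound to pointer ${ptr}`);
    return obj;
  }

  unbind(ptr) {
    return this.#objects.delete(ptr);
  }

  get size() {
    return this.#objects.size;
  }
}

const events = new HandleTable();

// Import object a wasm module could be instantiated with (hypothetical import names).
const importObject = {
  env: {
    // Forward the operation to the real object mapped to this pointer.
    event_prevent_default: (ptr) => events.get(ptr).preventDefault(),
    // Called when wasm frees its side of the object, so the JS side is released too.
    event_release: (ptr) => {
      events.unbind(ptr);
    },
  },
};

// Usage: pretend wasm created its event object at address 2048 and returned that pointer.
const fakeEvent = {
  type: "click",
  defaultPrevented: false,
  preventDefault() {
    this.defaultPrevented = true;
  },
};
events.bind(2048, fakeEvent);
importObject.env.event_prevent_default(2048);
console.log(fakeEvent.defaultPrevented); // true
importObject.env.event_release(2048);
console.log(events.size); // 0
```

The release import matters: without it, the Map keeps every mapped object alive even after wasm has freed its side.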

At this point, it should be clear that compiling a complex application to wasm does not necessarily improve performance and may even reduce it. Evaluate carefully whether your application actually benefits from wasm. If it does, carefully design the data transfer between JS and wasm, along with other optimization points.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
