By Kai Zhu, Machine Learning Platform for Artificial Intelligence (PAI) Team

As deep learning develops, AI model structures are evolving rapidly and new computing hardware keeps emerging. Developers have to work out how to use computing power effectively in complex, changing scenarios while keeping up with the continuous iteration of computing frameworks. Deep learning compilers have therefore attracted wide attention as a way to address these problems: they let users focus on upper-level model development, reduce the human cost of manual performance optimization, and make fuller use of hardware performance. Alibaba Cloud Machine Learning PAI has open-sourced BladeDISC, a dynamic shape compiler for deep learning that was already in use in production business applications. This article explains the design principles and applications of BladeDISC in detail.

BladeDISC is Alibaba's latest open-sourced dynamic shape compiler for deep learning based on MLIR.

https://github.com/alibaba/BladeDISC

In recent years, deep learning compilers have been extremely active as a new technical direction. Examples include established projects such as TensorFlow XLA, TVM, Tensor Comprehensions, and Glow, the more recent and highly popular MLIR compiler infrastructure, and MLIR-based projects in different fields (such as IREE and mlir-hlo). Different companies and communities are exploring and making progress in this field.

Deep learning compilers have received continuous attention in recent years for the following reasons:

Model generalization is needed for framework performance optimization. With the rapid development of deep learning and its ever-growing range of applications, making full use of hardware computing power in complex and volatile scenarios has become an important part of AI applications. In the early days, neural network deployment focused on frameworks and operator libraries. The work was largely carried by deep learning frameworks, operator libraries provided by hardware manufacturers, and manual optimization by business teams.

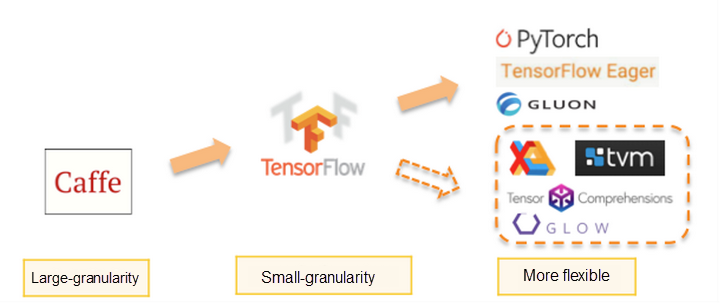

The figure above divides the deep learning frameworks of recent years into three generations. At the user API level, these frameworks have become increasingly flexible, which poses greater challenges for underlying performance. First-generation frameworks, such as Caffe, describe the neural network structure as a sequence of layers. Second-generation frameworks, such as TensorFlow, describe the computation as a finer-grained graph of operators. Third-generation frameworks use dynamic graphs, as in PyTorch and TensorFlow Eager Mode. As frameworks become more flexible and more expressive, optimizing the underlying performance becomes harder. Business teams often have to make up the difference with manual optimization. This work depends on the specific business and on an understanding of the underlying hardware, so it is labor-intensive and difficult to generalize. A deep learning compiler generalizes the principles behind manual optimization: by combining graph-level optimization with automatic or semi-automatic code generation at compile time, it addresses the problems of purely manual optimization and resolves the mismatch between the flexibility and the performance of deep learning frameworks.

Hardware generalization is needed for AI frameworks. AI has been widely recognized and is on the rise in recent years, but a decade of progress in hardware computing power behind the scenes is the core driving force of this prosperity.

Hardware innovation is one problem; bringing the computing power of hardware to bear in real business scenarios is another. In addition to innovating in hardware, new AI hardware manufacturers have to invest heavy manpower in the software stack. Supporting the diverse hardware underneath has become one of the core difficulties of today's deep learning frameworks, and this compatibility problem needs to be solved by compilers.

Frontend AI framework generalization is needed for AI system platforms. Today, the mainstream deep learning frameworks include TensorFlow, PyTorch, Keras, and JAX. Each has advantages and disadvantages. They expose different upper-layer interfaces to users, but they all face problems in hardware adaptation and in making full use of hardware computing power. Different teams choose different frameworks according to their modeling scenarios and usage habits, while the performance optimization tools and hardware adaptation solutions of cloud vendors or platforms need to account for different frontend frameworks and for future framework evolution. Google uses XLA to support TensorFlow and JAX, and other open-source communities have also developed access solutions (such as Torch_XLA and Torch-MLIR). Although these access solutions still have problems in ease of use and maturity, they show that frontend framework generalization has become a requirement of AI systems and a future technology trend.

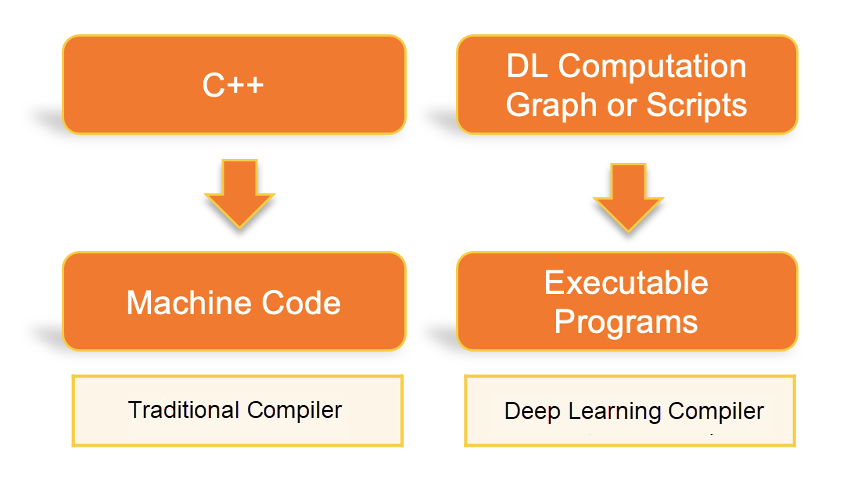

Traditional compilers take high-level languages as input so that users can work in a more flexible and efficient language instead of writing machine code directly. They also apply optimizations during compilation to recover the performance lost to high-level abstractions. This resolves the mismatch between development efficiency and performance. Deep learning compilers play a similar role and take flexible computational graphs with a higher level of abstraction as input. Their output is low-level machine code and execution engines for CPUs, GPUs, and other heterogeneous hardware platforms.

One mission of traditional compilers is to reduce the burden on programmers. The high-level language that serves as compiler input mostly describes logic. For the programmer's convenience, that description is abstract and flexible, and whether the logic can be executed efficiently on the machine is an important measure of a compiler. Deep learning is an application field that has developed rapidly in recent years, and its performance optimization matters. It shows the same mismatch between the flexibility and abstraction of high-level descriptions and the performance of low-level computing, which is why compilers designed for deep learning have emerged. Another important mission of traditional compilers is to ensure that the high-level language written by the programmer can run on hardware computing units with different architectures and instruction sets. This mission is just as important for deep learning compilers. When a new hardware device appears, manually rewriting every operator implementation a framework needs for each target is impractical. A deep learning compiler instead provides an intermediate representation (IR): it converts the computational graph of the upper-layer framework into this IR, performs general graph-level optimization on it, and then generates machine code for each target platform from the optimized IR in the backend.
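The layering described above can be illustrated with a toy sketch. Everything in it (the `Op` record, `fold_constants`, `emit_c`, and `emit_python`) is hypothetical and is not BladeDISC or MLIR API. It only shows one hardware-independent pass over a shared IR feeding two different backends.

```python
from dataclasses import dataclass

# Hypothetical toy IR: each op is (result name, opcode, operands).
# Operands are either names of inputs/earlier results or numeric constants.
@dataclass(frozen=True)
class Op:
    result: str
    opcode: str        # "add" or "mul"
    operands: tuple

def fold_constants(ops):
    """Hardware-independent pass: evaluate ops whose operands are all constants."""
    known = {}         # result name -> folded constant value
    out = []
    for op in ops:
        # Substitute already-folded results into the operand list.
        args = tuple(known.get(a, a) if isinstance(a, str) else a for a in op.operands)
        if all(isinstance(a, (int, float)) for a in args):
            known[op.result] = args[0] + args[1] if op.opcode == "add" else args[0] * args[1]
        else:
            out.append(Op(op.result, op.opcode, args))
    return out

SYMBOL = {"add": "+", "mul": "*"}

def emit_c(ops):
    """Toy backend 1: C-like statements."""
    return [f"float {o.result} = {o.operands[0]} {SYMBOL[o.opcode]} {o.operands[1]};" for o in ops]

def emit_python(ops):
    """Toy backend 2: Python statements."""
    return [f"{o.result} = {o.operands[0]} {SYMBOL[o.opcode]} {o.operands[1]}" for o in ops]

# A frontend graph lowered to the shared IR: y = x * (2 + 3)
graph = [Op("t0", "add", (2, 3)), Op("y", "mul", ("x", "t0"))]
optimized = fold_constants(graph)
c_code = emit_c(optimized)
py_code = emit_python(optimized)
```

The optimization is written once against the IR, and each additional target only needs its own emitter.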

The goal of a deep learning compiler is to handle performance optimization and hardware adaptation for AI computing tasks in the way a general-purpose compiler does. When you use a deep learning compiler, you only need to focus on upper-level model development. This helps reduce manual optimization effort and makes full use of hardware performance.

Although deep learning compilers share many objectives and architectural ideas with traditional compilers and have shown good potential, their practical adoption still lags far behind that of traditional compilers. The main reasons are listed below:

Ease of Use: Deep learning compilers are designed to reduce the labor cost of manual performance optimization and hardware adaptation. However, at this stage, there are great challenges in the deployment and application of deep learning compilers on a large scale, and it is difficult to make full use of deep learning compilers. The main reasons for this include:

Robustness: Most mainstream AI compilers are still experimental, and their maturity is far from industrial grade. Robustness here means that the input computational graph compiles successfully, that the calculation results are correct, and that severe performance regressions are avoided in corner cases.

Performance Issue: Compiler optimization essentially replaces the human cost of manual optimization with a limited compilation overhead, by generalizing and abstracting manual optimization methods as well as optimizations that people do not easily discover. How to generalize and abstract these methods is the most essential and most difficult problem in the whole pipeline. Deep learning compilers deliver real value only when they match or exceed manual optimization in performance, or when they significantly reduce labor costs.

This goal is not easy to achieve. Most deep learning tasks are tensor-level computations that place high demands on how parallel work is partitioned. Much remains to be explored about how to incorporate manual optimization techniques into compiler technology in a generalized way, how to avoid high compilation overhead, and how to coordinate optimizations across layers once the compiler is layered. These are the problems that the next generation of deep learning compilers, represented by the MLIR framework, needs to solve.

The project was initiated to solve the static shape limitation of XLA and TVM. Its internal name is Dynamic Shape Compiler (DISC), and it is designed to be a deep learning compiler that can be used in actual business and fully supports dynamic shape semantics.

Since the team started working on deep learning compilers four years ago, the dynamic shape issue has been one of the serious problems blocking practical deployment. At that time, mainstream deep learning compilers, including XLA, were based on static shape semantics. A typical solution either requires the user to specify the input shape, or has the compiler capture the actual input shape combination of the subgraph to be compiled at runtime and generate one compilation result for each input shape combination.
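The per-shape compilation strategy described above can be sketched as a cache keyed by the input shape combination. This is a minimal illustration and not how XLA or TVM is implemented. `StaticShapeCache` and its methods are hypothetical names, and nested Python lists stand in for tensors.

```python
# Hypothetical sketch of a static shape compiler's cache: one compiled
# artifact per distinct combination of input shapes.
class StaticShapeCache:
    def __init__(self):
        self.cache = {}            # shape signature -> compiled callable
        self.compile_count = 0

    def _compile(self, shapes):
        # Stand-in for an expensive compilation specialized to `shapes`.
        self.compile_count += 1
        rows, cols = shapes[0]

        def kernel(x):
            # The shape is baked in as a compile-time constant.
            assert len(x) == rows and all(len(r) == cols for r in x)
            return [[v * 2.0 for v in r] for r in x]

        return kernel

    def run(self, x):
        shapes = ((len(x), len(x[0])),)
        if shapes not in self.cache:
            self.cache[shapes] = self._compile(shapes)
        return self.cache[shapes](x)

cache = StaticShapeCache()
# Sequence lengths that vary from request to request.
for seq_len in [8, 16, 8, 32, 16, 64]:
    cache.run([[1.0] * seq_len for _ in range(2)])
```

In this sketch every previously unseen shape triggers a fresh compilation and adds a cache entry, so the number of compilations grows with the number of distinct shapes the workload produces.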

The advantages of a static shape compiler are clear. When the compiler knows the static shape information, it can make better optimization decisions and obtain better CodeGen performance, better host and GPU memory optimization plans, and better scheduling and execution plans. However, its disadvantages are also clear, including:

DISC was officially put into practice within Alibaba in summer 2020. It was first used in several business scenarios that had long suffered from dynamic shape problems, and it achieved the expected results. With a single compilation and no special processing of the computational graph, dynamic shape semantics are fully supported and the performance is almost equal to that of a static shape compiler. Compared with TensorRT and other optimization frameworks based on hand-written operator libraries, DISC's architecture, which is based on automatic CodeGen, has shown significant performance and ease-of-use advantages in real business workloads that use non-standard models rather than standard open-source ones.

Since the second quarter of 2020, the team has continued to invest in the research and development of DISC. To address the bottlenecks described above, which keep deep learning compilers from large-scale deployment from the perspective of a cloud platform, DISC has been improved in performance, operator coverage and robustness, CPU and new hardware support, and frontend framework support. In scenario coverage and performance, DISC has replaced the team's previous work based on static shape frameworks (such as XLA and TVM) and has become the main optimization method with which PAI-Blade supports businesses inside and outside Alibaba. Since 2021, DISC has significantly improved its performance on CPU and GPGPU backends, and the team has put more effort into supporting new hardware. At the end of 2021, to encourage wider technical exchange, cooperation, and co-construction and to gather feedback from more users, DISC was officially renamed BladeDISC and its initial version was open-sourced.

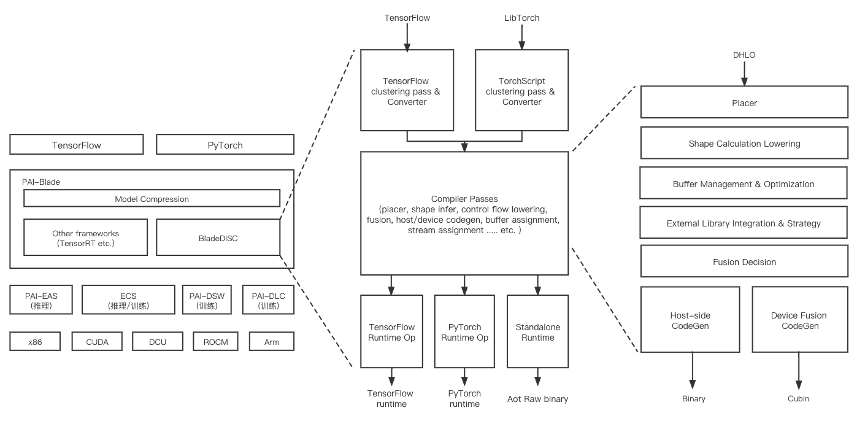

The following figure shows the overall architecture of BladeDISC and the context relationship with related Alibaba Cloud products.

MLIR is a project initiated by Google in 2019. MLIR is a set of flexible multi-level IR infrastructure and compiler utility libraries. It is deeply influenced by LLVM and reuses many of its best ideas. The main reasons we choose MLIR are its rich infrastructure support, modular design architecture for easy extensibility, and strong glue capability.

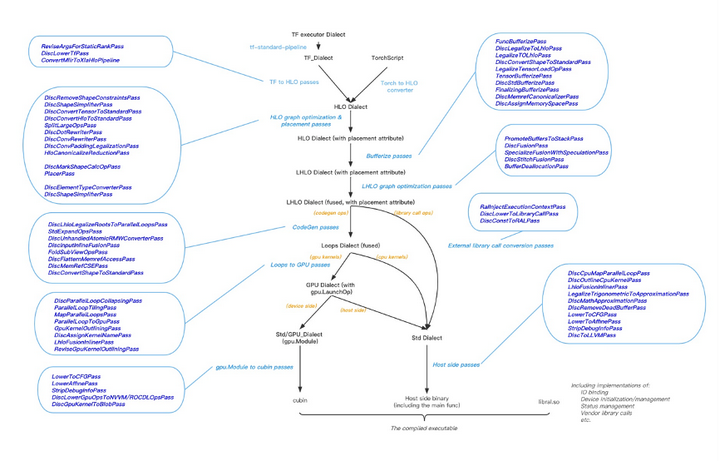

The figure above shows the main pass pipeline design of BladeDISC. Compared with the current mainstream deep learning compiler projects, its main technical features are listed below:

BladeDISC connects to different frontend frameworks through HLO as its core graph IR, but HLO was originally designed for XLA as an IR with purely static shape semantics. In static shape scenarios, every shape expression in HLO IR is static, and all shape calculations are folded into compile-time constants that are kept in the compilation results. In dynamic shape scenarios, the IR itself must be able to express shape calculations and carry dynamic shape information. BladeDISC has maintained close cooperation with the MHLO community since the inception of the project. Based on the HLO IR of XLA, BladeDISC has added a set of IR with complete dynamic shape expression capability, together with the corresponding infrastructure and the operator conversion logic for frontend frameworks. This part of the implementation has been fully upstreamed to the MHLO community to keep the IR consistent with other MHLO-related projects in the future.

The main challenge in dynamic shape compilation is handling dynamic computational graph semantics in a static compilation process. To fully support dynamic shapes, the compilation result must be able to derive shapes at runtime, so the compiler has to generate code for shape calculation as well as for the data computation. The calculated shape information is used for host and GPU memory management and for parameter selection during kernel scheduling. The pass pipeline of BladeDISC is designed around these requirements and uses a host-device joint codegen approach. Take the GPU backend as an example: shape calculation, allocation and release of host and GPU memory, hardware management, and kernel launches are all automatically generated, which yields complete end-to-end support for dynamic shapes and better overall performance.
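As a rough illustration of what the host side of such jointly generated code has to do, the following sketch walks through the steps named above for `out = a + b` with shapes known only at runtime. It is plain Python standing in for generated host and device code. `device_alloc`, `launch_add_kernel`, and `host_entry` are hypothetical names and not BladeDISC functions.

```python
import math

def device_alloc(num_elements):
    # Stand-in for a device memory allocation (hypothetical).
    return [0.0] * num_elements

def launch_add_kernel(grid, block, a, b, out, n):
    # Stand-in for a GPU kernel launch: each (block, thread) pair handles one element.
    for block_id in range(grid):
        for thread_id in range(block):
            i = block_id * block + thread_id
            if i < n:              # bounds check, because n is only known at runtime
                out[i] = a[i] + b[i]

def host_entry(a, a_shape, b, b_shape):
    """What generated host code might do for `out = a + b` with unknown shapes."""
    # 1. Shape calculation and validation at runtime.
    if tuple(a_shape) != tuple(b_shape):
        raise ValueError("shape mismatch")
    out_shape = tuple(a_shape)
    n = math.prod(out_shape)
    # 2. Buffer allocation sized from the calculated shape.
    out = device_alloc(n)
    # 3. Launch parameters chosen from the runtime element count.
    block = 256
    grid = max(1, math.ceil(n / block))
    # 4. Kernel launch.
    launch_add_kernel(grid, block, a, b, out, n)
    return out, out_shape

data = [float(i) for i in range(6)]
result, shape = host_entry(data, (2, 3), data, (2, 3))
```

None of the four steps can be resolved at compile time when the shapes are unknown, which is why the host logic has to be generated alongside the device kernel.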

When the shape is unknown or partially unknown, deep learning compilers face greater challenges of performance. On most mainstream hardware backends, BladeDISC adopts a strategy that distinguishes between compute-intensive and memory-access-intensive parts to achieve a better balance between performance, complexity, and compilation overhead.

For the compute-intensive part, different shapes require more refined schedule implementations to obtain better performance. The main consideration in the design of the pass pipeline is to support selecting an appropriate operator library implementation for the specific shape at runtime and to deal with layout problems under dynamic shape semantics.

For the memory-access-intensive part, automatic operator fusion is one of the main sources of performance gains for deep learning compilers, and it also faces performance challenges when the shape is unknown. Many questions that have a definite answer under static shape semantics (such as instruction-level vectorization, the selection of CodeGen templates, and whether an implicit broadcast is required) become complex in dynamic shape scenarios. In response, BladeDISC moves some of the optimization decisions from compile time to runtime. Multiple versions of a kernel implementation are generated according to certain rules at compile time, and the best one is selected automatically according to the actual shape at runtime. This mechanism is called speculation and is implemented in BladeDISC on top of host-device joint CodeGen. In addition, without concrete shape values at compile time, it is easy to lose a large number of optimization opportunities at all levels, from linear algebra simplification and fusion decisions at the graph level to CSE and constant folding at the instruction level. In the design of its IR and pass pipeline, BladeDISC focuses on the abstraction of shape constraints in the IR and on their use in the pass pipeline (such as the constraint relationships between different dimension sizes that are unknown at compile time). This plays an obvious role in optimizing overall performance and keeps the performance comparable to, or better than, that of a static shape compiler.
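The speculation mechanism described above can be sketched as follows. This is a simplified illustration under assumed rules. The two kernel versions and the divisibility-by-4 condition are hypothetical examples of a compile-time rule and are not the actual rules used by BladeDISC.

```python
def add_scalar_loop(x, y, n):
    # Generic version: one element per iteration, valid for any n.
    return [x[i] + y[i] for i in range(n)]

def add_vec4_loop(x, y, n):
    # "Vectorized" version: handles 4 elements per step, only valid when n % 4 == 0.
    out = []
    for i in range(0, n, 4):
        out.extend(a + b for a, b in zip(x[i:i + 4], y[i:i + 4]))
    return out

def dispatch_add(x, y):
    """Host-side selection among the kernel versions emitted at compile time."""
    n = len(x)                     # actual shape, known only at runtime
    if n % 4 == 0:
        return "vec4", add_vec4_loop(x, y, n)
    return "scalar", add_scalar_loop(x, y, n)

x = list(range(8))
y = [10] * 8
version_a, out_a = dispatch_add(x, y)            # length 8 takes the vec4 path
version_b, out_b = dispatch_add(x[:7], y[:7])    # length 7 falls back to scalar
```

Both versions exist in the compiled artifact, and the only runtime cost of the decision is a cheap check on the shape.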

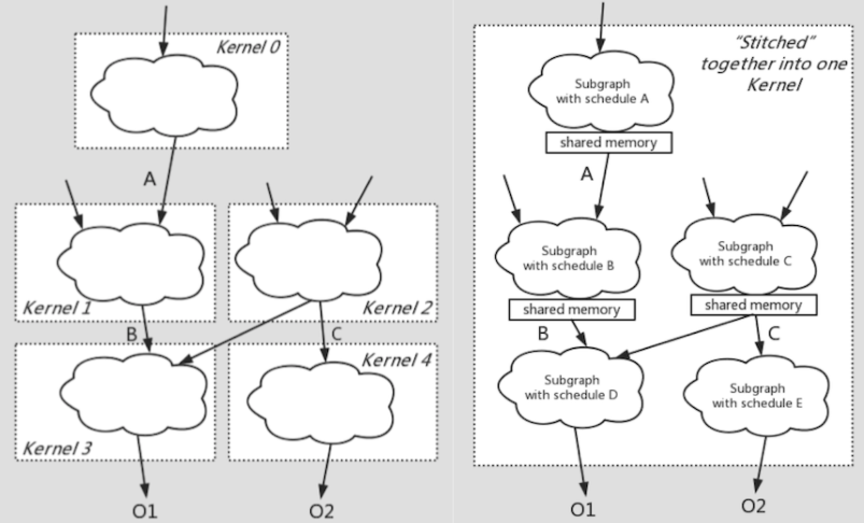

Before initiating the BladeDISC project, the team made several explorations in large-granularity operator fusion and automatic code generation based on the static shape compiler. The basic idea is to stitch computational subgraphs with different schedules into the same kernel, fusing multiple parallel loops by passing intermediate results through GPU shared memory or the CPU memory cache, both of which have low access overhead. This CodeGen method is called fusion-stitching. Generating code for memory-access-intensive subgraphs in this way breaks the limits on fusion granularity imposed by conventional loop fusion and input/output fusion. It significantly increases fusion granularity while maintaining code generation quality and avoiding excessive complexity and compilation overhead. The entire process is transparent to users, who do not need to specify a schedule description manually.
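The idea can be illustrated with a row-wise softmax, which alternates reduction and elementwise stages. The sketch below is plain Python and only models the data flow. The `scratch` list is a stand-in for on-chip shared memory, the function names are hypothetical, and the code says nothing about real GPU performance.

```python
import math

def softmax_unfused(rows):
    # Four separate "kernels"; each full-size intermediate would live in global memory.
    row_max = [max(r) for r in rows]                                       # reduce
    exps = [[math.exp(v - m) for v in r] for r, m in zip(rows, row_max)]   # elementwise
    row_sum = [sum(e) for e in exps]                                       # reduce
    return [[v / s for v in e] for e, s in zip(exps, row_sum)]             # elementwise

def softmax_stitched(rows):
    # One "kernel": each row plays the role of a thread block, and `scratch`
    # plays the role of shared memory holding the intermediate between stages.
    out = []
    for r in rows:
        m = max(r)                                  # reduce schedule
        scratch = [math.exp(v - m) for v in r]      # elementwise schedule, kept on-chip
        s = sum(scratch)                            # reduce schedule
        out.append([v / s for v in scratch])        # elementwise schedule
    return out

rows = [[1.0, 2.0, 3.0], [0.5, 0.5, 0.5]]
unfused = softmax_unfused(rows)
stitched = softmax_stitched(rows)
```

The two functions compute the same values. The difference is that the stitched form never materializes a full-size intermediate between stages, even though the stages use different parallel schedules.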

Compared with static shape semantics, the implementation of fusion-stitching under dynamic shape semantics needs to deal with greater complexity. The shape constraint abstraction under dynamic shape semantics simplifies this complexity to a certain extent, making the overall performance closer to or better than manual operator implementation.
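One way to picture a shape constraint abstraction is as a record of which symbolic dimensions are known to be equal, even though none of their values is known at compile time. The sketch below uses a small union-find structure for this. It is an assumption made for illustration, and `DimEquality` and `shapes_equal` are hypothetical names rather than BladeDISC's actual representation.

```python
class DimEquality:
    """Tracks which symbolic dimensions are known to be equal (union-find)."""

    def __init__(self):
        self.parent = {}

    def find(self, d):
        self.parent.setdefault(d, d)
        while self.parent[d] != d:
            self.parent[d] = self.parent[self.parent[d]]   # path halving
            d = self.parent[d]
        return d

    def mark_equal(self, a, b):
        self.parent[self.find(a)] = self.find(b)

    def same(self, a, b):
        return self.find(a) == self.find(b)

def shapes_equal(constraints, s1, s2):
    # Two symbolic shapes match if they have the same rank and every
    # pair of corresponding dimensions is in the same equality class.
    return len(s1) == len(s2) and all(constraints.same(a, b) for a, b in zip(s1, s2))

constraints = DimEquality()
# x: [B, S] feeds an elementwise op producing y: [d0, d1], so the dims match.
constraints.mark_equal("B", "d0")
constraints.mark_equal("S", "d1")
# z: [d2, d3] is produced by another elementwise op on y.
constraints.mark_equal("d0", "d2")
constraints.mark_equal("d1", "d3")

# With no concrete sizes, x and z can still be proven to share a shape.
can_fuse = shapes_equal(constraints, ["B", "S"], ["d2", "d3"])
```

A fusion pass could use a query like `shapes_equal` to decide that two ops fit into one loop nest or that an implicit broadcast is unnecessary, decisions that a static shape compiler makes by comparing concrete numbers.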

The AICompiler framework was designed with support for different frontend frameworks in mind. On the PyTorch side, a lightweight converter translates TorchScript to DHLO IR to cover PyTorch inference jobs. The complete IR infrastructure of MLIR also makes the converter easier to implement. BladeDISC contains the Compiler and the Bridge that adapts to different frontend frameworks. The Bridge is further divided into two parts: the graph-level pass and the runtime Op in the host framework. Both parts are connected to the host framework as plug-ins. This way of working enables BladeDISC to support frontend computational graphs transparently and to adapt to various versions of the host framework.

To execute the compilation results inside different host environments (such as TensorFlow and PyTorch) and to manage state information that is not easily expressed at the IR level, we implemented a unified compiler architecture for different runtime environments and introduced the Runtime Abstraction Layer (RAL).

RAL supports the adaptation of multiple runtime environments. You can choose a runtime environment based on your needs:

The environments above differ in resource management, API semantics, and other aspects. RAL isolates the compiler from the runtime by abstracting a minimal set of APIs and clearly defining their semantics, so that the compiled results can be executed in different environments. In addition, RAL implements stateless compilation, which addresses the problem of handling state when a compilation result is executed multiple times after the computational graph is compiled. On the one hand, this simplifies code generation. On the other hand, it makes multi-threaded concurrent execution (such as inference), error handling, and rollback easier.
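The separation described above can be sketched with an abstract context that exposes a minimal API set, with the compiled entry point receiving all state through that context. This is an illustrative sketch only. The class and method names (`RalContext`, `alloc`, `dealloc`, `launch`) are assumptions and do not reproduce the real RAL interface.

```python
from abc import ABC, abstractmethod

class RalContext(ABC):
    """Hypothetical minimal API set that compiled code is allowed to call."""

    @abstractmethod
    def alloc(self, num_elements): ...

    @abstractmethod
    def dealloc(self, buffer): ...

    @abstractmethod
    def launch(self, kernel, *args): ...

class StandaloneContext(RalContext):
    """One possible environment; a framework-hosted one would wrap the host's allocator."""

    def __init__(self):
        self.live_buffers = 0

    def alloc(self, num_elements):
        self.live_buffers += 1
        return [0.0] * num_elements

    def dealloc(self, buffer):
        self.live_buffers -= 1

    def launch(self, kernel, *args):
        kernel(*args)

def scale_kernel(src, dst, factor):
    for i, v in enumerate(src):
        dst[i] = v * factor

def compiled_entry(ctx, x):
    # The compiled code keeps no state of its own: every resource goes through
    # ctx, so the same entry point can run repeatedly or with separate contexts.
    tmp = ctx.alloc(len(x))
    ctx.launch(scale_kernel, x, tmp, 2.0)
    out = list(tmp)
    ctx.dealloc(tmp)
    return out

ctx = StandaloneContext()
first = compiled_entry(ctx, [1.0, 2.0])
second = compiled_entry(ctx, [3.0])
```

Because `compiled_entry` depends only on the abstract interface, supporting another runtime environment means providing another `RalContext` subclass and leaves the generated code unchanged.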

The typical application scenarios of BladeDISC can be divided into two types. Firstly, on mainstream hardware platforms, it serves as a universal and transparent performance optimization tool, reducing the human burden of deploying AI tasks for users and improving model iteration efficiency. Secondly, it helps new hardware in adaptation and connection in AI scenarios.

BladeDISC has been widely used in many different application scenarios inside Alibaba and on Alibaba Cloud. The covered model types include NLP, machine translation, automatic speech recognition (ASR), text-to-speech (TTS), image detection, image recognition, AI for science, and other typical AI applications. The covered industries include the Internet, e-commerce, autonomous driving, security, online entertainment, medical treatment, and biology.

In inference scenarios, BladeDISC and inference optimization tools provided by vendors (such as TensorRT) have good technical complementarity. Their main differentiating strengths include:

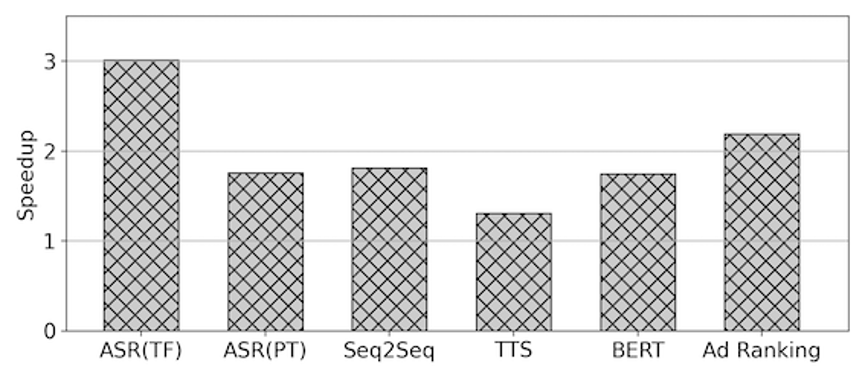

The following figure shows the performance benefit figures for several business examples on Nvidia T4:

In terms of new hardware support, a common situation is that GPGPU hardware from vendors other than leading manufacturers such as Nvidia (for example, ROCm-based devices) has quite competitive hardware specifications. However, these manufacturers have accumulated relatively little in the AI software stack, so the computing power of the hardware often cannot be fully used, which makes the hardware difficult to adopt. As mentioned earlier, the compiler-based technology path generalizes to a certain degree across hardware backends and strongly complements the technical reserves of hardware manufacturers. BladeDISC has relatively mature support for GPGPU and general CPU architectures. Taking GPGPU as an example, most of the technology stack built for Nvidia GPUs can be migrated to hardware with a similar architecture (such as Hygon DCU and AMD GPU). BladeDISC's strong hardware generalization capability, combined with the versatility of the hardware itself, addresses the performance and availability problems in new hardware adaptation.

The following table shows the performance gains for several business examples on Hygon DCU:

| Model | Workload | Speedup |
| --- | --- | --- |
| A recognition model | Inference | 2.21X to 2.31X at different batch sizes |
| A detection model A | Inference | 1.73X to 2.1X at different batch sizes |
| A detection model B | Inference | 1.04X to 1.59X at different batch sizes |
| A molecular dynamics (MD) model | Training | 2.0X |

We decided to build an open-source ecosystem based on the following considerations:

BladeDISC originates from the business requirements of the Alibaba Cloud computing platform team. During the development process, the discussions and exchanges with communities (such as MLIR, MHLO, and IREE) provide us with help and reference. In the current field of AI compilers, there are more experimental projects and fewer practical products, and the work between different technology stacks is relatively fragmented. Therefore, while we gradually improve ourselves with the iteration of business requirements, we opened the source code and gave back our experience and understanding to the community. We hope to have more and better communication and co-construction with developers of deep learning compilers and practitioners of the AI System and contribute our technical strength to this industry.

We hope to receive more feedback from users in business scenarios with the help of open-source work to help us continue to improve, iterate, and provide input for the direction of subsequent work.

In the future, we plan to publish a new release every two months. The recent roadmap of BladeDISC is listed below:

In addition, we will continue to invest in the following exploratory directions. We welcome feedback, suggestions for improvement, and technical discussion from various dimensions. At the same time, we look forward to the participation of colleagues interested in the construction of an open-source community.
