By Bai Xueyu, R&D engineer of Zhongyuan Bank

This article is written by Bai Xueyu, an R&D engineer on the big data platform at Zhongyuan Bank. It introduces how Zhongyuan Bank applies a real-time financial data lake.

Located in Zhengzhou, Henan province, Zhongyuan Bank is the only provincial corporate bank and the largest city commercial bank in the province. It was listed in Hong Kong on July 19, 2017. Since its establishment, Zhongyuan Bank has pursued a strategy of growing stronger through technology, becoming more technology-oriented and data-oriented. It both advocates technology and puts it into practice.

This article covers the real-time financial data lake from three perspectives: background, architecture, and scenario practices.

Today, the decision-making models of banks are facing great changes.

First, traditional bank data analysis mainly focuses on the bank's income, cost, and profit distribution, and on responding to regulatory supervision. This work is very complex but follows certain rules, and it belongs to financial data analysis. With the continuous development of Internet finance, banks' business has been steadily squeezed, and a bank that stands still can no longer meet its business needs.

It is now imperative to understand customers better in order to run more targeted marketing and decision analyses. As a result, the bank's decision-making model is gradually shifting from traditional financial analysis to KYC-oriented (Know Your Customer) analysis.

Second, traditional banking mainly relies on business personnel to make the decisions that drive business development. However, as the banking business grows, its many applications generate massive amounts of data of many types, and business personnel alone can no longer keep up. Facing complex problems and a growing number of influencing factors, banks need more comprehensive and intelligent technical means. Therefore, banks need to move from a decision-making model based purely on business personnel to one that relies more on machine intelligence.

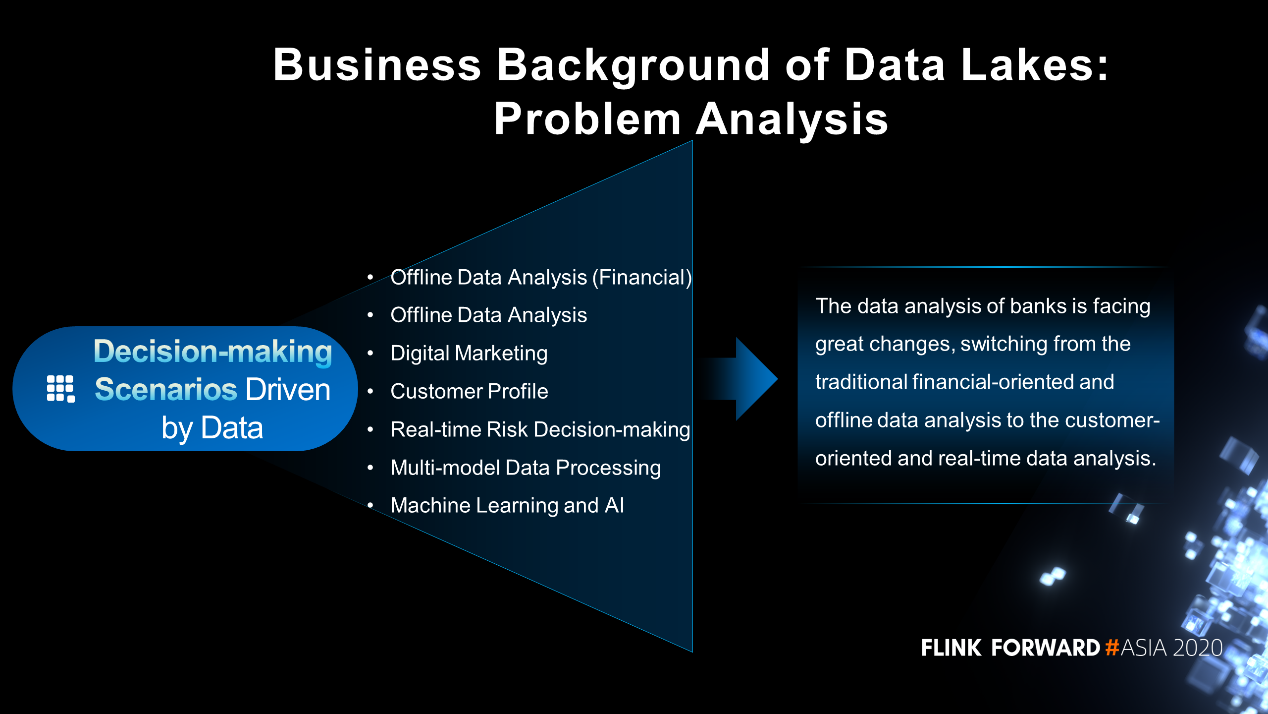

The most typical feature of the big data era is the sheer volume and variety of data. Using massive data involves several kinds of technology.

Diversified digital marketing methods are needed to paint a more comprehensive, accurate, and scientific customer profile. Real-time risk decision-making technologies are required to monitor risks in real time, and multi-mode data processing technologies are required to support different types of data effectively, including structured, semi-structured, and unstructured data. Machine learning and AI technologies are also needed to support intelligent problem analysis and decision-making.

These technologies and data-driven decision-making scenarios are driving a major shift, from traditional finance-oriented, offline data analysis to customer-oriented, real-time data analysis.

In the banking system, the traditional data warehouse architecture, which is based on standardized and precise processing, handles financial analysis scenarios well. It will remain the mainstream solution for a long time.

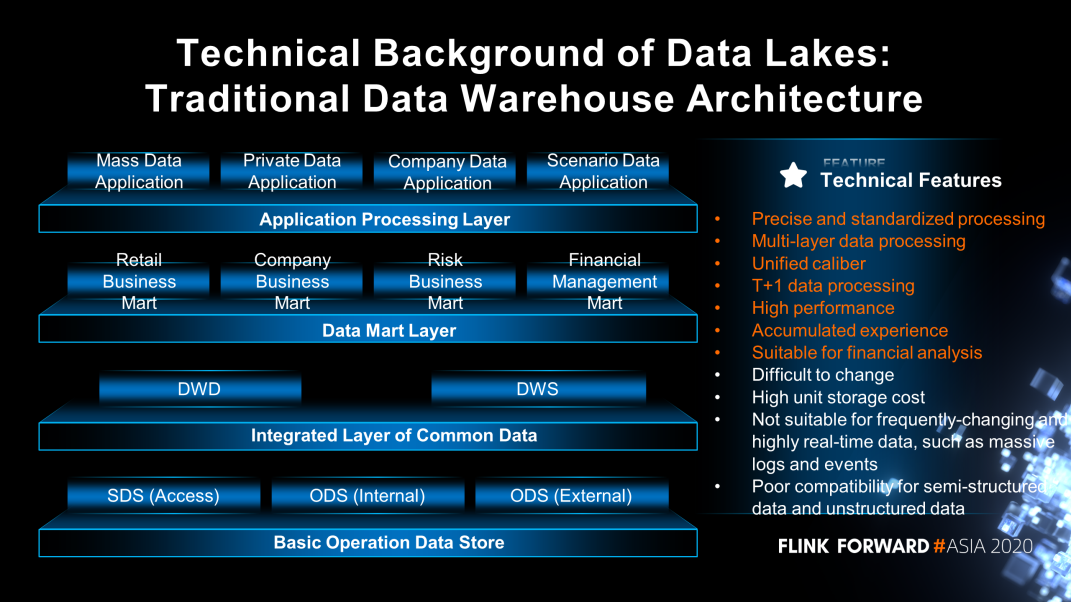

The figure shows the traditional data warehouse architecture. From the bottom up, it consists of the basic Operational Data Store (ODS), the integrated common data layer, the data mart layer, and the application processing layer. Each layer runs large batch jobs every day to produce the desired business results. Banks have long relied on the traditional data warehouse system because it provides excellent financial analysis solutions and has clear advantages.

However, a traditional data warehouse system also has its shortcomings.

To sum up, the traditional data warehouse architecture has both advantages and shortcomings and will exist for a long time.

Analysis based on KYC and machine intelligence must handle multiple data types and multiple levels of timeliness in an agile way. Therefore, a new architecture that complements the data warehouse architecture is required.

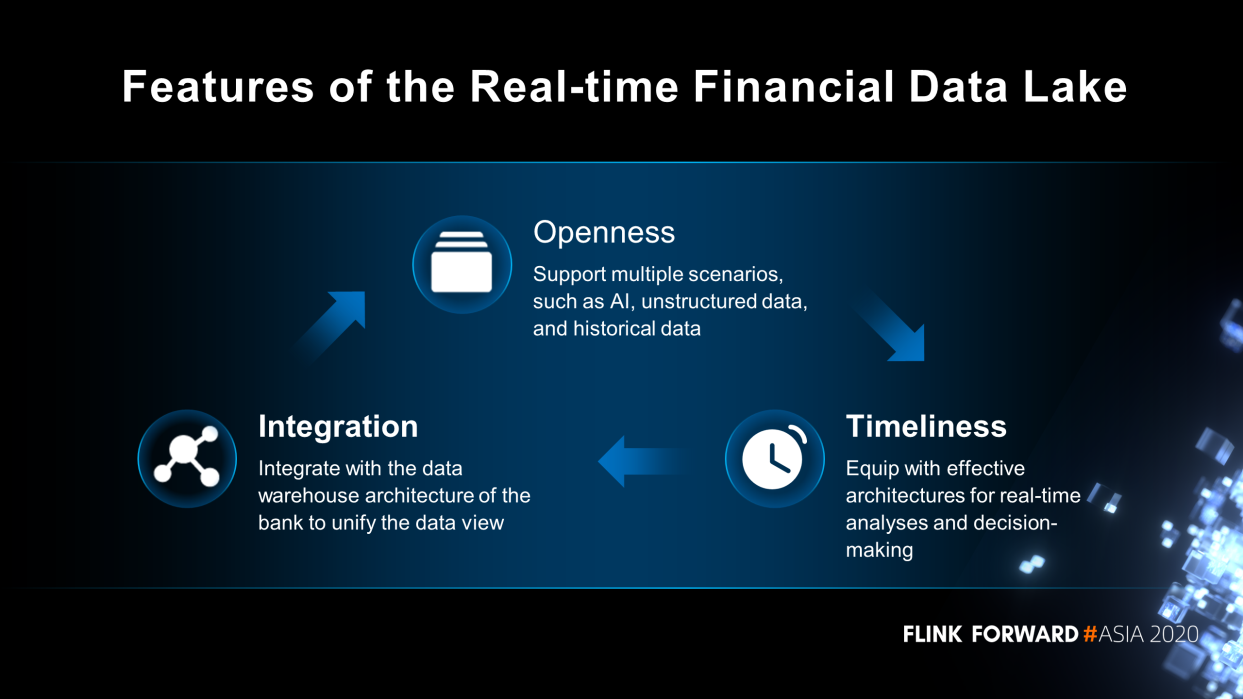

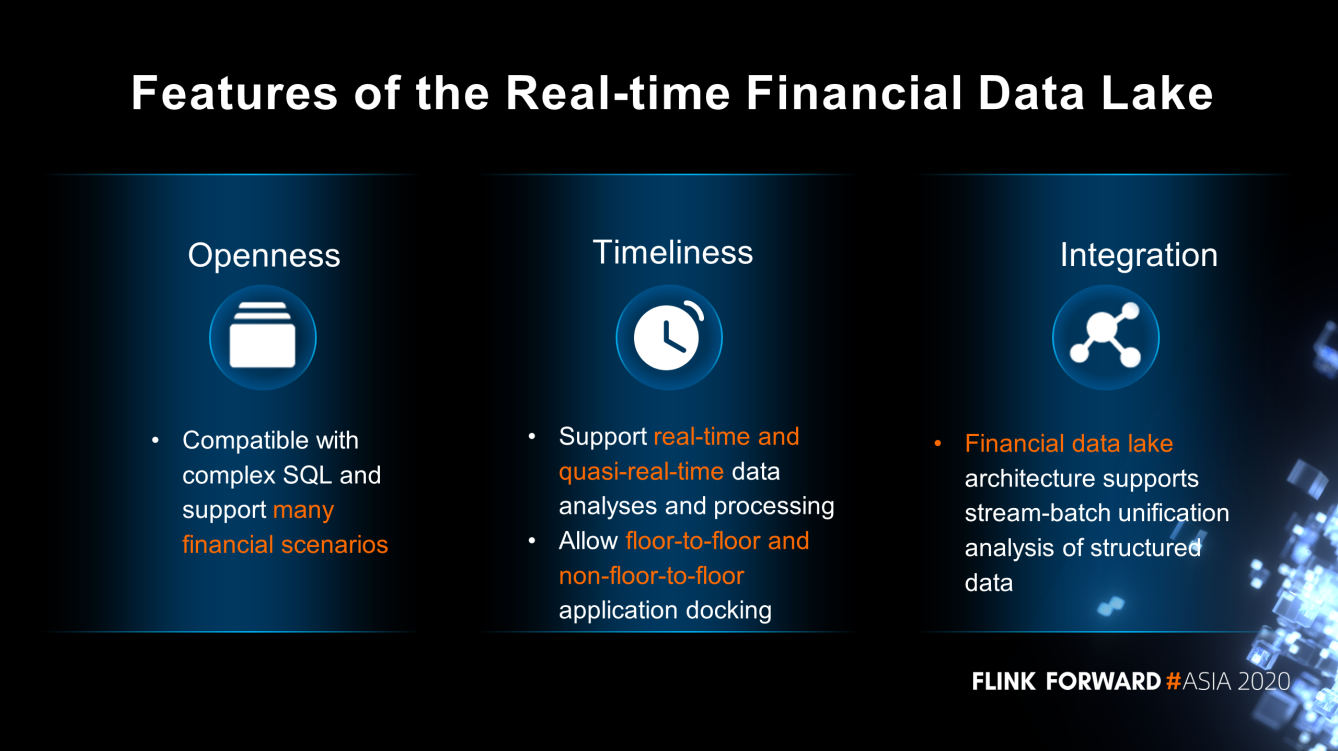

This brings us to the theme of this article, the real-time financial data lake, which has three main features.

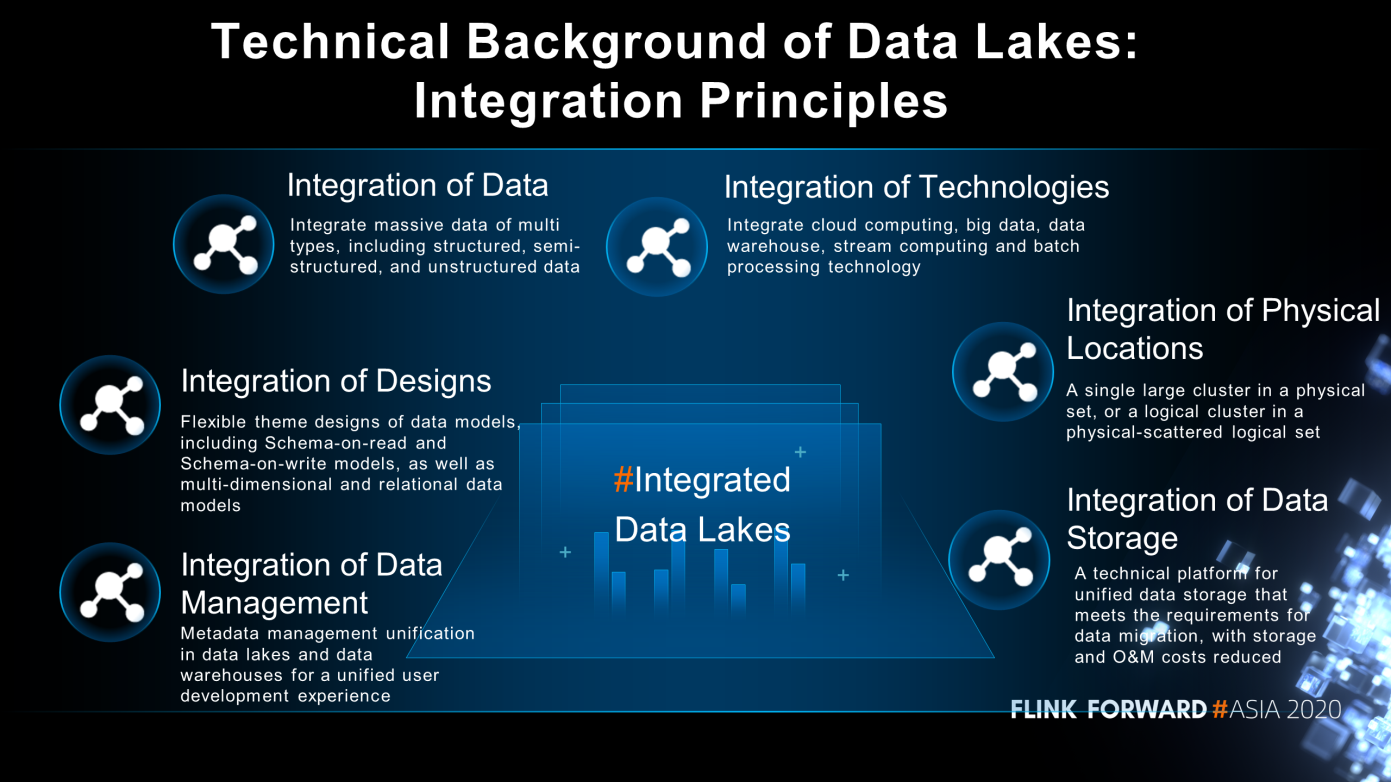

The real-time financial data lake as a whole is integrated. Integration here has six aspects.

First, it integrates data, including structured, semi-structured, and unstructured data. Second, it integrates technologies, including cloud computing, big data, data warehousing, stream computing, and batch processing. Third, it integrates designs. Data models can be designed flexibly by theme, using Schema-on-read and Schema-on-write models as well as multi-dimensional and relational data models.

Fourth, it integrates data management, unifying metadata management across the data lake and the data warehouse to give users a unified development experience. Fifth, it integrates physical locations. It can be a single large cluster that is physically centralized, or a logically unified cluster that is physically distributed.

Sixth, it integrates data storage. A unified technical platform for data storage meets data migration requirements while reducing storage and O&M costs.

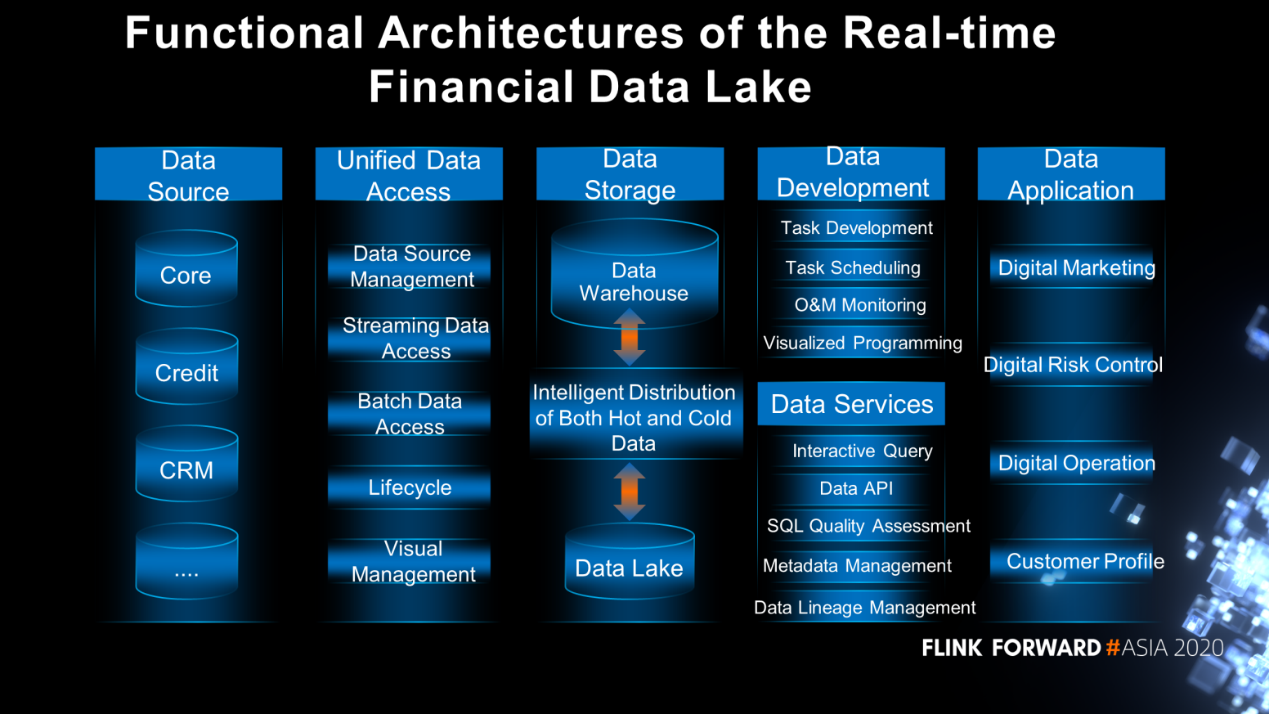

Its functions cover data sources, unified data access, data storage, data development, data services, and data applications.

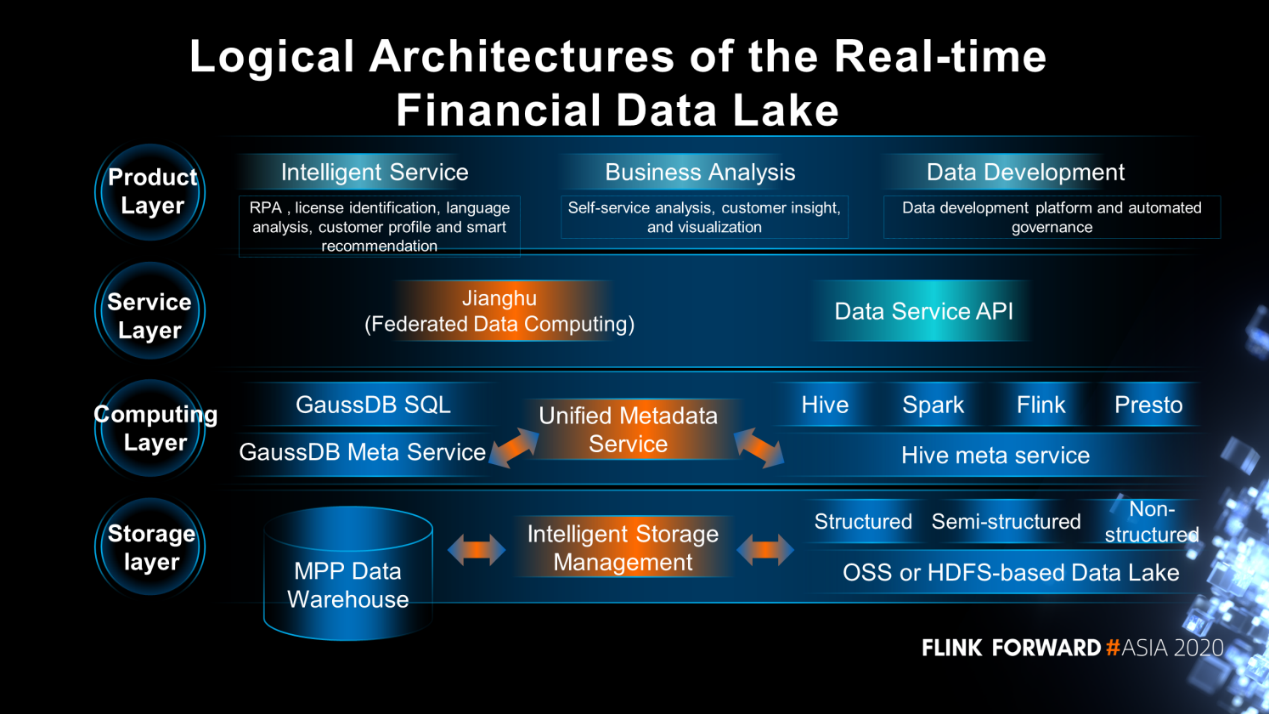

The logical architecture of the real-time financial data lake mainly includes the storage layer, computing layer, service layer, and product layer.

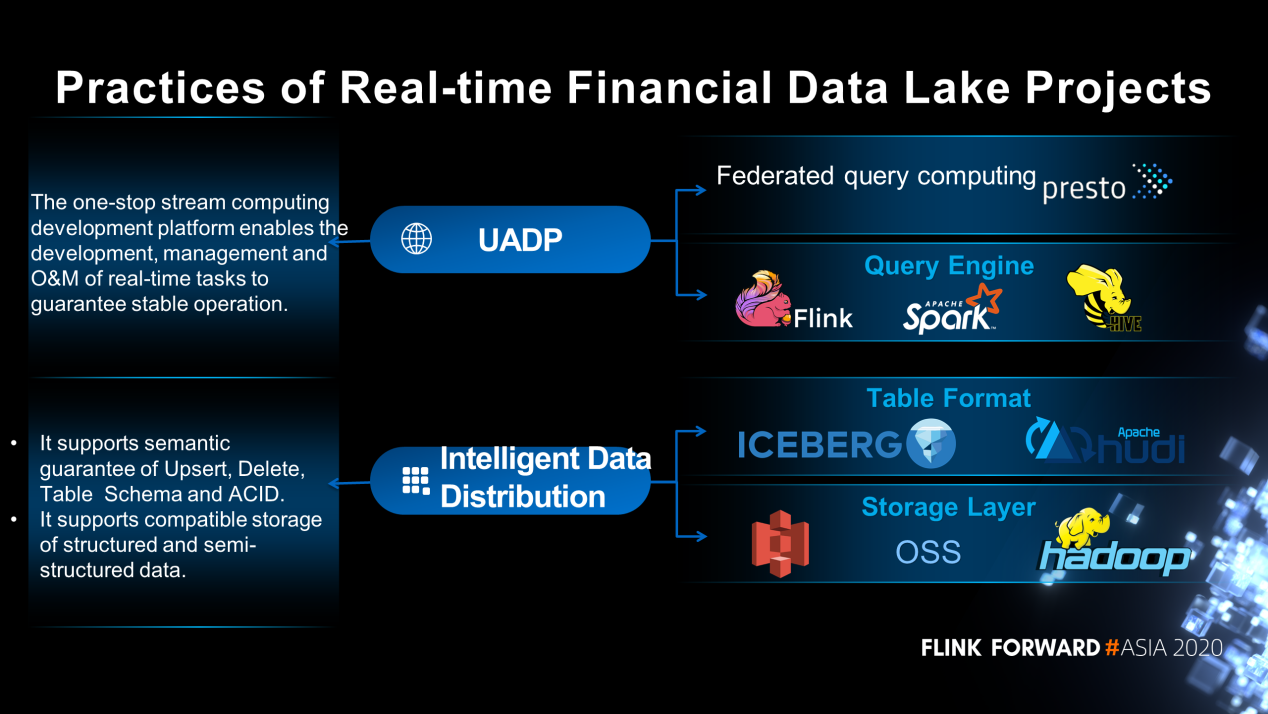

The practices mainly target real-time analysis of structured data. As shown in the figure, the open-source architecture consists of four layers: the storage layer, the table structure layer, the query engine layer, and the federated computing layer.

The storage layer and table structure layer together make up the intelligent data distribution, which supports Upsert, Delete, table schema, and ACID semantics. It is also compatible with the storage of semi-structured and unstructured data.
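To make the Upsert and Delete semantics above concrete, here is a minimal sketch in plain Python. It is not the Iceberg API or the bank's implementation: `Change`, `apply_changes`, and the row fields are hypothetical names chosen for illustration. A batch of changes is applied to a copy of a keyed table, so a failed batch leaves the previous snapshot untouched, a toy analogue of an atomic commit.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class Change:
    """One row-level change (hypothetical structure, for illustration only)."""
    op: str                      # "UPSERT" or "DELETE"
    key: str                     # primary key of the row
    row: Optional[dict] = None   # new row content, required for UPSERT


def apply_changes(table: Dict[str, dict], changes: Iterable[Change]) -> Dict[str, dict]:
    """Apply a batch of changes and return a new snapshot.

    The input table is never mutated, so if any change is invalid the
    caller still holds the old, consistent snapshot.
    """
    snapshot = dict(table)
    for change in changes:
        if change.op == "UPSERT":
            if change.row is None:
                raise ValueError(f"UPSERT for key {change.key!r} needs a row")
            # Insert the row if the key is new, otherwise overwrite it.
            snapshot[change.key] = dict(change.row)
        elif change.op == "DELETE":
            # Deleting a missing key is treated as a no-op.
            snapshot.pop(change.key, None)
        else:
            raise ValueError(f"unknown operation {change.op!r}")
    return snapshot
```

For example, upserting an existing key replaces the row, and a later delete in the same batch removes it; the caller swaps in the returned snapshot only if the whole batch succeeded.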

The query engine layer and federated computing layer together make up the UADP, a one-stop data development platform that supports the development of both real-time and offline data tasks.

This article focuses on real-time data development. The one-stop stream computing development platform, described later, supports the development, management, and O&M of real-time tasks to keep them running stably.

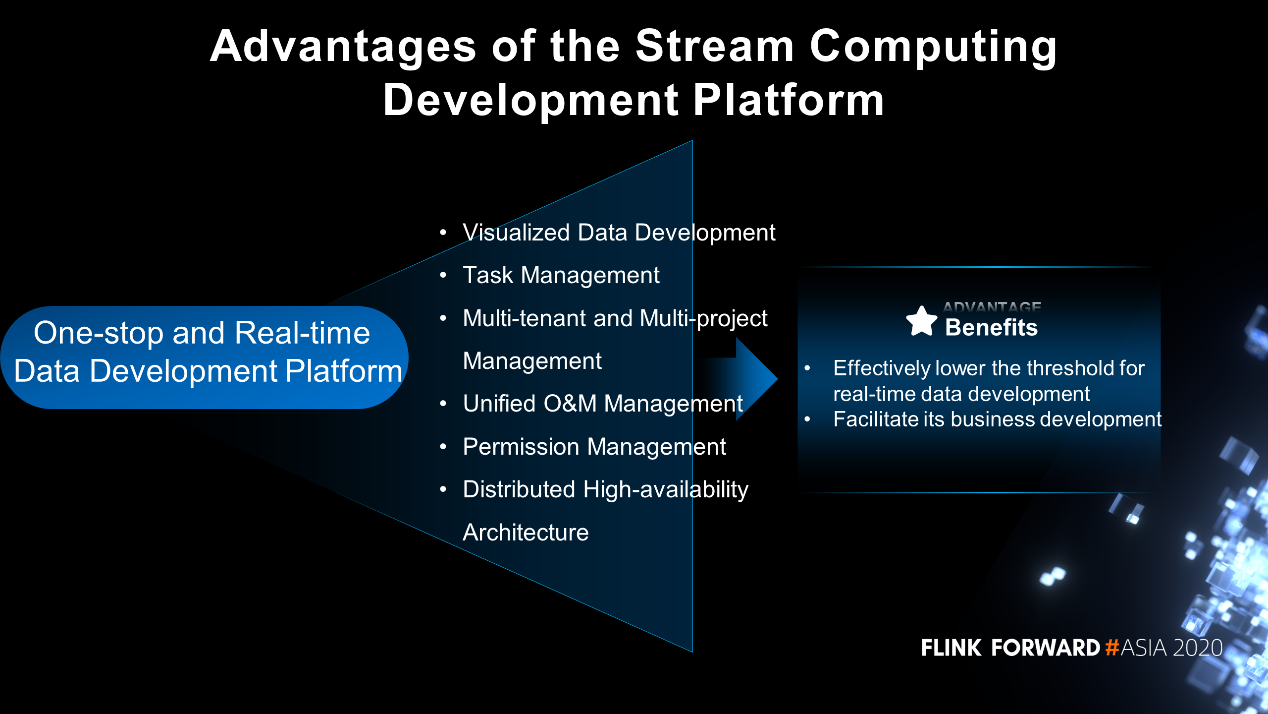

Why do banks need a stream computing development platform? What are its advantages?

The stream computing development platform effectively lowers the threshold for real-time data development, which in turn helps the business grow. It is a one-stop platform for developing real-time data tasks, offering visualized data development and task management, multi-tenant and multi-project management, and unified O&M and permission management. The platform is built on Flink SQL, which is inherently productive.

As the use of Flink SQL matures, the capabilities of the stream computing development platform can be extended down to branch banks, which can then independently develop real-time data tasks based on their own business requirements.

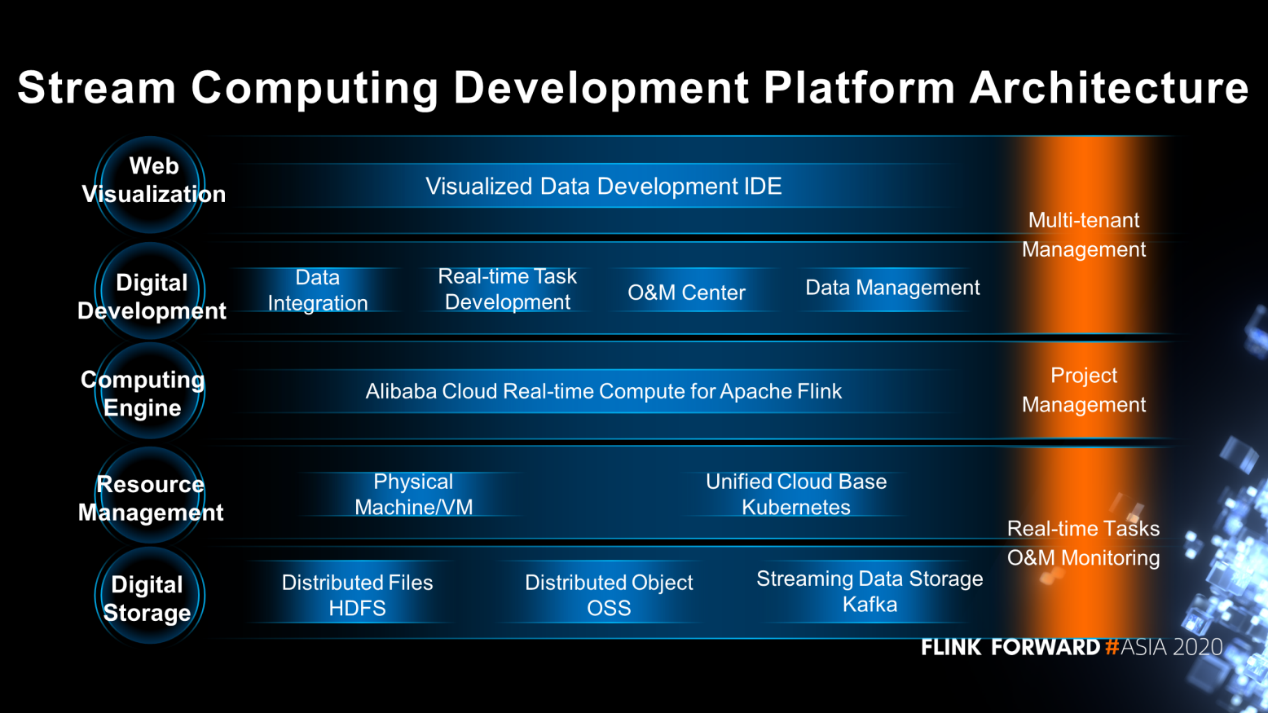

The following figure shows the architecture of the stream computing development platform, including data storage, resource management, computing engines, data development and Web visualization.

It supports multi-tenant and multi-project management, as well as O&M monitoring for real-time tasks. The platform can manage resources on both physical and virtual machines, and it supports Kubernetes as a unified cloud base. The computing engine is based on Flink and provides data integration, real-time task development, an O&M center, data management, and a visual integrated development environment (IDE) for data development.

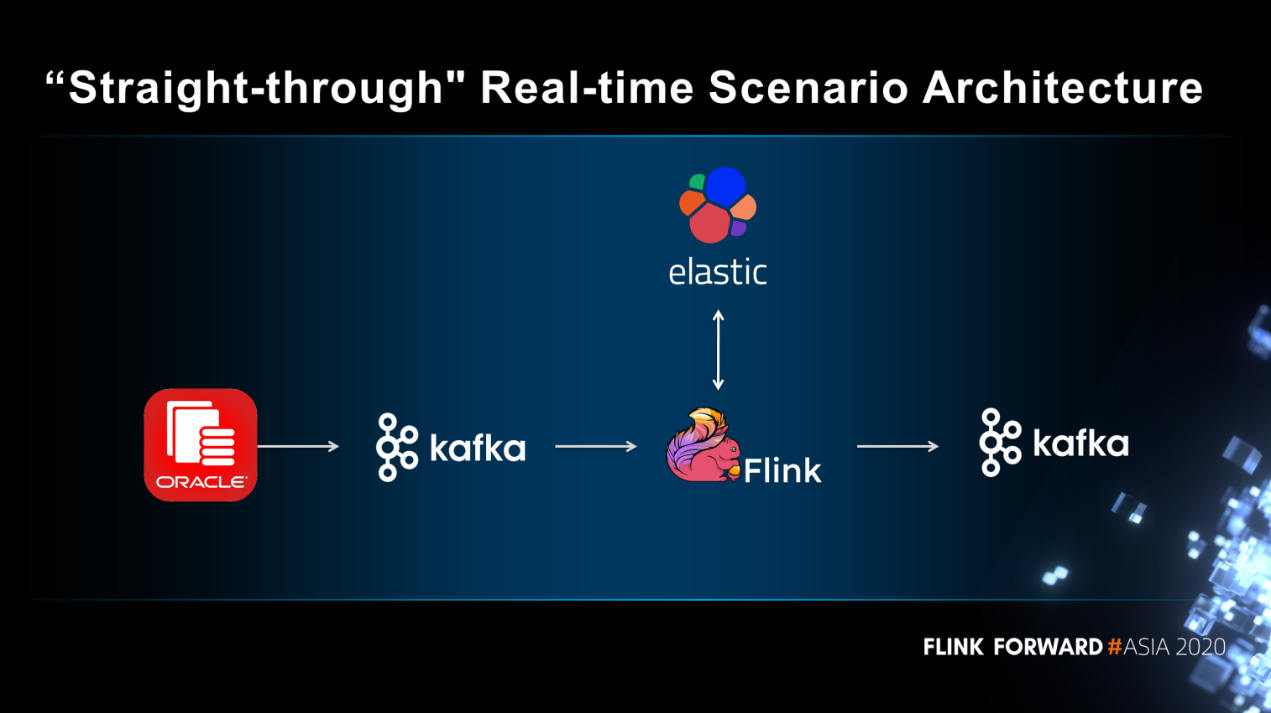

Specific scenarios are described below. The first is the "straight-through" architecture for real-time scenarios.

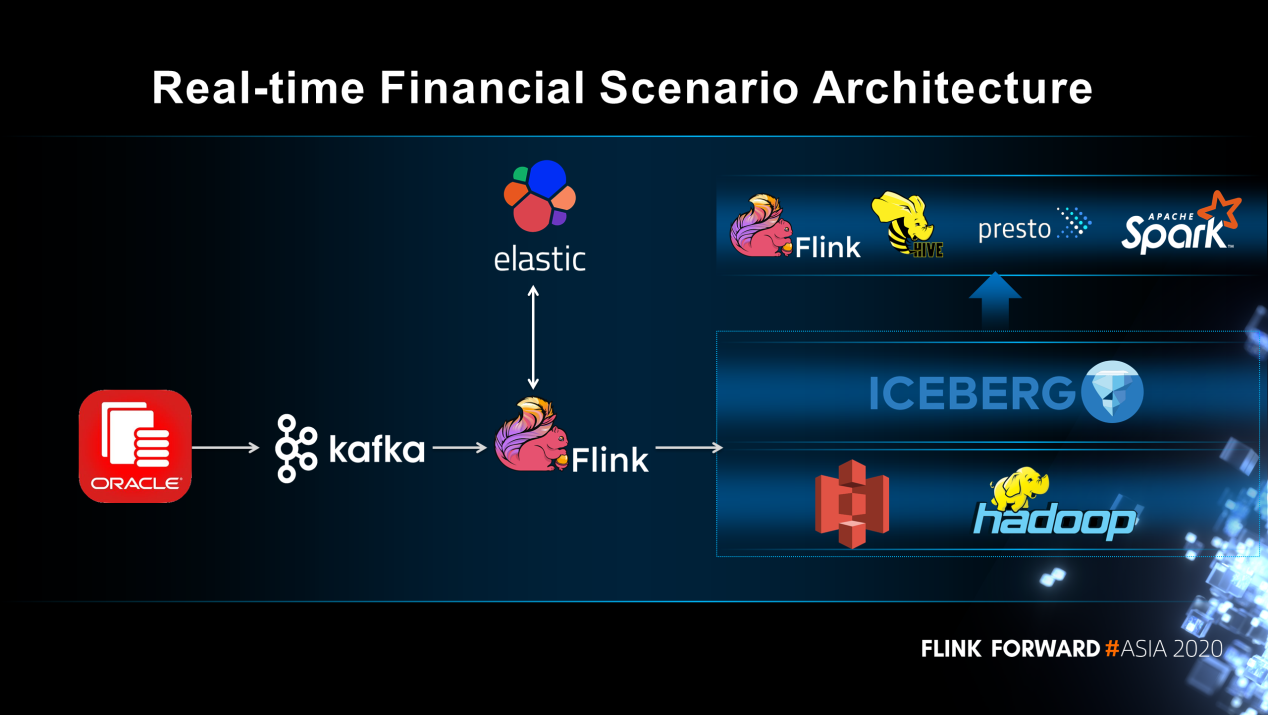

Data from different sources is ingested into Kafka in real time. Flink then reads the data from Kafka, processes it, and sends the results to the business side, which can be Kafka or a downstream store such as HBase. Dimension table data is stored in Elastic. The "straight-through" architecture achieves T+0 data timeliness and is mainly used in real-time decision-making scenarios.
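The flow above (read events, look up dimension data, emit enriched results) can be sketched in plain Python. This is only an illustration under stated assumptions: a list stands in for the Kafka topic, a dictionary stands in for the dimension table in Elastic, and the function `enrich` and all field names are hypothetical. In the platform described here, this logic would be expressed in Flink SQL.

```python
from typing import Dict, Iterable, Iterator


def enrich(events: Iterable[dict], dim_table: Dict[str, dict]) -> Iterator[dict]:
    """Join each incoming event with its dimension row, one event at a time.

    events:    stand-in for records consumed from a Kafka topic
    dim_table: stand-in for a dimension table keyed by customer_id
    """
    for event in events:
        # Events without a matching dimension row pass through unchanged.
        dim = dim_table.get(event["customer_id"], {})
        yield {**event, **dim}


# Hypothetical sample data for illustration.
customers = {"c1": {"segment": "retail", "branch": "Zhengzhou"}}
transactions = [
    {"customer_id": "c1", "amount": 250.0},
    {"customer_id": "c2", "amount": 10.0},
]

# The "sink" is a list here; in the article it is Kafka or HBase.
sink = list(enrich(transactions, customers))
```

Because each event is enriched as soon as it arrives, results are available without waiting for a daily batch, which is what gives this path its T+0 timeliness.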

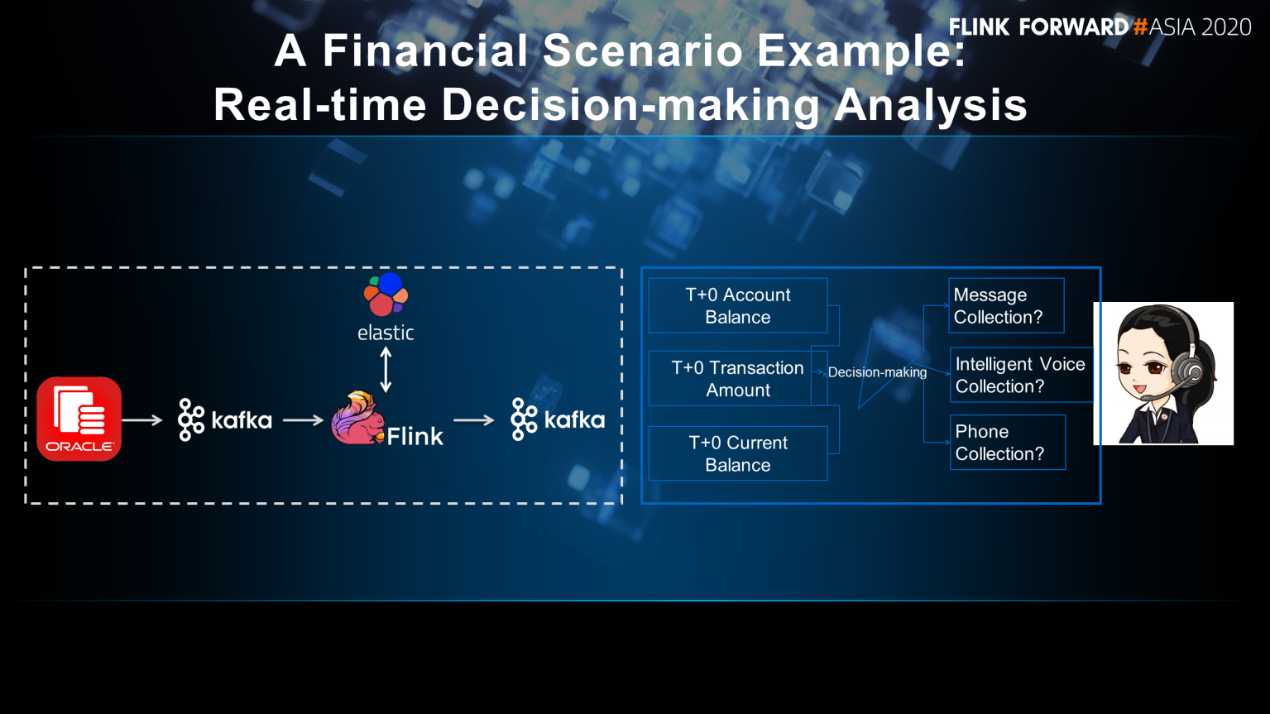

Take the collection of loans that are coming due as an example. The business depends on three values: account balance, transaction amount, and current balance. Based on these three values, it must decide which collection channel to use for each case: a message, an intelligent voice call, or a phone call.

With the original offline data warehouse architecture, the data obtained is T+1. Decisions based on stale data can lead to a situation in which a customer has already made the payment but still receives a collection call. With the straight-through architecture, account balance, transaction amount, and current balance are available at T+0, so decisions are made in real time and the user experience improves.
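The difference between T+1 and T+0 data can be illustrated with a small decision function. The article does not give the bank's actual rules, so everything here is an assumption made for illustration: the `LoanSnapshot` fields, the thresholds, and the mapping to channels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoanSnapshot:
    """State of one loan at decision time (hypothetical fields)."""
    amount_due: float       # amount that is coming due
    account_balance: float  # funds currently in the repayment account
    repaid_amount: float    # repayments already observed


def choose_channel(snapshot: LoanSnapshot) -> str:
    """Pick a collection channel; the thresholds are illustrative only."""
    remaining = snapshot.amount_due - snapshot.repaid_amount
    if remaining <= 0:
        return "none"               # already paid, do not contact
    if snapshot.account_balance >= remaining:
        return "message"            # funds are available, a reminder suffices
    if snapshot.account_balance >= 0.5 * remaining:
        return "intelligent_voice"
    return "phone"


# Yesterday's batch snapshot (T+1) has not seen today's repayment.
stale = LoanSnapshot(amount_due=1000.0, account_balance=100.0, repaid_amount=0.0)
# The real-time snapshot (T+0) already reflects it.
fresh = LoanSnapshot(amount_due=1000.0, account_balance=100.0, repaid_amount=1000.0)

stale_decision = choose_channel(stale)
fresh_decision = choose_channel(fresh)
```

The same rule gives a phone call on the stale snapshot and no contact on the fresh one, which is exactly the customer experience problem the straight-through architecture is meant to avoid.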

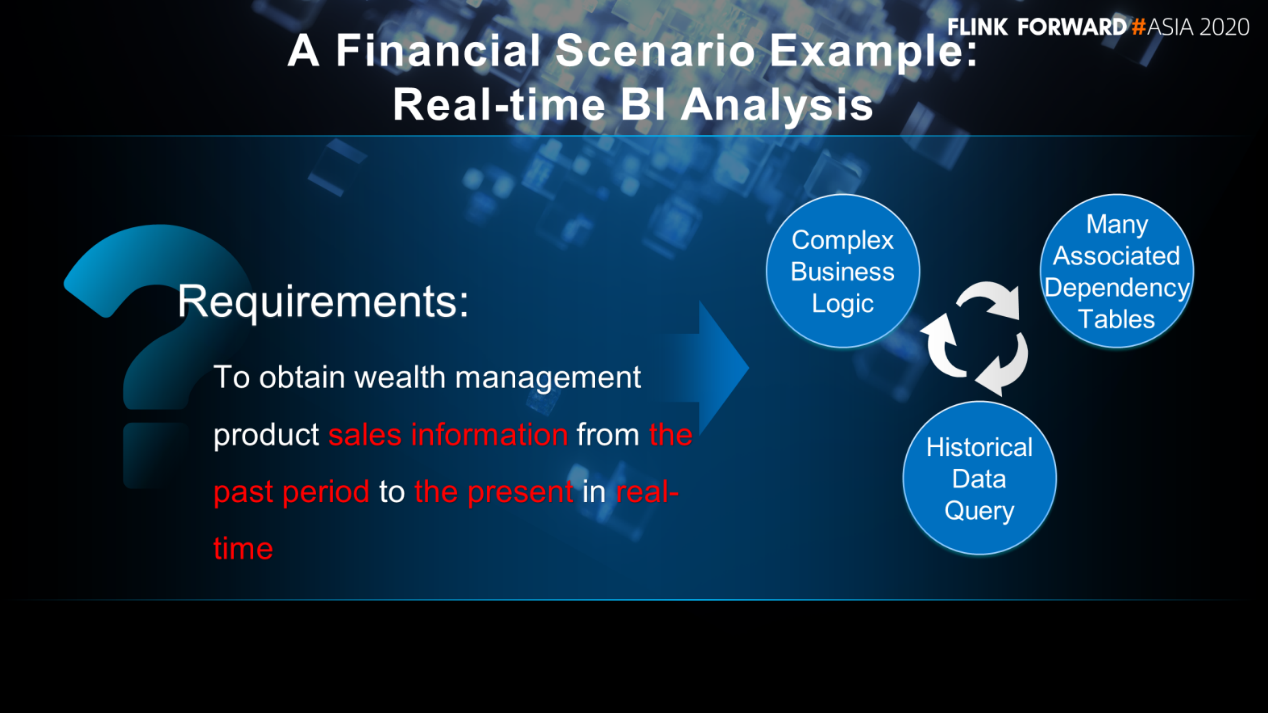

A requirement can be analyzed through its keywords. Take the requirement to obtain, in real time, wealth management product sales information from a past period up to the present. "Real-time acquisition" means that T+0 data is required. "From a past period up to the present" involves queries over historical data. Sales information for wealth management products is tied to the banking business, which is generally complex and requires multi-stream joins.

This is a real-time BI requirement, and the "straight-through" architecture cannot handle it effectively. That architecture is based on Flink SQL, which cannot query historical data efficiently. In addition, banking business is often complex and requires double-stream joins. Solving this problem calls for a new architecture, different from the "straight-through" architecture for real-time scenarios.
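As a rough illustration of what a double-stream join involves, the sketch below joins two event streams on a key when their timestamps are close enough. It is a simplified, in-memory model rather than Flink code: the `interval_join` function, the event layout, and the sample data are hypothetical, and sorting the merged input stands in for event-time ordering in a real stream processor.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

Event = Tuple[int, str, dict]  # (timestamp in seconds, join key, payload)


def interval_join(left: Iterable[Event], right: Iterable[Event],
                  max_gap: int) -> Iterator[Tuple[str, dict, dict]]:
    """Emit (key, left_payload, right_payload) for events that share a key
    and whose timestamps differ by at most max_gap seconds."""
    tagged = [(ts, 0, key, p) for ts, key, p in left]
    tagged += [(ts, 1, key, p) for ts, key, p in right]
    # Process both streams in timestamp order (left first on ties).
    tagged.sort(key=lambda e: (e[0], e[1]))

    # One buffer per side: key -> list of (timestamp, payload).
    buffers: Tuple[Dict[str, List], Dict[str, List]] = (
        defaultdict(list), defaultdict(list))
    for ts, side, key, payload in tagged:
        candidates = buffers[1 - side][key]
        # Drop buffered events that are too old to ever match again.
        candidates[:] = [(ots, op) for ots, op in candidates if ts - ots <= max_gap]
        for _, other_payload in candidates:
            if side == 0:
                yield key, payload, other_payload
            else:
                yield key, other_payload, payload
        buffers[side][key].append((ts, payload))


# Hypothetical streams: product orders and the matching payments.
orders = [(1, "p1", {"order": "o1"}), (10, "p1", {"order": "o2"})]
payments = [(3, "p1", {"paid": 100}), (30, "p1", {"paid": 5})]
joined = list(interval_join(orders, payments, max_gap=5))
```

Both sides must be buffered until the other side can no longer match, which is why such joins need managed state and why adding long historical ranges on top of them is hard in a purely streaming path.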

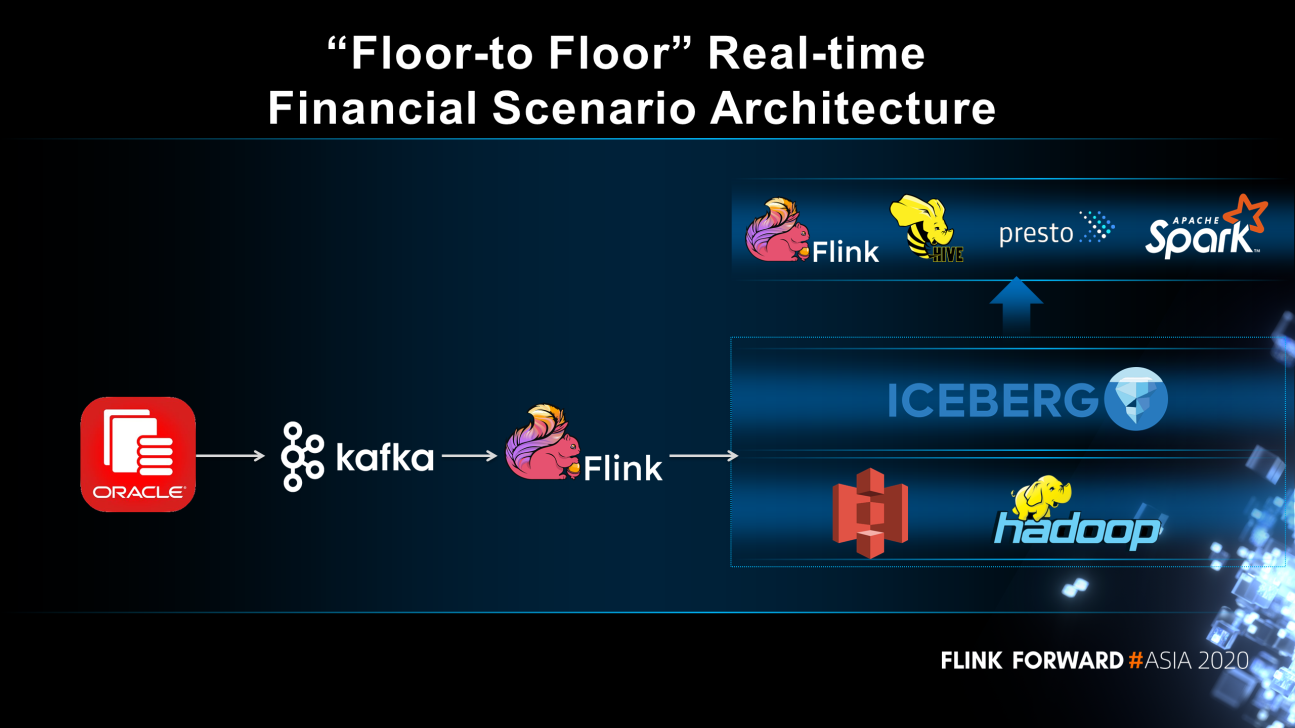

After source data is ingested into Kafka, Flink processes the data in Kafka in real time and writes the results to the data lake. The data lake is built on open-source components: data is stored on HDFS and S3, and the table format is Iceberg. While reading and processing Kafka data in real time, Flink can also write intermediate results into the data lake and process them step by step to obtain the desired results. These results are then served to applications through query engines such as Flink, Spark, and Presto.
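The step-by-step pattern described above can be sketched as follows: one stage cleans raw events and appends them to a detail table, and a later stage queries that table over any historical range. This is a plain-Python illustration, not Iceberg or Flink code; `LakeTable`, `clean`, `sales_by_product`, and the field names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


class LakeTable:
    """Append-only, in-memory stand-in for a table in the data lake."""

    def __init__(self) -> None:
        self.rows: List[dict] = []

    def append(self, rows: Iterable[dict]) -> None:
        self.rows.extend(rows)

    def scan(self, since: int) -> List[dict]:
        """Return all rows with a timestamp at or after `since`."""
        return [row for row in self.rows if row["ts"] >= since]


def clean(raw_events: Iterable[dict]) -> List[dict]:
    """Stage 1: drop incomplete events and normalize the remaining fields."""
    return [
        {"ts": e["ts"],
         "product": e["product"].strip().upper(),
         "amount": float(e["amount"])}
        for e in raw_events
        if e.get("amount") is not None
    ]


def sales_by_product(detail: LakeTable, since: int) -> Dict[str, float]:
    """Stage 2: aggregate sales from a past point in time up to now."""
    totals: Dict[str, float] = defaultdict(float)
    for row in detail.scan(since):
        totals[row["product"]] += row["amount"]
    return dict(totals)


# Two micro-batches arrive over time and land in the detail table.
detail = LakeTable()
detail.append(clean([{"ts": 1, "product": " wm1 ", "amount": "100"},
                     {"ts": 2, "product": "wm2", "amount": None}]))
detail.append(clean([{"ts": 5, "product": "WM1", "amount": 50}]))
```

Because intermediate results are persisted, the same detail table answers both "sales since the beginning" and "sales since time 3" without replaying the stream.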

The real-time financial architecture of Zhongyuan Bank covers both "straight-through" and "floor-to-floor" real-time scenarios. Data is ingested into Kafka in real time, and Flink reads it from Kafka and processes it in real time. The dimension table data is stored in Elastic.

There are two cases:

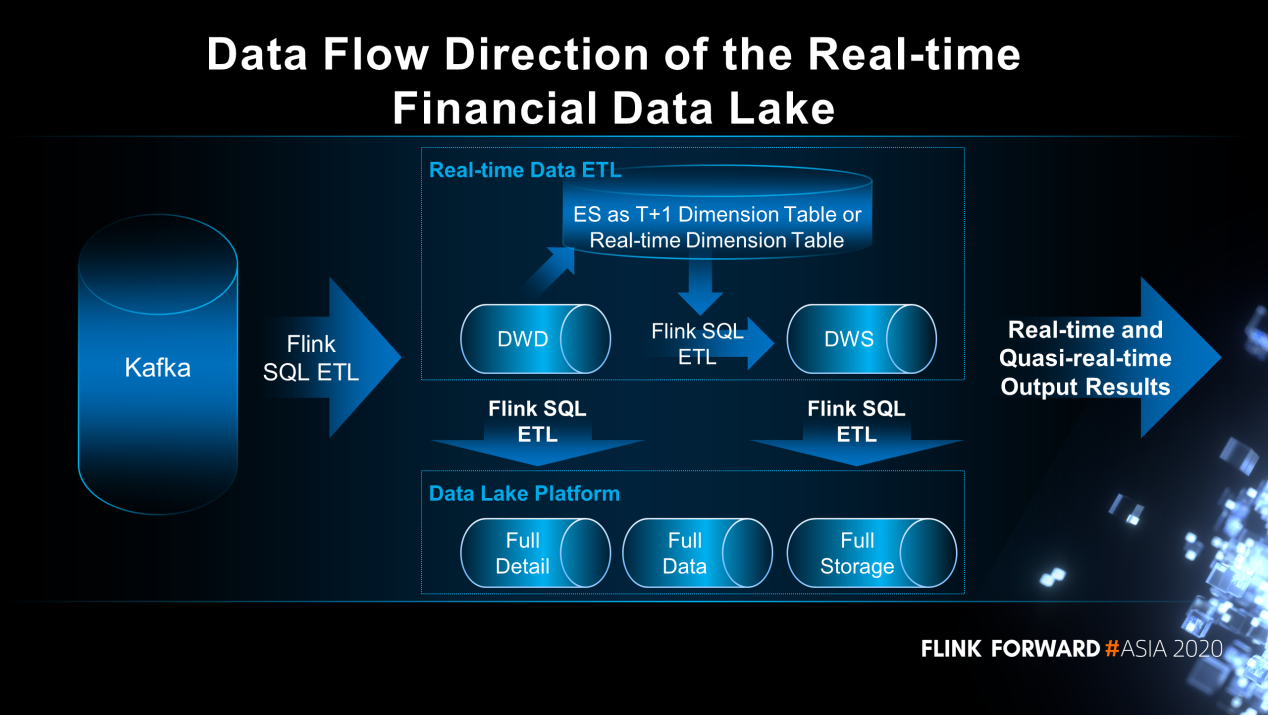

This figure shows the data flow of the real-time financial data lake. All source data arrives through Kafka, and Flink SQL reads it in real time. Applications are served either through real-time data ETL (Extract-Transform-Load) or through the data lake platform, producing real-time and quasi-real-time results respectively. Real-time data ETL corresponds to the "straight-through" architecture for real-time scenarios, while the data lake platform corresponds to the "floor-to-floor" architecture for real-time application scenarios.

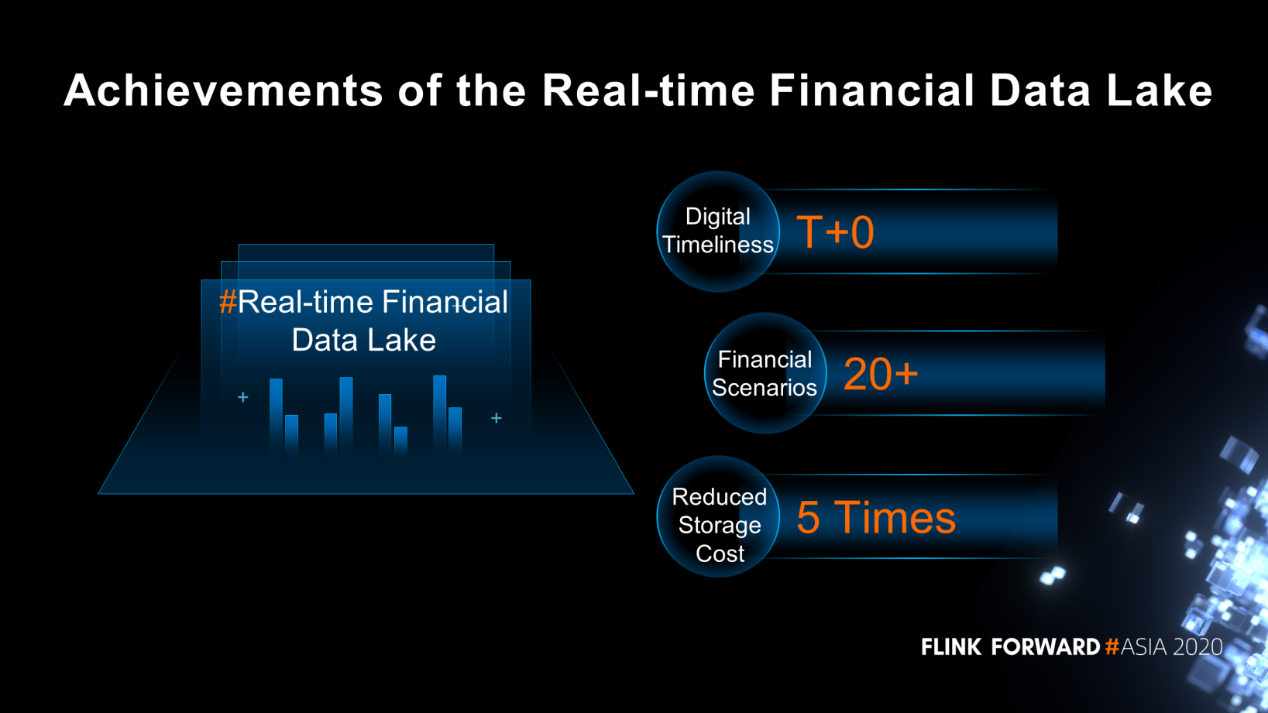

Continuous construction has produced a series of achievements. T+0 data timeliness is now available, more than 20 financial products are supported, and storage costs have been reduced by a factor of 5.

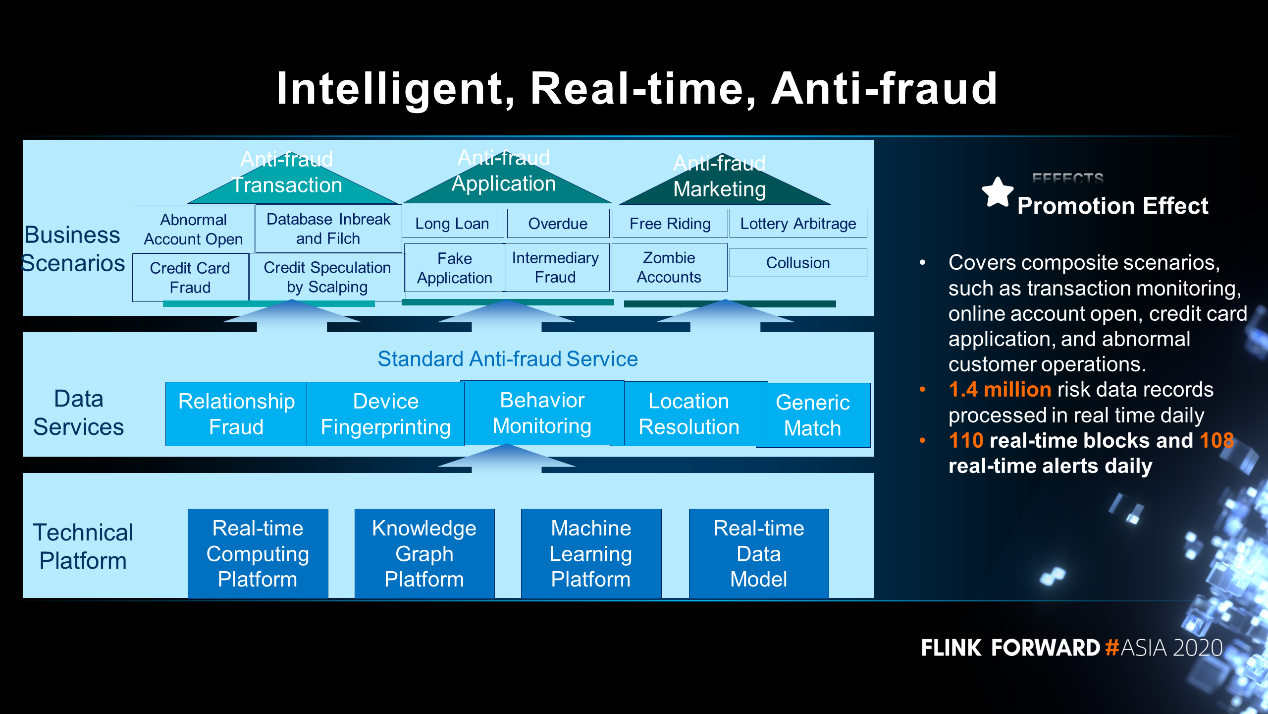

The real-time financial data lake is mainly used for real-time BI and real-time decision-making. A typical real-time decision-making application is the intelligent real-time anti-fraud business, which relies on the real-time computing platform, the knowledge graph platform, the machine learning platform, and real-time data models. It provides a series of data services, including relationship fraud, device fingerprinting, behavior monitoring, location resolution, and generic matching, to support anti-fraud in transaction, application, and marketing scenarios.

Currently, 1.4 million risk data records are processed in real time daily, with 110 real-time blocks and 108 real-time alerts per day.

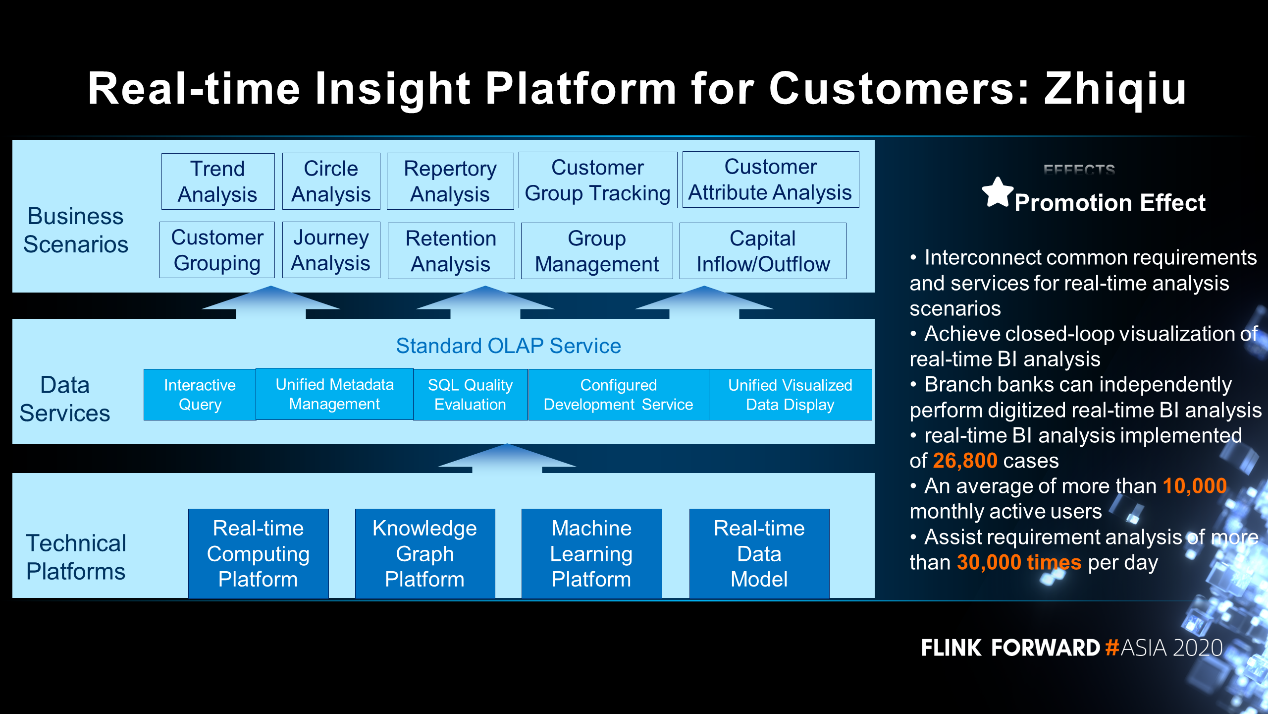

Take the customer real-time insight platform as an example. Known internally as the Zhiqiu platform, it relies on the real-time computing platform, the knowledge graph platform, the customer profile platform, and the intelligent analysis platform. Together, these platforms provide interactive query services, unified metadata management services, SQL quality assessment services, configuration development services, unified visual data display, and more. The platform supports scenarios such as trend analysis, circle analysis, retention analysis, and customer group analysis.

Common requirements and services for real-time analysis scenarios are now interconnected, which closes the loop on visualized real-time BI analysis. Branch banks can perform digitized real-time BI analysis independently. So far, 26,800 real-time BI analysis cases have been implemented, with an average of more than 10,000 monthly active users, and the platform assists with more than 30,000 real-time BI analysis requests per day.
