By Zhaofeng Zhou (Muluo)

Apache Lucene is an open-source, high-performance, scalable data retrieval engine with powerful data retrieval capabilities. Lucene has been developed over many years, providing more powerful features and an increasingly refined architecture. It currently supports whole-text indexing as well as providing many other types of indexing to satisfy the requirements of different types of query.

Many open-source projects are based on Lucene. The best known are Elasticsearch and Solr. If we compare Elasticsearch and Solr to an exquisitely designed, high-performance sports car, then Lucene would be the engine providing the powerful motive force. We need to study its engine in minute detail to tune the car to give a faster and more stable ride.

We have previously published many articles in this column analyzing Elasticsearch's data model, read/write path, distributed architecture and data/meta consistency. After this article, we plan a series of articles on the principles of Lucene and its source code to give a complete analysis of Lucene's data model and data read/write path.

Lucene officially summarizes its advantages in several points:

We hope that through this series of articles, readers will come to an understanding of how Lucene achieves these goals.

The whole analysis is based on Lucene 7.2.1. Some background knowledge is required before reading this article, including an understanding of basic searching and index principles, concepts such as inverted indexes, tokenizing and relevance, as well as an understanding the basic uses of Lucene, for example, Directory, IndexWriter and IndexSearcher.

Before going into the details of Lucene, it is good to know a few basic concepts as well as some hidden aspects behind these concepts.

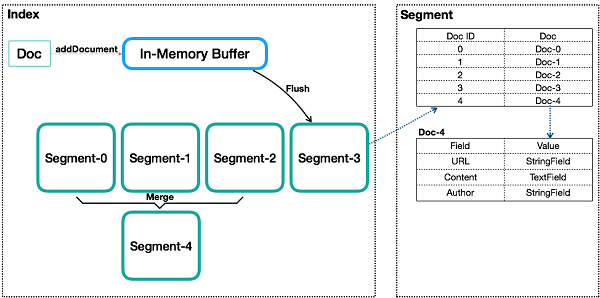

The figure shows the basic internal structure of an index. The data in a segment is represented abstractly rather than as a realistic representation of the actual data structure.

This is similar conceptually to a database table, but differs in some important ways. The table in a traditional relational database or NoSQL database needs to at least have its scheme defined when it is created. There are some clear constraints on the definition of the primary key, columns and the like. However, there are no such constraints on a Lucene index. A Lucene index can be understood as a document folder. You can put a new document into the folder or you can take one out, but if you want to modify one of the documents, you must first take it out, modify it and then put it back in the folder. You can put all sorts of documents in the folder, and Lucene can index the document whatever the content.

Similar to a row in a relational database or a document in a document database, an index can contain multiple documents. A document written into an index is assigned a unique ID, that is, a sequence number (more usually called a DocId). Sequence numbers are discussed in detail later on.

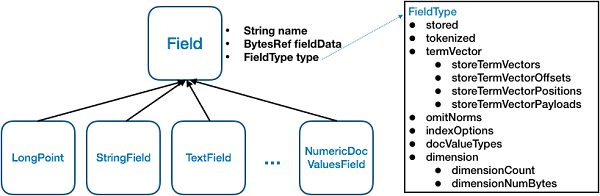

One document is made up of one or more fields. A field is the smallest definable unit of a data index in Lucene. Lucene provides many different types of field, including StringField, TextField and NumericDocValuesField. Lucene determines the type of indexing (such as Invert Index, Store Field, DocValues or N-dimensional) to use for the data depending on the type of field (FieldType). Field and FieldType will be discussed in detail later on.

The smallest unit of index and search in Lucene. A field consists of one or more terms. A term is produced when a field is put through an Analyzer (tokenizer). A term dictionary is the basic index used to perform conditional searches on terms.

An index is composed of one or more sub-indexes. A sub-index is called a segment. The conceptual design of Segments in Lucene is similar to LSM but there are some differences. They inherit the data writing advantages of LSM but only provide near real-time and not real-time queries.

When Lucene writes data it first writes to an in-memory buffer (similar to MemTable in LSM, but not readable). When the data in the Buffer reaches a certain amount, it will be flushed to become a Segment. Every segment has its own independent index and are independently searchable, but the data can never be changed. This scheme prevents random writes. Data is written as Batch or as an Append and achieves a high throughput. The documents written in the Segment cannot be modified, but they can be deleted. The deletion method does not change the file in its original, internal location, but the DocID of the document to be deleted is saved by another file to ensure that the data file cannot be modified. Index queries need to query multiple Segments and merge the results, as well as handling deleted documents. In order to optimize queries, Lucene has a policy to merge multiple segments and in this regard is similar to LSM's Merge of SSTable.

Before segments are flushed or committed, data is stored in memory and is unsearchable. This is another reason why Lucene is said to provide near-real time and not real-time queries. After reading the code, I found that it's not that it can't implement data writing that's searchable, just that it's complicated to implement. The reason for this is the searching of data in Lucene relies on the index setup (for example, an inverted index relies on Term Dictionary), and the data index in Lucene is set up during a Segment flush and not in real-time. The purpose of this is to set up the most efficient index. Naturally, it can introduce another index mechanism that sets up the index in real time as it is written, but the implementation of such a mechanism would differ from the current index in the segment, needing the introduction of an extra index at write time and an extra query mechanism, involving some degree of complexity.

Sequence Number (called DocId below) is an important concept in Lucene. A database uniquely identifies a row using a primary key while Lucene's Index uniquely identifies a Doc by its DocId. However, the following points should be kept in mind:

The inverted index, the very core of Lucene, is essentially a list mapping each Term to the DocId's of the document containing the Term. So when Lucene is searching internally, it makes a two-phase query. The first phase is to list the DocId's found to contain the given Term, and the second phase is to find the Doc based on the DocId. Lucene provides functionality to search by Term as well as to query on the basis of DocId.

DocId is basically an int32 value starting from 0, which is an important optimization, also reflected in data compression and query efficiency. This includes such optimizations as the Delta policy for data compression, ZigZag code and SkipList used in inverted index lists. These are described in detail later on.

Lucene supports many types of field and each type of field determines the supported data types and index modes. Currently supported field types include LongPoint, TextField, StringField, and NumericDocValuesField.

The figure shows the basic relationship between the different types of Field in Lucene. All Field types inherit from the class Field. Field has three main attributes: name(String), fieldsData(BytesRef) and type(FieldType). The attribute name is the name of the field, fieldsData is the field value. The value of all types of field are ultimately represented as binary byte streams. The attribute type is the type of field, which determines how the field is indexed.

FieldType is an important class containing many important attributes. The value of these attributes decides how the field is indexed.

Lucene provides many different types of Field. They have two essential differences: the first difference is the different type values in fieldData define different conversion methods; and the second is defining combinations of different values in different attributes in FieldType. In this mode, you can customize types using custom data and combining the index parameters in FieldType.

You only have to understand the specific meaning of each attribute in FieldType to know what index modes Lucene can provide. Let's look at them one by one:

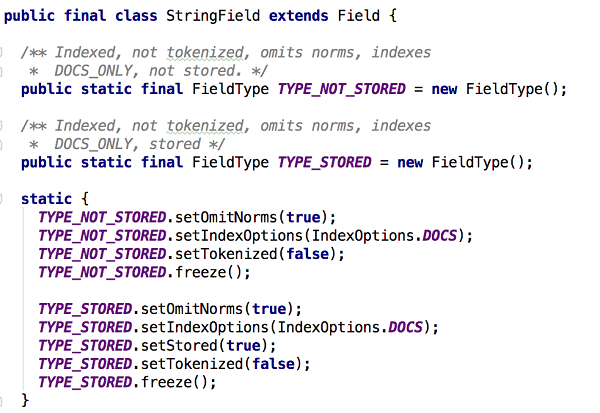

Let's look at the defining a StringField in Lucene:

StringField defines two types of index: TYPE_NOT_STORED and TYPE_STORED. The only difference is whether the Field needs to be stored. It can be interpreted from other attributes that if omitNorms is selected for StringField, inverted indexing without tokenization is required.

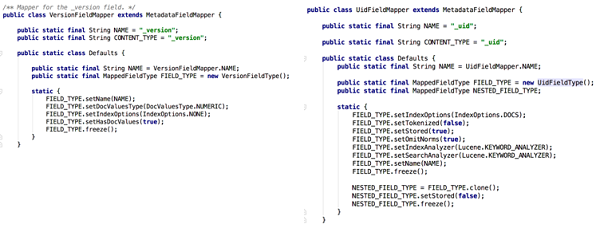

The indexing modes of Fields in documents input by users in Elasticsearch are also provided according to Lucene's indexing modes. Elasticsearch has its own reserved system fields for certain special purposes, in addition to user-defined fields. These fields map to what in effect are Fields in Lucene and which are the same as user-defined Fields. However, different indexing methods have been formulated in Elasticsearch depending on the purposes of these system fields.

For example, the preceding figure shows the definitions in FieldType of _version and _uid of two system fields in Elasticsearch. Let's decode their indexing methods. Elasticsearch uniquely identifies a document by its _uid field and records the current version of the document by its _version field. From the FieldType definitions of these two fields we can see that the _uid field will be indexed by an inverted index, it does not need tokenizing and needs to be stored. The _version field does not need to be indexed by an inverted index, does not need storing but needs forward indexing. This is easy to understand because _uid has to be searched but _version does not. However, _version has to be queried by docId while the versionMap in Elasticsearch has to do a large number of queries by docId but only needs to query the _version field. making _version most suited to forward indexing.

See the next article for a complete analysis of system fields in Elasticsearch.

This article mainly deals with some of Lucene's basic concepts and the index types supported. We are planning a series of future articles to analyze how IndexWriter, part of Lucene, writes, the structure of its in-memory buffers and the structure of its index file once persistent, to understand how Lucene achieves such highly efficient data indexing. We will also look at IndexSearcher's query process in addition to some special data structures to optimize queries to see why Lucene provides such highly efficient search and query.

57 posts | 12 followers

FollowAlibaba Cloud Community - April 15, 2024

Alibaba Cloud Storage - March 28, 2019

ApsaraDB - December 13, 2024

Alibaba Cloud Storage - April 10, 2019

ApsaraDB - July 8, 2021

ApsaraDB - July 7, 2021

57 posts | 12 followers

Follow Alibaba Cloud Elasticsearch

Alibaba Cloud Elasticsearch

Alibaba Cloud Elasticsearch helps users easy to build AI-powered search applications seamlessly integrated with large language models, and featuring for the enterprise: robust access control, security monitoring, and automatic updates.

Learn More Storage Capacity Unit

Storage Capacity Unit

Plan and optimize your storage budget with flexible storage services

Learn More Hybrid Cloud Storage

Hybrid Cloud Storage

A cost-effective, efficient and easy-to-manage hybrid cloud storage solution.

Learn More Hybrid Cloud Distributed Storage

Hybrid Cloud Distributed Storage

Provides scalable, distributed, and high-performance block storage and object storage services in a software-defined manner.

Learn MoreMore Posts by Alibaba Cloud Storage