Featuring simplicity, ease of use, and open-source support since its first launch, MySQL has become the database system of choice for many developers. Unsurprisingly, it has also played an important role in Alibaba's IT infrastructure on and off the cloud. As early as 2008, MySQL databases began to replace existing Oracle database systems at Alibaba as part of a campaign to reduce the company's dependence on the major system vendors IBM, Oracle, and EMC. As part of this transition, Alibaba developed AliSQL, which is based on MySQL but also adopts several additional features and performance patches to meet the unique demands and challenges of Alibaba's various transactional applications and ultra-high traffic scenarios like Double 11.

AliSQL quickly became an important piece of Alibaba's cloud infrastructure. By 2014, Alibaba had adopted active geo-redundancy as a new criterion for applications using AliSQL, because the former standard, zone-disaster recovery, could no longer keep up with Alibaba's growing requirements for scalable deployment, internationalization, and guaranteed disaster recovery. Active geo-redundancy also meant new disaster recovery requirements for the underlying databases. In the conventional master/slave architecture, data may be lost if the master node and secondary nodes are not deployed in strong synchronization mode. However, if a node encounters an exception in strong synchronization mode, the entire system becomes unavailable. In addition, in this architecture, the election of the primary node must be arbitrated and controlled with a high availability (HA) tool.

At Alibaba, we believe that technological development can bring greater O&M convenience and a better user experience. In the past, Alibaba database administrators (DBAs) worked diligently to develop an efficient and reliable HA system and a complete set of tools and processes to verify and correct the data and logs after failover. Along these same lines, the Alibaba Database Team continues to innovate and create new systems with superior capabilities.

In this article, we will discuss AliSQL X-Cluster, which is an all-new database system that offers strong consistency and superior performance. AliSQL X-Cluster, similar to Google Spanner and Amazon Aurora, was developed as a NewSQL system, to ensure strong data consistency through the development of distributed multi-replica databases based on the consensus protocol.

AliSQL X-Cluster (X-Cluster for short) is a distributed database cluster launched by Alibaba's Database Team. Compatible with MySQL 5.7, X-Cluster provides strong consistency and supports global deployment. In this new database system, the consensus protocol serves as the core of distribution.

X-Paxos is a consensus protocol library developed in-house at Alibaba. It aims to fill a market gap with a high-performance and easy-to-access solution. Existing open-source consensus protocol implementations, including etcd and those provided by other vendors, have relatively poor performance or cannot meet the requirements of complex real-world application scenarios in terms of functionality.

Unlike its open-source counterparts, X-Paxos allows you to build a highly consistent distributed system. X-Cluster is the first important product connected to the X-Paxos ecosystem. Based on X-Paxos, X-Cluster implements various features such as automatic primary node election, log synchronization, strong intra-cluster data consistency, and online cluster configuration change. In addition, because it is based on MySQL, X-Cluster is compatible with MySQL 5.7, and it integrates and enhances several of the legacy features of AliSQL. Therefore, those already using MySQL can migrate to X-Cluster easily and at no additional cost.

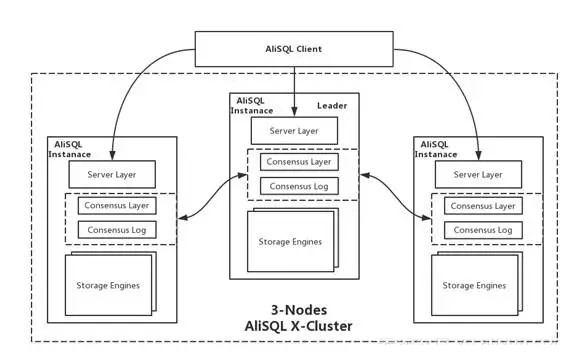

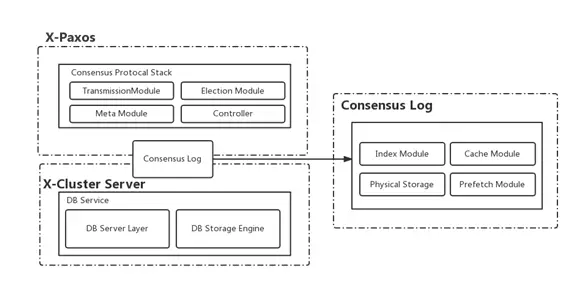

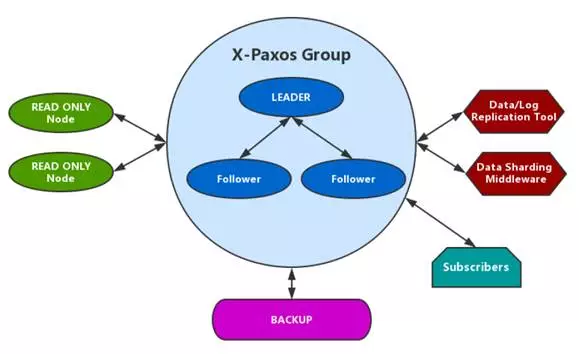

The following figure shows the basic architecture of X-Cluster.

The X-Cluster in the figure consists of three AliSQL instances. X-Cluster is a cluster system that supports single-point writing and multi-point reading. Only one leader can write data to the cluster at a time. Unlike multi-point writing, single-point writing avoids data set conflicts, thus achieving better performance and throughput.

Each instance in X-Cluster is a single-process system. X-Paxos is deeply integrated into the database kernel to replace the original replication module. The incremental data synchronization between cluster nodes is completely driven by X-Paxos.

Therefore, O&M personnel or external tools are no longer required in determining the replication process and the node where the playback starts. To pursue the highest performance, X-Cluster deeply transforms and customizes MySQL binary logs (Binlogs) as consensus logs of X-Paxos. It also implements a series of X-Paxos log APIs, enabling X-Paxos to directly manipulate MySQL logs.

To ensure cluster data consistency and global deployment capabilities, X-Cluster has been designed from the ground up with special attention given to transaction commitment, log replication and playback, and recovery.

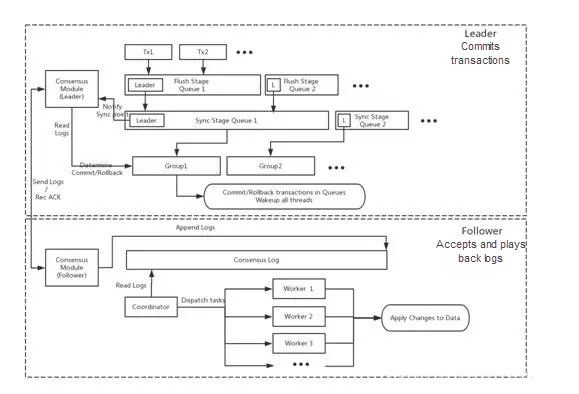

In X-Cluster's replication process, X-Paxos drives consensus logs to implement data replication.

In the prepare phase of a transaction, the leader collects the logs generated for the transaction and sends them to the X-Paxos protocol layer. Then, the leader enters waiting mode. The X-Paxos protocol layer efficiently forwards the consensus logs to other nodes in the cluster. After logs are written to more than half of the nodes in the cluster, X-Paxos notifies the leader that the transaction can proceed to the commit phase. Otherwise, if the leader changes during this phase, the prepared transaction will be rolled back based on the status of the Paxos logs. Compared with native MySQL, AliSQL optimizes log transmission with techniques such as multi-threading, asynchronization, batching, and pipelining. The efficiency gain is especially significant in persistent-connection scenarios.
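The majority rule that gates the commit phase can be sketched in a few lines of Python. This is only an illustration of the quorum arithmetic described above, not X-Paxos code; the function names and node labels are assumptions made for the example.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of nodes that is more than half of the cluster."""
    return cluster_size // 2 + 1


def can_commit(acked_nodes: set[str], cluster_nodes: set[str]) -> bool:
    """True once the log entry is persisted on more than half of the nodes.

    The leader counts itself, since it also writes the entry to its own log.
    """
    persisted = acked_nodes & cluster_nodes  # ignore acks from unknown nodes
    return len(persisted) >= majority(len(cluster_nodes))


cluster = {"A", "B", "C"}  # hypothetical three-node cluster, A is the leader
print(can_commit({"A"}, cluster))       # leader only: not yet a majority
print(can_commit({"A", "B"}, cluster))  # leader plus one follower: commit may proceed
```

With three nodes, the leader needs only one follower acknowledgment, which is why a single slow or failed follower does not block commits.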

The follower also uses X-Paxos to manage logs. To improve the receiving efficiency, the follower appends the log content that is received from the leader to the consensus log. The leader asynchronously sends the follower the messages about the log that reaches consensus. The follower's coordinator thread reads and parses the log that reaches consensus, and transfers the log to each playback worker thread for concurrent data updates. The follower can implement concurrent playback through group commit on the leader or based on the table level. In the future, the latest write set method will be introduced to precisely control the maximum concurrency.

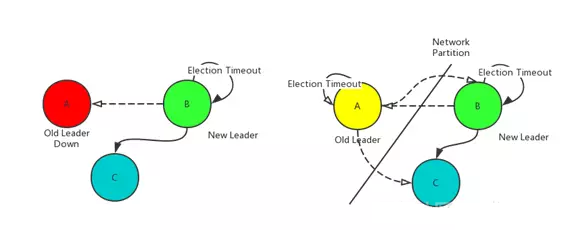

X-Cluster can continue to provide external services as long as more than half of the nodes in the cluster are healthy. Therefore, the cluster can still provide services when a minority of followers are faulty. When the leader becomes faulty, the system automatically triggers the leader election process for the cluster. The leader election process is driven by X-Paxos. The system elects a new leader based on the Paxos protocol and node priorities.

For X-Cluster, failover_time is the sum of election_time and apply_time. The election_time value indicates the time spent by the protocol in electing a leader, which is generally about 10 seconds. The apply_time value indicates the time spent in applying the log to the database, which depends on the playback speed. A strong parallel playback algorithm can control the log application time to within 10 seconds.
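As a rough worked example of this sum, the following Python sketch estimates the failover time from a log backlog and a playback rate. The backlog size and playback rate are hypothetical inputs chosen for illustration; only the relationship failover_time = election_time + apply_time comes from the text.

```python
def estimate_failover_time(election_time_s: float,
                           backlog_entries: int,
                           replay_rate_per_s: float) -> float:
    """failover_time = election_time + apply_time.

    apply_time is modeled here as backlog / playback rate, which is an
    assumption of this sketch rather than a documented X-Cluster formula.
    """
    apply_time_s = backlog_entries / replay_rate_per_s
    return election_time_s + apply_time_s


# Hypothetical numbers: 10 s election, 50,000 unapplied entries,
# parallel playback at 10,000 entries per second.
print(estimate_failover_time(10.0, 50_000, 10_000.0))
```

The faster the parallel playback, the smaller the second term becomes, so the election time dominates the total.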

Leader failure scenarios are relatively complex. The failures include instance crashes, server downtime, and network isolation.

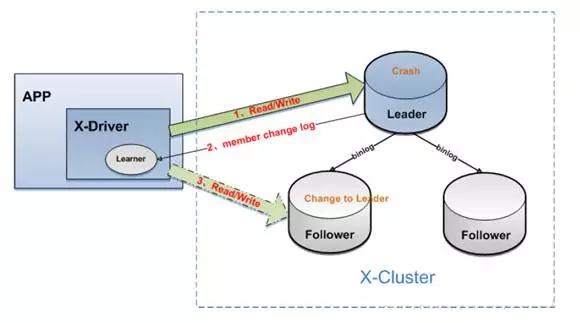

As shown in the preceding figure, in the three-node X-Cluster on the left, the original leader (node A) is faulty. As a result, nodes B and C cannot receive heartbeat messages from the leader for a long period of time. After an election timeout period, node B attempts to elect itself as the leader. With the consent of node C, node B becomes the new leader and resumes the service capabilities of the cluster.

In the three-node X-Cluster on the right, a network partition occurs and the original leader (node A) is separated from the cluster. In this case, after the election timeout period, the behavior of nodes B and C is similar to that in the left-side case. Node A fails to send heartbeat messages and logs to nodes B and C, and therefore is downgraded after timeout. Then, node A continuously attempts to elect itself as the leader. However, node A keeps failing, because it cannot reach consensus without the consent of other nodes. After the system recovers from the network partition, node A receives heartbeat messages from node B, and triggers the leader stickiness mechanism, so that node A is automatically added back to the cluster.

X-Cluster has a series of new functions and features, meeting the functional and performance requirements of different types of applications.

The most significant feature of X-Cluster is its support for cross-region deployment. When X-Cluster is deployed across regions, it can ensure strong consistency of cluster data and provide region-level disaster recovery. Even if all the data centers in a region are faulty, none of the data will be lost, provided that a majority of the nodes in the cluster are normal. With X-Paxos and asynchronization of worker threads in the database kernel, X-Cluster still has very high throughput even when the network round trip time (RTT) reaches tens of milliseconds. The cross-region deployment capability makes X-Cluster deployment very flexible. Based on the actual business situation and disaster recovery requirements, you can deploy services for intra-data center disaster recovery, inter-data center disaster recovery, or region-level disaster recovery, and can flexibly switch between these levels.

X-Cluster supports online cluster configuration changes. You can perform the following operations online:

All the preceding configuration modifications can be made online without blocking the normal running of services. In addition, cluster configuration changes strictly comply with the Paxos protocol, logs are recorded, the state machine is changed accordingly, and a comprehensive recovery mechanism is implemented. In this way, the eventual configurations in the cluster are consistent. This prevents split-brain caused by exceptions in the cluster configuration change process or inconsistency of the eventual states in other configurations.

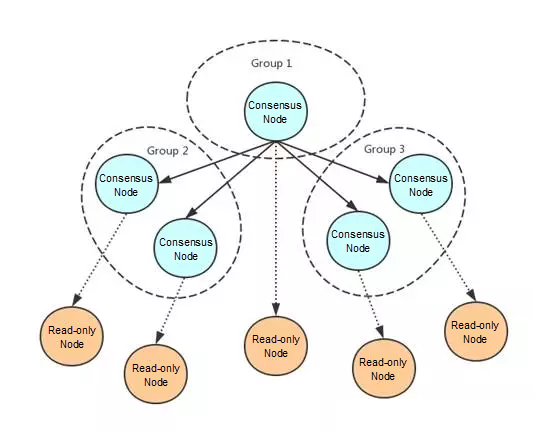

The nodes in X-Cluster are classified into two categories according to functionality: consensus nodes, which participate in leader election and the consensus protocol, and read-only nodes. Consensus nodes are the basis of X-Cluster. Currently, one X-Cluster must have at least one consensus node and can have a maximum of 99 consensus nodes. Read-only nodes can be scaled out without limits. You can start with a single-node cluster and subsequently add different types of nodes as required.

Applications usually have requirements for the new leader that is elected after disaster recovery. If the original leader fails unexpectedly and the newly elected leader has low specifications, applications are forced to degrade their services, which they are reluctant to do. X-Cluster therefore allows nodes in the same cluster to have different priorities. You can set the priority of each instance node based on the actual deployment requirements when configuring the cluster.

Prioritization primarily has the following functions:

Weighting the nodes during leader election means ranking the nodes by weight. If no network partition occurs during election, the leader election is based on the ranking of the weights of the active nodes in the cluster. A node with a higher weight takes a higher priority in contending to become the new leader. We implement a two-phase election time. The first phase is the unified lease time in the cluster, and the second phase is determined based on weights. The node with the higher weight has a shorter election time, indicating that the node starts self-election earlier. In addition, a weight detection mechanism is provided to enforce the weight-based prioritization. When a node becomes the leader, it broadcasts a message to check all the accessible nodes in the cluster. If a node with a higher weight than the leader is found, the leader initiates leader transfer to hand over the leader role.
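The two-phase election time and the weight check after election can be illustrated with a small Python sketch. The lease length, weight range, and per-weight step below are hypothetical values picked for the example; the text does not specify the real constants or formula.

```python
from typing import Optional

BASE_LEASE_MS = 5000  # hypothetical phase 1: lease shared by the whole cluster
MAX_WEIGHT = 9        # hypothetical weight range 1..9
STEP_MS = 500         # hypothetical phase 2 delay per weight level


def election_timeout_ms(weight: int) -> int:
    """Phase 1 lease plus a phase 2 delay that shrinks as the weight grows."""
    if not 1 <= weight <= MAX_WEIGHT:
        raise ValueError(f"weight must be in 1..{MAX_WEIGHT}")
    return BASE_LEASE_MS + (MAX_WEIGHT - weight) * STEP_MS


def first_candidate(weights: dict[str, int]) -> str:
    """The surviving node whose election timer fires first."""
    return min(weights, key=lambda node: election_timeout_ms(weights[node]))


def transfer_target(leader: str, reachable: dict[str, int]) -> Optional[str]:
    """After winning, the leader hands over if a reachable node outweighs it."""
    best = max(reachable, key=lambda node: reachable[node])
    return best if reachable[best] > reachable[leader] else None


survivors = {"B": 5, "C": 9}            # node A (the old leader) has failed
print(first_candidate(survivors))       # C starts self-election first
print(transfer_target("B", survivors))  # if B won anyway, it transfers to C
```

The second function models the weight detection mechanism: even if a lower-weight node wins because of timing or a transient partition, leadership eventually moves to the preferred node.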

Weight-based leader election is especially important in cross-region deployment scenarios. Services deployed across regions are highly sensitive to the latency of access between databases. If the leader is randomly switched to a data center in another region, applications may access a large number of remote instances, which significantly increases the response time of the client. Weight-based leader election can perfectly resolve this problem. This feature allows you to dynamically set weights based on application deployment requirements, which is very flexible.

Setting policies for the majority means specifying the nodes that must complete log replication during transaction commitment and log synchronization. Replication priorities are divided into two categories: strong replication and weak replication. In standard Paxos, a log entry can be committed to the state machine once more than half of the nodes have synchronized the log. However, a route is automatically allocated for each connection, and therefore, the RTT may be incorrect in cross-region access.

To shorten the interval between a network or node failure and the moment when a leader is elected according to the election priorities and the services resume, we can do as follows: in addition to ensuring that a log entry is replicated to a majority of nodes, we replicate the log entry to all the nodes with strong replication before committing the log entry to the state machine. Only then do we return a response indicating a successful transaction commitment to the client. This is similar to the max protection mode. This design allows you to downgrade the service appropriately if a node with strong replication is faulty.
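The commit condition described above (a majority plus every strong-replication node) can be written as a short predicate. This is a minimal sketch under the assumption that acknowledgments are tracked as a set of node names; it is not the X-Paxos implementation.

```python
def can_commit_with_strong(acked: set[str],
                           consensus_nodes: set[str],
                           strong_nodes: set[str]) -> bool:
    """Commit only when a majority AND all strong-replication nodes have the entry."""
    has_majority = len(acked & consensus_nodes) >= len(consensus_nodes) // 2 + 1
    strong_done = strong_nodes <= acked  # every strong node has acknowledged
    return has_majority and strong_done


nodes = {"A", "B", "C", "D", "E"}  # hypothetical five-node cluster, A is the leader
strong = {"B"}                     # B is the preferred successor, so it is "strong"

print(can_commit_with_strong({"A", "C", "D"}, nodes, strong))  # majority, but B is missing
print(can_commit_with_strong({"A", "B", "C"}, nodes, strong))  # majority including B
```

Because the strong node always holds every committed entry, it can take over as leader without first catching up on logs. Downgrading the service when that node fails would correspond to removing it from `strong_nodes`.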

In X-Cluster, replica nodes are divided into normal replica nodes and log replica nodes according to whether a data state machine is available for the replica node. Normal replica nodes have all the features, whereas log replica nodes include only logs, no data. If a log replica node is also a consensus node that participates in Paxos voting, it has only the right to vote, but no right to be elected.

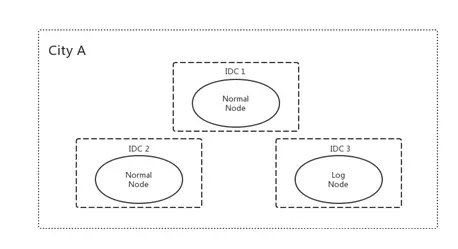

To meet application requirements and control costs, different types of replica nodes are created. Unlike the conventional two-node master/slave replication, the common single-region deployment of X-Cluster includes three nodes.

Log replica nodes are an option to reduce deployment costs. Log replica nodes store logs only, but do not need to store data or play back log update data. Therefore, log replica nodes offer great advantages over normal replica nodes in terms of storage and CPU usage. In actual application planning, log replica nodes can be deployed as disaster recovery nodes.

As shown in the above figure, a read-only node in X-Cluster can replicate logs from any consensus node. This feature overcomes two problems. If the logs of all the nodes are replicated from the leader, the leader may have network and I/O bottlenecks. In addition, in the cross-region deployment mode, the data state machines in different regions may be delayed. In this case, users who require read and write splitting must allow latency in read-only state in specific scenarios. However, if a consensus node becomes faulty, how can the read-only node that is in a replication relationship with it perform disaster recovery?

As a self-maintained database service, X-Cluster needs to solve this problem. X-Cluster defines a learner source group. Each group is a disaster recovery unit for one read-only node. When an exception, such as downtime or network partition, occurs in a consensus node in a group, the cluster will mount the read-only node that is attached to the faulty node to another normal working node in the group for data synchronization, according to the group configuration.
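A learner source group can be pictured as an ordered list of fallback sources for one read-only node. The sketch below assumes that the group is an ordered list and that the first healthy member is chosen; both the function name and this selection policy are illustrative assumptions, not documented behavior.

```python
from typing import Optional


def choose_source(current: str,
                  source_group: list[str],
                  healthy: set[str]) -> Optional[str]:
    """Pick the consensus node that a read-only node should replicate from.

    Keep the current source while it is healthy; otherwise fall back to the
    first healthy member of the group, in configured order.
    """
    if current in healthy:
        return current
    for candidate in source_group:
        if candidate in healthy:
            return candidate
    return None  # no member of the group is available


group = ["B", "C", "A"]                        # hypothetical group for one learner
print(choose_source("B", group, {"A", "C"}))   # B is down, so the learner moves to C
```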

When master/slave replication is enabled in the MySQL system, the secondary database records a relay log in addition to the Binlog. Specifically, after the position in the primary database's Binlog is specified, the secondary database copies the Binlog content into the relay log, so that a replication thread can read and play back the log. In X-Cluster, only one log is used for synchronization between nodes. Thanks to the pluggable log module in X-Paxos, each node has a single consensus log.

This not only facilitates the management of consensus logs, but also reduces log storage and read and write overheads. Methods such as Append, Get, Truncate, and Purge can be run for consensus logs, which are completely controlled by X-Paxos. In addition, X-Cluster provides log indexing, log caching, and pre-reading mechanisms for consensus logs. Therefore, the performance of the log module is greatly improved and the efficiency of the consensus protocol is ensured.
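To make the four log operations concrete, here is a toy in-memory log in Python. The method names mirror Append, Get, Truncate, and Purge from the text, but their exact semantics here (Truncate drops a suffix, Purge drops a prefix, indexes start at 1) are assumptions of this sketch and not the actual X-Paxos log API.

```python
class InMemoryConsensusLog:
    """Toy consensus log with 1-based, gap-free indexes."""

    def __init__(self) -> None:
        self._first_index = 1
        self._entries: list[bytes] = []

    @property
    def last_index(self) -> int:
        return self._first_index + len(self._entries) - 1

    def append(self, payload: bytes) -> int:
        """Add an entry at the tail and return its index."""
        self._entries.append(payload)
        return self.last_index

    def get(self, index: int) -> bytes:
        if not self._first_index <= index <= self.last_index:
            raise IndexError(f"log index {index} is not stored")
        return self._entries[index - self._first_index]

    def truncate(self, from_index: int) -> None:
        """Drop from_index and everything after it (a conflicting suffix)."""
        keep = max(from_index - self._first_index, 0)
        del self._entries[keep:]

    def purge(self, up_to_index: int) -> None:
        """Drop every entry before up_to_index (old entries no longer needed)."""
        drop = min(max(up_to_index - self._first_index, 0), len(self._entries))
        del self._entries[:drop]
        self._first_index += drop


log = InMemoryConsensusLog()
for payload in (b"txn-1", b"txn-2", b"txn-3"):
    log.append(payload)
log.truncate(3)  # discard entry 3
log.purge(2)     # discard entry 1
print(log.get(2), log.last_index)
```

Keeping one log per node behind a narrow interface like this is what lets the protocol layer, rather than operators or external tools, own replication positions.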

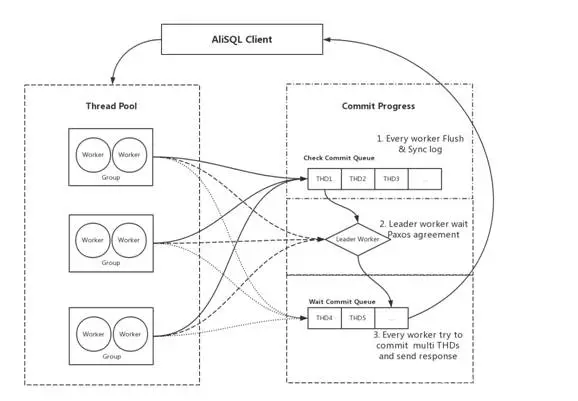

In conventional MySQL, one thread works for one connection. After the thread pool is introduced, one thread pool spawns a certain number of worker threads, and each thread handles one query by parsing, optimizing, modifying the data and submitting the modification, and sending responses. However, when a cluster is deployed across regions, it takes tens of milliseconds to commit a transaction, because the transaction logs need to be synchronized between cluster nodes, and the synchronization is bounded by the network RTT. In this case, for a common write transaction, much time is spent in synchronizing node logs and waiting for the transaction commitment.

Under such heavy loads, the worker threads in the database will be quickly exhausted and the throughput will reach the bottleneck. However, if you only increase the number of worker threads in the database, the cost of thread context switches increases significantly. In contrast, if the entire transaction commitment process is asynchronized, the worker threads are freed from waiting for X-Paxos log synchronization, and can process new connection requests, providing a higher processing capability under heavy loads.

The following figure shows the asynchronous process.

The key concept of asynchronous commitment in X-Cluster is to divide the request processing of each transaction into two phases: the before-commit phase and the commit and response phase. The two phases can be completed by different worker threads. To support asynchronous commitment, X-Cluster adds a synchronization waiting queue and a commitment waiting queue to store transactions in different phases. The former queue holds transactions that are waiting for synchronization of X-Paxos consensus logs, and the latter queue holds transactions to be committed. When the user initiates commit, rollback, or XA_Prepare, the worker thread in the thread pool that processes the user's connection generates transaction logs and stores the transaction context in the synchronization waiting queue. A small number of worker threads handle the consumption of the synchronization waiting queue. The other worker threads in the thread pool can be directly used to process other tasks. Transactions are pushed into the commitment waiting queue after a majority of nodes complete the synchronization. All the transactions in the commitment waiting queue can be committed immediately. Any worker thread that finds a transaction in this queue can take it out and commit it.

In this way, only a small number of threads in the system are waiting for log synchronization, and the rest of the threads can make full use of the CPU to process requests from the client. Based on such a concept and the group commit logic of MySQL, X-Cluster asynchronizes all the operations that originally require waiting, so that the worker threads can respond to new requests. According to the test results, after asynchronization, the throughput is increased by 10% in single-region deployment and by several times in cross-region deployment as compared with the throughput in synchronous commitment.
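The two-queue design can be sketched as a small single-threaded simulation in Python. The class and method names are hypothetical, and real worker threads, locking, and group commit are deliberately left out; the sketch only shows how a transaction moves from the synchronization waiting queue to the commitment waiting queue.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Txn:
    txn_id: int
    log_index: int  # position of the transaction's last entry in the consensus log


class AsyncCommitPipeline:
    def __init__(self) -> None:
        self.sync_waiting: deque[Txn] = deque()    # waiting for log synchronization
        self.commit_waiting: deque[Txn] = deque()  # ready to commit
        self.committed: list[int] = []

    def before_commit(self, txn: Txn) -> None:
        """Phase 1: park the transaction so the worker thread is free again."""
        self.sync_waiting.append(txn)

    def on_consensus(self, majority_index: int) -> None:
        """Entries up to majority_index are now on a majority of nodes."""
        while self.sync_waiting and self.sync_waiting[0].log_index <= majority_index:
            self.commit_waiting.append(self.sync_waiting.popleft())

    def commit_ready(self) -> list[int]:
        """Phase 2: any idle worker commits what is ready and responds."""
        done = []
        while self.commit_waiting:
            txn = self.commit_waiting.popleft()
            self.committed.append(txn.txn_id)
            done.append(txn.txn_id)
        return done


pipeline = AsyncCommitPipeline()
for txn_id, index in ((1, 10), (2, 11), (3, 12)):
    pipeline.before_commit(Txn(txn_id, index))
pipeline.on_consensus(11)       # entries 10 and 11 reached a majority
print(pipeline.commit_ready())  # transactions 1 and 2 commit; 3 keeps waiting
```

The point of the split is that no worker thread blocks for a network round trip: the waiting happens in a queue, not in a thread.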

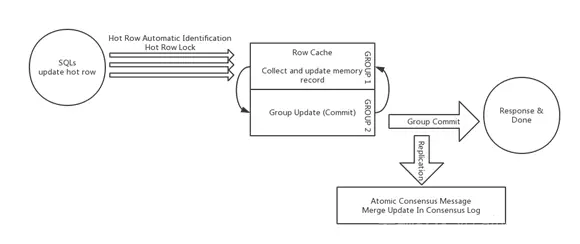

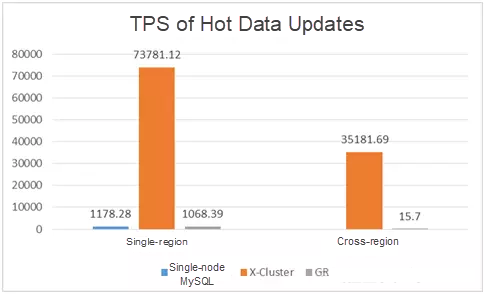

Hot data updates have always been a tough database problem. Because of row lock contention, it is difficult to improve performance and throughput. The problem is even worse for persistent connections of X-Cluster in the cross-region deployment scenario, because the commitment time is prolonged, and the time during which a transaction holds a row lock also increases significantly.

To solve this problem, X-Cluster optimizes replication based on the hot data feature of the single-node AliSQL. In this way, hot data update performance is increased by 200 times while strong data consistency is ensured.

As shown in the preceding figure, the basic concept of hot data row updates in X-Cluster is to merge the updates performed by multiple transactions on the same row. To commit batch-updated transactions at one time, X-Cluster adds a new type of row lock, the hot data row lock. The hot data row lock allows hot data update transactions to be compatible with each other. To ensure data consistency in X-Cluster, special flags are added to the logs of the same batch of hot data update transactions. Based on these flags, X-Paxos combines the logs of the entire batch of transactions into a single network packet for synchronization between cluster nodes. This ensures that these transactions are atomically committed or rolled back. In addition, to improve the efficiency of log playback, X-Cluster merges the update logs of hot data rows in each batch of transactions.
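The merging idea can be shown with a tiny Python example. It assumes that the hot updates are commutative increments or decrements on a counter column (for example, an inventory count), which is an assumption of this sketch; the text does not state which update shapes X-Cluster merges.

```python
def merge_hot_updates(updates: list[tuple[str, int]]) -> dict[str, int]:
    """Collapse a batch of (row_key, delta) updates into one delta per row."""
    merged: dict[str, int] = {}
    for row_key, delta in updates:
        merged[row_key] = merged.get(row_key, 0) + delta
    return merged


# Hypothetical batch: three transactions decrement the same hot row.
batch = [("sku-1", -1), ("sku-1", -2), ("sku-2", -1), ("sku-1", -1)]
print(merge_hot_updates(batch))
```

A follower replaying the merged batch applies one update per hot row instead of one per transaction, which is the playback saving the paragraph above describes.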

X-Cluster has a complete client-server ecosystem. Therefore, the entire system does not require any external component and forms a closed loop. As the client, X-Driver can subscribe to all changes on the servers, so as to automatically locate the leader and dynamically maintain the instance list. The metadata of the client is stored in the X-Cluster service. Compared with an external metadata center, this self-contained system can obtain accurate cluster changes at the fastest speed and reduce the user's perception of cluster changes.

The powerful X-Paxos system allows both log backup and data subscription systems to be accessed by log subscribers. With the globally unique log positions in X-Paxos, it is no longer difficult to find accurate log positions if failover occurs in these subscription systems. In addition, X-Paxos pushes log messages through streams, and therefore, the data timeliness can be greatly improved.

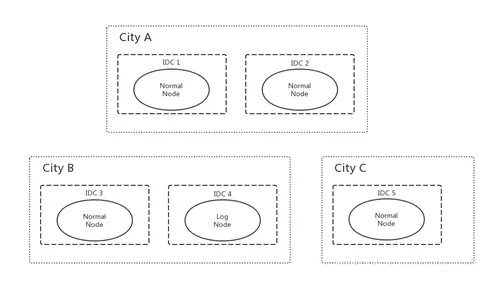

There are two typical deployment solutions of X-Cluster. One is to deploy three replica nodes in one region, with two replica nodes storing data and one replica node storing logs. The other one is to deploy five replica nodes across regions, with four replica nodes storing data and one replica node storing logs. They are used to meet data center-disaster recovery requirements and region-disaster recovery requirements, respectively. The replica node here refers to a consensus node. The deployment of read-only nodes does not affect the disaster recovery capability. These two typical deployment solutions provide the target disaster recovery capabilities at a relatively low cost by taking into account the balance between availability and costs.

When three replica nodes are deployed in the same region, X-Cluster can easily implement instance disaster recovery and data center disaster recovery without data loss. Compared with the master/slave mode, this mode includes an additional log node, which is added in exchange for strong consistency and availability.

When five instances are deployed in three regions, with four of them storing data and one of them storing logs, region disaster recovery is ensured. As shown in the figure, when all the nodes in any region become faulty, the cluster availability is not affected, because at least three of the five nodes can run properly. In routine operations, each of the four data nodes must synchronize logs to the other three nodes every time before a transaction is to be committed. Therefore, cross-region network synchronization definitely occurs. This is also the persistent connection network scenario. The optimization of X-Cluster for slow networks is to meet such requirements.
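The region-level guarantee follows from simple quorum arithmetic, which the following Python sketch checks for a given layout. The 2-2-1 split across three regions is inferred from the statement that at least three of five nodes survive the loss of any region; the region names are placeholders.

```python
def survives_any_region_loss(nodes_per_region: dict[str, int]) -> bool:
    """True if a majority of consensus nodes remains after losing any one region."""
    total = sum(nodes_per_region.values())
    quorum = total // 2 + 1
    return all(total - lost >= quorum for lost in nodes_per_region.values())


# Hypothetical layouts of five consensus nodes across three regions.
print(survives_any_region_loss({"region-1": 2, "region-2": 2, "region-3": 1}))
print(survives_any_region_loss({"region-1": 3, "region-2": 1, "region-3": 1}))
```

The second layout fails the check because losing the three-node region leaves only two of five nodes, which is below the majority of three.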

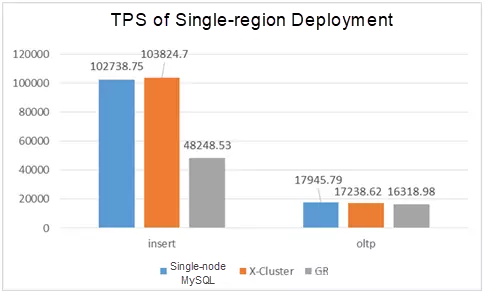

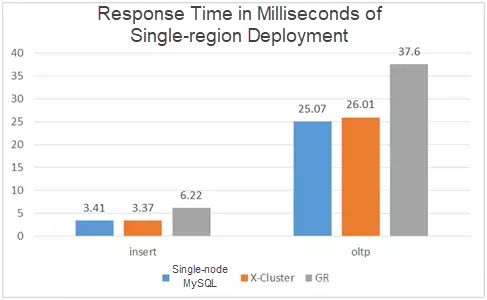

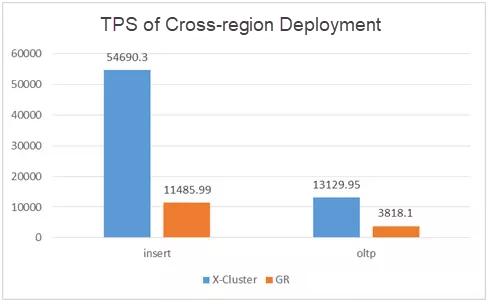

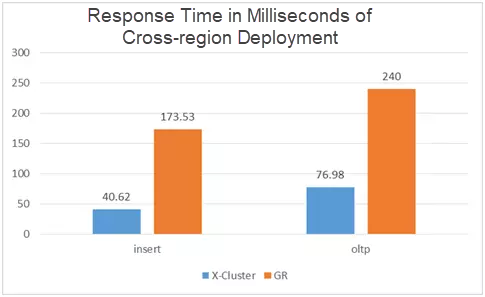

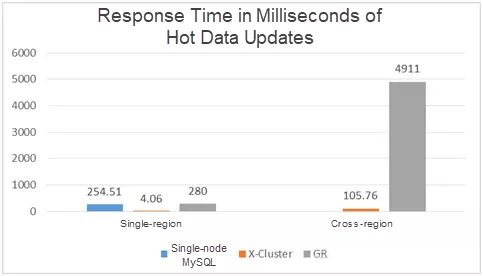

We set up two types of environments to conduct the performance tests of X-Cluster. In environment 1, we deploy three nodes in three data centers located in the same region. In environment 2, we deploy three nodes in three data centers across regions. The standard Sysbench insert and online transaction processing (OLTP) are used as testing tools. In the insert test, each transaction contains only one insert statement. This is an intensive small transaction scenario. On the contrary, the OLTP test is to deal with large read and write transactions. In the test scenario for hot data environments, update_non_index is modified to update the same row of data. Read-only node scenarios are not covered in this test, because read-only nodes do not involve node log and data synchronization. It can be considered that read-only tests are meaningless for distributed systems such as X-Cluster. In each of the tests, the data volume is 10 tables, with each table containing 200,000 records.

For comparison, we use the latest open-source single-node MySQL 5.7.19 and the corresponding version of Group Replication. Throttling is disabled during the test of Group Replication.

The test machines all have 64 cores, 256 GB of memory, and Peripheral Component Interconnect Express (PCIe) solid-state drives (SSDs).

To test a single-region cluster, we use 300 concurrent threads in the insert test, 400 concurrent threads in the OLTP test, and 300 concurrent threads in the hot data updates test.

In the same region, the throughput and response time of X-Cluster are excellent and even slightly better than those of single-node MySQL 5.7.19. Compared with Group Replication under the same test workloads, X-Cluster delivers more than double the throughput at approximately 55% of the response time in the insert test, and over 5% more throughput at nearly 70% of the response time in the OLTP test.

To test a cross-region cluster, you need to ensure the throughput by using a large number of connections. Therefore, 2,000 concurrent threads are used in the insert test, 700 concurrent threads are used in the OLTP test, and 2,500 concurrent threads are used in the hot data updates test.

When a cluster is deployed across regions, the throughput of both X-Cluster and Group Replication decreases compared with the single-region deployment mode. The impact of physical network latency on the response time also increases greatly. However, in the insert test, the throughput of X-Cluster is still about five times that of Group Replication, and the response time is only one fourth of that of Group Replication. In the OLTP test, the throughput of X-Cluster is nearly four times that of Group Replication, and the response time is only one third of that of Group Replication.

Hot data updates is an important feature of X-Cluster. According to the test results, the throughput and response time of X-Cluster are much better than those of single-node MySQL and Group Replication, regardless of whether the cluster is deployed in single-region or cross-region mode.

The test of a distributed system is very complex, because no one can list all the possible situations through exhaustive methods. Simple API testing and performance regression can cover only a small number of scenarios and therefore cannot measure the quality of a specific version of the distributed system. The robustness of the distributed system can be demonstrated only through persistent testing and the normal running of the online system.

For X-Cluster, the biggest challenge is to ensure the correctness of its implementation, which is based on the distributed consensus protocol. Practice has proven that gray-box testing is the most effective method. X-Cluster has integrated with X-Paxos, and the X-Paxos project originally had a series of testing frameworks for discovery and regression. In addition, based on tools such as tc and systemtap, X-Cluster has built various environments that simulate network exceptions, instance downtime, and I/O exceptions. You can randomly combine the network partition, packet loss, various I/O exceptions, and various instance downtime in these environments to conduct testing. You can also use a benchmark tool to stress heavy read and write workloads on each node, and periodically verify data and log consistency between different nodes in the cluster. All consistency-related operations are recorded in the dedicated logs of X-Cluster to facilitate the tracing of interaction between cluster nodes. The eventual consistency of data and logs is checked by external systems.
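The periodic log consistency check mentioned above can be approximated by comparing digests of the log prefix that all nodes share. This is a minimal sketch that assumes each node's log is available as a list of byte strings; the function names are hypothetical and this is not Alibaba's verification tooling.

```python
import hashlib


def prefix_digest(entries: list[bytes], count: int) -> str:
    """SHA-256 over the first `count` entries, length-prefixed to avoid ambiguity."""
    digest = hashlib.sha256()
    for payload in entries[:count]:
        digest.update(len(payload).to_bytes(4, "big"))
        digest.update(payload)
    return digest.hexdigest()


def diverged_nodes(node_logs: dict[str, list[bytes]], reference: str) -> list[str]:
    """Nodes whose shared log prefix differs from the reference node's prefix."""
    shared = min(len(entries) for entries in node_logs.values())
    expected = prefix_digest(node_logs[reference], shared)
    return sorted(node for node, entries in node_logs.items()
                  if prefix_digest(entries, shared) != expected)


logs = {
    "A": [b"t1", b"t2", b"t3"],
    "B": [b"t1", b"t2"],         # lagging, but consistent so far
    "C": [b"t1", b"tX", b"t3"],  # diverged at the second entry
}
print(diverged_nodes(logs, reference="A"))
```

A lagging follower is not reported, since only the prefix that every node already holds is compared; only genuine divergence is flagged.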

Alibaba provides a dedicated sharding verification system to perform full data verification on different nodes in X-Cluster. The consensus log parsing tool can quickly parse log content for comparison. This set of test environments has helped us discover many system bugs, including bugs introduced during instance recovery and bugs resulting from network exceptions. We believe that a stable version must be tested in this scenario for a long time and meet all performance expectations.

In addition to eventual data consistency and log consistency, we need to verify linearizability and transaction isolation. Therefore, we introduced the Jepsen tool. Jepsen has helped a large number of distributed systems identify problems in their distributed protocols and implementation correctness. We have created a dedicated series of test cases for X-Cluster that cover various exception scenarios, to discover system isolation and consistency problems.

X-Cluster is not the first MySQL-based strong-consistency cluster solution. However, it is the most suitable database solution for companies that deal with a huge volume of data such as Alibaba. The following shows the comparison between some similar products.

Galera is a MySQL cluster version supported by MariaDB. It supports strong synchronization, multi-point writing, automatic cluster configuration, node failover, row-level parallel replication, and native MySQL clients. In this architecture, the slave node has no latency, the data read by any node is consistent, no transaction data is lost, and read and write capabilities are scalable.

In Galera, the Totem multicast protocol, which is based on a single-token ring, is used for cluster communication. To support multi-point writing, after receiving a write request, the host atomically broadcasts the write request to all the machines in the group. The transaction management layers of the machines determine whether to commit or roll back the write request. However, multicast is peer-to-peer (P2P) communication. As the number of nodes increases, the latency increases, the response slows down, and multicast becomes applicable only to low-latency local area networks (LANs). In addition, if a machine in the group becomes faulty, multicast will time out. In this case, the entire cluster is unavailable after the failed machine is removed and the member relationship in the group is determined again.

Galera uses a dedicated file named gcache for incremental state replication. The gcache file is not used for other purposes, and therefore costs additional computing and storage for maintenance.

Group Replication is a cluster solution officially developed by MySQL. Group Replication borrows the concept of Galera and also supports multi-master and multi-point writing. Group Replication implements an XCom communication layer. In addition, the Paxos algorithm has been used in the new version of Group Replication. Currently, a Group Replication cluster can have a maximum of nine nodes, and the response latency is relatively stable. For the logs synchronized between nodes, Group Replication uses the Binlog, which is a more general-purpose format than the one used in Galera.

In Group Replication, XCom is used for replication at the protocol layer, and the replication is strongly dependent on global transaction identifiers (GTIDs). In our tests, its performance, especially in cross-region deployment mode, could not meet the requirements, and a large number of bugs in the current version are still being fixed. It may become suitable for production environments after its stability and performance improve in later versions.

X-Cluster is a new database solution for users with high data quality requirements. X-Cluster not only benefits from the open-source community, but also can be flexibly changed based on service requirements. Key technologies that are related to consistency can be fully automated and controllable. In the future, X-Cluster will be further optimized based on this, for example, to support multi-shard Paxos and provide strongly consistent reading and writing on multiple nodes. With the continuous evolution of X-Paxos and AliSQL, X-Cluster will also bring more surprises to you.
