By Yang Xu, nicknamed Pinshu at Alibaba.

The progress of Flink in the machine learning field has long been the focus of many developers. This year, a new milestone was set in the Flink community when we open-sourced the Alink machine learning algorithm platform. This also marked Flink's official entry into the field of artificial intelligence.

Yang Xu, a senior algorithm expert at Alibaba, will give you a detailed introduction to the main functions and characteristics of the Alink project. We hope to work with colleagues in the industry to promote the further development of the Flink community.

Download Alink from GitHub: https://github.com/alibaba/Alink

With the advent of the big data era and the rise of AI, machine learning is being applied in an ever wider range of scenarios. Models must process batch data and, to meet real-time requirements, also make predictions on streaming data. They also need to be deployable in enterprise applications and microservices. To achieve better results, algorithm engineers work with a greater variety of more complex models and larger datasets, so distributed clusters have become the norm. And to respond promptly to market changes, more and more businesses are choosing online learning, which processes streaming data directly and updates models in real time.

Our team has long been engaged in the research and development of algorithm platforms, and has benefited from efficient algorithm components and easy-to-use platforms for developers. Looking at the diverse range of the emerging application scenarios of machine learning, in 2017, we started to develop a next-generation machine learning algorithm platform based on Flink. This platform allows data analysts and application developers to easily build end-to-end business processes. We named this project "Alink", which is an amalgam of several terms closely related to our work: Alibaba, Algorithm, AI, Flink, and Blink.

Alink is a next-generation machine learning algorithm platform developed by the PAI team from Alibaba's Computing Platform Division. The development of this platform began in 2017 and was based on the real-time computing engine Flink. The platform provides a wide range of algorithm component libraries and a convenient operation framework. It allows developers to quickly build algorithm model development processes that cover data processing, feature engineering, model training, and model prediction.

Leveraging Flink's advantage in unifying batch and stream processing, Alink can provide consistent operations for batch and stream processing tasks. In practice, the limitations of FlinkML, Flink's original machine learning library, have been exposed. It supports only about a dozen algorithm types, and the supported data structures are not universal enough. However, we admire the excellent performance of Flink's underlying engine, and therefore redesigned and developed the machine learning algorithm library based on Flink. This project was launched within Alibaba in 2018 and has been continuously improved and perfected in complex business scenarios within Alibaba.

Since we first developed Alink, we have been working closely with the community. We have repeatedly introduced our latest progress in the R&D of the machine learning algorithm library and shared our technical experiences at the Flink Forward conference.

As the first machine learning platform in the industry to support both batch and streaming algorithms, Alink provides a Python API so that developers can easily build algorithm models without prior Flink expertise.

Alink has already been widely used in Alibaba's core real-time online services, such as search, recommendation, and advertising. During the 2019 Double 11 Shopping Festival, 970 PB of data was processed per day, and up to 2.5 billion pieces of data were processed each second. Alink successfully withstood this massive real-time data training test and helped increase the click-through rate (CTR) by 4% on the Tmall e-commerce platform.

When Blink was open sourced last year, we considered whether to open source Alink at the same time. However, we did not dare to take such a leap because our first open-source project had not yet been completed, so we decided to do things step by step. Moreover, open sourcing Blink required a great deal of preparation, and at that time we could not handle two major open-source projects simultaneously. Therefore, we decided to open source Blink first.

After Blink became open-source, we considered contributing Alink's algorithm library to Flink. However, we found that contributing to a community is complicated. Open sourcing Blink had already taken up a large share of the community's limited bandwidth, which made it impossible to do multiple things at the same time. The community also needs time to digest new things. Therefore, we decided to let the community first digest Blink and then gradually introduce Alink. This step could not be skipped.

FlinkML is an existing machine learning algorithm library in the Flink community. This library has been around for a long time and is updated quite slowly. In contrast, Alink is based on the new generation of Flink. Its algorithm library is completely new and shares no code with FlinkML. Alink was developed by the PAI team of Alibaba's Computing Platform Division, was then used within Alibaba, and is now officially open-source.

In the future, we hope that the Alink algorithms will gradually replace the FlinkML algorithms. Alink may become a new generation of FlinkML, but this process will take a long time. In the first half of this year, we actively participated in the design of the new FlinkML APIs and shared our experience in Alink API design. In addition, Alink's Params and other concepts were adopted by the community. We began to contribute FlinkML code in June and have already submitted more than 40 PRs, including basic algorithm frameworks, basic utility classes, and several algorithm implementations.

Alink contains many machine learning algorithms, and therefore contributing or releasing these algorithms to Flink requires a large amount of bandwidth. We worried that the whole process would take a long time. Therefore, we first released Alink as a separate open-source project so that anyone who needs it could use it. If subsequent contributions go smoothly, Alink can be completely merged into FlinkML and become a major component in the Flink ecosystem. This is the perfect place for Alink. At this point, FlinkML would correspond exactly to Spark ML.

One advantage is that Alink is built directly on the Flink computing engine layer. In addition, the Flink framework provides operators that accept user-defined functions (UDFs), and Alink has optimized its algorithm implementations in many respects, including communication, data access, and iterative data processing. These optimizations enable the algorithms to run more efficiently. We also provide many auxiliary tools to improve ease of use. Another of Alink's core technologies is its online learning algorithms. Online learning requires rapid, high-frequency updates by iterative algorithms, and Alink has a natural advantage in handling this. Such online scenarios are common in information feeds such as TopBuzz and Weibo.

In offline learning, Alink and Spark ML are basically similar. As long as they have done a good job in engineering, offline learning does not involve a generational difference. True generational differences arise from different design concepts. In terms of design, significant generational differences are due to differences in the product format and technical format.

Compared with Spark ML, Alink provides basically consistent batch algorithms, with similar features and performance. Alink supports all the algorithms commonly used by algorithm engineers, including clustering, classification, regression, data analysis, and feature engineering. Before we open sourced Alink, we benchmarked its algorithms against all Spark ML algorithms and achieved 100% consistency. The greatest highlight of Alink lies in streaming algorithms and online learning. Alink is unique in these areas, providing users with significant advantages and no drawbacks.

Alink comes with a variety of batch and streaming algorithms. It not only implements a wide range of efficient algorithms, but also provides a convenient Python API. This helps data analysts and application developers to complete end-to-end processes that cover data processing, feature engineering, model training, and prediction.

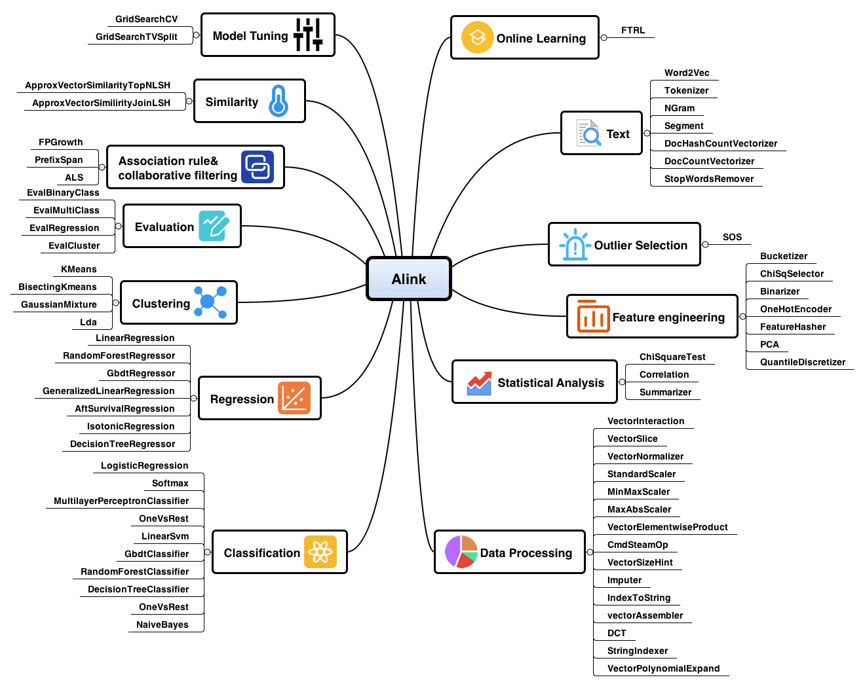

As shown in the following figure, each of the open-source algorithm modules provided by Alink contains batch and streaming algorithms. For example, the linear regression module includes linear regression model training based on batch data, linear regression-based prediction that uses streaming data, and linear regression-based prediction that uses batch data.

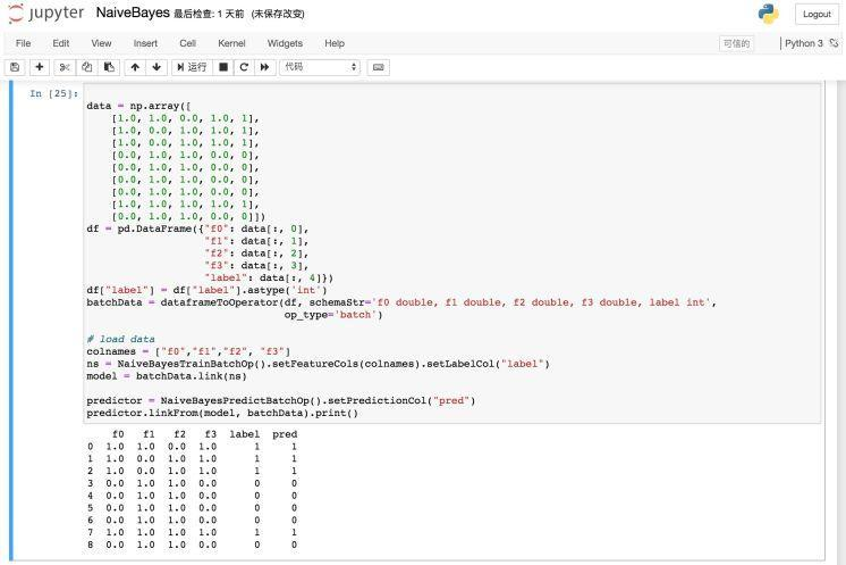
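The batch/stream split within one module can be illustrated with a toy example. The sketch below is plain Python rather than Alink code, and the function names (`train_linear`, `predict_batch`, `predict_stream`) are hypothetical. It fits one model on a bounded batch and then applies it either to a list or, lazily, to an unbounded iterator.

```python
from itertools import count, islice
from typing import Iterable, Iterator, List, Sequence, Tuple

Model = Tuple[float, float]  # (slope, intercept); illustrative representation


def train_linear(batch: Sequence[Tuple[float, float]]) -> Model:
    """Fit y = slope * x + intercept by ordinary least squares on a batch."""
    n = len(batch)
    mean_x = sum(x for x, _ in batch) / n
    mean_y = sum(y for _, y in batch) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in batch)
    var = sum((x - mean_x) ** 2 for x, _ in batch)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def predict_batch(model: Model, xs: Sequence[float]) -> List[float]:
    """Batch prediction: the whole input is available up front."""
    return [model[0] * x + model[1] for x in xs]


def predict_stream(model: Model, xs: Iterable[float]) -> Iterator[float]:
    """Streaming prediction: emit one result per record as it arrives."""
    for x in xs:
        yield model[0] * x + model[1]


model = train_linear([(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)])
batch_out = predict_batch(model, [3.0, 4.0])
# count(10) is an endless source; islice takes the first three predictions.
stream_out = list(islice(predict_stream(model, count(10)), 3))
```

The same trained model object serves both prediction paths, which is the property the module layout above is meant to provide.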

To provide a better interactive and visualized experience, we provide PyAlink on Notebook, which lets users work with Alink through the PyAlink Python package. Alink supports operation on a single machine and job submission to clusters. In addition, the Operator (Alink operators) and DataFrame APIs seamlessly integrate the entire Alink algorithm process into Python. PyAlink also allows you to use Python functions to call UDFs and user-defined table functions (UDTFs).

The following figure shows the use of PyAlink on Notebook, displaying model training and prediction and the result printing process.

PyAlink provides an installation package and requires Python 3.5 or later.

For more information about download and installation, see https://github.com/alibaba/Alink#%E5%BF%AB%E9%80%9F%E5%BC%80%E5%A7%8B--pyalink-%E4%BD%BF%E7%94%A8%E4%BB%8B%E7%BB%8D .

We provided five examples on GitHub. They are in ipynb format so that you can use them directly.

For more information about PyAlink examples, see https://github.com/alibaba/Alink/tree/master/pyalink .


We have also open sourced Alink's intermediate function library. This library was built up while we developed machine learning algorithms on Flink and continuously optimized their performance. It is very useful for algorithm developers in the Flink community, who can use it to develop new algorithms quickly. In addition, algorithms built on this library can perform substantially better than ones developed directly on Flink's basic interfaces.

The most important thing in the intermediate function library is the Iterative Communication and Computation Queue (ICQ). This is an iterative communication and computing framework summarized for iterative computation scenarios. It integrates memory cache technology and memory data communication technology. We abstracted each iteration step into a queue composed of a series of ComQueueItems, which are communication and computation modules. This component offers a significant performance improvement over Flink's basic IterativeDataSet, with the same volume of code and improved readability.

There are two types of ComQueueItems: computation and communication. ICQ also provides initialization functions that cache a DataSet in memory. Two cache formats are available, Partition and Broadcast: the former caches shards of the DataSet in memory, whereas the latter caches the entire DataSet in the memory of every worker. By default, the AllReduce communication model is supported. ICQ also lets you specify iteration termination conditions.
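To make the queue abstraction concrete, here is a minimal single-process sketch in plain Python. It is not Alink's implementation, and every name in it (`ComQueue`, `compute`, `all_reduce`, `calc_gradient`, `update`) is hypothetical. Workers are simulated as dictionaries, partitioned data is sharded round-robin, broadcast data is copied to every worker, and the AllReduce step sums a vector across workers. The toy job fits `y = w * x` by gradient descent.

```python
from typing import Callable, Dict, List

Context = Dict[str, list]            # per-worker state (simulated worker memory)
Step = Callable[[List[Context]], None]


def compute(fn: Callable[[Context], None]) -> Step:
    """Computation item: run `fn` independently on every worker."""
    def run(workers: List[Context]) -> None:
        for ctx in workers:
            fn(ctx)
    return run


def all_reduce(key: str) -> Step:
    """Communication item: element-wise sum of ctx[key] across all workers."""
    def run(workers: List[Context]) -> None:
        total = [sum(vals) for vals in zip(*(ctx[key] for ctx in workers))]
        for ctx in workers:
            ctx[key] = list(total)
    return run


class ComQueue:
    def __init__(self, num_workers: int):
        self.workers: List[Context] = [{} for _ in range(num_workers)]
        self.steps: List[Step] = []

    def init_partitioned(self, key: str, rows: list) -> "ComQueue":
        n = len(self.workers)
        for i, ctx in enumerate(self.workers):
            ctx[key] = rows[i::n]          # each worker caches only its shard
        return self

    def init_broadcast(self, key: str, value: list) -> "ComQueue":
        for ctx in self.workers:
            ctx[key] = list(value)         # each worker caches a full copy
        return self

    def add(self, step: Step) -> "ComQueue":
        self.steps.append(step)
        return self

    def run(self, max_iter: int, converged: Callable[[Context], bool]) -> Context:
        for _ in range(max_iter):
            for step in self.steps:        # one pass over the queue = one iteration
                step(self.workers)
            if converged(self.workers[0]): # termination is checked on worker 0
                break
        return self.workers[0]


def calc_gradient(ctx: Context) -> None:
    w = ctx["coef"][0]
    local = sum((w * x - y) * x for x, y in ctx["data"])
    ctx["grad"] = [local, float(len(ctx["data"]))]   # [gradient sum, row count]


def update(ctx: Context) -> None:
    grad_sum, rows = ctx["grad"]                     # global values after AllReduce
    ctx["coef"][0] -= 0.1 * grad_sum / rows


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
model = (
    ComQueue(num_workers=2)
    .init_partitioned("data", data)
    .init_broadcast("coef", [0.0])
    .add(compute(calc_gradient))
    .add(all_reduce("grad"))
    .add(compute(update))
    .run(max_iter=100, converged=lambda ctx: abs(ctx["grad"][0] / ctx["grad"][1]) < 1e-9)
)
```

Because every worker applies the same update to its own copy of the coefficients after the AllReduce, the copies stay identical without a separate broadcast step in each iteration.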

The code for iterative development of LBFGS algorithms based on ICQ is as follows:

```java
DataSet<Row> model = new IterativeComQueue()
    // Cache the inputs in memory: training data is partitioned, the rest is broadcast.
    .initWithPartitionedData(OptimVariable.trainData, trainData)
    .initWithBroadcastData(OptimVariable.model, coefVec)
    .initWithBroadcastData(OptimVariable.objFunc, objFuncSet)
    // Preallocate working buffers.
    .add(new PreallocateCoefficient(OptimVariable.currentCoef))
    .add(new PreallocateCoefficient(OptimVariable.minCoef))
    .add(new PreallocateLossCurve(OptimVariable.lossCurve, maxIter))
    .add(new PreallocateVector(OptimVariable.dir, new double[] {0.0, OptimVariable.learningRate}))
    .add(new PreallocateVector(OptimVariable.grad))
    .add(new PreallocateSkyk(OptimVariable.numCorrections))
    // Compute local gradients, then aggregate them across workers.
    .add(new CalcGradient())
    .add(new AllReduce(OptimVariable.gradAllReduce))
    // Compute the search direction and losses, aggregate, and update the model.
    .add(new CalDirection(OptimVariable.numCorrections))
    .add(new CalcLosses(OptimMethod.LBFGS, numSearchStep))
    .add(new AllReduce(OptimVariable.lossAllReduce))
    .add(new UpdateModel(params, OptimVariable.grad, OptimMethod.LBFGS, numSearchStep))
    // Termination criterion, output step, and iteration cap.
    .setCompareCriterionOfNode0(new IterTermination())
    .closeWith(new OutputModel())
    .setMaxIter(maxIter)
    .exec();
```

Sentiment analysis examines the emotional tone of a subjective text, in particular whether it is complimentary or derogatory, positive or negative, to determine the views, preferences, and emotional orientation it expresses. In this case, we analyze a dataset composed of hotel reviews.

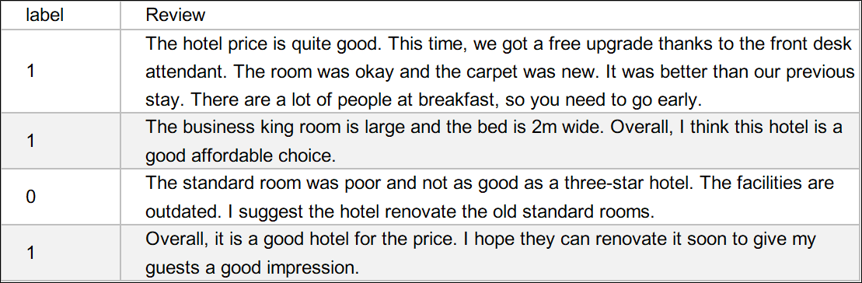

Data preview:

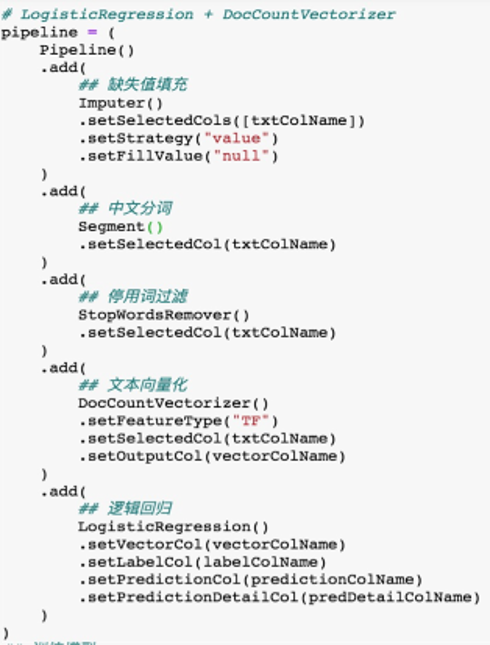

First, we define a pipeline that contains components for missing value filling, Chinese word segmentation, stopword filtering, text vectorization, and logistic regression.

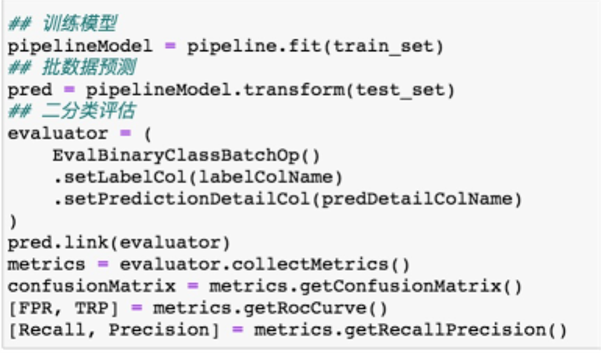
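The idea of chaining such components can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the PyAlink API: the class names are hypothetical, whitespace splitting stands in for Chinese word segmentation, English sample text is used for readability, and the final logistic regression stage is omitted for brevity.

```python
from collections import Counter
from typing import List, Optional, Sequence


class FillMissing:
    """Replace missing (None) reviews with a default string."""
    def __init__(self, default: str = ""):
        self.default = default

    def fit(self, rows):
        return self

    def transform(self, rows: Sequence[Optional[str]]) -> List[str]:
        return [self.default if r is None else r for r in rows]


class Tokenize:
    """Whitespace split; a stand-in for a real word segmenter."""
    def fit(self, rows):
        return self

    def transform(self, rows: Sequence[str]) -> List[List[str]]:
        return [r.split() for r in rows]


class RemoveStopwords:
    def __init__(self, stopwords):
        self.stopwords = set(stopwords)

    def fit(self, rows):
        return self

    def transform(self, rows):
        return [[t for t in r if t not in self.stopwords] for r in rows]


class CountVectorize:
    """Learn a vocabulary in fit(), emit term-count vectors in transform()."""
    def fit(self, rows):
        terms = sorted({t for r in rows for t in r})
        self.vocab = {t: i for i, t in enumerate(terms)}
        return self

    def transform(self, rows):
        vectors = []
        for r in rows:
            vec = [0] * len(self.vocab)
            for term, n in Counter(r).items():
                if term in self.vocab:     # unseen terms are ignored
                    vec[self.vocab[term]] = n
            vectors.append(vec)
        return vectors


class Pipeline:
    def __init__(self, stages):
        self.stages = list(stages)

    def fit(self, rows):
        # Each stage is fitted on the output of the stages before it.
        for stage in self.stages:
            rows = stage.fit(rows).transform(rows)
        return self

    def transform(self, rows):
        for stage in self.stages:
            rows = stage.transform(rows)
        return rows


reviews = ["great room great view", None, "the room was dirty"]
pipeline = Pipeline([
    FillMissing(),
    Tokenize(),
    RemoveStopwords({"the", "was"}),
    CountVectorize(),
]).fit(reviews)
train_vectors = pipeline.transform(reviews)
new_vectors = pipeline.transform(["great dirty pool"])
```

Fitting once and then calling `transform` on new data mirrors how a trained pipeline model is reused for prediction.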

Next, we use the pipeline defined above to perform model training, batch prediction, and result evaluation.

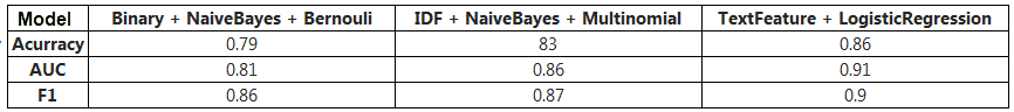

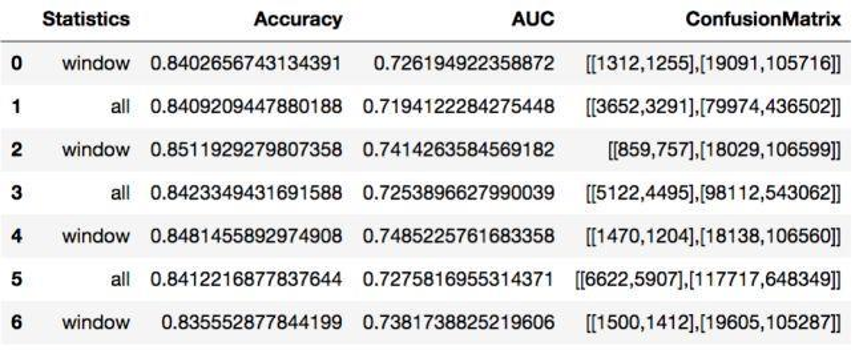

By swapping in different text vectorization methods and classification models, we can compare model results quickly and intuitively.

In online advertising, the click-through rate (CTR) is an important indicator used to measure the effectiveness of ads. Therefore, the click prediction system plays an important role in sponsored search and real-time bidding. In this demo, we use the follow-the-regularized-leader (FTRL) method to train a classification model in real time and perform real-time prediction and evaluation.

Dataset: https://www.kaggle.com/c/avazu-ctr-prediction/data

Data preview:

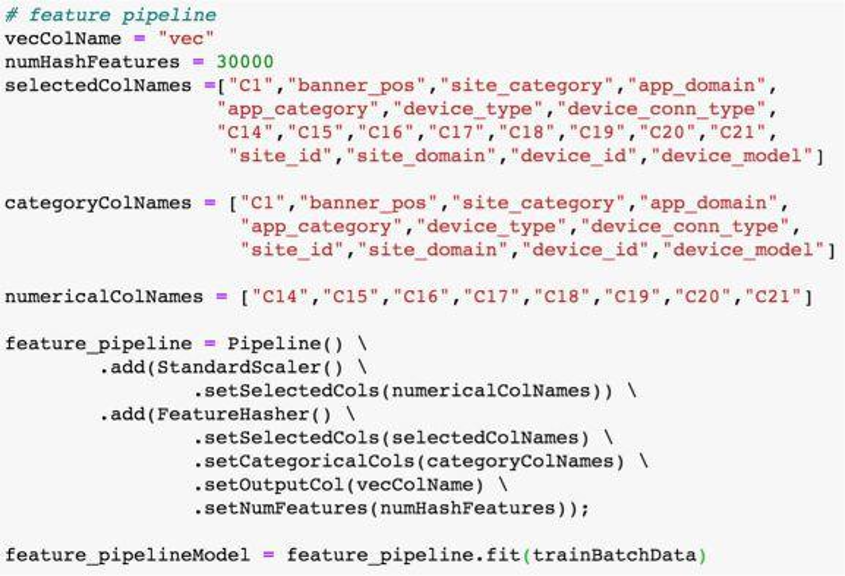

First, we build a pipeline for feature engineering, which is composed of two components connected in series: standardization and feature hashing. Through training, we obtain a pipeline model.

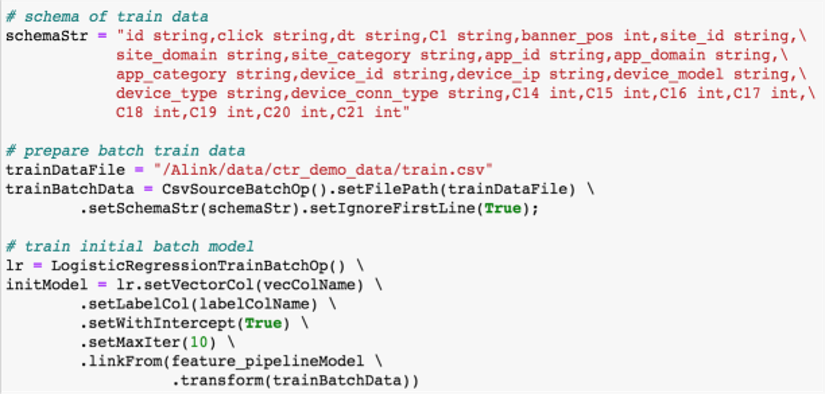
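As a rough illustration of these two steps, the following plain-Python sketch standardizes one numeric column and hashes each row into a fixed-size sparse vector. It is not Alink's implementation. The function names, the field names in the sample rows, the use of MD5 as the hash, and the bucket count are all assumptions made for the example.

```python
import hashlib
import math
from typing import Dict, Sequence, Tuple


def fit_standardizer(column: Sequence[float]) -> Tuple[float, float]:
    """Learn the mean and population standard deviation of a numeric column."""
    mean = sum(column) / len(column)
    var = sum((v - mean) ** 2 for v in column) / len(column)
    return mean, math.sqrt(var) or 1.0   # fall back to 1.0 for constant columns


def standardize(value: float, stats: Tuple[float, float]) -> float:
    mean, std = stats
    return (value - mean) / std


def hash_features(row: Dict[str, object], num_buckets: int) -> Dict[int, float]:
    """Hash a row into a sparse vector of `num_buckets` dimensions."""
    vec: Dict[int, float] = {}
    for name, value in row.items():
        if isinstance(value, (int, float)):
            token, weight = name, float(value)        # numeric: hash the name, keep the value
        else:
            token, weight = f"{name}={value}", 1.0    # categorical: hash "name=value"
        digest = hashlib.md5(token.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % num_buckets
        vec[index] = vec.get(index, 0.0) + weight     # colliding features are summed
    return vec


# Illustrative rows; the field names are placeholders, not the dataset schema.
rows = [
    {"site_id": "a1", "device_type": "phone", "hour": 3.0},
    {"site_id": "b2", "device_type": "tablet", "hour": 21.0},
]
stats = fit_standardizer([r["hour"] for r in rows])
encoded = [
    hash_features({**r, "hour": standardize(r["hour"], stats)}, num_buckets=32)
    for r in rows
]
```

Hashing keeps the feature dimension fixed no matter how many distinct category values appear in the stream, which suits online training where the full vocabulary is never known in advance.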

Second, we use a logistic regression component for batch training to obtain an initial model.

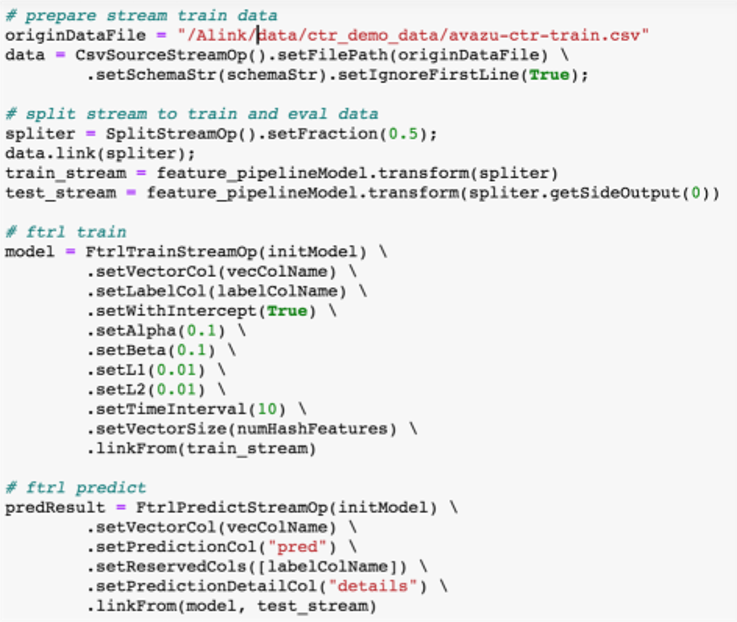

Next, we use the FTRL training component for online training and the FTRL prediction component for online prediction.

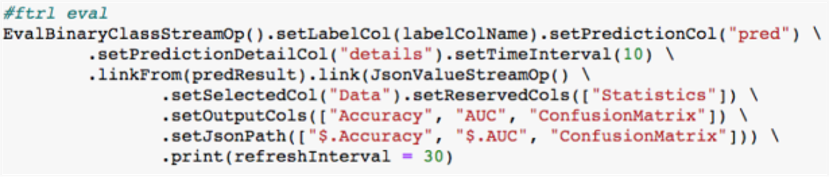
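For readers unfamiliar with FTRL, here is a minimal sketch of the per-coordinate FTRL-Proximal update for logistic regression over sparse features, written in plain Python. It is not Alink's FTRL component. The class name, the default hyperparameters, and the toy stream are assumptions for illustration. Each call to `update` first predicts with the current model and then learns from the label, which is the predict-then-train pattern used in online learning.

```python
import math
from typing import Dict


class FTRLProximal:
    """Per-coordinate FTRL-Proximal for logistic regression (sparse inputs)."""

    def __init__(self, alpha: float = 0.5, beta: float = 1.0,
                 l1: float = 0.0, l2: float = 0.0):
        self.alpha, self.beta, self.l1, self.l2 = alpha, beta, l1, l2
        self.z: Dict[int, float] = {}   # accumulated adjusted gradients
        self.n: Dict[int, float] = {}   # accumulated squared gradients

    def _weight(self, i: int) -> float:
        z = self.z.get(i, 0.0)
        if abs(z) <= self.l1:
            return 0.0                  # L1 keeps small coordinates at exactly zero
        sign = 1.0 if z > 0 else -1.0
        denom = (self.beta + math.sqrt(self.n.get(i, 0.0))) / self.alpha + self.l2
        return -(z - sign * self.l1) / denom

    def predict(self, x: Dict[int, float]) -> float:
        score = sum(self._weight(i) * v for i, v in x.items())
        score = max(-35.0, min(35.0, score))    # avoid overflow in exp
        return 1.0 / (1.0 + math.exp(-score))

    def update(self, x: Dict[int, float], y: int) -> float:
        """Predict with the current model, then learn from (x, y)."""
        p = self.predict(x)
        for i, v in x.items():
            g = (p - y) * v                     # gradient of log loss w.r.t. w_i
            n_old = self.n.get(i, 0.0)
            sigma = (math.sqrt(n_old + g * g) - math.sqrt(n_old)) / self.alpha
            # _weight(i) is evaluated with the old z and n before they change.
            self.z[i] = self.z.get(i, 0.0) + g - sigma * self._weight(i)
            self.n[i] = n_old + g * g
        return p


# Toy stream: feature 0 always co-occurs with a click, feature 1 never does.
learner = FTRLProximal()
stream = [({0: 1.0}, 1), ({1: 1.0}, 0)] * 100
for features, label in stream:
    learner.update(features, label)
```

In the setup described above, the batch-trained logistic regression model would supply the starting point, whereas this sketch starts from all-zero weights.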

Finally, we use a binary classification evaluation component for online evaluation.

The evaluation results are displayed in Notebook in real time, allowing developers to monitor the model status in real time.
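One way to picture online evaluation is as a metric computed over successive windows of the prediction stream. The plain-Python sketch below does this for AUC over fixed-size windows. It is not Alink's evaluation component. The function names and the choice of count-based windows are assumptions for illustration.

```python
from typing import Iterable, Iterator, List, Tuple


def auc(labels: List[int], scores: List[float]) -> float:
    """Area under the ROC curve via the rank-sum formula, with tie handling."""
    pairs = sorted(zip(scores, labels))
    n = len(pairs)
    n_pos = sum(labels)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        return float("nan")              # undefined with only one class present
    rank_sum_pos = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pairs[j][0] == pairs[i][0]:
            j += 1                       # [i, j) is a group of tied scores
        avg_rank = (i + 1 + j) / 2.0     # average of the 1-based ranks i+1 .. j
        rank_sum_pos += avg_rank * sum(label for _, label in pairs[i:j])
        i = j
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg)


def windowed_auc(stream: Iterable[Tuple[int, float]],
                 window_size: int) -> Iterator[float]:
    """Yield one AUC value per `window_size` (label, score) records."""
    labels: List[int] = []
    scores: List[float] = []
    for label, score in stream:
        labels.append(label)
        scores.append(score)
        if len(labels) == window_size:
            yield auc(labels, scores)
            labels, scores = [], []


predictions = [
    (0, 0.1), (0, 0.4), (1, 0.35), (1, 0.8),   # first window
    (1, 0.9), (0, 0.2), (1, 0.7), (0, 0.3),    # second window
]
window_metrics = list(windowed_auc(iter(predictions), window_size=4))
```

Watching such a per-window value over time shows whether the online model is improving or drifting as new data arrives.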

Open sourcing Alink is our first step. We will continue to work with the community to improve Alink's functionality, performance, and ease of use based on user feedback, and to fix the problems Flink users encounter when using machine learning algorithms. We will also continue to actively submit algorithm code to FlinkML. If those contributions go smoothly, Alink can eventually be merged into FlinkML and become a major component of the Flink ecosystem, at which point FlinkML would be the counterpart of Spark ML.
