By Ying Hui, Senior Technical Expert for Alibaba Computing Platforms

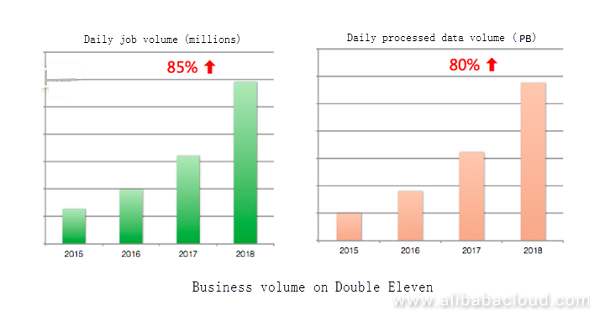

As the main computing platform of Alibaba, MaxCompute yet again proved its capacity to handle massive data and high concurrency during the 2018 Double Eleven Shopping Festival. Over the years, MaxCompute has provided powerful computing capacity to Alibaba's annual Double Eleven as the core super-computing platform, supporting all business units in the group.

This document shows how the tens of thousands of clustered servers deployed for MaxCompute support the rapid growth of the Group's businesses.

Every year, just before Double Eleven, projects are migrated to new clusters for MaxCompute. This is because Double Eleven is the natural deadline of various complex projects. Before the 2018 Double Eleven shopping festival, projects were migrated northwards and deployed offline in mixed mode. With the exception of Ant Financial, all clusters in Hangzhou involving projects, data storage, and computing tasks for most businesses had been migrated to Zhangbei. This created challenges for supporting big data computing services during 2018 Double Eleven.

Currently, MaxCompute incorporates tens of thousands of servers, including those for the clusters that are deployed offline in mixed mode. The total volume of data storage reaches the EB level, and millions of operations are executed every day with a total data volume for daily operations of up to hundreds of PB. The clusters are distributed in three separate regions and interconnected through long transmission links. Due to the inherent universal association of the Group's data services, these clusters have a lot of interdependent data and are heavily dependent on bandwidth.

The use of a large number of servers results in high costs. To reduce costs, it is necessary to use the computing and storage capabilities of each cluster efficiently, maximizing resource usage. At the same time, different businesses have different features. Some may require more storage space and fewer computing resources while others may require more computing resources and less storage space. Additionally, some businesses may involve frequent and massive ETL I/O while others may require CPU-intensive machine learning.

The following requirements present challenges to MaxCompute both during the Double Eleven Shopping Festival and also during daily operations: maximizing the utilization of the capabilities of each cluster by improving the utilization of CPU, memory, I/O, and storage while balancing the workloads of all clusters; addressing the pressure of long-distance transmission bandwidth between sites; ensuring stable operations with high resource usage; and supporting mass migration tasks such as migrating all clusters in Hangzhou.

The following sections will address the various aspects from which MaxCompute handles these challenges.

In 2018, projects were migrated northwards and deployed offline in mixed mode. All clusters in Hangzhou were migrated to Zhangbei, including the control and computing clusters of MaxCompute. The large-scale migration of physical resources also raised some problems and challenges for MaxCompute.

In the past, migrating a project from one cluster to another could cause jobs to fail with an AllDenied error. Migrations therefore affected users, had to be announced in advance, and caused problems for both users and O&M personnel.

This year, MaxCompute supported normal job submission and execution during project migration, which made the migration process transparent to users.

Because different clusters serve different businesses, their usage of computing resources and storage space may be unbalanced. For a normal migration, both the storage space and the computing capacity of the target cluster must meet the project's requirements. Migrating a large-scale project to a target cluster that has surplus computing capacity but high existing storage usage will fail.

The lightweight migration mechanism launched this year migrates only the computing workload and part of the hot data to the new cluster, while earlier data stays in the original cluster. This balances computing resources without causing frequent cross-cluster access.
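The difference between normal and lightweight migration can be illustrated with a small capacity check. This is only a sketch under stated assumptions: the `Cluster` and `Project` types, their fields, and the `plan_migration` function are hypothetical names invented for illustration and are not part of MaxCompute.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    free_cpu_cores: int      # hypothetical: spare computing capacity
    free_storage_tb: float   # hypothetical: spare storage space


@dataclass
class Project:
    cpu_cores: int           # computing capacity the project needs
    total_data_tb: float     # all data owned by the project
    hot_data_tb: float       # recently accessed subset of that data


def plan_migration(project: Project, target: Cluster) -> str:
    """Decide how a project could be moved to the target cluster."""
    # Computing capacity is required in every case.
    if project.cpu_cores > target.free_cpu_cores:
        return "reject"
    # Normal migration: all data must fit in the target cluster.
    if project.total_data_tb <= target.free_storage_tb:
        return "full"
    # Lightweight migration: only hot data moves, cold data stays behind.
    if project.hot_data_tb <= target.free_storage_tb:
        return "lightweight"
    return "reject"
```

With these assumed numbers, a project that needs 1,000 cores and holds 500 TB of data, 40 TB of it hot, cannot be fully migrated to a cluster with only 100 TB of free storage, but `plan_migration` returns `"lightweight"` for it.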

MaxCompute stores its core metadata in OTS, so the entire MaxCompute service can be affected if OTS becomes abnormal. Moreover, the way MaxCompute depended on OTS did not allow primary/backup switchover, which prevented OTS clusters from being migrated.

This year, as part of the northward migration planning, we thoroughly developed and tested the OTS primary/backup switchover solution, resolving the dependencies on control services and OTS clusters. This not only enabled OTS primary/backup switchover but also allowed the direct migration of OTS clusters from Hangzhou to Zhangbei.

Although the flexible internal switchover mechanism initially failed, further optimization and testing ultimately reduced the switchover duration from the planned minutes to several seconds, and the mechanism was successfully implemented in the online public cloud environment. No abnormalities were reported during the switchover, so the entire process was transparent to users.

This provides OTS, the key component of MaxCompute, with lossless primary/backup switchover capability, dramatically reducing the overall risks of the service.

Because job types and business characteristics differ, the computing resources of some clusters may not be fully utilized. For example, different businesses reach their resource peaks at different times of day and for different durations. Idle gaps in clusters that serve resource-heavy businesses can be filled by small jobs that overflow from other clusters. In special cases, resources may need to be borrowed temporarily.

For this purpose, MaxCompute provides several global job-scheduling mechanisms that can schedule specific batches of jobs to the specified clusters or automatically schedule the jobs to idle cluster resources when the current cluster resources are busy.

In addition to balancing resource usage, these mechanisms offer the flexibility of manual scheduling. We are also developing scheduling mechanisms that are integrated with data arrangement and schedule jobs based on the real-time status of clusters.
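The two scheduling behaviors described above, pinning a batch of jobs to a specified cluster and overflowing to idle resources when the current cluster is busy, can be sketched as follows. The function name, the job dictionary keys, and the 0.9 busy threshold are all assumptions made for illustration, not the actual MaxCompute scheduler.

```python
def choose_cluster(job: dict, utilization: dict, busy_threshold: float = 0.9) -> str:
    """Pick a cluster for a job.

    job: hypothetical dict with "home_cluster" and optional "pinned_cluster".
    utilization: cluster name -> current resource usage in [0, 1].
    """
    # Manual scheduling: an explicitly specified cluster always wins.
    pinned = job.get("pinned_cluster")
    if pinned is not None:
        return pinned

    home = job["home_cluster"]
    # Stay local while the home cluster still has headroom.
    if utilization[home] < busy_threshold:
        return home

    # Home is busy: overflow to the least-used cluster, if it has headroom.
    idlest = min(utilization, key=utilization.get)
    return idlest if utilization[idlest] < busy_threshold else home
```

For example, with `utilization = {"A": 0.95, "B": 0.4, "C": 0.7}`, a job whose home cluster is `A` is sent to `B`, while a job whose home cluster is `C` stays where it is.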

Jobs can access the table data of other clusters in two ways. With "direct reading," a job in the current cluster reads the data directly from the remote cluster. With "waiting for replication," the remote data is first copied to the current cluster. Each option has its pros and cons and suits different scenarios. The network topology between clusters (long-distance transmission across regions, or short-distance transmission in the same city with the same core) also influences the choice between the two methods. Long-distance transmission across regions is costly and has low bandwidth, while short-distance transmission in the same city offers higher bandwidth. However, both topologies are subject to traffic congestion during peak hours, so a global scheduling policy is required to exploit the same-city bandwidth advantage without turning it into a bottleneck.
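One way to reason about the trade-off is to compare the cross-cluster traffic of each method. The sketch below is a simplified heuristic, not the policy MaxCompute uses: the function names and the read-count thresholds per link type are assumptions chosen only to show that scarce long-distance links favor replication sooner than same-city links.

```python
# Hypothetical thresholds: replicate once a table is expected to be read
# at least this many times over the given kind of link.
REPLICATE_AFTER_READS = {"cross_region": 2, "same_city": 5}


def cross_cluster_traffic_gb(table_gb: float, expected_reads: int, mode: str) -> float:
    """Traffic sent between clusters for one table under each access mode."""
    if mode == "direct_read":
        # Every read pulls the data over the link again.
        return table_gb * expected_reads
    # Replication transfers the table once; later reads are local.
    return table_gb


def choose_access_mode(expected_reads: int, link: str) -> str:
    """Pick an access mode from the expected number of reads and the link type."""
    if expected_reads >= REPLICATE_AFTER_READS[link]:
        return "wait_for_replication"
    return "direct_read"
```

Under these assumptions, a table read three times is replicated across regions but read directly within the same city, where the extra traffic is cheaper than the replication delay.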

Because businesses and their data dependencies change every day, we continuously optimize and update the replication policy based on analysis of historical tasks. To address these changes, we select a hub cluster in each region to receive long-distance transmission replication requests, and then implement chain replication or short-distance direct reading within the region. By implementing the hub 2.0 project, we have reduced the data replication traffic between regions by more than 30%.
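The hub idea can be sketched as a replication plan in which a table crosses the long-distance link only once and is then passed along inside the region. The function and the cluster names are hypothetical, and the sketch makes no attempt to reproduce the 30% figure, which depends on real workloads.

```python
def replication_plan(source: str, hub: str, consumers: list) -> list:
    """Return (from, to, link) transfers for one table.

    source: cluster in the remote region that owns the table.
    hub: cluster chosen to receive long-distance replication in this region.
    consumers: clusters in this region that need the table.
    """
    # The only long-distance transfer: remote source to the regional hub.
    plan = [(source, hub, "long-distance")]
    previous = hub
    for cluster in consumers:
        if cluster == hub:
            continue  # the hub already holds the data
        # Chain replication: each cluster copies from the previous one.
        plan.append((previous, cluster, "in-region"))
        previous = cluster
    return plan
```

For three consumers in the same region, the plan contains one long-distance transfer instead of three, which is where the saving in cross-region traffic would come from.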

Different server models have different bottlenecks.

Today, MaxCompute still runs on tens of thousands of servers, but the number of CPU cores on a single machine has increased from 24 or 32 to 96. In other words, one new machine provides roughly the computing capability of three old ones. However, regardless of the number of CPU cores on a single machine, the CPUs in a MaxCompute cluster run at full capacity for several hours a day, with a daily average CPU usage of 65%.

Another issue is that data I/O capability is still determined by the number of hard disks on a single machine, which has not changed. Although single-disk capacity is three times larger than before, the IOPS of each disk has stayed roughly the same, which makes DiskUtil a major bottleneck.
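A quick calculation shows why disk I/O becomes the bottleneck when cores triple but disks do not. The disk count (12) and per-disk IOPS (150) below are made-up illustrative values, not figures from MaxCompute hardware; only the core counts come from the text.

```python
def iops_per_core(disks: int, iops_per_disk: float, cores: int) -> float:
    """Disk I/O operations per second available to each CPU core."""
    return disks * iops_per_disk / cores


# Assumed hardware: same 12 disks at 150 IOPS each on both machine types.
old_machine = iops_per_core(disks=12, iops_per_disk=150, cores=32)
new_machine = iops_per_core(disks=12, iops_per_disk=150, cores=96)

# Each core on the 96-core machine gets one third of the disk I/O
# that a core on the 32-core machine had.
ratio = old_machine / new_machine
```

Whatever the real disk figures are, the ratio depends only on the core counts, so each core on a 96-core machine has about a third of the disk I/O available to a core on a 32-core machine.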

Several optimization measures were implemented for the large number of 96-core clusters that were launched in 2018 to avoid the issues that resulted from the launch of 64-core clusters in 2017. These measures maintained DiskUtil at a controllable level.

Most of you may have encountered the following situation: a FILE_NOT_FOUND error is reported when you first run a job but the second attempt succeeds, or a job that scans a long time range fails repeatedly.

Background file merging in MaxCompute relieves the pressure that a large number of files places on a cluster. If merging is stopped for even one or two days, the file count risks hitting the limit. For a long time, however, a merge would interrupt jobs that were reading the affected files, so to ensure data consistency and efficiency, only partitions judged to be idle were merged. On the one hand, the pressure from cluster files forced us to lower the idle-judgment threshold from one month to two weeks or even less. On the other hand, some jobs still read earlier partitions and could be interrupted by the merge operation.

This year, the platform implemented a new merging mechanism that reserves a certain period for running jobs to finish reading the files before the merge operation is performed. With this mechanism, jobs are no longer affected by merging, and the issue is resolved.

This new mechanism has achieved good results in the public cloud and is in canary testing within the Group.
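The article does not describe how the reserved read period is implemented. One possible reading is that a requested merge is deferred while jobs are still reading, up to a fixed window. The sketch below follows that interpretation only; `merge_allowed`, its parameters, and the 60-second window in the example are hypothetical.

```python
def merge_allowed(now: float, merge_requested_at: float,
                  active_readers: int, read_window_s: float) -> bool:
    """Return True when a requested merge may start.

    now, merge_requested_at: timestamps in seconds.
    active_readers: number of running jobs still reading the partition.
    read_window_s: assumed period reserved for those jobs to finish reading.
    """
    # Nobody is reading: merging cannot interrupt anyone.
    if active_readers == 0:
        return True
    # Readers are active: wait until their reserved window has elapsed.
    return now >= merge_requested_at + read_window_s
```

With a 60-second window, a merge requested at time 0 on a partition with two active readers is held back at time 10 and allowed at time 60, while an unread partition can be merged immediately.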

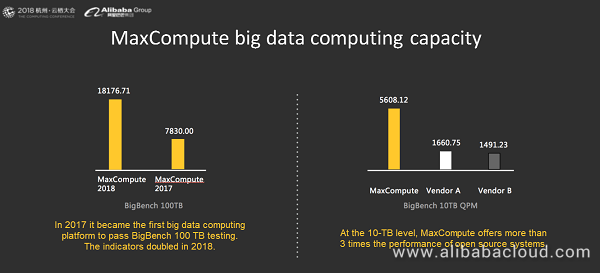

As a computing platform, MaxCompute takes computing capacity as its core indicator and supports the rapid growth of the Group by increasing that capacity. The number of MaxCompute jobs run on 2018 Double Eleven was almost double the number run on 2017 Double Eleven. During 2018, MaxCompute was continuously improved in terms of NewSQL, structural enrichment, computing platform integration, and AliORC, to build a high-availability, high-performance, and highly adaptive big data computing platform with enhanced computing power. The TPCx-BB evaluation results released at the Computing Conference in September 2018 showed that, at the 10-TB level, the performance of MaxCompute is more than 3 times higher than that of open source systems. At the 100-TB level, its score increased from 7,800+ in 2017 to 18,000+ in 2018.

The robustness of Alibaba Cloud MaxCompute has been proven yet again by its successful performance during the 2018 Double Eleven shopping festival. Despite this success, there is room for further enhancement of the overall capability of multiple clusters through improved distributed computing. Meanwhile, sustained and rapid business growth can be supported by improving computing capacity to ensure the stability of large-scale computing scenarios. Through continuous engine optimization, framework development, intelligent data warehouse development, and development in other dimensions, MaxCompute is evolving into an intelligent, open, and ecological platform that is capable of supporting another 100% of growth in businesses.

