By Chen Gong (Yunxi) and Li Yue (Zhiqian), technical experts of Alibaba data technology and product department

The 2020 Double 11 Global Shopping Festival marked a new technological milestone for Alibaba: for the first time, a cloud-native data warehouse was used in core real-time data scenarios. This cloud-native data warehouse was built on Hologres and Realtime Compute for Apache Flink, and it set a new record for the big data platform. In this article, we discuss Hologres best practices at Alibaba, in particular for AliExpress. Hologres supported the upgrade of the AliExpress real-time data warehouse, saving nearly 50% of costs and increasing efficiency by 300%.

AliExpress, or “全球速卖通” in Chinese, is a cross-border e-commerce platform built by Alibaba for international markets. Sellers sometimes dub it the "international version of Taobao". 2020 marked the 10th anniversary of AliExpress, but the platform encountered many challenges in expanding its global business during the pandemic.

In fact, the entire retail and e-commerce industry faced similar issues, including declining customer traffic, rising costs of attracting new users, and falling marketing efficiency. Growth for many businesses now relied on optimizing traffic-oriented operations. Sellers and e-commerce platforms started to use new channels to understand in-site traffic distribution and improve the efficiency of receiving that traffic.

During the 48-hour international Double 11 promotion, the frequency of data updates directly determines how many business decisions can be made. Processing and analyzing traffic channel data in real time is therefore a necessity for AliExpress to make accurate decisions. The following sections describe how a high-performance real-time analysis system for traffic channels was implemented using Hologres.

Before the upgrade, traffic channels were served by a quasi-real-time architecture. The overall data timeliness was H+3, meaning that only data from three hours earlier was available for query. The next step was therefore to design and implement a real-time architecture based on the application scenarios of AliExpress and other business units. The main stages in the evolution of real-time data warehouse architectures are as follows.

A Lambda-based real-time data warehouse is an industry-wide solution. After collection, data is split according to business demands into a real-time layer and an offline layer, for real-time and offline processing respectively. The processing results of the two layers are merged before the data service layer connects to data applications. In this way, both real-time and offline data can be provided to online data applications and products.

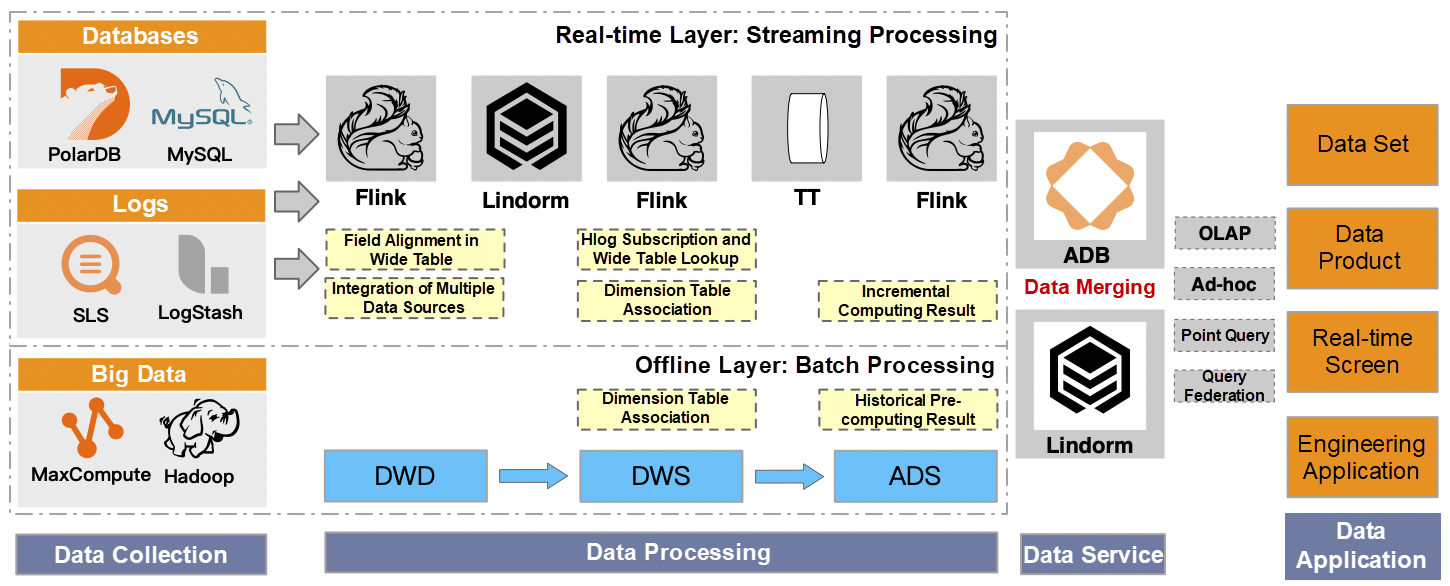

Figure 1 - Lambda architecture of data warehouse based on Apache Flink and multiple data engines

Processing at the offline layer: Collected data is consolidated into the offline data warehouse and then into the Operational Data Store (ODS) layer. Data at the ODS layer is cleansed and then written to the Data Warehouse Detail (DWD) layer. During data modeling, data at the DWD layer is processed further to improve analysis efficiency and implement business hierarchy. This process amounts to pre-computation, and its results are dumped to offline storage systems before being provided to data services.

Processing at the real-time layer: The real-time layer follows processing logic similar to that of the offline layer, but emphasizes timeliness. It subscribes to and consumes real-time data stored in the upstream database or system. After consumption, the data is written to the real-time DWD or DWS layer. A real-time computing engine provides the computing capabilities, and the final processing results are written to real-time storage, such as KV Store.

In many scenarios, the offline layer plays an important role in correcting data problems at the real-time layer. In addition, for reasons of cost and development efficiency, the real-time layer generally retains data for only two to three days. Monthly, annual, and other longer-period data is stored in offline data warehouses. For scenarios that require offline and real-time data to be combined, more products are introduced, such as MySQL and AnalyticDB.
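The way the two layers complement each other at serving time can be illustrated with a minimal Python sketch. The function name `merge_serving_view`, the daily granularity, and the sample numbers are assumptions made for illustration only; they are not taken from the AliExpress implementation.

```python
from datetime import date


def merge_serving_view(offline, realtime, realtime_start):
    """Combine offline and real-time daily metrics into one serving view.

    offline / realtime: dict mapping date -> metric value.
    realtime_start: first date still retained by the real-time layer.
    """
    merged = {}
    # Keep only the short window the real-time layer still retains.
    for day, value in realtime.items():
        if day >= realtime_start:
            merged[day] = value
    # Offline results overwrite real-time ones for the same day, which
    # models the correcting role of the offline layer.
    merged.update(offline)
    return merged


# Hypothetical daily page views
offline = {date(2020, 11, 9): 100, date(2020, 11, 10): 120}
realtime = {date(2020, 11, 10): 118, date(2020, 11, 11): 75}
view = merge_serving_view(offline, realtime, realtime_start=date(2020, 11, 10))
```

In this sketch the offline result for November 10 replaces the real-time value, while November 11 is still served from the real-time layer only.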

In traffic channel scenarios, promotion-related analysis often requires comparing data from different periods. For example, data from the previous year's Double 11 is needed for comparison with data from this year's Double 11. Offline data refresh and data consistency also need to be considered. The existing real-time data warehouse of AliExpress is also based on the Lambda architecture. Here are several key ideas of its stream processing layer.

1) Apache Flink is responsible for most of the parsing and aggregation computing.

2) The TimeTunnel (TT) message queue stores and abstracts results from the DWD and DWS layers.

3) Lindorm or MaxCompute storage is selected based on the data volume of dimension tables.

4) Result data is imported to different storage engines based on the characteristics of downstream application scenarios. For example, Lindorm is introduced to provide point queries with high QPS, and AnalyticDB is introduced to perform interactive analysis on offline data.

Rapid business growth and data expansion have highlighted issues in data quality, Operations and Maintenance (O&M) complexity, high costs, and business agility. The following are concrete descriptions of these issues.

1) Consistency: Offline and real-time processing require two sets of code, two sets of semantics, and two copies of data. Because streaming and batch processing implement their logic in different code, the results for the same data may differ. When offline and real-time data are merged, data structures need to be constantly redefined, and consistency problems may arise from data dumping, changing, and merging. “Stream-batch unification” technology aims to solve these consistency problems.

2) Multiple interconnected systems with complex architectures and high latency risks: The data computing pipeline is long, and the TT sink nodes inserted between Flink tasks reduce system robustness. When a data exception is found in a downstream task, tracing and troubleshooting it upstream is costly. Because streaming computing tasks run in real time, developers must locate and solve problems within hours, and sometimes within minutes for online systems.

3) Long development cycle and non-agile business: Complex architectures increase development and change costs, since data collation and verification are required before any data or business solution is launched. If a problem occurs during data collation, locating and diagnosing it is very complicated. In extreme cases, less than 20% of the development time is spent on implementing the code logic of a real-time task. The remaining 80% is spent on lengthy comparison task development, data verification and debugging, and task optimization and O&M. This is a great obstacle to improving R&D efficiency.

4) Difficult metadata management and high storage costs: In terms of data service, different database engines are selected for different business scenarios. However, this approach increases storage costs and makes the systems difficult to manage. When multiple underlying storage engines are used, especially when they include various KV engines, it is difficult to manage tables and fields in a concise and friendly way because the tables are schema-free.

To solve the above pain points, we first considered whether Apache Flink and Lindorm are capable of simplifying the real-time part. Lindorm is a distributed NoSQL database that stores data as key-value pairs and supports high-concurrency point queries with millisecond-level response. Apache Flink is the most advanced streaming computing engine in the industry. Its dynamic management of table state and its retraction mechanism can meet most metric computing requirements.

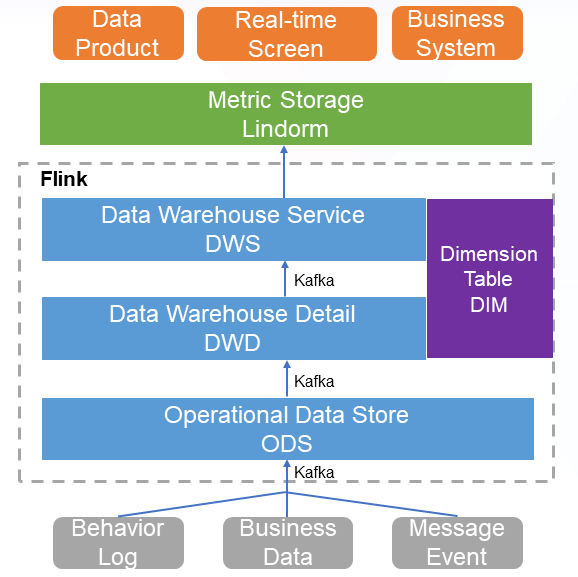

Figure 2 - Architecture of real-time data warehouse based on Flink and Lindorm

The architecture diagram shows that metric computing, including table joins, metric aggregation, and dimension table queries, is completed in the Flink engine. Specifically, the Flink engine processes messages in real time and stores the results of metric computing in engine memory. When a metric is updated, the previous result is withdrawn through the retraction mechanism, and both the old and the new values of the metric are sent to the downstream operator. The updated metric results are then synchronized to Lindorm.
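The update path described above, in which an old value is withdrawn and a new one is emitted, can be sketched in plain Python. This is a simplified model and not Flink's API: the class `RetractingSum`, the `"-"`/`"+"` change markers, and the dictionary standing in for the key-value store are all assumptions made for illustration.

```python
from collections import defaultdict


class RetractingSum:
    """Keyed running sum that emits a retract/add pair on every update."""

    def __init__(self):
        # Stands in for the state the streaming engine keeps in memory.
        self.state = defaultdict(int)

    def process(self, key, amount):
        changes = []
        if key in self.state:
            # Withdraw the previously emitted result for this key.
            changes.append(("-", key, self.state[key]))
        self.state[key] += amount
        # Emit the new result.
        changes.append(("+", key, self.state[key]))
        return changes


def apply_to_kv(store, changes):
    """Apply a change list to a key-value sink (a dict in this sketch)."""
    for op, key, value in changes:
        if op == "+":
            store[key] = value
        elif store.get(key) == value:
            del store[key]


agg = RetractingSum()
kv_store = {}
for channel, pv in [("search", 3), ("feed", 5), ("search", 4)]:
    apply_to_kv(kv_store, agg.process(channel, pv))
```

After the three events, the sink holds one up-to-date row per channel, while the running totals themselves live in the operator state, which is the source of the memory pressure discussed next.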

The defining feature of this scheme is that metrics are computed in the Flink streaming engine. The pre-calculated multi-dimensional cube is then imported into the low-latency Lindorm distributed database, which achieves sub-second query response. However, this architecture has obvious disadvantages.

1) High resource consumption in computing logic: Different metric combinations based on dimensions need to be stored in Flink memory. As the granularity of metrics becomes finer or the metric time span becomes wider, resource consumption increases.

2) Lack of query flexibility: The computing logic must be defined in advance and cannot be adjusted flexibly based on queries. However, traffic channel scenarios call for flexible multi-dimensional cross analysis across dimensions such as industries, products, commodities, and merchants.
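Both drawbacks come from having to enumerate dimension combinations ahead of time. The following Python sketch counts how many groupings a fully pre-computed cube would need and gives a worst-case bound on the number of state keys. The dimension names follow the text, but the cardinalities are made-up numbers for illustration.

```python
from itertools import combinations


def grouping_sets(dimensions):
    """All non-empty dimension subsets a full pre-computed cube must cover."""
    return [
        combo
        for size in range(1, len(dimensions) + 1)
        for combo in combinations(dimensions, size)
    ]


def max_state_keys(cardinalities, combo):
    """Worst-case number of distinct keys for one grouping set."""
    keys = 1
    for dim in combo:
        keys *= cardinalities[dim]
    return keys


# Hypothetical cardinalities, chosen only to show the growth.
cardinalities = {"industry": 10, "product": 20, "commodity": 30, "merchant": 40}
sets = grouping_sets(list(cardinalities))
total_keys = sum(max_state_keys(cardinalities, combo) for combo in sets)
```

With four dimensions there are already 15 grouping sets, and each additional dimension doubles that count (plus one), so any query that falls outside the predefined sets cannot be answered from the cube.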

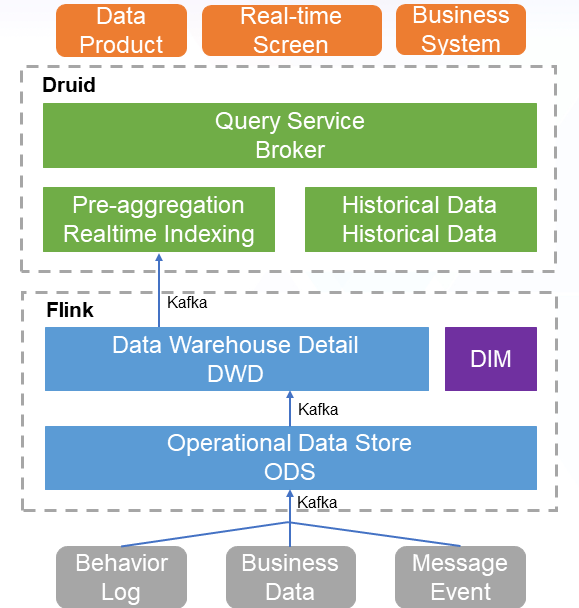

What about an Apache Flink + Apache Druid solution? Druid is a distributed data processing system that supports multi-dimensional OLAP analysis in real time. A key feature of Druid is that it pre-aggregates data at ingestion and supports aggregated analysis based on timestamps. In addition, it supports high-concurrency queries with sub-second response. In this solution, Flink is only responsible for simple ETL work, and metric computing is completed by Druid. Druid pre-aggregates and stores data according to a predefined metric computing template, which improves the query service.

Figure 3 - Architecture of real-time data warehouse based on Flink and Druid
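The timestamp-based pre-aggregation attributed to Druid above can be imitated in a few lines of Python. This is only a conceptual sketch and does not use Druid's ingestion API: the `rollup` function, the hourly bucket, and the event fields are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import datetime


def rollup(events, dims):
    """Pre-aggregate raw events per (hour bucket, dimension values)."""
    table = defaultdict(lambda: {"pv": 0, "amount": 0.0})
    for event in events:
        # Truncate the timestamp to the hour, the assumed granularity.
        bucket = event["ts"].replace(minute=0, second=0, microsecond=0)
        key = (bucket,) + tuple(event[d] for d in dims)
        table[key]["pv"] += 1
        table[key]["amount"] += event["amount"]
    return dict(table)


# Hypothetical click events on a single wide table
events = [
    {"ts": datetime(2020, 11, 11, 2, 5), "channel": "search", "amount": 1.5},
    {"ts": datetime(2020, 11, 11, 2, 40), "channel": "search", "amount": 2.5},
    {"ts": datetime(2020, 11, 11, 3, 10), "channel": "feed", "amount": 4.0},
]
rolled = rollup(events, dims=["channel"])
```

The two events in the 02:00 bucket collapse into one stored row, which is what keeps queries fast, but the original detail rows are no longer available after ingestion.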

However, the limitations of this solution are also very obvious.

The preceding two solutions have good application value in some specific scenarios. For example, the Apache Flink + Lindorm solution is suitable for scenarios with high timeliness requirements, such as real-time big screens. The Apache Flink + Apache Druid solution is suitable for real-time and multi-dimensional analysis of a single table with massive data. However, for traffic analysis scenarios, both solutions have obvious limitations and cannot meet our needs.

By chance, we learned that other departments in the Alibaba Group were trying to implement real-time data warehouses with Realtime Compute for Apache Flink and Hologres. After studying this approach in detail, we became confident in it. The three most attractive capabilities of Hologres are as follows.

1) High-performance data ingestion: Hologres supports instant real-time data writing and query with millions of requests per second (RPS).

2) High-performance queries: Hologres is a distributed relational OLAP (ROLAP) engine based on an MPP architecture, in which each node is responsible for processing part of the data. Hologres makes full use of hardware capabilities. For example, it uses the Single Instruction, Multiple Data (SIMD) instructions of the CPU in a vectorized execution engine, so that CPU performance can be pushed close to its maximum when computing over massive data. Hologres can support multiple business scenarios in real time, such as point queries, ad hoc queries, OLAP, and federated analytics over offline data. It is therefore possible to adopt a unified engine for external services.

3) High-scalability storage: Hologres adopts a storage-compute separation architecture, which facilitates horizontal scaling. It supports data storage from the TB level to the PB level. In terms of data storage format, Hologres supports both row-oriented and column-oriented storage.

Figure 4 - Hologres architecture

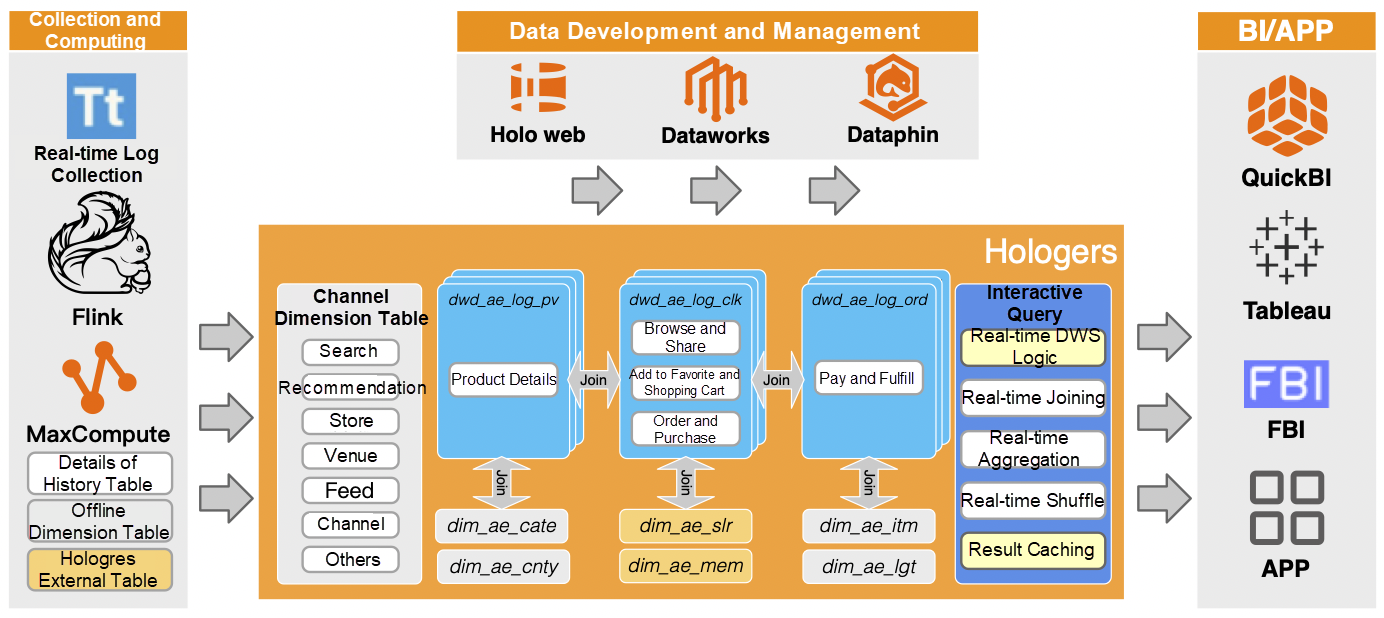

Based on Apache Flink and Hologres, we have designed the real-time architecture of traffic channels.

Figure 5 - Implementation of the real-time data warehouse based on Flink and Hologres for traffic channels

How does the upgraded real-time data warehouse architecture based on Apache Flink and Hologres solve the problems of the architectures based on Lindorm or Druid?

From our first encounter with Hologres to its full adoption, it has brought remarkable business value, mainly in real-time decision-making, R&D efficiency, and cost reduction.

Real-time and accurate business decision-making: During the 48 hours of Double 11, the greatest value of real-time data is the ability to respond directly to the current situation based on the live performance of traffic, which keeps decisions both accurate and timely. In 2020, the number of effective data reviews during the promotion increased from one to three. This threefold gain in timeliness leaves much more room for future possibilities.

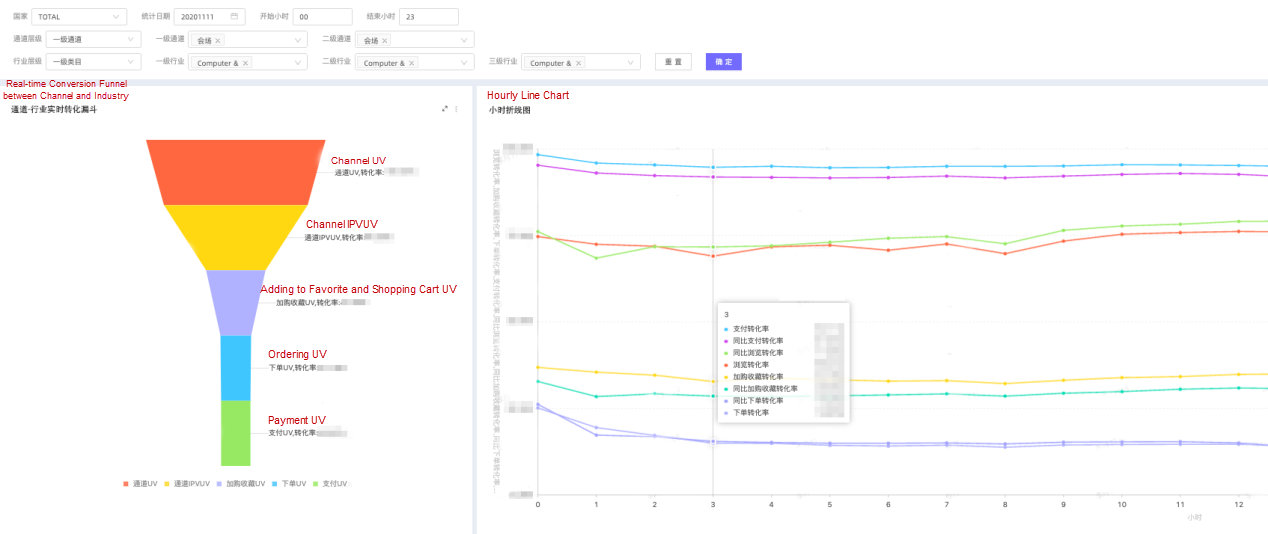

As shown in the figure, between 02:00 and 03:00 the channel browsing rate of the venue kept declining. After a timely adjustment, real-time data showed that the improvement was in line with our expectations.

Flexible multi-dimensional data analysis: Once real-time data is generated, analysts, business operations staff, and others can select, filter, analyze, and display it according to their needs. The Double 11 Fenghuotai (a data product used inside the Alibaba Group) quickly delivered channel efficiency analysis from multiple perspectives, including industries, products, merchants, commodities, and overseas warehouses. Of these, the channel analyses for commodities and overseas warehouses were carried out by operations staff themselves.

The biggest surprise that the Apache Flink + Hologres architecture brought to Data Analysis (DA) personnel was the significant improvement in R&D efficiency. At the beginning of the project, the overall workload was estimated at 40 person-days. With the data side delivering the intermediate layer, metric production, data verification, analytical report generation, and other work proceeded in parallel. The actual time needed was 11 days shorter than estimated, an efficiency gain of nearly 30%. We therefore had more time to optimize SQL and to run multiple rounds of performance stress testing on Hologres before the promotion.

Fact DWD layer: The detailed data in the DWD layer can be viewed directly by operations, BA, and BI personnel. Requirements are handled interactively through SQL, and metric definitions are verified in real time. Development time was reduced from days to hours, and overseas warehouse requirements were merged in, improving efficiency by 400%.

Fact DWS layer: Although DWS visualization poses many performance challenges, the benefits of a shorter troubleshooting path and more flexible metric changes without data refreshing are obvious.

Data display: BI and BA personnel can build a real-time screen by themselves through FBI or Vshow and Hologres. This improves business experience of self-service data analysis, and fills the gap between business demands of annual promotion activities and data development resources.

In 2020, Russia was affected by the devaluation of the ruble. Because AliExpress (AE) commodity prices are denominated in U.S. dollars, this pushed prices up. In the last four hours of 2020 Double 11, users in standard product industries were less willing to place orders, so operations staff added an ad hoc requirement for Top Brand selection. Brands were selected based on the relative performance of their page traffic, which allowed AE to boost traffic to underperforming products. This helped AE and the brands achieve the overall GMV goal.

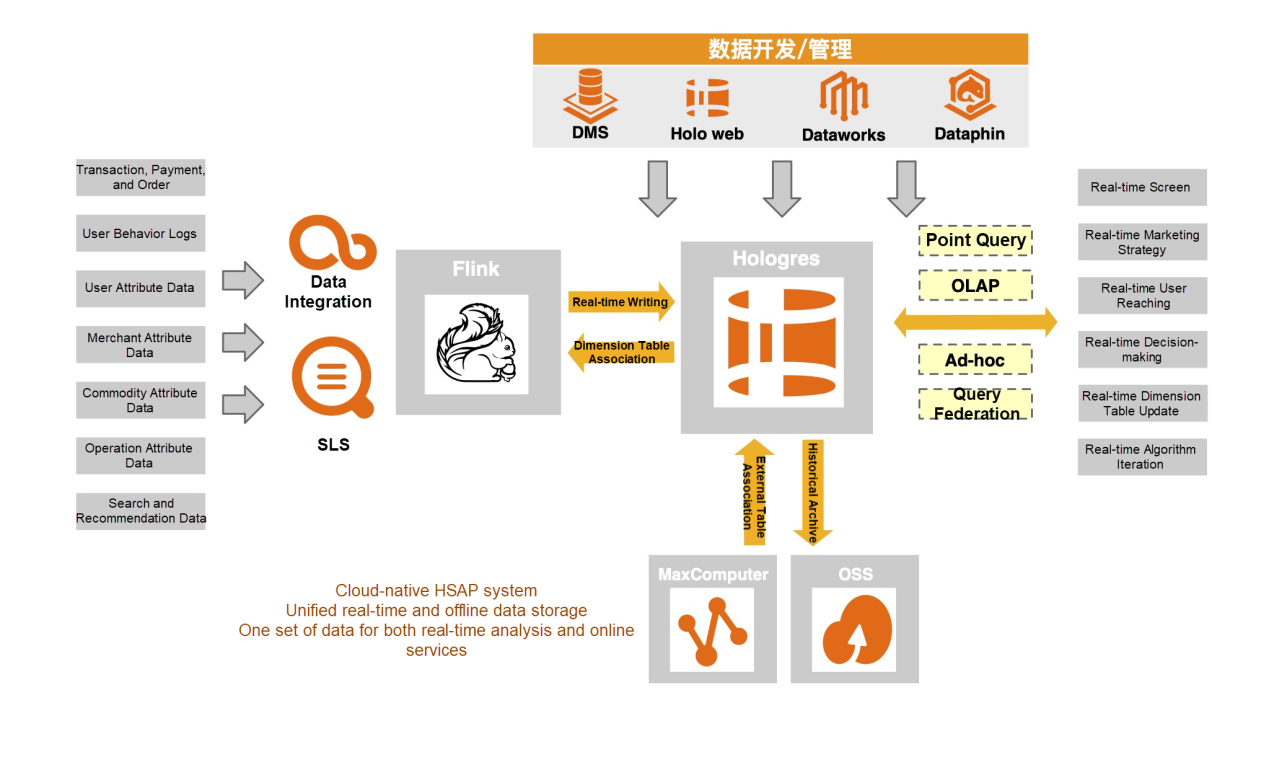

We hope that Hologres will continue to explore stream-batch unification and Hybrid Serving and Analytical Processing (HSAP) in greater depth. We also look forward to adopting Hologres in more scenarios. The architecture and capabilities we expect from Hologres are as follows.

Figure 7 - Expectations on future Hologres ecosystem

Resource isolation: In the long run, stream-batch unified processing will inevitably cause resource preemption between large and small queries. With isolated resource groups, computing resources are divided elastically, and the computing resources of different groups are physically isolated from each other. When accounts are bound to resource groups, each SQL query is automatically routed to the corresponding resource group for execution based on the binding, and different query execution modes can be selected for different resource groups. Users can thereby implement multi-tenancy and hybrid workloads within one instance. It would be even better if Hologres scheduling could resolve resource preemption automatically.
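The routing idea behind account-to-group binding can be sketched as follows. This is a hypothetical Python model of the expectation described above, not a Hologres interface: `ResourceGroup`, `Router`, the group names, and the concurrency limits are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ResourceGroup:
    name: str
    max_concurrency: int
    running: int = 0


class Router:
    """Routes a query to the resource group bound to its account."""

    def __init__(self, groups, bindings, default):
        self.groups = {group.name: group for group in groups}
        self.bindings = bindings  # account -> resource group name
        self.default = default    # group for accounts without a binding

    def route(self, account):
        group = self.groups[self.bindings.get(account, self.default)]
        if group.running >= group.max_concurrency:
            # A saturated group rejects work instead of borrowing capacity
            # from other groups, which is the point of isolation.
            raise RuntimeError(f"resource group {group.name} is saturated")
        group.running += 1
        return group.name


router = Router(
    groups=[ResourceGroup("serving", 2), ResourceGroup("batch", 1)],
    bindings={"dashboard": "serving", "etl_batch": "batch"},
    default="serving",
)
first = router.route("etl_batch")
second = router.route("dashboard")
```

A second large `etl_batch` query would be rejected in this model while `dashboard` queries keep running, so heavy batch work cannot starve small serving queries.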

Stream-batch unification: The same engine is used for both batch and stream processing, and both share the same resources. This provides natural load shifting and blending, and greatly reduces development, O&M, and resource costs.

1) Unified Storage (Direction 1): MaxCompute can directly access Hologres data. The quasi-real-time pipeline is gradually upgraded to a real-time pipeline. Hologres data can be archived to MaxCompute. In addition, the offline ODS layer is gradually removed. In this way, offline and real-time data are unified.

2) Unified Computing (Direction 2): Hologres-based data services with stream-batch unification can execute streaming and batch tasks at different times in MPP mode.

Scheduling elasticity: The load of the data warehouse fluctuates over specific time periods, and resources are often idle during off-peak hours. A scheduled, automatic scaling feature allows users to customize elastic plans flexibly, so that resources are scaled out automatically before the business peak to handle the coming traffic. This meets peak-time business demands while reducing costs. Resource groups could even be allowed to run with no nodes during off-peak hours, which keeps costs extremely low.
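An elastic plan of this kind can be represented as a list of time windows. The sketch below is a hypothetical illustration in Python; the function `nodes_for_hour`, the window format, and the node counts are assumptions and do not correspond to an existing Hologres feature.

```python
def nodes_for_hour(plan, hour, baseline=0):
    """Return the node count an elastic plan assigns to a given hour (0-23).

    plan: list of (start_hour, end_hour, nodes) windows, end exclusive.
    Outside every window the group falls back to `baseline`, which may be 0.
    """
    active = [nodes for start, end, nodes in plan if start <= hour < end]
    return max(active, default=baseline)


# Hypothetical plan: modest capacity in the daytime, scale-out from 18:00
# ahead of the evening peak, and no nodes at all overnight.
plan = [(8, 18, 4), (18, 24, 16)]
schedule = [nodes_for_hour(plan, hour) for hour in range(24)]
```

Because the baseline is zero, the overnight hours in `schedule` hold no nodes, which corresponds to the lowest-cost case mentioned above.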

Data hierarchy: Hot and cold storage media are selected independently at table granularity and at the secondary-partition granularity of a table. For example, all dimension table data can be placed on local SSD, or local SSD can be used for only some partitions of transaction tables. Cold partitions are stored in OSS to achieve the lowest storage cost.
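A tiering rule like the one described can be written down in a few lines. The Python sketch below is illustrative only: the `storage_tier` function, the seven-day hot window, and the table names are assumptions, not an existing configuration option.

```python
from datetime import date


def storage_tier(table_kind, partition_day, today, hot_days=7):
    """Pick a storage medium for one partition.

    Dimension tables stay entirely on local SSD. For other tables, only
    partitions newer than `hot_days` stay hot and older ones move to OSS.
    """
    if table_kind == "dimension":
        return "local_ssd"
    age_days = (today - partition_day).days
    return "local_ssd" if age_days < hot_days else "oss"


today = date(2020, 11, 11)
tiers = {
    "dim_merchant": storage_tier("dimension", date(2019, 1, 1), today),
    "trade_recent": storage_tier("transaction", date(2020, 11, 10), today),
    "trade_old": storage_tier("transaction", date(2020, 6, 1), today),
}
```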

Row-column hybrid storage: The TT stream enables real-time dimension table updates through dimension table subscription. The row-column hybrid storage supports row storage updates and column join aggregation of dimension tables.

Automatic conversion between row and column storage: When Flink or business data is written in real time, row storage tables can be chosen because they make it easier to update and delete fields of a single record. If row storage tables could then be converted automatically into column storage tables, with combined or multi-dimensional sorting, the column storage tables could provide the best query performance for the business scenario.
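The trade-off between the two layouts, and the conversion between them, can be shown with a small Python sketch. The data and the `rows_to_columns` helper are hypothetical, and the sketch assumes every row carries the same fields.

```python
def rows_to_columns(rows):
    """Convert row-oriented records into a column-oriented layout."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


# Row store keyed by primary key: cheap single-record updates and deletes.
row_store = {
    1: {"item_id": 1, "channel": "search", "pv": 10},
    2: {"item_id": 2, "channel": "feed", "pv": 7},
    3: {"item_id": 3, "channel": "push", "pv": 5},
}
row_store[2]["pv"] = 9  # update one field of one record
del row_store[1]        # delete one record

# Converted column store: an aggregate scans only the column it needs.
column_store = rows_to_columns(row_store.values())
total_pv = sum(column_store["pv"])
```

Writes touch a single record in the row layout, while the aggregate over `pv` reads one contiguous list in the column layout without loading the other fields.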

Materialized view: Materialized views simplify the data cleansing procedure (ETL operations). They help users accelerate data analysis, for example for big screens. Used together with BI tools, or to cache common intermediate results, materialized views can also accelerate slow queries.
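The idea of caching an intermediate result and keeping it fresh can be sketched as an incrementally maintained aggregate. The Python below is a conceptual model, not a database feature: the class `MaterializedSum` and the sample rows are assumptions made for illustration.

```python
from collections import defaultdict


class MaterializedSum:
    """Keeps SUM(value) GROUP BY key up to date as base rows arrive."""

    def __init__(self, key_field, value_field):
        self.key_field = key_field
        self.value_field = value_field
        self.totals = defaultdict(int)

    def on_insert(self, row):
        # Incremental refresh: adjust only the affected group.
        self.totals[row[self.key_field]] += row[self.value_field]

    def query(self, key):
        # Served from the stored result; the base table is not rescanned.
        return self.totals.get(key, 0)


base_table = []
pv_by_channel = MaterializedSum("channel", "pv")
for row in [
    {"channel": "search", "pv": 3},
    {"channel": "feed", "pv": 5},
    {"channel": "search", "pv": 4},
]:
    base_table.append(row)
    pv_by_channel.on_insert(row)

# The same answer computed the slow way, by scanning the base table.
scanned = sum(r["pv"] for r in base_table if r["channel"] == "search")
```

A dashboard that repeatedly asks for the same aggregate reads the stored total instead of rescanning the detail rows, which is where the acceleration comes from.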
