By Sun Jiabao

Sun Jiabao shared how to implement the Flink MongoDB CDC Connector on top of Flink CDC by using the MongoDB Change Streams feature.

XTransfer focuses on providing cross-border financial and risk control services for small and medium-sized cross-border B2B e-commerce enterprises. By establishing data-driven, automated, Internet-based, and intelligent risk control infrastructure, XTransfer has built a global financial management platform that provides comprehensive solutions for opening global and local collection accounts, foreign exchange, declarations in countries with foreign exchange controls, and other cross-border financial services.

In the early stage of business development, we chose a traditional offline data warehouse architecture and adopted a data integration mode of full collection, batch processing, and overwrite, so data timeliness was poor. As the business grew, the offline data warehouse could no longer meet our requirements for data timeliness, and we decided to evolve from an offline to a real-time data warehouse. The key to building a real-time data warehouse is the choice of data collection tools and real-time computing engine.

After a series of investigations, we noticed the Flink CDC project in February 2021. It embeds Debezium, which enables Flink to capture change data, lowers the development threshold, and simplifies deployment. Combined with Flink's powerful real-time computing capabilities and rich connectivity to external systems, it has become a key tool for us to build real-time data warehouses.

We also make extensive use of MongoDB in production. As a result, we implemented the Flink MongoDB CDC Connector on top of Flink CDC by using the MongoDB Change Streams feature and contributed it to the Flink CDC community. The MongoDB CDC Connector has been released in Flink CDC version 2.1. We are honored to share the implementation details and production practices here.

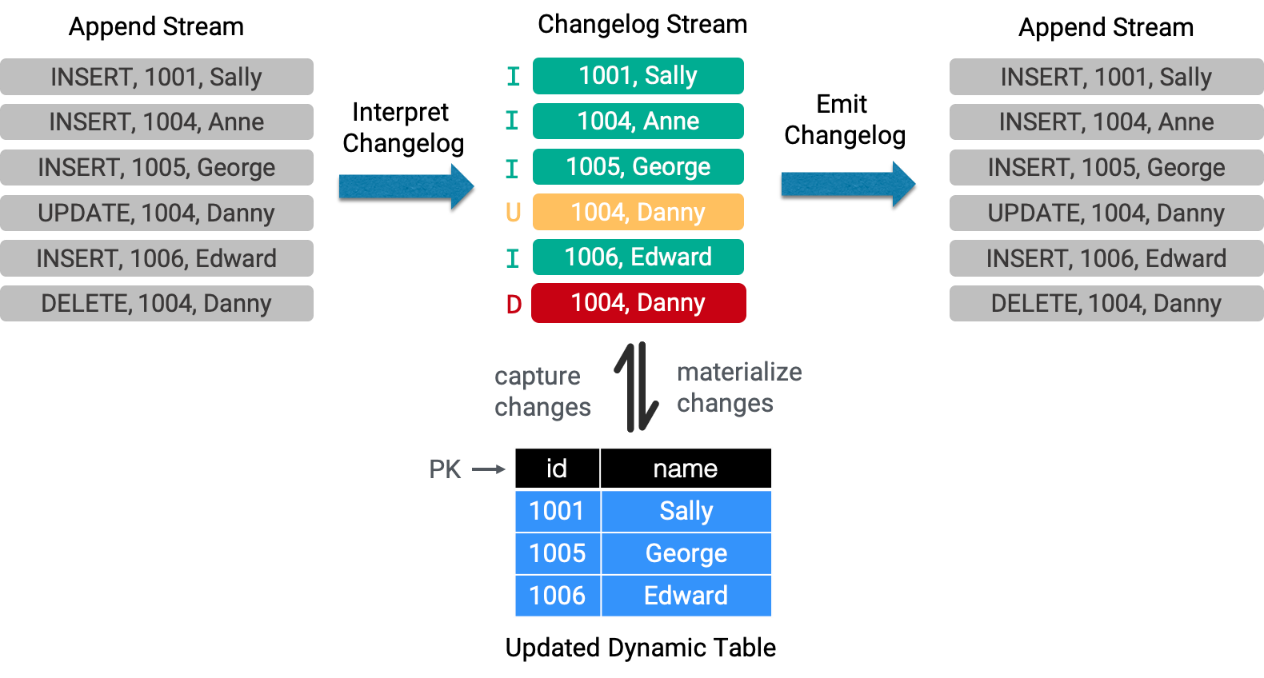

Dynamic tables are the core concept of Flink's Table API and SQL for supporting streaming data. Streams and tables are dual: you can convert a table into a changelog stream, or replay a changelog stream into a table.

There are two forms of changelog stream: Append Mode and Update Mode. In Append Mode, records are only added and never changed or deleted, as in event streams. In Update Mode, records may be added, changed, or deleted, as in database operation logs. Before Flink 1.11, dynamic tables could only be defined on Append Mode streams.

Flink 1.11 introduced the new TableSource and TableSink interfaces from FLIP-95, which add support for Update Mode changelogs, and FLIP-105 added support for the Debezium and Canal CDC formats. By implementing ScanTableSource, you can define a dynamic table from a changelog: the source receives changelogs from an external system (such as database changelogs), interprets them as changelogs that Flink recognizes, and forwards them downstream.

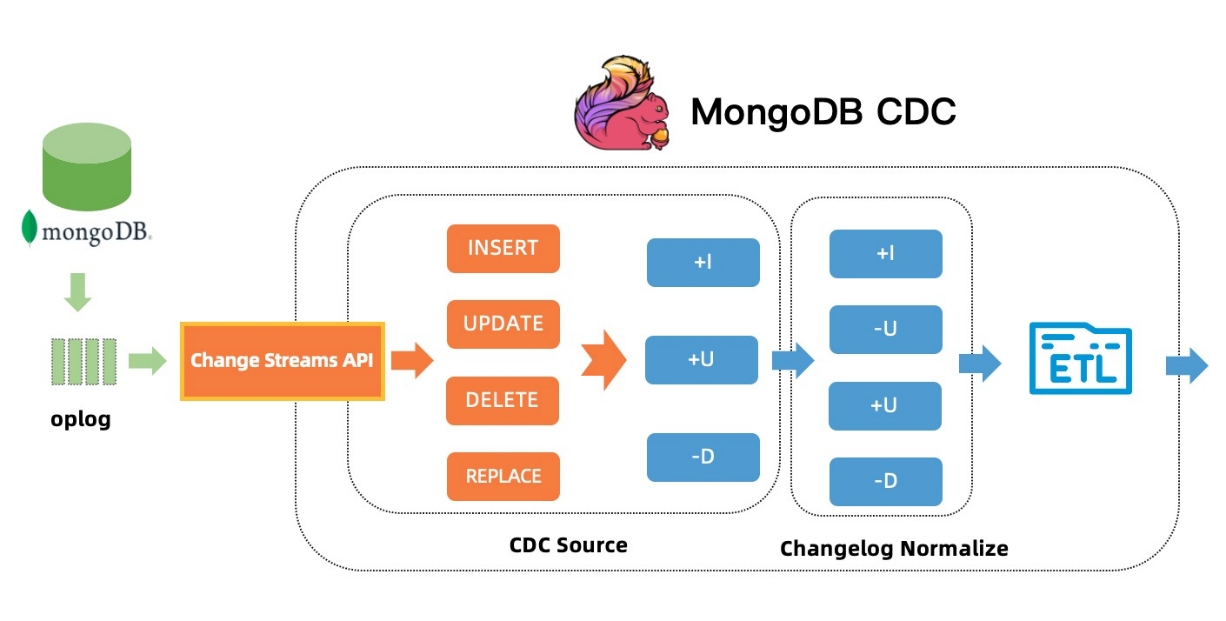

In Flink, changelog records are represented by RowData, and each record carries one of four row kinds: +I (INSERT), -U (UPDATE_BEFORE), +U (UPDATE_AFTER), and -D (DELETE). Depending on which row kinds a changelog contains, there are three changelog modes: INSERT_ONLY (only +I), UPSERT (+I, +U, and -D), and ALL (+I, -U, +U, and -D).
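The relationship between row kinds and changelog modes can be sketched in plain Java. The types below (`SketchRowKind`, `SketchChangelogMode`) are hypothetical, dependency-free stand-ins modeled on Flink's `RowKind` and `ChangelogMode`; they are not the Flink classes themselves.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical stand-in for Flink's RowKind, kept dependency-free for illustration.
enum SketchRowKind {
    INSERT("+I"), UPDATE_BEFORE("-U"), UPDATE_AFTER("+U"), DELETE("-D");

    final String shortString;

    SketchRowKind(String shortString) {
        this.shortString = shortString;
    }
}

// Hypothetical stand-in for the three changelog modes: each mode is the set of row kinds it may contain.
enum SketchChangelogMode {
    INSERT_ONLY(EnumSet.of(SketchRowKind.INSERT)),
    UPSERT(EnumSet.of(SketchRowKind.INSERT, SketchRowKind.UPDATE_AFTER, SketchRowKind.DELETE)),
    ALL(EnumSet.allOf(SketchRowKind.class));

    private final Set<SketchRowKind> kinds;

    SketchChangelogMode(Set<SketchRowKind> kinds) {
        this.kinds = kinds;
    }

    boolean contains(SketchRowKind kind) {
        return kinds.contains(kind);
    }
}

class ChangelogModeSketch {
    public static void main(String[] args) {
        // Print every mode together with the row kinds it admits.
        for (SketchChangelogMode mode : SketchChangelogMode.values()) {
            StringBuilder kinds = new StringBuilder();
            for (SketchRowKind kind : SketchRowKind.values()) {
                if (mode.contains(kind)) {
                    kinds.append(kind.shortString).append(' ');
                }
            }
            System.out.println(mode + ": " + kinds.toString().trim());
        }
    }
}
```

The point to take away is that UPSERT differs from ALL only by the missing -U (UPDATE_BEFORE) kind, which is why an upsert source needs a key to identify the row being overwritten.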

As mentioned in the previous section, the key to implementing the MongoDB CDC connector for Flink is converting MongoDB's operation logs into a changelog that Flink supports. To solve this problem, you first need to understand the cluster deployment modes and replication mechanism of MongoDB.

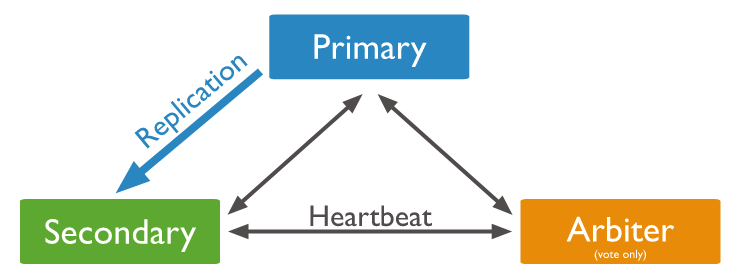

A replica set is a highly available deployment mode provided by MongoDB. Replica set members synchronize data by replicating the oplog (operation log).

A sharded cluster is a deployment mode in which MongoDB supports large-scale datasets and high-throughput operations. Each shard consists of one replica set.

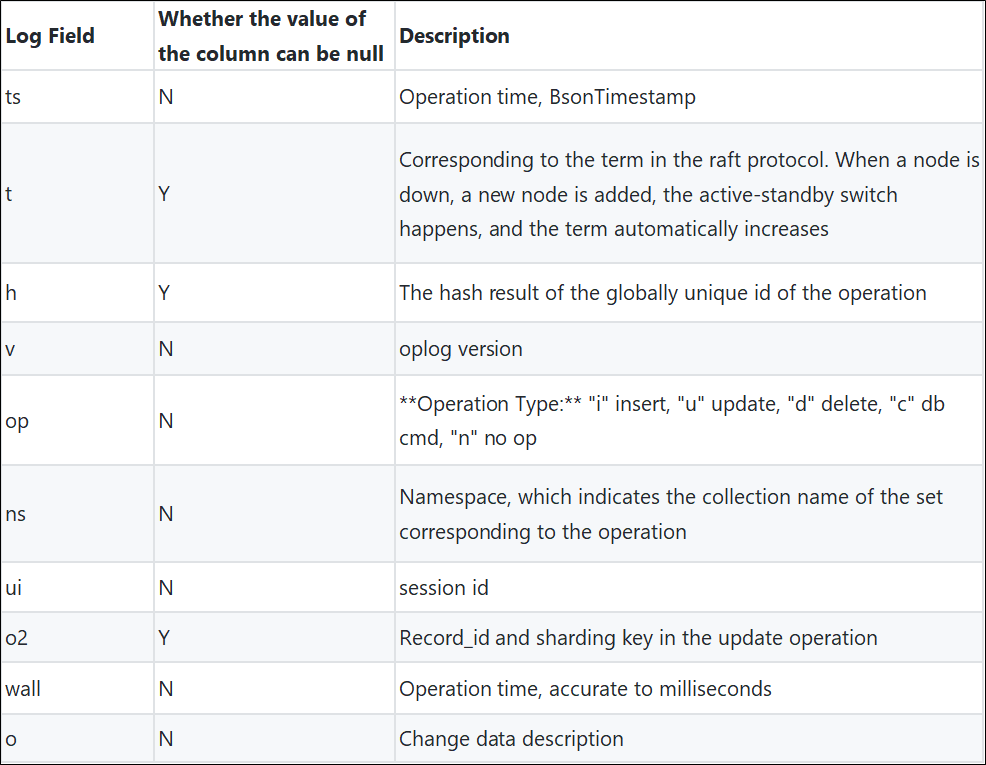

The oplog (operation log) is a special capped collection (a fixed-capacity collection) in MongoDB. It records data operations and is used for synchronization between replica set members. The following example shows the data structure of an oplog record:

    {
        "ts" : Timestamp(1640190995, 3),
        "t" : NumberLong(434),
        "h" : NumberLong(3953156019015894279),
        "v" : 2,
        "op" : "u",
        "ns" : "db.firm",
        "ui" : UUID("19c72da0-2fa0-40a4-b000-83e038cd2c01"),
        "o2" : {
            "_id" : ObjectId("61c35441418152715fc3fcbc")
        },
        "wall" : ISODate("2021-12-22T16:36:35.165Z"),
        "o" : {
            "$v" : 1,
            "$set" : {
                "address" : "Shanghai China"
            }
        }
    }

As shown in the example, an update record in the MongoDB oplog contains neither the document before the update nor the complete document after the update. Therefore, update records cannot be converted to either the ALL-type or the UPSERT-type changelog supported by Flink.

In addition, in a sharded cluster, writes may occur on the replica sets of different shards, and the oplog of each shard only records the data changes that occur on that shard. To obtain the complete set of data changes, you therefore need to sort and merge the oplogs of all shards by operation time, which increases the difficulty and risk of capturing change records.
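To make the sort-and-merge step concrete, here is a minimal sketch of a k-way merge over per-shard oplogs ordered by the `ts` field. It is an illustration only: `OplogEntry` is a hypothetical, heavily simplified record, each per-shard list is assumed to be already ordered and fully available in memory, and none of the difficulties of tailing live oplogs are modeled.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.Iterator;
import java.util.List;
import java.util.PriorityQueue;

class ShardOplogMergeSketch {

    /** Hypothetical, simplified oplog entry: only the "ts" field and the originating shard. */
    static final class OplogEntry {
        final String shard;
        final long seconds;   // first component of Timestamp(seconds, increment)
        final int increment;  // second component, orders operations within one second

        OplogEntry(String shard, long seconds, int increment) {
            this.shard = shard;
            this.seconds = seconds;
            this.increment = increment;
        }

        @Override
        public String toString() {
            return shard + "@" + seconds + ":" + increment;
        }
    }

    private static final Comparator<OplogEntry> BY_TS =
            Comparator.<OplogEntry>comparingLong(e -> e.seconds).thenComparingInt(e -> e.increment);

    /** One cursor per shard: the next unread entry plus the rest of that shard's oplog. */
    private static final class Cursor {
        OplogEntry head;
        final Iterator<OplogEntry> rest;

        Cursor(Iterator<OplogEntry> rest) {
            this.rest = rest;
            this.head = rest.next();
        }
    }

    /** Merges per-shard oplogs (each already ordered by ts) into one list ordered by ts. */
    static List<OplogEntry> mergeByTime(List<List<OplogEntry>> shardOplogs) {
        PriorityQueue<Cursor> queue =
                new PriorityQueue<>((a, b) -> BY_TS.compare(a.head, b.head));
        for (List<OplogEntry> oplog : shardOplogs) {
            if (!oplog.isEmpty()) {
                queue.add(new Cursor(oplog.iterator()));
            }
        }
        List<OplogEntry> merged = new ArrayList<>();
        while (!queue.isEmpty()) {
            Cursor cursor = queue.poll();      // shard whose next entry is the oldest
            merged.add(cursor.head);
            if (cursor.rest.hasNext()) {
                cursor.head = cursor.rest.next();
                queue.add(cursor);             // re-insert with its new head
            }
        }
        return merged;
    }
}
```

Even in this idealized form the consumer has to track one cursor per shard, which hints at why a single cluster-level subscription is attractive.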

Before version 1.7, the Debezium MongoDB Connector captured change data by traversing oplogs. For the preceding reasons, we did not use the Debezium MongoDB Connector and instead chose the official MongoDB Kafka Connector, which is based on Change Streams.

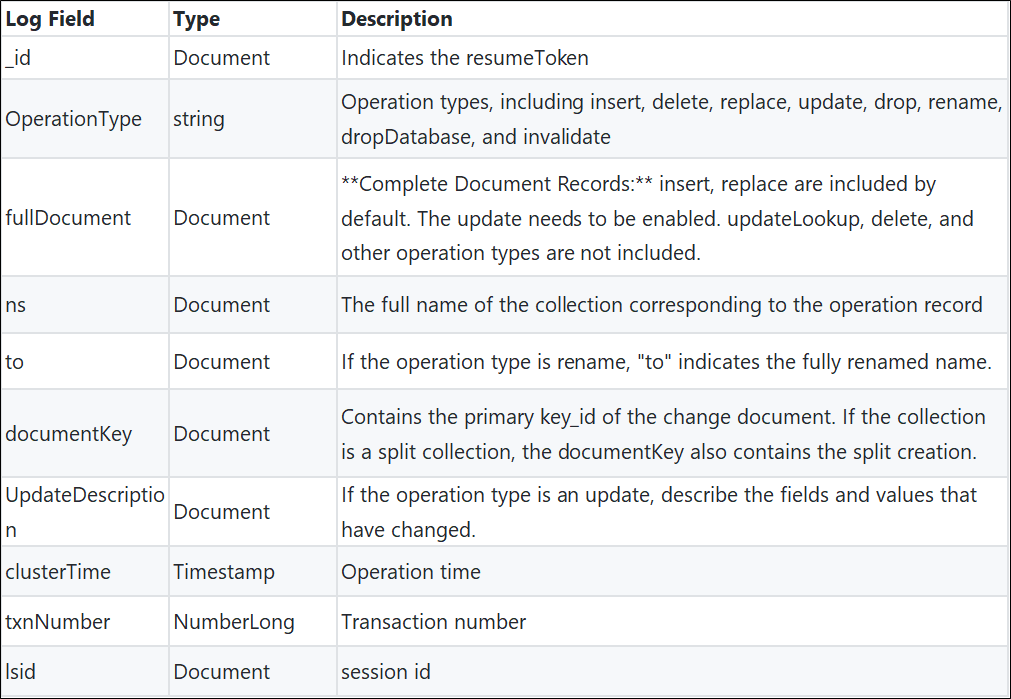

Change Streams is a feature introduced in MongoDB 3.6. It hides the complexity of traversing oplogs and lets users subscribe to data changes at the cluster, database, and collection levels through a simple API.

A change event is a change record returned by Change Streams. Its data structure is as follows:

    {
        _id : { <BSON Object> },
        "operationType" : "<operation>",
        "fullDocument" : { <document> },
        "ns" : {
            "db" : "<database>",
            "coll" : "<collection>"
        },
        "to" : {
            "db" : "<database>",
            "coll" : "<collection>"
        },
        "documentKey" : { "_id" : <value> },
        "updateDescription" : {
            "updatedFields" : { <document> },
            "removedFields" : [ "<field>", ... ],
            "truncatedArrays" : [
                { "field" : <field>, "newSize" : <integer> },
                ...
            ]
        },
        "clusterTime" : <Timestamp>,
        "txnNumber" : <NumberLong>,
        "lsid" : {
            "id" : <UUID>,
            "uid" : <BinData>
        }
    }

Since an update record in the oplog only contains the changed fields, the complete document after the change cannot be obtained from the oplog. However, when converting to a changelog in UPSERT mode, each UPDATE_AFTER RowData must carry a complete row. By setting fullDocument = updateLookup, Change Streams can return the latest state of the document along with the change record. In addition, every change event contains documentKey (_id and shard key), which identifies the primary key of the changed record, so the condition for idempotent updates is met. Therefore, you can use the Update Lookup feature to convert MongoDB change records into a Flink UPSERT changelog.
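The conversion described above can be sketched as a small mapping from a change event's operationType to an upsert row kind. This is a simplified illustration, not the connector's actual code: `Change` is a hypothetical record type, documents are passed as plain strings, and returning null for events that produce no row (including an update whose looked-up document is missing) is an assumption of this sketch.

```java
class UpsertMappingSketch {

    /** Row kinds that can appear in an UPSERT changelog. */
    enum Kind { INSERT, UPDATE_AFTER, DELETE }

    /** Hypothetical, simplified changelog record: kind, primary key, and full row (null for deletes). */
    static final class Change {
        final Kind kind;
        final String key;
        final String row;

        Change(Kind kind, String key, String row) {
            this.kind = kind;
            this.key = key;
            this.row = row;
        }
    }

    /**
     * Maps one change event to an upsert record.
     *
     * @param operationType the event's "operationType" field
     * @param documentKey   the event's "documentKey", used as the primary key
     * @param fullDocument  the event's "fullDocument", or null if absent
     * @return the upsert record, or null if the event yields no row-level change
     */
    static Change toUpsert(String operationType, String documentKey, String fullDocument) {
        switch (operationType) {
            case "insert":
                return new Change(Kind.INSERT, documentKey, fullDocument);
            case "update":
            case "replace":
                // With fullDocument = updateLookup the event carries the latest document.
                // If the lookup found nothing (for example, the document was deleted in
                // the meantime), this sketch emits nothing and relies on the later delete event.
                return fullDocument == null
                        ? null
                        : new Change(Kind.UPDATE_AFTER, documentKey, fullDocument);
            case "delete":
                // Only the key is needed to retract the row.
                return new Change(Kind.DELETE, documentKey, null);
            default:
                // drop, rename, invalidate, ...: not row-level changes in this sketch
                return null;
        }
    }

    public static void main(String[] args) {
        Change change = toUpsert("update", "{\"_id\": 1}", "{\"_id\": 1, \"address\": \"Shanghai China\"}");
        System.out.println(change.kind + " " + change.key + " " + change.row);
    }
}
```

Because every emitted record carries the documentKey, replaying the same event twice overwrites the same row, which is the idempotence property the UPSERT mode relies on.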

For the implementation, we integrated the MongoDB Kafka Connector, which MongoDB built on Change Streams. With Debezium EmbeddedEngine, the MongoDB Kafka Connector can easily be driven from within Flink. The MongoDB CDC TableSource is implemented by converting the Change Stream into a Flink UPSERT changelog, and restoring from checkpoints and savepoints is implemented with the resume mechanism of Change Streams.

As described in FLIP-149, some operations (such as aggregations) are difficult to handle correctly when -U messages are missing. For a changelog of the UPSERT type, the Flink planner therefore introduces an additional compute node (Changelog Normalize) that normalizes it into a changelog of the ALL type.
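The idea behind this normalization can be sketched with a map that remembers the last row per key. This is a conceptual illustration under simplifying assumptions (string keys and rows, an in-memory `HashMap` instead of Flink keyed state, hypothetical method names), not Flink's operator.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ChangelogNormalizeSketch {

    // Last known row per key; this is the extra state the normalization has to keep.
    private final Map<String, String> lastRowByKey = new HashMap<>();

    /** Handles a +I or +U record of the upsert changelog and returns the ALL-type records. */
    List<String> upsert(String key, String row) {
        List<String> out = new ArrayList<>();
        String previous = lastRowByKey.put(key, row);
        if (previous == null) {
            out.add("+I " + row);          // first time this key is seen
        } else {
            out.add("-U " + previous);     // pre-image reconstructed from state
            out.add("+U " + row);
        }
        return out;
    }

    /** Handles a -D record; the deleted row's content also comes from state. */
    List<String> delete(String key) {
        List<String> out = new ArrayList<>();
        String previous = lastRowByKey.remove(key);
        if (previous != null) {
            out.add("-D " + previous);
        }
        return out;
    }

    public static void main(String[] args) {
        ChangelogNormalizeSketch normalize = new ChangelogNormalizeSketch();
        System.out.println(normalize.upsert("1", "{address=Hangzhou}"));
        System.out.println(normalize.upsert("1", "{address=Shanghai}"));
        System.out.println(normalize.delete("1"));
    }
}
```

Since one row per live key has to be retained, the state grows with the size of the captured collection.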

Capabilities

Changelog Normalize incurs additional state overhead because it must store the pre-image values needed to generate -U records. We recommend using the RocksDB state backend in the production environment.

MongoDB's oplog.rs is a special capped collection. When oplog.rs reaches its maximum capacity, the oldest data is discarded. Change Streams uses the resume token for recovery. If the oplog capacity is too small, the oplog record corresponding to the resume token may no longer exist, and recovery fails.

When the oplog capacity is not explicitly specified, the default oplog capacity of the WiredTiger engine is 5% of the disk size, with a lower limit of 990 MB and an upper limit of 50 GB. Starting in MongoDB 4.4, you can also set a minimum retention period for the oplog. Oplog records are then only reclaimed when the oplog is full and the records are older than the minimum retention period.
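As a quick worked example of the default sizing rule stated above, the following sketch clamps 5% of the disk size to the 990 MB and 50 GB limits. The class and method names are hypothetical, and treating 1 GB as 1024 MB is an assumption of this sketch.

```java
class OplogSizeSketch {

    static final long MIN_MB = 990;          // lower limit from the text
    static final long MAX_MB = 50L * 1024;   // upper limit of 50 GB, assuming 1 GB = 1024 MB

    /** Default oplog size in MB for a given disk size in MB, following the rule described above. */
    static long defaultOplogSizeMb(long diskSizeMb) {
        long fivePercent = diskSizeMb * 5 / 100;
        return Math.max(MIN_MB, Math.min(MAX_MB, fivePercent));
    }

    public static void main(String[] args) {
        long[] diskSizesGb = {10, 100, 2048};
        for (long gb : diskSizesGb) {
            System.out.println(gb + " GB disk -> " + defaultOplogSizeMb(gb * 1024) + " MB oplog");
        }
    }
}
```

Under this rule a 100 GB disk yields an oplog of only about 5 GB, well below the 20 GB recommended for production in this article, so the default is usually worth overriding.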

You can use the replSetResizeOplog command to change the oplog capacity and minimum retention period. In the production environment, we recommend setting the oplog capacity to at least 20 GB and the oplog retention period to at least seven days.

    db.adminCommand(
        {
            replSetResizeOplog: 1,    // fixed value 1
            size: 20480,              // unit: MB, valid range 990 MB to 1 PB
            minRetentionHours: 168    // optional, unit: hours
        }
    )

Flink MongoDB CDC periodically writes the resume token to the checkpoint so that the Change Stream can be restored. Both MongoDB change events and heartbeat events can trigger an update of the resume token. If the subscribed collection changes slowly, the resume token of the last change record may expire, and recovery from the checkpoint then fails. Therefore, we recommend enabling heartbeat events (set heartbeat.interval.ms > 0) to keep the resume token up to date.

    WITH (
        'connector' = 'mongodb-cdc',
        'heartbeat.interval.ms' = '60000'
    )

If the default connection settings do not meet your requirements, you can use the connection.options configuration item to pass connection parameters supported by MongoDB.

    WITH (
        'connector' = 'mongodb-cdc',
        'connection.options' = 'authSource=authDB&maxPoolSize=3'
    )

You can fine-tune how change events are pulled by configuring poll.await.time.ms and poll.max.batch.size in the Flink DDL.

poll.await.time.ms: the interval at which change events are pulled. The default value is 1500 ms. For collections that change frequently, you can reduce the pull interval to lower processing latency. For collections that change slowly, you can increase the pull interval to reduce the pressure on the database.

poll.max.batch.size: the maximum number of change events pulled in each batch. The default value is 1000. Increasing this value speeds up pulling change events from the cursor but increases memory overhead.

With the DataStream API, you can use a pipeline to filter the databases and collections to subscribe to. Filtering of collections in the snapshot phase is currently not supported.

    MongoDBSource.<String>builder()
        .hosts("127.0.0.1:27017")
        .database("")
        .collection("")
        // $regex takes the bare pattern; surrounding slashes would be matched literally
        .pipeline("[{'$match': {'ns.db': {'$regex': '^(sandbox|firewall)$'}}}]")
        .deserializer(new JsonDebeziumDeserializationSchema())
        .build();

MongoDB supports fine-grained control over users, roles, and permissions. A user that opens a Change Stream must have the find and changeStream privileges.

Single collection:

    { resource: { db: <dbname>, collection: <collection> }, actions: [ "find", "changeStream" ] }

All collections in a single database:

    { resource: { db: <dbname>, collection: "" }, actions: [ "find", "changeStream" ] }

All non-system collections in all databases:

    { resource: { db: "", collection: "" }, actions: [ "find", "changeStream" ] }

In the production environment, we recommend creating a dedicated Flink user and role and granting fine-grained privileges to that role. Note: MongoDB users and roles can be created in any database. If the user is not created in admin, you need to specify authSource in the connection parameters, set to the database in which the user was created.

    use admin;

    // Create a user
    db.createUser(
        {
            user: "flink",
            pwd: "flinkpw",
            roles: []
        }
    );

    // Create a role
    db.createRole(
        {
            role: "flink_role",
            privileges: [
                { resource: { db: "inventory", collection: "products" }, actions: [ "find", "changeStream" ] }
            ],
            roles: []
        }
    );

    // Grant a role to a user
    db.grantRolesToUser(
        "flink",
        [
            // Note: db refers to the database in which the role was created.
            // Roles created under admin can have access permissions on different databases.
            { role: "flink_role", db: "admin" }
        ]
    );

    // Add permissions to the role
    db.grantPrivilegesToRole(
        "flink_role",
        [
            { resource: { db: "inventory", collection: "orders" }, actions: [ "find", "changeStream" ] }
        ]
    );

In development and test environments, you can grant the built-in read and readAnyDatabase roles to the Flink user to enable Change Streams for any collection.

    use admin;

    db.createUser({
        user: "flink",
        pwd: "flinkpw",
        roles: [
            { role: "read", db: "admin" },
            { role: "readAnyDatabase", db: "admin" }
        ]
    });

Currently, the MongoDB CDC Connector does not support incremental snapshots, so the advantages of Flink's parallel computing cannot be fully utilized for collections with large data volumes. Incremental snapshots for MongoDB will be implemented in the future to support checkpoints and parallelism settings in the snapshot phase.

Currently, the MongoDB CDC Connector only supports subscribing to the Change Stream from the current time. Subscribing from a specified point in time will be supported later.

Currently, the MongoDB CDC Connector supports subscribing to and filtering changes for clusters and entire databases, but does not support filtering collections in the snapshot phase. This will be improved in the future.

[1] Duality of Streams and Tables

[2] FLIP-95: New TableSource and TableSink interfaces

[3] FLIP-105: Support to Interpret Changelog in Flink SQL (Introducing Debezium and Canal Format)

[4] FLIP-149: Introduce the upsert-kafka Connector

[5] Apache Flink 1.11.0 Release Announcement

[6] Introduction to SQL in Flink 1.11

[7] MongoDB Manual
