By Li Xiang and Zhang Lei

In October 2019, Alibaba announced the joint launch of the Open Application Model (OAM) with Microsoft. This key technology evolved gradually as Alibaba upgraded its application management architecture toward a standardized, unified design.

OAM is a standardized open-source specification for defining and describing cloud-native applications and their O&M capabilities. OAM is therefore not only a standard for defining Kubernetes applications (in contrast to the now-inactive Kubernetes Application Custom Resource Definition (CRD) project), but also a project for encapsulating, organizing, and managing Kubernetes O&M capabilities and for connecting those capabilities to applications at the platform layer. With these core functions of defining applications and of organizing and managing their O&M capabilities, OAM became the best choice for Alibaba to upgrade its unified application management architecture and build its next-generation PaaS or Serverless architecture.

In addition, OAM does not itself implement applications or capabilities. Instead, they are implemented by the API primitives and controllers that Kubernetes provides. OAM has therefore become a major approach for Alibaba to build a Kubernetes-native PaaS architecture.

In OAM, an application is built from three core concepts: components, traits, and the ApplicationConfiguration that binds them together.

Alibaba brings to OAM extensive experience in managing Kubernetes clusters and Alibaba Cloud products in Internet scenarios, especially the lessons learned while moving from countless internal application CRDs to OAM-based standard application definitions. As engineers, we learn from our failures and errors and continue innovating and developing.

In this article, we will detail the motivation of OAM to help more users better understand it.

We are Alibaba's infrastructure operators, also known as the Kubernetes team. We are responsible for developing, installing, and maintaining functions at various levels of Kubernetes, including but not limited to maintaining large-scale Kubernetes clusters, implementing controllers and operators, and developing various Kubernetes plug-ins. At Alibaba, we are referred to as the platform builders.

To distinguish ourselves from the PaaS engineers who work on top of Kubernetes, we call ourselves infrastructure operators in this article. In the past few years, we have achieved great success with Kubernetes and have also learned valuable lessons.

We maintain the world's largest and most complex Kubernetes clusters for the e-commerce businesses of Alibaba. These clusters can:

We also support Alibaba Cloud Container Service for Kubernetes (ACK). This service hosts about 10,000 small- and medium-sized clusters and is similar to other Alibaba Cloud Kubernetes products for external customers. Our internal and external customers have diverse requirements and use cases for workload management.

Similar to other Internet companies, Alibaba's technology stack is jointly implemented by infrastructure operators, application operators, and business developers. The roles of business developers and application operators are:

Business developers deliver business value in code. Most business developers are unfamiliar with infrastructure or Kubernetes. Instead, they interact with PaaS and CI pipelines to manage their applications. The productivity of business developers is of great value to Alibaba.

Application operators provide business developers with expertise in cluster capacity, stability, and performance, helping them configure, deploy, and run applications (for example, update, scale out, and restore applications) on a large scale. Although application operators have certain knowledge of Kubernetes APIs and functions, they do not directly work on Kubernetes. In most cases, they use PaaS to provide basic Kubernetes functions for business developers. Many application operators are PaaS engineers.

In summary, infrastructure operators provide services for application operators, who in turn serve business developers.

As mentioned earlier, the three parties possess different professional knowledge, but they need to coordinate with each other to ensure smooth cooperation, which can be difficult to achieve with Kubernetes.

We will discuss the pain points of these parties in the following sections. In short, the root problem is that they lack a standard for efficient and accurate interaction between them. This leads to an inefficient application management process and eventual operation failures. OAM is the key to solving this problem.

Kubernetes is highly extensible, enabling infrastructure operators to build their O&M capabilities as they wish. However, this flexibility also causes problems for the users of these capabilities (application operators).

For example, we developed the Cron Horizontal Pod Autoscaler (CronHPA) Custom Resource Definition (CRD) to scale applications based on CRON expressions. This is useful when different scaling policies are required in the daytime and at night. CronHPA is an optional capability that is deployed to clusters only on demand. A sample CronHPA YAML file looks like this:

```yaml
apiVersion: "app.alibaba.com/v1"
kind: CronHPA
metadata:
  name: cron-scaler
spec:
  timezone: America/Los_Angeles
  schedule:
  - cron: '0 0 6 * * ?'
    minReplicas: 20
    maxReplicas: 25
  - cron: '0 0 19 * * ?'
    minReplicas: 1
    maxReplicas: 9
  template:
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        name: php-apache
      metrics:
      - type: Resource
        resource:
          name: cpu
          target:
            type: Utilization
            averageUtilization: 50
```

This is a typical Kubernetes custom resource, which can be directly installed and used. However, after we deliver these capabilities to application operators, they soon encounter problems when using CRDs such as CronHPA.

Application operators often complain about the haphazard placement of specs. A spec sometimes appears in a CRD, sometimes in a ConfigMap, and sometimes in a configuration file at an arbitrary path. Application operators are also confused about why the CRDs of many Kubernetes plug-ins, such as the CNI and Container Storage Interface (CSI) plug-ins, describe only how the plug-in is installed rather than the capabilities it provides, such as network and storage functions.

Application operators are not sure whether an O&M capability is ready in a cluster, especially when this capability is provided by a newly developed plug-in. Infrastructure operators and application operators need to communicate repeatedly to clarify these issues. There is also a manageability-related challenge.

The relationships between O&M capabilities in a Kubernetes cluster fall into three types: orthogonal, combinable, and conflicting.

Orthogonal and combinable capabilities are safer, while conflicting capabilities may lead to unexpected or unpredictable behavior.

Kubernetes cannot warn about conflicts in advance, so application operators may apply two conflicting O&M capabilities to the same application. When conflicts occur, they are costly to resolve. In extreme cases, they can lead to disasters. Naturally, application operators want a better way to avoid conflicts in advance.

So, how do application operators identify and manage O&M capabilities that may conflict with each other? Can infrastructure operators build O&M capabilities in favor of application operators?

In OAM, we use O&M traits to describe and build discoverable and manageable capabilities on the platform layer. These platform-layer capabilities are essentially the applications' O&M traits.

In ACK, most traits are defined by the infrastructure operators and implemented by using custom controllers in Kubernetes. For example:

Traits are not equivalent to Kubernetes plug-ins. For example, a cluster may have multiple network-related traits, such as dynamic quality of service (QoS) traits, bandwidth control traits, and traffic mirroring traits. All these traits are provided by a single CNI plug-in.

Traits are installed in Kubernetes clusters for application operators. When capabilities are presented as traits, application operators can run the kubectl get command to discover the O&M capabilities supported by the cluster:

```
$ kubectl get traitDefinition
NAME          AGE
cron-scaler   19m
auto-scaler   19m
```

The preceding example shows that the cluster supports both the cron-scaler and auto-scaler capabilities. Users can deploy applications that require CRON-based scaling policies to the cluster.

A trait provides a structured description of a specific O&M capability. This allows application operators to easily and accurately understand the capability by running the kubectl describe command. The description includes the workloads that the trait applies to and how the trait is used.

For example, you can run the kubectl describe command to query the cron-scaler capability of the TraitDefinition type.

```yaml
apiVersion: core.oam.dev/v1alpha2
kind: TraitDefinition
metadata:
  name: cron-scaler
spec:
  appliesTo:
  - core.oam.dev/v1alpha1.ContainerizedWorkload
  definitionRef:
    name: cronhpas.app.alibaba.com
```

In OAM, definitionRef points to the CRD that describes how the trait is used, so the trait description itself stays decoupled from Kubernetes CRDs.

Trait specifications are separated from trait implementation. The specifications of a trait can be implemented based on different technologies in different platforms and environments. The implementation layer can be connected to an existing CRD, an intermediate layer with unified descriptions, or different implementations at the bottom layer. The separation of specifications and implementations is very useful because a specific capability such as ingress in Kubernetes may have dozens of implementations. A trait provides a unified description to help application operators accurately understand and use it.

Application operators can use ApplicationConfiguration to configure one or more installed traits for an application. The ApplicationConfiguration controller handles trait conflicts. The following shows a sample ApplicationConfiguration:

```yaml
apiVersion: core.oam.dev/v1alpha2
kind: ApplicationConfiguration
metadata:
  name: failed-example
spec:
  components:
  - componentName: nginx-replicated-v1
    traits:
    - trait:
        apiVersion: core.oam.dev/v1alpha2
        kind: AutoScaler
        spec:
          minimum: 1
          maximum: 9
    - trait:
        apiVersion: app.alibaba.com/v1
        kind: CronHPA
        spec:
          timezone: "America/Los_Angeles"
          schedule: "0 0 6 * * ?"
          cpu: 50
          # ...
```

In OAM, the ApplicationConfiguration controller must ensure that these traits are compatible. If they are not, application deployment fails immediately. Therefore, when an application operator submits the preceding YAML file to Kubernetes, the OAM controller reports a deployment failure due to trait conflicts. In this way, application operators learn about O&M capability conflicts up front.

In general, our Kubernetes team prefers to use OAM traits to present Kubernetes-based discoverable and manageable O&M capabilities, rather than using the lengthy maintenance specifications and O&M guidelines, which cannot prevent application operators from making mistakes. This allows application operators to combine O&M capabilities to build application O&M solutions without conflicts.

Kubernetes APIs are open to both application operators and business developers. This means that anyone can be responsible for any field in a Kubernetes API. Such APIs are called all-in-one APIs. They are friendly to beginners.

When multiple teams with different concerns need to collaborate on the same Kubernetes cluster, especially when application operators and business developers need to collaborate on the same API, the shortcomings of such APIs become prominent. The following shows a simple Deployment YAML file:

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      deploy: example
  template:
    metadata:
      labels:
        deploy: example
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        securityContext:
          allowPrivilegeEscalation: false
```

Application operators and business developers need to hold an offline meeting before they can complete this YAML file and apply it to a cluster. This raises the question: why should such time-consuming and complex cooperation be necessary?

In this case, the most straightforward method is to have the business developers complete the YAML file for deployment. However, they may find that some fields for deployment have nothing to do with them. For example, consider the following questions:

How many business developers know the allowPrivilegeEscalation field?

Few of them know the field. In the production environment, this field must be set to false (whereas the default is true) to ensure that the application has appropriate permissions on the host. In practice, this field can be set only by application operators. Business developers can only guess the meaning of such fields or simply ignore them.

Even if you know Kubernetes well, it is often difficult to determine which party should own a given field.

For example, when business developers set replicas to 3 in the YAML file for deployment, they assume that the value will be constant throughout the application lifecycle.

However, most business developers do not know that the Kubernetes HPA controller can take over the replicas field and change its value according to the pod load. This automatic change leads to the following problem: when business developers try to change the value of replicas, the new value never takes effect.
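To make the takeover concrete, the following is a minimal sketch of a standard HorizontalPodAutoscaler that an application operator might attach to the nginx-deployment Deployment shown earlier. The object name, replica bounds, and CPU threshold are assumptions chosen for illustration and are not taken from Alibaba's clusters.

```yaml
# Illustrative sketch only: names and thresholds are assumptions.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-deployment
spec:
  # The HPA targets the Deployment and writes to its replica count.
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment
  minReplicas: 1
  maxReplicas: 9
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50
```

Once an object like this exists, the HPA controller keeps adjusting the replica count to stay within the configured bounds, so the replicas: 3 written in the Deployment file is no longer the effective value.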

In this case, the Kubernetes YAML file no longer describes the final workload, which confuses business developers. We have tried to use fieldManager to solve this problem, but it remains challenging because we still cannot tell why other managers changed a field.
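For context, Kubernetes records field ownership under metadata.managedFields. The fragment below is an illustrative sketch of what such an entry can look like for the replicas field of a Deployment. The manager name is an assumption and varies by client and cluster version.

```yaml
# Illustrative fragment of a Deployment's metadata, not a complete object.
metadata:
  name: nginx-deployment
  managedFields:
  - manager: kubectl-client-side-apply   # assumed manager name
    operation: Update
    apiVersion: apps/v1
    fieldsType: FieldsV1
    fieldsV1:
      f:spec:
        f:replicas: {}                   # this manager last set spec.replicas
```

An entry like this identifies which manager last wrote a field, but it carries no information about the intent behind the change.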

When using Kubernetes APIs, the concerns of business developers and application operators are inevitably mixed. This makes it difficult for multiple participants to collaborate based on the same API.

We also find that many of Alibaba's application management systems, such as PaaS platforms, deliberately do not expose all the capabilities of Kubernetes, because they do not want to burden business developers with more Kubernetes concepts.

The simplest solution is to set a boundary between business developers and application operators. For example, we can allow business developers to set only certain fields in the Deployment YAML file, an approach that many PaaS platforms at Alibaba have adopted. However, this solution may not work.

In many cases, business developers want application operators to accept their opinions on O&M. For example, business developers have defined 10 parameters for an application, only to find that application operators may override these parameters to adapt to different runtime environments. Then, how can business developers allow application operators to modify only five specific parameters?

It is extremely difficult to convey the O&M opinions of business developers when the application management process keeps business developers and application operators separate. In many cases, business developers may want to convey a lot of information about their applications:

All the preceding requests are reasonable because the business developers know the applications best. In this context, the urgent issue is whether Alibaba Kubernetes can provide APIs for business developers and application operators separately and allow business developers to effectively convey their O&M needs.

In OAM, we logically split Kubernetes APIs and enable business developers to specify their own fields and convey their demands to application operators. This means defining the application, not merely describing it.

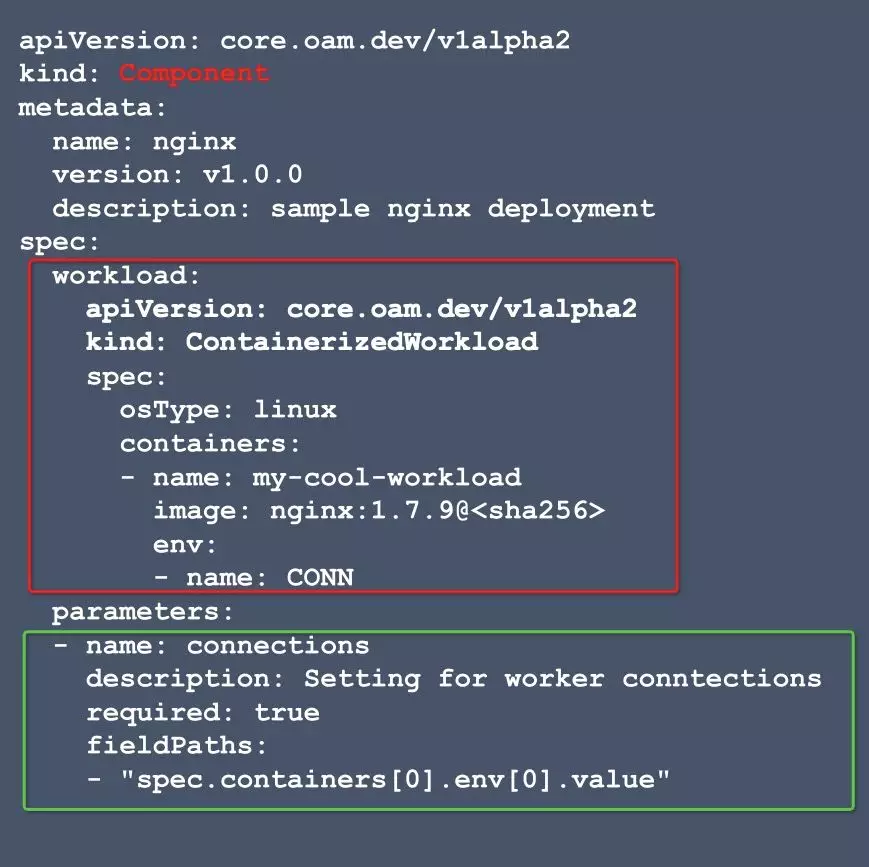

In OAM, a component is a carrier designed only for business developers to define applications without considering O&M details. An application contains one or more components. For example, a web application can consist of a Java web component and a database component. The following figure shows a sample component defined by business developers to deploy NGINX.
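As a rough textual sketch of such a component, assuming the core.oam.dev/v1alpha2 Component schema used elsewhere in this article, a business developer might write something like the following. The CONNECTIONS environment variable, its default value, and the parameter description are hypothetical and serve only to illustrate the shape of the object.

```yaml
# Hypothetical sketch: values are illustrative assumptions.
apiVersion: core.oam.dev/v1alpha2
kind: Component
metadata:
  name: nginx
spec:
  # How the developer wants the application to run.
  workload:
    apiVersion: core.oam.dev/v1alpha2
    kind: ContainerizedWorkload
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        env:
        - name: CONNECTIONS        # hypothetical setting read by the container
          value: "1024"
  # Fields the developer allows application operators to override.
  parameters:
  - name: connections
    description: Maximum number of connections (illustrative)
    required: false
    fieldPaths:
    - spec.containers[0].env[0].value
```

Note that the sketch contains no replicas field and no security settings: those are left to traits that application operators bind later.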

In OAM, a component consists of the following parts:

In a component, the workload field conveys to application operators how business developers run their applications. In addition, ContainerizedWorkload is defined for container applications in OAM, covering typical patterns of cloud-native applications.

Workloads can be defined and extended in OAM to declare users' own workload types. We routinely extend workloads to enable business developers to define components backed by Alibaba Cloud services, such as Function Compute.
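A minimal sketch of how such an extension might be registered, assuming the v1alpha2 WorkloadDefinition kind, is shown below. The CRD name functions.fc.example.com is a placeholder invented for illustration and does not refer to a real Alibaba Cloud API group.

```yaml
# Hypothetical sketch: the CRD name is a placeholder.
apiVersion: core.oam.dev/v1alpha2
kind: WorkloadDefinition
metadata:
  name: functions.fc.example.com
spec:
  # Points at the CRD that defines the schema of this workload type.
  definitionRef:
    name: functions.fc.example.com
```

With a definition like this installed, a component could reference the custom kind in its workload field in the same way that it references ContainerizedWorkload.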

In the preceding example, business developers do not need to set replicas. Instead, they allow HPA or application operators to control the replicas value. In general, a component allows business developers to define the declarative description of an application in their own way. The component also enables them to accurately convey opinions or information to application operators at any time. The information includes O&M demands, for example, which parameters are rewritable and how to run an application.

By using the component name and binding traits to the application, application operators can use ApplicationConfiguration to instantiate the application. The following shows a sample collaboration workflow for using a component and ApplicationConfiguration:

The content of the app-config.yaml file is:

```yaml
apiVersion: core.oam.dev/v1alpha2
kind: ApplicationConfiguration
metadata:
  name: my-awesome-app
spec:
  components:
  - componentName: nginx
    parameterValues:
    - name: connections
      value: 4096
    traits:
    - trait:
        apiVersion: core.oam.dev/v1alpha2
        kind: AutoScaler
        spec:
          minimum: 1
          maximum: 9
    - trait:
        apiVersion: app.alibaba.com/v1
        kind: SecurityPolicy
        spec:
          allowPrivilegeEscalation: false
```

The following lists the key fields in the YAML file of ApplicationConfiguration:

ApplicationConfiguration enables application operators or the system to understand and use the information conveyed by business developers so that they can bind O&M capabilities to the component for final O&M.

In conclusion, we use OAM to solve the following problems in application management:

Therefore, OAM is an Application CRD specification proposed by the Alibaba Kubernetes team. It allows all participants to use a structured standard method to define applications and their O&M capabilities.

In addition, Alibaba develops OAM for software distribution and delivery in hybrid clouds and multiple environments. With the emergence of Google Anthos and Microsoft Azure Arc, we can see that Kubernetes is becoming the new Android, and the value of the cloud-native ecosystem is rapidly shifting to the application layer. The cases mentioned in this article are provided by the cloud-native teams of Alibaba Cloud and Ant Financial.

Currently, OAM specifications and models have already solved many problems, but this is just the beginning. For example, we are studying how to use OAM to handle component dependencies and how to integrate Dapr workloads into OAM.

We look forward to working with the community on OAM specifications and Kubernetes implementation. OAM is a neutral open-source project. All its contributors must follow the Contributor License Agreement (CLA) of non-profit foundations.

The Alibaba team is contributing to and maintaining this technology. If you have any questions or feedback, please contact us.


Li Xiang is a Senior Technical Expert at Alibaba Cloud. He works on the cluster management system at Alibaba and assists in promoting Kubernetes adoption in Alibaba Group. Before working for Alibaba, Li Xiang was the owner of the upstream Kubernetes team at CoreOS. He is also the creator of etcd and Kubernetes Operators.

Zhang Lei is a Senior Technical Expert at Alibaba Cloud. He is one of the maintainers of Kubernetes projects. He is working in the Alibaba Kubernetes team, dealing with Kubernetes and cloud-native application management systems.

Since 2019, the cloud-native application platform team of Alibaba Cloud has started to upgrade the unified architecture of application management products and projects based on standard application definitions and delivery models throughout the Alibaba economy.

At the end of 2018 when Kubernetes officially became Alibaba's application infrastructure, application management fragmentation problems occurred at Alibaba and in Alibaba Cloud's product lines.

With the rapid development of cloud-native ecosystems, application management product architectures of Alibaba and Alibaba Cloud, including Alibaba and Alibaba Cloud Platform as a Service (PaaS) products, must embrace the cloud-native ecosystem and use the ever-changing capabilities of the ecosystem to build a more powerful PaaS service.

However, you cannot solve the problem only by migrating or integrating PaaS to Kubernetes. There has never been a clear boundary between PaaS and Kubernetes, and Kubernetes is not designed for end users.

To solve the problem of using Kubernetes or PaaS, the key is to figure out how to give Alibaba R&D and O&M personnel access to the benefits of cloud-native technology innovations, seamlessly migrate or integrate the existing PaaS system to Kubernetes, and enable the new PaaS system to fully utilize the capabilities and values of the Kubernetes technology and ecosystem.
