DataX-On-Hadoop uses the Hadoop task scheduler to schedule DataX tasks to a Hadoop execution cluster, on which each task is executed based on the process of Reader -> Channel -> Writer. This means that you can upload Hadoop data to MaxCompute and ApsaraDB for RDS through multiple MapReduce tasks without the need to install and deploy DataX software in advance or prepare an additional execution cluster for DataX. However, you still have access to the plug-in logic, throttling, and robust retry functions of DataX.

DataX is an offline data synchronization tool and platform widely used in Alibaba Group. It efficiently synchronizes data among MySQL, Oracle, HDFS, Hive, OceanBase, HBase, Table Store, MaxCompute, and other heterogeneous data sources. The DataX synchronization engine splits, schedules, and runs tasks internally without depending on the Hadoop environment. For more information about DataX, visit https://github.com/alibaba/DataX

DataX-On-Hadoop is the implementation of DataX in the Hadoop scheduling environment. DataX-On-Hadoop uses the Hadoop task scheduler to schedule DataX tasks to a Hadoop execution cluster, on which each task is executed based on the process of Reader -> Channel -> Writer. This means that you can upload Hadoop data to MaxCompute and ApsaraDB for RDS through multiple MapReduce tasks without the need to install and deploy DataX software in advance or prepare an additional execution cluster for DataX. However, you still have access to the plug-in logic, throttling, and robust retry functions of DataX.

Currently, DataX-On-Hadoop supports uploading HDFS data to MaxCompute on the public cloud.

The procedure is as follows:

Submit an Alibaba Cloud ticket to apply for the DataX-On-Hadoop software package. The software package is a Hadoop MapReduce JAR package.

Run the following commands to submit a MapReduce task on the Hadoop client. You only need to focus on the content in the configuration file of the job. Here, the ./bvt_case/speed.json file is used as an example. The configuration file is the same as a common DataX configuration file.

./bin/hadoop jar datax-jar-with-dependencies.jar

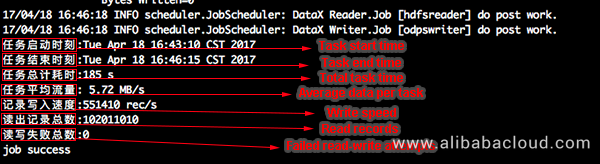

com.alibaba.datax.hdfs.odps.mr.HdfsToOdpsMRJob ./bvt_case/speed.jsonAfter the task is completed, log information similar to the following is displayed.

HDFS Reader and MaxCompute Writer in this example are configured as follows.

{

"core": {

"transport": {

"channel": {

"speed": {

"byte": "-1",

"record": "-1"

}

}

}

},

"job": {

"setting": {

"speed": {

"byte": 1048576

},

"errorLimit": {

"record": 0

}

},

"content": [

{

"reader": {

"name": "hdfsreader",

"parameter": {

"path": "/tmp/test_datax/big_data*",

"defaultFS": "hdfs://localhost:9000",

"column": [

{

"index": 0,

"type": "string"

},

{

"index": 1,

"type": "string"

}

],

"fileType": "text",

"encoding": "UTF-8",

"fieldDelimiter": ","

}

},

"writer": {

"name": "odpswriter",

"parameter": {

"project": "",

"table": "",

"partition": "pt=1,dt=2",

"column": [

"id",

"name"

],

"accessId": "",

"accessKey": "",

"truncate": true,

"odpsServer": "http://service.odps.aliyun.com/api",

"tunnelServer": "http://dt.odps.aliyun.com",

"accountType": "aliyun"

}

}

}

]

}

}This section describes parameters related to the performance and dirty data based on the preceding example:

core.transport.channel.speed.byte: indicates the maximum number of bytes that each mapper can synchronize per second after a synchronization task is split into multiple mappers for concurrent running. The default value is –1, indicating that no rate limit is set. If the value is 1,048,576, the maximum synchronization speed of each mapper is 1 MB/s.

core.transport.channel.speed.record: indicates the maximum number of records that each mapper can synchronize per second after a synchronization task is split into multiple mappers for concurrent running. The default value is –1, indicating that no rate limit is set. If the value is 10,000, the maximum synchronization speed of each mapper is 10,000 records per second.

job.setting.speed.byte: indicates the overall maximum speed of a synchronization task. This parameter is available in Hadoop 2.7.0 and later versions. The maximum number of concurrently running mappers is controlled by the mapreduce.job.running.map.limit parameter.

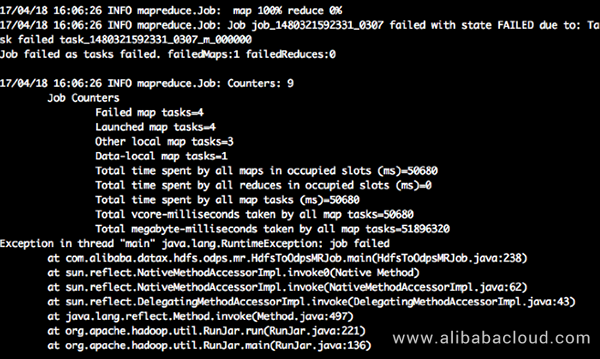

job.setting.errorLimit.record: indicates the maximum number of dirty data records. This parameter is not set by default, indicating that no dirty data check is performed. In this case, the task does not fail even if there is any dirty data record. The value 0 indicates that no dirty data is allowed. In this case, the task fails if there is any dirty data record. The value 1 indicates that only a maximum of one dirty data record is allowed. The following is a task that fails due to dirty data.

The following example shows where to set performance parameters for jobs.

{

"core": {

"transport": {

"channel": {

"speed": {

"byte": "-1",

"record": "-1"

}

}

}

},

"job": {

"setting": {

"speed": {

"byte": 1048576

},

"errorLimit": {

"record": 0

}

},

"content": [

{

"reader": {},

"writer": {}

}

]

}

}The following text describes several variable parameters and job naming parameters.

DataX-On-Hadoop supports variable parameters. For example, a .json job configuration file contains the following variable parameter.

"path": "/tmp/test_datax/dt=${dt}/abc.txt"When running a task, you can pass the following parameters to make the configuration code reusable.

./bin/hadoop jar datax-jar-with-dependencies.jar com.alibaba.datax.hdfs.odps.mr.HdfsToOdpsMRJob

datax.json -p "-Ddt=20170427 -Dbizdate=123" -t hdfs_2_odps_mrDataX-On-Hadoop supports job naming. The parameter -t indicates the job trace ID, that is, the job name, for example, -t hdfs_2_odps_mr in the preceding example. It allows you to understand the usage of the job.

Sections 2 and 3 describe the configurations of the Reader and Writer plug-ins.

HDFS Reader provides the capability of reading data stored in HDFS. In the underlying implementation, HDFS Reader reads data from HDFS files, converts the data into the DataX transmission protocol, and transfers it to Writer.

TextFile is the default storage format for creating Hive tables without data compression. Essentially, a TextFile file is stored in HDFS as text. For DataX, the implementation of HDFS Reader is similar to that of TxtFileReader. The Optimized Row Columnar File (ORCFile) format is an optimized RCFile format. As stated in the official documentation, the ORCFile format provides an efficient method for storing Hive data. HDFS Reader uses the OrcSerde class provided by Hive to read and parse data of ORCFile files. Currently, HDFS Reader provides the following functions:

Temporarily, HDFS Reader does not support multi-thread concurrent reading of a single file because it involves the internal splitting algorithm of the single file. This function will be supported in the future.

Configuration Example

{

"core": {

"transport": {

"channel": {

"speed": {

"byte": "-1048576",

"record": "-1"

}

}

}

},

"job": {

"setting": {

"speed": {

"byte": 1048576

},

"errorLimit": {

"record": 0

}

},

"content": [

{

"reader": {

"name": "hdfsreader",

"parameter": {

"path": "/tmp/test_datax/*",

"defaultFS": "hdfs://localhost:9000",

"column": [

{

"index": 0,

"type": "string"

},

{

"index": 1,

"type": "string"

}

],

"fileType": "text",

"encoding": "UTF-8",

"fieldDelimiter": ","

}

},

"writer": {}

}

]

}

}Parameter Descriptions

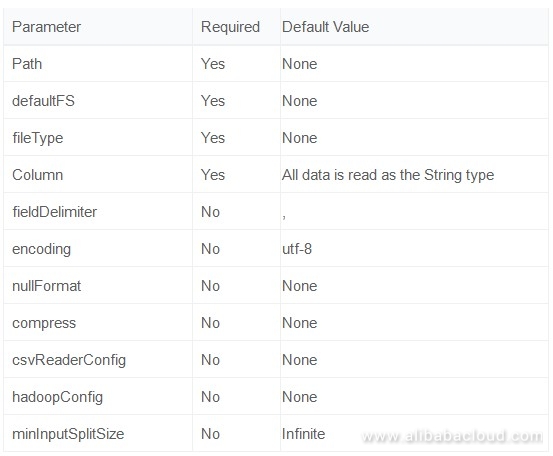

path: This parameter specifies the file path to be read. To read multiple files, use the regular expression "". You can enter multiple paths. If a wildcard is used, HDFS Reader attempts to traverse multiple files. For example, if / is used, HDFS Reader reads all the files under the / directory. If /yixiao/ is used, HDFS Reader reads all the files under the yixiao directory. Currently, HDFS Reader only supports "" and "?" as the file wildcards.

Note: DataX considers all the files to be synchronized in a job as one data table. You must make sure that all the files adapt to the same schema information and grant the read permission to DataX.

defaultFS: This parameter specifies the NameNode address in HDFS.

fileType: This parameter specifies the file type. Currently, the options are text, orc, rc, seq, and csv.

text: indicates the TextFile format.

orc: indicates the ORCFile format.

rc: indicates the RCFile format.

seq: indicates the SequenceFile format.

csv: indicates the common HDFS file format (a logical two-dimensional table).

Note: HDFS Reader automatically identifies the ORCFile, RCFile, SequenceFile, TextFile, and CSV files. Therefore, this parameter is required. Before synchronizing data, HDFS Reader checks whether the types of all the files to be synchronized in the specified path are consistent with the value of fileType. If they are inconsistent, an exception is thrown.

Because TextFile and ORCFile are totally different file formats, HDFS Reader parses the two file types in different ways. For this reason, the formats of the converted results vary when the complex compound types (such as map, array, struct, and union) supported by Hive are converted into the String type supported by DataX.

The following uses the map type as an example:After HDFS Reader parses and converts the ORCFile map type into the String type supported by DataX, the result is "{job=80, team=60, person=70}". After HDFS Reader parses and converts the TextFile map type into the String type supported by DataX, the result is "job:80,team:60,person:70".

From the preceding results, the data itself remains unchanged but the formats differ slightly. If the fields to be synchronized in the specified file path are compound in Hive, we recommended that you set a unified type for the files.

To unify the file types parsed from compound types, we recommended that you export TextFile tables as ORCFile tables on the Hive client.

column: This parameter indicates the list of fields to be read. type indicates the type of source data. index indicates the number (which starts from 0) of the column in the text. value indicates that the current type is constant, which means the corresponding column is automatically generated based on the value instead of the data read from the source file.

You can read data by taking String as the only type by default. The configuration is as follows:

You also can configure the column parameter as follows:

"column": ["*"]For the specified column information, you must set type and set either index or value.

{

"type": "long",

"index": 0 //Obtain the int field from the first column of the local file text.

},

{

"type": "string",

"value": "alibaba" //HDFS Reader internally generates the alibaba string field as the current field.

}fieldDelimiter: This parameter specifies the delimiter between fields to be read. Note: When HDFS Reader reads the TextFile data, a field delimiter is required, which defaults to a comma (,) if no delimiter is used. When HDFS Reader reads the ORCFile data, no field delimiter is required. The default delimiter of Hive is u0001. To use each row as a column of the target, use characters that are not included in the content of rows as the delimiter, such as the invisible characters u0001. Additionally, n cannot be used as the delimiter.

encoding: This parameter indicates the encoding of the read files.

nullFormat: Defining null (null pointer) with a standard string is not allowed in text files. Therefore, DataX provides this parameter to define strings that can be expressed as null. For example, if you set nullFormat:"N" and the source data is "N", DataX considers that this field is a null field.

compress: This parameter specifies the file compression formats supported when fileType is set to csv. The options are gzip, bz2, zip, lzo, lzo_deflate, hadoop-snappy, and framing-snappy. Note: Both the lzo and lzo_deflate compression formats are available. Exercise caution when selecting these two formats during configuration. As no unified stream format is available for Snappy, DataX currently only supports the most popular compression formats: hadoop-snappy (the Snappy stream format in Hadoop) and framing-snappy (the Snappy stream format recommended by Google). This parameter is not required when fileType is set to orc.

csvReaderConfig: This parameter specifies the configuration of CsvReader. It belongs to the map type. CsvReader is used to read CSV files. The reading involves many configuration items, whose default values are used if they are not configured.

Common configuration:

"csvReaderConfig":{

"safetySwitch": false,

"skipEmptyRecords": false,

"useTextQualifier": false

}For all the configuration items and default values, you must configure the map of csvReaderConfig strictly based on the following field names.

hadoopConfig: In hadoopConfig, you can set Hadoop-related advanced parameters, such as HA.

"hadoopConfig":{

"dfs.nameservices": "testDfs",

"dfs.ha.namenodes.testDfs": "namenode1,namenode2",

"dfs.namenode.rpc-address.youkuDfs.namenode1": "",

"dfs.namenode.rpc-address.youkuDfs.namenode2": "",

"dfs.client.failover.proxy.provider.testDfs":

"org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"

}minInputSplitSize: Some file types of HDFS support internal file splitting. In this case, a file can be split and scheduled to multiple mappers for concurrent running. Each mapper reads a part of the file. The test result in the test environment has verified the linear expansion of the speed. Note: Because files are split at a finer granularity, the startup of a larger number of mappers may occupy more machine resources. Currently, the file types that support internal file splitting include RCFile, TextFile, CSV, and Parquet.

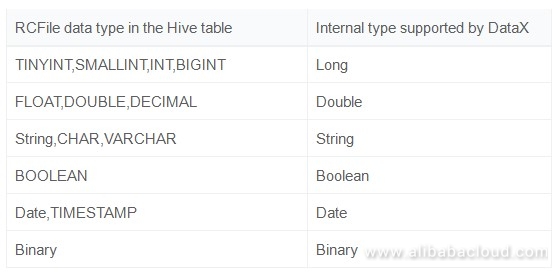

RCFile

The data storage mode varies with the data type during RCFile underlying storage. HDFS Reader does not support accessing or querying data in Hive metadata databases. Therefore, if the HDFS file being synchronized is RCFile, you must use the type field of a column to specify the data type of the column in the Hive table. For example, if the column type is Bigint, Double, or Float, enter bigint, double, or float accordingly as the data type. Note: If the column type is Varchar or Char, enter the number of bytes, for example, varchar(255) or char(30). Make sure that the column type is the same as the data type of the column in the Hive table. Alternatively, you can enter string.

If column is set to *, DataX reads all columns as the String type by default. In this case, the column type can only be String, Char, or Varchar.

RCFile data types are converted into the internal types supported by DataX by default, as described in the following table.

ParquetFile

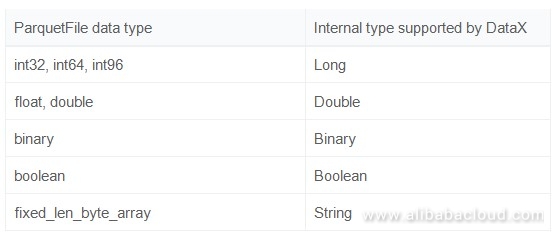

If column is set to *, DataX reads all columns as the String type by default. The Double and other types are converted into the String type. If column is set to a specified column, the following table describes the relationship between types supported by DataX and ParquetFile data types.

textfile,orcfile,sequencefile:

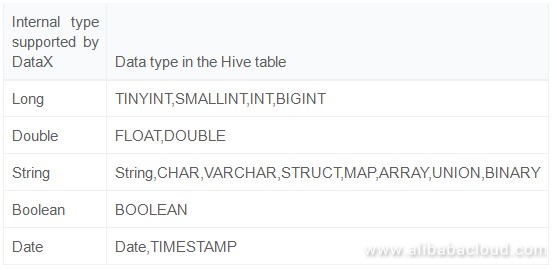

The metadata in TextFile and ORCFile file tables is maintained by Hive and stored in the database (such as MySQL) maintained by Hive itself. HDFS Reader currently does not support accessing or querying data in Hive metadata databases. Therefore, you must specify a data type for type conversion. If column is set to *, all column types are converted into the String type by default. The following table lists the recommended data type conversion relationships supported by HDFS Reader.

where,

Note:

When creating a Hive table, you can specify partitions. For example, after partition (day="20150820",hour="09") is created, the /20150820 and /09 directories are added to the directory of the table in HDFS and /20150820 is the parent directory of /09. As partitions are organized in a directory structure, you only need to set the value of path in JSON when reading all the data of a table by partition.

For example, to read all the data of the table named mytable01 on the partition day of 20150820, set as follows:

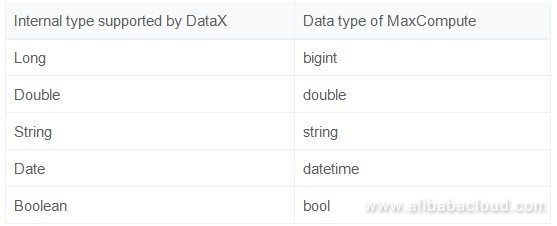

"path": "/user/hive/warehouse/mytable01/20150820/*"The MaxCompute Writer plug-in is designed for ETL developers to insert or update business data in MaxCompute. It is applicable to transmission of terabytes or gigabytes of data. In the underlying implementation, MaxCompute Writer writes data into MaxCompute by using Tunnel based on the configured project, table, partition, table field, and other information. MaxCompute Writer supports the following data types of MaxCompute: Bignit, Double, String, Datetime, and Boolean. The following table describes the data type conversion relationships supported by MaxCompute Writer.

In the underlying implementation, MaxCompute Writer writes data into MaxCompute by using Tunnel. For more information about MaxCompute, see https://www.alibabacloud.com/product/maxcompute

Configuration Example

The following is the data generated from the memory and imported to MaxCompute.

{

"core": {

"transport": {

"channel": {

"speed": {

"byte": "-1048576",

"record": "-1"

}

}

}

},

"job": {

"setting": {

"speed": {

"byte": 1048576

},

"errorLimit": {

"record": 0

}

},

"content": [

{

"reader": {},

"writer": {

"name": "odpswriter",

"parameter": {

"project": "",

"table": "",

"partition": "pt=1,dt=2",

"column": [

"col1",

"col2"

],

"accessId": "",

"accessKey": "",

"truncate": true,

"odpsServer": "http://service.odps.aliyun.com/api",

"tunnelServer": "http://dt.odps.aliyun.com",

"accountType": "aliyun"

}

}

}

]

}

}Parameter Descriptions

accessId: This parameter indicates the ID used to log on to MaxCompute.

accessKey: This parameter indicates the key used to log on to MaxCompute.

project: This parameter indicates the project of a MaxCompute table. The project name can only contain English letters and digits. The Chinese name of the MaxCompute project that you see in Alibaba Cloud is only a project display name. Make sure that you enter the English project ID in the code.

table: This parameter indicates the name of the table to write data into. Do not enter multiple table names because DataX does not support writing data into multiple tables simultaneously.

partition: This parameter indicates the partitions of the data table to write data into. You must specify the partition to the last hierarchy. For example, to write data into a three-hierarchy partition table, you must specify the partition to the third hierarchy, such as pt=20150101/type=1/biz=2.

column: This parameter indicates the list of fields to be imported. To import all fields, you can set the parameter to "column": ["*"]. To insert the specified MaxCompute columns, specify only these columns, for example, "column": ["id", "name"]. MaxCompute Writer supports column filtering and column order changing. For example, a table has three fields: a, b, and c. If you want to synchronize only fields c and b, you can set the parameter to "column": ["c","b"]. During the import process, field a is automatically set to null.

truncate: "truncate": "true" is set to ensure the idempotence of write operations. When a failed write operation reruns, MaxCompute Writer cleans up this data and imports new data. This ensures that the data is consistent after each re-running.

The truncate option is not an atomic operation. MaxCompute SQL cannot be atomic. Therefore, when multiple tasks clean up a table or partition at the same time, the concurrent timing problem may occur. To avoid this problem, we recommend that you do not process one partition with multiple job DDLs simultaneously or that you create partitions before starting multiple concurrent jobs.

odpsServer: This parameter indicates the MaxCompute server. For more information, visit https://www.alibabacloud.com/help/doc-detail/34951.htm

tunnelServer: This parameter indicates the MaxCompute Tunnel server. For more information, visit https://www.alibabacloud.com/help/doc-detail/34951.htm

blockSizeInMB: To improve data writing efficiency, MaxCompute Writer buffers data and submits a certain amount of data at one time. This parameter indicates the buffer size in megabytes. The default value is 64.

Alibaba Cloud DFSMN Breaks the Barriers of Speech Recognition Accuracy with MaxCompute

137 posts | 21 followers

FollowAlibaba Clouder - July 20, 2020

Alibaba Cloud MaxCompute - June 24, 2019

Alibaba Cloud MaxCompute - September 30, 2022

Alibaba Cloud MaxCompute - April 26, 2020

Alibaba Clouder - March 31, 2021

Alibaba Cloud MaxCompute - March 2, 2020

137 posts | 21 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn MoreMore Posts by Alibaba Cloud MaxCompute