By David Zhang (Yuanyi)

As the de facto standard for container orchestration, Kubernetes is used in an increasingly wide range of scenarios. Logs are an important part of observability: they record detailed access requests and error information, which helps locate problems. Applications running on Kubernetes, Kubernetes components, and hosts all generate log data, and Log Service (SLS) supports collecting and analyzing this data. This article describes the basic principles of Kubernetes log collection with SLS.

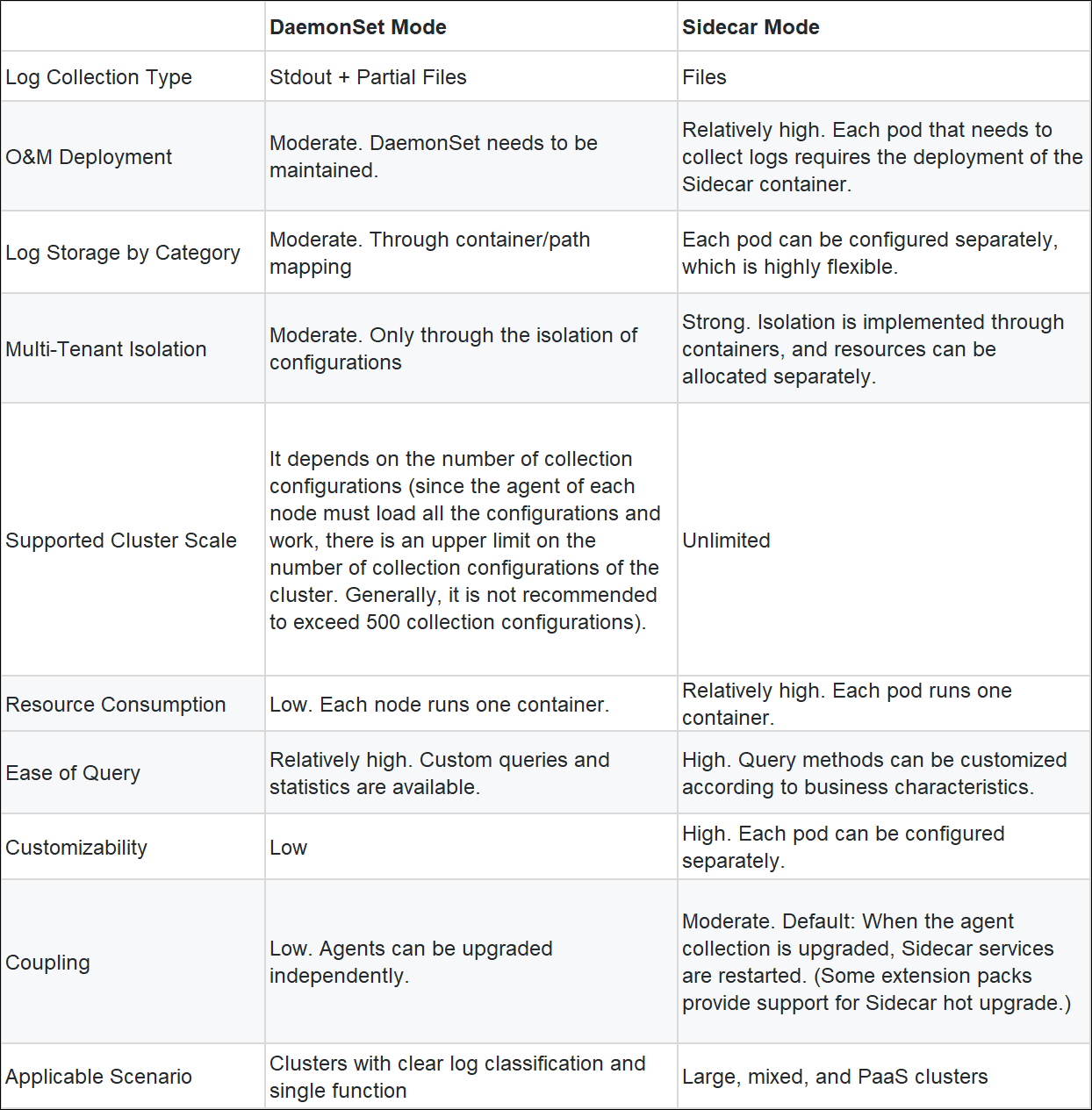

Kubernetes log collection has two modes: Sidecar and DaemonSet. Generally, DaemonSet is used in small and medium-sized clusters. Sidecar is used in ultra-large clusters that serve multiple business parties, where each party has clear custom log collection requirements and the number of collection configurations exceeds 500.

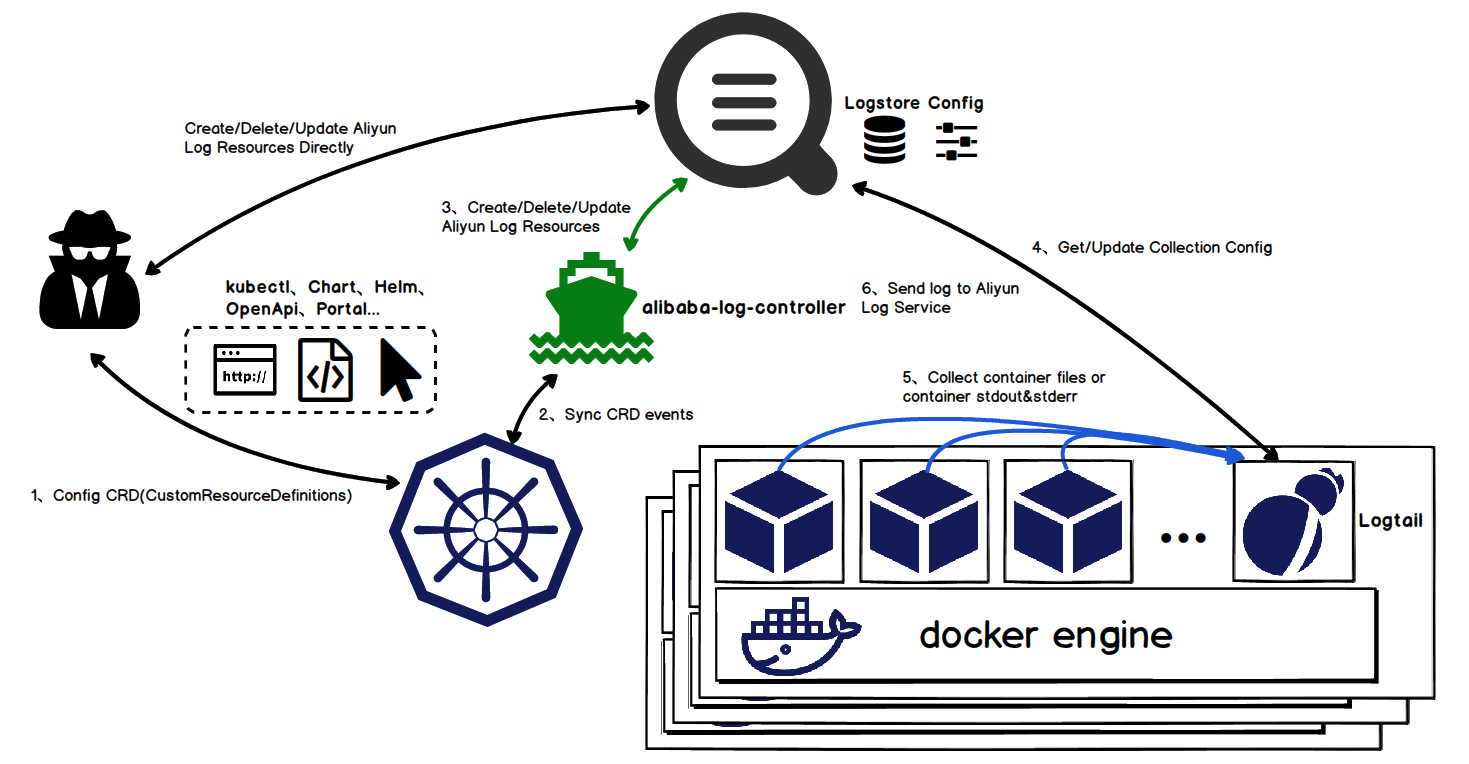

Log collection consists of three steps: deploying the agent, configuring the agent, and having the agent work according to the configuration. Log collection with SLS follows the same steps, but SLS provides more deployment, configuration, and collection methods than open-source collection software. There are two main deployment methods in Kubernetes: DaemonSet and Sidecar. The configuration methods are CRD, environment variable, console, and API. The collection methods are container file, container stdout, and standard file. The entire process includes:

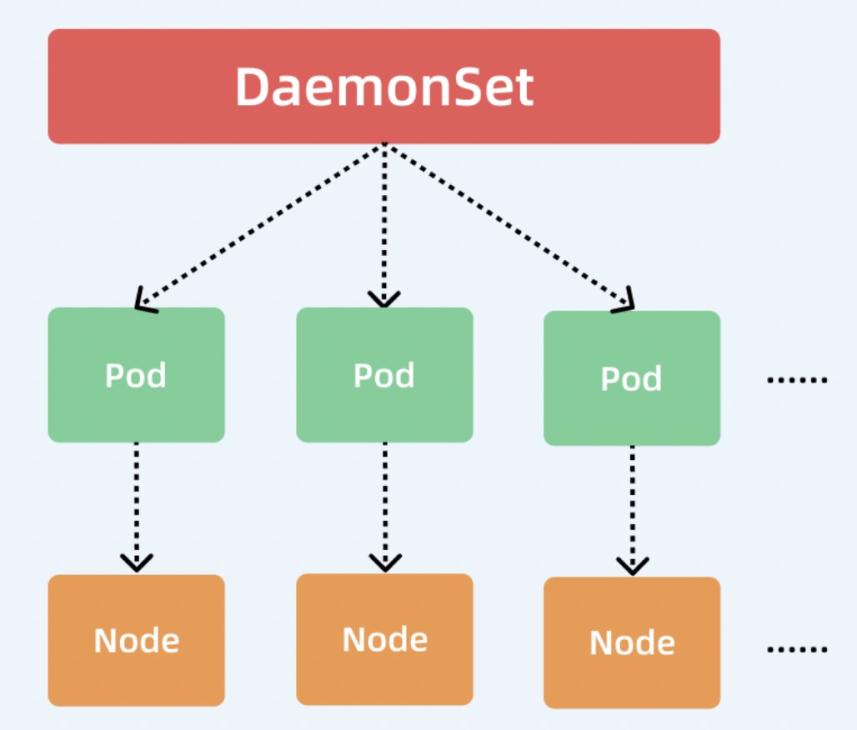

DaemonSet, Deployment, and StatefulSet are all advanced orchestration methods (controllers) for pods in Kubernetes. Both Deployment and StatefulSet define a replica count, and Kubernetes schedules pods based on it. A DaemonSet has no replica count. Instead, it starts one pod on each node by default. It is generally used for O&M-related tasks (such as log collection, monitoring, and disk cleaning). Therefore, DaemonSet is the recommended default mode for log collection with Logtail.

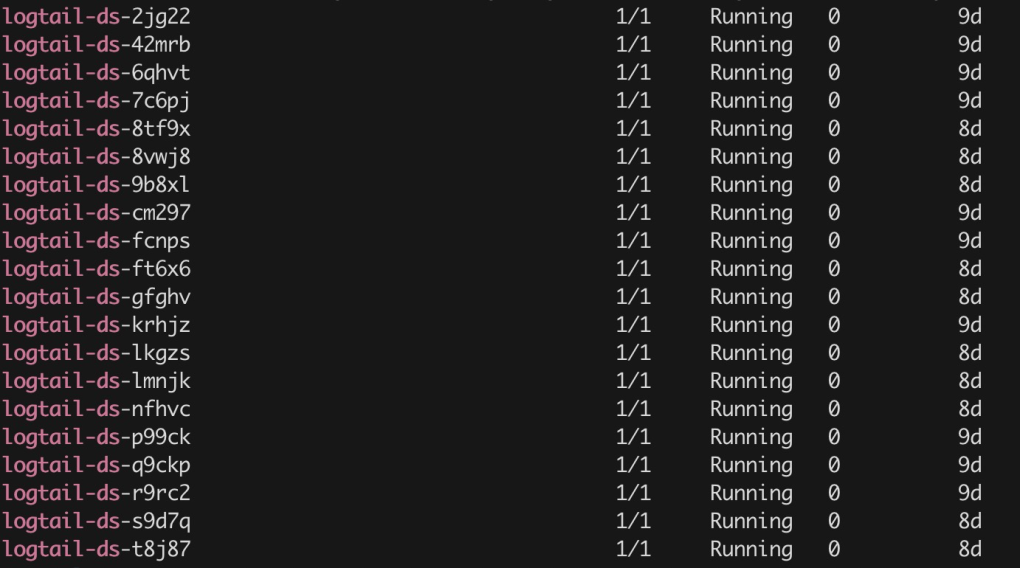

In DaemonSet mode, Logtail is installed in the kube-system namespace by default. The DaemonSet is named logtail-ds, and the Logtail pod on each node collects the data (both stdout and files) of all pods running on that node. The following command lists the Logtail pods:

kubectl get pod -n kube-system | grep logtail-ds

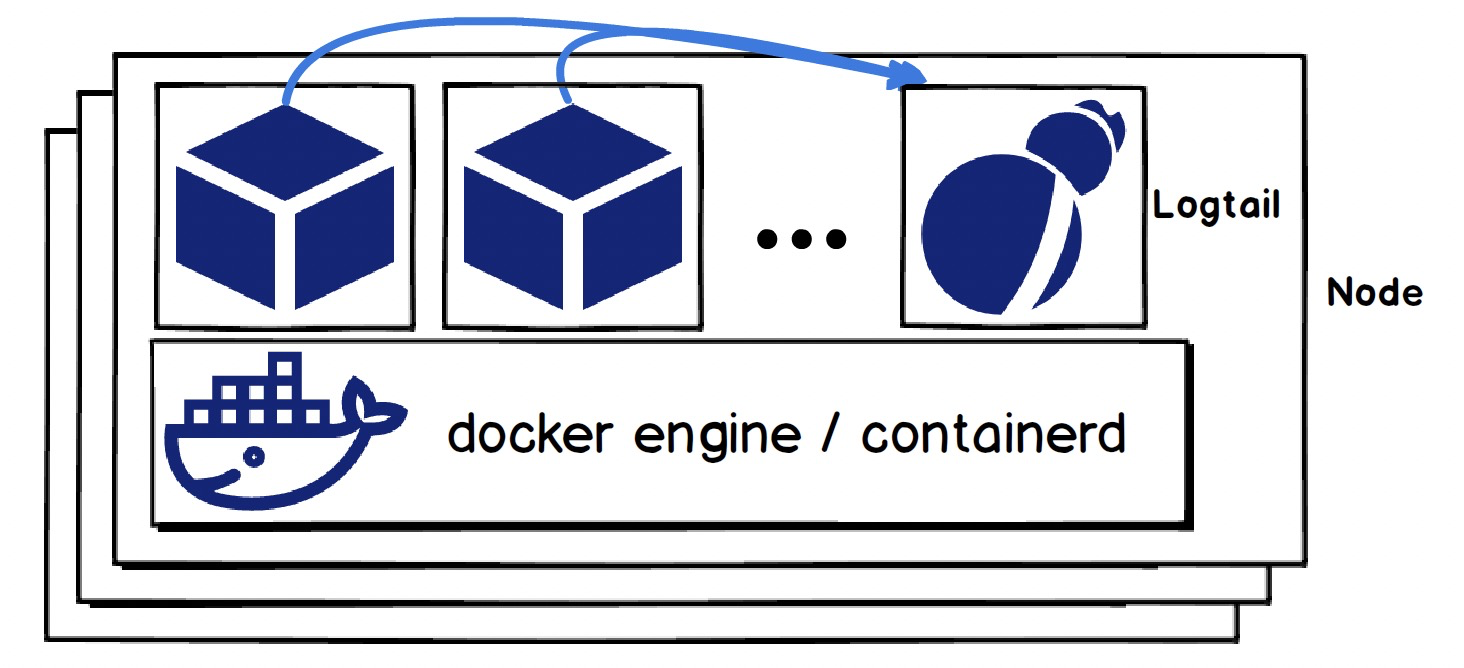
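Because a DaemonSet is expected to run one Logtail pod per node, a quick coverage check can be useful. The following is a minimal sketch, assuming the pod list has been exported with `kubectl get pod -n kube-system -o json` and parsed into a dictionary. The function name `nodes_without_logtail` and the sample data are hypothetical and only illustrate the idea.

```python
def nodes_without_logtail(pod_list, node_names):
    """Return the nodes that have no Running pod whose name starts with logtail-ds."""
    covered = set()
    for pod in pod_list.get("items", []):
        name = pod["metadata"]["name"]
        node = pod["spec"].get("nodeName")
        phase = pod.get("status", {}).get("phase")
        if name.startswith("logtail-ds") and phase == "Running" and node:
            covered.add(node)
    return [node for node in node_names if node not in covered]


# Hand-written sample in the shape of `kubectl get pod -n kube-system -o json`.
sample_pod_list = {
    "items": [
        {"metadata": {"name": "logtail-ds-abcde"},
         "spec": {"nodeName": "node-a"},
         "status": {"phase": "Running"}},
        {"metadata": {"name": "logtail-ds-fghij"},
         "spec": {"nodeName": "node-b"},
         "status": {"phase": "Pending"}},
        {"metadata": {"name": "coredns-12345"},
         "spec": {"nodeName": "node-c"},
         "status": {"phase": "Running"}},
    ]
}

print(nodes_without_logtail(sample_pod_list, ["node-a", "node-b", "node-c"]))
```

In this sample, only node-a has a running Logtail pod, so the other two nodes are reported.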

Logtail can collect data from other pods or containers only if two prerequisites are met: it can access the container runtime on the host, and it can access the data of other containers.

After these two prerequisites are met, Logtail loads the collection configuration from the SLS server and starts to work. For container log collection, the work that Logtail does after it starts is divided into two parts:

1. Discover containers whose logs will be collected. This mainly includes:

1) Obtain all containers and their configuration information from the container runtime (Docker Engine or containerd), such as the container name, ID, mount point, environment variables, and labels.

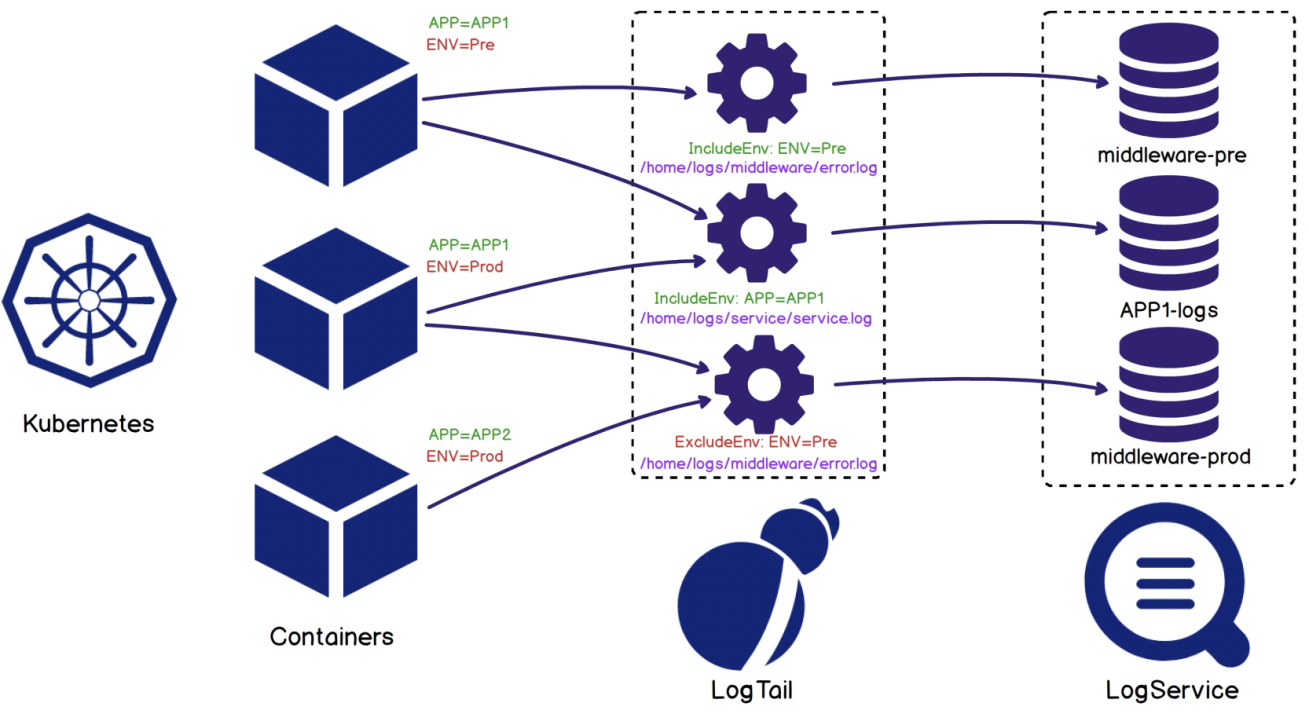

2) Locate the containers whose logs will be collected based on IncludeEnv, ExcludeEnv, IncludeLabel, and ExcludeLabel in the collection configuration. Narrowing collection to these targets avoids the wasted resources and the difficulty of splitting data that come from collecting all container logs. As shown in the following figure, configuration 1 only collects from containers whose ENV value is Pre, configuration 2 only collects from containers whose APP is APP1, and configuration 3 only collects from containers whose ENV value is not Pre. The three configurations send different data to different Logstores.

2. Collect the data of containers. This includes:

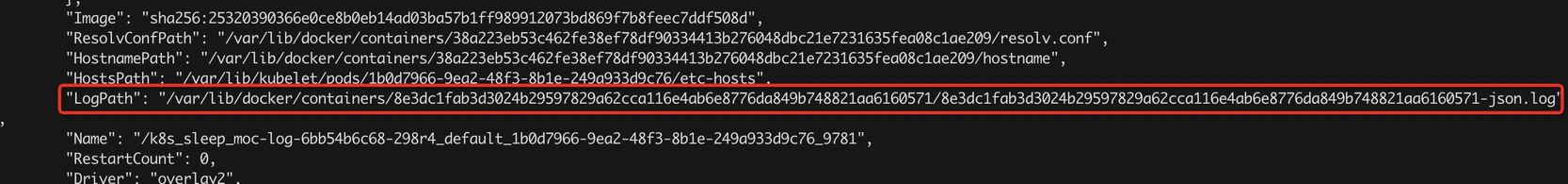

1) Determine where the data to collect is located, including the location of stdout and of files. This information is in the configuration of the container. For example, the following figure identifies the LogPath of the stdout and the storage path of the container file.

2) Collect data from the corresponding location. Stdout is an exception: the stdout files written by the container runtime must be parsed to recover the user's actual stdout.

3) Parse the original logs according to the configured parsing rules. The parsing rules include matching the beginning of a log line with a regular expression, field extraction (such as regular expression, delimiter, JSON, and Anchor), filtering/discarding, and desensitization.

4) Upload data to SLS.
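The two phases above can be sketched in a few lines. This is a simplified illustration and not Logtail's actual implementation. It assumes exact-match rules, that an empty rule value only requires the key to exist, that entries within one rule set are alternatives, and that every configured Include rule set must pass. The stdout decoder assumes the Docker json-file logging driver, which writes one JSON object per line with `log`, `stream`, and `time` fields. Other runtimes use a different on-disk format. The function names are hypothetical.

```python
import json


def matches_any(rules, actual):
    """True if at least one rule matches; an empty rule value only needs the key."""
    return any(
        key in actual and (value == "" or actual[key] == value)
        for key, value in rules.items()
    )


def should_collect(container, config):
    """Decide whether one collection configuration targets this container."""
    env, labels = container["env"], container["labels"]
    includes = ((config.get("IncludeEnv"), env), (config.get("IncludeLabel"), labels))
    excludes = ((config.get("ExcludeEnv"), env), (config.get("ExcludeLabel"), labels))
    for rules, actual in includes:
        # A configured include rule set must match; an absent one is ignored.
        if rules and not matches_any(rules, actual):
            return False
    for rules, actual in excludes:
        # Any matching exclude rule removes the container from the targets.
        if rules and matches_any(rules, actual):
            return False
    return True


def decode_stdout_line(raw):
    """Recover (stream, text) from one line of a Docker json-file stdout log."""
    record = json.loads(raw)
    return record["stream"], record["log"].rstrip("\n")


# The three configurations from the figure, applied to two sample containers.
containers = {
    "pre-app1": {"env": {"ENV": "Pre"}, "labels": {"APP": "APP1"}},
    "prod-app2": {"env": {"ENV": "Prod"}, "labels": {"APP": "APP2"}},
}
configs = {
    "config-1": {"IncludeEnv": {"ENV": "Pre"}},
    "config-2": {"IncludeLabel": {"APP": "APP1"}},
    "config-3": {"ExcludeEnv": {"ENV": "Pre"}},
}
for config_name, config in configs.items():
    targets = [name for name, c in containers.items() if should_collect(c, config)]
    print(config_name, targets)
```

With these sample containers, configuration 1 and configuration 2 both select only pre-app1, and configuration 3 selects only prod-app2.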

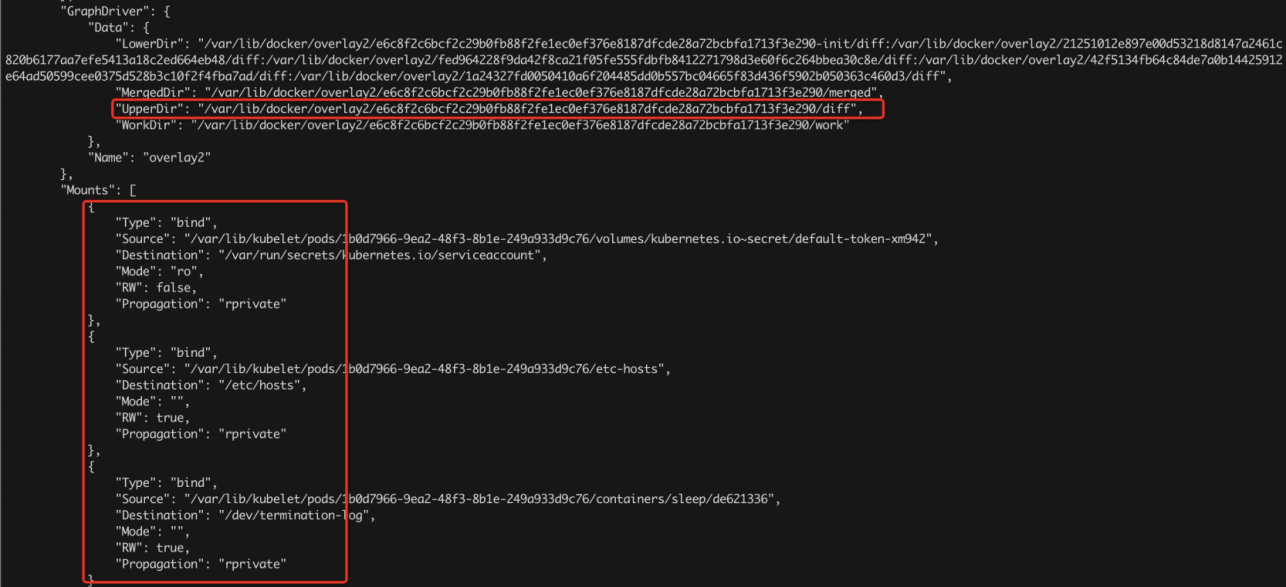

Multiple containers can run in one pod in Kubernetes. These containers share a namespace. The core working container is called the main container, and the other containers are Sidecar containers. A Sidecar container plays an auxiliary role: functions such as synchronizing files, collecting or monitoring logs, and cleaning files are realized through shared volumes. Logtail Sidecar log collection uses the same principle. In Sidecar mode, a Logtail Sidecar container runs alongside the main business container, and Logtail and the main container share the log volumes.

For best practices on the Sidecar mode, see "Use the Log Service console to collect container text logs in Sidecar mode" and "Kubernetes File Collection Practices: Sidecar + hostPath Volumes".
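To make the shared-volume idea concrete, here is a minimal sketch that builds a pod manifest in which the main container and a Logtail Sidecar container mount the same volume. The image names, the pod name, the volume name, and the log directory are placeholders. The environment variables and other settings that a real Logtail Sidecar requires are deliberately omitted, so treat this as an illustration of the volume sharing only.

```python
import json


def sidecar_pod(app_image, logtail_image, log_dir):
    """Build a pod manifest where both containers mount one shared log volume."""
    volume_name = "app-logs"  # hypothetical volume name

    def mount():
        # Both containers see the same directory through the same volume.
        return {"name": volume_name, "mountPath": log_dir}

    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "app-with-logtail"},
        "spec": {
            "containers": [
                # Main container: writes its log files into log_dir.
                {"name": "app", "image": app_image, "volumeMounts": [mount()]},
                # Sidecar container: reads the same files from log_dir.
                {"name": "logtail", "image": logtail_image, "volumeMounts": [mount()]},
            ],
            # emptyDir lives as long as the pod and is visible to all its containers.
            "volumes": [{"name": volume_name, "emptyDir": {}}],
        },
    }


manifest = sidecar_pod("example.com/app:1.0", "example.com/logtail:1.0", "/var/log/app")
print(json.dumps(manifest, indent=2))
```

Kubernetes accepts manifests in JSON as well as YAML, so the printed output has the same structure that a YAML pod definition would have.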

Logtail, the log collection agent of SLS, supports CRD, environment variable, console, and API configuration.

The applicable scenarios, advantages, and disadvantages of each configuration method are listed in the table below:

| | CRD Configuration | Environment Variable Configuration | Console Configuration | API Configuration |
| --- | --- | --- | --- | --- |
| Applicable Scenario | CICD automated log collection configuration; complex log collection configuration and management | Low requirements for customized log collection; simple applications with few types of logs and low complexity (we do not recommend this configuration method) | Manual management of log collection configurations | Advanced customization requirements; users with high development capabilities |
| Configuration Difficulty | Moderate. Users only need to understand the configuration format of SLS. | Low. Only environment variables need to be configured. | Low. The console guides users through the process, and the configuration is simple. | High. It is necessary to use the SDK of SLS and understand the Logtail configuration format of SLS. |
| Collection Configuration Customization | High. It supports all configuration parameters of SLS. | Low. Only the file address configuration is supported, and other parsing configurations are not supported. | High. It supports all configuration parameters of SLS. | High. It supports all configuration parameters of SLS. |
| O&M | Relatively high. It manages operation and maintenance through the CRD of Kubernetes. | Low. It only supports creating configurations and does not support modifying or deleting configurations. | Moderate. Manual management is required. | High. Users can customize the management mode for the service scenario based on the SLS interface. |
| Ability to Integrate with CICD | High. CRD is essentially an interface for Kubernetes, so it supports all Kubernetes CICD automation processes. | High. Environment variables are configured on pods, and seamless integration is supported. | Low. Manual processing is required. | High. It works for scenarios with self-developed CICD. |
| Usage Notes | Use CRDs to collect container logs in DaemonSet mode; Use CRDs to collect container text logs in Sidecar mode | Collect log data from containers by using Log Service | Use the Log Service console to collect container stdout and stderr in DaemonSet mode; Use the Log Service console to collect container text logs in Sidecar mode | API operations relevant to Logtail configuration files, Logtail configurations, and Overview of Log Service SDKs |

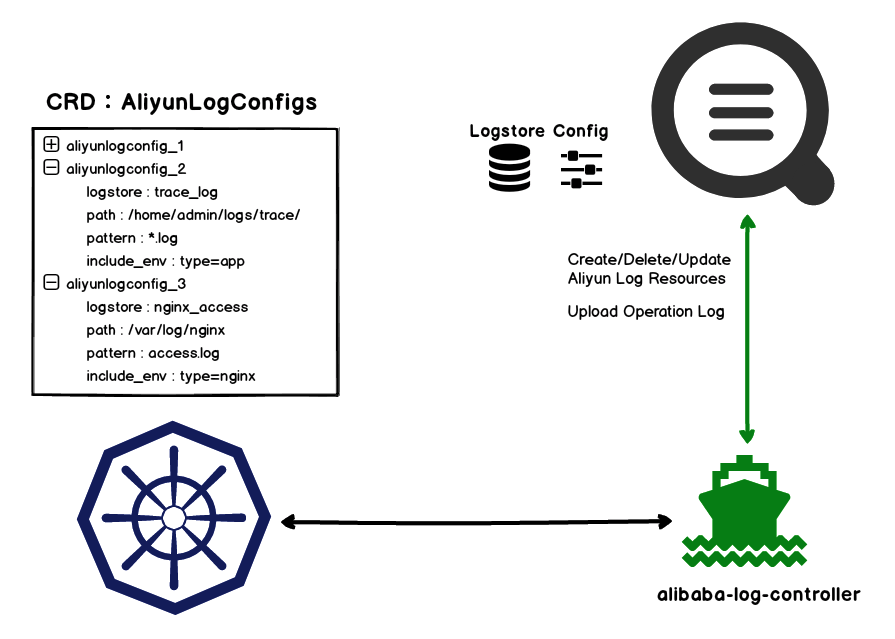


As shown in the preceding figure, Log Service adds a CustomResourceDefinition (CRD) extension named AliyunLogConfig to Kubernetes. A controller named alibaba-log-controller monitors AliyunLogConfig events and automatically creates Logtail collection configurations.

When users create, delete, or update AliyunLogConfig resources, the alibaba-log-controller detects the changes and creates, deletes, or updates the corresponding collection configurations in Log Service. In this way, each AliyunLogConfig resource in Kubernetes stays associated with a collection configuration in Log Service.
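The event-driven association described above can be sketched as follows. This is a minimal illustration, not the controller's real code: `FakeLogService` is a hypothetical in-memory stand-in for the Log Service API, the resource layout (`metadata.name` plus an opaque `spec`) is simplified, and the event names follow the Kubernetes watch event types ADDED, MODIFIED, and DELETED.

```python
class FakeLogService:
    """Hypothetical in-memory stand-in for collection configurations in Log Service."""

    def __init__(self):
        self.configs = {}

    def put_config(self, name, detail):
        # Create the configuration, or overwrite it if it already exists.
        self.configs[name] = detail

    def delete_config(self, name):
        # Deleting a missing configuration is treated as a no-op.
        self.configs.pop(name, None)


def handle_event(event_type, resource, service):
    """Mirror one AliyunLogConfig change into the log service."""
    name = resource["metadata"]["name"]
    if event_type in ("ADDED", "MODIFIED"):
        service.put_config(name, resource["spec"])
    elif event_type == "DELETED":
        service.delete_config(name)
    else:
        raise ValueError("unsupported event type: " + event_type)


service = FakeLogService()
resource = {"metadata": {"name": "stdout-example"}, "spec": {"logstore": "k8s-stdout"}}

handle_event("ADDED", resource, service)
resource["spec"] = {"logstore": "k8s-stdout-v2"}
handle_event("MODIFIED", resource, service)
print(service.configs)

handle_event("DELETED", resource, service)
print(service.configs)
```

Treating create and update as the same "put" operation keeps the handler idempotent, which matters when the same event is delivered more than once.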

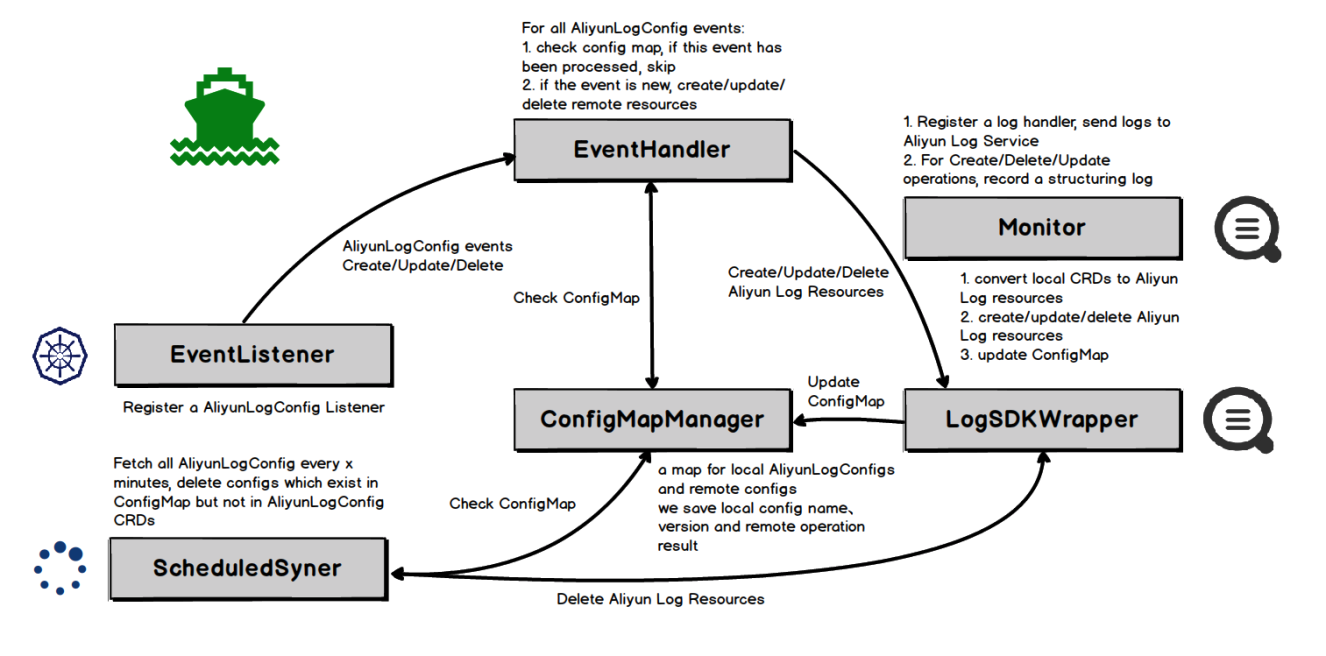

Internal Implementation of alibaba-log-controller

The alibaba-log-controller consists of six modules. The functions and dependencies of each module are shown in the preceding figure:

EventListener: It monitors AliyunLogConfig CRD resources. This EventListener is a listener in a broad sense.

EventHandler: It handles the Create, Update, and Delete events. It serves as the core module of the alibaba-log-controller.

ConfigMapManager: It relies on the ConfigMap mechanism of Kubernetes to implement checkpoint management for the controller.

LogSDKWrapper: It is a secondary encapsulation of the Alibaba Cloud LOG Go SDK.

ScheduledSyner: It is a background module that synchronizes regularly so that configuration changes and events missed during process or node failures are still applied. This ensures the eventual consistency of configuration management.

Monitor: In addition to outputting local running logs to stdout, the alibaba-log-controller sends its logs directly to Log Service for remote troubleshooting.
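The periodic synchronization performed by ScheduledSyner can be thought of as comparing the desired state with the actual state and repairing the difference. The sketch below is our own simplified illustration of that idea, not the module's real logic. It assumes that `desired` holds the configurations derived from AliyunLogConfig resources, that `actual` holds only controller-managed configurations in Log Service, and that both are plain dictionaries keyed by configuration name. The function name `plan_sync` is hypothetical.

```python
def plan_sync(desired, actual):
    """Return (to_create, to_update, to_delete) as sorted lists of config names."""
    desired_names = set(desired)
    actual_names = set(actual)
    # Present in the cluster but missing in the log service: a create was missed.
    to_create = sorted(desired_names - actual_names)
    # Present on both sides with different content: an update was missed.
    to_update = sorted(
        name for name in desired_names & actual_names
        if desired[name] != actual[name]
    )
    # Present only in the log service: a delete was missed.
    to_delete = sorted(actual_names - desired_names)
    return to_create, to_update, to_delete


desired = {
    "nginx-access": {"logstore": "nginx"},
    "app-stdout": {"logstore": "app-v2"},
}
actual = {
    "app-stdout": {"logstore": "app-v1"},
    "old-config": {"logstore": "legacy"},
}
print(plan_sync(desired, actual))
```

Running such a comparison on a timer means a missed event only delays a change until the next run, which is how eventual consistency is reached.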

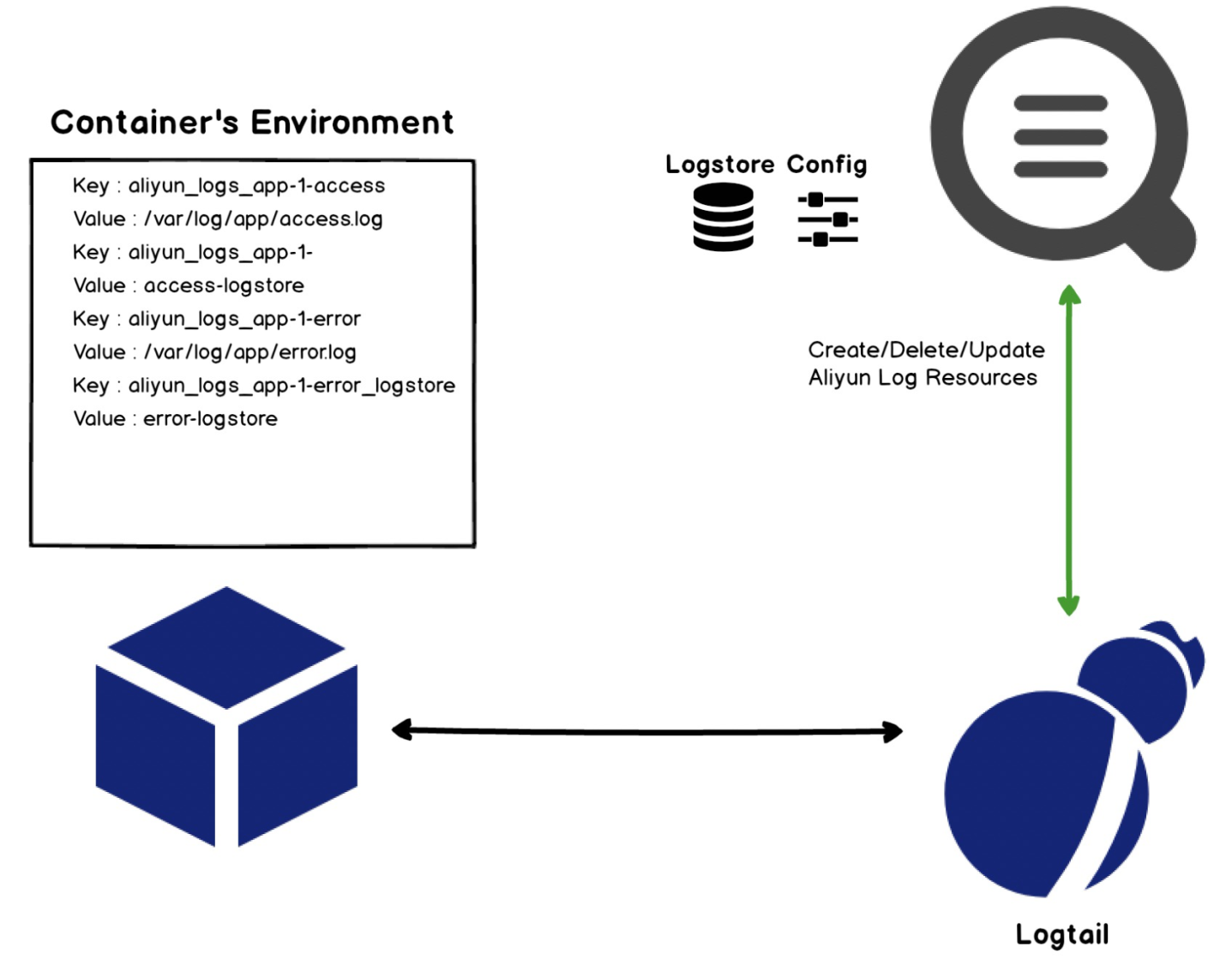

The environment variable configuration is relatively easy. When configuring a pod, users only need to add environment variables that start with the special prefix aliyun_logs to complete the configuration definition and data collection. This configuration is implemented by Logtail: it looks for container environment variables that start with aliyun_logs, maps them to the Logtail collection configuration of SLS, and invokes the SLS interface to create the collection configuration.

The Kubernetes log collection solution can be implemented in various ways with different complexity and effects. Generally, we need to select a collection method and a configuration method. Here, we recommend using the following methods:

Collection Methods

Configuration Methods
