# coding=utf-8

import os, time, json, requests, traceback, oss2, fc2
from requests.exceptions import *
from fc2.fc_exceptions import *
from oss2.models import PartInfo
from oss2.exceptions import *
from multiprocessing import Pool
from contextlib import closing

MAX_PROCCESSES = 20 # Number of worker processes in each subtask
BLOCK_SIZE = 6 * 1024 * 1024 # Size of each uploaded part, in bytes (6 MiB)
BLOCK_NUM_INTERNAL = 18 # Default number of blocks per subtask when the OSS endpoint is internal
BLOCK_NUM = 10 # Default number of blocks per subtask otherwise
MAX_SUBTASKS = 49 # Number of worker processes used to invoke subtasks
CHUNK_SIZE = 8 * 1024 # Size of each chunk read from the HTTP response, in bytes (8 KiB)
SLEEP_TIME = 0.1 # Initial wait before a retry, in seconds (doubled after every retry)
MAX_RETRY_TIME = 10 # Maximum number of retries

def retry(func):
    """
    Call func and return its result, retrying with exponential backoff.
    Network errors, OSS 5xx errors, and FC 5xx/429 errors are retried;
    any other OSS or FC error is raised immediately.
    :param func: (required, callable) a function taking no arguments.
    :return: The result of func.
    """
    wait_time = SLEEP_TIME
    retry_cnt = 1
    while True:
        if retry_cnt > MAX_RETRY_TIME:
            # Final attempt: let any exception propagate to the caller.
            return func()
        try:
            return func()
        except (ConnectionError, SSLError, ConnectTimeout, Timeout) as e:
            print(traceback.format_exc())
        except (OssError) as e:
            if 500 <= e.status < 600:
                print(traceback.format_exc())
            else:
                raise Exception(e)
        except (FcError) as e:
            if (500 <= e.status_code < 600) or (e.status_code == 429):
                print(traceback.format_exc())
            else:
                raise Exception(e)
        print('Retry %d times...' % retry_cnt)
        time.sleep(wait_time)
        wait_time *= 2
        retry_cnt += 1

def get_info(url):
    """
    Get the CRC64 and total length of the file.
    :param url: (required, string) the url address of the file.
    :return: CRC64, length
    """
    with retry(lambda : requests.get(url, {}, stream = True)) as r:
        return r.headers['x-oss-hash-crc64ecma'], int(r.headers['content-length'])

class Response(object):
    """
    The response class to support reading by chunks.
    """
    def __init__(self, response):
        self.response = response
        self.status = response.status_code
        self.headers = response.headers

    def read(self, amt = None):
        if amt is None:
            content = b''
            for chunk in self.response.iter_content(CHUNK_SIZE):
                content += chunk
            return content
        else:
            try:
                return next(self.response.iter_content(amt))
            except StopIteration:
                return b''

    def __iter__(self):
        return self.response.iter_content(CHUNK_SIZE)

def migrate_part(args):
    """
    Download a part from url and then upload it to OSS.
    :param args: (bucket, object_name, upload_id, part_number, url, st, en)
        :bucket: (required, Bucket) the target OSS bucket.
        :object_name: (required, string) the target object name.
        :upload_id: (required, string) the upload_id of this upload task.
        :part_number: (required, integer) the part_number of this part.
        :url: (required, string) the url address of the file.
        :st, en: (required, integer) the byte range of this part, denoting [st, en].
    :return: (part_number, etag)
        :part_number: (integer) the part_number of this part.
        :etag: (string) the etag of the upload_part result.
    """
    bucket, object_name, upload_id, part_number, url, st, en = args
    try:
        headers = {'Range' : 'bytes=%d-%d' % (st, en)}

        def transfer():
            # Open a fresh download stream on every attempt: a stream that a failed
            # upload has partly consumed cannot be replayed from the beginning.
            resp = Response(requests.get(url, headers = headers, stream = True))
            return bucket.upload_part(object_name, upload_id, part_number, resp)

        result = retry(transfer)
        return (part_number, result.etag)
    except Exception as e:
        print(traceback.format_exc())
        raise Exception(e)

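def do_subtask(event, context):
    """
    Download a range of the file from url and then upload it to OSS.
    The subtask that starts at part_number 1 uploads its whole range as a single part
    in the current process; every other subtask splits its range into parts of
    BLOCK_SIZE bytes and uploads them with a pool of worker processes.
    :param event: (required, dict) the parsed JSON event.
    :param context: (required, FCContext) the context of handler.
    :return: parts
        :parts: ([(integer, string)]) the part_number and etag of each uploaded part.
    """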

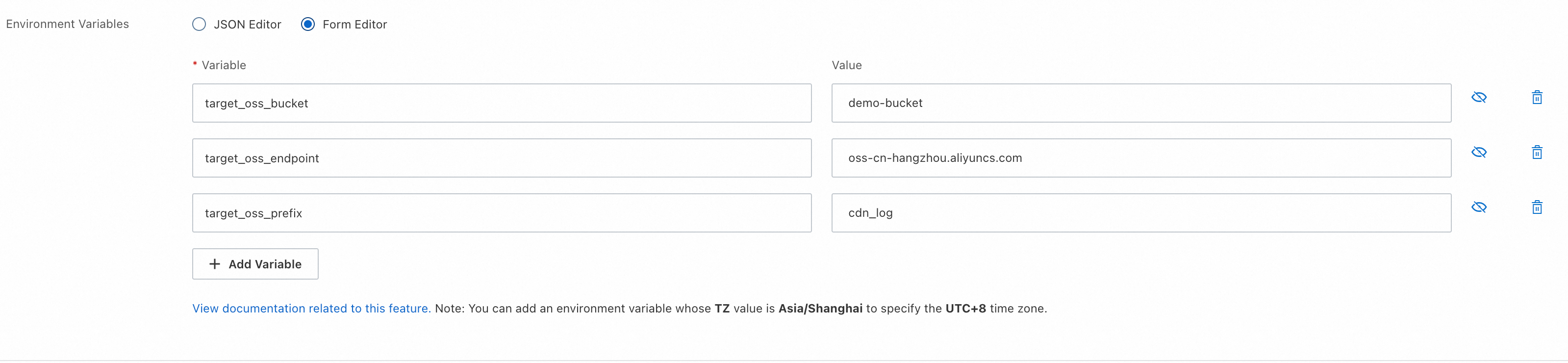

    oss_endpoint = os.environ.get('target_oss_endpoint')
    oss_bucket_name = os.environ.get('target_oss_bucket')
    access_key_id = context.credentials.access_key_id
    access_key_secret = context.credentials.access_key_secret
    security_token = context.credentials.security_token
    auth = oss2.StsAuth(access_key_id, access_key_secret, security_token)
    bucket = oss2.Bucket(auth, oss_endpoint, oss_bucket_name)
    object_name = event['object_name']
    upload_id = event['upload_id']
    part_number = event['part_number']
    url = event['url']
    st = event['st']
    en = event['en']
    if part_number == 1:
        return [migrate_part((bucket, object_name, upload_id, part_number, url, st, en))]
    pool = Pool(MAX_PROCCESSES)
    tasks = []
    while st <= en:
        nxt = min(en, st + BLOCK_SIZE - 1)
        tasks.append((bucket, object_name, upload_id, part_number, url, st, nxt))
        part_number += 1
        st = nxt + 1
    parts = pool.map(migrate_part, tasks)
    pool.close()
    pool.join()
    return parts

def invoke_subtask(args):
    """
    Invoke the same function synchronously to start a subtask.
    The subtask that starts at part_number 1 runs in the current process instead.
    :param args: (object_name, upload_id, part_number, url, st, en, context)
        :object_name: (required, string) the target object name.
        :upload_id: (required, string) the upload_id of this upload task.
        :part_number: (required, integer) the part_number of the first part in this subtask.
        :url: (required, string) the url address of the file.
        :st, en: (required, integer) the byte range of this subtask, denoting [st, en].
        :context: (required, FCContext) the context of handler.
    :return: the JSON-encoded result of the invoked function.
    """
    object_name, upload_id, part_number, url, st, en, context = args
    account_id = context.account_id
    access_key_id = context.credentials.access_key_id
    access_key_secret = context.credentials.access_key_secret
    security_token = context.credentials.security_token
    region = context.region
    service_name = context.service.name
    function_name = context.function.name
    endpoint = 'http://%s.%s-internal.fc.aliyuncs.com' % (account_id, region)
    client = fc2.Client(
        endpoint = endpoint,
        accessKeyID = access_key_id,
        accessKeySecret = access_key_secret,
        securityToken = security_token
    )
    payload = {
        'object_name' : object_name,
        'upload_id' : upload_id,
        'part_number' : part_number,
        'url' : url,
        'st' : st,
        'en' : en,
        'is_children' : True
    }
    if part_number == 1:
        return json.dumps(do_subtask(payload, context))
    ret = retry(lambda : client.invoke_function(service_name, function_name, payload = json.dumps(payload)))
    return ret.data

def divide(n, m):
    """
    Calculate ceil(n / m) without floating point arithmetic.
    :param n, m: (integer)
    :return: (integer) ceil(n / m).
    """
    ret = n // m
    if n % m > 0:
        ret += 1
    return ret

def migrate_file(url, oss_object_name, context):
    """
    Download the file from url and then upload it to OSS.
    The file is split into byte ranges, one per subtask, and each subtask is handed
    to invoke_subtask through a pool of worker processes.
    :param url: (required, string) the url address of the file.
    :param oss_object_name: (required, string) the target object name.
    :param context: (required, FCContext) the context of handler.
    :return: actual_crc64, expect_crc64
        :actual_crc64: (string) the CRC64 of the uploaded object.
        :expect_crc64: (string) the CRC64 of the source file.
    """
    crc64, total_size = get_info(url)
    oss_endpoint = os.environ.get('target_oss_endpoint')
    oss_bucket_name = os.environ.get('target_oss_bucket')
    access_key_id = context.credentials.access_key_id
    access_key_secret = context.credentials.access_key_secret
    security_token = context.credentials.security_token
    auth = oss2.StsAuth(access_key_id, access_key_secret, security_token)
    bucket = oss2.Bucket(auth, oss_endpoint, oss_bucket_name)
    upload_id = retry(lambda : bucket.init_multipart_upload(oss_object_name)).upload_id
    pool = Pool(MAX_SUBTASKS)
    st = 0
    part_number = 1
    tasks = []
    block_num = BLOCK_NUM_INTERNAL if '-internal.aliyuncs.com' in oss_endpoint else BLOCK_NUM
    # Shrink the subtask size for small files so the work spreads over all subtasks.
    block_num = min(block_num, divide(divide(total_size, BLOCK_SIZE), MAX_SUBTASKS + 1))
    while st < total_size:
        en = min(total_size - 1, st + block_num * BLOCK_SIZE - 1)
        tasks.append((oss_object_name, upload_id, part_number, url, st, en, context))
        size = en - st + 1
        cnt = divide(size, BLOCK_SIZE)
        part_number += cnt
        st = en + 1
    subtasks = pool.map(invoke_subtask, tasks)
    pool.close()
    pool.join()
    parts = []
    for it in subtasks:
        for part in json.loads(it):
            parts.append(PartInfo(part[0], part[1]))
    res = retry(lambda : bucket.complete_multipart_upload(oss_object_name, upload_id, parts))
    return str(res.crc), str(crc64)

def get_oss_object_name(url):
    """
    Get the OSS object name: the prefix from the environment variable
    target_oss_prefix followed by the last three path segments of the url.
    :param url: (required, string) the url address of the file; it must contain exactly one '?'.
    :return: (string) the OSS object name.
    """
    prefix = os.environ.get('target_oss_prefix')
    tmps = url.split('?')
    if len(tmps) != 2:
        raise Exception('Invalid url : %s' % url)
    urlObject = tmps[0]
    if urlObject.count('/') < 3:
        raise Exception('Invalid url : %s' % url)
    objectParts = urlObject.split('/')
    objectParts = [prefix] + objectParts[len(objectParts) - 3 : len(objectParts)]
    return '/'.join(objectParts)

def handler(event, context):
    """
    Entry point of the function.
    An event that carries the key 'is_children' is run as a subtask. Any other event
    triggers a full migration of the file it names, repeated with exponential backoff
    until the CRC64 of the uploaded object matches that of the source file.
    :param event: (required, JSON string) the event.
    :param context: (required, FCContext) the context of handler.
    """
    evt = json.loads(event)
    if 'is_children' in evt:
        return json.dumps(do_subtask(evt, context))
    url = evt['events'][0]['eventParameter']['filePath']
    if not (url.startswith('http://') or url.startswith('https://')):
        url = 'https://' + url
    oss_object_name = get_oss_object_name(url)
    st_time = int(time.time())
    wait_time = SLEEP_TIME
    retry_cnt = 1
    while True:
        actual_crc64, expect_crc64 = migrate_file(url, oss_object_name, context)
        if actual_crc64 == expect_crc64:
            break
        print('Migration object CRC64 not matched, expected: %s, actual: %s' % (expect_crc64, actual_crc64))
        if retry_cnt > MAX_RETRY_TIME:
            raise Exception('Maximum retry time exceeded.')
        print('Retry %d times...' % retry_cnt)
        time.sleep(wait_time)
        wait_time *= 2
        retry_cnt += 1
    print('Success! Total time: %d s.' % (int(time.time()) - st_time))