If Logtail fails to collect logs from your standard Docker containers or Kubernetes containers, follow the instructions in this topic to troubleshoot the issue and check the running status of Logtail.

Check for machine group heartbeat errors

Check the heartbeat status of the machine group to determine whether Logtail is installed correctly in your container environment.

Check the heartbeat status of the machine group.

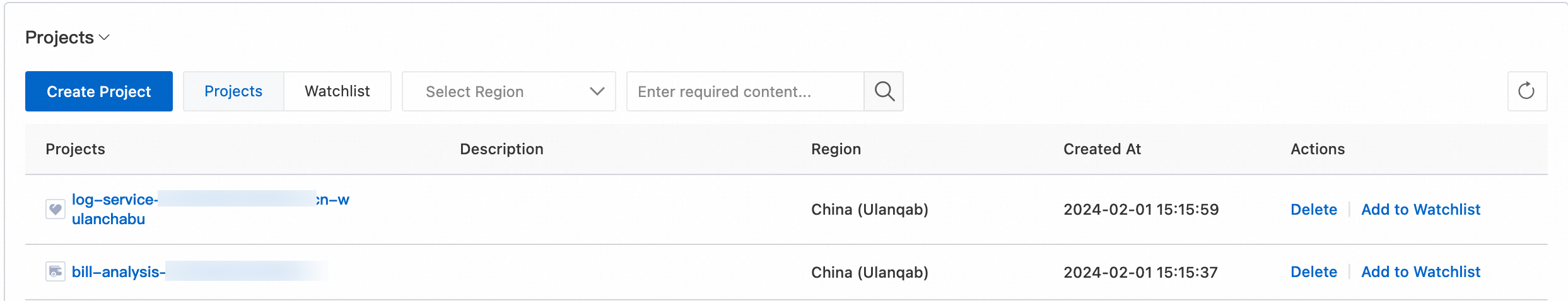

Log on to the Simple Log Service console.

In the Projects section, click the project that you want to manage.

In the navigation pane, choose .

In the list of machine groups, click the destination machine group.

On the Machine Group Configurations page, view the machine group status and record the number of nodes whose heartbeat status is OK.

Check the number of worker nodes in the container cluster.

Run the following command to view the number of worker nodes in the cluster.

kubectl get node | grep -v master

A result similar to the following is returned.

NAME                                 STATUS   ROLES    AGE    VERSION
cn-hangzhou.i-bp17enxc2us3624wexh2   Ready    <none>   238d   v1.10.4
cn-hangzhou.i-bp1ad2b02jtqd1shi2ut   Ready    <none>   220d   v1.10.4
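If you prefer not to count the rows by hand, you can pipe the output of kubectl get node into a small awk filter. The following is a minimal sketch, not part of Logtail: count_worker_nodes is a hypothetical helper name, and it assumes the default column layout shown above and that control-plane nodes carry master or control-plane in the ROLES column.

```shell
# Hypothetical helper: count worker nodes in `kubectl get node` output read from stdin.
# Assumes the default column layout: NAME STATUS ROLES AGE VERSION.
count_worker_nodes() {
  # Skip the header row (NR > 1) and any node whose ROLES column ($3)
  # marks it as a control-plane node, then print how many rows remain.
  awk 'NR > 1 && $3 !~ /master|control-plane/ { n++ } END { print n + 0 }'
}
```

For example, kubectl get node | count_worker_nodes prints 2 for a cluster like the one in the sample output.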

Compare the number of nodes whose heartbeat status is OK with the number of worker nodes in the container cluster. Troubleshoot the issue based on the comparison result.
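The comparison itself is simple enough to script. The sketch below uses a hypothetical function, heartbeat_case, that takes the two numbers you recorded and prints which of the following cases applies; the case names it prints are illustrative labels, not values reported by Simple Log Service.

```shell
# Hypothetical helper: map the two counts to the troubleshooting cases in this topic.
#   $1 = number of nodes whose heartbeat status is OK in the machine group
#   $2 = number of worker nodes in the cluster
heartbeat_case() {
  local ok_nodes=$1 worker_nodes=$2
  if [ "$ok_nodes" -eq 0 ]; then
    echo "all-failed"   # every node in the machine group reports Failed
  elif [ "$ok_nodes" -lt "$worker_nodes" ]; then
    echo "partial"      # fewer OK heartbeats than worker nodes
  else
    echo "ok"           # every worker node has an OK heartbeat
  fi
}
```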

The heartbeat status of all nodes in the machine group is Failed.

If you collect logs from standard Docker containers, see Collect logs from Docker containers (stdout and files) and check whether ${your_region_name}, ${your_aliyun_user_id}, and ${your_machine_group_user_defined_id} are correctly specified.

If you use a self-managed Kubernetes cluster, see Collect text logs from Kubernetes containers using a sidecar and check whether {regionId}, {aliuid}, {access-key-id}, and {access-key-secret} are correctly specified. If they are incorrectly specified, run the helm del --purge alibaba-log-controller command to delete the installation package and then reinstall it.

The number of nodes whose heartbeat status is OK is less than the number of worker nodes in the cluster.

Check whether a DaemonSet is manually deployed using a YAML file.

Run the following command. If a result is returned, it means that a DaemonSet was manually deployed using a YAML file.

kubectl get po -n kube-system -l k8s-app=logtail

If a DaemonSet was manually deployed, replace parameters such as ${your_region_name}, ${your_aliyun_user_id}, and ${your_machine_group_name} in the YAML file with your actual values.

Run the following command to apply the updated file.

kubectl apply -f ./logtail-daemonset.yaml
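Before you run kubectl apply, you may want to confirm that no placeholder was left in the file. The following sketch assumes that the placeholders follow the ${your_...} pattern used in this topic; check_placeholders is a hypothetical helper, and grep -n prints any offending lines together with their line numbers.

```shell
# Hypothetical helper: report placeholders such as ${your_region_name} that
# were not replaced in a YAML file.
# Returns 0 if none are found, 1 if any are found, 2 if the file is unreadable.
check_placeholders() {
  local file=$1
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
  # The BRE pattern matches a literal "${your_" prefix followed by a name and "}".
  if grep -n '\${your_[A-Za-z_]*}' "$file"; then
    echo "unreplaced placeholders found in $file" >&2
    return 1
  fi
  return 0
}
```

For example: check_placeholders ./logtail-daemonset.yaml && kubectl apply -f ./logtail-daemonset.yaml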

Check for container log collection errors

If you cannot find logs on the Preview page or the Logstore query page in the Simple Log Service console, it indicates that Simple Log Service has not collected your container logs. In this case, check the container status and then perform the following checks.

Note the following when you collect logs from container files.

Logtail collects only incremental logs. If a log file is not updated after a Logtail configuration is applied, Logtail does not collect logs from the file. For more information, see Read log files.

Logtail can collect logs only from files that use the default container storage or are mounted to a local path. Other storage methods are not supported.

After logs are collected, you must create an index to query and analyze the logs in the Logstore. For more information, see Create an index.
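Because Logtail collects only incremental logs, one way to verify a new configuration is to write a fresh line to the target file after the configuration is applied and then search for that line in the Logstore once an index exists. The sketch below is a hypothetical helper, append_marker_line, that assumes you can write to the log file and that one extra line does not break your parsing rules; the marker text is arbitrary.

```shell
# Hypothetical helper: append one timestamped marker line to a log file so that
# the file receives new (incremental) content after the Logtail configuration
# is applied. Prints the marker so that you can search for it in the Logstore.
append_marker_line() {
  local file=$1
  local marker
  marker="logtail-collection-test $(date '+%Y-%m-%d %H:%M:%S')"
  printf '%s\n' "$marker" >> "$file" || return 1
  printf '%s\n' "$marker"
}
```

Run it inside the application container or against the host path that the file is mounted to, for example append_marker_line /var/log/app/app.log, where the path is a placeholder for your own log file.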

Check for machine group heartbeat errors. For more information, see Check for machine group heartbeat errors.

Check whether the Logtail configuration is correct.

Check whether the IncludeLabel, ExcludeLabel, IncludeEnv, ExcludeEnv, and other settings in the Logtail configuration meet your requirements.

Note: The label is a container label, that is, a label in the docker inspect output, not a Kubernetes label.

You can temporarily remove the IncludeLabel, ExcludeLabel, IncludeEnv, and ExcludeEnv settings to check whether logs can be collected. If logs are collected after these settings are removed, the values of these settings are incorrect.
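To check which container labels IncludeLabel and ExcludeLabel are evaluated against, you can print the labels from the docker inspect output on the node that runs the container. This is a minimal sketch that assumes the node uses Docker as its container runtime; list_container_labels is a hypothetical helper, and the Go template passed to --format prints each label as key=value.

```shell
# Hypothetical helper: print the container labels (from `docker inspect`) of a
# container as key=value lines. These are the labels that IncludeLabel and
# ExcludeLabel refer to, not Kubernetes pod labels.
list_container_labels() {
  local container=$1
  docker inspect --format \
    '{{range $k, $v := .Config.Labels}}{{$k}}={{$v}}{{"\n"}}{{end}}' \
    "$container"
}
```

For example, run list_container_labels <container-id>, replacing <container-id> with the ID of your application container from docker ps.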

Other O&M operations

Log on to a Logtail container

Standard Docker

On the host, run the following command to find the Logtail container.

docker ps | grep logtail

A result similar to the following is returned.

223****6e registry.cn-hangzhou.aliyuncs.com/log-service/logtail "/usr/local/ilogta..." 8 days ago Up 8 days logtail-iba

Run the following command to start a bash shell in the Logtail container.

docker exec -it 223****6e bash

In the command, 223****6e is the container ID. Replace it with the actual ID.

Kubernetes

Run the following command to find the Logtail pod.

kubectl get po -n kube-system | grep logtail

A result similar to the following is returned.

logtail-ds-****d 1/1 Running 0 8d
logtail-ds-****8 1/1 Running 0 8d

Run the following command to log on to the pod.

kubectl exec -it -n kube-system logtail-ds-****d -- bash

In the command, logtail-ds-****d is the pod name. Replace it with the actual name.
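The two steps can also be combined. The following sketch extracts the first Logtail pod name from the kubectl get po output; first_logtail_pod is a hypothetical helper, and it assumes that pod names start with logtail-ds- or, for LoongCollector installations, loongcollector-ds- (the DaemonSet names that appear elsewhere in this topic).

```shell
# Hypothetical helper: print the name of the first Logtail pod found in
# `kubectl get po -n kube-system` output read from stdin.
# Assumes pod names start with logtail-ds- or loongcollector-ds-.
first_logtail_pod() {
  awk '$1 ~ /^(logtail-ds|loongcollector-ds)-/ { print $1; exit }'
}

# Example usage:
#   pod=$(kubectl get po -n kube-system | first_logtail_pod)
#   kubectl exec -it -n kube-system "$pod" -- bash
```

Because the DaemonSet runs one pod on each node, the first pod is not necessarily on the node you are troubleshooting; kubectl get po -n kube-system -o wide adds a NODE column that you can use to pick the right one.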

View the Logtail running logs

Logtail logs are stored in the /usr/local/ilogtail/ directory of the Logtail container. The log files are named ilogtail.LOG and logtail_plugin.LOG.

Log on to the Logtail container. For more information, see Log on to a Logtail container.

Go to the /usr/local/ilogtail/ directory.

cd /usr/local/ilogtail

View the ilogtail.LOG and logtail_plugin.LOG files.

cat ilogtail.LOG
cat logtail_plugin.LOG
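Because these files can be large, it is often quicker to scan only the most recent entries. This sketch, recent_errors, is a hypothetical helper that assumes the relevant entries contain the word error in some letter case; the 200-line default is arbitrary.

```shell
# Hypothetical helper: print lines containing "error" (any case) from the last
# N lines of a Logtail log file. N defaults to 200.
recent_errors() {
  local file=$1 lines=${2:-200}
  # grep exits with status 1 when nothing matches; treat that as success.
  tail -n "$lines" "$file" | grep -i 'error' || true
}
```

Inside the container you would run, for example, recent_errors ilogtail.LOG. From outside the container, an equivalent pipeline is kubectl exec -n kube-system logtail-ds-****d -- tail -n 200 /usr/local/ilogtail/ilogtail.LOG | grep -i error.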

Description of the standard output (stdout) of a Logtail container

The standard output of a Logtail container does not provide meaningful information for troubleshooting. You can ignore the following content.

start umount useless mount points, /shm$|/merged$|/mqueue$

umount: /logtail_host/var/lib/docker/overlay2/3fd0043af174cb0273c3c7869500fbe2bdb95d13b1e110172ef57fe840c82155/merged: must be superuser to unmount

umount: /logtail_host/var/lib/docker/overlay2/d5b10aa19399992755de1f85d25009528daa749c1bf8c16edff44beab6e69718/merged: must be superuser to unmount

umount: /logtail_host/var/lib/docker/overlay2/5c3125daddacedec29df72ad0c52fac800cd56c6e880dc4e8a640b1e16c22dbe/merged: must be superuser to unmount

......

xargs: umount: exited with status 255; aborting

umount done

start logtail

ilogtail is running

logtail status:

ilogtail is running

View the status of Simple Log Service components in a Kubernetes cluster

Run the following command to view the status and information of the Simple Log Service deployment.

kubectl get deploy -n kube-system | grep -E 'alibaba-log-controller|loongcollector-operator'

A result similar to the following is returned:

NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
alibaba-log-controller   1/1     1            1           11d

Run the following command to view the status information of the DaemonSet resource.

kubectl get ds -n kube-system | grep -E 'logtail-ds|loongcollector-ds'

A result similar to the following is returned:

NAME         DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
logtail-ds   2         2         2       2            2           **ux            11d

View the version number, IP address, and startup time of Logtail

On the host, run the following command to view the version number, IP address, and startup time of Logtail. The information is stored in the /usr/local/ilogtail/app_info.json file of the Logtail container.

kubectl exec logtail-ds-****k -n kube-system -- cat /usr/local/ilogtail/app_info.json

A result similar to the following is returned.

{
  "UUID" : "",
  "hostname" : "logtail-****k",
  "instance_id" : "0EB****_172.20.4.2_1517810940",
  "ip" : "172.20.4.2",
  "logtail_version" : "0.16.2",
  "os" : "Linux; 3.10.0-693.2.2.el7.x86_64; #1 SMP Tue Sep 12 22:26:13 UTC 2017; x86_64",
  "update_time" : "2018-02-05 06:09:01"
}
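If you only need one field, such as the version, you can extract it without a JSON tool. This sketch assumes the app_info.json layout shown above and a value that contains no escaped quotes; logtail_version is a hypothetical helper name. If jq is installed, jq -r .logtail_version is a more robust alternative.

```shell
# Hypothetical helper: read app_info.json from stdin and print the value of
# the "logtail_version" field. Uses grep and sed so that jq is not required;
# assumes the value is a plain string without escaped quotes.
logtail_version() {
  grep -o '"logtail_version" *: *"[^"]*"' | sed 's/.*: *"\([^"]*\)"$/\1/'
}
```

For example, kubectl exec logtail-ds-****k -n kube-system -- cat /usr/local/ilogtail/app_info.json | logtail_version prints 0.16.2 for the sample above.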

Handle the accidental deletion of a Logstore created by a CRD

If you delete a Logstore that was automatically created by a Custom Resource Definition (CRD), the collected data cannot be recovered. In addition, the CRD configuration for this Logstore becomes invalid. To prevent log collection errors, use one of the following solutions.

Use another Logstore in the CRD configuration. Do not use the Logstore that was accidentally deleted.

Restart the alibaba-log-controller pod.

You can run the following command to find the pod.

kubectl get po -n kube-system | grep alibaba-log-controller
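The kubectl get deploy output earlier in this topic shows that alibaba-log-controller runs as a Deployment, so deleting its pod causes Kubernetes to create a replacement, which restarts the controller. The sketch below wraps the lookup and the deletion; controller_pod and restart_controller are hypothetical helpers, and the pod-name prefix is an assumption based on the output above.

```shell
# Hypothetical helper: print the alibaba-log-controller pod name from
# `kubectl get po -n kube-system` output read from stdin.
controller_pod() {
  awk '$1 ~ /^alibaba-log-controller-/ { print $1; exit }'
}

# Hypothetical helper: delete the controller pod so that its Deployment
# recreates it. Requires kubectl access to the kube-system namespace.
restart_controller() {
  local pod
  pod=$(kubectl get po -n kube-system | controller_pod)
  if [ -z "$pod" ]; then
    echo "alibaba-log-controller pod not found" >&2
    return 1
  fi
  kubectl delete pod -n kube-system "$pod"
}
```

On kubectl 1.15 or later, kubectl rollout restart deployment/alibaba-log-controller -n kube-system achieves a similar result without looking up the pod name.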