This topic describes how to configure Logtail in the Simple Log Service console to collect data from Beats and Logstash.

Prerequisites

Logtail is installed on the server that you use to collect data from Beats and Logstash. Linux servers require Logtail 0.16.9 or later, and Windows servers require Logtail 1.0.0.8 or later. For more information, see Install Logtail on a Linux server or Install Logtail on a Windows server.

Data is collected by using Logstash or Beats.

For more information about how to collect data from Logstash, visit Logstash-Lumberjack-Output.

For more information about how to collect data from Beats, visit Beats-Lumberjack-Output.

The procedure in this topic uses Packetbeat to collect data transmitted on the local network and uses the Logtail Lumberjack plug-in to upload the data to Simple Log Service. Packetbeat sends the collected data through its Logstash output, as shown in the following sample configuration:

    output.logstash:
      hosts: ["127.0.0.1:5044"]

Background information

Logstash and Beats (such as Metricbeat, Packetbeat, Winlogbeat, Auditbeat, Filebeat, and Heartbeat) support the Lumberjack protocol. Logtail can therefore use this protocol to upload data that is collected by Beats and Logstash to Simple Log Service.

You can configure multiple Lumberjack plug-ins, but these plug-ins cannot listen on the same port.
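The port constraint above can be checked before a configuration is saved. The following is a minimal sketch, not part of Logtail itself: it assumes each input is a dictionary whose listen address is stored under a `detail.BindAddress` key in `host:port` form, and that the plug-in type is named `service_lumberjack`. Both names are assumptions that this topic does not state, and `find_port_conflicts` is a hypothetical helper.

```python
from collections import Counter


def find_port_conflicts(inputs):
    """Return the ports claimed by more than one input, sorted ascending."""
    ports = []
    for item in inputs:
        # "BindAddress" is an assumed key name that holds "host:port".
        address = item["detail"]["BindAddress"]
        ports.append(int(address.rsplit(":", 1)[1]))
    counts = Counter(ports)
    return sorted(port for port, n in counts.items() if n > 1)


# Two hypothetical Lumberjack inputs that listen on different ports.
inputs = [
    {"type": "service_lumberjack", "detail": {"BindAddress": "0.0.0.0:5044"}},
    {"type": "service_lumberjack", "detail": {"BindAddress": "0.0.0.0:5045"}},
]
print(find_port_conflicts(inputs))
```

An empty list means no two inputs share a port. Any port that appears in the result must be changed in one of the inputs that use it.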

Lumberjack plug-ins support SSL. Data uploaded to Simple Log Service from Logstash must be encrypted by using SSL.

Procedure

Log on to the Simple Log Service console.

In the Import Data section, select Custom Data Plug-in.

Select the project and Logstore. Then, click Next.

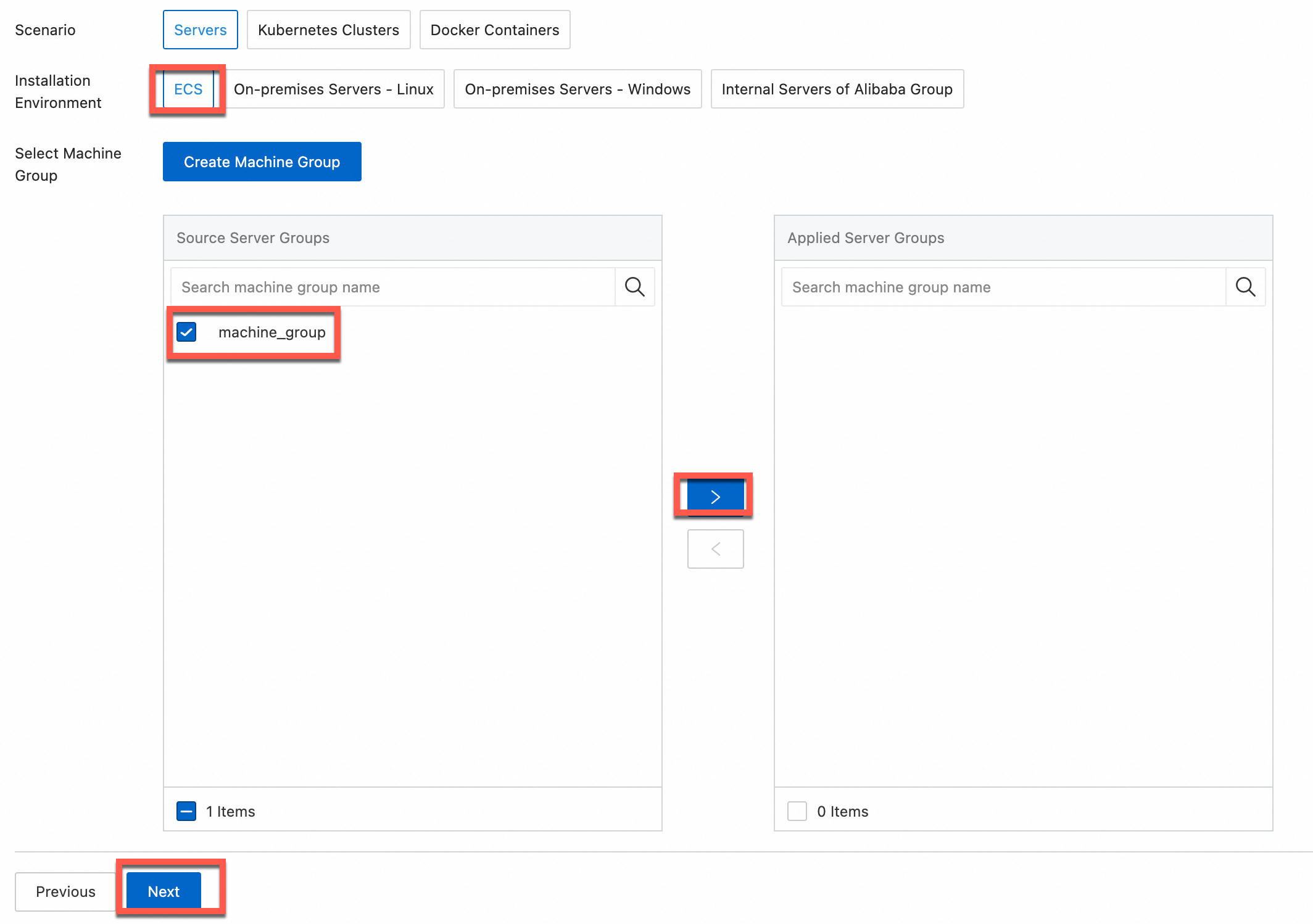

In the Machine Group Configurations step, configure a machine group.

Configure the Scenario and Installation Environment parameters based on your business requirements.

Important: You must configure the Scenario and Installation Environment parameters regardless of whether a machine group is available. The parameter settings affect subsequent configurations.

Make sure that a machine group is displayed in the Applied Server Groups section and click Next.

Machine group available

Select a machine group from the Source Machine Group section.

No machine group available

Click Create Machine Group. In the Create Machine Group panel, configure the parameters. You can set the Machine Group Identifier parameter to IP Address or Custom Identifier. For more information, see Create a custom identifier-based machine group or Create an IP address-based machine group.

Important: If you apply a machine group immediately after you create the machine group, the heartbeat status of the machine group may be FAIL. This issue occurs because the machine group is not connected to Simple Log Service. To resolve this issue, you can click Automatic Retry. If the issue persists, see What do I do if no heartbeat connections are detected on Logtail?

In the Configure Data Source step, set the Configuration Name and Plug-in Configuration parameters, and then click Next.

inputs is required and is used to configure the data source settings for the Logtail configuration.

Important: You can specify only one type of data source in inputs.

processors is optional and is used to configure the data processing settings for the Logtail configuration to parse data. You can specify one or more processing methods.

If your logs cannot be parsed based only on the setting of inputs, you can configure processors in the Plug-in Configuration field to add plug-ins for data processing. For example, you can extract fields, extract log time, mask data, and filter logs. For more information, see Overview of Logtail plug-ins for data processing.
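As an illustration of the shape described above, the following sketch generates a JSON document with a single entry in `inputs` and no `processors`, which could then be pasted into the Plug-in Configuration field. The plug-in type name `service_lumberjack` and the `BindAddress` key are assumptions that this topic does not state, so confirm them against the Logtail plug-in reference before use. `build_lumberjack_config` is a hypothetical helper, and the default address mirrors the `127.0.0.1:5044` target in the Packetbeat sample.

```python
import json


def build_lumberjack_config(bind_address="127.0.0.1:5044"):
    """Build a plug-in configuration with one Lumberjack input as JSON text."""
    config = {
        # inputs is required; only one type of data source is allowed.
        "inputs": [
            {
                "type": "service_lumberjack",  # assumed plug-in type name
                "detail": {"BindAddress": bind_address},  # assumed key name
            }
        ]
        # processors is optional and is omitted in this sketch.
    }
    return json.dumps(config, indent=2)


print(build_lumberjack_config())
```

Generating the JSON from a dictionary keeps the structure valid if more settings, such as processors, are added later.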

Create indexes and preview data. Then, click Next. By default, full-text indexing is enabled in Simple Log Service. You can also manually create field indexes for the collected logs, or click Automatic Index Generation to have Simple Log Service generate field indexes. For more information, see Create indexes.

Important: If you want to query all fields in logs, we recommend that you use full-text indexes. If you want to query only specific fields, we recommend that you use field indexes. This helps reduce index traffic. If you want to analyze fields, you must create field indexes. You must include a SELECT statement in your query statement for analysis.

Click Query Log. You are redirected to the query and analysis page of your Logstore.

You must wait approximately 1 minute for the indexes to take effect. Then, you can view the collected logs on the Raw Logs tab. For more information, see Guide to log query and analysis.

Troubleshooting

If no data is displayed on the preview page or query page after logs are collected by using Logtail, you can troubleshoot the errors based on the instructions that are provided in What do I do if errors occur when I use Logtail to collect logs?
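One quick local check, offered here as a sketch rather than an official diagnostic, is to confirm that something accepts TCP connections on the address that Packetbeat sends to (`127.0.0.1:5044` in the sample configuration). `listener_is_reachable` is a hypothetical helper that uses only the Python standard library. A successful connection shows only that the port is open, not that the Lumberjack plug-in is configured correctly.

```python
import socket


def listener_is_reachable(host="127.0.0.1", port=5044, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    print(listener_is_reachable())
```

If the function returns False on the server where Logtail runs, check that the Logtail configuration has been applied to the machine group and that the port matches the one in the Beats or Logstash output.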

What to do next

After Logtail uploads data to Simple Log Service, you can view the data in the Simple Log Service console. The following sample shows data uploaded to Simple Log Service:

    _@metadata_beat: packetbeat
    _@metadata_type: doc
    _@metadata_version: 6.2.4
    _@timestamp: 2018-06-05T03:58:42.470Z
    __source__: **. **. **.**
    __tag__:__hostname__: *******
    __topic__:
    _beat_hostname: bdbe0b8d53a4
    _beat_name: bdbe0b8d53a4
    _beat_version: 6.2.4
    _bytes_in: 56
    _bytes_out: 56
    _client_ip: 192.168.5.2
    _icmp_request_code: 0
    _icmp_request_message: EchoRequest(0)
    _icmp_request_type: 8
    _icmp_response_code: 0
    _icmp_response_message: EchoReply(0)
    _icmp_response_type: 0
    _icmp_version: 4
    _ip: 127.0.0.1
    _path: 127.0.0.1
    _responsetime: 0
    _status: OK
    _type: icmp