This topic compares several monitoring and analysis platforms and describes the solutions that operations and maintenance (O&M) and site reliability engineering (SRE) teams can use to build one.

Background information

The O&M and SRE teams play key roles and handle many complex tasks, such as application deployment, performance and availability monitoring, alerting, shift scheduling, capacity planning, and business support. The rapid adoption of cloud native, containerized, and microservice architectures has accelerated feature iteration and poses the following challenges to the O&M and SRE teams:

Widespread business lines

Business lines span clients, frontend web applications, and backend applications.

Up to dozens of business lines must be supported at the same time.

Manpower shortage

In some companies, the headcount of the O&M and SRE teams is 1% or less of the number of developers.

High pressure on online service stability

The O&M and SRE teams must frequently respond to emergencies and fix urgent issues.

Complex business processes and the tremendous number of system components put great pressure on service troubleshooting and recovery.

Dispersed and inefficient monitoring and analysis platforms

Various types of data are monitored from different dimensions. This results in a proliferation of scripts and monitoring tools, and in data silos.

Various types of data are stored in different monitoring systems that do not support correlation analysis. As a result, root causes cannot be located efficiently.

Each system may have thousands of alert rules. This increases management costs and results in excessive alerts. False positives and false negatives may be reported.

To address the preceding challenges, the O&M and SRE teams require an easy-to-use and powerful monitoring and analysis platform. The platform is expected to analyze data efficiently, improve team productivity, locate root causes quickly and accurately, and help ensure business continuity.

Data issues that monitoring and analysis platforms must handle

To ensure service stability and support business development, the O&M and SRE teams must collect and analyze large amounts of data. The data includes hardware data, network metrics, and user behavior data. After data is collected, the O&M and SRE teams also need a suitable system to convert, store, process, and analyze the data based on business requirements. The O&M and SRE teams face the following data issues:

Diversified data types

System data: hardware metrics related to CPU, memory, network, and disk resources, and system logs

Golden metrics: latency, traffic, errors, and saturation

Access logs

Application logs: Java application logs and error logs

User behavior data: website clicks

Instrumentation points in apps: statistics on instrumentation points in Android and iOS apps

Framework data: Kubernetes framework data

Call chains: trace data

Diverse requirements

The O&M and SRE teams need to ensure the stability of services that involve the preceding types of data. The teams also need to support other business teams based on the following data management requirements:

Monitoring and alerting: Small amounts of streaming data must be processed within seconds or minutes.

Customer service and troubleshooting: Data must be searchable by keyword within seconds.

Risk management: Traffic must be processed within seconds.

Operations and data analysis: Large-scale data must be analyzed within seconds to hours, for example, in online analytical processing (OLAP) scenarios.

Difficult resource estimation

The amount of data from a fast-growing business is difficult to estimate at an early stage for the following reasons:

No references can be used to estimate the data amount of new data sources.

The rapid business growth results in data surges.

The previous data storage methods and data retention periods no longer meet the changing data management requirements.

Solutions to building monitoring and analysis platforms

The O&M and SRE teams need a monitoring and analysis platform to process data that is collected from various sources in different formats. To meet diverse business requirements, the O&M and SRE teams may use and maintain multiple systems based on the following open source services:

Telegraf + InfluxDB + Grafana

Telegraf is a lightweight, plugin-driven server agent that collects metrics from operating systems, databases, and middleware. The collected metrics are written to InfluxDB, which stores and analyzes the data. Grafana then renders the analysis results as charts and supports interactive queries.
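Telegraf commonly writes the metrics it collects to InfluxDB in the InfluxDB line protocol: a measurement name, comma-separated tags, comma-separated fields, and an optional nanosecond timestamp. The following minimal sketch formats one point by hand to show that structure. The function names and the `cpu` measurement, tags, and fields are illustrative assumptions, and the escaping covers only the common cases; in practice, Telegraf or an InfluxDB client library produces this output.

```python
from typing import Optional


def _escape_measurement(name: str) -> str:
    # Measurement names escape commas and spaces.
    return name.replace(",", r"\,").replace(" ", r"\ ")


def _escape_key(text: str) -> str:
    # Tag keys, tag values, and field keys escape commas, equals signs, and spaces.
    return text.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def _format_field_value(value) -> str:
    # bool must be checked before int because bool is a subclass of int.
    if isinstance(value, bool):
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"  # the "i" suffix marks an integer field
    if isinstance(value, float):
        return repr(value)
    # String field values are double-quoted; escape backslashes and quotes.
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def to_line_protocol(measurement: str, tags: dict, fields: dict,
                     timestamp_ns: Optional[int] = None) -> str:
    if not fields:
        raise ValueError("a point needs at least one field")
    # Tags are sorted by key, as InfluxDB recommends for write performance.
    tag_parts = [f"{_escape_key(k)}={_escape_key(str(tags[k]))}" for k in sorted(tags)]
    head = ",".join([_escape_measurement(measurement)] + tag_parts)
    body = ",".join(f"{_escape_key(k)}={_format_field_value(v)}" for k, v in fields.items())
    line = f"{head} {body}"
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"
    return line


print(to_line_protocol("cpu", {"host": "web-01", "region": "cn-hangzhou"},
                       {"usage_user": 12.5, "cores": 8}, 1700000000000000000))
# cpu,host=web-01,region=cn-hangzhou usage_user=12.5,cores=8i 1700000000000000000
```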

Prometheus

Prometheus is a foundational metrics tool in the Kubernetes cloud native ecosystem. Prometheus collects metrics by scraping exporters and can be integrated with Grafana for visualization.

ELK

The Elasticsearch, Logstash, and Kibana (ELK) stack is a set of open source components that is commonly used for multidimensional log query and analysis. The ELK stack provides fast, flexible, and powerful query capabilities that meet most query requirements of R&D, O&M, and customer service teams.

Trace systems

In microservice and distributed systems, call chains are complex. Without a suitable trace system, the root causes of errors cannot be located efficiently. Trace systems such as Zipkin, Jaeger, OpenTelemetry, and SkyWalking are applicable solutions, and OpenTelemetry and SkyWalking are widely adopted standards in the industry. However, these systems generally rely on an external storage backend, such as Elasticsearch or Cassandra, to store traces in production.
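A trace system can stitch the spans of one request into a single call chain only if every service forwards the trace context. By default, OpenTelemetry propagates that context in the W3C Trace Context `traceparent` header, whose version 00 layout is `version-traceid-parentid-traceflags` in lowercase hex. The following minimal sketch generates, parses, and forwards that header. It handles version 00 only, the function names are illustrative, and it is not a replacement for an OpenTelemetry SDK.

```python
import re
import secrets

# version-traceid-parentid-traceflags, all lowercase hex (version 00 layout only).
_TRACEPARENT = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def _nonzero_hex(num_bytes: int) -> str:
    # All-zero trace IDs and span IDs are invalid, so draw again in that (unlikely) case.
    while True:
        value = secrets.token_hex(num_bytes)
        if int(value, 16) != 0:
            return value


def parse_traceparent(header: str):
    match = _TRACEPARENT.match(header.strip())
    if not match:
        return None
    version, trace_id, parent_id, flags = match.groups()
    if version == "ff" or int(trace_id, 16) == 0 or int(parent_id, 16) == 0:
        return None
    return {"trace_id": trace_id, "parent_id": parent_id,
            "sampled": bool(int(flags, 16) & 0x01)}


def new_traceparent(sampled: bool = True) -> str:
    # Start a new trace at the edge of the system.
    return f"00-{_nonzero_hex(16)}-{_nonzero_hex(8)}-{'01' if sampled else '00'}"


def child_traceparent(incoming: str) -> str:
    # Keep the caller's trace ID and sampling decision, but use a new span ID for this hop.
    ctx = parse_traceparent(incoming)
    if ctx is None:
        return new_traceparent()
    flags = "01" if ctx["sampled"] else "00"
    return f"00-{ctx['trace_id']}-{_nonzero_hex(8)}-{flags}"


print(parse_traceparent("00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"))
# {'trace_id': '4bf92f3577b34da6a3ce929d0e0e4736', 'parent_id': '00f067aa0ba902b7', 'sampled': True}
```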

Kafka + Flink

In data cleansing and risk management scenarios, all data needs to be consumed in real time. This requires a platform that provides real-time data pipelines and streaming data processing. In most cases, such a platform is built by combining Kafka and Flink.

ClickHouse, Presto, and Druid

In scenarios in which operational analysis and reports are required, data is often imported to an OLAP engine for higher responsiveness. This way, large amounts of data can be analyzed and various ad hoc queries can be performed within seconds to minutes.

Different system components are used to process different types of data. Data is either routed to the component that handles it or copied into multiple systems, which increases system complexity and usage costs.

As the amount of data grows, the O&M and SRE teams face major challenges in component stability, service performance, cost control, and support for a large number of business lines.

Challenges for monitoring and analysis platforms

To maintain multiple systems and support various business lines, the O&M and SRE teams must address challenges in the following aspects:

System stability

System dependencies: Data flows through multiple systems that depend on each other. If an error occurs in one system, the associated systems are affected. For example, if writes to the downstream Elasticsearch cluster slow down, consumer lag builds up in the Kafka clusters that buffer the data. If the lag exceeds the retention period, unconsumed data is deleted. If retention is extended to prevent this, the storage usage of the Kafka clusters rises and the clusters may run out of disk space.

Traffic bursts: Traffic bursts occur frequently on the Internet, for example, when large amounts of data are written to a system at once. The O&M and SRE teams must ensure that the system keeps running as expected and that read and write operations are not affected by traffic bursts.

Resource isolation: Different data has different priorities. Data can be stored in separate physical storage resources, but relying on this method alone wastes a large amount of cluster resources and greatly increases O&M costs. If resources are shared, interference between workloads must be minimized. For example, a single query over a large amount of data may bring down the entire shared cluster.

Technical issues: The O&M and SRE teams must optimize a large number of parameters for multiple systems. This requires a long period of time to compare and adjust optimization solutions to meet various requirements in different scenarios.
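The Kafka side of the system dependencies example can be quantified with a rough model. Kafka deletes data when the retention period expires whether or not it has been consumed, so when the Elasticsearch write path slows down, the deadline that matters is when consumer lag exceeds retention; extending retention to buy time raises disk usage proportionally. The following sketch assumes constant rates, a consumer that is fully caught up when the slowdown begins, time-based retention, and a replication factor of 3 for disk usage. The function names and all numbers are illustrative.

```python
import math


def hours_until_unconsumed_data_expires(produce_mb_s: float, consume_mb_s: float,
                                        retention_hours: float) -> float:
    """Hours after a slowdown until the consumer falls behind the retention window.

    After t hours the consumer reads data produced at t * consume/produce, so the
    data it reads is t * (1 - consume/produce) hours old. Kafka deletes data older
    than retention_hours whether or not it was consumed.
    """
    if consume_mb_s >= produce_mb_s:
        return math.inf  # the consumer keeps up; lag does not grow
    return retention_hours / (1 - consume_mb_s / produce_mb_s)


def kafka_disk_tb(produce_mb_s: float, retention_hours: float,
                  replication_factor: int = 3) -> float:
    # Steady-state disk usage is set by retention, not by consumer progress (1 TB = 10**6 MB).
    return produce_mb_s * 3600 * retention_hours * replication_factor / 10**6


# Producers write 100 MB/s, the Elasticsearch pipeline slows to 60 MB/s, retention is 24 hours.
print(round(hours_until_unconsumed_data_expires(100, 60, 24), 1))  # 60.0
print(round(kafka_disk_tb(100, 24), 2))  # 25.92
```

In this example, the teams have about 60 hours to restore Elasticsearch write throughput before data is lost. Doubling retention to 48 hours doubles that window but also doubles the Kafka disk footprint.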

Predictable performance

Data size: The size of data in a system has a significant impact on the system performance. For example, the O&M and SRE teams must predict whether tens of millions to hundreds of millions of rows of metric data can be read from and written to a system, and whether 1 billion to 10 billion rows of data can be queried in Elasticsearch.

Quality of service (QoS) control: Each system has limited hardware resources, which must be allocated and managed based on the queries per second (QPS) and concurrent requests of different data types. In some cases, a degradation measure, such as throttling, is required to prevent one service from affecting the performance of other services. Most open source components are not designed with QoS control in mind.
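One common way to implement this kind of QoS control is a token bucket per data type or tenant: each bucket allows short bursts up to its capacity and a sustained request rate, and requests beyond the budget are degraded instead of competing for shared resources. The following minimal sketch shows the technique. The data type names and budgets are hypothetical, and the injectable clock exists only to make the behavior testable.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity` and a sustained rate of `rate_per_s` requests."""

    def __init__(self, rate_per_s: float, capacity: float, clock=time.monotonic):
        self.rate = rate_per_s
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller degrades: reject, queue, or serve a cheaper result


# Hypothetical per-data-type budgets: alert queries get a larger share than ad hoc analysis.
BUDGETS = {
    "alerting": TokenBucket(rate_per_s=200, capacity=400),
    "adhoc_query": TokenBucket(rate_per_s=20, capacity=40),
}


def admit(data_type: str) -> bool:
    bucket = BUDGETS.get(data_type)
    return bucket is not None and bucket.try_acquire()
```

With these budgets, a burst of expensive ad hoc queries exhausts only its own bucket, so alert evaluation keeps its share of capacity.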

Cost control

Resource costs: The deployment of each system component consumes hardware resources. If a data copy exists in multiple systems at a time, large amounts of hardware resources are consumed. In addition, the amount of business data is difficult to estimate. If the amount of business data is overestimated, resources are wasted.

Data access costs: The O&M and SRE teams require a tool or platform to support automatic access to business data. The tool or platform is expected to adapt data formats, manage environments, maintain configurations, and estimate resources. This way, the O&M and SRE teams can focus more on important work.

Support costs: Technical support is required to resolve issues that may occur when multiple systems are used. The issues include unsuitable usage modes and invalid parameter settings. Bugs in open source software may result in additional costs.

O&M costs: The O&M and SRE teams require a long period of time to resolve hardware and software issues of each system. For example, the teams may need to replace hardware, scale the systems, and upgrade software.

Cost sharing: To effectively utilize resources and develop strategic budgets and plans, the O&M and SRE teams must accurately estimate resource consumption of different business lines. In this case, a monitoring and analysis platform is required to support cost sharing based on valid metering data.
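Given metering data, cost sharing reduces to splitting a shared bill in proportion to each business line's usage. The following minimal sketch uses integer cents and the largest-remainder method so that the shares always add up to the bill exactly. The usage unit (for example, GB-days stored) and the business line names are assumptions for illustration.

```python
def allocate_cost(total_cents: int, usage: dict) -> dict:
    """Split total_cents across business lines in proportion to integer usage.

    Largest-remainder method: floor every share, then give the leftover cents to
    the lines with the largest fractional remainders, so shares sum to total_cents.
    """
    total_usage = sum(usage.values())
    if total_usage <= 0:
        raise ValueError("usage must be positive")
    shares = {k: total_cents * u // total_usage for k, u in usage.items()}
    leftovers = {k: total_cents * u % total_usage for k, u in usage.items()}
    remaining = total_cents - sum(shares.values())
    # sorted() is stable, so ties keep the order in which the lines were given.
    for k in sorted(leftovers, key=leftovers.get, reverse=True)[:remaining]:
        shares[k] += 1
    return shares


print(allocate_cost(1_000_000, {"payments": 4200, "search": 3500, "ads": 2300}))
# {'payments': 420000, 'search': 350000, 'ads': 230000}
```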

Simulated real-world scenario

Background information

A company developed more than 100 applications, and each application generates NGINX access logs and Java application logs.

The daily log volume varies greatly among applications, from 1 GB to 1 TB per application. In total, all applications generate 10 TB of new logs per day. The data retention period ranges from 7 to 90 days, with an average of 15 days.

Logs are used for business monitoring and alerting, online troubleshooting, and real-time risk management.

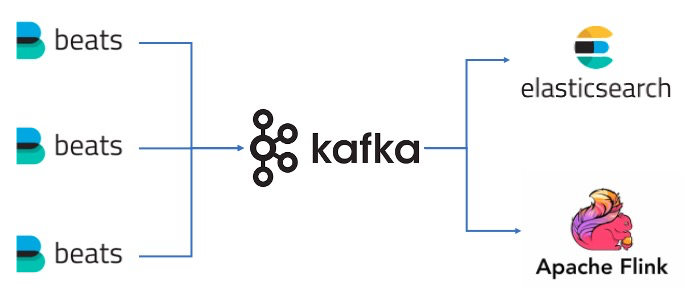

Business architecture

Beats: collects data and sends the data to Kafka in real time.

Kafka: temporarily buffers data. Flink consumes the data from Kafka in real time, and the data is also forwarded from Kafka to Elasticsearch.

Flink: monitors and analyzes service data and manages risks in real time.

Elasticsearch: stores logs for query, analysis, and error troubleshooting.

The preceding architecture describes how data is processed on a monitoring and analysis platform. When the O&M and SRE teams of the company design an Elasticsearch solution, they must consider the following items:

Capacity planning: The O&M and SRE teams must calculate the required disk capacity by using the following formula: Disk capacity = Size of raw data × Expansion coefficient × (1 + Number of replicas) × (1 + Percentage of reserved space). The expansion coefficient ranges from 1.1 to 1.3, the number of replicas is 1, and the percentage of reserved space is 25%. The reserved space can be used for future growth or to store temporary files. In this case, the required disk capacity is 2.75 to 3.25 times the size of raw data (1.1 × 2 × 1.25 to 1.3 × 2 × 1.25). If the _all field is enabled, which is supported only in older Elasticsearch versions, more space is required for data expansion.
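As a worked example, the following sketch applies the capacity formula to the scenario above. It assumes that the 15-day average retention period applies to the full 10 TB of new logs per day, which gives 150 TB of raw data, and it uses the expansion coefficient range, one replica, and 25% reserved space stated in the formula. The function name is illustrative.

```python
def required_disk_tb(raw_tb: float, expansion: float, replicas: int = 1,
                     reserved_ratio: float = 0.25) -> float:
    # Disk capacity = raw size x expansion coefficient x (1 + replicas) x (1 + reserved space)
    return raw_tb * expansion * (1 + replicas) * (1 + reserved_ratio)


raw_tb = 10 * 15  # 10 TB of new logs per day x 15-day average retention
low = required_disk_tb(raw_tb, expansion=1.1)
high = required_disk_tb(raw_tb, expansion=1.3)
print(f"{raw_tb} TB raw -> {low:.1f} to {high:.1f} TB of disk")
# 150 TB raw -> 412.5 to 487.5 TB of disk
```

Because retention differs per application (7 to 90 days), a more precise estimate sums `daily_size × retention_days` per application before applying the multiplier.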

Cold and hot data isolation: If all data is stored on SSDs, the storage cost is excessively high. Some indexes can be stored on HDDs based on the priority and age of the data. Indexes can be moved between these tiers by using the rollover and index lifecycle management (ILM) features of Elasticsearch.
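The following sketch builds the body of an ILM policy (sent with `PUT _ilm/policy/<name>`) that rolls indexes over on SSD-backed hot nodes, moves them to HDD-backed warm nodes after a number of days, and deletes them at the end of retention. The node attribute name `box_type`, which would be set with `node.attr.box_type: warm` on the HDD nodes, is a hypothetical convention, and the thresholds are examples. Newer Elasticsearch versions support the `max_primary_shard_size` rollover condition; older versions use `max_size` instead.

```python
import json


def hot_warm_delete_policy(warm_after_days: int, delete_after_days: int,
                           max_primary_shard_size: str = "50gb") -> dict:
    """ILM policy body: roll over on hot nodes, move to warm nodes, then delete."""
    if not 0 < warm_after_days < delete_after_days:
        raise ValueError("expected 0 < warm_after_days < delete_after_days")
    return {
        "policy": {
            "phases": {
                "hot": {
                    "actions": {
                        # Start a new index when a primary shard reaches the size cap or after one day.
                        "rollover": {"max_primary_shard_size": max_primary_shard_size, "max_age": "1d"}
                    }
                },
                "warm": {
                    "min_age": f"{warm_after_days}d",
                    # Hypothetical custom node attribute that marks HDD-backed nodes.
                    "actions": {"allocate": {"require": {"box_type": "warm"}}},
                },
                "delete": {"min_age": f"{delete_after_days}d", "actions": {"delete": {}}},
            }
        }
    }


print(json.dumps(hot_warm_delete_policy(warm_after_days=3, delete_after_days=15), indent=2))
```

A policy applies only to indexes that reference it, for example through the `index.lifecycle.name` setting (and `index.lifecycle.rollover_alias` when rollover writes through an alias) in an index template. Applications with different retention periods would use separate policies.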

Index setting: The NGINX access logs and Java application logs of each application are written to time-based indexes, for example, one index per day. The number of shards is set based on the data size, and each shard should hold 30 GB to 50 GB. Because the log volume of each application is difficult to estimate accurately, the number of shards may need to be adjusted if issues occur during writes and queries, and the resulting reindexing consumes additional resources.
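The following sketch turns the 30 GB to 50 GB guideline into a primary shard count for a daily index. It targets 40 GB per shard, the midpoint of the range, which is an assumption. The input is the indexed size per day (raw size × expansion coefficient), and the three applications are hypothetical.

```python
import math


def primary_shards(daily_index_gb: float, target_shard_gb: float = 40) -> int:
    """Primary shard count for one daily index, aiming for about target_shard_gb per shard."""
    if daily_index_gb < 0 or target_shard_gb <= 0:
        raise ValueError("sizes must be non-negative and the target must be positive")
    return max(1, math.ceil(daily_index_gb / target_shard_gb))


# Indexed size per day for three hypothetical applications, in GB.
for app, gb in {"small-app": 1, "mid-app": 240, "large-app": 1200}.items():
    print(app, primary_shards(gb))
# small-app 1
# mid-app 6
# large-app 30
```

A fixed shard count works only when the daily volume is predictable. Size-based rollover avoids the estimate by starting a new index whenever the shards reach the target size.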

Kafka consumption setting: Logstash consumes data from Kafka and writes the data to Elasticsearch. The total number of consumer threads across all Logstash instances, which is set by the consumer_threads option of the Kafka input plugin, should match the number of partitions of the Kafka topic. Otherwise, the partitions are not consumed evenly, or some threads stay idle.
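Each Logstash consumer thread joins the consumer group as a separate Kafka consumer, and every partition is assigned to exactly one consumer in the group. The following sketch models how a single topic's partitions are spread across threads, to show why the counts should match. It is a simplified model of Kafka's range assignment; the strategy actually used depends on the consumer's `partition.assignment.strategy` setting, and the partition and thread counts are examples.

```python
def range_assignment(num_partitions: int, num_consumers: int) -> list:
    """Partitions per consumer for one topic: contiguous ranges, earlier consumers get extras."""
    if num_consumers <= 0:
        raise ValueError("need at least one consumer")
    per_consumer, extra = divmod(num_partitions, num_consumers)
    assignment, start = [], 0
    for i in range(num_consumers):
        count = per_consumer + (1 if i < extra else 0)
        assignment.append(list(range(start, start + count)))
        start += count
    return assignment


for partitions, threads in [(12, 8), (6, 8), (8, 8)]:
    loads = [len(p) for p in range_assignment(partitions, threads)]
    print(partitions, threads, loads)
# 12 8 [2, 2, 2, 2, 1, 1, 1, 1]
# 6 8 [1, 1, 1, 1, 1, 1, 0, 0]
# 8 8 [1, 1, 1, 1, 1, 1, 1, 1]
```

With 12 partitions and 8 threads, half of the threads carry twice the load; with 6 partitions and 8 threads, two threads receive nothing. Only matching counts (or a partition count that is a multiple of the thread count) give an even load.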

Elasticsearch parameter optimization: The O&M and SRE teams must first evaluate the performance of Elasticsearch in terms of write throughput, visibility latency (how soon written data becomes searchable), data security, and query performance. Then, the teams can tune the related parameters based on the CPU and memory resources of the clusters. Common parameters include the number of threads, memory limits, translog settings, queue lengths, the intervals of periodic operations such as refresh, and merge-related parameters.

Memory: The Java virtual machine (JVM) heap is usually set to less than 32 GB so that compressed object pointers remain enabled, and the remaining memory is left to the operating system as file system cache. Frequent garbage collection (GC) operations affect application performance and may make the service unavailable.

The memory usage of a master node depends on the number of shards in the cluster. In most cases, the number of shards in a cluster should be kept below 10,000. By default, Elasticsearch allows at most 1,000 shards per data node (the cluster.max_shards_per_node setting), so the numbers of indexes and shards must be limited.

The memory usage of a data node depends on the size of its indexes. The finite-state transducer (FST) that Elasticsearch uses as its terms index stays in the memory of data nodes for a long time. In Elasticsearch 7.3 and later, the FST is loaded off the JVM heap by using mmap, so the operating system can reclaim this memory under pressure. However, when the memory is reclaimed, query performance degrades. In versions earlier than 7.3, the FST resides on the heap, and the only way to control its memory usage is to limit the amount of data on each node.
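The memory guidance above can be turned into two quick checks. The following sketch sizes the JVM heap at half of physical memory, capped at 31 GB as a margin below the compressed object pointer threshold, and estimates the shard count for the example scenario: 100 applications, two log types (NGINX access logs and Java application logs), one daily index per log type, a 15-day average retention, one primary shard per index, and one replica. The 31 GB cap and the single primary per index are assumptions; large applications need more primaries, which raises the total.

```python
def recommended_heap_gb(physical_ram_gb: float, heap_cap_gb: float = 31) -> float:
    # Half of RAM for the heap and the rest for the OS file system cache, capped below ~32 GB.
    return min(physical_ram_gb / 2, heap_cap_gb)


def total_shards(apps: int, log_types: int, retention_days: int,
                 primaries_per_index: int = 1, replicas: int = 1) -> int:
    # One index per application, log type, and day; each primary also has `replicas` copies.
    return apps * log_types * retention_days * primaries_per_index * (1 + replicas)


def within_default_shard_limit(shards: int, data_nodes: int,
                               max_shards_per_node: int = 1000) -> bool:
    # cluster.max_shards_per_node defaults to 1,000 shards per data node.
    return shards <= data_nodes * max_shards_per_node


shards = total_shards(apps=100, log_types=2, retention_days=15)
print(recommended_heap_gb(64), shards, within_default_shard_limit(shards, data_nodes=5))
# 31 6000 False
```

Even with one primary per index, the scenario needs at least six data nodes under the default limit, and 6,000 shards is already a large share of the 10,000-shard guideline.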

Query and analysis: The O&M and SRE teams must spend a long time continuously testing the query and analysis performance of Elasticsearch by trial and error.

Mappings must be configured based on the actual scenario. For example, choose between the text and keyword field types based on how each field is queried, and minimize nested mappings.

Long query time ranges and complex query statements must be avoided to prevent excessive consumption of system resources. For example, deeply nested aggregations, which are the equivalent of nested GROUP BY clauses, must be minimized. To prevent out of memory (OOM) errors when an aggregation or fuzzy search is performed on a large data set, the size of the data set must be limited.

The number of segments must be limited. The force merge operation must be avoided unless required, and the disk I/O and resource consumption that it causes must be evaluated in advance.

A filter context or a query context must be selected based on the actual scenario. In scenarios in which relevance scores are not required, use a filter context: filter clauses are not scored and their results can be cached by Elasticsearch, which makes them more efficient than a query context.

If scripts are used to query and analyze data, system instability and performance issues may occur.

Routing search requests to a specific shard by using the routing parameter can improve performance, provided that the documents were indexed with the same routing value.
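Several of the query guidelines above can be combined in one search request body. The following sketch uses only filter clauses (not scored, cacheable), a bounded time range, `size: 0` so that no documents are fetched, and a capped `terms` aggregation. The field names `service`, `status`, `request_uri`, and `@timestamp` and the cap of 1,000 buckets are assumptions, and `service` and `request_uri` are assumed to be mapped as keyword fields.

```python
import json

MAX_BUCKETS = 1000  # hypothetical cap on aggregation buckets per request


def error_uri_query(service: str, start: str, end: str, top_n: int = 10) -> dict:
    """Search body: top request URIs with 5xx responses for one service in [start, end)."""
    if not 0 < top_n <= MAX_BUCKETS:
        raise ValueError(f"top_n must be between 1 and {MAX_BUCKETS}")
    return {
        "size": 0,  # aggregation only; skip fetching hits
        "query": {
            "bool": {
                # Filter context: clauses are not scored and can be cached by Elasticsearch.
                "filter": [
                    {"term": {"service": service}},
                    {"range": {"@timestamp": {"gte": start, "lt": end}}},
                    {"range": {"status": {"gte": 500}}},
                ]
            }
        },
        "aggs": {"top_uris": {"terms": {"field": "request_uri", "size": top_n}}},
    }


# Send as GET <index>/_search. If documents were indexed with ?routing=<service>,
# adding the same ?routing=<service> to the search limits it to one shard.
print(json.dumps(error_uri_query("checkout", "now-15m", "now"), indent=2))
```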

Data corruption: If a failure occurs, files may be corrupted. If a segment or translog file is corrupted, the data in the related shard cannot be loaded, and the damaged data must be removed manually or by using tools. Data loss may occur during this process.

The preceding issues may occur when the O&M and SRE teams use and maintain an Elasticsearch cluster. If the data size increases to hundreds of terabytes and a large number of data access requests are generated, the stability of the cluster is difficult to maintain. The same issues may exist in other systems, which is a significant challenge for the O&M and SRE teams.

Integrated cloud service

To meet the requirements of the O&M and SRE teams for a monitoring and analysis platform, the Alibaba Cloud Simple Log Service team provides a high-performance and cost-effective solution that is easy to use, stable, and reliable. This solution helps the O&M and SRE teams resolve the issues that occur when they build such a platform and improves their work efficiency. In the early stage of its development, Simple Log Service was an internal logging system that supported only Alibaba Group and Ant Group. After years of evolution, Simple Log Service has become a cloud native observability and analysis platform that supports petabytes of data of multiple types, such as logs, metrics, and traces.

Simple data import methods

Logtail: Logtail is easy to use and reliable, and it delivers high performance, as proven on millions of servers. Logtail can be managed in the Simple Log Service console.

SDKs and producers: Data, including data from mobile devices, can be collected by using the SDKs for Java, C, Go, iOS, and Android, or by using the web tracking feature.

Cloud native data collection: Custom Resource Definitions (CRDs) can be created to collect data from Container Service for Kubernetes (ACK).

Real-time data consumption and ecosystem connection

Simple Log Service scales elastically within seconds. Petabytes of data can be written to and consumed from Simple Log Service in real time.

Simple Log Service is natively compatible with Flink, Storm, and Spark Streaming.

Large-scale data query and analysis

Tens of billions of data entries can be queried within seconds.

Simple Log Service supports the SQL-92 syntax, interactive query, machine learning functions, and security check functions.

Data transformation

Compared with the traditional extract, transform, and load (ETL) process, the data transformation feature of Simple Log Service can help reduce up to 90% of development costs.

Simple Log Service is a fully managed service that provides high availability and elastic scalability.

Metrics

Cloud native metrics can be imported to Simple Log Service. Hundreds of millions of rows of Prometheus metrics can be collected and stored.

Unified trace import methods

The OpenTelemetry protocol can be used to collect traces. The OpenTracing protocol can be used to import traces from Jaeger and Zipkin to Simple Log Service. Traces from OpenCensus and SkyWalking can also be imported to Simple Log Service.

Data monitoring and alerting

Simple Log Service provides an integrated alerting feature that can be used to monitor data, trigger alerts, denoise alerts, manage alerts, and send alert notifications.

Intelligent anomaly detection

Simple Log Service offers unsupervised process monitoring and fault diagnosis and supports manual data labeling. These features significantly improve monitoring efficiency and accuracy.

Compared with solutions that combine multiple open source systems, Simple Log Service provides one-stop logging capabilities. Simple Log Service meets all data monitoring and analysis requirements of O&M and SRE teams. The O&M and SRE teams can use Simple Log Service to replace multiple combined systems, such as Kafka, Elasticsearch, Prometheus, and OLAP engines. Simple Log Service has the following advantages:

Lower O&M complexity

Simple Log Service is an out-of-the-box and maintenance-free cloud service.

Simple Log Service supports visualization management. Data can be imported within 5 minutes. The business support cost can be significantly reduced.

Lower cost

Only one copy of data is retained, and the data copy does not need to be transferred among multiple systems.

Resources are scaled based on the actual scenario. This helps prevent reserved resources from being wasted.

Comprehensive technical support is provided to reduce labor costs.

Better resource permission management

Simple Log Service provides complete consumption data for internal chargeback and cost optimization.

Simple Log Service supports permission management and resource isolation to prevent information leakage.

Simple Log Service provides a large-scale, low-cost, and real-time monitoring and analysis platform for logs, metrics, and traces. This helps O&M and SRE teams manage business with higher efficiency.