For multitenancy scenarios, such as e-commerce or Software as a Service (SaaS), you may need to provide differentiated service quality and resource guarantees for different tenant levels, such as VIP and regular customers. Traditional solutions often face challenges, including incomplete resource isolation and complex tenant scale-out or scale-in operations. PolarDB-X provides the storage pool feature, which lets you logically divide physical data nodes (DNs) into different resource groups. By binding a database or table partition to a specific resource pool, you can achieve physical resource isolation between tenants, flexibly adjust resource allocation, and ensure the stability of core services while simplifying operations management.

Feature overview

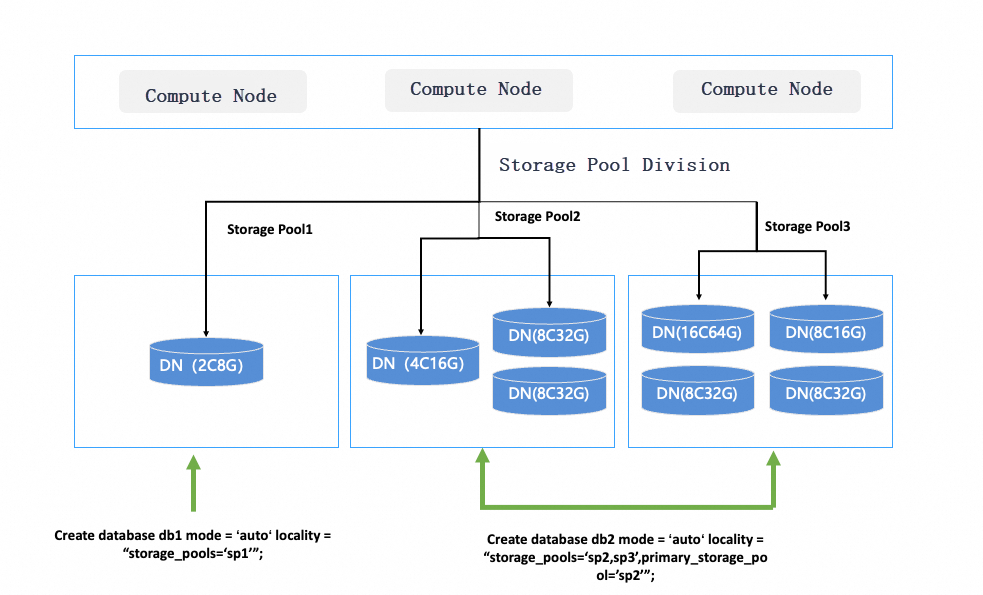

A storage pool is a resource management feature in PolarDB-X that lets you divide the physical data nodes (DNs) in an instance into multiple logical resource groups. Each storage pool consists of one or more DNs, and the DNs in different storage pools do not overlap.

You can bind database objects, such as databases, tables, or table partitions, to specific storage pools to physically isolate the data of these objects on the specified DNs. If the nodes within a storage pool or the binding of a database object changes, the system automatically triggers data migration to satisfy the new resource layout requirements.

This feature is primarily used to implement resource isolation, service-level differentiation, and fine-grained management of business workloads in multitenancy scenarios.

Scope

The instance kernel version must be

5.4.20-20250806or later.NoteFor information about the instance version naming rules, see Release notes.

For information about how to view the version of an instance, see View and update the version of an instance.

The partition mode (

MODE) of the logical database must beauto.

Usage instructions

Storage pools

We recommend that you use the Data Node Management feature in the PolarDB-X console to create and manage storage pools. The following SQL statements related to storage pools are provided only to help you understand how they work. We do not recommend that you run these statements directly in your production environment.

View storage pools

Before you perform any operations, you must understand the existing storage pools and their status in the current instance.

Default system pools

A PolarDB-X instance includes two system storage pools by default:

_default: The default storage pool. Initially, this pool contains all active data nodes (DNs) in the instance. If you do not specify a storage pool when you create a database object, its data is stored in this pool by default._recycle: The recycle storage pool. This pool stores idle data nodes that are removed from other storage pools or have not yet been allocated. These nodes are the source for creating new storage pools or scaling out existing ones.

Query method

You can query the information_schema.storage_pool_info view to obtain detailed information about all storage pools.

SELECT * FROM information_schema.storage_pool_info;Sample output

+------+----------+-----------------------------------------------------+-----------------+---------------------------+--------+---------------------+---------------------+

| ID | NAME | DN_ID_LIST | IDLE_DN_ID_LIST | UNDELETABLE_DN_ID | EXTRAS | GMT_CREATED | GMT_MODIFIED |

+------+----------+-----------------------------------------------------+-----------------+---------------------------+--------+---------------------+---------------------+

| 1 | _default | dn0,dn1,dn2,dn3,dn4,dn5,dn6,dn7,dn8,dn9 | | dn0 | NULL | 2024-04-15 19:46:05 | 2024-04-15 19:46:05 |

| 2 | _recycle | | | | NULL | 2024-04-15 19:46:05 | 2024-04-15 19:46:05 |

+------+----------+-----------------------------------------------------+-----------------+---------------------------+--------+---------------------+---------------------+Modify storage pools

You can add nodes to an existing storage pool (scale-out) or remove nodes from it (scale-in). When you remove a node, it is automatically moved to the _recycle storage pool. However, you cannot remove the UNDELETABLE_DN from a storage pool. Each storage pool must contain at least one DN, and one of them is designated as the UNDELETABLE DN. This node cannot be removed until the storage pool is deleted.

Scale-in

Remove a node from a storage pool. During a scale-in, the data on the node is automatically and asynchronously migrated.

-- Remove a node from a resource pool. The data on the node is automatically migrated.

ALTER STORAGE POOL <pool_name> DRAIN NODE "<dn_id_list>";Example

Scale in the _default storage pool. After the operation, only dn0 remains in the _default storage pool, and all other nodes are moved to the _recycle storage pool.

ALTER STORAGE POOL _default DRAIN NODE 'dn1,dn2,dn3,dn4,dn5,dn6,dn7,dn8,dn9';View the storage pools. The result is as follows:

SELECT * FROM information_schema.storage_pool_info;

+------+----------+-----------------------------------------------------+-----------------+---------------------------+--------+---------------------+---------------------+

| ID | NAME | DN_ID_LIST | IDLE_DN_ID_LIST | UNDELETABLE_DN_ID | EXTRAS | GMT_CREATED | GMT_MODIFIED |

+------+----------+-----------------------------------------------------+-----------------+---------------------------+--------+---------------------+---------------------+

| 1 | _default | dn0 | | dn0 | NULL | 2024-04-15 19:46:05 | 2024-04-15 19:46:05 |

| 2 | _recycle | dn1,dn2,dn3,dn4,dn5,dn6,dn7,dn8,dn9 | | | NULL | 2024-04-15 19:46:05 | 2024-04-15 19:46:05 |

+------+----------+-----------------------------------------------------+-----------------+---------------------------+--------+---------------------+---------------------+Scale-out

Add a node to a storage pool.

-- Add a node to a resource pool.

ALTER STORAGE POOL <pool_name> APPEND NODE "<dn_id_list>";The new node must not contain any database objects.

The DNs of different storage pools cannot overlap.

A single storage pool can contain DNs of different specifications.

Example

Scale out the _default storage pool. Move the dn1 node back to the _default storage pool.

ALTER STORAGE POOL _default APPEND NODE 'dn1';View the storage pools. The result is as follows:

SELECT * FROM information_schema.storage_pool_info;

+------+----------+-----------------------------------------------------+-----------------+---------------------------+--------+---------------------+---------------------+

| ID | NAME | DN_ID_LIST | IDLE_DN_ID_LIST | UNDELETABLE_DN_ID | EXTRAS | GMT_CREATED | GMT_MODIFIED |

+------+----------+-----------------------------------------------------+-----------------+---------------------------+--------+---------------------+---------------------+

| 1 | _default | dn0,dn1 | | dn0 | NULL | 2024-04-15 19:46:05 | 2024-04-15 19:46:05 |

| 2 | _recycle | dn2,dn3,dn4,dn5,dn6,dn7,dn8,dn9 | | | NULL | 2024-04-15 19:46:05 | 2024-04-15 19:46:05 |

+------+----------+-----------------------------------------------------+-----------------+---------------------------+--------+---------------------+---------------------+Create a storage pool

You can combine idle data nodes (DNs) from the recycle pool (_recycle) to create a new custom storage pool.

CREATE STORAGE POOL <pool_name> DN_LIST = "<dn_id_1>,<dn_id_2>,..." UNDELETABLE_DN = "<dn_id>";Parameters

pool_name: The name of the custom storage pool.DN_LIST: The list of data node IDs that the storage pool contains. These nodes must be idle.UNDELETABLE_DN: Specifies an undeletable node to ensure the minimum availability of the storage pool.

Rules

Each storage pool must contain at least one DN, and one of them is designated as the

UNDELETABLE DN. This node cannot be removed until the storage pool is deleted.When you create a storage pool, the specified DNs must not contain any database objects.

The DNs of different storage pools cannot overlap.

A single storage pool can contain DNs of different specifications.

Example

-- Create a resource pool named pool_1 that contains three nodes: dn4, dn5, and dn6.

CREATE STORAGE POOL pool_1 DN_LIST="dn4,dn5,dn6" UNDELETABLE_DN="dn4";

-- Create a resource pool named pool_2 that contains one node: dn7.

CREATE STORAGE POOL pool_2 DN_LIST="dn7" UNDELETABLE_DN="dn7";

-- Create a resource pool named pool_3 that contains two nodes: dn8 and dn9.

CREATE STORAGE POOL pool_3 DN_LIST="dn8,dn9" UNDELETABLE_DN="dn8";Associate storage pools with database objects

Define an association

When you create a database, table, or partition, you can use the LOCALITY clause to specify the storage pool where its data is stored. You can specify multiple storage pools (storage_pools) and a primary storage pool (primary_storage_pool). A complete storage pool binding declaration is as follows:

CREATE DATABASE | TABLE ... LOCALITY = "storage_pools=<pool_list>;primary_storage_pool=<pool_name>";Parameters

storage_pools: The list of all storage pools that this database object can use. For a broadcast table, a replica is created in all storage pools in this list.primary_storage_pool: The storage pool used by default. If you do not explicitly specify aLOCALITYfor child objects, such as tables or partitions, they use this primary storage pool by default.NoteWhen you explicitly specify a storage pool for a child object, such as a table or partition, it must belong to the set of storage pools (

storage_pools) available to its parent object.

Example

-- Create a database that can use pool_1, pool_2, and pool_3, with pool_1 and pool_2 as the default pools.

CREATE DATABASE db_test MODE = "auto" LOCALITY = "storage_pools=pool_1,pool_2,pool_3;primary_storage_pool=pool_1,pool_2";Modify an association

For a database, table group, or partition, you can directly change its storage pool. You can modify its LOCALITY definition to achieve online data migration.

If you add a storage pool to the object's association, no action is taken except to expand the node scope for broadcast tables.

If you remove a storage pool from the object's association, the storage pool definitions for that object and its child objects on the removed pool are removed, and their data is migrated.

(This operation is not supported at the database level.) If you completely clear the storage pools associated with an object, the object's association is changed to the default storage pool.

ALTER DATABASE | TABLE <object_name> [SET] LOCALITY = "...";This operation triggers a background data migration task that moves data from the old storage pool to the new one. The entire process is online and transparent to your application.

For database-level objects, regardless of how you change the associated storage pools, you cannot remove the

primary_storage_poolfrom thestorage_poolslist, nor can you change a definedprimary_storage_pool. This restriction does not apply to table groups and partitions.

Example

ALTER TABLE db_test LOCALITY = "storage_pools=pool_1;primary_storage_pool=pool_1";Sample scenarios

Scenario 1: Database-level tenants

An e-commerce platform, Enterprise X, needs to maintain orders from multiple sellers. These sellers include several large sellers with many orders and many small sellers. The following items describe how Enterprise X wants to allocate resources to different sellers:

Use separate storage resources to ensure stable online traffic and provide separate batch data analytics capabilities for large sellers.

Use a set of storage resources for all small sellers and ensure that the resources are distributed as evenly as possible among them.

As business changes, small sellers may become large sellers, and large sellers may migrate their business to or from other platforms.

Tenant definition

You can perform database-level resource isolation to meet the requirements of Enterprise X. You can divide existing storage nodes into multiple storage pools to create separate storage space for each large seller. Then, you can use the default storage pool as the shared storage space for small sellers.

CREATE STORAGE POOL sp1 dn_list="dn4,dn5,dn6" undeletable_dn="dn4";

CREATE STORAGE POOL sp2 dn_list="dn7,dn8,dn9" undeletable_dn="dn7";

CREATE DATABASE orders_comm MODE = "auto"

LOCALITY= "storage_pools='_default'"; /* Small sellers share the _default storage pool (dn1, dn2, dn3). */

CREATE DATABASE orders_seller1 MODE = "auto"

LOCALITY= "storage_pools='sp1'"; /* Large Seller 1 uses the sp1 storage pool (dn4, dn5, dn6). */

CREATE DATABASE orders_seller2 MODE = "auto"

LOCALITY= "storage_pools='sp2'"; /* Large Seller 2 uses the sp2 storage pool (dn7, dn8, dn9). */

...After you execute the preceding statements, the following results are achieved:

The storage resources of large sellers and small sellers are completely isolated.

Small sellers share dn1, dn2, and dn3 in the default storage pool.

The table creation statements for large and small sellers are independent. Each seller can configure the table schema and storage space separately.

Resource balancing

You can create non-partitioned tables based on the locality principle or create partitioned tables in the database used for small sellers. To balance resources among small sellers due to data distribution differences, you only need to run the statement that balances resources in the storage pool.

REBALANCE TENANT "_default" POLICY="data_balance";After you run the REBALANCE statement, the system automatically balances non-partitioned tables and partitions based on the object definitions in the storage pool. Similar to instance scaling, this operation automatically satisfies resource constraints and evenly distributes all non-partitioned tables and partitions on dn1, dn2, and dn3.

Tenant onboarding and offboarding

When a large seller migrates its business to Enterprise X, you can add a storage node to the instance, use the node to create a storage pool, and then create a database for the large seller that exclusively uses the new storage pool. By default, a new storage node is associated with the `_recycle` storage pool.

CREATE STORAGE POOL spn dn_list = "dn10,dn11,dn12" undeletable_dn="dn10";

/* Define a new storage pool spn (dn10, dn11, dn12). */

CREATE DATABASE orders_sellern MODE = "auto"

LOCALITY="storage_pools='spn'";

/* Large Seller N uses the spn storage pool. */If a large seller migrates its business to another platform, you can delete the corresponding database and release the associated resources.

DROP DATABASE orders_sellern;

DELETE STORAGE POOL spn;/* Delete the storage pool. The corresponding nodes are automatically moved to the _recycle storage pool. */

ALTER STORAGE POOL _recycle DRAIN NODE "dn7, dn8, dn9"; /* Release the corresponding nodes from the cluster. */When you add or remove the storage pool and database, the existing business is not affected and applications experience minimal interruption.

Scenario 2: Partition-level tenants

You can also isolate resources based on partitions to meet the requirements of Enterprise X from Scenario 1. Compared with the database-level tenant solution, the partition-level tenant solution provides the same isolation capability for each tenant and offers the following advantages:

All partitions share the definition of logical tables. Data administration operations, such as adding or removing columns and indexes, and pushdown relationships for broadcast tables are automatically managed by the database.

With partition management capabilities such as partition splitting and merging, this solution lets you change resources more flexibly. For example, you can upgrade the service levels of some tenants to VIP and merge the resources of other tenants.

Tenant definition

First, divide storage nodes into the corresponding storage pools, and then use all the pools to create a public database.

CREATE DATABASE orders_db MODE = "auto"

LOCALITY = "storage_pools='_default,sp1,sp2,...',primary_storage_pool='_default'"

USE orders_db;

CREATE TABLE commodity(

commodity_id int,

commodity_name varchar(64)

) BROADCAST;

/* As a broadcast table, commodity has table shards created in all storage pools. Therefore, join operations can be pushed down to any partition of the orders_sellers table. */

CREATE TABLE orders_sellers(

order_id int AUTO_INCREMENT primary key,

customer_id int,

commodity_id int,

country varchar(64),

seller_id int,

order_time datetime not null)

partition BY list(seller_id)

(

partition p1 VALUES IN (1, 2, 3, 4),

partition p2 VALUES IN (5, 6, 7, 8),

/* By default, partitions p1 and p2 of the orders_sellers table use the nodes in the _default storage pool and share the resources in that pool. */

...

partition pn VALUES IN (k) LOCALITY = "storage_pools='sp1'",

partition pn+1 VALUES IN (k+1, k+2) LOCALITY = "storage_pools='sp2'",

/* Partitions pn and pn+1 of the orders_sellers table use the nodes in the sp1 and sp2 storage pools, respectively, and exclusively occupy the resources of the corresponding storage nodes. */

...

) LOCALITY = "storage_pools='_default,sp1,sp2,...'";The database definition includes all storage pools, but it also defines the `primary_storage_pool` as the `_default` storage pool. Therefore, partitions in the `orders_sellers` table use the `_default` storage pool as the list of storage nodes by default, while the broadcast table is automatically distributed across all storage pool nodes.

The definition of each partition contains a different set of tenants. You can specify custom storage resources for each partition.

Tenant onboarding and offboarding

For new small tenants, you can directly add a partition. By default, data is not migrated. Only a new partition is created.

ALTER TABLE orders_sellers ADD PARTITION

(PARTITION p3 values in (32, 33));For new large tenants, you can add a node to the existing storage nodes, add a partition by routing directly to it, and specify the storage pool that it occupies.

CREATE STORAGE POOL spn dn_list = "dn10,dn11,dn12" undeletable_dn="dn10";

/* Add a new storage pool named spn to the instance. */

ALTER DATABASE orders_db SET LOCALITY =

"storage_pools='_default,sp1,sp2,...,spn',primary_storage_pool='_default'"

/* Add spn to the list of storage pools for the orders_db database. This change automatically triggers the migration of the broadcast table to the nodes of the new storage pool. */

ALTER TABLE orders_sellers ADD PARTITION

(PARTITION p4 values in (34) LOCALITY="storage_pools='spn'");

/* Add a new tenant partition to the orders_sellers table. This partition is distributed on the custom storage pool spn. */Similarly, for tenant offboarding, you can delete the corresponding partition and release the storage pool resources.

ALTER TABLE orders_sellers DROP PARTITION p4;Alternatively, you can change the corresponding partition definition to delete small sellers within the partition.

ALTER TABLE orders_sellers MODIFY PARTITION p1 DROP VALUES (4, 5);For partition-level tenants, all partitions share the definition of the logical table. Therefore, data schema changes can be uniformly managed by the database. The preceding partition change statements are all online operations that affect only the involved partitions. Existing services on other partitions are not affected.

Tenant service level changes

When a small seller's service level needs to be upgraded to the VIP level, you can modify the partition definition to assign it to a separate storage pool:

ALTER TABLE orders_sellers SPLIT PARTITION p2 INTO

(PARTITION p2 VALUES in (6, 7, 8) ,

PARTITION `pn+2` VALUES in (5) LOCALITY = "storage_pools='spn+3'");Similarly, you can downgrade the service level for a large seller.

ALTER TABLE orders_sellers MERGE PARTITION p1, pn TO p1 LOCALITY="";The preceding operation directly merges the data of partitions `p1` and `pn`, and the new partition is still stored in the default storage pool.

While retaining the resource isolation capabilities of database-level tenants, partition-level tenants provide more flexible tenant resource change and control capabilities through comprehensive partition management interfaces.

Resource balancing

When load imbalances occur between tenants at the partition level, you can also use the storage-pool-level `REBALANCE` operation to balance the load in the public storage pool.

REBALANCE TENANT "_default" POLICY="data_balance";Scenario 3: Migration from a standalone to a distributed database

Consider the process of migrating from a standalone relational database to a distributed database. In the initial stage of migration, you may want to reuse the original usage model as much as possible to benefit from the scale-out capability of the distributed database while making the transformation as seamless as possible. As the business grows, you can gradually evolve single tables with heavy business workloads into distributed tables, while ensuring that the existing database and table models and resource usage are affected as little as possible.

By defining storage resources for different tables using storage pools, you can achieve an integrated model where standalone and distributed tables coexist. This helps you smoothly transition to a distributed database.

Create databases and tables

Except for a few tables with broadcast table semantics, you can use the non-partitioned table sharding pattern in PolarDB-X to reuse your original table creation statements from a standalone database. This way, you can migrate and transform your existing business with minimal changes.

CREATE DATABASE orders_db MODE = "auto" DEFAULT_SINGLE=on

LOCALITY = "storage_pools='_default',primary_storage_pool='_default'";

use orders_db;

CREATE TABLE orders_region1(

order_id int AUTO_INCREMENT primary key,

customer_id int,

country varchar(64),

city int,

order_time datetime not null);

CREATE TABLE orders_region2(

order_id int AUTO_INCREMENT primary key,

customer_id int,

country varchar(64),

city int,

order_time datetime not null);

CREATE TABLE orders_region3(

order_id int AUTO_INCREMENT primary key,

customer_id int,

country varchar(64),

city int,

order_time datetime not null);

CREATE TABLE commodity(

commodity_id int,

commodity_name varchar(64)

) BROADCAST;The preceding statements used to create databases and tables achieve the following results:

All non-partitioned tables created using these statements are automatically balanced in the `_default` storage pool based on the data volume and the number of tables.

Broadcast tables are replicated across all nodes in the `_default` storage pool.

Isolate some non-partitioned tables

For non-partitioned tables that have excessive data volumes and high traffic, you can add storage nodes to the instance and isolate the tables on the new storage nodes:

ALTER DATABASE orders_db set LOCALITY = "storage_pools='_default,sp1',primary_storage_pool='_default'";

/* If a change operation is performed on the database, the system automatically handles the table shard expansion of the broadcast table. */

ALTER TABLE orders_region_single1 single LOCALITY = "storage_pools='sp1'";

/* Isolate non-partitioned tables that have excessive workloads on the nodes of the sp1 storage pool. */Transform tables into distributed tables

As the business develops, you can transform tables whose workloads exceed the limit of a single physical table into distributed tables and specify their storage nodes:

ALTER DATABASE orders_db set LOCALITY = "storage_pools='_default,sp1,sp2,sp3',primary_storage_pool='_default'";

ALTER TABLE orders_region_single2 partition by hash(order_id) partitions 16

LOCALITY = "storage_pools='sp2'";

/* The orders_region_single2 table uses the sp2 storage pool. */

ALTER TABLE orders_region_single3 partition by hash(order_id) partitions 16

LOCALITY = "storage_pools='sp3'";

/* The orders_region_single3 table uses the sp3 storage pool. */Sharding tasks allow you to specify custom partitioning methods and storage resources online. This helps transform table-level business models and convert non-partitioned tables into distributed tables seamlessly.